Kaggle: Hotel Booking Demand Prediction and Analysis (Hotel booking demand)

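Project background: This project studies a hotel's online booking business. From a hotel-operations perspective, it analyzes the supply of room types, demand in different time periods, the core customer groups and the factors that influence cancellations, and then builds a classification model that predicts whether a booking will be cancelled.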

Data source: Kaggle, Hotel booking demand. This is a hotel booking dataset published on Kaggle; interested readers can download it and practise on it.

Data description:

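| Field | Meaning |
|---|---|
| hotel | Hotel (City Hotel or Resort Hotel) |
| is_canceled | Whether the booking was cancelled (1 = yes, 0 = no) |
| lead_time | Number of days between entering the booking and the arrival date |
| arrival_date_year | Year of arrival |
| arrival_date_month | Month of arrival |
| arrival_date_week_number | Week number of the year of arrival |
| arrival_date_day_of_month | Day of the month of arrival |
| stays_in_weekend_nights | Number of weekend nights stayed or booked |
| stays_in_week_nights | Number of weeknights stayed or booked |
| adults | Number of adults |
| children | Number of children |
| babies | Number of babies |
| meal | Type of meal booked |
| country | Country of origin of the guest |
| market_segment | Market segment |
| distribution_channel | Booking distribution channel |
| is_repeated_guest | Whether the guest is a repeat guest |
| previous_cancellations | Number of previous bookings the customer cancelled before this booking |
| previous_bookings_not_canceled | Number of previous bookings the customer did not cancel before this booking |
| reserved_room_type | Code of the reserved room type |
| assigned_room_type | Code of the room type assigned to the booking |
| booking_changes | Number of changes made to the booking |
| deposit_type | Deposit type (whether a deposit was made) |
| agent | ID of the travel agency that made the booking |
| company | ID of the company that made the booking |
| days_in_waiting_list | Number of days the booking was on the waiting list before it was confirmed |
| customer_type | Type of booking/customer |
| adr | Average daily rate |
| required_car_parking_spaces | Number of car parking spaces requested |
| total_of_special_requests | Number of special requests |
| reservation_status | Last reservation status |
| reservation_status_date | Date on which the last status was set |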

The dataset contains 32 fields and 119,390 records.

Project workflow

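- Data preprocessing
  - Missing-value handling
  - Data type conversion
  - Outlier handling
- Feature engineering
  - Standardizing numerical features
  - One-hot encoding of categorical features
  - Feature selection
- Model training
- Model prediction and evaluation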

1. Data preprocessing

- Import the required libraries

```python
import pandas as pd
import seaborn as sns
from sklearn.model_selection import train_test_split
from sklearn.model_selection import GridSearchCV
from sklearn.tree import DecisionTreeClassifier
from sklearn.ensemble import RandomForestClassifier
```

- Load and inspect the data

```python
df = pd.read_csv('hotel_bookings.csv', encoding='gbk')
df.head()
df.info()
```

Result:

```
<class 'pandas.core.frame.DataFrame'>
RangeIndex: 119390 entries, 0 to 119389
Data columns (total 32 columns):
 #   Column                          Non-Null Count   Dtype
---  ------                          --------------   -----
 0   hotel                           119390 non-null  object
 1   is_canceled                     119390 non-null  int64
 2   lead_time                       119390 non-null  int64
 3   arrival_date_year               119390 non-null  int64
 4   arrival_date_month              119390 non-null  object
 5   arrival_date_week_number        119390 non-null  int64
 6   arrival_date_day_of_month       119390 non-null  int64
 7   stays_in_weekend_nights         119390 non-null  int64
 8   stays_in_week_nights            119390 non-null  int64
 9   adults                          119390 non-null  int64
 10  children                        119386 non-null  float64
 11  babies                          119390 non-null  int64
 12  meal                            119390 non-null  object
 13  country                         118902 non-null  object
 14  market_segment                  119390 non-null  object
 15  distribution_channel            119390 non-null  object
 16  is_repeated_guest               119390 non-null  int64
 17  previous_cancellations          119390 non-null  int64
 18  previous_bookings_not_canceled  119390 non-null  int64
 19  reserved_room_type              119390 non-null  object
 20  assigned_room_type              119390 non-null  object
 21  booking_changes                 119390 non-null  int64
 22  deposit_type                    119390 non-null  object
 23  agent                           103050 non-null  float64
 24  company                         6797 non-null    float64
 25  days_in_waiting_list            119390 non-null  int64
 26  customer_type                   119390 non-null  object
 27  adr                             119390 non-null  float64
 28  required_car_parking_spaces     119390 non-null  int64
 29  total_of_special_requests       119390 non-null  int64
 30  reservation_status              119390 non-null  object
 31  reservation_status_date         119390 non-null  object
dtypes: float64(4), int64(16), object(12)
memory usage: 29.1+ MB
```

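The dataset has 32 fields and 119,390 rows. The company column has a large number of missing values (agent, country and children also have some), and the date columns such as arrival_date_year, arrival_date_month and arrival_date_day_of_month need to be combined and converted to a date type.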

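- Merging the date columns and converting the format

Because arrival_date_month stores the month as an English name, first convert it to the month number so that the year, month and day can be combined later.

```python
# Convert the English month names in arrival_date_month to month numbers (1-12)
import calendar

month = []
for i in df.arrival_date_month:
    mon = list(calendar.month_name).index(i)
    month.append(mon)

df.insert(4, "arrival_month", month)
```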
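The loop above calls `list(calendar.month_name).index(i)` once per row. A vectorized alternative is sketched below: build the name-to-number dictionary once and apply it with `Series.map`. The helper `month_names_to_numbers` is a hypothetical name, and the sketch assumes English month names (as in this dataset) and a default C or English locale, since `calendar.month_name` follows the current locale.

```python
import calendar

import pandas as pd


def month_names_to_numbers(months: pd.Series) -> pd.Series:
    """Hypothetical helper: map English month names ("January".."December") to 1..12."""
    # calendar.month_name[0] is the empty string, so it is skipped
    lookup = {name: number for number, name in enumerate(calendar.month_name) if name}
    # Unknown names become NaN instead of raising an error
    return months.map(lookup)


sample = pd.Series(["July", "August", "December", "January"])
print(month_names_to_numbers(sample).tolist())  # [7, 8, 12, 1]
```

Because unmatched names come back as NaN rather than raising, checking `isna().sum()` on the result is a cheap sanity test.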

Add a new column, arrival_date, holding the arrival date, by concatenating the original year, month and day.

```python
# Concatenate year, month and day into a new arrival_date column
date_cols = ["arrival_date_year", "arrival_month", "arrival_date_day_of_month"]
df[date_cols] = df[date_cols].astype(str)
date = df.arrival_date_year.str.cat([df.arrival_month, df.arrival_date_day_of_month], sep=".")
df.insert(3, "arrival_date", date)
```

Convert it to a date type:

```python
# Parse strings such as "2015.7.1" as dates
df['arrival_date'] = pd.to_datetime(df['arrival_date'], format="%Y.%m.%d")
```
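Alternatively, `pd.to_datetime` can assemble dates directly from a DataFrame whose columns are named `year`, `month` and `day`, which avoids the string round trip. The sketch below uses a small made-up frame with the dataset's column names; it assumes the integer column `arrival_month` already exists and would be applied before the columns are cast to strings.

```python
import pandas as pd

# Made-up rows using the dataset's column names
bookings = pd.DataFrame({
    "arrival_date_year": [2015, 2016, 2017],
    "arrival_month": [7, 2, 8],
    "arrival_date_day_of_month": [1, 29, 31],
})

# Rename to the component names pd.to_datetime recognizes, then assemble the dates
parts = bookings[["arrival_date_year", "arrival_month", "arrival_date_day_of_month"]].rename(
    columns={"arrival_date_year": "year",
             "arrival_month": "month",
             "arrival_date_day_of_month": "day"})
bookings["arrival_date"] = pd.to_datetime(parts)

print(bookings["arrival_date"].dt.strftime("%Y-%m-%d").tolist())
# ['2015-07-01', '2016-02-29', '2017-08-31']
```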

Drop the original year, month, week-number and day columns and keep only the new arrival_date:

```python
df.drop(['arrival_date_year', 'arrival_month', 'arrival_date_month',
         'arrival_date_week_number', 'arrival_date_day_of_month'],
        axis=1, inplace=True)
```

- Missing-value handling

```python
# Count missing values per column
df.isnull().sum()
# Missing rate per column:
# df.isnull().sum() / df.shape[0]
```

Result:

```
hotel                               0
is_canceled                         0
lead_time                           0
arrival_date_year                   0
arrival_date_month                  0
arrival_date_week_number            0
arrival_date_day_of_month           0
stays_in_weekend_nights             0
stays_in_week_nights                0
adults                              0
children                            4
babies                              0
meal                                0
country                           488
market_segment                      0
distribution_channel                0
is_repeated_guest                   0
previous_cancellations              0
previous_bookings_not_canceled      0
reserved_room_type                  0
assigned_room_type                  0
booking_changes                     0
deposit_type                        0
agent                           16340
company                        112593
days_in_waiting_list                0
customer_type                       0
adr                                 0
required_car_parking_spaces         0
total_of_special_requests           0
reservation_status                  0
reservation_status_date             0
dtype: int64
```

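Missing values occur in four fields, children, country, agent and company, and company has by far the most:

1. children: 4 values missing. It is a numerical variable, so the gaps are filled with the median.
2. country: 488 values missing. It is a categorical variable, so the gaps are filled with the mode.
3. agent: 16,340 values missing, a missing rate of about 13.7%. That is a sizeable number, but agent identifies the travel agency that made the booking and the missing rate is below 20%, so we keep the column and fill the gaps with 0, meaning "no travel agency ID".
4. company: 112,593 values missing, a missing rate of 94.3%, well above 80%. The column carries too little usable information, so it is dropped.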

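```python
# children (numerical): fill with the median
df["children"] = df["children"].fillna(df["children"].median())
# country (categorical): fill with the mode, i.e. the most frequent country
df["country"] = df["country"].fillna(df["country"].mode()[0])
# agent: 0 means "no travel agency ID"
df["agent"] = df["agent"].fillna(0)
# company: more than 80% missing, so drop the column
df.drop(['company'], axis=1, inplace=True)
```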
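The rule used above (drop a column when more than 80% of its values are missing) can be expressed as a small reusable step. `drop_sparse_columns` is a hypothetical helper and the toy frame is made up; on the real data only `company` exceeds the threshold.

```python
import numpy as np
import pandas as pd


def drop_sparse_columns(frame: pd.DataFrame, max_missing_rate: float = 0.8) -> pd.DataFrame:
    """Hypothetical helper: drop columns whose share of missing values exceeds max_missing_rate."""
    missing_rate = frame.isnull().mean()  # fraction of NaN in each column
    to_drop = missing_rate[missing_rate > max_missing_rate].index
    return frame.drop(columns=to_drop)


# Made-up values: children and agent are 1/6 missing, company is 5/6 missing
toy = pd.DataFrame({
    "children": [0, 1, np.nan, 0, 2, 0],
    "agent": [9, np.nan, 240, 9, 14, 9],
    "company": [np.nan, np.nan, np.nan, np.nan, np.nan, 40],
})
print(drop_sparse_columns(toy).columns.tolist())  # ['children', 'agent']
```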

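- Outlier handling

Inspecting the data shows three problems. The children and agent columns are stored as floating-point numbers, although both can only hold integers. Some bookings have adults, children and babies all equal to 0, i.e. no guests at all, which is not realistic. And the average daily rate (adr) contains one outlier above 5000. We handle these so that they do not distort the models built later.

```python
# children and agent cannot be fractional, so cast them to integers
df["children"] = df["children"].astype(int)
df["agent"] = df["agent"].astype(int)

# Per the dataset description, "Undefined" and "SC" both mean no meal package
df["meal"] = df["meal"].replace("Undefined", "SC")

# Drop rows with no guests (adults + children + babies == 0)
zero_guests = (df["adults"] + df["children"] + df["babies"]) == 0
df = df[~zero_guests]

# Inspect adr outliers with a box plot
sns.boxplot(x=df["adr"])

# Remove the adr outlier and renumber the rows 0..n-1
df = df[df["adr"] < 5000].reset_index(drop=True)
```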
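A box plot draws a point as an outlier when it lies more than 1.5 interquartile ranges (IQR) below the first quartile or above the third quartile, which is the default whisker length in seaborn and matplotlib. The sketch below computes those limits for a made-up sample of `adr` values; `iqr_bounds` is a hypothetical helper. On skewed data such as room rates, this rule usually flags many more points than the fixed `adr < 5000` cutoff used in the text.

```python
import pandas as pd


def iqr_bounds(values: pd.Series, whis: float = 1.5) -> tuple[float, float]:
    """Hypothetical helper: limits beyond which a box plot draws points as outliers."""
    q1, q3 = values.quantile(0.25), values.quantile(0.75)
    iqr = q3 - q1
    return q1 - whis * iqr, q3 + whis * iqr


# Made-up daily rates with one extreme value
adr = pd.Series([75.0, 98.0, 107.0, 110.5, 120.0, 135.0, 150.0, 5400.0])
low, high = iqr_bounds(adr)
outliers = adr[(adr < low) | (adr > high)]
print(low, high)          # 53.75 189.75
print(outliers.tolist())  # [5400.0]
```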

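2. Feature engineering

Standardizing numerical features

The numerical features are measured in different units and on very different scales (for example, lead_time in days versus adults as a head count), so features with large ranges can dominate the fit. We therefore standardize them to a common scale (zero mean, unit variance), which preserves the relative pattern within each feature.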

- First, list the numerical features

```python
# Numerical features to standardize
num_feature = ["lead_time", "stays_in_weekend_nights", "stays_in_week_nights",
               "adults", "children", "babies", "is_repeated_guest",
               "previous_cancellations", "previous_bookings_not_canceled",
               "booking_changes", "agent", "days_in_waiting_list", "adr",
               "required_car_parking_spaces", "total_of_special_requests"]
```

- Standardize the numerical features

```python
from sklearn.preprocessing import StandardScaler

sc_X = StandardScaler()
# Scale each numerical column to zero mean and unit variance
dff = sc_X.fit_transform(df[num_feature])
dff = pd.DataFrame(data=dff, columns=num_feature)
```
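Standardization maps each value x to z = (x − μ) / σ, where μ and σ are the column's mean and standard deviation. The sketch below checks, on a made-up column, that `StandardScaler` computes exactly this with the population standard deviation (ddof=0). Tree-based models such as the random forest used later are insensitive to this scaling; it matters mainly for distance- or gradient-based models.

```python
import numpy as np
from sklearn.preprocessing import StandardScaler

# Made-up lead_time values (days), shaped as one column
lead_time = np.array([[0.0], [7.0], [14.0], [85.0], [342.0]])

scaled = StandardScaler().fit_transform(lead_time)

# The same transformation by hand: z = (x - mean) / std, population std (ddof=0)
manual = (lead_time - lead_time.mean()) / lead_time.std()

print(np.allclose(scaled, manual))  # True
```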

Encoding categorical features

The scikit-learn models used here accept only numerical input, not string categories, so to keep the information in the categorical fields we convert them into numerical features the model can use.

- Extract the categorical features

```python
cat_feature = ["hotel", "meal", "country", "market_segment", "distribution_channel",
               "reserved_room_type", "assigned_room_type", "deposit_type", "customer_type"]
```

- One-hot encoding

```python
from sklearn.preprocessing import OneHotEncoder

one_hot = OneHotEncoder()
encoded = one_hot.fit_transform(df[cat_feature]).toarray()  # sparse matrix -> dense array
# get_feature_names() was removed in scikit-learn 1.2; get_feature_names_out() replaces it
data_temp = pd.DataFrame(encoded,
                         columns=one_hot.get_feature_names_out(cat_feature),
                         dtype='int32')

# Put the standardized numerical and one-hot categorical features side by side
data_onehot = pd.concat((dff, data_temp), axis=1)  # merge or join would also work
data_onehot.head()

# Attach the label by position, so it lines up even if df's index has gaps
data_onehot['is_canceled'] = df['is_canceled'].to_numpy()
```

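Dimensionality reduction

After one-hot encoding the categorical features, the dataset grows from the original 32 fields to 239 columns. The much higher dimensionality increases training time and can make the data sparse. To train the model efficiently without losing too much information, we use a decision tree to select features and keep 30 of them for training.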

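```python
from sklearn.tree import DecisionTreeClassifier  # suitable for classification models
from sklearn.feature_selection import SelectFromModel
import numpy as np

# Split the encoded data into features x and the class label y
x = data_onehot.drop(columns="is_canceled")
y = data_onehot["is_canceled"]

def descTree(x, y, n):
    # Decision tree model
    print('Using a decision tree model')
    tree = DecisionTreeClassifier().fit(x, y)
    # Keep the n most important features; threshold=-np.inf stops the default
    # mean-importance threshold from cutting the selection below n
    model1 = SelectFromModel(tree, prefit=True, max_features=n, threshold=-np.inf)
    d = model1.get_support(indices=True)
    print('Selected features:')
    print(d)
    return d

d = descTree(x, y, 30)
all_fea = pd.DataFrame(d)
```

Result:

```
Using a decision tree model
Selected features:
[  0   1   2   3   4   7   8   9  10  11  12  13  14  16  17  19  20  64
  72  77  80 156 204 211 214 220 223 232 236 237]
```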

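Feature selection returns the indices of the 30 selected columns; we use them to pick those columns and build the final training data.

```python
fea_num = list(d)
# Keep only the selected columns as the final training features
data_stand_fea = x.iloc[:, fea_num]
data_stand_fea
```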
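`SelectFromModel` treats `max_features` only as an upper bound on top of its `threshold` (by default the mean feature importance), so it can return fewer than n features. The sketch below, on synthetic data from `make_classification` rather than the hotel data, compares the default with `threshold=-np.inf`, which the scikit-learn documentation recommends when the selection should depend on `max_features` alone.

```python
import numpy as np
from sklearn.datasets import make_classification
from sklearn.feature_selection import SelectFromModel
from sklearn.tree import DecisionTreeClassifier

# Synthetic stand-in data: 40 features, only a few of them informative
X, y = make_classification(n_samples=500, n_features=40, n_informative=5,
                           random_state=0)
tree = DecisionTreeClassifier(random_state=0).fit(X, y)

# Default threshold (mean importance): at most 30 features, possibly fewer
default_sel = SelectFromModel(tree, prefit=True, max_features=30)
# threshold=-np.inf disables the cutoff, so exactly the top 30 are kept
top_n_sel = SelectFromModel(tree, prefit=True, max_features=30, threshold=-np.inf)

print(default_sel.get_support().sum(), top_n_sel.get_support().sum())
```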

3. Model training

- Split the dataset

```python
# 80/20 train/test split
X_train, X_test, y_train, y_test = train_test_split(data_stand_fea, y, test_size=0.2)
```
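The split above differs on every run and does not control the class balance. One option, sketched below on made-up labels with roughly 37% positives, is to pass `stratify=y` so that the training and test sets keep the same share of cancellations, together with `random_state` so the split is reproducible.

```python
import numpy as np
from sklearn.model_selection import train_test_split

# Made-up imbalanced labels (1 = cancelled) and a dummy feature column
rng = np.random.default_rng(0)
y = (rng.random(1000) < 0.37).astype(int)
X = np.arange(1000).reshape(-1, 1)

X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.2, stratify=y, random_state=0)

print(len(X_train), len(X_test))  # 800 200
# The cancellation share is (almost) identical in both parts
print(round(y.mean(), 3), round(y_train.mean(), 3), round(y_test.mean(), 3))
```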

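- Train a RandomForest model

```python
clf3 = RandomForestClassifier(n_estimators=160,     # number of trees
                              max_features=0.4,     # fraction of features tried at each split
                              min_samples_split=2,
                              n_jobs=-1,            # use all CPU cores
                              random_state=0)
clf3.fit(X_train, y_train)
```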

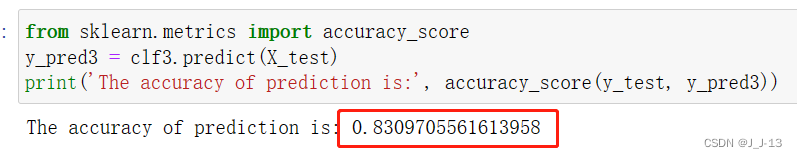

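- Model prediction and evaluation

```python
from sklearn.metrics import accuracy_score

y_pred3 = clf3.predict(X_test)
print('The accuracy of prediction is:', accuracy_score(y_test, y_pred3))
```

The random forest reaches an accuracy of 0.83. For a first training run this is a reasonable result; accuracy could be improved later by tuning the model's hyperparameters or by trying more complex models. Here we do a simple round of hyperparameter tuning.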
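Accuracy is the share of correct predictions, (TP + TN) / (TP + TN + FP + FN). It does not show how the errors split between missed cancellations (FN) and false alarms (FP), so a confusion matrix and per-class precision/recall are often reported alongside it. The sketch below uses made-up labels, not the model's actual predictions.

```python
from sklearn.metrics import accuracy_score, classification_report, confusion_matrix

# Made-up labels: 1 = cancelled, 0 = not cancelled
y_true = [0, 0, 0, 0, 0, 0, 1, 1, 1, 1]
y_pred = [0, 0, 0, 0, 1, 0, 1, 1, 0, 1]

# For binary labels the matrix is [[TN, FP], [FN, TP]]
tn, fp, fn, tp = confusion_matrix(y_true, y_pred).ravel()
print(tn, fp, fn, tp)                   # 5 1 1 3
print((tp + tn) / (tp + tn + fp + fn))  # 0.8
print(accuracy_score(y_true, y_pred))   # 0.8
print(classification_report(y_true, y_pred, target_names=["kept", "cancelled"]))
```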

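- Hyperparameter tuning

Hyperparameters can be tuned with GridSearchCV, but keep the number of candidate values small: every extra value multiplies the number of models to fit, and the search quickly becomes slow. For this model we tune n_estimators (the number of decision trees), max_depth (the maximum depth of each tree, a form of pre-pruning) and max_features (the maximum number of features considered at each split).

```python
rf = RandomForestClassifier()
# Candidate values for each hyperparameter
# ("auto" was removed in scikit-learn 1.3; for classifiers it meant "sqrt")
param_dict = {"n_estimators": [100, 150, 200],
              "max_depth": [3, 5, 8, 10, 15],
              "max_features": ["sqrt", "log2"]}
# Grid search with 3-fold cross-validation
rf_model = GridSearchCV(rf, param_grid=param_dict, cv=3, n_jobs=-1)
# The features are already standardized and encoded, so fit the search directly
rf_model.fit(X_train, y_train)

# Best cross-validated score and the parameters that achieved it
rf_model.best_score_
rf_model.best_params_
```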
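To see why the grid should stay small, count the fits: GridSearchCV trains one model per parameter combination per fold, plus, with the default `refit=True`, one final model on the full training set. For the search space in the text that is 3 × 5 × 2 = 30 candidates and 30 × 3 = 90 cross-validation fits. The sketch below verifies the count with `ParameterGrid`, using "sqrt" in place of "auto" (which recent scikit-learn versions reject); the count is the same either way.

```python
from sklearn.model_selection import ParameterGrid

# The search space from the text, with "sqrt" in place of "auto"
param_dict = {"n_estimators": [100, 150, 200],
              "max_depth": [3, 5, 8, 10, 15],
              "max_features": ["sqrt", "log2"]}
cv = 3

n_candidates = len(ParameterGrid(param_dict))  # 3 * 5 * 2 combinations
n_fits = n_candidates * cv                     # each combination is fitted once per fold
print(n_candidates, n_fits)                    # 30 90
```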

Copyright belongs to the original author, J_J-13. If this infringes any rights, please contact us and it will be removed.