Qualcomm® AI Engine Direct User Manual (17)

6.3 Execution

qnn-net-run

The qnn-net-run tool loads a model library compiled from the output of a QNN converter and runs it on a specific backend.

DESCRIPTION:
------------
Example application demonstrating how to load and execute a neural network
using QNN APIs.

REQUIRED ARGUMENTS:
-------------------
  --model             <FILE>      Path to the model containing a QNN network.
                                  To compose multiple graphs, use a comma-separated list of
                                  model.so files. The syntax is
                                  <qnn_model_name_1.so>,<qnn_model_name_2.so>.

  --backend           <FILE>      Path to a QNN backend to execute the model.

  --input_list        <FILE>      Path to a file listing the inputs for the network.
                                  If there are multiple graphs in model.so, this has
                                  to be a comma-separated list of input list files.
                                  When multiple graphs are present, to skip execution of a graph use
                                  "__" (double underscore without quotes) as the file name in the
                                  comma-separated list of input list files.

  --retrieve_context  <VAL>       Path to a cached binary from which to load a saved
                                  context and execute graphs. --retrieve_context and
                                  --model are mutually exclusive. Only one of the options
                                  can be specified at a time.

OPTIONAL ARGUMENTS:
-------------------
  --model_prefix                  Function prefix to use when loading <qnn_model_name.so>.
                                  Default: QnnModel

  --debug                         Specifies that output from all layers of the network
                                  will be saved. This option cannot be used when loading
                                  a saved context through the --retrieve_context option.

  --output_dir        <DIR>       The directory to save output to. Defaults to ./output.

  --use_native_output_files       Specifies that the output files will be generated in the data
                                  type native to the graph. If not specified, output files will
                                  be generated in floating point.

  --use_native_input_files        Specifies that the input files will be parsed in the data
                                  type native to the graph. If not specified, input files will
                                  be parsed in floating point. Note that options --use_native_input_files
                                  and --native_input_tensor_names are mutually exclusive.
                                  Only one of the options can be specified at a time.

  --native_input_tensor_names <VAL>
                                  Provide a comma-separated list of input tensor names
                                  for which the input files would be read/parsed in native format.
                                  Note that options --use_native_input_files and
                                  --native_input_tensor_names are mutually exclusive.
                                  Only one of the options can be specified at a time.
                                  The syntax is: graphName0:tensorName0,tensorName1;graphName1:tensorName0,tensorName1

  --op_packages       <VAL>       Provide a comma-separated list of op packages, interface
                                  providers, and, optionally, targets to register. Valid values
                                  for target are CPU and HTP. The syntax is:
                                  op_package_path:interface_provider:target[,op_package_path:interface_provider:target...]

  --profiling_level   <VAL>       Enable profiling. Valid values:
                                    1. basic:    captures execution and init time.
                                    2. detailed: in addition to basic, captures per-op timing
                                                 for execution, if a backend supports it.

  --perf_profile      <VAL>       Specifies the performance profile to be used. Valid settings are
                                  low_balanced, balanced, default, high_performance,
                                  sustained_high_performance, burst, low_power_saver,
                                  power_saver, high_power_saver, extreme_power_saver
                                  and system_settings.
                                  Note: the perf_profile argument is now deprecated for the
                                  HTP backend; specify the performance profile
                                  through the backend config instead. Please refer to the config_file
                                  backend extensions usage section below for more details.

  --config_file       <FILE>      Path to a JSON config file. The config file currently
                                  supports options related to backend extensions,
                                  context priority and graph configs. Please refer to the SDK
                                  documentation for more details.

  --log_level         <VAL>       Specifies the max logging level to be set. Valid settings:
                                  error, warn, info, debug, and verbose.

  --shared_buffer                 Specifies creation of shared buffers for graph I/O between the application
                                  and the device/coprocessor associated with a backend directly.
                                  This option is currently supported on Android only.

  --synchronous                   Specifies that graphs should be executed synchronously rather than asynchronously.
                                  If a backend does not support asynchronous execution, this flag is unnecessary.

  --num_inferences    <VAL>       Specifies the number of inferences. Loops over the input_list until
                                  the number of inferences has transpired.

  --duration          <VAL>       Specifies the duration of the graph execution in seconds.
                                  Loops over the input_list until this amount of time has transpired.

  --keep_num_outputs  <VAL>       Specifies the number of outputs to be saved.
                                  Once the number of outputs reaches the limit, subsequent outputs are discarded.

  --batch_multiplier  <VAL>       Specifies the value by which the batch dimension of the input and output
                                  tensors will be multiplied. The modified input and output tensors are used only
                                  when executing graphs. Composed graphs still use the tensor dimensions from the model.

  --timeout           <VAL>       Specifies the timeout for graph execution in microseconds. Note that
                                  using this option with a backend that does not support timeout signals results in an error.

  --max_input_cache_tensor_sets <VAL>
                                  Specifies the maximum number of input tensor sets that can be cached.
                                  Use value "-1" to cache all the input tensors created.
                                  Note that options --max_input_cache_tensor_sets and
                                  --max_input_cache_size_mb are mutually exclusive.
                                  Only one of the options can be specified at a time.

  --max_input_cache_size_mb <VAL>
                                  Specifies the maximum cache size in megabytes (MB).
                                  Note that options --max_input_cache_tensor_sets and
                                  --max_input_cache_size_mb are mutually exclusive.
                                  Only one of the options can be specified at a time.

  --set_output_tensors <VAL>      Provide a comma-separated list of intermediate output tensor names for which
                                  the outputs will be written in addition to the final graph output tensors.
                                  Note that options --debug and --set_output_tensors are mutually exclusive.
                                  Only one of the options can be specified at a time.
                                  Also note that this option cannot be used when the graph is retrieved from a
                                  context binary, since the graph is already finalized when retrieved from a context binary.
                                  The syntax is: graphName0:tensorName0,tensorName1;graphName1:tensorName0,tensorName1

  --version                       Print the QNN SDK version.

  --help                          Show this help message.
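The help text above encodes several composition rules:

- comma-separated input lists, with "__" marking a graph to skip;
- the graphName:tensor,...;graphName:... syntax;
- several mutually exclusive option pairs.

Below is a minimal Python sketch, assuming you assemble the qnn-net-run command line from a script. The helper names (compose_input_lists, format_tensor_spec, find_conflicts) are our own and are not part of the QNN SDK. The file and tensor names in the usage part are hypothetical.

```python
from typing import Dict, List, Optional, Sequence, Tuple

SKIP_GRAPH = "__"  # file name that tells qnn-net-run to skip a graph

# Mutually exclusive pairs, as listed in the help text above.
MUTUALLY_EXCLUSIVE: List[Tuple[str, str]] = [
    ("--model", "--retrieve_context"),
    ("--use_native_input_files", "--native_input_tensor_names"),
    ("--max_input_cache_tensor_sets", "--max_input_cache_size_mb"),
    ("--debug", "--set_output_tensors"),
]
# Options the help text says cannot be used with a retrieved context.
NOT_WITH_CONTEXT = ("--debug", "--set_output_tensors")


def compose_input_lists(per_graph: Sequence[Optional[str]]) -> str:
    """One input-list file per graph; None marks a graph to skip."""
    return ",".join(SKIP_GRAPH if path is None else path for path in per_graph)


def format_tensor_spec(spec: Dict[str, List[str]]) -> str:
    """Build graphName0:t0,t1;graphName1:t0 for --native_input_tensor_names
    or --set_output_tensors."""
    parts = []
    for graph, tensors in spec.items():
        if not tensors:
            raise ValueError(f"graph {graph!r} lists no tensors")
        parts.append(f"{graph}:{','.join(tensors)}")
    return ";".join(parts)


def find_conflicts(argv: Sequence[str]) -> List[Tuple[str, str]]:
    """Return option pairs in argv that the help text says cannot be combined."""
    flags = {arg for arg in argv if arg.startswith("--")}
    conflicts = [pair for pair in MUTUALLY_EXCLUSIVE if set(pair) <= flags]
    # --debug / --set_output_tensors are also invalid with --retrieve_context.
    if "--retrieve_context" in flags:
        conflicts += [("--retrieve_context", opt) for opt in NOT_WITH_CONTEXT if opt in flags]
    return conflicts


if __name__ == "__main__":
    argv = [
        "qnn-net-run",
        "--model", "libQnnModel.so",
        "--backend", "libQnnCpu.so",
        "--input_list", compose_input_lists(["graph0_inputs.txt", None]),
        "--set_output_tensors", format_tensor_spec({"graph0": ["conv1", "relu1"]}),
    ]
    print(find_conflicts(argv))  # prints []
```

A checker like find_conflicts only mirrors the rules stated in the help text. qnn-net-run itself remains the authority on which combinations it rejects.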

See the <QNN_SDK_ROOT>/examples/QNN/NetRun folder for reference examples of how to use the qnn-net-run tool.

Typical arguments:

--backend: The appropriate argument depends on the target and backend you want to run on.

Android (aarch64): <QNN_SDK_ROOT>/lib/aarch64-android/

- CPU - libQnnCpu.so

- GPU - libQnnGpu.so

- HTA - libQnnHta.so

- DSP (Hexagon v65) - libQnnDspV65Stub.so

- DSP (Hexagon v66) - libQnnDspV66Stub.so

- DSP - libQnnDsp.so

- HTP (Hexagon v68) - libQnnHtp.so

- [Deprecated] HTP Alternate Prepare (Hexagon v68) - libQnnHtpAltPrepStub.so

- Saver - libQnnSaver.so

Linux x86: <QNN_SDK_ROOT>/lib/x86_64-linux-clang/

- CPU - libQnnCpu.so

- HTP (Hexagon v68) - libQnnHtp.so

- Saver - libQnnSaver.so

Windows x86: <QNN_SDK_ROOT>/lib/x86_64-windows-msvc/

- CPU - QnnCpu.dll

- Saver - QnnSaver.dll

WoS: <QNN_SDK_ROOT>/lib/aarch64-windows-msvc/

- CPU - QnnCpu.dll

- DSP (Hexagon v66) - QnnDspV66Stub.dll

- DSP - QnnDsp.dll

- HTP (Hexagon v68) - QnnHtp.dll

- Saver - QnnSaver.dll

Note

On the x86_64 platform, the Hexagon-based backend libraries are emulations.
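The list above is effectively a lookup table from platform and backend to a library file. The sketch below encodes part of it in Python to build the --backend value.

- The short keys such as "cpu" and "htp" are our own labels. Only the directory and file names come from the list.
- The deprecated library and the Hexagon-version-specific stub libraries are omitted for brevity.
- Paths are joined with forward slashes. Treat that as an assumption for the Windows targets.

```python
from pathlib import PurePosixPath

# Library directory under <QNN_SDK_ROOT>/lib -> {our backend label: library file}
BACKEND_LIBS = {
    "aarch64-android": {
        "cpu": "libQnnCpu.so", "gpu": "libQnnGpu.so", "hta": "libQnnHta.so",
        "dsp": "libQnnDsp.so", "htp": "libQnnHtp.so", "saver": "libQnnSaver.so",
    },
    "x86_64-linux-clang": {
        "cpu": "libQnnCpu.so", "htp": "libQnnHtp.so", "saver": "libQnnSaver.so",
    },
    "x86_64-windows-msvc": {"cpu": "QnnCpu.dll", "saver": "QnnSaver.dll"},
    "aarch64-windows-msvc": {
        "cpu": "QnnCpu.dll", "dsp": "QnnDsp.dll", "htp": "QnnHtp.dll", "saver": "QnnSaver.dll",
    },
}


def backend_path(sdk_root: str, platform: str, backend: str) -> str:
    """Return <sdk_root>/lib/<platform>/<library> for use with --backend."""
    libs = BACKEND_LIBS[platform]
    if backend not in libs:
        raise KeyError(f"no {backend!r} backend listed for {platform}")
    return str(PurePosixPath(sdk_root, "lib", platform, libs[backend]))


if __name__ == "__main__":
    print(backend_path("/opt/qnn", "x86_64-linux-clang", "htp"))
```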

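--input_list: This argument provides a file containing the paths to the input files used for graph execution. Input files can be specified using the following format: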

<input_layer_name>:=<input_layer_path>[<space><input_layer_name>:=<input_layer_path>]
[<input_layer_name>:=<input_layer_path>[<space><input_layer_name>:=<input_layer_path>]]
...

Below is an example containing 3 sets of inputs, with layer names "Input_1" and "Input_2" and files located in the relative path "Placeholder_1/real_input_inputs_1/":
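As a hedged illustration of that layout, the sketch below generates an input list in the format above for 3 input sets with layers Input_1 and Input_2 under Placeholder_1/real_input_inputs_1/. The .raw file names are hypothetical placeholders, and format_input_list is our own helper.

```python
from pathlib import Path
from typing import Dict, List


def format_input_list(input_sets: List[Dict[str, str]]) -> str:
    """One line per input set: <layer>:=<path> entries separated by spaces."""
    lines = []
    for input_set in input_sets:
        for layer, path in input_set.items():
            if " " in path:
                # Spaces separate entries in this format, so such a path would be ambiguous.
                raise ValueError(f"path for {layer} contains a space: {path!r}")
        lines.append(" ".join(f"{layer}:={path}" for layer, path in input_set.items()))
    return "\n".join(lines) + "\n"


BASE = "Placeholder_1/real_input_inputs_1"
# Hypothetical file names: one file per layer per input set.
SETS = [
    {"Input_1": f"{BASE}/input_1_{i}.raw", "Input_2": f"{BASE}/input_2_{i}.raw"}
    for i in range(3)
]

if __name__ == "__main__":
    Path("input_list.txt").write_text(format_input_list(SETS))
```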

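Note: If the model's batch dimension is greater than 1, the number of batch elements in each input file must either match the batch dimension specified in the model or be 1. In the latter case, qnn-net-run combines multiple lines into a single input tensor.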
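To make the batch note concrete, suppose a model has batch dimension 4 and each input file holds a single batch element. qnn-net-run then combines lines into one batched input tensor.

The sketch below makes two assumptions:

- consecutive lines are the ones combined, so under that assumption a 10-line input list yields 2 full batches;
- the handling of a trailing partial group is not stated here, so the helper returns it separately instead of guessing.

The function name group_for_batch is hypothetical.

```python
from typing import List, Sequence, Tuple


def group_for_batch(lines: Sequence[str], batch: int) -> Tuple[List[List[str]], List[str]]:
    """Group batch-1 input-list lines into batches of `batch` consecutive lines.

    Returns (full_groups, leftover). Combining consecutive lines is an
    assumption of this sketch; the leftover is returned, not interpreted.
    """
    if batch < 1:
        raise ValueError("batch must be >= 1")
    full = (len(lines) // batch) * batch
    groups = [list(lines[i:i + batch]) for i in range(0, full, batch)]
    return groups, list(lines[full:])


if __name__ == "__main__":
    input_lines = [f"input:=sample_{i}.raw" for i in range(10)]  # hypothetical lines
    groups, leftover = group_for_batch(input_lines, 4)
    print(len(groups), len(leftover))  # 2 2
```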

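--op_packages: This argument is only needed if you are using custom op packages. Native QNN operations are already included in the backend libraries.

When using custom op packages, each op package you provide requires a colon-separated command-line argument containing the path to the op package shared library (.so) file and the name of the interface provider, in the format <op_package_path>:<interface_provider>.

The interface_provider argument must be the name of a function in the op package library that satisfies the QnnOpPackage_InterfaceProvider_t interface. In the skeleton code created by qnn-op-package-generator, this function is named <package_name>InterfaceProvider.

For more information, see Generating Op Packages.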
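The sketch below assembles the --op_packages value from (path, interface provider, optional target) entries. It follows the syntax in the help text and the <package_name>InterfaceProvider naming convention for generated skeleton code. The package name MyOpPackage, its library path, and the helper op_packages_arg are hypothetical.

```python
from typing import Iterable, Optional, Tuple

VALID_TARGETS = {"CPU", "HTP"}  # valid target values per the help text


def op_packages_arg(packages: Iterable[Tuple[str, str, Optional[str]]]) -> str:
    """Build op_package_path:interface_provider[:target][,...]."""
    entries = []
    for path, provider, target in packages:
        if target is None:
            entries.append(f"{path}:{provider}")
        elif target in VALID_TARGETS:
            entries.append(f"{path}:{provider}:{target}")
        else:
            raise ValueError(f"invalid target {target!r}; expected one of {sorted(VALID_TARGETS)}")
    return ",".join(entries)


if __name__ == "__main__":
    package = "MyOpPackage"  # hypothetical package name
    print(op_packages_arg([
        (f"libs/x86_64-linux-clang/lib{package}.so", f"{package}InterfaceProvider", "CPU"),
    ]))
```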

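--config_file: This argument is only needed if you need to specify context priority or provide backend-extension-related parameters. These parameters are specified through a JSON file. The template of the JSON file is shown below: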

{
    "backend_extensions" :
    {
        "shared_library_path" : "path_to_shared_library",
        "config_file_path" : "path_to_config_file"
    },
    "context_configs" :
    {
        "context_priority" : "low | normal | normal_high | high",
        "async_execute_queue_depth" : uint32_value,
        "enable_graphs" : ["<graph_name_1>", "<graph_name_2>", ...],
        "memory_limit_hint" : uint64_value,
        "is_persistent_binary" : boolean_value
    },
    "graph_configs" : [
        {
            "graph_name" : "graph_name_1",
            "graph_priority" : "low | normal | normal_high | high"
        }
    ]
}

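All options in the JSON file are optional.

- context_priority specifies the priority of the context as a context config.
- async_execute_queue_depth specifies the number of executions that can be in the queue at a given time.
- enable_graphs enables graph selection when using a context binary.
- memory_limit_hint sets the peak memory limit hint (in MB) for a deserialized context.
- is_persistent_binary indicates that the context binary pointer passed to QnnContext_createFromBinary remains available until QnnContext_free is called.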

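Graph selection: allows you to specify a subset of the graphs in a context to load and execute. If enable_graphs is specified, only those graphs are loaded. If a graph name is selected but does not exist, an error is raised. If enable_graphs is not specified, or is passed as an empty list, the default behavior of loading all graphs in the context applies.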
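The graph-selection rules above can be modeled in a few lines. This is a sketch of the described behavior, not QNN's implementation. The function select_graphs is hypothetical, and so is the choice to return graphs in context order.

```python
from typing import List, Optional, Sequence


def select_graphs(context_graphs: Sequence[str],
                  enable_graphs: Optional[Sequence[str]] = None) -> List[str]:
    """Return the graphs to load from a context binary, in context order.

    - enable_graphs missing or empty: load every graph in the context.
    - otherwise: load only the named graphs; an unknown name is an error.
    """
    if not enable_graphs:
        return list(context_graphs)
    missing = [name for name in enable_graphs if name not in context_graphs]
    if missing:
        raise ValueError(f"graphs not present in context: {missing}")
    wanted = set(enable_graphs)
    return [name for name in context_graphs if name in wanted]
```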

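If the backend supports asynchronous execution, graph_configs can be used to specify the asynchronous execution order and depth. Each set of graph configs must be specified together with a graph name.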
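Because the file passed to --config_file is plain JSON, it can be generated rather than hand-written. This avoids hand-editing mistakes such as a missing comma between objects.

A minimal sketch using Python's json module follows:

- It covers only the keys in the template above.
- Arguments left as None are omitted, since every option is optional.
- The helper build_config, the graph name, and the output file name netrun_config.json are hypothetical.

```python
import json
from typing import Dict, List, Optional

PRIORITIES = {"low", "normal", "normal_high", "high"}


def build_config(context_priority: Optional[str] = None,
                 async_execute_queue_depth: Optional[int] = None,
                 enable_graphs: Optional[List[str]] = None,
                 memory_limit_hint: Optional[int] = None,  # MB
                 is_persistent_binary: Optional[bool] = None,
                 graph_priorities: Optional[Dict[str, str]] = None) -> dict:
    """Assemble a qnn-net-run --config_file dict; None values are omitted."""
    for prio in [context_priority, *(graph_priorities or {}).values()]:
        if prio is not None and prio not in PRIORITIES:
            raise ValueError(f"invalid priority {prio!r}")
    raw_context = {
        "context_priority": context_priority,
        "async_execute_queue_depth": async_execute_queue_depth,
        "enable_graphs": enable_graphs,
        "memory_limit_hint": memory_limit_hint,
        "is_persistent_binary": is_persistent_binary,
    }
    context = {key: value for key, value in raw_context.items() if value is not None}
    config: dict = {}
    if context:
        config["context_configs"] = context
    if graph_priorities:
        # Each graph config carries its graph name, as the text above requires.
        config["graph_configs"] = [
            {"graph_name": name, "graph_priority": prio}
            for name, prio in graph_priorities.items()
        ]
    return config


if __name__ == "__main__":
    cfg = build_config(context_priority="high", enable_graphs=["graph_name_1"],
                       graph_priorities={"graph_name_1": "normal_high"})
    with open("netrun_config.json", "w") as f:
        json.dump(cfg, f, indent=4)
```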

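backend_extensions is used to exercise custom options in a particular backend. If needed, this is done by providing an extension shared library (.so) and a config file. It is also required to enable the various performance modes, which can be exercised through the backend config. Currently, HTP supports this through the shared library libQnnHtpNetRunExtensions.so, and DSP through libQnnDspNetRunExtensions.so. For the different custom options that can be enabled with HTP, see HTP Backend Extensions.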

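--shared_buffer: This argument is only needed to instruct qnn-net-run to use shared buffers for graph input and output tensor data in zero-copy use cases. In these cases, a device/coprocessor is associated with a particular backend, for example the DSP with the HTP backend. This option is supported on Android only.

qnn-net-run implements this feature using the rpcmem APIs, which create shared buffers using the ION/DMA-BUF memory allocator on Android (available through the shared library libcdsprpc.so). In addition to specifying this option, you must append the directory containing libcdsprpc.so to the LD_LIBRARY_PATH variable so that qnn-net-run can discover it: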

export LD_LIBRARY_PATH=$LD_LIBRARY_PATH:/vendor/lib64

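Running a quantized model on the HTP backend with qnn-net-run

The HTP backend currently allows an optimized version of a quantized QNN model to be finalized/created offline on a Linux development host (using the x86_64-linux-clang backend library). The finalized model is then executed on the device (using the hexagon-v68 backend library).

First, set up the environment by following the instructions in the Setup section. Next, build a QNN model library from your network using the artifacts produced by one of the QNN converters; see Building Example Model for reference. Finally, use the qnn-context-binary-generator utility to generate a serialized representation of the finalized graph, so that the serialized binary can be executed on the device.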

# Generate the optimized serialized representation of the QNN model on the Linux development host.
# <path_to_model_library>/libQnnModel.so is a quantized QNN model built for x86_64-linux-clang.
$ qnn-context-binary-generator --binary_file qnngraph.serialized.bin \
    --model <path_to_model_library>/libQnnModel.so \
    --backend ${QNN_SDK_ROOT}/lib/x86_64-linux-clang/libQnnHtp.so \
    --output_dir <output_dir_for_result_and_qnngraph_serialized_binary>

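To use the generated serialized representation of the finalized graph (qnngraph.serialized.bin), make sure the following binaries are available on the Android device:

- libQnnHtpV68Stub.so (ARM)

- libQnnHtpPrepare.so (ARM)

- libQnnModel.so (ARM)

- libQnnHtpV68Skel.so (cDSP v68)

- qnngraph.serialized.bin (the serialized binary generated on the Linux development host)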
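Before pushing to the device, it can help to stage these files in one directory on the host and check that nothing is missing.

The sketch below assumes such a single staging directory, which is our own convention. On the device, the ARM libraries and the cDSP skel library may need to live in different locations. The helper missing_files and the directory name htp_staging are hypothetical.

```python
from pathlib import Path
from typing import List, Sequence

REQUIRED_FILES = (
    "libQnnHtpV68Stub.so",      # ARM
    "libQnnHtpPrepare.so",      # ARM
    "libQnnModel.so",           # ARM
    "libQnnHtpV68Skel.so",      # cDSP v68
    "qnngraph.serialized.bin",  # context binary generated on the Linux host
)


def missing_files(staging_dir: str, required: Sequence[str] = REQUIRED_FILES) -> List[str]:
    """Return the required file names that are not present in staging_dir."""
    root = Path(staging_dir)
    return [name for name in required if not (root / name).is_file()]


if __name__ == "__main__":
    missing = missing_files("htp_staging")  # hypothetical staging directory
    print("missing:", missing or "none")
```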

See the script <QNN_SDK_ROOT>/examples/QNN/NetRun/android/android-qnn-net-run.sh for a reference on how to use the qnn-net-run tool on an Android device.

# Run the optimized graph on HTP target
$ qnn-net-run --retrieve_context qnngraph.serialized.bin \
    --backend <path_to_model_library>/libQnnHtp.so \
    --output_dir <output_dir_for_result> \
    --input_list <path_to_input_list.txt>

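Running a float model on the HTP backend with qnn-net-run

The QNN HTP backend supports running float32 models on select Qualcomm SoCs.

First, set up the environment by following the instructions in the Setup section. Next, build a QNN model library from your network using the artifacts produced by one of the QNN converters; see Building Example Model for reference.

Finally, configure the backend_extensions parameter through a JSON file and set the custom options for the HTP backend. Pass this file to qnn-net-run with the --config_file argument. backend_extensions takes two parameters: an extension shared library (.so) (for HTP, use libQnnHtpNetRunExtensions.so) and a backend config file.

The following is a template for the JSON file: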

{
    "backend_extensions" :
    {
        "shared_library_path" : "path_to_shared_library",
        "config_file_path" : "path_to_config_file"
    }
}

For the HTP backend extension config, you can set "vtcm_mb", "fp16_relaxed_precision", and "graph_names" through the config file.

Here is an example of the config file:

{
    "graphs":
    {
        "vtcm_mb": 8,                    // Provides performance infrastructure configuration options that are memory specific.
                                         // It is optional and default value is 0 which means this value will not be set.
        "fp16_relaxed_precision": true,  // Ensures that operations will run with relaxed precision math i.e. float16 math

        "graph_names": ["qnn_model"]     // Provide the list of names of the graph for the inference as specified when using qnn converter tools
                                         // "qnn_model" must be the name of the .cpp file generated during the model conversion (without the .cpp file extension)
        .....
    }
}

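Note that starting with SDK version 2.20, the configuration structure above will be deprecated. The newly supported configuration is shown below: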

{
    "graphs": [
        {
            "vtcm_mb": 8,
            "fp16_relaxed_precision": true,
            "graph_names": ["qnn_model"]
        },
        {
            .....   // Other graph object
        }
    ]
}
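Migrating an existing backend config from the deprecated layout to the new one amounts to wrapping the single "graphs" object in an array. Below is a minimal sketch. It assumes the config has already been parsed into a dict; the "//" comments in the examples above are explanatory and would have to be removed for a standard JSON parser. The function name upgrade_htp_backend_config is hypothetical.

```python
import json


def upgrade_htp_backend_config(config: dict) -> dict:
    """Convert {"graphs": {...}} (pre-2.20 layout) to {"graphs": [{...}]}.

    Configs already using the array layout are returned unchanged; the
    input dict is not modified.
    """
    graphs = config.get("graphs")
    if isinstance(graphs, dict):
        return {**config, "graphs": [graphs]}
    return config


if __name__ == "__main__":
    old = json.loads('{"graphs": {"vtcm_mb": 8, "fp16_relaxed_precision": true, '
                     '"graph_names": ["qnn_model"]}}')
    print(json.dumps(upgrade_htp_backend_config(old), indent=4))
```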

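Note

"fp16_relaxed_precision" is the key configuration for running QNN float models on the HTP float runtime. HTP graph configurations such as "fp16_relaxed_precision" and "vtcm_mb" are applied only when at least one graph name is provided in the backend extension config.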
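Putting the pieces of this section together, the float-model setup needs two JSON files:

- the backend extension config, in the newer array layout;
- the file passed to --config_file, which points to that config and to libQnnHtpNetRunExtensions.so.

The sketch below builds both as Python dicts. It refuses an empty graph list, since the graph configs would otherwise not be applied. The helper htp_float_configs and the file names htp_backend_config.json and netrun_config.json are hypothetical. Depending on your library search path, shared_library_path may need to be a full path.

```python
import json
from pathlib import Path
from typing import Sequence, Tuple


def htp_float_configs(graph_names: Sequence[str], backend_config_path: str,
                      vtcm_mb: int = 8) -> Tuple[dict, dict]:
    """Return (netrun_config, backend_config) for an fp16-relaxed HTP run."""
    if not graph_names:
        # HTP graph configs are only applied when at least one graph name is given.
        raise ValueError("graph_names must list at least one graph")
    backend_config = {
        "graphs": [{
            "vtcm_mb": vtcm_mb,
            "fp16_relaxed_precision": True,
            "graph_names": list(graph_names),
        }]
    }
    netrun_config = {
        "backend_extensions": {
            # Bare library name; a full path may be needed on your system.
            "shared_library_path": "libQnnHtpNetRunExtensions.so",
            "config_file_path": backend_config_path,
        }
    }
    return netrun_config, backend_config


if __name__ == "__main__":
    netrun, backend = htp_float_configs(["qnn_model"], "htp_backend_config.json")
    Path("htp_backend_config.json").write_text(json.dumps(backend, indent=4))
    Path("netrun_config.json").write_text(json.dumps(netrun, indent=4))
```

The resulting netrun_config.json would then be the file given to qnn-net-run via --config_file.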

See the script <QNN_SDK_ROOT>/examples/QNN/NetRun/android/android-qnn-net-run.sh for a reference on how to use the qnn-net-run tool on an Android device.

# Run the optimized graph on HTP target
# <path_to_model_library>/libQnnModel.so is a float QNN model built for x86_64-linux-clang.
$ qnn-net-run --model <path_to_model_library>/libQnnModel.so \
    --backend ${QNN_SDK_ROOT}/lib/x86_64-linux-clang/libQnnHtp.so \
    --config_file <path_to_JSON_file.json> \
    --output_dir <output_dir_for_result> \
    --input_list <path_to_input_list.txt>

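qnn-throughput-net-run

The qnn-throughput-net-run tool is used to exercise the execution of multiple models on a QNN backend, or on different backends, in a multi-threaded fashion. It allows models to be executed repeatedly on the specified backends for a specified duration or number of iterations.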

Usage:
------
qnn-throughput-net-run [--config <config_file>.json] [--output <results>.json]

REQUIRED argument(s):
  --config  <FILE>.json    Path to the json config file.

OPTIONAL argument(s):
  --output  <FILE>.json    Specify the json file used to save the performance test results.

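Configuration JSON file:

qnn-throughput-net-run takes a config file as input to run models on backends. The config JSON file contains four required objects: backends, models, contexts, and testCase.

The following is an example JSON config file. See the sections below for details on the four config objects: backends, models, contexts, and testCase.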

{
    "backends": [
        {
            "backendName": "cpu_backend",
            "backendPath": "libQnnCpu.so",
            "profilingLevel": "BASIC",
            "backendExtensions": "libQnnHtpNetRunExtensions.so",
            "perfProfile": "high_performance"
        },
        {
            "backendName": "gpu_backend",
            "backendPath": "libQnnGpu.so",
            "profilingLevel": "OFF"
        }
    ],
    "models": [
        {
            "modelName": "model_1",
            "modelPath": "libqnn_model_1.so",
            "loadFromCachedBinary": false,
            "inputPath": "model_1-input_list.txt",
            "inputDataType": "FLOAT",
            "postProcessor": "MSE",
            "outputPath": "model_1-output",
            "outputDataType": "FLOAT_ONLY",
            "saveOutput": "NATIVE_ALL",
            "groundTruthPath": "model_1-golden_list.txt"
        },
        {
            "modelName": "model_2",
            "modelPath": "libqnn_model_2.so",
            "loadFromCachedBinary": false,
            "inputPath": "model_2-input_list.txt",
            "inputDataType": "FLOAT",
            "postProcessor": "MSE",
            "outputPath": "model_2-output",
            "outputDataType": "FLOAT_ONLY",
            "saveOutput": "NATIVE_LAST"
        }
    ],
    "contexts": [
        { "contextName": "cpu_context_1" },
        { "contextName": "gpu_context_1" }
    ],
    "testCase": {
        "iteration": 5,
        "logLevel": "error",
        "threads": [
            {
                "threadName": "cpu_thread_1",
                "backend": "cpu_backend",
                "context": "cpu_context_1",
                "model": "model_1",
                "interval": 10,
                "loopUnit": "count",
                "loop": 1
            },
            {
                "threadName": "gpu_thread_1",
                "backend": "gpu_backend",
                "context": "gpu_context_1",
                "model": "model_2",
                "interval": 0,
                "loopUnit": "count",
                "loop": 10
            }
        ]
    }
}
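Each thread in testCase refers to a backend, a context, and a model by name, so a typo in one of those names is easy to miss in a long config. The sketch below is our own consistency check, not part of the SDK. It only uses key names visible in the example above: backendName, contextName, modelName, and each thread's backend, context and model fields. The helper name check_throughput_config is hypothetical.

```python
import json
from typing import List


def check_throughput_config(cfg: dict) -> List[str]:
    """Return human-readable problems found in a qnn-throughput-net-run config."""
    problems = [f"missing object: {key}"
                for key in ("backends", "models", "contexts", "testCase") if key not in cfg]
    known = {
        "backend": {b.get("backendName") for b in cfg.get("backends", [])},
        "context": {c.get("contextName") for c in cfg.get("contexts", [])},
        "model": {m.get("modelName") for m in cfg.get("models", [])},
    }
    # Every thread must reference names declared in the other three objects.
    for thread in cfg.get("testCase", {}).get("threads", []):
        for field, names in known.items():
            if thread.get(field) not in names:
                problems.append(f"thread {thread.get('threadName')!r}: "
                                f"unknown {field} {thread.get(field)!r}")
    return problems


if __name__ == "__main__":
    with open("sample_config.json") as f:
        print(check_throughput_config(json.load(f)) or "config looks consistent")
```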

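backends: The attribute value is an array of JSON objects, where each object contains the backend information required to execute a model. Each object in the array has the following attributes as key/value pairs.

models: The attribute value is an array of JSON objects, where each object contains details about a model along with its corresponding input data and post-processing information. Each object in the array has the following attributes as key/value pairs.

contexts: The attribute value is an array of JSON objects, where each object contains all of the context information. Each object in the array has the following attributes as key/value pairs.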

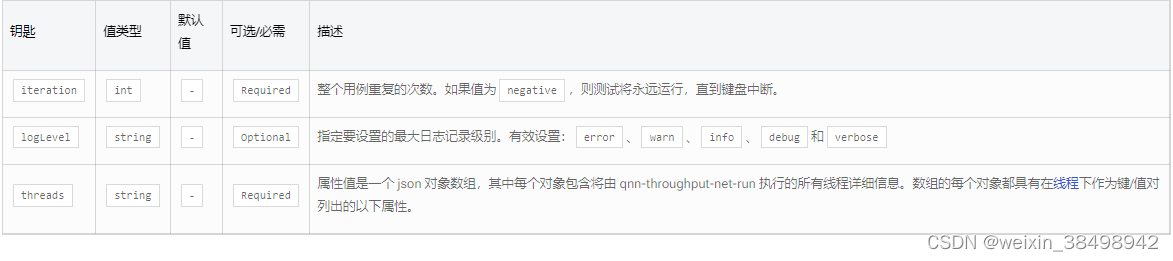

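testCase: The attribute value is a JSON object specifying the test configuration that controls multi-threaded execution.

threads: The attribute value is an array containing all threads along with their corresponding backend, context, and model information. Each element of the array can have the following required/optional attributes.

A sample JSON file, sample_config.json, can be found in <QNN_SDK_ROOT>/examples/QNN/ThroughputNetRun.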

Copyright belongs to the original author, weixin_38498942. In case of infringement, please contact us for removal.