YOLOAir:助力YOLO论文改进🏆 、 不同数据集改进🏆、创新点改进👇

- 💡YOLOAir项目:基于 YOLOv5 代码框架,结合不同模块来构建不同的YOLO目标检测模型。

- 🌟本项目包含大量的改进方式,降低改进难度,改进点包含

Backbone、Neck、Head、注意力机制、IoU损失函数、NMS、Loss计算方式、自注意力机制、数据增强部分、激活函数等部分,详情可以关注👉 YOLOAir 的说明文档。 - 🎈同时

附带各种改进点原理及对应的代码改进方式教程,用户可根据自身情况快速排列组合,在不同的数据集上实验, 应用组合写论文, 创造自己的毕业项目!🏆

🎈🎈🎈新的仓库链接👉:YOLOAir仓库:https://github.com/iscyy/yoloair

可以 fork 和 star,持续同步更新完善

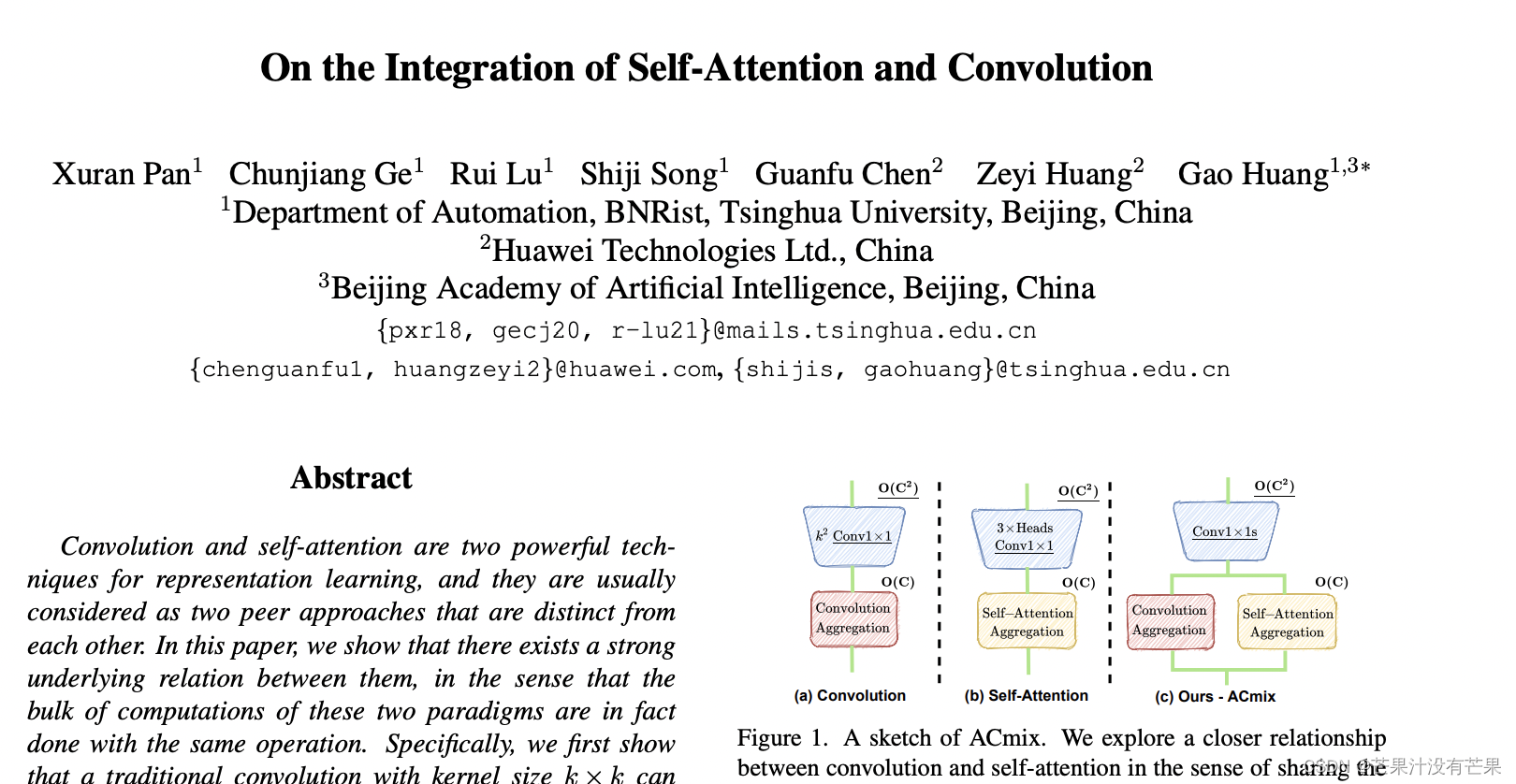

本篇是《ACmix结构🚀自注意力和卷积集成》的修改 演示

使用YOLOv5网络🚀作为示范,可以无缝加入到 YOLOv7、YOLOX、YOLOR、YOLOv4、Scaled_YOLOv4、YOLOv3等一系列YOLO算法模块

文章目录

ACmix结构理论部分

论文:On the Integration of Self-Attention and Convolution

论文地址:https://arxiv.org/pdf/2111.14556.pdf

yolov5的yaml配置文件修改

增加以下yolov5s_acmix.yaml文件

# parameters

nc: 10 # number of classes

depth_multiple: 0.33 # model depth multiple

width_multiple: 0.50 # layer channel multiple

# anchors

anchors:

#- [5,6, 7,9, 12,10] # P2/4

- [10,13, 16,30, 33,23] # P3/8

- [30,61, 62,45, 59,119] # P4/16

- [116,90, 156,198, 373,326] # P5/32

# YOLOv5 backbone

backbone:

# [from, number, module, args]

[[-1, 1, Conv, [64, 6, 2, 2]], # 0-P1/2

[-1, 1, Conv, [128, 3, 2]], # 1-P2/4

[-1, 3, C3, [128]],

[-1, 1, Conv, [256, 3, 2]], # 3-P3/8

[-1, 6, C3, [256]],

[-1, 1, Conv, [512, 3, 2]], # 5-P4/16

[-1, 9, C3, [512]],

[-1, 1, Conv, [1024, 3, 2]], # 7-P5/32

[-1, 3, C3, [1024]],

[-1, 1, ACmix, [512, 512]], #9 修改示例

[-1, 1, SPPF, [1024,5]], #10

]

# YOLOv5 head

head:

[[-1, 1, Conv, [512, 1, 1]],

[-1, 1, nn.Upsample, [None, 2, 'nearest']],

[[-1, 6], 1, Concat, [1]], # cat backbone P4

[-1, 3, C3, [512, False]], # 14

[-1, 1, Conv, [256, 1, 1]],

[-1, 1, nn.Upsample, [None, 2, 'nearest']],

[[-1, 4], 1, Concat, [1]], # cat backbone P3

[-1, 3, C3, [256, False]], # 18 (P3/8-small)

[-1, 1, CBAM, [256]], #19

[-1, 1, Conv, [256, 3, 2]],

[[-1, 15], 1, Concat, [1]], # cat head P4

[-1, 3, C3, [512, False]], # 22 (P4/16-medium) [256, 256, 1, False]

[-1, 1, CBAM, [512]],

[-1, 1, Conv, [512, 3, 2]], #[256, 256, 3, 2]

[[-1, 11], 1, Concat, [1]], # cat head P5

[-1, 3, C3, [1024, False]], # 25 (P5/32-large) [512, 512, 1, False]

[-1, 1, CBAM, [1024]],

[[19, 23, 27], 1, Detect, [nc, anchors]], # Detect(P3, P4, P5)

]

common.py配置

./models/common.py文件增加以下模块

def position(H, W, is_cuda=True):

if is_cuda:

loc_w = torch.linspace(-1.0, 1.0, W).cuda().unsqueeze(0).repeat(H, 1)

loc_h = torch.linspace(-1.0, 1.0, H).cuda().unsqueeze(1).repeat(1, W)

else:

loc_w = torch.linspace(-1.0, 1.0, W).unsqueeze(0).repeat(H, 1)

loc_h = torch.linspace(-1.0, 1.0, H).unsqueeze(1).repeat(1, W)

loc = torch.cat([loc_w.unsqueeze(0), loc_h.unsqueeze(0)], 0).unsqueeze(0)

return loc

def stride(x, stride):

b, c, h, w = x.shape

return x[:, :, ::stride, ::stride]

def init_rate_half(tensor):

if tensor is not None:

tensor.data.fill_(0.5)

def init_rate_0(tensor):

if tensor is not None:

tensor.data.fill_(0.)

class ACmix(nn.Module):

def __init__(self, in_planes, out_planes, kernel_att=7, head=4, kernel_conv=3, stride=1, dilation=1):

super(ACmix, self).__init__()

self.in_planes = in_planes

self.out_planes = out_planes

self.head = head

self.kernel_att = kernel_att

self.kernel_conv = kernel_conv

self.stride = stride

self.dilation = dilation

self.rate1 = torch.nn.Parameter(torch.Tensor(1))

self.rate2 = torch.nn.Parameter(torch.Tensor(1))

self.head_dim = self.out_planes // self.head

self.conv1 = nn.Conv2d(in_planes, out_planes, kernel_size=1)

self.conv2 = nn.Conv2d(in_planes, out_planes, kernel_size=1)

self.conv3 = nn.Conv2d(in_planes, out_planes, kernel_size=1)

self.conv_p = nn.Conv2d(2, self.head_dim, kernel_size=1)

self.padding_att = (self.dilation * (self.kernel_att - 1) + 1) // 2

self.pad_att = torch.nn.ReflectionPad2d(self.padding_att)

self.unfold = nn.Unfold(kernel_size=self.kernel_att, padding=0, stride=self.stride)

self.softmax = torch.nn.Softmax(dim=1)

self.fc = nn.Conv2d(3*self.head, self.kernel_conv * self.kernel_conv, kernel_size=1, bias=False)

self.dep_conv = nn.Conv2d(self.kernel_conv * self.kernel_conv * self.head_dim, out_planes, kernel_size=self.kernel_conv, bias=True, groups=self.head_dim, padding=1, stride=stride)

self.reset_parameters()

def reset_parameters(self):

init_rate_half(self.rate1)

init_rate_half(self.rate2)

kernel = torch.zeros(self.kernel_conv * self.kernel_conv, self.kernel_conv, self.kernel_conv)

for i in range(self.kernel_conv * self.kernel_conv):

kernel[i, i//self.kernel_conv, i%self.kernel_conv] = 1.

kernel = kernel.squeeze(0).repeat(self.out_planes, 1, 1, 1)

self.dep_conv.weight = nn.Parameter(data=kernel, requires_grad=True)

self.dep_conv.bias = init_rate_0(self.dep_conv.bias)

def forward(self, x):

q, k, v = self.conv1(x), self.conv2(x), self.conv3(x)

scaling = float(self.head_dim) ** -0.5

b, c, h, w = q.shape

h_out, w_out = h//self.stride, w//self.stride

# ### att

# ## positional encoding

pe = self.conv_p(position(h, w, x.is_cuda))

q_att = q.view(b*self.head, self.head_dim, h, w) * scaling

k_att = k.view(b*self.head, self.head_dim, h, w)

v_att = v.view(b*self.head, self.head_dim, h, w)

if self.stride > 1:

q_att = stride(q_att, self.stride)

q_pe = stride(pe, self.stride)

else:

q_pe = pe

unfold_k = self.unfold(self.pad_att(k_att)).view(b*self.head, self.head_dim, self.kernel_att*self.kernel_att, h_out, w_out) # b*head, head_dim, k_att^2, h_out, w_out

unfold_rpe = self.unfold(self.pad_att(pe)).view(1, self.head_dim, self.kernel_att*self.kernel_att, h_out, w_out) # 1, head_dim, k_att^2, h_out, w_out

att = (q_att.unsqueeze(2)*(unfold_k + q_pe.unsqueeze(2) - unfold_rpe)).sum(1) # (b*head, head_dim, 1, h_out, w_out) * (b*head, head_dim, k_att^2, h_out, w_out) -> (b*head, k_att^2, h_out, w_out)

att = self.softmax(att)

out_att = self.unfold(self.pad_att(v_att)).view(b*self.head, self.head_dim, self.kernel_att*self.kernel_att, h_out, w_out)

out_att = (att.unsqueeze(1) * out_att).sum(2).view(b, self.out_planes, h_out, w_out)

## conv

f_all = self.fc(torch.cat([q.view(b, self.head, self.head_dim, h*w), k.view(b, self.head, self.head_dim, h*w), v.view(b, self.head, self.head_dim, h*w)], 1))

f_conv = f_all.permute(0, 2, 1, 3).reshape(x.shape[0], -1, x.shape[-2], x.shape[-1])

out_conv = self.dep_conv(f_conv)

return self.rate1 * out_att + self.rate2 * out_conv

自行插入其他层 换通道的时候,注意匹配上通道

yolo.py配置修改

不需要

提示

出现RuntimeError: Input type (torch.cuda.FloatTensor) and weight type (torch.cuda.HalfTensor) should be the same

解决办法:

1.train加个参数

parser.add_argument('--acmix', action='store_true', help='useacmix')

2.val.run调用的时候加个

(half=not opt.acmix)

传进去,因为val.py默认的

half

为True,要将其设置为false。

或者每次跑包含

acmix模块

的网络,直接将val.py的

half

参数改成false

训练yolov5s_acmix.yaml模型

python train.py --cfg yolov5s_acmix.yaml --acmix

基于以上yolov5s_acmix.yaml文件继续修改

关于yolov5s_acmix.yaml文件配置中的acmix模块,可以针对不同数据集自行再进行模块修改,原理一致

版权归原作者 芒果汁没有芒果 所有, 如有侵权,请联系我们删除。