🍨 本文为[🔗365天深度学习训练营]内部限免文章(版权归 K同学啊 所有)

🍖 作者:[K同学啊]

📌 本周任务:

1.了解并学习InceptionV3相对于InceptionV1改进了哪些地方(重点)

2.使用Inception v3完成天气识别案例

一、理论基础

Inception v3由谷歌研究员Christian Szegedy等人在2015年的论文《Rethinking the Inception Architecture for Computer Vision》中提出。Inception v3是Inception网络系列的第三个版本,它在ImageNet图像识别竞赛中取得了优异成绩,尤其是在大规模图像识别任务中表现出色。

Inception v3的主要特点如下:

1更深的网络结构:Inception v3比之前的Inception网络结构更深,包含了48层卷积层。这使得网络可以提取更多层次的特征,从而在图像识别任务上取得更好的效果。

2使用Factorized Convolutions:Inception v3采用了Factorized Convolutions(分解卷积),将较大的卷积核分解为多个较小的卷积核。这种方法可以降低网络的参数数量,减少计算复杂度,同时保持良好的性能。

3使用Batch Normalization:Inception v3在每个卷积层之后都添加了Batch Normalization(BN),这有助于网络的收敛和泛化能力。BN可以减少Internal Covariate Shift(内部协变量偏移)现象,加快训练速度,同时提高模型的鲁棒性。

4辅助分类器:Inception v3引入了辅助分类器,可以在网络训练过程中提供额外的梯度信息,帮助网络更好地学习特征。辅助分类器位于网络的某个中间层,其输出会与主分类器的输出进行加权融合,从而得到最终的预测结果。

5基于RMSProp的优化器:Inception v3使用了RMSProp优化器进行训练。相比于传统的随机梯度下降(SGD)方法,RMSProp可以自适应地调整学习率,使得训练过程更加稳定,收敛速度更快。

Inception v3在图像分类、物体检测和图像分割等计算机视觉任务中均取得了显著的效果。然而,由于其较大的网络结构和计算复杂度,Inception v3在实际应用中可能需要较高的硬件要求。

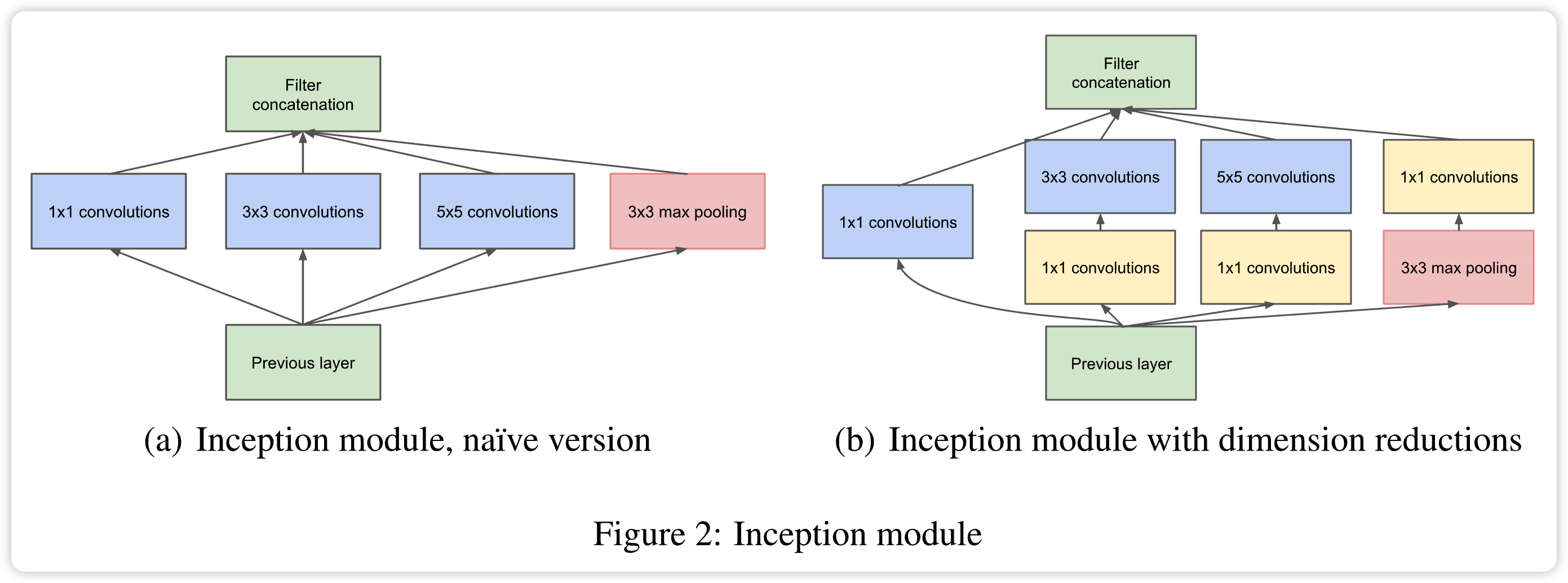

相对于Inception v1的Inception Module结构,Inception v3中做出了如下改动:

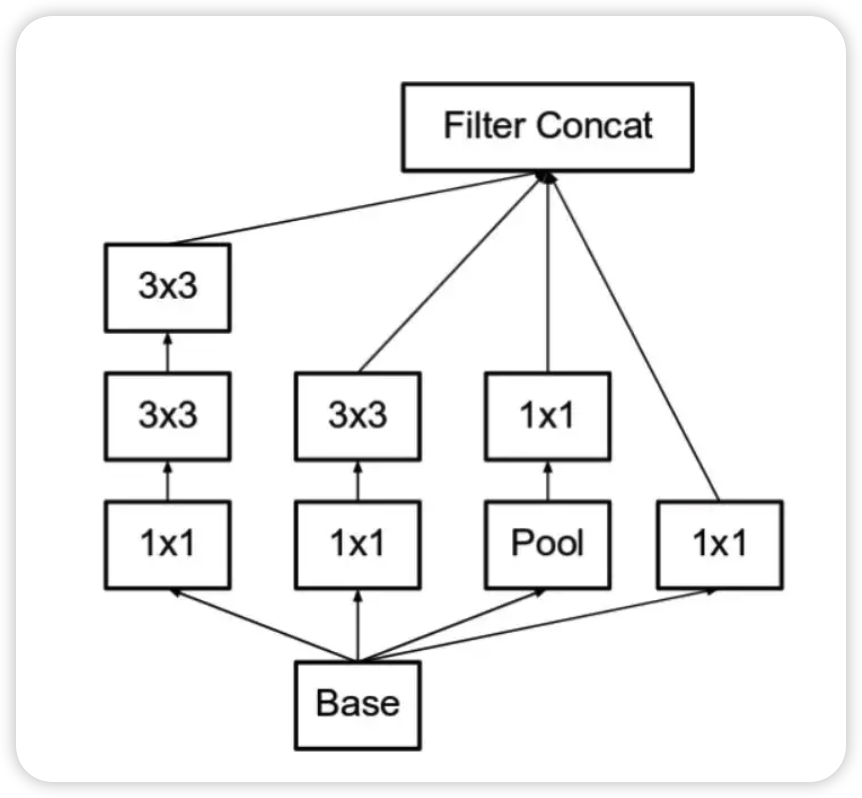

将 5×5 的卷积分解为两个 3×3 的卷积运算以提升计算速度。尽管这有点违反直觉,但一个 5×5 的卷积在计算成本上是一个 3×3 卷积的 2.78 倍。所以叠加两个 3×3 卷积实际上在性能上会有所提升,如下图所示:

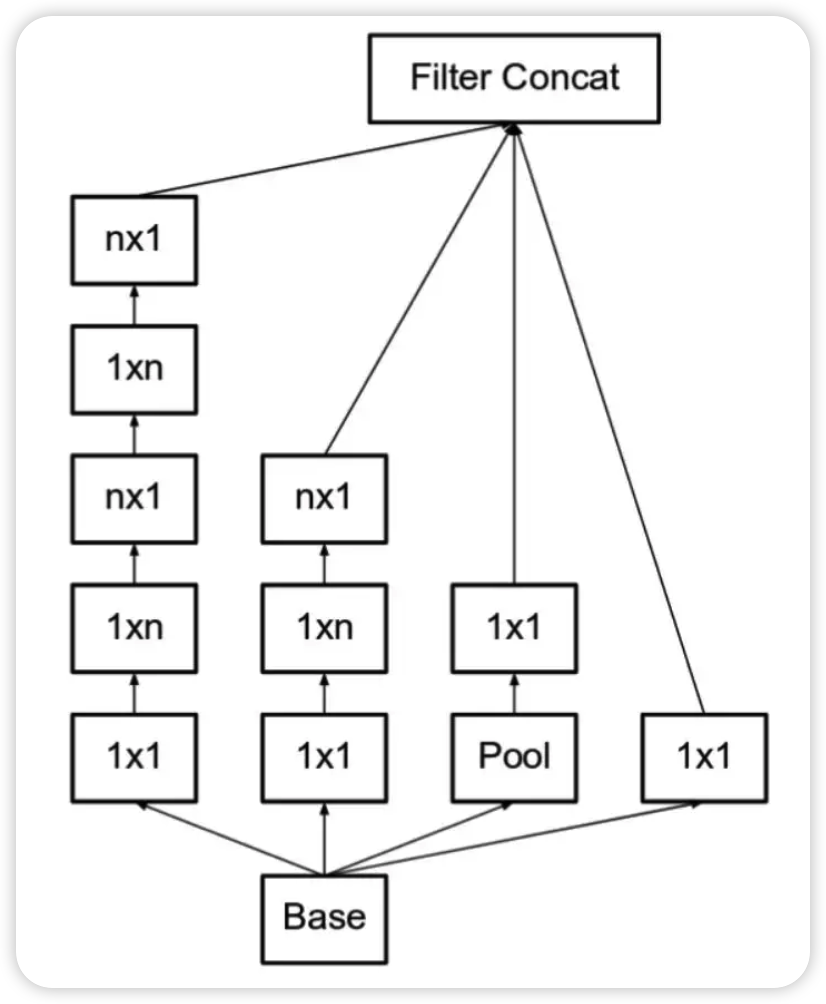

此外,作者将 n×n 的卷积核尺寸分解为 1×n 和 n×1 两个卷积。例如,一个 3×3 的卷积等价于首先执行一个 1×3 的卷积再执行一个 3×1 的卷积。他们还发现这种方法在成本上要比单个 3×3 的卷积降低 33%,这一结构如下图所示:

此处如果 n=3,则与上一张图像一致。最左侧的 5x5 卷积可被表示为两个 3x3 卷积,它们又可以被表示为 1x3 和 3x1 卷积。

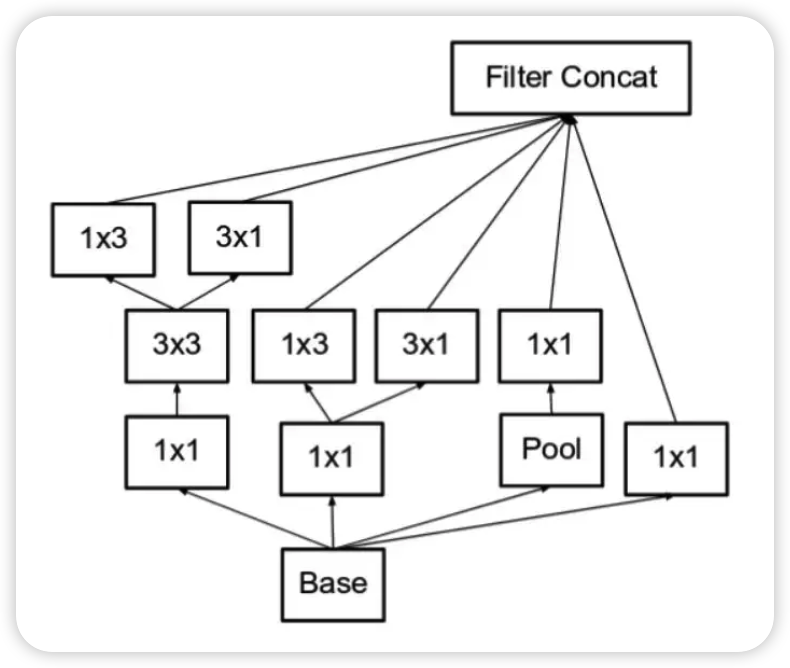

模块中的滤波器组被扩展(即变得更宽而不是更深),以解决表征性瓶颈。如果该模块没有被拓展宽度,而是变得更深,那么维度会过多减少,造成信息损失。如下图所示:

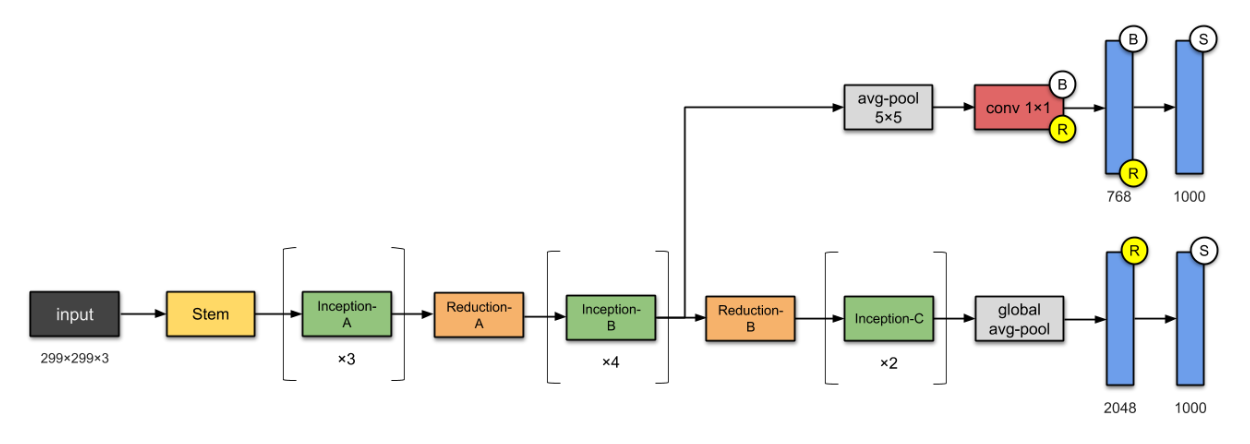

最后实现的inception v3网络是上图结构图如下:

二、pytorch代码复现

1.前期准备

大致模板和以前一样,以后不再详细列,样例可见:深度学习第J4周:ResNet与DenseNet结合探索_牛大了2023的博客-CSDN博客

配置gpu+导入数据集

import os,PIL,random,pathlib

import torch

import torch.nn as nn

import torchvision.transforms as transforms

import torchvision

from torchvision import transforms, datasets

device = torch.device("cuda" if torch.cuda.is_available() else "cpu")

print(device)

data_dir = './data/'

data_dir = pathlib.Path(data_dir)

data_paths = list(data_dir.glob('*'))

classeNames = [str(path).split("\\")[1] for path in data_paths]

print(classeNames)

image_count = len(list(data_dir.glob('*/*')))

print("图片总数为:", image_count)

数据预处理+划分数据集

train_transforms = transforms.Compose([

transforms.Resize([224, 224]), # 将输入图片resize成统一尺寸

# transforms.RandomHorizontalFlip(), # 随机水平翻转

transforms.ToTensor(), # 将PIL Image或numpy.ndarray转换为tensor,并归一化到[0,1]之间

transforms.Normalize( # 标准化处理-->转换为标准正太分布(高斯分布),使模型更容易收敛

mean=[0.485, 0.456, 0.406],

std=[0.229, 0.224, 0.225]) # 其中 mean=[0.485,0.456,0.406]与std=[0.229,0.224,0.225] 从数据集中随机抽样计算得到的。

])

test_transform = transforms.Compose([

transforms.Resize([224, 224]), # 将输入图片resize成统一尺寸

transforms.ToTensor(), # 将PIL Image或numpy.ndarray转换为tensor,并归一化到[0,1]之间

transforms.Normalize( # 标准化处理-->转换为标准正太分布(高斯分布),使模型更容易收敛

mean=[0.485, 0.456, 0.406],

std=[0.229, 0.224, 0.225]) # 其中 mean=[0.485,0.456,0.406]与std=[0.229,0.224,0.225] 从数据集中随机抽样计算得到的。

])

total_data = datasets.ImageFolder("./data/", transform=train_transforms)

print(total_data.class_to_idx)

train_size = int(0.8 * len(total_data))

test_size = len(total_data) - train_size

train_dataset, test_dataset = torch.utils.data.random_split(total_data, [train_size, test_size])

batch_size = 32

train_dl = torch.utils.data.DataLoader(train_dataset,

batch_size=batch_size,

shuffle=True,

num_workers=0)

test_dl = torch.utils.data.DataLoader(test_dataset,

batch_size=batch_size,

shuffle=True,

num_workers=0)

for X, y in test_dl:

print("Shape of X [N, C, H, W]: ", X.shape)

print("Shape of y: ", y.shape, y.dtype)

break

2.代码复现

class BasicConv2d(nn.Module):

def __init__(self, in_channel, out_channel, **kwargs):

super(BasicConv2d, self).__init__()

self.conv = nn.Conv2d(in_channel, out_channel, bias=False, **kwargs)

self.norm = nn.BatchNorm2d(out_channel, eps=0.001)

self.relu = nn.ReLU(inplace=True)

def forward(self, x):

x = self.conv(x)

x = self.norm(x)

x = self.relu(x)

return x

class InceptionA(nn.Module):

def __init__(self, in_channels, pool_features):

super(InceptionA, self).__init__()

self.branch1x1 = BasicConv2d(in_channels, 64, kernel_size=1) # 1

self.branch5x5_1 = BasicConv2d(in_channels, 48, kernel_size=1)

self.branch5x5_2 = BasicConv2d(48, 64, kernel_size=5, padding=2)

self.branch3x3dbl_1 = BasicConv2d(in_channels, 64, kernel_size=1)

self.branch3x3dbl_2 = BasicConv2d(64, 96, kernel_size=3, padding=1)

self.branch3x3dbl_3 = BasicConv2d(96, 96, kernel_size=3, padding=1)

self.branch_pool = BasicConv2d(in_channels, pool_features, kernel_size=1)

def forward(self, x):

branch1x1 = self.branch1x1(x)

branch5x5 = self.branch5x5_1(x)

branch5x5 = self.branch5x5_2(branch5x5)

branch3x3dbl = self.branch3x3dbl_1(x)

branch3x3dbl = self.branch3x3dbl_2(branch3x3dbl)

branch3x3dbl = self.branch3x3dbl_3(branch3x3dbl)

branch_pool = F.avg_pool2d(x, kernel_size=3, stride=1, padding=1)

branch_pool = self.branch_pool(branch_pool)

outputs = [branch1x1, branch5x5, branch3x3dbl, branch_pool]

return torch.cat(outputs, 1)

class InceptionB(nn.Module):

def __init__(self, in_channels, channels_7x7):

super(InceptionB, self).__init__()

self.branch1x1 = BasicConv2d(in_channels, 192, kernel_size=1)

c7 = channels_7x7

self.branch7x7_1 = BasicConv2d(in_channels, c7, kernel_size=1)

self.branch7x7_2 = BasicConv2d(c7, c7, kernel_size=(1, 7), padding=(0, 3))

self.branch7x7_3 = BasicConv2d(c7, 192, kernel_size=(7, 1), padding=(3, 0))

self.branch7x7dbl_1 = BasicConv2d(in_channels, c7, kernel_size=1)

self.branch7x7dbl_2 = BasicConv2d(c7, c7, kernel_size=(7, 1), padding=(3, 0))

self.branch7x7dbl_3 = BasicConv2d(c7, c7, kernel_size=(1, 7), padding=(0, 3))

self.branch7x7dbl_4 = BasicConv2d(c7, c7, kernel_size=(7, 1), padding=(3, 0))

self.branch7x7dbl_5 = BasicConv2d(c7, 192, kernel_size=(1, 7), padding=(0, 3))

self.branch_pool = BasicConv2d(in_channels, 192, kernel_size=1)

def forward(self, x):

branch1x1 = self.branch1x1(x)

branch7x7 = self.branch7x7_1(x)

branch7x7 = self.branch7x7_2(branch7x7)

branch7x7 = self.branch7x7_3(branch7x7)

branch7x7dbl = self.branch7x7dbl_1(x)

branch7x7dbl = self.branch7x7dbl_2(branch7x7dbl)

branch7x7dbl = self.branch7x7dbl_3(branch7x7dbl)

branch7x7dbl = self.branch7x7dbl_4(branch7x7dbl)

branch7x7dbl = self.branch7x7dbl_5(branch7x7dbl)

branch_pool = F.avg_pool2d(x, kernel_size=3, stride=1, padding=1)

branch_pool = self.branch_pool(branch_pool)

outputs = [branch1x1, branch7x7, branch7x7dbl, branch_pool]

return torch.cat(outputs, 1)

class InceptionC(nn.Module):

def __init__(self, in_channels):

super(InceptionC, self).__init__()

self.branch1x1 = BasicConv2d(in_channels, 320, kernel_size=1)

self.branch3x3_1 = BasicConv2d(in_channels, 384, kernel_size=1)

self.branch3x3_2a = BasicConv2d(384, 384, kernel_size=(1, 3), padding=(0, 1))

self.branch3x3_2b = BasicConv2d(384, 384, kernel_size=(3, 1), padding=(1, 0))

self.branch3x3dbl_1 = BasicConv2d(in_channels, 448, kernel_size=1)

self.branch3x3dbl_2 = BasicConv2d(448, 384, kernel_size=3, padding=1)

self.branch3x3dbl_3a = BasicConv2d(384, 384, kernel_size=(1, 3), padding=(0, 1))

self.branch3x3dbl_3b = BasicConv2d(384, 384, kernel_size=(3, 1), padding=(1, 0))

self.branch_pool = BasicConv2d(in_channels, 192, kernel_size=1)

def forward(self, x):

branch1x1 = self.branch1x1(x)

branch3x3 = self.branch3x3_1(x)

branch3x3 = [

self.branch3x3_2a(branch3x3),

self.branch3x3_2b(branch3x3),

]

branch3x3 = torch.cat(branch3x3, 1)

branch3x3dbl = self.branch3x3dbl_1(x)

branch3x3dbl = self.branch3x3dbl_2(branch3x3dbl)

branch3x3dbl = [

self.branch3x3dbl_3a(branch3x3dbl),

self.branch3x3dbl_3b(branch3x3dbl),

]

branch3x3dbl = torch.cat(branch3x3dbl, 1)

branch_pool = F.avg_pool2d(x, kernel_size=3, stride=1, padding=1)

branch_pool = self.branch_pool(branch_pool)

outputs = [branch1x1, branch3x3, branch3x3dbl, branch_pool]

return torch.cat(outputs, 1)

class ReductionA(nn.Module):

def __init__(self, in_channels):

super(ReductionA, self).__init__()

self.branch3x3 = BasicConv2d(in_channels, 384, kernel_size=3, stride=2)

self.branch3x3dbl_1 = BasicConv2d(in_channels, 64, kernel_size=1)

self.branch3x3dbl_2 = BasicConv2d(64, 96, kernel_size=3, padding=1)

self.branch3x3dbl_3 = BasicConv2d(96, 96, kernel_size=3, stride=2)

def forward(self, x):

branch3x3 = self.branch3x3(x)

branch3x3dbl = self.branch3x3dbl_1(x)

branch3x3dbl = self.branch3x3dbl_2(branch3x3dbl)

branch3x3dbl = self.branch3x3dbl_3(branch3x3dbl)

branch_pool = F.max_pool2d(x, kernel_size=3, stride=2)

outputs = [branch3x3, branch3x3dbl, branch_pool]

return torch.cat(outputs, 1)

class ReductionB(nn.Module):

def __init__(self, in_channels):

super(ReductionB, self).__init__()

self.branch3x3_1 = BasicConv2d(in_channels, 192, kernel_size=1)

self.branch3x3_2 = BasicConv2d(192, 320, kernel_size=3, stride=2)

self.branch7x7x3_1 = BasicConv2d(in_channels, 192, kernel_size=1)

self.branch7x7x3_2 = BasicConv2d(192, 192, kernel_size=(1, 7), padding=(0, 3))

self.branch7x7x3_3 = BasicConv2d(192, 192, kernel_size=(7, 1), padding=(3, 0))

self.branch7x7x3_4 = BasicConv2d(192, 192, kernel_size=3, stride=2)

def forward(self, x):

branch3x3 = self.branch3x3_1(x)

branch3x3 = self.branch3x3_2(branch3x3)

branch7x7x3 = self.branch7x7x3_1(x)

branch7x7x3 = self.branch7x7x3_2(branch7x7x3)

branch7x7x3 = self.branch7x7x3_3(branch7x7x3)

branch7x7x3 = self.branch7x7x3_4(branch7x7x3)

branch_pool = F.max_pool2d(x, kernel_size=3, stride=2)

outputs = [branch3x3, branch7x7x3, branch_pool]

return torch.cat(outputs, 1)

class InceptionAux(nn.Module):

def __init__(self, in_channels, num_classes):

super(InceptionAux, self).__init__()

self.conv0 = BasicConv2d(in_channels, 128, kernel_size=1)

self.conv1 = BasicConv2d(128, 768, kernel_size=5)

self.conv1.stddev = 0.01

self.fc = nn.Linear(768, num_classes)

self.fc.stddev = 0.001

def forward(self, x):

# 17 x 17 x 768

x = F.avg_pool2d(x, kernel_size=5, stride=3)

# 5 x 5 x 768

x = self.conv0(x)

# 5 x 5 x 128

x = self.conv1(x)

# 1 x 1 x 768

x = x.view(x.size(0), -1)

# 768

x = self.fc(x)

# 1000

return x

import torch.nn.functional as F

class InceptionV3(nn.Module):

def __init__(self, num_classes=1000, aux_logits=False, transform_input=False):

super(InceptionV3, self).__init__()

self.aux_logits = aux_logits

self.transform_input = transform_input

self.Conv2d_1a_3x3 = BasicConv2d(3, 32, kernel_size=3, stride=2)

self.Conv2d_2a_3x3 = BasicConv2d(32, 32, kernel_size=3)

self.Conv2d_2b_3x3 = BasicConv2d(32, 64, kernel_size=3, padding=1)

self.Conv2d_3b_1x1 = BasicConv2d(64, 80, kernel_size=1)

self.Conv2d_4a_3x3 = BasicConv2d(80, 192, kernel_size=3)

self.Mixed_5b = InceptionA(192, pool_features=32)

self.Mixed_5c = InceptionA(256, pool_features=64)

self.Mixed_5d = InceptionA(288, pool_features=64)

self.Mixed_6a = ReductionA(288)

self.Mixed_6b = InceptionB(768, channels_7x7=128)

self.Mixed_6c = InceptionB(768, channels_7x7=160)

self.Mixed_6d = InceptionB(768, channels_7x7=160)

self.Mixed_6e = InceptionB(768, channels_7x7=192)

if aux_logits:

self.AuxLogits = InceptionAux(768, num_classes)

self.Mixed_7a = ReductionB(768)

self.Mixed_7b = InceptionC(1280)

self.Mixed_7c = InceptionC(2048)

self.fc = nn.Linear(2048, num_classes)

def forward(self, x):

if self.transform_input: # 1

x = x.clone()

x[:, 0] = x[:, 0] * (0.229 / 0.5) + (0.485 - 0.5) / 0.5

x[:, 1] = x[:, 1] * (0.224 / 0.5) + (0.456 - 0.5) / 0.5

x[:, 2] = x[:, 2] * (0.225 / 0.5) + (0.406 - 0.5) / 0.5

# 299 x 299 x 3

x = self.Conv2d_1a_3x3(x)

# 149 x 149 x 32

x = self.Conv2d_2a_3x3(x)

# 147 x 147 x 32

x = self.Conv2d_2b_3x3(x)

# 147 x 147 x 64

x = F.max_pool2d(x, kernel_size=3, stride=2)

# 73 x 73 x 64

x = self.Conv2d_3b_1x1(x)

# 73 x 73 x 80

x = self.Conv2d_4a_3x3(x)

# 71 x 71 x 192

x = F.max_pool2d(x, kernel_size=3, stride=2)

# 35 x 35 x 192

x = self.Mixed_5b(x)

# 35 x 35 x 256

x = self.Mixed_5c(x)

# 35 x 35 x 288

x = self.Mixed_5d(x)

# 35 x 35 x 288

x = self.Mixed_6a(x)

# 17 x 17 x 768

x = self.Mixed_6b(x)

# 17 x 17 x 768

x = self.Mixed_6c(x)

# 17 x 17 x 768

x = self.Mixed_6d(x)

# 17 x 17 x 768

x = self.Mixed_6e(x)

# 17 x 17 x 768

if self.training and self.aux_logits:

aux = self.AuxLogits(x)

# 17 x 17 x 768

x = self.Mixed_7a(x)

# 8 x 8 x 1280

x = self.Mixed_7b(x)

# 8 x 8 x 2048

x = self.Mixed_7c(x)

# 8 x 8 x 2048

x = F.avg_pool2d(x, kernel_size=5)

# 1 x 1 x 2048

x = F.dropout(x, training=self.training)

# 1 x 1 x 2048

x = x.view(x.size(0), -1)

# 2048

x = self.fc(x)

# 1000 (num_classes)

if self.training and self.aux_logits:

return x, aux

return x

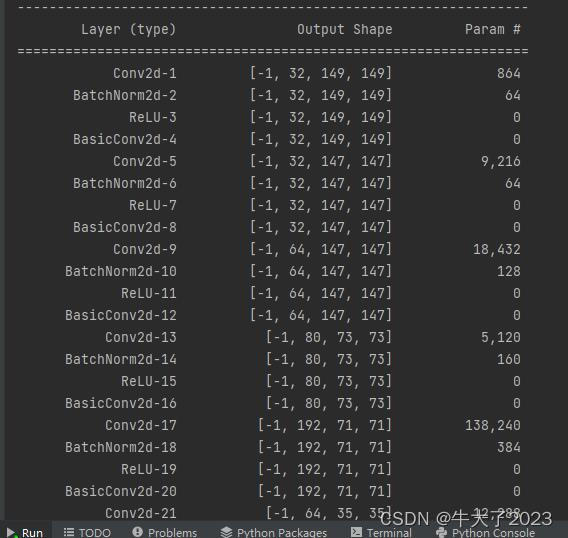

可以打印查看一下

# 统计模型参数量以及其他指标

import torchsummary

# 调用并将模型转移到GPU中

model = InceptionV3().to(device)

# 显示网络结构

torchsummary.summary(model, (3, 299, 299))

print(model)

3.训练运行

代码和以前的差不多,不再细说

# 训练循环

def train(dataloader, model, loss_fn, optimizer):

size = len(dataloader.dataset) # 训练集的大小

num_batches = len(dataloader) # 批次数目, (size/batch_size,向上取整)

train_loss, train_acc = 0, 0 # 初始化训练损失和正确率

for X, y in dataloader: # 获取图片及其标签

X, y = X.to(device), y.to(device)

# 计算预测误差

pred = model(X) # 网络输出

loss = loss_fn(pred, y) # 计算网络输出和真实值之间的差距,targets为真实值,计算二者差值即为损失

# 反向传播

optimizer.zero_grad() # grad属性归零

loss.backward() # 反向传播

optimizer.step() # 每一步自动更新

# 记录acc与loss

train_acc += (pred.argmax(1) == y).type(torch.float).sum().item()

train_loss += loss.item()

train_acc /= size

train_loss /= num_batches

return train_acc, train_loss

def test(dataloader, model, loss_fn):

size = len(dataloader.dataset) # 测试集的大小

num_batches = len(dataloader) # 批次数目

test_loss, test_acc = 0, 0

# 当不进行训练时,停止梯度更新,节省计算内存消耗

with torch.no_grad():

for imgs, target in dataloader:

imgs, target = imgs.to(device), target.to(device)

# 计算loss

target_pred = model(imgs)

loss = loss_fn(target_pred, target)

test_loss += loss.item()

test_acc += (target_pred.argmax(1) == target).type(torch.float).sum().item()

test_acc /= size

test_loss /= num_batches

return test_acc, test_loss

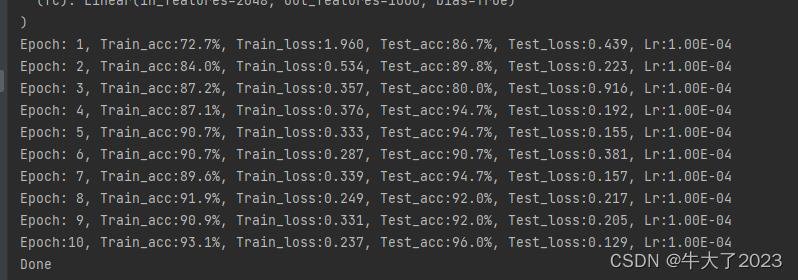

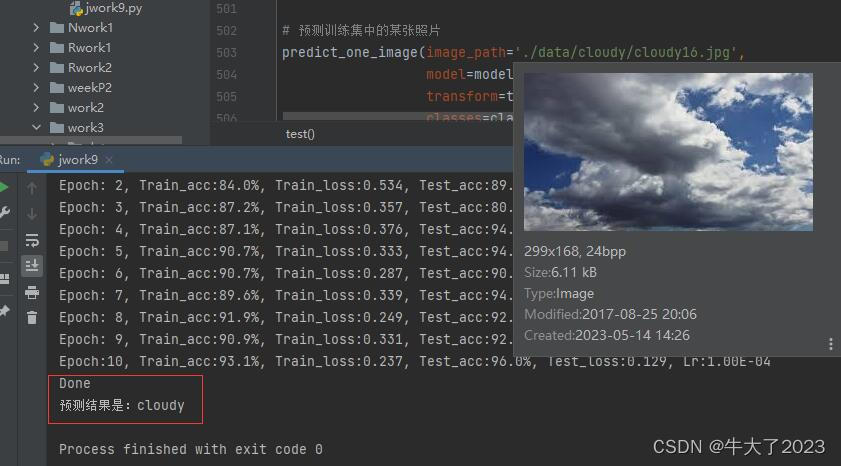

跑十轮并保存模型

import copy

optimizer = torch.optim.Adam(model.parameters(), lr=1e-4)

loss_fn = nn.CrossEntropyLoss() # 创建损失函数

epochs = 10

train_loss = []

train_acc = []

test_loss = []

test_acc = []

best_acc = 0 # 设置一个最佳准确率,作为最佳模型的判别指标

for epoch in range(epochs):

# 更新学习率(使用自定义学习率时使用)

# adjust_learning_rate(optimizer, epoch, learn_rate)

model.train()

epoch_train_acc, epoch_train_loss = train(train_dl, model, loss_fn, optimizer)

# scheduler.step() # 更新学习率(调用官方动态学习率接口时使用)

model.eval()

epoch_test_acc, epoch_test_loss = test(test_dl, model, loss_fn)

# 保存最佳模型到 best_model

if epoch_test_acc > best_acc:

best_acc = epoch_test_acc

best_model = copy.deepcopy(model)

train_acc.append(epoch_train_acc)

train_loss.append(epoch_train_loss)

test_acc.append(epoch_test_acc)

test_loss.append(epoch_test_loss)

# 获取当前的学习率

lr = optimizer.state_dict()['param_groups'][0]['lr']

template = ('Epoch:{:2d}, Train_acc:{:.1f}%, Train_loss:{:.3f}, Test_acc:{:.1f}%, Test_loss:{:.3f}, Lr:{:.2E}')

print(template.format(epoch + 1, epoch_train_acc * 100, epoch_train_loss,

epoch_test_acc * 100, epoch_test_loss, lr))

# 保存最佳模型到文件中

PATH = './best_model.pth' # 保存的参数文件名

torch.save(model.state_dict(), PATH)

print('Done')

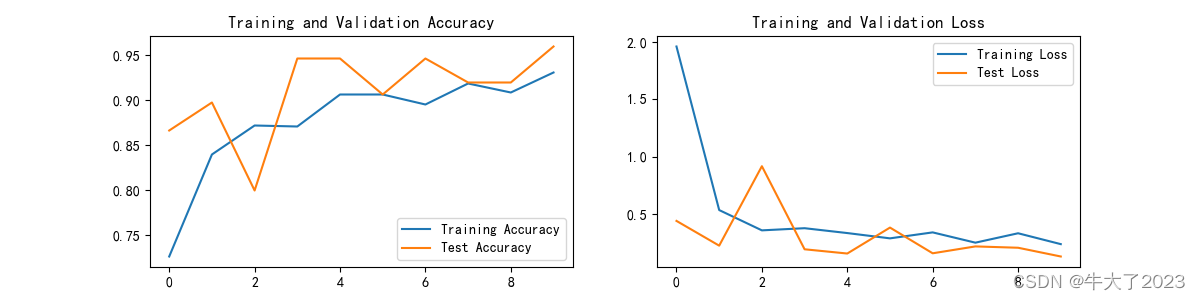

打印训练记录图

import matplotlib.pyplot as plt

# 隐藏警告

import warnings

warnings.filterwarnings("ignore") # 忽略警告信息

plt.rcParams['font.sans-serif'] = ['SimHei'] # 用来正常显示中文标签

plt.rcParams['axes.unicode_minus'] = False # 用来正常显示负号

plt.rcParams['figure.dpi'] = 100 # 分辨率

epochs_range = range(epochs)

plt.figure(figsize=(12, 3))

plt.subplot(1, 2, 1)

plt.plot(epochs_range, train_acc, label='Training Accuracy')

plt.plot(epochs_range, test_acc, label='Test Accuracy')

plt.legend(loc='lower right')

plt.title('Training and Validation Accuracy')

plt.subplot(1, 2, 2)

plt.plot(epochs_range, train_loss, label='Training Loss')

plt.plot(epochs_range, test_loss, label='Test Loss')

plt.legend(loc='upper right')

plt.title('Training and Validation Loss')

plt.show()

3.2指定图片进行预测

把训练部分注释掉

from PIL import Image

classes = list(total_data.class_to_idx)

def predict_one_image(image_path, model, transform, classes):

test_img = Image.open(image_path).convert('RGB')

plt.imshow(test_img) # 展示预测的图片

test_img = transform(test_img)

img = test_img.to(device).unsqueeze(0)

model.eval()

output = model(img)

_, pred = torch.max(output, 1)

pred_class = classes[pred]

print(f'预测结果是:{pred_class}')

# 预测训练集中的某张照片

predict_one_image(image_path='./data/cloudy/cloudy16.jpg',

model=model,

transform=train_transforms,

classes=classes)

三、总结

总结来说,Inception v3是一种深度卷积神经网络,其主要特点包括更深的网络结构、使用Factorized Convolutions、添加Batch Normalization、引入辅助分类器以及使用基于RMSProp的优化器进行训练。相对于Inception v1的Inception Module结构,Inception v3在卷积操作上做出了改动,使用两个3x3的卷积代替一个5x5的卷积以提高计算速度,并将n x n的卷积核尺寸分解为1 x n和n x 1两个卷积。此外,滤波器组也被扩展以解决表征性瓶颈。在计算机视觉任务中,Inception v3在图像分类、物体检测和图像分割等方面均表现优异。然而,由于其较大的网络结构和计算复杂度,Inception v3在实际应用中可能需要较高的硬件要求。

版权归原作者 牛大了2023 所有, 如有侵权,请联系我们删除。