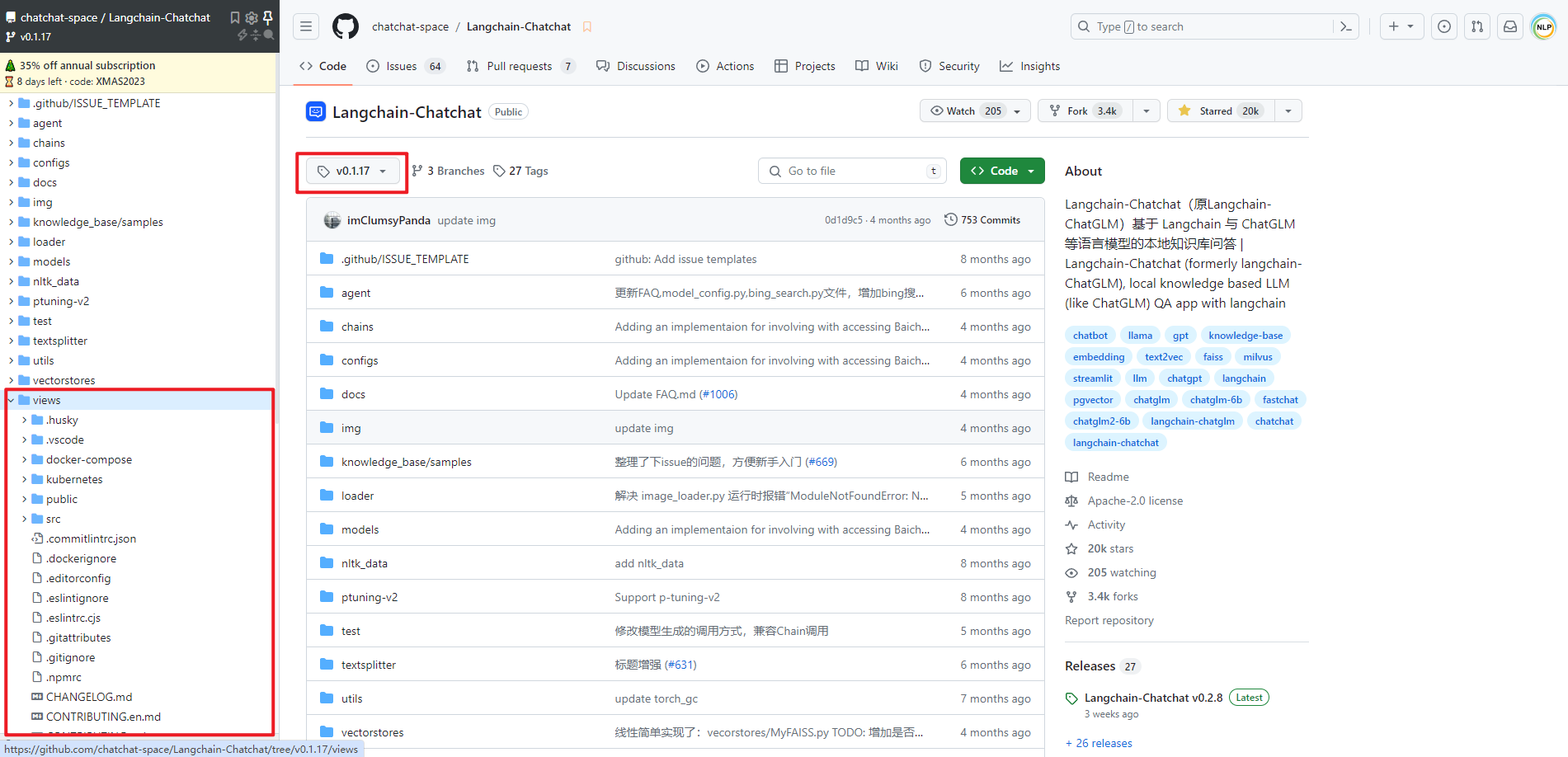

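Up to and including v0.1.17, Langchain-Chatchat shipped a separate Vue frontend project, decoupled from the backend; from v0.2.0 onward it was removed. This article therefore uses the Vue project from Langchain-Chatchat v0.1.17. After some trial and error, I got the v0.1.17 Vue frontend working against the Langchain-Chatchat v0.2.8 backend API.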

I. Running Langchain-Chatchat

1. Pull the source code

Pull the Langchain-Chatchat source code (Langchain-Chatchat v0.2.8), as follows:

git clone https://github.com/chatchat-space/Langchain-Chatchat.git

2. Install the dependencies

Install the dependencies, as follows:

pip install -r .\requirements.txt -i https://pypi.tuna.tsinghua.edu.cn/simple

3. Download and configure the models

Download and configure the models (for faster downloads, see reference [3]), as follows:

python hf_download.py --model THUDM/ChatGLM3-6B --save_dir ./hf_hub

python hf_download.py --model BAAI/bge-large-zh --save_dir ./hf_hub

4. Initialize the knowledge base and configuration files

Initialize the knowledge base and configuration files, as follows:

$ python copy_config_example.py

$ python init_database.py --recreate-vs

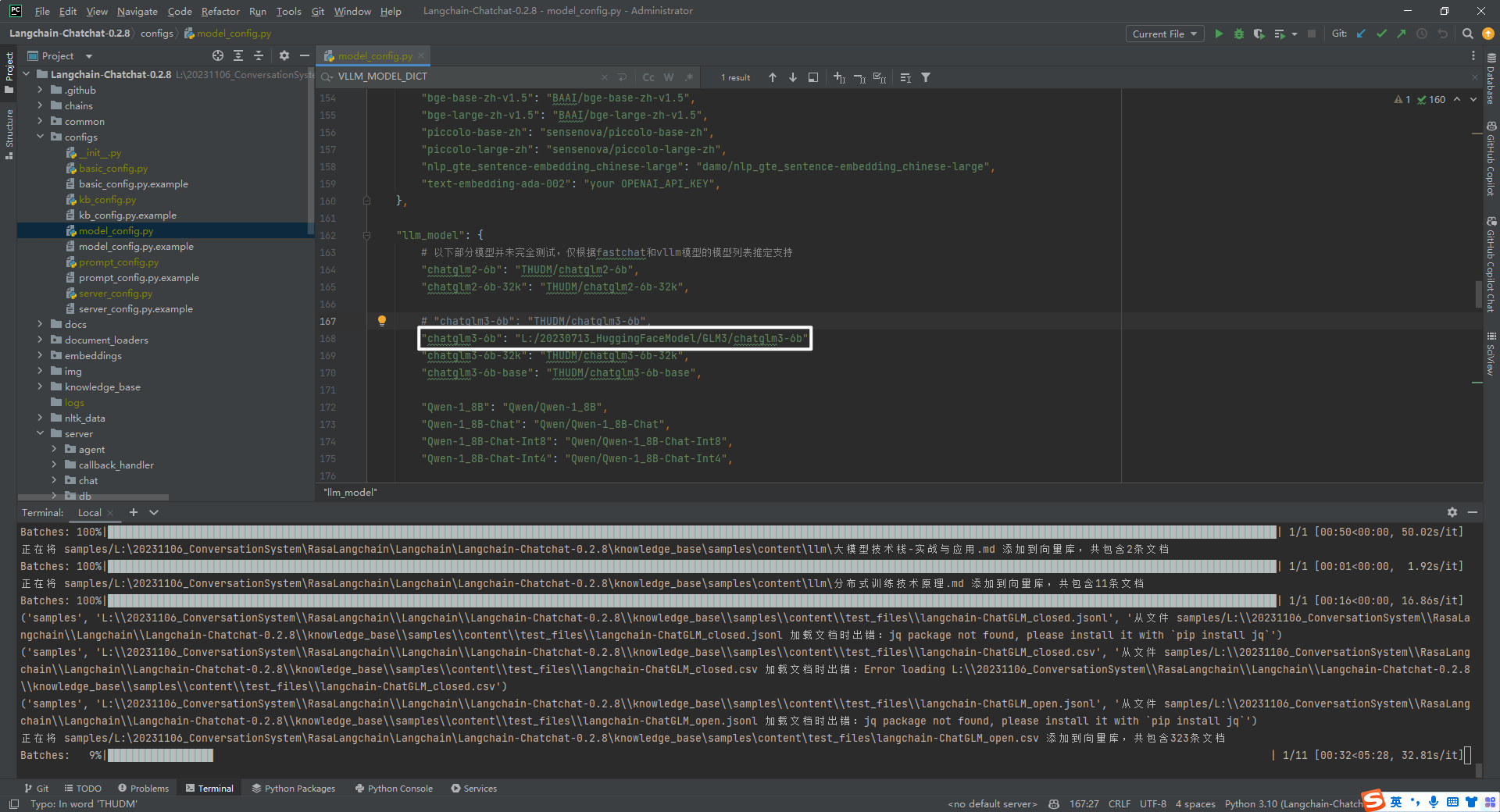

In Langchain-Chatchat-0.2.8 -> configs -> model_config.py -> embed_model, set the local path of bge-large-zh, as follows:

In Langchain-Chatchat-0.2.8 -> configs -> model_config.py -> llm_model, set the local path of chatglm3-6b, as follows:

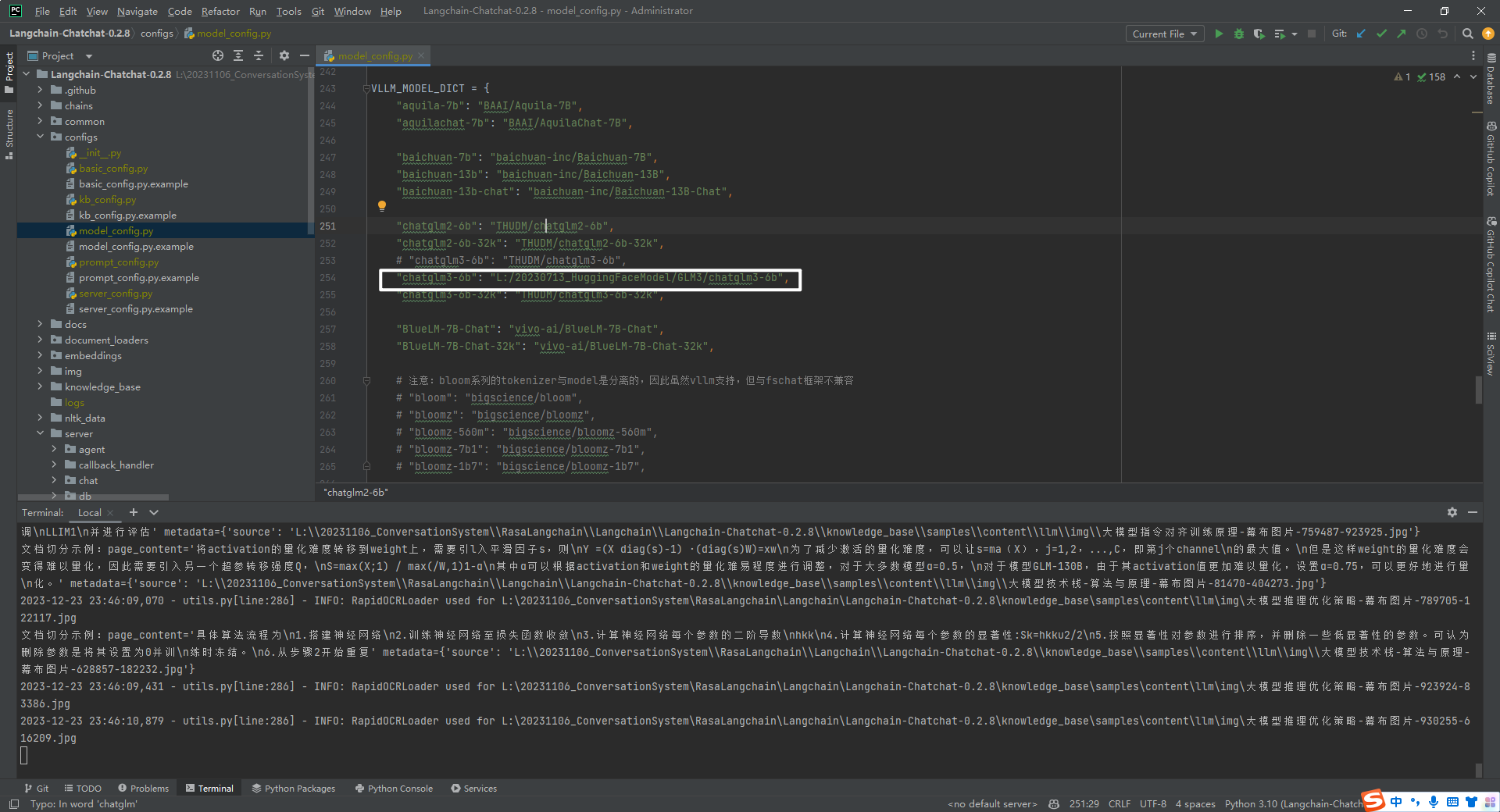

In Langchain-Chatchat-0.2.8 -> configs -> model_config.py -> VLLM_MODEL_DICT, set the local path of chatglm3-6b, as follows:

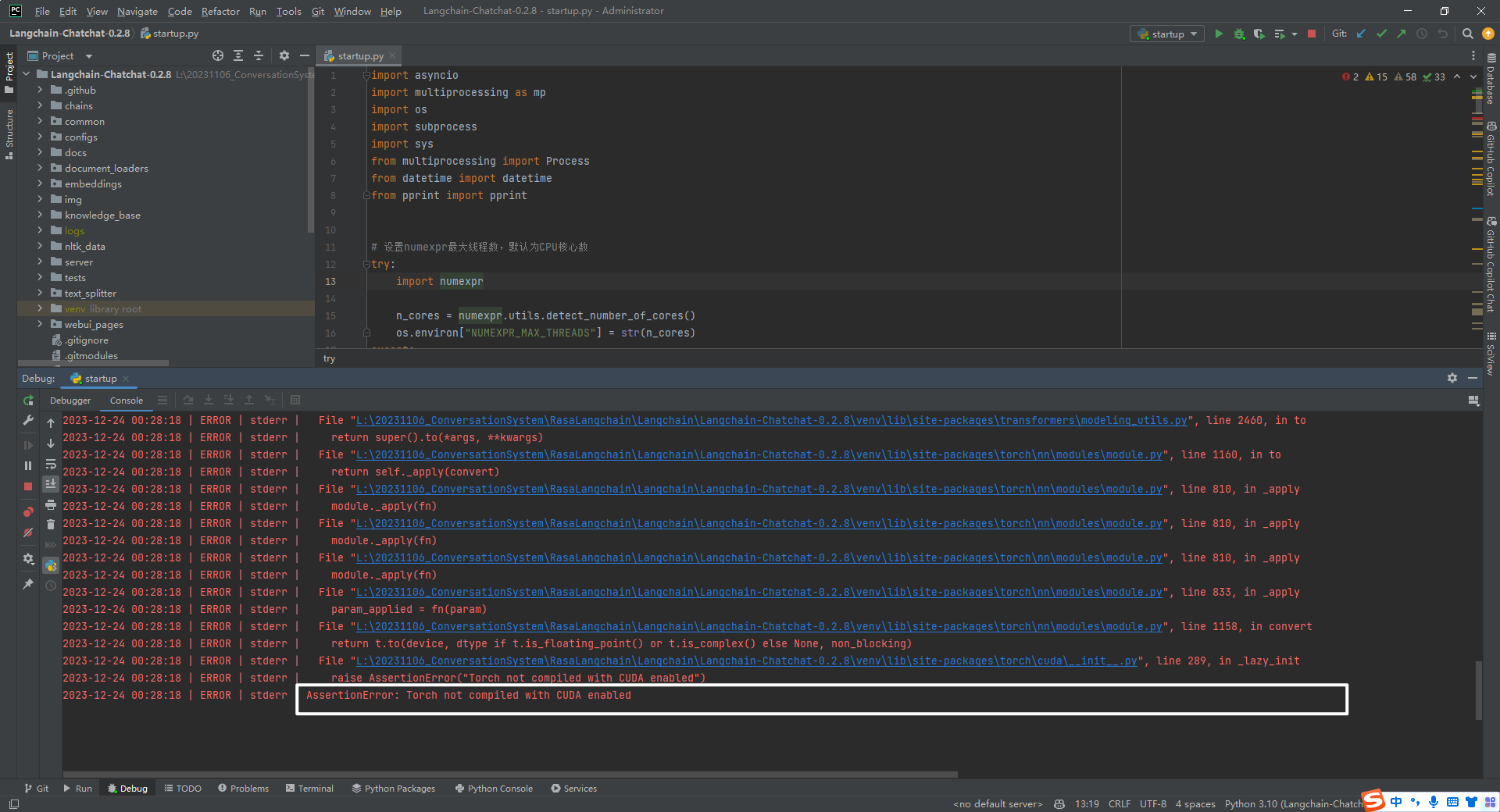
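A wrong local path is an easy mistake at this step, so a quick check before starting the services can save a long wait. The following is only a minimal sketch: the `MODEL_PATH` dictionary name and its nested layout are assumptions that mirror the embed_model and llm_model settings named above, the bge-large-zh path is a hypothetical placeholder, and the chatglm3-6b path is the one that appears in the startup log later in this article.

```python
from pathlib import Path

# Assumed layout: a nested dict keyed by "embed_model" and "llm_model".
# Check the names against your own configs/model_config.py.
MODEL_PATH = {
    "embed_model": {
        "bge-large-zh": r"L:\path\to\bge-large-zh",  # hypothetical placeholder
    },
    "llm_model": {
        # Path as printed in the startup log of this article.
        "chatglm3-6b": r"L:\20230713_HuggingFaceModel\GLM3\chatglm3-6b",
    },
}


def missing_model_paths(model_path):
    """Return (group, model_name) pairs whose configured directory does not exist."""
    missing = []
    for group, models in model_path.items():
        for name, location in models.items():
            if not Path(location).is_dir():
                missing.append((group, name))
    return missing


if __name__ == "__main__":
    for group, name in missing_model_paths(MODEL_PATH):
        print(f"{group}/{name}: directory not found")
```

If the script prints nothing, every configured directory exists on disk.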

5. Run python startup.py -a

$ python startup.py -a

Manually install the CUDA build of PyTorch, as follows:

pip install torch==2.1.0 torchvision==0.16.0 torchaudio==2.1.0 --index-url https://download.pytorch.org/whl/cu118
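After installing, it may be worth confirming that the CUDA build is the one actually being imported, since the log below reports the models running on cuda. This is a small sketch rather than part of the project; the `cuda_status` helper name is made up for illustration.

```python
def cuda_status():
    """Report whether torch is importable and whether it can see a CUDA device."""
    try:
        import torch
    except ImportError:
        # torch is not installed in this environment.
        return {"installed": False, "cuda": False, "version": None}
    return {
        "installed": True,
        "cuda": bool(torch.cuda.is_available()),
        "version": torch.__version__,
    }


if __name__ == "__main__":
    print(cuda_status())
```

With the cu118 wheel installed, the reported version string normally carries a `+cu118` suffix; a `+cpu` suffix would indicate that the CPU-only build was picked up instead.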

The log output in the console is as follows:

import sys;print('Python %s on %s'%(sys.version, sys.platform))

Connected to pydev debugger (build 232.9559.58)

L:\20231106_ConversationSystem\ChatCopilot\Langchain\Langchain-Chatchat-0.2.8\venv\Scripts\python.exe "D:/Program Files/JetBrains/PyCharm 2023.1.3/plugins/python/helpers/pydev/pydevd.py" --multiprocess --qt-support=auto --client 127.0.0.1 --port 36490 --file L:\20231106_ConversationSystem\RasaLangchain\Langchain\Langchain-Chatchat-0.2.8\startup.py -a

==============================Langchain-Chatchat Configuration==============================

操作系统:Windows-10-10.0.19044-SP0.

python版本:3.10.9 (tags/v3.10.9:1dd9be6, Dec  6 2022, 20:01:21) [MSC v.1934 64 bit (AMD64)]

项目版本:v0.2.8

langchain版本:0.0.344. fastchat版本:0.2.34

当前使用的分词器:ChineseRecursiveTextSplitter

当前启动的LLM模型:['chatglm3-6b','zhipu-api','openai-api'] @ cuda

{'device':'cuda','host':'127.0.0.1','infer_turbo':False,'model_path':'L:\\20230713_HuggingFaceModel\\GLM3\\chatglm3-6b','model_path_exists':True,'port':20002}

{'api_key':'','device':'auto','host':'127.0.0.1','infer_turbo':False,'online_api':True,'port':21001,'provider':'ChatGLMWorker','version':'chatglm_turbo','worker_class':<class 'server.model_workers.zhipu.ChatGLMWorker'>}

{'api_base_url':'https://api.openai.com/v1','api_key':'','device':'auto','host':'127.0.0.1','infer_turbo':False,'model_name':'gpt-3.5-turbo','online_api':True,'openai_proxy':'','port':20002}

当前Embbedings模型: bge-large-zh @ cuda

==============================Langchain-Chatchat Configuration==============================

2023-12-24 08:18:36,235 - startup.py[line:650] - INFO: 正在启动服务:

2023-12-24 08:18:36,236 - startup.py[line:651] - INFO: 如需查看 llm_api 日志,请前往 L:\20231106_ConversationSystem\RasaLangchain\Langchain\Langchain-Chatchat-0.2.8\logs

2023-12-24 08:19:30 | INFO | model_worker | Register to controller

2023-12-24 08:19:37 | ERROR | stderr | INFO: Started server process [126488]

2023-12-24 08:19:37 | ERROR | stderr | INFO: Waiting for application startup.

2023-12-24 08:19:37 | ERROR | stderr | INFO: Application startup complete.

2023-12-24 08:19:37 | ERROR | stderr | INFO: Uvicorn running on http://127.0.0.1:20000 (Press CTRL+C to quit)

2023-12-24 08:21:18 | INFO | model_worker | Loading the model ['chatglm3-6b'] on worker bc7ce098 ...

Loading checkpoint shards:   0%|          | 0/7 [00:00<?, ?it/s]

Loading checkpoint shards:  14%|█▍        | 1/7 [01:08<06:51, 68.62s/it]

Loading checkpoint shards:  29%|██▊       | 2/7 [02:16<05:42, 68.43s/it]

Loading checkpoint shards:  43%|████▎     | 3/7 [03:24<04:31, 67.83s/it]

Loading checkpoint shards:  57%|█████▋    | 4/7 [04:28<03:19, 66.62s/it]

Loading checkpoint shards:  71%|███████▏  | 5/7 [05:36<02:14, 67.16s/it]

Loading checkpoint shards:  86%|████████▌ | 6/7 [06:48<01:08, 68.75s/it]

Loading checkpoint shards: 100%|██████████| 7/7 [07:29<00:00, 59.44s/it]

Loading checkpoint shards: 100%|██████████| 7/7 [07:29<00:00, 64.15s/it]

2023-12-24 08:29:30 | ERROR | stderr |

2023-12-24 08:30:45 | INFO | model_worker | Register to controller

INFO: Started server process [125172]

INFO: Waiting for application startup.

INFO: Application startup complete.

INFO: Uvicorn running on http://127.0.0.1:7861 (Press CTRL+C to quit)

==============================Langchain-Chatchat Configuration==============================

操作系统:Windows-10-10.0.19044-SP0.

python版本:3.10.9 (tags/v3.10.9:1dd9be6, Dec  6 2022, 20:01:21) [MSC v.1934 64 bit (AMD64)]

项目版本:v0.2.8

langchain版本:0.0.344. fastchat版本:0.2.34

当前使用的分词器:ChineseRecursiveTextSplitter

当前启动的LLM模型:['chatglm3-6b','zhipu-api','openai-api'] @ cuda

{'device':'cuda','host':'127.0.0.1','infer_turbo':False,'model_path':'L:\\20230713_HuggingFaceModel\\GLM3\\chatglm3-6b','model_path_exists':True,'port':20002}

{'api_key':'','device':'auto','host':'127.0.0.1','infer_turbo':False,'online_api':True,'port':21001,'provider':'ChatGLMWorker','version':'chatglm_turbo','worker_class':<class 'server.model_workers.zhipu.ChatGLMWorker'>}

{'api_base_url':'https://api.openai.com/v1','api_key':'','device':'auto','host':'127.0.0.1','infer_turbo':False,'model_name':'gpt-3.5-turbo','online_api':True,'openai_proxy':'','port':20002}

当前Embbedings模型: bge-large-zh @ cuda

服务端运行信息:

OpenAI API Server: http://127.0.0.1:20000/v1

Chatchat API Server: http://127.0.0.1:7861

Chatchat WEBUI Server: http://127.0.0.1:8501

==============================Langchain-Chatchat Configuration==============================

You can now view your Streamlit app in your browser.

URL: http://127.0.0.1:8501

2023-12-24 08:37:51,151 - _client.py[line:1027] - INFO: HTTP Request: POST http://127.0.0.1:20001/list_models "HTTP/1.1 200 OK"

INFO: 127.0.0.1:31565 - "POST /llm_model/list_running_models HTTP/1.1" 200 OK

2023-12-24 08:37:51,188 - _client.py[line:1027] - INFO: HTTP Request: POST http://127.0.0.1:7861/llm_model/list_running_models "HTTP/1.1 200 OK"

2023-12-24 08:37:51,331 - _client.py[line:1027] - INFO: HTTP Request: POST http://127.0.0.1:20001/list_models "HTTP/1.1 200 OK"

2023-12-24 08:37:51,337 - _client.py[line:1027] - INFO: HTTP Request: POST http://127.0.0.1:7861/llm_model/list_running_models "HTTP/1.1 200 OK"

INFO: 127.0.0.1:31565 - "POST /llm_model/list_running_models HTTP/1.1" 200 OK

INFO: 127.0.0.1:31565 - "POST /llm_model/list_config_models HTTP/1.1" 200 OK

2023-12-24 08:37:51,413 - _client.py[line:1027] - INFO: HTTP Request: POST http://127.0.0.1:7861/llm_model/list_config_models "HTTP/1.1 200 OK"

(1) OpenAI API Server: http://127.0.0.1:20000/v1

(2) Chatchat API Server: http://127.0.0.1:7861

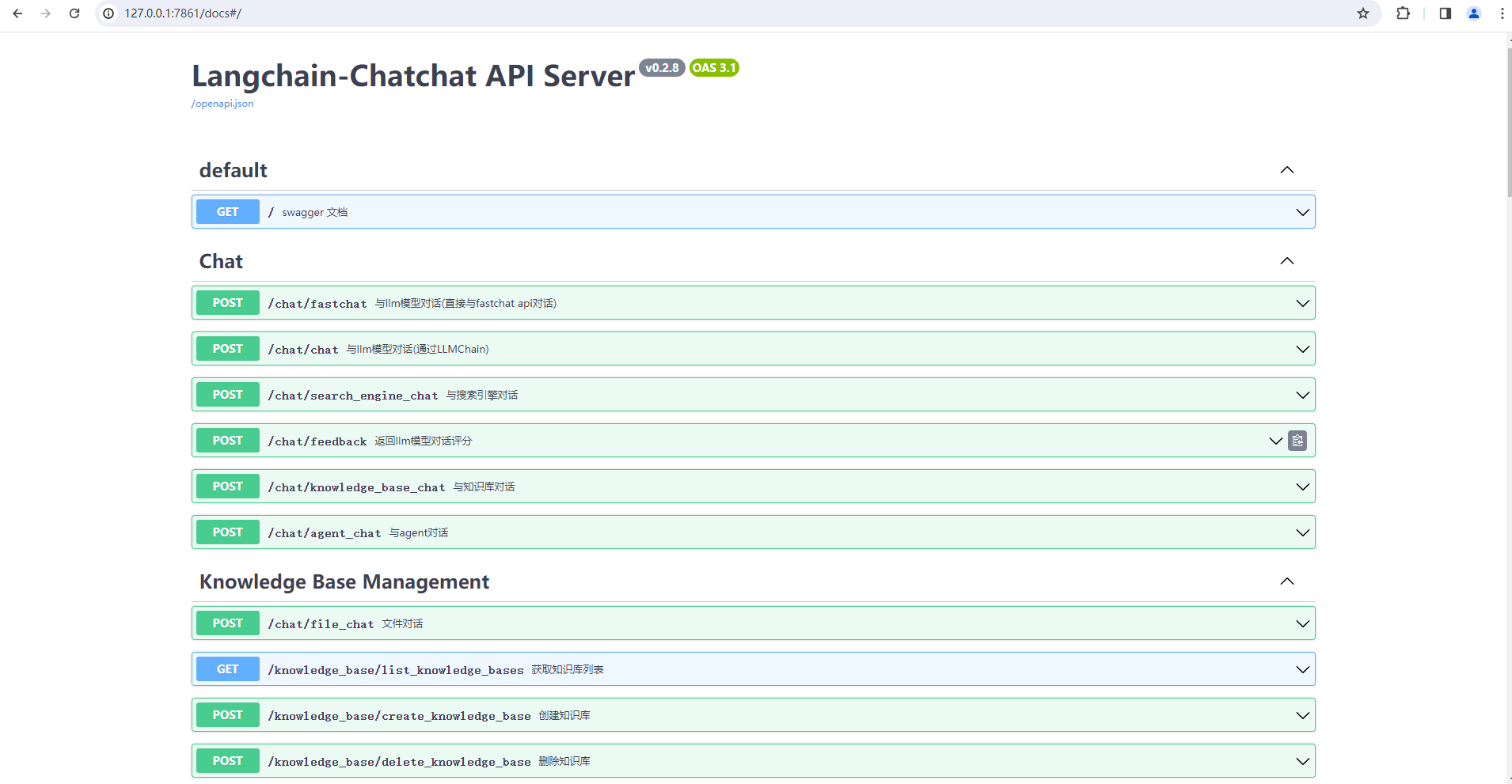

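This is the backend API documentation of Langchain-Chatchat v0.2.8, as follows:

(3) Chatchat WEBUI Server: http://127.0.0.1:8501

The conversation modes are: LLM chat, knowledge base Q&A, file chat, search engine Q&A, and custom Agent Q&A.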
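Because the model takes several minutes to load, it can be handy to check from a script whether the three services are already accepting connections. The sketch below is an illustration only: the host and port values are taken from the startup log above, while the `SERVICES` table and the `is_listening` helper are names invented here.

```python
import socket

# Addresses as printed in the startup log of this article.
SERVICES = {
    "OpenAI API Server": ("127.0.0.1", 20000),
    "Chatchat API Server": ("127.0.0.1", 7861),
    "Chatchat WEBUI Server": ("127.0.0.1", 8501),
}


def is_listening(host, port, timeout=1.0):
    """Return True if a TCP connection to host:port succeeds within the timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers connection refused, timeouts, and unreachable hosts.
        return False


if __name__ == "__main__":
    for name, (host, port) in SERVICES.items():
        state = "up" if is_listening(host, port) else "down"
        print(f"{name} ({host}:{port}): {state}")
```

An open port only shows that the process is listening; it does not prove that the model has finished loading.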

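II. Running Langchain-Chatchat-UI

Up to and including v0.1.17, Langchain-Chatchat shipped a separate Vue frontend project; from v0.2.0 onward it was removed, so this article uses the Vue project from Langchain-Chatchat v0.1.17. The basic commands for running the frontend project are npm install and npm run dev.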

1. Cannot find module node:path

Running npm run dev fails with Error: Cannot find module 'node:path', as follows:

I had previously been using Vue 2 with Node 14.17.0; switching to Node v16.16.0 fixed the problem. Run the following commands:

npm install npm@6 -g

npm cache clear --force

npm install

npm run dev
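The error comes from the Node version being too old to resolve module names with the `node:` prefix. The sketch below shows one way to check a version string before running the frontend. The threshold used (14.18.0 on the 14.x line, or 16.0.0 and later) is my assumption about when `require("node:...")` became available, so treat it as a hint and confirm it against the Node.js documentation; both helper names are hypothetical.

```python
import re
import subprocess


def parse_node_version(text):
    """Parse 'v16.16.0' or '16.16.0' into a (major, minor, patch) tuple of ints."""
    match = re.fullmatch(r"v?(\d+)\.(\d+)\.(\d+)", text.strip())
    if match is None:
        raise ValueError(f"unrecognized Node version: {text!r}")
    return tuple(int(part) for part in match.groups())


def supports_node_prefix(version_text):
    """Assumed rule: 'node:' requires work on Node >= 16.0.0 or 14.x >= 14.18.0."""
    major, minor, _patch = parse_node_version(version_text)
    if major >= 16:
        return True
    return major == 14 and minor >= 18


if __name__ == "__main__":
    # Requires the node executable to be on PATH.
    result = subprocess.run(
        ["node", "--version"], capture_output=True, text=True, check=True
    )
    version = result.stdout.strip()
    print(version, "OK" if supports_node_prefix(version) else "too old for node: imports")
```

Under this rule the two versions mentioned above behave as observed: 14.17.0 is rejected and 16.16.0 is accepted.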

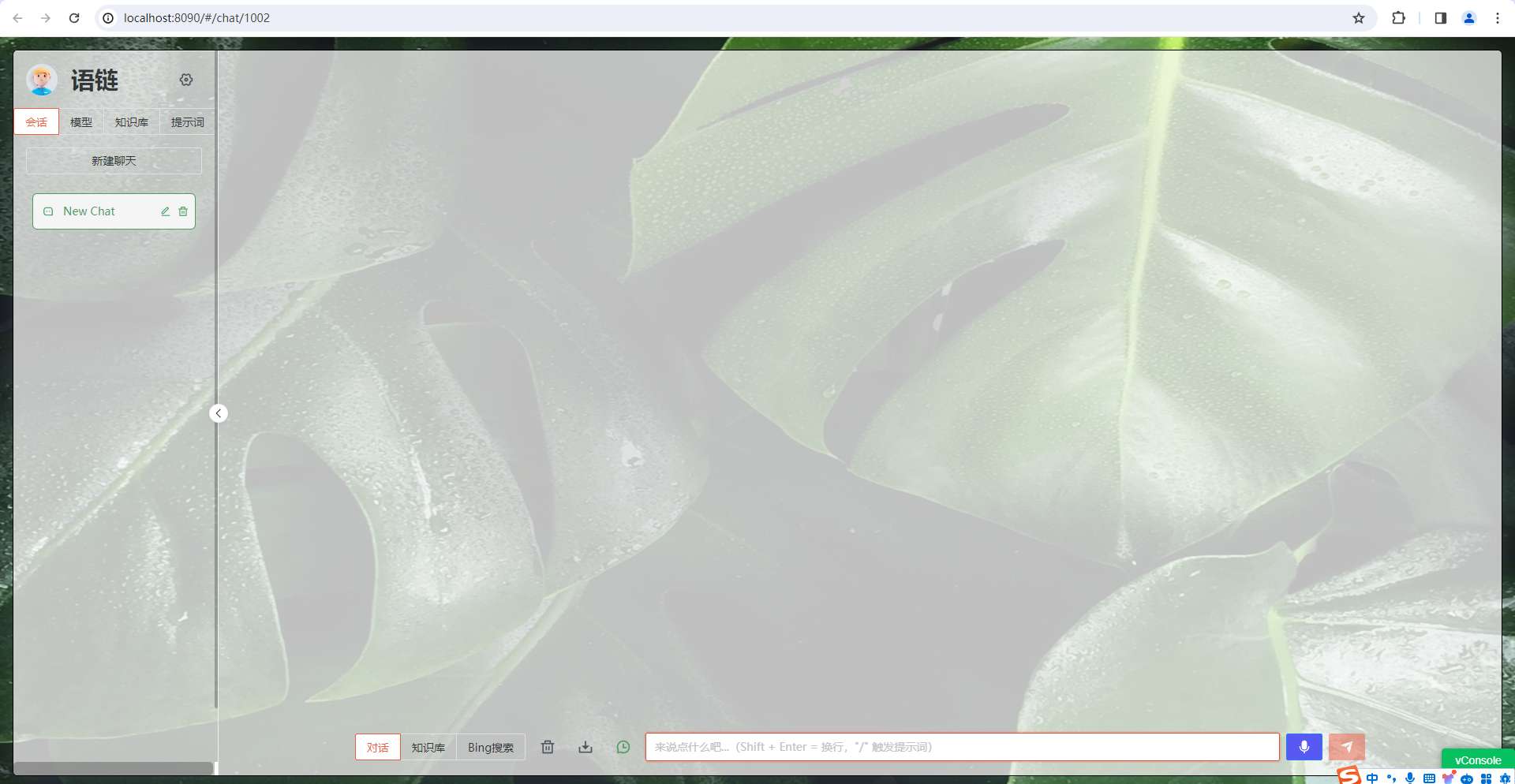

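2. Get the frontend Vue project running

This is the Vue frontend UI from Langchain-Chatchat v0.1.17 (the backend API changed in Langchain-Chatchat v0.2.8, so the frontend API calls must be updated before it will run), as follows: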

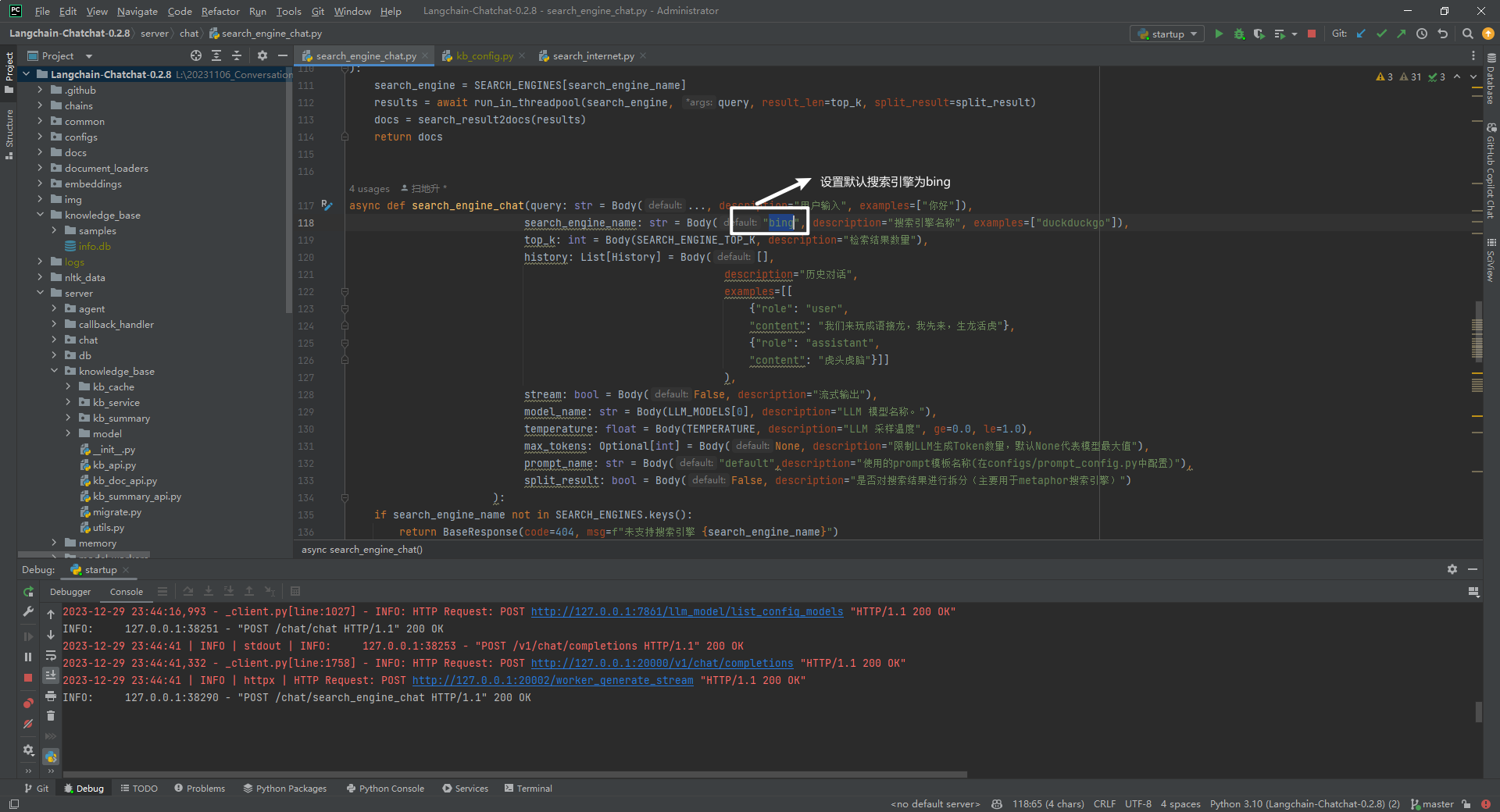

3. Problems encountered

(1) Search engine endpoint

http://localhost:8090/api/chat/search_engine_chat

(2) Knowledge base retrieval endpoint

http://localhost:8090/api/chat/file_chat

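Error: "未找到临时知识库 samples,请先上传文件" (temporary knowledge base samples not found; please upload a file first), even though the knowledge base already exists.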

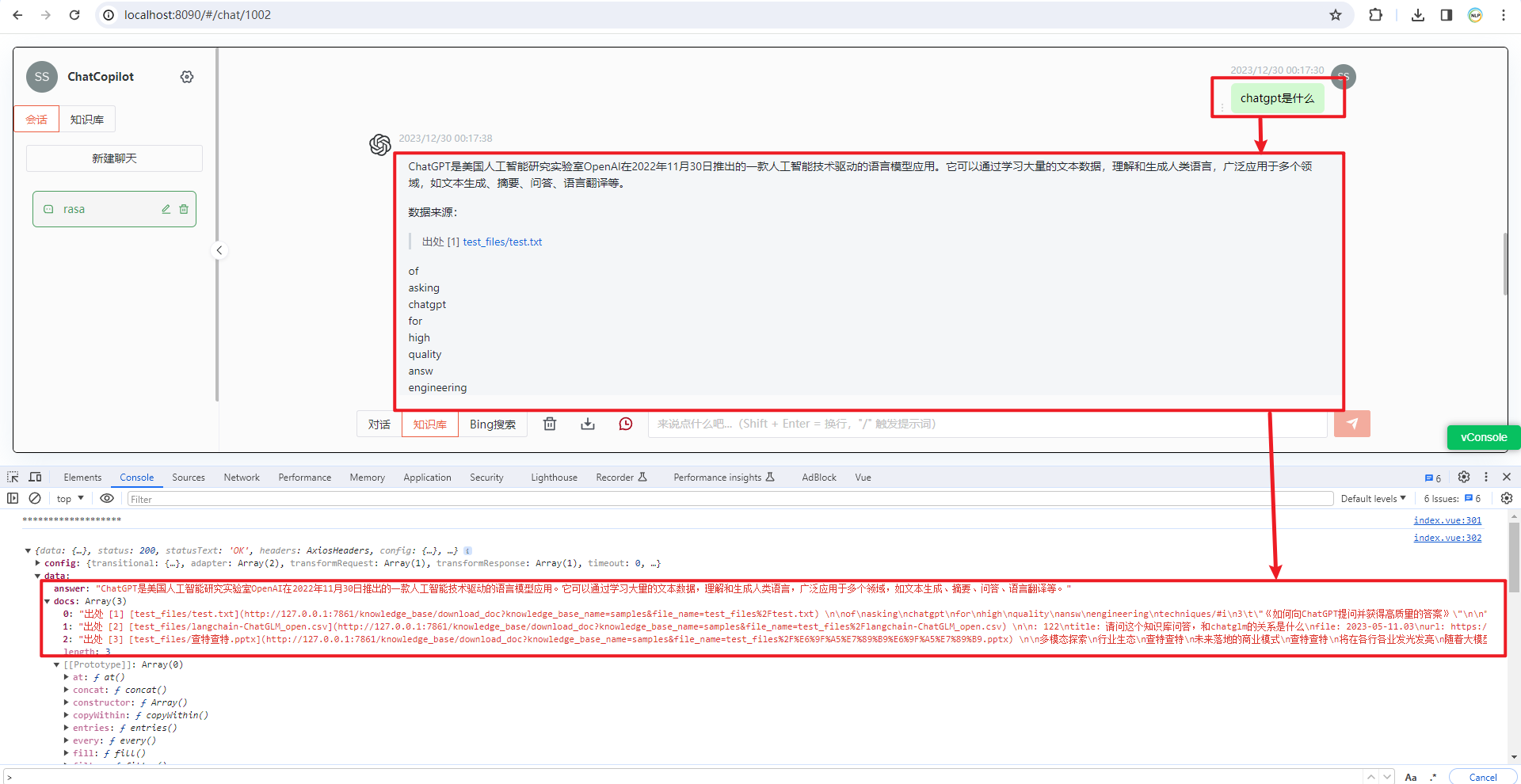

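Cause: the knowledge_base_chat endpoint, which chats against a knowledge base, should be called instead; file_chat is the endpoint for chatting with temporary files.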
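The fix amounts to pointing the request at a different path. The sketch below builds such a request with the standard library. It rests on several assumptions: the default base URL is the frontend proxy address seen in the URLs above, the path chat/knowledge_base_chat is inferred from the chat/file_chat pattern, and the payload field names (`query`, `knowledge_base_name`, `stream`) are my guesses to be checked against the backend API documentation.

```python
import json
import urllib.request


def build_kb_chat_request(query, knowledge_base_name,
                          base_url="http://localhost:8090/api"):
    """Build (but do not send) a POST request for the knowledge base chat endpoint."""
    payload = {
        "query": query,                              # assumed field name
        "knowledge_base_name": knowledge_base_name,  # assumed field name
        "stream": False,                             # assumed field name
    }
    return urllib.request.Request(
        url=f"{base_url}/chat/knowledge_base_chat",  # assumed path
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


if __name__ == "__main__":
    request = build_kb_chat_request("What does the samples knowledge base cover?", "samples")
    # Sending requires the backend (and the frontend proxy) to be running.
    with urllib.request.urlopen(request, timeout=60) as response:
        print(response.read().decode("utf-8"))
```

Separating request construction from sending makes it easy to inspect the URL and body while debugging mismatches between the old frontend and the new backend.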

The response after a successful call to knowledge_base_chat is as follows:

(3) File upload endpoint

http://localhost:8090/api/knowledge_base/upload_docs
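File uploads are typically sent as multipart/form-data, which is a common source of mismatches when adapting an older frontend. As a rough illustration, the sketch below encodes such a body with the standard library and targets the URL above. The form field names `files` and `knowledge_base_name` are assumptions, and both helpers are hypothetical; verify the expected fields in the backend API documentation.

```python
import urllib.request
import uuid


def encode_multipart(fields, files):
    """Encode text fields and (field, filename, bytes) files as multipart/form-data.

    Returns a (body_bytes, content_type_header) tuple.
    """
    boundary = uuid.uuid4().hex
    parts = []
    for name, value in fields.items():
        parts += [
            f"--{boundary}".encode(),
            f'Content-Disposition: form-data; name="{name}"'.encode(),
            b"",
            str(value).encode("utf-8"),
        ]
    for field, filename, content in files:
        parts += [
            f"--{boundary}".encode(),
            f'Content-Disposition: form-data; name="{field}"; filename="{filename}"'.encode(),
            b"Content-Type: application/octet-stream",
            b"",
            content,
        ]
    parts += [f"--{boundary}--".encode(), b""]
    return b"\r\n".join(parts), f"multipart/form-data; boundary={boundary}"


def build_upload_request(kb_name, filename, content,
                         base_url="http://localhost:8090/api"):
    """Build (but do not send) an upload request; field names are assumptions."""
    body, content_type = encode_multipart(
        {"knowledge_base_name": kb_name},
        [("files", filename, content)],
    )
    return urllib.request.Request(
        url=f"{base_url}/knowledge_base/upload_docs",
        data=body,
        headers={"Content-Type": content_type},
        method="POST",
    )
```

Passing the returned request to `urllib.request.urlopen` sends it; in a browser frontend the equivalent body is usually produced by a `FormData` object.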

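Note: after some trial and error, the Langchain-Chatchat v0.1.17 Vue frontend now works against the Langchain-Chatchat v0.2.8 backend API (the frontend UI is not open-sourced for now; feel free to get in touch with questions).

References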

[1] https://nodejs.org/download/release/v16.16.0/

[2] https://github.com/chatchat-space/Langchain-Chatchat

[3] https://github.com/LetheSec/HuggingFace-Download-Accelerator

Copyright belongs to the original author, NLP工程化. If there is any infringement, please contact us for removal.