pytorch中使用TensorBoard进行可视化Loss及特征图

安装导入TensorBoard

- 安装TensorBoard

pip install tensorboard - 导入TensorBoard

from torch.utils.tensorboard import SummaryWriter - 实例化TensorBoard

writer = SummaryWriter('./logs')

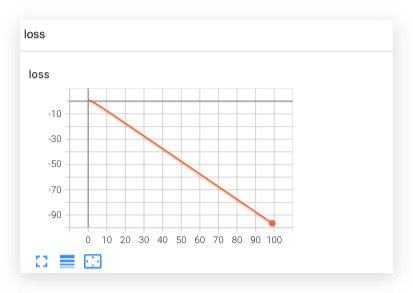

可视化标量数据

训练过程中的loss,accuracy等都是标量,都可以用TensorBoard中的add_scalar来显示,add_scalar方法中第一个参数表示表的名字,第二个参数表示的是你要存的值,第三个参数可以理解为x轴坐标。

for i inrange(100):

loss = i

writer.add_scalar("loss",loss,i)

writer.close()

终端输入tensorboard --logdir=logs,开启TensorBoard

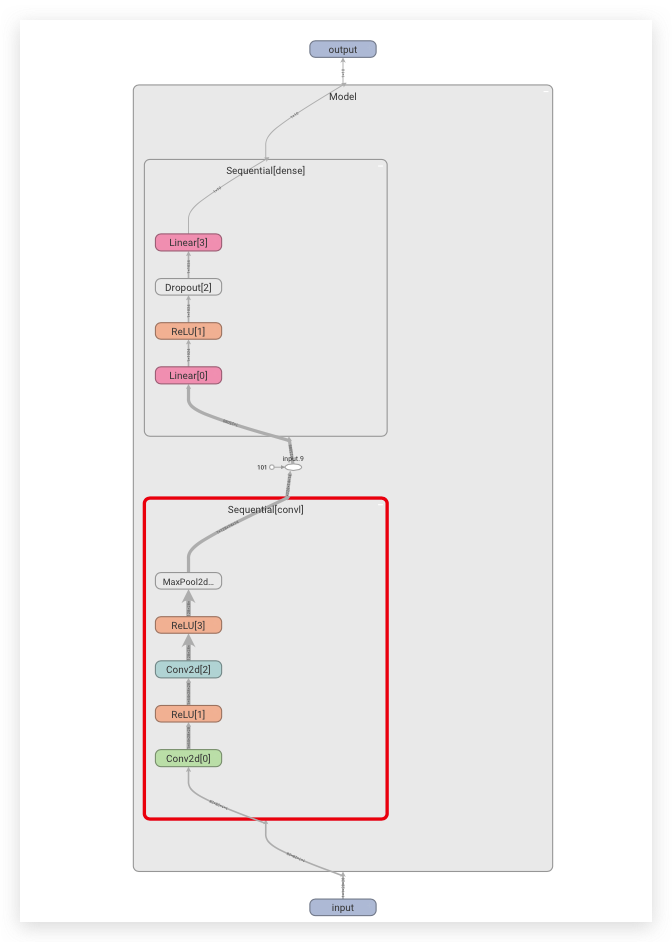

可视化网络结构

使用add_graph方法,可以将定义的网络模型可视化,第一个参数传入模型对象,第二个参数用来描述输入的shape

import torch

classModel(torch.nn.Module):def__init__(self):super(Model,self).__init__()

self.convl = torch.nn.Sequential(

torch.nn.Conv2d(1,64,kernel_size=3,stride=1,padding=1),

torch.nn.ReLU(),

torch.nn.Conv2d(64,128,kernel_size=3,stride=1,padding=1),

torch.nn.ReLU(),

torch.nn.MaxPool2d(stride=2,kernel_size=2))

self.dense = torch.nn.Sequential(

torch.nn.Linear(14*14*128,1024),

torch.nn.ReLU(),

torch.nn.Dropout(p=0.5),

torch.nn.Linear(1024,10))defforward(self,x):

x = self.convl(x)

x = x.view(-1,14*14*128)

x = self.dense(x)return x

model = Model()

images = torch.randn(1,1,28,28)

writer.add_graph(model, images)

writer.close()

可视化图片

使用add_image(self, tag, img_tensor, global_step=None, walltime=None, dataformats=‘CHW’)绘制图片,可用于检查模型的输入,监测 feature map 的变化,或是观察 weight。

tag:就是保存图的名称

img_tensor:图片的类型要是torch.Tensor, numpy.array, or string这三种

global_step:第几张图片

dataformats=‘CHW’,默认CHW,tensor是CHW,numpy是HWC

writer = SummaryWriter("logs")

image_path ="./img/1.jpg"

image_PIL = Image.open(image_path)

img = np.array(image_PIL)print(img.shape)

writer.add_image("test", img,1, dataformats='HWC')

writer.close()

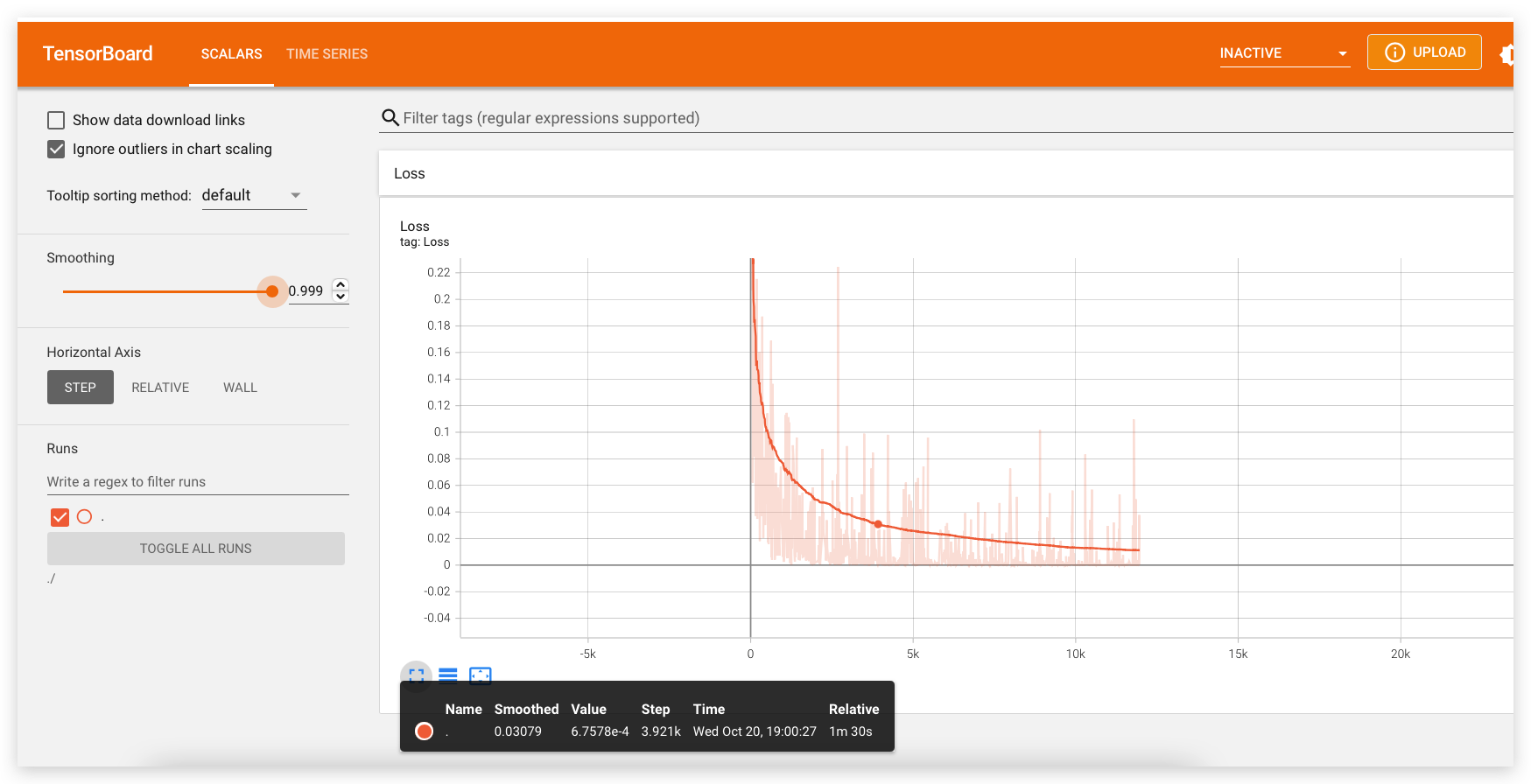

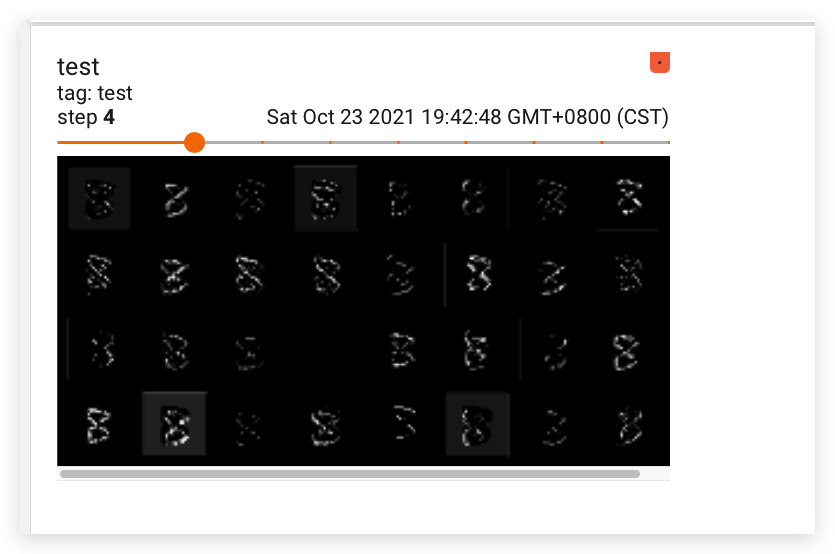

使用TensorBoard可视化Loss和卷积层特征图

- 可视化训练过程中的loss

- 可视化卷积层特征图

使用torch.nn.Module.register_forward_hook(hook_func)函数可以实现特征图的可视化,register_forward_hook是一个钩子函数,设置完后,当输入图片进行前向传播的时候就会执行自定的函数,该函数作为参数传到register_forward_hook方法中。

hook_func函数可从前向过程中接收到三个参数:hook_func(module, input, output)。其中module指的是模块的名称,比如对于ReLU模块,module是ReLU(),对于卷积模块,module是Conv2d(in_channel=…),注意module带有具体的参数。input和output就是我们心心念的特征图,这二者分别是module的输入和输出,输入可能有多个(比如concate层就有多个输入),输出只有一个,所以input是一个tuple,其中每一个元素都是一个Tensor,而输出就是一个Tensor。一般而言output可能更经常拿来做分析。我们可以在hook_func中将特征图画出来并保存为图片,所以hook_func就是我们实现可视化的关键。

defhook_func(module,input):

x =input[0][0]

x = x.unsqueeze(1)global i

image_batch = torchvision.utils.make_grid(x, padding=4)

image_batch = image_batch.numpy().transpose(1,2,0)

writer.add_image("test", image_batch, i, dataformats='HWC')

i +=1

注册torch.nn.Module.register_forward_hook函数

for name, m in model.named_modules():ifisinstance(m, torch.nn.Conv2d):

m.register_forward_pre_hook(hook_func)

完整代码

import torch

import torch.nn as nn

import torchvision

from torchvision import transforms

import torch.nn.functional as F

from torch.utils.tensorboard import SummaryWriter

import os

import cv2

classMyModel(nn.Module):def__init__(self):super(MyModel, self).__init__()

self.convl = torch.nn.Sequential(

torch.nn.Conv2d(1,32, kernel_size=3, stride=1, padding=1),

torch.nn.ReLU(),

torch.nn.Conv2d(32,64, kernel_size=3, stride=1, padding=1),

torch.nn.ReLU(),

torch.nn.Conv2d(64,128, kernel_size=3, stride=1, padding=1),

torch.nn.ReLU(),

torch.nn.MaxPool2d(stride=2, kernel_size=2))

self.dense = torch.nn.Sequential(

torch.nn.Linear(14*14*128,1024),

torch.nn.ReLU(),

torch.nn.Dropout(p=0.5),

torch.nn.Linear(1024,10))defforward(self, x):

x = self.convl(x)

x = x.view(-1,14*14*128)

x = self.dense(x)

output = F.log_softmax(x, dim=1)return output

defhook_func(module,input):

x =input[0][0]

x = x.unsqueeze(1)global i

image_batch = torchvision.utils.make_grid(x, padding=4)

image_batch = image_batch.numpy().transpose(1,2,0)

writer.add_image("test", image_batch, i, dataformats='HWC')

i +=1if __name__ =='__main__':

os.environ["KMP_DUPLICATE_LIB_OK"]="TRUE"

writer = SummaryWriter("./logs")

device = torch.device("cuda"if torch.cuda.is_available()else"cpu")

pipline = transforms.Compose([

transforms.ToTensor(),

transforms.Normalize((0.1307,),(0.3081)),])if torch.cuda.is_available():

map_location ="gpu"else:

map_location ="cpu"

model = MyModel().to(device)

model.load_state_dict(torch.load('./MyModel.pkl',map_location=map_location))

i=0for name, m in model.named_modules():#if isinstance(m, torch.nn.Conv2d):

m.register_forward_pre_hook(hook_func)

img = cv2.imread('./1.png')

writer.add_image("img", img,1, dataformats='HWC')

img = pipline(img).unsqueeze(0).to(device)

img = transforms.functional.resize(img,[28,28])

img = img.reshape(-1,1,28,28)with torch.no_grad():

model(img)

- 第一层卷积后的32张特征图

- 第二层卷积后的64张特征图

- 第三层卷积后的128张特征图

版权归原作者 _-CHEN-_ 所有, 如有侵权,请联系我们删除。