
YOLO and VOC Dataset Annotation Formats

1. YOLO Dataset Annotation Format

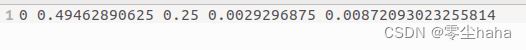

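YOLO annotation format (.txt):

Each label occupies one line per object and contains five values, in this order:

- the class of the annotated object, an integer index (starting from 0) that maps one-to-one to a class name

- the normalized x coordinate of the box center

- the normalized y coordinate of the box center

- the normalized box width w

- the normalized box height h

"Normalized" means that x and w are divided by the width of the original image, and y and h are divided by its height. All four box values therefore lie between 0 and 1.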
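Below is a minimal sketch of reading and writing one line of a YOLO .txt label. It assumes the usual layout of one object per line, with the five values separated by whitespace. The helper names `parse_yolo_line` and `format_yolo_line` are hypothetical and do not come from any library.

```python
def parse_yolo_line(line):
    """Split one YOLO label line into (class_id, x_center, y_center, w, h)."""
    parts = line.split()
    if len(parts) != 5:
        raise ValueError(f"expected 5 values, got {len(parts)}: {line!r}")
    class_id = int(parts[0])
    x, y, w, h = (float(v) for v in parts[1:])
    # All four box values are normalized, so they must lie in [0, 1]
    for v in (x, y, w, h):
        if not 0.0 <= v <= 1.0:
            raise ValueError(f"value out of [0, 1]: {v}")
    return class_id, x, y, w, h


def format_yolo_line(class_id, x, y, w, h, ndigits=6):
    """Build one YOLO label line; ndigits sets how many decimals are written."""
    return f"{class_id} {x:.{ndigits}f} {y:.{ndigits}f} {w:.{ndigits}f} {h:.{ndigits}f}"


# Class 0, box centered in the image, 10% of the image width and 20% of its height
label = parse_yolo_line("0 0.5 0.5 0.1 0.2")
print(label)                     # (0, 0.5, 0.5, 0.1, 0.2)
print(format_yolo_line(*label))  # 0 0.500000 0.500000 0.100000 0.200000
```

The range check rejects values outside [0, 1]. This is a cheap way to catch labels that were written in pixels by mistake.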

2. VOC Dataset Annotation Format

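VOC annotation format (.xml):

```xml
<annotation>
    <folder>VOC</folder>
    <filename>bird_1.jpg</filename>  <!-- image file name, including its format -->
    <size>  <!-- image size; depth 3 means a 3-channel (RGB) image -->
        <width>1024</width>
        <height>688</height>
        <depth>3</depth>
    </size>
    <segmented>0</segmented>  <!-- whether a segmentation annotation exists (0 = no) -->
    <object>  <!-- an image with several targets has one <object> element per target -->
        <name>bird</name>  <!-- class of this target -->
        <pose>Unspecified</pose>  <!-- pose of the object -->
        <truncated>0</truncated>  <!-- 1 if a significant part of the object (over roughly 15%) lies outside its box, e.g. cut off by the image border -->
        <difficult>0</difficult>  <!-- 1 if the object is hard to recognize, mainly objects whose class can only be judged together with the background; such objects are annotated but usually ignored -->
        <bndbox>  <!-- (xmin, ymin) is the top-left corner of the box, (xmax, ymax) the bottom-right corner -->
            <xmin>506</xmin>
            <ymin>170</ymin>
            <xmax>509</xmax>
            <ymax>176</ymax>
        </bndbox>
    </object>
</annotation>
```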
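Below is a minimal sketch of reading such a file with Python's standard `xml.etree.ElementTree`, assuming the element layout shown above. The function name `parse_voc_xml` is hypothetical. It returns the file name, the image size, and, for every `<object>`, its class name, `difficult` flag and pixel box.

```python
import xml.etree.ElementTree as ET


def parse_voc_xml(xml_text):
    """Return (filename, width, height, objects) from a VOC annotation string."""
    root = ET.fromstring(xml_text)
    size = root.find("size")
    width = int(size.find("width").text)
    height = int(size.find("height").text)
    objects = []
    for obj in root.iter("object"):  # one <object> element per annotated target
        box = obj.find("bndbox")
        objects.append({
            "name": obj.find("name").text,
            "difficult": int(obj.findtext("difficult", default="0")),
            # Parsed as float because some tools write non-integer coordinates
            "bbox": tuple(float(box.find(k).text) for k in ("xmin", "ymin", "xmax", "ymax")),
        })
    return root.findtext("filename"), width, height, objects


sample = """<annotation>
  <folder>VOC</folder>
  <filename>bird_1.jpg</filename>
  <size><width>1024</width><height>688</height><depth>3</depth></size>
  <segmented>0</segmented>
  <object>
    <name>bird</name><pose>Unspecified</pose>
    <truncated>0</truncated><difficult>0</difficult>
    <bndbox><xmin>506</xmin><ymin>170</ymin><xmax>509</xmax><ymax>176</ymax></bndbox>
  </object>
</annotation>"""

print(parse_voc_xml(sample))
# ('bird_1.jpg', 1024, 688, [{'name': 'bird', 'difficult': 0, 'bbox': (506.0, 170.0, 509.0, 176.0)}])
```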

3. Converting Between the Formats

Conversion formulas, where width and height are the image size in pixels:

- VOC -> YOLO

norm_x = (xmin + xmax) / 2 / width

norm_y = (ymin + ymax) / 2 / height

norm_w = (xmax - xmin) / width

norm_h = (ymax - ymin) / height

- YOLO -> VOC

xmin = width * (norm_x - 0.5 * norm_w)

ymin = height * (norm_y - 0.5 * norm_h)

xmax = width * (norm_x + 0.5 * norm_w)

ymax = height * (norm_y + 0.5 * norm_h)
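The two sets of formulas translate directly into code. The sketch below applies them to the bird box from the VOC example (a 1024x688 image with corners (506, 170) and (509, 176)). The function names `voc_to_yolo` and `yolo_to_voc` are hypothetical.

```python
def voc_to_yolo(xmin, ymin, xmax, ymax, width, height):
    """Pixel corner box (VOC) -> normalized center box (YOLO)."""
    norm_x = (xmin + xmax) / 2 / width
    norm_y = (ymin + ymax) / 2 / height
    norm_w = (xmax - xmin) / width
    norm_h = (ymax - ymin) / height
    return norm_x, norm_y, norm_w, norm_h


def yolo_to_voc(norm_x, norm_y, norm_w, norm_h, width, height):
    """Normalized center box (YOLO) -> pixel corner box (VOC)."""
    xmin = width * (norm_x - 0.5 * norm_w)
    ymin = height * (norm_y - 0.5 * norm_h)
    xmax = width * (norm_x + 0.5 * norm_w)
    ymax = height * (norm_y + 0.5 * norm_h)
    return xmin, ymin, xmax, ymax


yolo_box = voc_to_yolo(506, 170, 509, 176, 1024, 688)
print([round(v, 6) for v in yolo_box])
# [0.495605, 0.251453, 0.00293, 0.008721]

# Converting back recovers the original corners (up to floating-point error)
print([round(v, 3) for v in yolo_to_voc(*yolo_box, 1024, 688)])
# [506.0, 170.0, 509.0, 176.0]
```

The two functions are exact inverses of each other. Any drift after a round trip therefore comes only from floating-point rounding, or from rounding the normalized values when they are written to the .txt file.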

Box-conversion helpers from the official YOLOv5 open-source code:

```python
import numpy as np
import torch


def clip_coords(boxes, shape):
    # Clip xyxy bounding boxes to image shape (height, width)
    """Limit box coordinates (x1y1x2y2: top-left, bottom-right) to the image size (img_shape hw) so boxes do not go out of bounds."""
    if isinstance(boxes, torch.Tensor):  # faster individually
        boxes[:, 0].clamp_(0, shape[1])  # x1
        boxes[:, 1].clamp_(0, shape[0])  # y1
        boxes[:, 2].clamp_(0, shape[1])  # x2
        boxes[:, 3].clamp_(0, shape[0])  # y2
    else:  # np.array (faster grouped)
        boxes[:, [0, 2]] = boxes[:, [0, 2]].clip(0, shape[1])  # x1, x2
        boxes[:, [1, 3]] = boxes[:, [1, 3]].clip(0, shape[0])  # y1, y2


def xyxy2xywh(x):
    # Convert nx4 boxes from [x1, y1, x2, y2] to [x, y, w, h] where xy1=top-left, xy2=bottom-right
    y = x.clone() if isinstance(x, torch.Tensor) else np.copy(x)
    y[:, 0] = (x[:, 0] + x[:, 2]) / 2  # x center
    y[:, 1] = (x[:, 1] + x[:, 3]) / 2  # y center
    y[:, 2] = x[:, 2] - x[:, 0]  # width
    y[:, 3] = x[:, 3] - x[:, 1]  # height
    return y


def xywh2xyxy(x):
    # Convert nx4 boxes from [x, y, w, h] to [x1, y1, x2, y2] where xy1=top-left, xy2=bottom-right
    y = x.clone() if isinstance(x, torch.Tensor) else np.copy(x)
    y[:, 0] = x[:, 0] - x[:, 2] / 2  # top left x
    y[:, 1] = x[:, 1] - x[:, 3] / 2  # top left y
    y[:, 2] = x[:, 0] + x[:, 2] / 2  # bottom right x
    y[:, 3] = x[:, 1] + x[:, 3] / 2  # bottom right y
    return y


def xywhn2xyxy(x, w=640, h=640, padw=0, padh=0):
    # Convert nx4 boxes from [x, y, w, h] normalized to [x1, y1, x2, y2] where xy1=top-left, xy2=bottom-right
    y = x.clone() if isinstance(x, torch.Tensor) else np.copy(x)
    y[:, 0] = w * (x[:, 0] - x[:, 2] / 2) + padw  # top left x
    y[:, 1] = h * (x[:, 1] - x[:, 3] / 2) + padh  # top left y
    y[:, 2] = w * (x[:, 0] + x[:, 2] / 2) + padw  # bottom right x
    y[:, 3] = h * (x[:, 1] + x[:, 3] / 2) + padh  # bottom right y
    return y


def xyxy2xywhn(x, w=640, h=640, clip=False, eps=0.0):
    # Convert nx4 boxes from [x1, y1, x2, y2] to [x, y, w, h] normalized where xy1=top-left, xy2=bottom-right
    if clip:
        clip_coords(x, (h - eps, w - eps))  # warning: inplace clip
    y = x.clone() if isinstance(x, torch.Tensor) else np.copy(x)
    y[:, 0] = ((x[:, 0] + x[:, 2]) / 2) / w  # x center
    y[:, 1] = ((x[:, 1] + x[:, 3]) / 2) / h  # y center
    y[:, 2] = (x[:, 2] - x[:, 0]) / w  # width
    y[:, 3] = (x[:, 3] - x[:, 1]) / h  # height
    return y


def xyn2xy(x, w=640, h=640, padw=0, padh=0):
    # Convert normalized segments into pixel segments, shape (n,2)
    y = x.clone() if isinstance(x, torch.Tensor) else np.copy(x)
    y[:, 0] = w * x[:, 0] + padw  # x in pixels
    y[:, 1] = h * x[:, 1] + padh  # y in pixels
    return y
```
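The next sketch puts the pieces together. It uses only the standard library and turns one VOC XML annotation into the lines of a YOLO .txt file. Each box is clipped to the image first, in the spirit of `xyxy2xywhn(..., clip=True)`, although this simplified version ignores `eps`. Class ids come from the position of the class name in a list.

The function name `voc_xml_to_yolo_lines`, the `classes` list and the `skip_difficult` option are all hypothetical. In practice the class order must match the one used for training.

```python
import xml.etree.ElementTree as ET


def voc_xml_to_yolo_lines(xml_text, classes, skip_difficult=False):
    """Convert one VOC annotation (XML string) into YOLO label lines."""
    root = ET.fromstring(xml_text)
    width = float(root.find("size/width").text)
    height = float(root.find("size/height").text)
    lines = []
    for obj in root.iter("object"):
        # Optionally drop objects flagged as difficult
        if skip_difficult and obj.findtext("difficult", default="0").strip() == "1":
            continue
        class_id = classes.index(obj.find("name").text)  # raises ValueError for an unknown class
        box = obj.find("bndbox")
        xmin, ymin, xmax, ymax = (float(box.find(k).text) for k in ("xmin", "ymin", "xmax", "ymax"))
        # Clip the corners to the image bounds before normalizing
        xmin, xmax = min(max(xmin, 0.0), width), min(max(xmax, 0.0), width)
        ymin, ymax = min(max(ymin, 0.0), height), min(max(ymax, 0.0), height)
        x = (xmin + xmax) / 2 / width
        y = (ymin + ymax) / 2 / height
        w = (xmax - xmin) / width
        h = (ymax - ymin) / height
        lines.append(f"{class_id} {x:.6f} {y:.6f} {w:.6f} {h:.6f}")
    return lines


sample = """<annotation><size><width>1024</width><height>688</height><depth>3</depth></size>
<object><name>bird</name><difficult>0</difficult>
<bndbox><xmin>506</xmin><ymin>170</ymin><xmax>509</xmax><ymax>176</ymax></bndbox></object>
</annotation>"""

print(voc_xml_to_yolo_lines(sample, classes=["bird"]))
# ['0 0.495605 0.251453 0.002930 0.008721']
```

Joining the returned list with newlines gives the contents of the image's .txt label file.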

Reposted from: https://blog.csdn.net/weixin_49513223/article/details/127321295

Copyright belongs to the original author, 零尘haha. In case of infringement, please contact us for removal.
