0. Environment

Python 3.8.5 + PyTorch

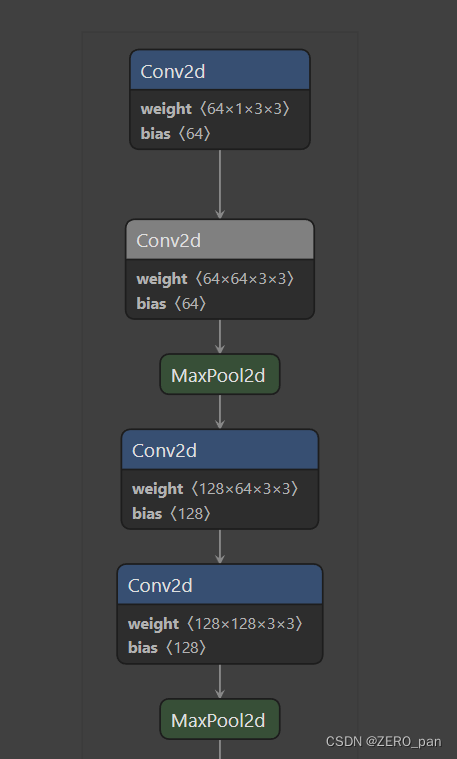

1. Visualizing the model structure

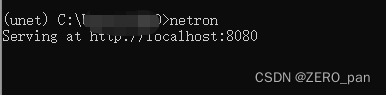

1.1 Netron

step1: Install netron in the virtual environment

pip install netron

step2: Start netron in the virtual environment

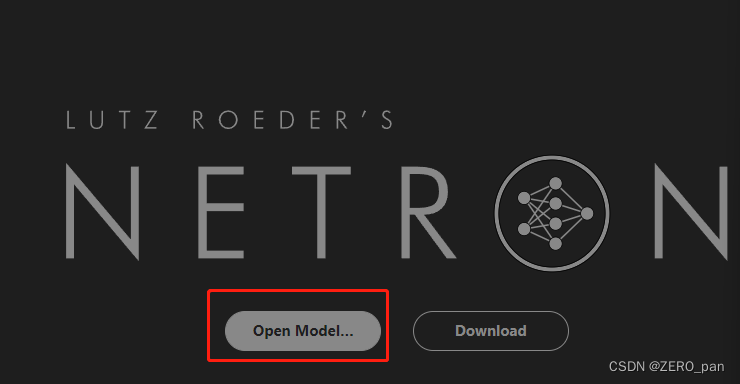

step3: Open http://localhost:8080/ in a browser

step4: Select the saved model file xxx.pt

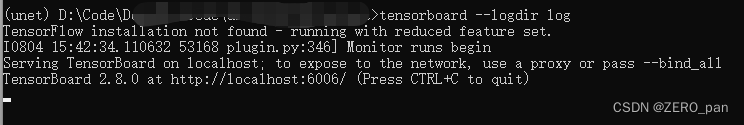
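Netron tends to show more structure when it is given a serialized graph rather than a bare set of weights. The sketch below is one possible way to produce such a file, assuming a TorchScript export through torch.jit.trace. The original text only says "the saved model xxx.pt", so the export route, the helper names export_torchscript and open_in_netron, and the tiny stand-in model are illustrative assumptions rather than the author's method. netron.start is the Python entry point documented by the netron package.

```python
import os
import tempfile

import torch
from torch import nn


def export_torchscript(model, example_input, path):
    """Trace the model with an example input and save it as a TorchScript file."""
    model.eval()  # trace in inference mode so layers such as BatchNorm are stable
    traced = torch.jit.trace(model, example_input)
    traced.save(path)
    return path


def open_in_netron(path):
    """Serve the file with Netron (requires `pip install netron`)."""
    import netron  # imported lazily so the export helper works without netron
    netron.start(path)


# Stand-in model (hypothetical): replace it with your own network and input shape.
demo_model = nn.Sequential(nn.Linear(4, 8), nn.ReLU(), nn.Linear(8, 3))
demo_path = export_torchscript(
    demo_model, torch.zeros(1, 4), os.path.join(tempfile.mkdtemp(), "demo.pt")
)
print(demo_path)
# open_in_netron(demo_path)  # uncomment in an interactive session to view the graph
```

Keeping the export and the viewer in separate helpers means the file can be produced on a training machine and opened later wherever Netron is installed.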

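1.2 Using TensorBoard

step1: Install TensorBoard. The simplest way is to install TensorFlow, which pulls in TensorBoard as a dependency. Note that TensorFlow 1.15 was published for Python 3.7 and earlier, so this pinned version may fail to install on Python 3.8.5; the standalone package (pip install tensorboard) is enough for torch.utils.tensorboard.

pip install tensorflow==1.15.0 -i https://mirrors.aliyun.com/pypi/simple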

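step2: Add the logging calls to your code

import torch
from torch.utils.tensorboard import SummaryWriter
# place this after the model has been built
writer = SummaryWriter(log_dir='./output/log')
# the dummy input must match your training samples: 10 is the batch_size (any value works),
# 4 is the feature length of one sample and must agree with the training data
writer.add_graph(model, torch.empty(10, 4))
# place this in the training loop, next to the backward pass, to record loss and acc
writer.add_scalar('loss', _loss, train_step)
writer.add_scalar('acc', _acc, train_step)
train_step += 1
# plot the train loss and the test loss on the same chart; note the trailing "s" in add_scalars
writer.add_scalars('epoch_loss', {'train': train_loss, 'test': test_loss}, epoch)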
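The calls above are fragments that assume an existing model and training loop. As a rough end-to-end sketch, the following script wires them into a tiny training run so the resulting event file can be inspected. The two-layer model, the random data, the function name log_demo_run, and the temporary log directory are all assumptions made for illustration; torch.zeros is used for the dummy graph input here instead of torch.empty so the traced values are well defined.

```python
import tempfile
from pathlib import Path

import torch
from torch import nn
from torch.utils.tensorboard import SummaryWriter


def log_demo_run(log_dir, steps=20):
    """Train a toy classifier on random data and log graph, loss and accuracy."""
    torch.manual_seed(0)
    model = nn.Sequential(nn.Linear(4, 8), nn.ReLU(), nn.Linear(8, 3))
    opt = torch.optim.SGD(model.parameters(), lr=0.1)
    loss_f = nn.CrossEntropyLoss()
    X = torch.randn(10, 4)            # 10 samples with 4 features each
    y = torch.randint(0, 3, (10,))    # random labels for 3 classes

    writer = SummaryWriter(log_dir=log_dir)
    writer.add_graph(model, torch.zeros(10, 4))  # dummy input with the training shape
    for step in range(steps):
        logits = model(X)
        loss = loss_f(logits, y)
        acc = (logits.argmax(dim=1) == y).float().mean()
        opt.zero_grad()
        loss.backward()
        opt.step()
        writer.add_scalar('loss', loss.item(), step)
        writer.add_scalar('acc', acc.item(), step)
    # add_scalars groups several curves under one chart
    writer.add_scalars('final', {'loss': loss.item(), 'acc': acc.item()}, steps)
    writer.close()


demo_log_dir = tempfile.mkdtemp()  # stand-in for './output/log'
log_demo_run(demo_log_dir)
print(sorted(p.name for p in Path(demo_log_dir).iterdir()))
```

Pointing tensorboard --logdir at the printed directory should show the graph and the scalar curves from this toy run.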

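step3: View the logs in TensorBoard

- Open a cmd command prompt;

- Switch to the drive that holds the events file;

- Make sure the path to the events file contains no Chinese characters;

- Run the command:

tensorboard --logdir C:\Users\...\output\log

- Open http://localhost:6006/#images in a browser.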

2. Visualizing the training process

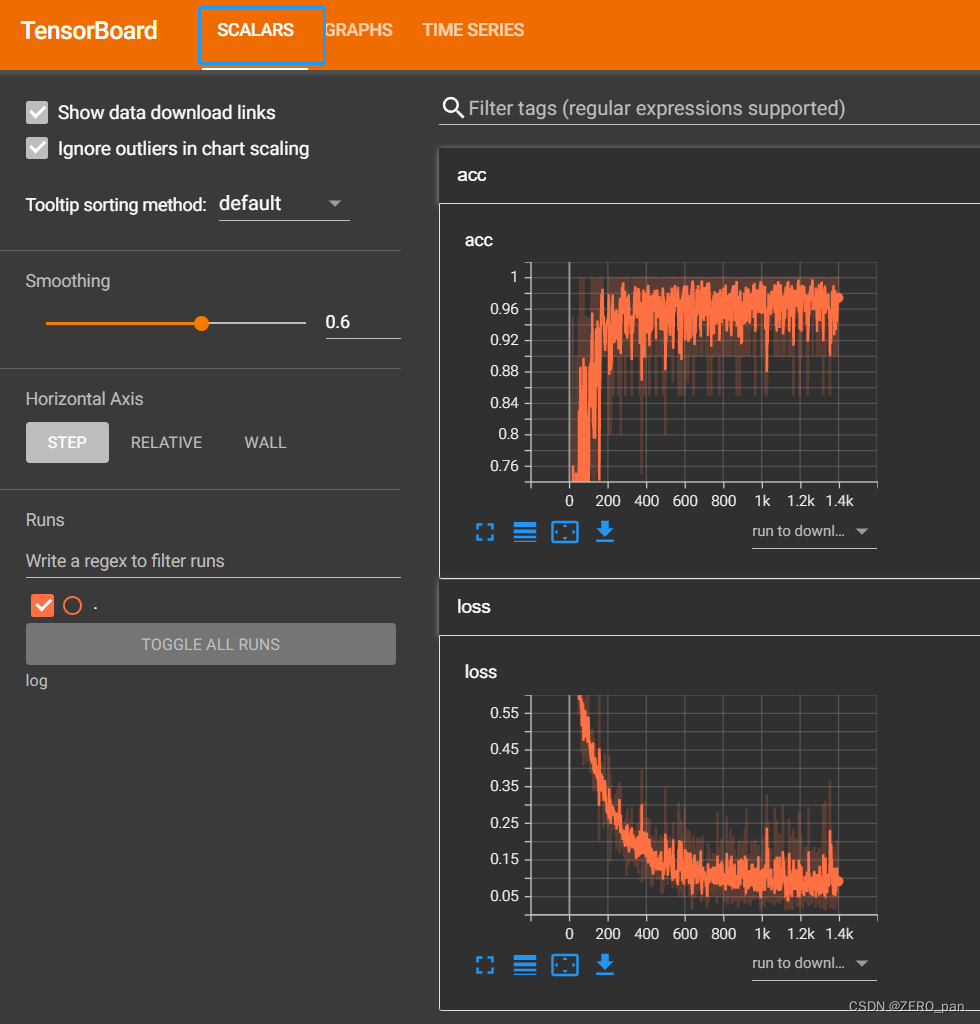

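2.1 TensorBoard

As described above, you only need to call the add_* methods during training.

# place this in the training loop, next to the backward pass, to record loss and acc
writer.add_scalar('loss', _loss, train_step)
writer.add_scalar('acc', _acc, train_step)
train_step += 1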

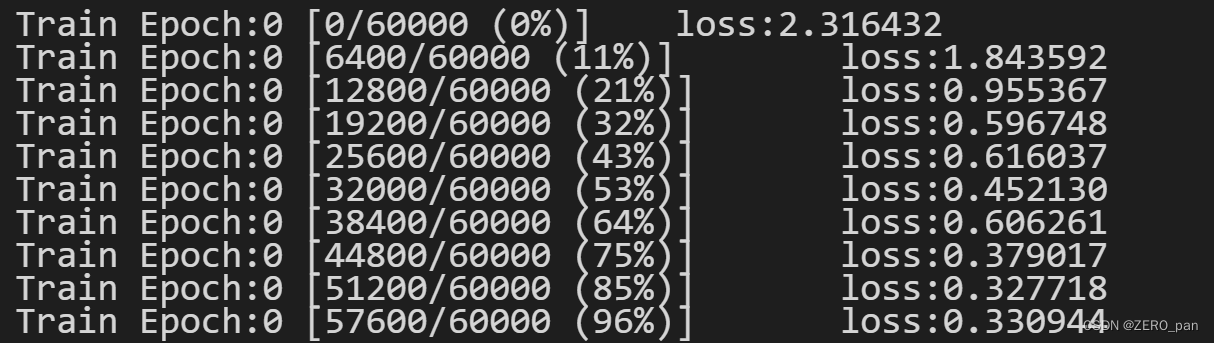

2.2 Plain print statements

if batch_idx % 100 == 0:
    print(f"Train Epoch:{epoch} [{batch_idx*len(data)}/{len(train_loader.dataset)} ({100.*batch_idx/len(train_loader):.0f}%)]\tloss:{loss.item():.6f}")
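To see what this progress line looks like without running a real training job, the formatting can be pulled into a small function and driven with placeholder values. This is only a sketch: the function name format_progress, the dataset size of 60000, the batch size of 32, and the fake loss values are assumptions for illustration.

```python
def format_progress(epoch, batch_idx, batch_size, dataset_size, num_batches, loss):
    """Build the same progress line as the print statement above."""
    seen = batch_idx * batch_size                 # samples processed so far
    percent = 100.0 * batch_idx / num_batches     # share of batches completed
    return (f"Train Epoch:{epoch} [{seen}/{dataset_size} "
            f"({percent:.0f}%)]\tloss:{loss:.6f}")


# Hypothetical run: 60000 samples in batches of 32 gives 1875 batches.
dataset_size, batch_size = 60000, 32
num_batches = -(-dataset_size // batch_size)  # ceiling division

for batch_idx in range(num_batches):
    fake_loss = 1.0 / (batch_idx + 1)  # placeholder, not a real training loss
    if batch_idx % 100 == 0:
        print(format_progress(1, batch_idx, batch_size, dataset_size, num_batches, fake_loss))
```

Printing only every 100th batch keeps the console readable while still showing how the loss develops within an epoch.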


3. Visualizing extracted features

This requires TensorBoard together with forward hooks. The full code follows.

model: LeNet

data: MNIST
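The LeNet variant in this section passes 256 features into its first fully connected layer, nn.Linear(256, 120). That number follows from the 28x28 MNIST input and the convolution and pooling sizes. The short sketch below reproduces the arithmetic; the helper names conv_out and lenet_flat_features are made up for this illustration.

```python
def conv_out(size, kernel, stride=1, padding=0):
    """Output side length of a convolution or pooling layer on a square input."""
    return (size + 2 * padding - kernel) // stride + 1


def lenet_flat_features(side=28, out_channels=16):
    """Flattened feature count entering the first Linear layer of this LeNet variant."""
    side = conv_out(side, kernel=5)             # Conv2d(kernel_size=5): 28 -> 24
    side = conv_out(side, kernel=2, stride=2)   # AvgPool2d(2, 2):       24 -> 12
    side = conv_out(side, kernel=5)             # Conv2d(kernel_size=5): 12 -> 8
    side = conv_out(side, kernel=2, stride=2)   # AvgPool2d(2, 2):        8 -> 4
    return out_channels * side * side


print(lenet_flat_features(28))  # 16 * 4 * 4 = 256, matching nn.Linear(256, 120)
print(lenet_flat_features(32))  # 16 * 5 * 5 = 400 for 32x32 inputs
```

If the input resolution changes, the in_features of the first Linear layer has to change with it, which is what the second call illustrates.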

import torch
from torch import nn
from torchvision import datasets, transforms
from torch.utils.data import DataLoader
from torch.utils.tensorboard import SummaryWriter
from myutils.metrics import Acc_Score  # the author's own accuracy class, a subclass of nn.Module


class LeNet_BN(nn.Module):
    def __init__(self, in_chanel) -> None:
        super(LeNet_BN, self).__init__()
        self.feature_hook_img = {}  # layer index -> most recent output of that layer
        self.features = nn.Sequential(
            nn.Conv2d(in_chanel, 6, kernel_size=5), nn.BatchNorm2d(6), nn.Sigmoid(),
            nn.AvgPool2d(kernel_size=2, stride=2),
            nn.Conv2d(6, 16, kernel_size=5), nn.BatchNorm2d(16), nn.Sigmoid())
        self.classifi = nn.Sequential(
            nn.AvgPool2d(kernel_size=2, stride=2), nn.Flatten(),
            nn.Linear(256, 120), nn.BatchNorm1d(120), nn.Sigmoid(),
            nn.Linear(120, 84), nn.BatchNorm1d(84), nn.Sigmoid(),
            nn.Linear(84, 10))

    def forward(self, X):
        X = self.features(X)  # feature extraction and classification must be kept separate
        X = self.classifi(X)
        return X

    def add_hooks(self):
        # register one visualization hook per layer of self.features
        def create_hook_fn(idx):
            def hook_fn(model, input, output):
                # detach so the stored feature map does not keep the autograd graph alive
                self.feature_hook_img[idx] = output.detach().cpu()
            return hook_fn

        for _idx, _layer in enumerate(self.features):
            _layer.register_forward_hook(create_hook_fn(_idx))

    def add_image_summary(self, writer, step, prefix=None):
        if len(self.feature_hook_img) == 0:
            return
        if prefix is None:
            prefix = 'layer'
        else:
            prefix = f"{prefix}_layer"
        for _k in self.feature_hook_img:
            _v = self.feature_hook_img[_k][0:1, ...]  # keep only the first image of the batch
            # (1,c,h,w) -> (c,1,h,w): swap the axes so each channel is shown as its own grayscale image
            _v = _v.permute(1, 0, 2, 3)
            writer.add_images(f"{prefix}_{_k}", _v, step)


if __name__ == '__main__':
    # load the data
    # device = torch.device('cuda:0' if torch.cuda.is_available() else 'cpu')

    tsf = transforms.Compose([transforms.ToTensor()])
    train_data = datasets.MNIST(root='dataset/mnist_train', train=True, transform=tsf, download=True)
    train_data_loader = DataLoader(train_data, batch_size=32, shuffle=True)
    test_data = datasets.MNIST(root='dataset/mnist_test', train=False, transform=tsf, download=True)
    test_data_loader = DataLoader(test_data, batch_size=32, shuffle=False)

    model = LeNet_BN(1)
    model.add_hooks()
    lr = 1e-2
    epochs = 10
    loss_f = torch.nn.CrossEntropyLoss()
    acc_f = Acc_Score()
    opt = torch.optim.SGD(model.parameters(), lr)
    writer = SummaryWriter(log_dir='./output/log')
    writer.add_graph(model, torch.empty(10, 1, 28, 28))

    for epoch in range(epochs):
        model.train()  # switch BatchNorm back to training mode after the previous evaluation
        for idx, data in enumerate(train_data_loader):
            X, y = data
            y = y.to(torch.long)
            # forward pass
            y_pred = model(X)
            train_loss = loss_f(y_pred, y)
            train_acc = acc_f(y_pred, y)
            # backward pass
            opt.zero_grad()
            train_loss.backward()
            opt.step()
            if (idx + 1) % 100 == 0:
                print(f"epoch:{epoch} |{(idx+1)*32}/{len(train_data)}({100.*(idx+1)*32/len(train_data):.2f}%)|\tloss:{train_loss.item():.3f}\tacc:{train_acc.item():.2f}")
        model.add_image_summary(writer, epoch, 'train')  # log the feature maps from this epoch's training

        test_loss = 0
        test_acc = 0
        test_numbers = len(test_data_loader)  # number of test batches, including the last partial one
        model.eval()
        with torch.no_grad():  # no gradients are needed for evaluation
            for data in test_data_loader:
                X, y = data
                y = y.to(torch.long)
                y_pred = model(X)
                test_loss += loss_f(y_pred, y).item()
                test_acc += acc_f(y_pred, y).item()
        test_loss = test_loss / test_numbers
        test_acc = test_acc / test_numbers
        print('test res:')
        print(f"epoch:{epoch} \tloss:{test_loss:.3f}\tacc:{test_acc:.2f}")
        print('-' * 80)
        # the train values are those of the last training batch in this epoch
        writer.add_scalars('epoch_loss', {'train': train_loss.item(), 'test': test_loss}, epoch)
        writer.add_scalars('epoch_acc', {'train': train_acc.item(), 'test': test_acc}, epoch)

    writer.close()  # close the writer
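The hook mechanism used by add_hooks can be tried in isolation, without MNIST or TensorBoard. The following sketch registers a forward hook on every layer of a small nn.Sequential, runs one dummy batch, and then applies the same (1, C, H, W) to (C, 1, H, W) permutation that add_image_summary uses before calling add_images. The helper name capture_feature_maps, the three-layer stand-in network, and the zero-filled input are assumptions for illustration.

```python
import torch
from torch import nn


def capture_feature_maps(features, x):
    """Run x through `features` and return {layer index: output tensor}."""
    captured = {}

    def make_hook(idx):
        def hook(module, inputs, output):
            captured[idx] = output.detach().cpu()  # store a copy without autograd history
        return hook

    handles = [layer.register_forward_hook(make_hook(i))
               for i, layer in enumerate(features)]
    try:
        with torch.no_grad():
            features(x)
    finally:
        for handle in handles:
            handle.remove()  # leave the module without hooks afterwards
    return captured


# Stand-in for the first layers of a LeNet-style feature extractor.
features = nn.Sequential(
    nn.Conv2d(1, 6, kernel_size=5),
    nn.Sigmoid(),
    nn.AvgPool2d(kernel_size=2, stride=2),
)
maps = capture_feature_maps(features, torch.zeros(2, 1, 28, 28))

# Take the first image and turn its channels into a batch of grayscale images.
grid = maps[0][0:1].permute(1, 0, 2, 3)
for idx, tensor in maps.items():
    print(idx, tuple(tensor.shape))
print(tuple(grid.shape))
```

Removing the hook handles after the forward pass is a design choice for this standalone helper; the class in this section keeps its hooks registered so that every training step refreshes the stored feature maps.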

Reposted from: https://blog.csdn.net/qq_42911863/article/details/126160153

Copyright belongs to the original author, ZERO_pan. If there is any infringement, please contact us for removal.
