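Preface

- DLinear is this year's SOTA model for time-series forecasting. Paper download link; you can also read my write-up of the paper, though reading the original is of course best.
- DLinear and NLinear model GitHub project page; download the project files from there.
- I also provide a copy of the project files with my own comments added: download link.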

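Parameter settings module (run_longExp)

- First, open `run_longExp.py` and make sure the code runs without changing any parameters. On Windows, delete the `required=True` option from the `--is_training`, `--model_id`, `--model`, and `--data` arguments; otherwise argparse exits with an error, because a required argument must be passed on the command line even when it has a default. Also set `--num_workers` to 0.
- Next, create a subfolder named `data` inside the project folder to hold the training data. The ETTh1 dataset works here; download link.
- Run `run_longExp.py`. If training finishes without errors, the setup works.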
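
The effect of `required=True` can be reproduced in isolation. This is a minimal sketch, not the project's parser: it keeps only two of the arguments, and `build_parser` and `try_parse` are hypothetical helper names introduced for the illustration.

```python
import argparse


def build_parser(required: bool) -> argparse.ArgumentParser:
    """Build a two-argument parser; `required` toggles the option discussed above."""
    parser = argparse.ArgumentParser(description="demo")
    parser.add_argument("--model", type=str, default="DLinear", required=required)
    parser.add_argument("--data", type=str, default="ETTh1", required=required)
    return parser


def try_parse(parser: argparse.ArgumentParser, argv):
    """Return the parsed namespace, or None if argparse rejects argv."""
    try:
        return parser.parse_args(argv)
    except SystemExit:
        # argparse prints a usage message to stderr and exits on missing required args
        return None


# Simulate launching the script with no command-line arguments.
strict = try_parse(build_parser(required=True), [])
relaxed = try_parse(build_parser(required=False), [])

print(strict)                       # None: the defaults are ignored for required args
print(relaxed.model, relaxed.data)  # DLinear ETTh1
```

With `required=True` the defaults never come into play, which is why the script only starts from an IDE or a bare `python run_longExp.py` once the option is removed.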

Parameter meanings

- The meaning of each parameter is given in the comments below.

    parser = argparse.ArgumentParser(description='Autoformer & Transformer family for Time Series Forecasting')

    # whether to run training
    parser.add_argument('--is_training', type=int, default=1, help='status')
    # model ID prefix
    parser.add_argument('--model_id', type=str, default='test', help='model id')
    # model to use (available: Autoformer, Informer, Transformer, DLinear, NLinear)
    parser.add_argument('--model', type=str, default='DLinear', help='model name, options: [Autoformer, Informer, Transformer]')

    # dataset selection
    parser.add_argument('--data', type=str, default='ETTh1', help='dataset type')
    # folder that holds the data
    parser.add_argument('--root_path', type=str, default='./data/', help='root path of the data file')
    # full name of the data file
    parser.add_argument('--data_path', type=str, default='ETTh1.csv', help='data file')
    # forecasting task (M: multivariate predicts multivariate, S: univariate predicts univariate, MS: multivariate predicts univariate)
    parser.add_argument('--features', type=str, default='M', help='forecasting task, options:[M, S, MS]; M:multivariate predict multivariate, S:univariate predict univariate, MS:multivariate predict univariate')
    # column to predict; needed for the S and MS tasks
    parser.add_argument('--target', type=str, default='OT', help='target feature in S or MS task')
    # frequency used for time-feature encoding
    parser.add_argument('--freq', type=str, default='h', help='freq for time features encoding, options:[s:secondly, t:minutely, h:hourly, d:daily, b:business days, w:weekly, m:monthly], you can also use more detailed freq like 15min or 3h')
    # folder where model checkpoints are saved
    parser.add_argument('--checkpoints', type=str, default='./checkpoints/', help='location of model checkpoints')

    # length of the input window
    parser.add_argument('--seq_len', type=int, default=96, help='input sequence length')
    # length of the known prefix (start token) fed to the decoder
    parser.add_argument('--label_len', type=int, default=48, help='start token length')
    # length of the sequence to predict
    parser.add_argument('--pred_len', type=int, default=96, help='prediction sequence length')

    # DLinear: whether to build a separate linear layer for each variable (channel)
    parser.add_argument('--individual', action='store_true', default=False, help='DLinear: a linear layer for each variate(channel) individually')
    # embedding strategy
    parser.add_argument('--embed_type', type=int, default=0, help='0: default 1: value embedding + temporal embedding + positional embedding 2: value embedding + temporal embedding 3: value embedding + positional embedding 4: value embedding')
    # encoder input size; the default is the number of feature columns
    parser.add_argument('--enc_in', type=int, default=7, help='encoder input size')  # DLinear with --individual, use this hyperparameter as the number of channels
    # decoder input size; same default as the encoder
    parser.add_argument('--dec_in', type=int, default=7, help='decoder input size')
    # output size
    parser.add_argument('--c_out', type=int, default=7, help='output size')
    # model width
    parser.add_argument('--d_model', type=int, default=512, help='dimension of model')
    # number of heads in multi-head attention
    parser.add_argument('--n_heads', type=int, default=8, help='num of heads')
    # number of encoder layers
    parser.add_argument('--e_layers', type=int, default=2, help='num of encoder layers')
    # number of decoder layers
    parser.add_argument('--d_layers', type=int, default=1, help='num of decoder layers')
    # number of units in the fully connected (feed-forward) layer
    parser.add_argument('--d_ff', type=int, default=2048, help='dimension of fcn')
    # window size of the moving average
    parser.add_argument('--moving_avg', type=int, default=25, help='window size of moving average')
    # attention sampling factor
    parser.add_argument('--factor', type=int, default=1, help='attn factor')
    # whether to use distilling (sequence-length reduction) in the encoder; passing the flag turns it off
    parser.add_argument('--distil', action='store_false',
                        help='whether to use distilling in encoder, using this argument means not using distilling',
                        default=True)
    # dropout rate
    parser.add_argument('--dropout', type=float, default=0.05, help='dropout')
    # time-feature encoding method
    parser.add_argument('--embed', type=str, default='timeF', help='time features encoding, options:[timeF, fixed, learned]')
    # activation function
    parser.add_argument('--activation', type=str, default='gelu', help='activation')
    # whether to output attention
    parser.add_argument('--output_attention', action='store_true', help='whether to output attention in ecoder')
    # whether to predict unseen future data (store_false: True by default, passing the flag turns it off)
    parser.add_argument('--do_predict', action='store_false', help='whether to predict unseen future data')

    # number of DataLoader workers
    parser.add_argument('--num_workers', type=int, default=0, help='data loader num workers')
    # number of times the experiment is repeated
    parser.add_argument('--itr', type=int, default=1, help='experiments times')
    # number of training epochs
    parser.add_argument('--train_epochs', type=int, default=100, help='train epochs')
    # batch size
    parser.add_argument('--batch_size', type=int, default=32, help='batch size of train input data')
    # early-stopping patience: epochs without validation improvement before training stops
    parser.add_argument('--patience', type=int, default=3, help='early stopping patience')
    # learning rate
    parser.add_argument('--learning_rate', type=float, default=0.0001, help='optimizer learning rate')
    # experiment description
    parser.add_argument('--des', type=str, default='test', help='exp description')
    # loss function
    parser.add_argument('--loss', type=str, default='mse', help='loss function')
    # learning-rate decay scheme
    parser.add_argument('--lradj', type=str, default='type1', help='adjust learning rate')
    # whether to use automatic mixed precision training
    parser.add_argument('--use_amp', action='store_true', help='use automatic mixed precision training', default=False)

    # whether to train on the GPU (with type=bool, any non-empty string, including 'False', parses as True)
    parser.add_argument('--use_gpu', type=bool, default=True, help='use gpu')
    # GPU index
    parser.add_argument('--gpu', type=int, default=0, help='gpu')
    # whether to train on multiple GPUs
    parser.add_argument('--use_multi_gpu', action='store_true', help='use multiple gpus', default=False)
    # device IDs used for multi-GPU training
    parser.add_argument('--devices', type=str, default='0,1,2,3', help='device ids of multile gpus')
    parser.add_argument('--test_flop', action='store_true', default=False, help='See utils/tools for usage')

    # parse the arguments
    args = parser.parse_args()
    # use the GPU only if one is available
    args.use_gpu = True if torch.cuda.is_available() and args.use_gpu else False
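
Three of the arguments above behave in ways that are easy to misread: `--distil` uses `store_false`, `--individual` uses `store_true`, and `--use_gpu` uses `type=bool`. The sketch below rebuilds just those three in a standalone parser (it is not the project's full parser) to show what each one parses to.

```python
import argparse

parser = argparse.ArgumentParser()
parser.add_argument("--distil", action="store_false", default=True)
parser.add_argument("--individual", action="store_true", default=False)
parser.add_argument("--use_gpu", type=bool, default=True)

# No flags passed: every argument keeps its default.
defaults = parser.parse_args([])
# Flags passed: store_false flips to False, store_true flips to True,
# and type=bool calls bool("False"), which is True for any non-empty string.
flagged = parser.parse_args(["--distil", "--individual", "--use_gpu", "False"])

print(defaults.distil, defaults.individual, defaults.use_gpu)  # True False True
print(flagged.distil, flagged.individual, flagged.use_gpu)     # False True True
```

So `--use_gpu False` does not disable the GPU on its own; in this script the later `torch.cuda.is_available()` check is what makes a CPU-only machine fall back to the CPU.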

We set a breakpoint on the `exp.train(setting)` line and step into the main training function in `exp_main.py`.

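Data processing module

- In `_get_data`, follow the data-processing function into `data_provider.py`. There you can see how each of the standard datasets is handled:

    data_dict = {
        'ETTh1': Dataset_ETT_hour,
        'ETTh2': Dataset_ETT_hour,
        'ETTm1': Dataset_ETT_minute,
        'ETTm2': Dataset_ETT_minute,
        'Custom': Dataset_Custom,
    }

- Because our dataset is ETTh1, it is handled by `Dataset_ETT_hour`. Open `data_loader.py` and find the `Dataset_ETT_hour` class. `__init__` mainly stores the arguments, so I won't go into it; the focus here is `__read_data__`.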

    def __read_data__(self):
        # scaler instance used to standardize the data
        self.scaler = StandardScaler()
        # read the data
        df_raw = pd.read_csv(os.path.join(self.root_path,
                                          self.data_path))
        # start (border1s) and end (border2s) row indices of the train/val/test splits
        border1s = [0, 12 * 30 * 24 - self.seq_len, 12 * 30 * 24 + 4 * 30 * 24 - self.seq_len]
        border2s = [12 * 30 * 24, 12 * 30 * 24 + 4 * 30 * 24, 12 * 30 * 24 + 8 * 30 * 24]
        border1 = border1s[self.set_type]
        border2 = border2s[self.set_type]

        # M (multivariate predicts multivariate) or MS (multivariate predicts univariate)
        if self.features == 'M' or self.features == 'MS':
            # take every column except the date column
            cols_data = df_raw.columns[1:]
            df_data = df_raw[cols_data]
        # S (univariate predicts univariate)
        elif self.features == 'S':
            # take only the target column
            df_data = df_raw[[self.target]]

        # standardize the data; the scaler is fitted on the training split only
        if self.scale:
            train_data = df_data[border1s[0]:border2s[0]]
            self.scaler.fit(train_data.values)
            data = self.scaler.transform(df_data.values)
        else:
            data = df_data.values

        # take the date column
        df_stamp = df_raw[['date']][border1:border2]
        # convert it to datetime with pandas
        df_stamp['date'] = pd.to_datetime(df_stamp.date)
        # build the time features
        if self.timeenc == 0:
            df_stamp['month'] = df_stamp.date.apply(lambda row: row.month)
            df_stamp['day'] = df_stamp.date.apply(lambda row: row.day)
            df_stamp['weekday'] = df_stamp.date.apply(lambda row: row.weekday())
            df_stamp['hour'] = df_stamp.date.apply(lambda row: row.hour)
            # columns= replaces the positional axis argument, which newer pandas versions reject
            data_stamp = df_stamp.drop(columns=['date']).values
        elif self.timeenc == 1:
            # time-feature construction function
            data_stamp = time_features(pd.to_datetime(df_stamp['date'].values), freq=self.freq)
            # transpose
            data_stamp = data_stamp.transpose(1, 0)

        # slice out the rows of the current split
        self.data_x = data[border1:border2]
        self.data_y = data[border1:border2]
        self.data_stamp = data_stamp
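
The border arithmetic is easier to follow with concrete numbers. The sketch below repeats it with the default `seq_len=96` and `pred_len=96`. The window-count formula in `num_windows` is my assumption about the dataset's `__len__`, which is not shown above; it does reproduce the `train 8449`, `val 2785`, `test 2785` lines printed in the training log later in this article. `split_borders` and `num_windows` are hypothetical helper names.

```python
SEQ_LEN, PRED_LEN = 96, 96   # defaults of --seq_len and --pred_len
HOURS_PER_MONTH = 30 * 24    # the code treats every month as 30 days of hourly rows


def split_borders(seq_len):
    """Return {split: (border1, border2)} with the same arithmetic as __read_data__."""
    train_end = 12 * HOURS_PER_MONTH
    val_end = train_end + 4 * HOURS_PER_MONTH
    test_end = train_end + 8 * HOURS_PER_MONTH
    return {
        "train": (0, train_end),
        # val and test start seq_len rows early so their first window has full history
        "val": (train_end - seq_len, val_end),
        "test": (val_end - seq_len, test_end),
    }


def num_windows(border1, border2, seq_len, pred_len):
    """Assumed __len__: how many (input, target) windows fit in the slice."""
    return (border2 - border1) - seq_len - pred_len + 1


borders = split_borders(SEQ_LEN)
sizes = {name: num_windows(b1, b2, SEQ_LEN, PRED_LEN) for name, (b1, b2) in borders.items()}

print(borders)  # {'train': (0, 8640), 'val': (8544, 11520), 'test': (11424, 14400)}
print(sizes)    # {'train': 8449, 'val': 2785, 'test': 2785}
```

In other words, the first 12 "months" are used for training, the next 4 for validation, and the 4 after that for testing.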

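- Note the `time_features` function, which extracts date features. For example, 't': ['month', 'day', 'weekday', 'hour', 'minute'] means extracting the month, day, weekday, hour, and minute. You can open `timefeatures.py` to look at it, and you can also add more date encodings there later.
- Likewise, here is `__getitem__` explained: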

    def __getitem__(self, index):
        # start of the input window (the sample index)
        s_begin = index
        # end of the input window
        s_end = s_begin + self.seq_len
        # target window: labeled part (label_len) + unlabeled part to predict (pred_len)
        r_begin = s_end - self.label_len
        r_end = r_begin + self.label_len + self.pred_len

        # input sequence and target sequence
        seq_x = self.data_x[s_begin:s_end]
        seq_y = self.data_y[r_begin:r_end]
        # time features of the input window
        seq_x_mark = self.data_stamp[s_begin:s_end]
        # time features of the target window (labeled + unlabeled part)
        seq_y_mark = self.data_stamp[r_begin:r_end]

        return seq_x, seq_y, seq_x_mark, seq_y_mark
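
To see how the two windows overlap, here is the same index arithmetic as a standalone function with the default lengths. `window_indices` is a hypothetical name used only for this illustration.

```python
def window_indices(index, seq_len=96, label_len=48, pred_len=96):
    """Return the (start, end) row ranges of the input and target windows."""
    s_begin = index
    s_end = s_begin + seq_len
    r_begin = s_end - label_len
    r_end = r_begin + label_len + pred_len
    return (s_begin, s_end), (r_begin, r_end)


x_range, y_range = window_indices(0)
overlap = x_range[1] - y_range[0]

print(x_range, y_range)  # (0, 96) (48, 192)
print(overlap)           # 48
```

The target window reuses the last `label_len` rows of the input and then extends `pred_len` rows into the future, so `seq_y` has `label_len + pred_len` rows in total.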

Network architecture

- Back to the `train` function in `exp_main.py`.

    def train(self, setting):
        train_data, train_loader = self._get_data(flag='train')
        vali_data, vali_loader = self._get_data(flag='val')
        test_data, test_loader = self._get_data(flag='test')

        path = os.path.join(self.args.checkpoints, setting)
        if not os.path.exists(path):
            os.makedirs(path)

        # record the start time
        time_now = time.time()
        # number of training steps (batches) per epoch
        train_steps = len(train_loader)
        # early-stopping strategy
        early_stopping = EarlyStopping(patience=self.args.patience, verbose=True)
        # optimizer
        model_optim = self._select_optimizer()
        # loss function (MSE)
        criterion = self._select_criterion()

        # gradient scaler for automatic mixed precision (AMP) training
        if self.args.use_amp:
            scaler = torch.cuda.amp.GradScaler()

        # loop over the training epochs
        for epoch in range(self.args.train_epochs):
            iter_count = 0
            train_loss = []

            self.model.train()
            epoch_time = time.time()
            for i, (batch_x, batch_y, batch_x_mark, batch_y_mark) in enumerate(train_loader):
                iter_count += 1
                # reset the gradients
                model_optim.zero_grad()
                # move the training batch to the device
                batch_x = batch_x.float().to(self.device)
                batch_y = batch_y.float().to(self.device)
                batch_x_mark = batch_x_mark.float().to(self.device)
                batch_y_mark = batch_y_mark.float().to(self.device)

                # decoder input: the first label_len steps of batch_y followed by pred_len zeros
                dec_inp = torch.zeros_like(batch_y[:, -self.args.pred_len:, :]).float()
                dec_inp = torch.cat([batch_y[:, :self.args.label_len, :], dec_inp], dim=1).float().to(self.device)

                # encoder - decoder
                if self.args.use_amp:
                    with torch.cuda.amp.autocast():
                        if 'Linear' in self.args.model:
                            outputs = self.model(batch_x)
                        else:
                            if self.args.output_attention:
                                outputs = self.model(batch_x, batch_x_mark, dec_inp, batch_y_mark)[0]
                            else:
                                outputs = self.model(batch_x, batch_x_mark, dec_inp, batch_y_mark)

                        f_dim = -1 if self.args.features == 'MS' else 0
                        outputs = outputs[:, -self.args.pred_len:, f_dim:]
                        batch_y = batch_y[:, -self.args.pred_len:, f_dim:].to(self.device)
                        loss = criterion(outputs, batch_y)
                        train_loss.append(loss.item())
                else:
                    # Linear-family models take only batch_x
                    if 'Linear' in self.args.model:
                        # get the output
                        outputs = self.model(batch_x)
                    else:
                        if self.args.output_attention:
                            outputs = self.model(batch_x, batch_x_mark, dec_inp, batch_y_mark)[0]
                        else:
                            outputs = self.model(batch_x, batch_x_mark, dec_inp, batch_y_mark, batch_y)
                    # print(outputs.shape,batch_y.shape)
                    # MS: keep only the last column (the target); otherwise keep all columns
                    f_dim = -1 if self.args.features == 'MS' else 0
                    outputs = outputs[:, -self.args.pred_len:, f_dim:]
                    batch_y = batch_y[:, -self.args.pred_len:, f_dim:].to(self.device)
                    # compute the loss
                    loss = criterion(outputs, batch_y)
                    # append the loss to the train_loss list
                    train_loss.append(loss.item())

                # log the training progress every 500 iterations
                if (i + 1) % 500 == 0:
                    print("\titers: {0}, epoch: {1} | loss: {2:.7f}".format(i + 1, epoch + 1, loss.item()))
                    speed = (time.time() - time_now) / iter_count
                    left_time = speed * ((self.args.train_epochs - epoch) * train_steps - i)
                    print('\tspeed: {:.4f}s/iter; left time: {:.4f}s'.format(speed, left_time))
                    iter_count = 0
                    time_now = time.time()

                if self.args.use_amp:
                    scaler.scale(loss).backward()
                    scaler.step(model_optim)
                    scaler.update()
                else:
                    # backpropagation
                    loss.backward()
                    # update the parameters
                    model_optim.step()

            print("Epoch: {} cost time: {}".format(epoch + 1, time.time() - epoch_time))
            train_loss = np.average(train_loss)
            vali_loss = self.vali(vali_data, vali_loader, criterion)
            test_loss = self.vali(test_data, test_loader, criterion)

            print("Epoch: {0}, Steps: {1} | Train Loss: {2:.7f} Vali Loss: {3:.7f} Test Loss: {4:.7f}".format(
                epoch + 1, train_steps, train_loss, vali_loss, test_loss))
            early_stopping(vali_loss, self.model, path)
            if early_stopping.early_stop:
                print("Early stopping")
                break

            # update the learning rate
            adjust_learning_rate(model_optim, epoch + 1, self.args)

        # load the best checkpoint saved during training
        best_model_path = path + '/' + 'checkpoint.pth'
        self.model.load_state_dict(torch.load(best_model_path))
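
The body of `adjust_learning_rate` is not shown here, but the training log later in this article prints `Updating learning rate to 0.0001`, then `5e-05`, then `2.5e-05`, which is consistent with halving the rate every epoch under the default `--lradj type1`. The sketch below encodes that inferred rule; `type1_lr` is a hypothetical name, not a function from the project.

```python
import math


def type1_lr(base_lr, epoch):
    """Learning rate set after `epoch` (1-based), assuming it halves every epoch."""
    return base_lr * 0.5 ** (epoch - 1)


# Values set after epochs 1 to 4 with the default --learning_rate of 0.0001.
schedule = [type1_lr(1e-4, epoch) for epoch in (1, 2, 3, 4)]
for epoch, lr in enumerate(schedule, start=1):
    print(epoch, lr)

# The log's value after epoch 14 is 1.220703125e-08.
print(math.isclose(type1_lr(1e-4, 14), 1.220703125e-08))
```

By epoch 10 the rate is already below 2e-07, which helps explain why the validation loss barely moves in the later epochs of the log.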

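- Note the model call in the training loop. For the Linear-family models it is `outputs = self.model(batch_x)`; the other models are called as `outputs = self.model(batch_x, batch_x_mark, dec_inp, batch_y_mark)`. The model contains DLinear's core architecture, which is the most important part. Open the `models` folder in the project folder, find `DLinear.py`, and locate the `Model` class. Go straight to `forward`: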

    def forward(self, x):
        # x: [Batch, Input length, Channel]
        # decompose into seasonal and trend components
        seasonal_init, trend_init = self.decompsition(x)
        # swap dimensions 1 and 2 -> [Batch, Channel, Input length]
        seasonal_init, trend_init = seasonal_init.permute(0,2,1), trend_init.permute(0,2,1)
        if self.individual:
            seasonal_output = torch.zeros([seasonal_init.size(0),seasonal_init.size(1),self.pred_len],dtype=seasonal_init.dtype).to(seasonal_init.device)
            trend_output = torch.zeros([trend_init.size(0),trend_init.size(1),self.pred_len],dtype=trend_init.dtype).to(trend_init.device)
            for i in range(self.channels):
                # per-channel fully connected layer for the seasonal component
                seasonal_output[:,i,:] = self.Linear_Seasonal[i](seasonal_init[:,i,:])
                # per-channel fully connected layer for the trend component
                trend_output[:,i,:] = self.Linear_Trend[i](trend_init[:,i,:])
        # otherwise all channels share the same weights
        else:
            seasonal_output = self.Linear_Seasonal(seasonal_init)
            trend_output = self.Linear_Trend(trend_init)
        # add the seasonal and trend outputs
        x = seasonal_output + trend_output
        # swap the dimensions back
        return x.permute(0,2,1) # to [Batch, Output length, Channel]

- For the seasonal-trend decomposition, jump to the `series_decomp` class:

    class series_decomp(nn.Module):
        """
        Series decomposition block
        """
        def __init__(self, kernel_size):
            super(series_decomp, self).__init__()
            self.moving_avg = moving_avg(kernel_size, stride=1)

        def forward(self, x):
            # moving average (the trend component)
            moving_mean = self.moving_avg(x)
            # residual (the seasonal component)
            res = x - moving_mean
            return res, moving_mean
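
The decomposition can be tried on a plain Python list without PyTorch. This is a minimal sketch, not the project's `moving_avg` module: the edge handling (repeating the first and last value so the output keeps the input length) is an assumption, since that class is not shown here, and `moving_average` and `decompose` are hypothetical names.

```python
def moving_average(values, kernel_size):
    """Centered moving average; edges are padded by repeating the end values (assumed)."""
    if kernel_size % 2 == 0:
        raise ValueError("this sketch only handles odd kernel sizes")
    pad = (kernel_size - 1) // 2
    padded = [values[0]] * pad + list(values) + [values[-1]] * pad
    return [sum(padded[i:i + kernel_size]) / kernel_size for i in range(len(values))]


def decompose(values, kernel_size=25):
    """Split a series into (seasonal, trend), mirroring series_decomp.forward."""
    trend = moving_average(values, kernel_size)
    seasonal = [v - t for v, t in zip(values, trend)]
    return seasonal, trend


# A rising line with an alternating +1/-1 wiggle on top.
series = [float(t) + (1.0 if t % 2 == 0 else -1.0) for t in range(10)]
seasonal, trend = decompose(series, kernel_size=3)

print(trend)
print(seasonal)
```

Whatever the kernel size, the two parts always add back up to the original series, because the seasonal part is defined as the residual.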

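- The seasonal and trend components each have their own fully connected layer (`Linear_Seasonal` and `Linear_Trend`), both built with `nn.Linear`. When `individual` is False, each of these two layers is shared by all channels.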
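
A quick parameter count shows how small the model is. This sketch assumes that each layer is a linear map from `seq_len` to `pred_len` with a bias term and that `--individual` creates one seasonal and one trend layer per channel; the constructor is not shown in this article, so treat both as assumptions. `linear_params` and `dlinear_params` are hypothetical names.

```python
def linear_params(in_features, out_features):
    """Weights plus biases of one fully connected layer."""
    return in_features * out_features + out_features


def dlinear_params(seq_len, pred_len, channels, individual):
    """Total parameters: one seasonal and one trend layer, per channel if individual."""
    per_layer = linear_params(seq_len, pred_len)
    copies = channels if individual else 1
    return 2 * copies * per_layer


shared = dlinear_params(96, 96, 7, individual=False)
separate = dlinear_params(96, 96, 7, individual=True)

print(shared)    # 18624
print(separate)  # 130368
```

Under these assumptions the default configuration has fewer than twenty thousand trainable parameters.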

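Results

- Training the DLinear model is very fast, because it drops many of the complex computation blocks found in Transformers. One run on the ETTh1 data takes only about a minute, and that is on my laptop's CPU.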

Args in experiment:

Namespace(is_training=1, model_id='test', model='DLinear', data='ETTh1', root_path='./data/', data_path='ETTh1.csv', features='M', target='OT', freq='h', checkpoints='./checkpoints/', seq_len=96, label_len=48, pred_len=96, individual=False, embed_type=0, enc_in=7, dec_in=7, c_out=7, d_model=512, n_heads=8, e_layers=2, d_layers=1, d_ff=2048, moving_avg=25, factor=1, distil=True, dropout=0.05, embed='timeF', activation='gelu', output_attention=False, do_predict=True, num_workers=0, itr=1, train_epochs=100, batch_size=32, patience=3, learning_rate=0.0001, des='test', loss='mse', lradj='type1', use_amp=False, use_gpu=False, gpu=0, use_multi_gpu=False, devices='0,1,2,3', test_flop=False)

Use CPU

>>>>>>>start training : DLinear_rate 0.0001>>>>>>>>>>>>>>>>>>>>>>>>>>

train 8449

val 2785

test 2785

Epoch:1 cost time:1.5147898197174072

Epoch:1, Steps:264| Train Loss:0.6620889 Vali Loss:0.8593202 Test Loss:0.5310578

Validation loss decreased (inf -->0.859320). Saving model ...

Updating learning rate to 0.0001

Epoch:2 cost time:1.49473237991333

Epoch:2, Steps:264| Train Loss:0.4363616 Vali Loss:0.7708484 Test Loss:0.4540242

Validation loss decreased (0.859320-->0.770848). Saving model ...

Updating learning rate to 5e-05

Epoch:3 cost time:1.2200875282287598

Epoch:3, Steps:264| Train Loss:0.4081523 Vali Loss:0.7452631 Test Loss:0.4380584

Validation loss decreased (0.770848-->0.745263). Saving model ...

Updating learning rate to 2.5e-05

Epoch:4 cost time:1.2776997089385986

Epoch:4, Steps:264| Train Loss:0.3990288 Vali Loss:0.7355505 Test Loss:0.4318272

Validation loss decreased (0.745263-->0.735550). Saving model ...

Updating learning rate to 1.25e-05

Epoch:5 cost time:1.2430932521820068

Epoch:5, Steps:264| Train Loss:0.3950030 Vali Loss:0.7301292 Test Loss:0.4291500

Validation loss decreased (0.735550-->0.730129). Saving model ...

Updating learning rate to 6.25e-06

Epoch:6 cost time:1.260094165802002

Epoch:6, Steps:264| Train Loss:0.3931120 Vali Loss:0.7285364 Test Loss:0.4276760

Validation loss decreased (0.730129-->0.728536). Saving model ...

Updating learning rate to 3.125e-06

Epoch:7 cost time:1.2400920391082764

Epoch:7, Steps:264| Train Loss:0.3921362 Vali Loss:0.7272122 Test Loss:0.4269841

Validation loss decreased (0.728536-->0.727212). Saving model ...

Updating learning rate to 1.5625e-06

Epoch:8 cost time:1.2691984176635742

Epoch:8, Steps:264| Train Loss:0.3916254 Vali Loss:0.7265375 Test Loss:0.4266387

Validation loss decreased (0.727212-->0.726538). Saving model ...

Updating learning rate to 7.8125e-07

Epoch:9 cost time:1.31856369972229

Epoch:9, Steps:264| Train Loss:0.3913689 Vali Loss:0.7263398 Test Loss:0.4264523

Validation loss decreased (0.726538-->0.726340). Saving model ...

Updating learning rate to 3.90625e-07

Epoch:10 cost time:1.3412230014801025

Epoch:10, Steps:264| Train Loss:0.3912611 Vali Loss:0.7261187 Test Loss:0.4263628

Validation loss decreased (0.726340-->0.726119). Saving model ...

Updating learning rate to 1.953125e-07

Epoch:11 cost time:1.246096134185791

Epoch:11, Steps:264| Train Loss:0.3911887 Vali Loss:0.7262567 Test Loss:0.4263154

EarlyStopping counter:1 out of 3

Updating learning rate to 9.765625e-08

Epoch:12 cost time:1.2540950775146484

Epoch:12, Steps:264| Train Loss:0.3911324 Vali Loss:0.7254719 Test Loss:0.4262920

Validation loss decreased (0.726119-->0.725472). Saving model ...

Updating learning rate to 4.8828125e-08

Epoch:13 cost time:1.284095287322998

Epoch:13, Steps:264| Train Loss:0.3911295 Vali Loss:0.7261668 Test Loss:0.4262800

EarlyStopping counter:1 out of 3

Updating learning rate to 2.44140625e-08

Epoch:14 cost time:1.3260986804962158

Epoch:14, Steps:264| Train Loss:0.3911082 Vali Loss:0.7258070 Test Loss:0.4262740

EarlyStopping counter:2 out of 3

Updating learning rate to 1.220703125e-08

Epoch:15 cost time:1.2486040592193604

Epoch:15, Steps:264| Train Loss:0.3911197 Vali Loss:0.7261318 Test Loss:0.4262710

EarlyStopping counter:3 out of 3

Early stopping

>>>>>>>testing : DLinear_rate 0.0001<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<

test 2785

mse:0.42629215121269226, mae:0.4337235391139984

>>>>>>>predicting : DLinear_rate 0.0001<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<

pred 1
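
The early-stopping behavior in the log above can be replayed from the printed validation losses. This is a simplified stand-in for the project's `EarlyStopping` class, which is not shown in this article; `early_stop_epoch` is a hypothetical name, and the loss values are copied from the log.

```python
def early_stop_epoch(vali_losses, patience=3):
    """Return (epoch at which training stops, best loss), or (None, best) if it never stops."""
    best = float("inf")
    counter = 0
    for epoch, loss in enumerate(vali_losses, start=1):
        if loss < best:
            # improvement: remember it and reset the counter
            best, counter = loss, 0
        else:
            counter += 1
            if counter >= patience:
                return epoch, best
    return None, best


# "Vali Loss" values for epochs 1 to 15, copied from the log above.
VALI_LOSSES = [
    0.8593202, 0.7708484, 0.7452631, 0.7355505, 0.7301292,
    0.7285364, 0.7272122, 0.7265375, 0.7263398, 0.7261187,
    0.7262567, 0.7254719, 0.7261668, 0.7258070, 0.7261318,
]

stop_epoch, best_loss = early_stop_epoch(VALI_LOSSES)
print(stop_epoch, best_loss)  # 15 0.7254719
```

The counter reaches 1 at epoch 11, resets when epoch 12 improves, and then counts 1, 2, 3 over epochs 13 to 15, which matches the `EarlyStopping counter` lines in the log.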

- After the run finishes, results showing how the model performs on the test set are generated in the `test_results` folder:

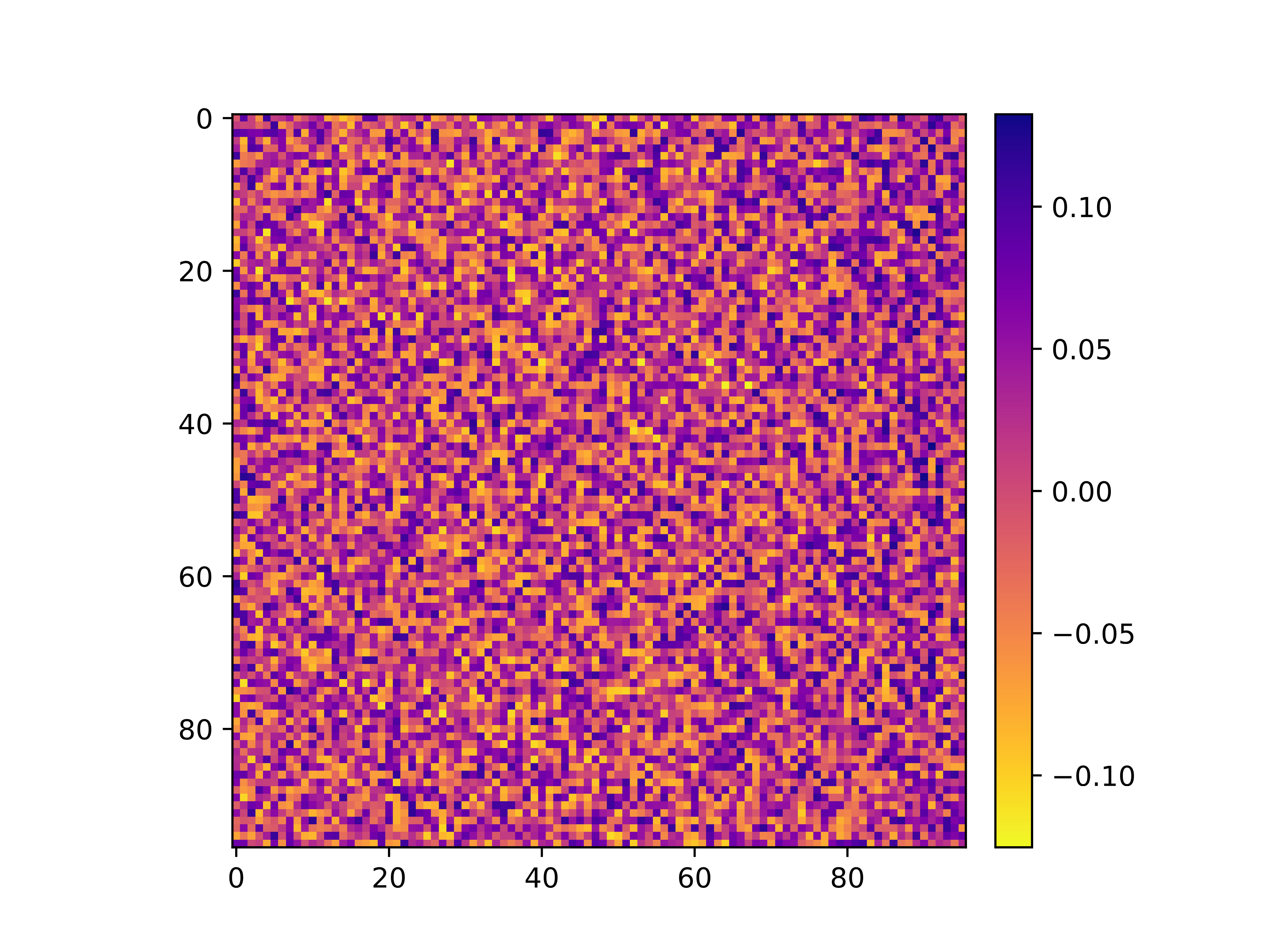

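- To generate the seasonal and trend plots, open `weight_plot.py` in the project folder, change `save_root = 'weights_plot/%s'%root.split('/')[1]` to `save_root = './weights_plot/'`, and run it. The seasonal and trend heatmaps then appear in the `weights_plot` folder.

Seasonal component

Overall trend

If I have time later, I will go on to write about how to use DLinear in your own project.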

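This article is reposted from: https://blog.csdn.net/qq_20144897/article/details/128050482

Copyright belongs to the original author, 羽星_s. If there is any infringement, please contact us for removal.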
