论文标题:Learning Important Features Through Propagating Activation Differences

论文作者:****Avanti Shrikumar, Peyton Greenside, Anshul Kundaje

论文发表时间及来源:Oct 2019,ICML

论文链接:http://proceedings.mlr.press/v70/shrikumar17a/shrikumar17a.pdf

DeepLIFT方法

1. DeepLIFT理论

DeepLIFT解释了目标输入、目标输出与“参考(reference)”输入、“参考”输出间的差异。“参考”输入是人为选择的中性的输入。

用表示单层神经元或多层神经元的集合,为对应的“参考”,有。用表示目标输入经过的输出(当为全部神经元的集合时,为目标输出),表示“参考”输出,有 。如(1)式,为各个输入贡献分数的加和。

2. 乘数(Multiplier)与链式法则

乘数与偏导数类似:偏导数是指产生无穷小变化时,的变化率;而乘数是指产生一定量的变化后,的变化率。

这里可理解为中间层的。给定每个神经元与其直接后继的乘数,即可计算任意神经元与目标神经元的乘数。

3. 定义“参考”

MNIST任务中,使用全黑图片作为“参考”。

CIFAR10任务中,使用原始图像的模糊版本能突出目标输入的轮廓,而全黑图片作为参考时产生了一些难以解释的像素。

DNA序列分类任务中,以ATGC的期望频率作为“参考”。即目标输入是四维的one-hot编码,“参考”输入是相同维度的ATGC期望频率。这里还有一种方法没有看懂,见Appendix J。

4. 区分正、负贡献

当应用RevealCancel规则时,区分正、负贡献非常重要。

5. 分配贡献分数的规则

线性规则

用于Dense层,卷积层,不可用于非线性层。定义线性函数,则。

公式有点复杂,举例说明。“参考”输入![\left [ 0, 0 \right ]\cdot \left [ 3, 4 \right ]^{T}=0](https://latex.codecogs.com/gif.latex?%5Cleft%20%5B%200%2C%200%20%5Cright%20%5D%5Ccdot%20%5Cleft%20%5B%203%2C%204%20%5Cright%20%5D%5E%7BT%7D%3D0),目标输入![\left [ 1, 2 \right ]\cdot \left [ 3, 4 \right ]^{T}=11](https://latex.codecogs.com/gif.latex?%5Cleft%20%5B%201%2C%202%20%5Cright%20%5D%5Ccdot%20%5Cleft%20%5B%203%2C%204%20%5Cright%20%5D%5E%7BT%7D%3D11),则由式(6)得,由式(8)得![\left [ 1, 2 \right ]](https://latex.codecogs.com/gif.latex?%5Cleft%20%5B%201%2C%202%20%5Cright%20%5D)两个特征贡献分数分别为3和8,由式(12)得两个神经元的乘数分别为3和4。乘数的作用是,如果神经网络有两层线性函数,![\left [ 3, 4 \right ]^{T}](https://latex.codecogs.com/gif.latex?%5Cleft%20%5B%203%2C%204%20%5Cright%20%5D%5E%7BT%7D)为第一层神经元,![\left [ 5 \right ]](https://latex.codecogs.com/gif.latex?%5Cleft%20%5B%205%20%5Cright%20%5D)为第二层神经元,则第二层的乘数为5,由式(3)得整个神经网络第一个特征的乘数为15,第二个特征的乘数为20,每个位置的输入乘以乘数就是其贡献分数。

Rescale规则

用于非线性层,如ReLU,tanh或sigmoid等。由于非线性函数只有一个输入,则,,和分别为:

当时,可用梯度代替。Rescale规则解决了梯度饱和问题和值域问题,例子见论文。

RevealCancel规则

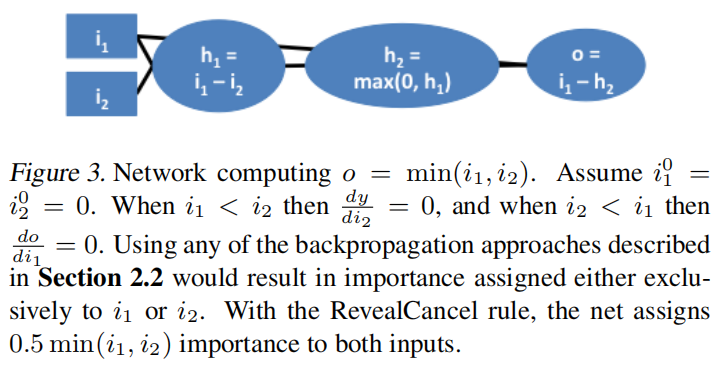

这里说明为何 和 需分开计算。下图是一个计算最小值的操作,假定,目标输入,,则,。根据线性规则,可知,。根据Rescale规则,,,。则总贡献分数为, 总贡献分数为。

同样地,梯度,输入*梯度方法也会赋予其中一个特征0的贡献分数,这忽略了特征间的相互依赖性。 和 分开计算的公式为:

用这种方法计算出和的贡献分数均为0.5,其过程简单来说是把每一层神经元输出做+、-的区分,两条路径分别计算乘数与贡献分数后再加和。计算过程有点复杂,详见Appendix C 3.4。

版权归原作者 黄金贵 所有, 如有侵权,请联系我们删除。