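Continuing from the previous section on data processing, this section walks through the training code and the network model in detail. Some of the code has been modified so that it runs under Python 3. First, here is the complete annotated train.py: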

import numpy
from data_iterator import DataIterator
import tensorflow as tf
from model import *
import time
import random
import sys
from utils import *

EMBEDDING_DIM = 18
HIDDEN_SIZE = 18 * 2
ATTENTION_SIZE = 18 * 2
best_auc = 0.0

def prepare_data(input, target, maxlen = None, return_neg = False):
    # input: N training samples, each with the fields
    #   user id[0], item id[1], item category[2], previously clicked items (n)[3],
    #   categories of previously clicked items (n)[4], non-clicked items (n*5)[5],
    #   categories of non-clicked items (n*5)[6]
    # target: labels (positive or negative sample)
    lengths_x = [len(s[4]) for s in input]  # N, number of previously clicked items per sample
    seqs_mid = [inp[3] for inp in input]  # N * n, previously clicked items
    seqs_cat = [inp[4] for inp in input]  # N * n, categories of previously clicked items
    noclk_seqs_mid = [inp[5] for inp in input]  # N * n * 5, non-clicked (negative) items
    noclk_seqs_cat = [inp[6] for inp in input]  # N * n * 5, categories of non-clicked items

    if maxlen is not None:
        new_seqs_mid = []
        new_seqs_cat = []
        new_noclk_seqs_mid = []
        new_noclk_seqs_cat = []
        new_lengths_x = []
        for l_x, inp in zip(lengths_x, input):  # zip pairs elements and stops at the shorter list
            if l_x > maxlen:
                # keep only the last maxlen behaviours
                new_seqs_mid.append(inp[3][l_x - maxlen:])
                new_seqs_cat.append(inp[4][l_x - maxlen:])
                new_noclk_seqs_mid.append(inp[5][l_x - maxlen:])
                new_noclk_seqs_cat.append(inp[6][l_x - maxlen:])
                new_lengths_x.append(maxlen)
            else:
                new_seqs_mid.append(inp[3])
                new_seqs_cat.append(inp[4])
                new_noclk_seqs_mid.append(inp[5])
                new_noclk_seqs_cat.append(inp[6])
                new_lengths_x.append(l_x)
        lengths_x = new_lengths_x
        seqs_mid = new_seqs_mid
        seqs_cat = new_seqs_cat
        noclk_seqs_mid = new_noclk_seqs_mid
        noclk_seqs_cat = new_noclk_seqs_cat

        if len(lengths_x) < 1:
            return None, None, None, None

    n_samples = len(seqs_mid)  # number of samples N
    maxlen_x = numpy.max(lengths_x)  # longest click history in this batch
    if maxlen_x <= 1:
        maxlen_x = 2
    neg_samples = len(noclk_seqs_mid[0][0])  # number of negatives per historical click

    mid_his = numpy.zeros((n_samples, maxlen_x)).astype('int64')  # N * maxlen_x, clicked item ids
    cat_his = numpy.zeros((n_samples, maxlen_x)).astype('int64')  # N * maxlen_x, clicked item categories
    noclk_mid_his = numpy.zeros((n_samples, maxlen_x, neg_samples)).astype('int64')  # N * maxlen_x * neg_samples(5), negatives of each click
    noclk_cat_his = numpy.zeros((n_samples, maxlen_x, neg_samples)).astype('int64')  # N * maxlen_x * neg_samples(5), categories of those negatives
    mid_mask = numpy.zeros((n_samples, maxlen_x)).astype('float32')  # N * maxlen_x, 1 at real clicks, 0 at padding

    for idx, [s_x, s_y, no_sx, no_sy] in enumerate(zip(seqs_mid, seqs_cat, noclk_seqs_mid, noclk_seqs_cat)):
        mid_mask[idx, :lengths_x[idx]] = 1.  # set the first lengths_x[idx] positions (the real clicks) of sample idx to 1
        mid_his[idx, :lengths_x[idx]] = s_x  # clicked item ids of sample idx
        cat_his[idx, :lengths_x[idx]] = s_y  # clicked item categories of sample idx
        noclk_mid_his[idx, :lengths_x[idx], :] = no_sx  # negative item ids of sample idx
        noclk_cat_his[idx, :lengths_x[idx], :] = no_sy  # negative item categories of sample idx

    uids = numpy.array([inp[0] for inp in input])  # N, user ids
    mids = numpy.array([inp[1] for inp in input])  # N, item ids
    cats = numpy.array([inp[2] for inp in input])  # N, item categories

    if return_neg:
        # uids: N, user ids
        # mids: N, item ids
        # cats: N, item categories
        # mid_his: N * maxlen_x, previously clicked item ids
        # cat_his: N * maxlen_x, previously clicked item categories
        # mid_mask: N * maxlen_x, marks the actual history length (1 = real click)
        # numpy.array(target): N * 2, label: positive [1, 0] or negative [0, 1]
        # numpy.array(lengths_x): N, actual history length of each sample
        # noclk_mid_his: N * maxlen_x * neg_samples(5), negatives for each historical click
        # noclk_cat_his: N * maxlen_x * neg_samples(5), categories of those negatives
        return uids, mids, cats, mid_his, cat_his, mid_mask, numpy.array(target), numpy.array(lengths_x), noclk_mid_his, noclk_cat_his
    else:
        return uids, mids, cats, mid_his, cat_his, mid_mask, numpy.array(target), numpy.array(lengths_x)

def eval(sess, test_data, model, model_path):
    loss_sum = 0.
    accuracy_sum = 0.
    aux_loss_sum = 0.
    nums = 0
    stored_arr = []
    for src, tgt in test_data:
        nums += 1
        uids, mids, cats, mid_his, cat_his, mid_mask, target, sl, noclk_mids, noclk_cats = prepare_data(src, tgt, return_neg=True)
        # uids: N, user ids
        # mids: N, item ids
        # cats: N, item categories
        # mid_his: N * maxlen_x, previously clicked item ids
        # cat_his: N * maxlen_x, previously clicked item categories
        # mid_mask: N * maxlen_x, marks the actual history length
        # target: N * 2, label: positive [1, 0] or negative [0, 1]
        # sl: N, actual history length of each sample
        # noclk_mids: N * maxlen_x * neg_samples(5), negatives for each historical click
        # noclk_cats: N * maxlen_x * neg_samples(5), categories of those negatives
        prob, loss, acc, aux_loss = model.calculate(sess, [uids, mids, cats, mid_his, cat_his, mid_mask, target, sl, noclk_mids, noclk_cats])
        loss_sum += loss
        aux_loss_sum += aux_loss  # fixed: the original used "=", keeping only the last batch
        accuracy_sum += acc
        prob_1 = prob[:, 0].tolist()  # predicted click probability
        target_1 = target[:, 0].tolist()  # 1.0 for a click, 0.0 otherwise
        for p, t in zip(prob_1, target_1):
            stored_arr.append([p, t])
    test_auc = calc_auc(stored_arr)
    accuracy_sum = accuracy_sum / nums
    loss_sum = loss_sum / nums
    aux_loss_sum = aux_loss_sum / nums  # fixed: the original computed this without assigning it
    global best_auc
    if best_auc < test_auc:
        best_auc = test_auc
        model.save(sess, model_path)
    return test_auc, loss_sum, accuracy_sum, aux_loss_sum

def train(
        train_file = "local_train_splitByUser",
        test_file = "local_test_splitByUser",
        # Every two lines of these files form a sample pair:
        #   line 1: label 0, user id, item id (not clicked, negative sample), item category, all previously clicked item ids, all previously clicked categories
        #   line 2: label 1, user id, item id (clicked, positive sample), item category, all previously clicked item ids, all previously clicked categories
        uid_voc = "uid_voc.pkl",
        mid_voc = "mid_voc.pkl",
        cat_voc = "cat_voc.pkl",
        batch_size = 128,
        maxlen = 100,
        test_iter = 100,
        save_iter = 100,
        model_type = 'DNN',
        seed = 2,
):
    model_path = "dnn_save_path/ckpt_noshuff" + model_type + str(seed)
    best_model_path = "dnn_best_model/ckpt_noshuff" + model_type + str(seed)
    gpu_options = tf.GPUOptions(allow_growth=True)
    with tf.Session(config=tf.ConfigProto(gpu_options=gpu_options)) as sess:
        train_data = DataIterator(train_file, uid_voc, mid_voc, cat_voc, batch_size, maxlen, shuffle_each_epoch=False)
        test_data = DataIterator(test_file, uid_voc, mid_voc, cat_voc, batch_size, maxlen)
        n_uid, n_mid, n_cat = train_data.get_n()  # numbers of users, items and categories
        if model_type == 'DNN':
            model = Model_DNN(n_uid, n_mid, n_cat, EMBEDDING_DIM, HIDDEN_SIZE, ATTENTION_SIZE)
        elif model_type == 'PNN':
            model = Model_PNN(n_uid, n_mid, n_cat, EMBEDDING_DIM, HIDDEN_SIZE, ATTENTION_SIZE)
        elif model_type == 'Wide':
            model = Model_WideDeep(n_uid, n_mid, n_cat, EMBEDDING_DIM, HIDDEN_SIZE, ATTENTION_SIZE)
        elif model_type == 'DIN':
            model = Model_DIN(n_uid, n_mid, n_cat, EMBEDDING_DIM, HIDDEN_SIZE, ATTENTION_SIZE)
        elif model_type == 'DIN-V2-gru-att-gru':
            model = Model_DIN_V2_Gru_att_Gru(n_uid, n_mid, n_cat, EMBEDDING_DIM, HIDDEN_SIZE, ATTENTION_SIZE)
        elif model_type == 'DIN-V2-gru-gru-att':
            model = Model_DIN_V2_Gru_Gru_att(n_uid, n_mid, n_cat, EMBEDDING_DIM, HIDDEN_SIZE, ATTENTION_SIZE)
        elif model_type == 'DIN-V2-gru-qa-attGru':
            model = Model_DIN_V2_Gru_QA_attGru(n_uid, n_mid, n_cat, EMBEDDING_DIM, HIDDEN_SIZE, ATTENTION_SIZE)
        elif model_type == 'DIN-V2-gru-vec-attGru':
            model = Model_DIN_V2_Gru_Vec_attGru(n_uid, n_mid, n_cat, EMBEDDING_DIM, HIDDEN_SIZE, ATTENTION_SIZE)
        elif model_type == 'DIEN':
            model = Model_DIN_V2_Gru_Vec_attGru_Neg(n_uid, n_mid, n_cat, EMBEDDING_DIM, HIDDEN_SIZE, ATTENTION_SIZE)
        else:
            print("Invalid model_type : %s" % model_type)  # fixed: the original passed model_type as a second print argument
            return
        # model = Model_DNN(n_uid, n_mid, n_cat, EMBEDDING_DIM, HIDDEN_SIZE, ATTENTION_SIZE)
        sess.run(tf.global_variables_initializer())
        sess.run(tf.local_variables_initializer())
        sys.stdout.flush()
        print('test_auc: %.4f ---- test_loss: %.4f ---- test_accuracy: %.4f ---- test_aux_loss: %.4f' % eval(sess, test_data, model, best_model_path))
        sys.stdout.flush()

        start_time = time.time()
        iter = 0
        lr = 0.001
        for itr in range(3):  # 3 epochs
            loss_sum = 0.0
            accuracy_sum = 0.
            aux_loss_sum = 0.
            for src, tgt in train_data:
                # src: user id, item id, item category, previously clicked items (n), their categories (n), non-clicked items (n*5), their categories (n*5)
                # tgt: labels (positive or negative sample)
                uids, mids, cats, mid_his, cat_his, mid_mask, target, sl, noclk_mids, noclk_cats = prepare_data(src, tgt, maxlen, return_neg=True)
                loss, acc, aux_loss = model.train(sess, [uids, mids, cats, mid_his, cat_his, mid_mask, target, sl, lr, noclk_mids, noclk_cats])
                loss_sum += loss
                accuracy_sum += acc
                aux_loss_sum += aux_loss
                iter += 1
                sys.stdout.flush()
                if (iter % test_iter) == 0:
                    print('iter: %d ----> train_loss: %.4f ---- train_accuracy: %.4f ---- train_aux_loss: %.4f' % \
                          (iter, loss_sum / test_iter, accuracy_sum / test_iter, aux_loss_sum / test_iter))
                    print('    test_auc: %.4f ----test_loss: %.4f ---- test_accuracy: %.4f ---- test_aux_loss: %.4f' % eval(sess, test_data, model, best_model_path))
                    loss_sum = 0.0
                    accuracy_sum = 0.0
                    aux_loss_sum = 0.0
                if (iter % save_iter) == 0:
                    print('save model iter: %d' % (iter))
                    model.save(sess, model_path + "--" + str(iter))
            lr *= 0.5  # halve the learning rate after every epoch

def test(
        train_file = "local_train_splitByUser",
        test_file = "local_test_splitByUser",
        uid_voc = "uid_voc.pkl",
        mid_voc = "mid_voc.pkl",
        cat_voc = "cat_voc.pkl",
        batch_size = 128,
        maxlen = 100,
        model_type = 'DNN',
        seed = 2
):
    model_path = "dnn_best_model/ckpt_noshuff" + model_type + str(seed)
    gpu_options = tf.GPUOptions(allow_growth=True)
    with tf.Session(config=tf.ConfigProto(gpu_options=gpu_options)) as sess:
        train_data = DataIterator(train_file, uid_voc, mid_voc, cat_voc, batch_size, maxlen)
        test_data = DataIterator(test_file, uid_voc, mid_voc, cat_voc, batch_size, maxlen)
        n_uid, n_mid, n_cat = train_data.get_n()
        if model_type == 'DNN':
            model = Model_DNN(n_uid, n_mid, n_cat, EMBEDDING_DIM, HIDDEN_SIZE, ATTENTION_SIZE)
        elif model_type == 'PNN':
            model = Model_PNN(n_uid, n_mid, n_cat, EMBEDDING_DIM, HIDDEN_SIZE, ATTENTION_SIZE)
        elif model_type == 'Wide':
            model = Model_WideDeep(n_uid, n_mid, n_cat, EMBEDDING_DIM, HIDDEN_SIZE, ATTENTION_SIZE)
        elif model_type == 'DIN':
            model = Model_DIN(n_uid, n_mid, n_cat, EMBEDDING_DIM, HIDDEN_SIZE, ATTENTION_SIZE)
        elif model_type == 'DIN-V2-gru-att-gru':
            model = Model_DIN_V2_Gru_att_Gru(n_uid, n_mid, n_cat, EMBEDDING_DIM, HIDDEN_SIZE, ATTENTION_SIZE)
        elif model_type == 'DIN-V2-gru-gru-att':
            model = Model_DIN_V2_Gru_Gru_att(n_uid, n_mid, n_cat, EMBEDDING_DIM, HIDDEN_SIZE, ATTENTION_SIZE)
        elif model_type == 'DIN-V2-gru-qa-attGru':
            model = Model_DIN_V2_Gru_QA_attGru(n_uid, n_mid, n_cat, EMBEDDING_DIM, HIDDEN_SIZE, ATTENTION_SIZE)
        elif model_type == 'DIN-V2-gru-vec-attGru':
            model = Model_DIN_V2_Gru_Vec_attGru(n_uid, n_mid, n_cat, EMBEDDING_DIM, HIDDEN_SIZE, ATTENTION_SIZE)
        elif model_type == 'DIEN':
            model = Model_DIN_V2_Gru_Vec_attGru_Neg(n_uid, n_mid, n_cat, EMBEDDING_DIM, HIDDEN_SIZE, ATTENTION_SIZE)
        else:
            print("Invalid model_type : %s" % model_type)
            return
        model.restore(sess, model_path)
        print('test_auc: %.4f ----test_loss: %.4f ---- test_accuracy: %.4f ---- test_aux_loss: %.4f' % eval(sess, test_data, model, model_path))

if __name__ == '__main__':
    # usage: python train.py train|test <model_type> [seed]
    if len(sys.argv) == 4:
        SEED = int(sys.argv[3])
    else:
        SEED = 3
    tf.set_random_seed(SEED)
    numpy.random.seed(SEED)
    random.seed(SEED)
    if sys.argv[1] == 'train':
        train(model_type=sys.argv[2], seed=SEED)
    elif sys.argv[1] == 'test':
        test(model_type=sys.argv[2], seed=SEED)
    else:
        print('do nothing...')
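eval() hands the AUC computation to calc_auc from utils.py, which this section does not show. To illustrate what such a function computes, here is a minimal sketch under that caveat: the name auc_from_pairs is hypothetical, and it is not claimed to be the repository's implementation. It takes the same [predicted click probability, label] pairs that eval() collects in stored_arr and returns the ROC AUC through the rank-sum (Mann-Whitney U) formulation. Tied scores get their average rank.

```python
def auc_from_pairs(pairs):
    """Rank-based ROC AUC for a list of [score, label] pairs.

    label is 1.0 for a clicked (positive) sample and 0.0 otherwise, which is
    how eval() fills stored_arr from prob[:, 0] and target[:, 0].
    Returns None when only one class is present (AUC is undefined).
    """
    pairs = sorted(pairs, key=lambda p: p[0])
    n = len(pairs)
    ranks = [0.0] * n
    i = 0
    while i < n:
        # find the run of equal scores starting at i
        j = i
        while j + 1 < n and pairs[j + 1][0] == pairs[i][0]:
            j += 1
        avg_rank = (i + j) / 2.0 + 1.0  # 1-based average rank of the tie group
        for k in range(i, j + 1):
            ranks[k] = avg_rank
        i = j + 1
    n_pos = sum(1 for _, label in pairs if label == 1.0)
    n_neg = n - n_pos
    if n_pos == 0 or n_neg == 0:
        return None
    rank_sum = sum(r for r, (_, label) in zip(ranks, pairs) if label == 1.0)
    # U statistic of the positives, normalised by the number of pos/neg pairs
    return (rank_sum - n_pos * (n_pos + 1) / 2.0) / (n_pos * n_neg)
```

For example, auc_from_pairs([[0.9, 1.0], [0.8, 0.0], [0.7, 1.0], [0.1, 0.0]]) returns 0.75, because three of the four positive/negative pairs are ranked correctly.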

Loading the training data

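First, let's look at how the training data generated in the previous section is read and iterated over to produce the final training batches.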

Fetching and iterating over samples

The code that loads the training and test samples:

train_data = DataIterator(train_file, uid_voc, mid_voc, cat_voc, batch_size, maxlen, shuffle_each_epoch=False)

test_data = DataIterator(test_file, uid_voc, mid_voc, cat_voc, batch_size, maxlen)

The training and test samples are loaded through the DataIterator class, which is defined in data_iterator.py. The annotated code is as follows:

import numpy
import json
import pickle as pkl  # Python 3: the C-accelerated pickle is used automatically
import random
import gzip
import shuffle

def load_dict(filename):
    # Try JSON first. The *.pkl vocabularies are pickles, so fall back to pickle.
    # The Python 2 helper unicode_to_utf8 is dropped: in Python 3 the keys are
    # already str, and encoding them to bytes would break every later lookup.
    try:
        with open(filename, 'r', encoding='utf-8') as f:
            return json.load(f)
    except (ValueError, UnicodeDecodeError):
        with open(filename, 'rb') as f:
            return pkl.load(f)

def fopen(filename, mode='r'):
    if filename.endswith('.gz'):
        return gzip.open(filename, mode)
    return open(filename, mode)

class DataIterator:

    def __init__(self, source,  # local_train_splitByUser
                 # Every two lines of source form a sample pair:
                 #   line 1: label 0, user id, item id (not clicked, negative sample), item category, all previously clicked item ids, all previously clicked categories
                 #   line 2: label 1, user id, item id (clicked, positive sample), item category, all previously clicked item ids, all previously clicked categories
                 uid_voc,  # user id -> index, uid_voc.pkl
                 mid_voc,  # item id -> index, mid_voc.pkl
                 cat_voc,  # category -> index, cat_voc.pkl
                 batch_size=128,
                 maxlen=100,
                 skip_empty=False,
                 shuffle_each_epoch=False,
                 sort_by_length=True,
                 max_batch_size=20,
                 minlen=None):
        if shuffle_each_epoch:
            self.source_orig = source
            self.source = shuffle.main(self.source_orig, temporary=True)
        else:
            self.source = fopen(source, 'r')
        self.source_dicts = []
        for source_dict in [uid_voc, mid_voc, cat_voc]:
            self.source_dicts.append(load_dict(source_dict))  # uid_voc, mid_voc and cat_voc

        # item-info fields: item id, item category (a name such as "Cables & Accessories")
        f_meta = open("item-info", "r")
        meta_map = {}  # item id -> category
        for line in f_meta:
            arr = line.strip().split("\t")
            if arr[0] not in meta_map:
                meta_map[arr[0]] = arr[1]
        self.meta_id_map = {}
        for key in meta_map:
            val = meta_map[key]  # category of this item id
            if key in self.source_dicts[1]:
                mid_idx = self.source_dicts[1][key]  # index of the item id
            else:
                mid_idx = 0
            if val in self.source_dicts[2]:
                cat_idx = self.source_dicts[2][val]  # index of the category
            else:
                cat_idx = 0
            self.meta_id_map[mid_idx] = cat_idx  # item index -> category index

        # reviews-info fields: user id, item id, product rating (float), timestamp
        f_review = open("reviews-info", "r")
        self.mid_list_for_random = []
        for line in f_review:
            arr = line.strip().split("\t")
            tmp_idx = 0
            if arr[1] in self.source_dicts[1]:  # mid_voc
                tmp_idx = self.source_dicts[1][arr[1]]
            self.mid_list_for_random.append(tmp_idx)  # item index, one entry per review

        self.batch_size = batch_size
        self.maxlen = maxlen
        self.minlen = minlen
        self.skip_empty = skip_empty
        self.n_uid = len(self.source_dicts[0])  # number of users
        self.n_mid = len(self.source_dicts[1])  # number of items
        self.n_cat = len(self.source_dicts[2])  # number of categories
        self.shuffle = shuffle_each_epoch
        self.sort_by_length = sort_by_length
        self.source_buffer = []
        self.k = batch_size * max_batch_size  # lines read into the buffer at a time
        self.end_of_data = False

    def get_n(self):
        return self.n_uid, self.n_mid, self.n_cat

    def __iter__(self):
        return self

    def reset(self):
        if self.shuffle:
            self.source = shuffle.main(self.source_orig, temporary=True)
        else:
            self.source.seek(0)

    def __next__(self):
        if self.end_of_data:
            self.end_of_data = False
            self.reset()
            raise StopIteration

        source = []
        target = []

        if len(self.source_buffer) == 0:
            for k_ in range(self.k):
                ss = self.source.readline()
                if ss == "":
                    break
                # fields: label, user id, item id, item category, clicked item ids, clicked categories
                self.source_buffer.append(ss.strip("\n").split("\t"))

            # sort by history behaviour length
            if self.sort_by_length:  # True by default
                # The history fields are joined with the invisible control character "\x02"
                # (assumed here; it shows up as split("") in the original listing, and
                # split("") itself would raise ValueError).
                his_length = numpy.array([len(s[4].split("\x02")) for s in self.source_buffer])
                tidx = his_length.argsort()
                _sbuf = [self.source_buffer[i] for i in tidx]
                self.source_buffer = _sbuf  # ascending by number of clicked items
            else:
                self.source_buffer.reverse()

        if len(self.source_buffer) == 0:
            self.end_of_data = False
            self.reset()
            raise StopIteration

        try:
            # actual work here
            while True:
                # read from the buffer and map ids to indices
                try:
                    ss = self.source_buffer.pop()  # label, user id, item id, category, clicked item ids, clicked categories
                except IndexError:
                    break

                uid = self.source_dicts[0][ss[1]] if ss[1] in self.source_dicts[0] else 0  # user index
                mid = self.source_dicts[1][ss[2]] if ss[2] in self.source_dicts[1] else 0  # item index
                cat = self.source_dicts[2][ss[3]] if ss[3] in self.source_dicts[2] else 0  # category index

                tmp = []
                for fea in ss[4].split("\x02"):
                    m = self.source_dicts[1][fea] if fea in self.source_dicts[1] else 0
                    tmp.append(m)
                mid_list = tmp  # indices of all previously clicked items

                tmp1 = []
                for fea in ss[5].split("\x02"):
                    c = self.source_dicts[2][fea] if fea in self.source_dicts[2] else 0
                    tmp1.append(c)
                cat_list = tmp1  # category indices of all previously clicked items

                #if len(mid_list) > self.maxlen:
                #    continue
                if self.minlen is not None:
                    if len(mid_list) <= self.minlen:
                        continue
                if self.skip_empty and (not mid_list):
                    continue

                # draw 5 negative items for every clicked item
                noclk_mid_list = []
                noclk_cat_list = []
                for pos_mid in mid_list:
                    noclk_tmp_mid = []
                    noclk_tmp_cat = []
                    noclk_index = 0
                    while True:
                        noclk_mid_indx = random.randint(0, len(self.mid_list_for_random) - 1)
                        noclk_mid = self.mid_list_for_random[noclk_mid_indx]
                        if noclk_mid == pos_mid:
                            continue  # a negative must differ from the clicked item
                        noclk_tmp_mid.append(noclk_mid)
                        noclk_tmp_cat.append(self.meta_id_map[noclk_mid])
                        noclk_index += 1
                        if noclk_index >= 5:
                            break
                    noclk_mid_list.append(noclk_tmp_mid)
                    noclk_cat_list.append(noclk_tmp_cat)

                source.append([uid, mid, cat, mid_list, cat_list, noclk_mid_list, noclk_cat_list])
                # user id, item id, category, clicked items (n), their categories (n), negative items (n*5), their categories (n*5)
                target.append([float(ss[0]), 1 - float(ss[0])])
                # label: [1, 0] for a click, [0, 1] otherwise

                if len(source) >= self.batch_size or len(target) >= self.batch_size:
                    break
        except IOError:
            self.end_of_data = True

        # every sample in the buffer was filtered out (e.g. by minlen)
        if len(source) == 0 or len(target) == 0:
            source, target = self.__next__()  # Python 3: next() was renamed __next__()

        return source, target
        # source: N samples, each with the fields
        #   user id, item id, item category, previously clicked items (n), their categories (n), non-clicked items (n*5), their categories (n*5)
        # target: labels of the N samples, [1, 0] or [0, 1]
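The buffering in __next__ is easy to miss. It reads up to k = batch_size * max_batch_size lines (128 * 20 = 2560 by default), sorts them by history length, and pops batches from the end. Each batch therefore holds samples of similar length and needs less padding in prepare_data. The sketch below isolates just that strategy. length_sorted_batches is a hypothetical helper with these limits:

- It assumes the history field is joined with "\x02".
- It skips the filtering and id mapping.
- It uses Python's stable sort, whereas the original uses numpy.argsort.

```python
def length_sorted_batches(lines, batch_size, max_batch_size):
    """Yield batches the way DataIterator.__next__ fills and drains its buffer.

    `lines` are records already split on tabs; field 4 is the click history,
    assumed to be joined with the control character \\x02.
    """
    k = batch_size * max_batch_size  # lines loaded into the buffer at a time
    for start in range(0, len(lines), k):
        buffer = lines[start:start + k]  # a copy, so the input is not mutated
        # Ascending sort by history length; pop() then takes the longest first.
        buffer.sort(key=lambda rec: len(rec[4].split("\x02")))
        while buffer:
            batch = []
            while buffer and len(batch) < batch_size:
                batch.append(buffer.pop())
            yield batch
```

Because neighbouring records in the sorted buffer have similar history lengths, maxlen_x in prepare_data stays close to each sample's real length.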

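The iterators open the training file local_train_splitByUser and the test file local_test_splitByUser, both described in the previous data processing section. In these files, every two lines form a negative/positive sample pair for one user's click behaviour, in the following format: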

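#Line 1: label 0, user id, item id (not clicked, negative sample), item category, all previously clicked item ids, all previously clicked item categories
#Line 2: label 1, user id, item id (clicked, positive sample), item category, all previously clicked item ids, all previously clicked item categories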
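To make the format concrete, here is a minimal parsing sketch for one line. parse_line is a hypothetical helper. It assumes the tab-separated layout above and that the two history fields are joined with the control character "\x02". Like DataIterator, it maps unknown ids to index 0.

```python
def parse_line(line, uid_voc, mid_voc, cat_voc):
    """Turn one line of local_train_splitByUser into indices and a target.

    Assumed layout (tab-separated): label, user id, item id, category,
    clicked item ids, clicked categories, with both history fields joined
    by the control character \\x02.
    """
    ss = line.rstrip("\n").split("\t")
    label = float(ss[0])
    uid = uid_voc.get(ss[1], 0)  # unknown ids fall back to index 0
    mid = mid_voc.get(ss[2], 0)
    cat = cat_voc.get(ss[3], 0)
    mid_his = [mid_voc.get(m, 0) for m in ss[4].split("\x02")]
    cat_his = [cat_voc.get(c, 0) for c in ss[5].split("\x02")]
    target = [label, 1.0 - label]  # [1, 0] = clicked, [0, 1] = not clicked
    return [uid, mid, cat, mid_his, cat_his], target
```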

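uid_voc, mid_voc and cat_voc are the vocabularies that map user ids, item ids and item categories to integer indices. The __next__ method lets the iterator be used in a for loop to fetch batches, and each processing step is annotated in the code. It returns two variables, source and target, which hold: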

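#Return value 1, source: N samples, each with the fields:
#   user id, item id, item category, previously clicked items (n), categories of previously clicked items (n), non-clicked items (n*5), categories of non-clicked items (n*5)
#Return value 2, target: labels of the N samples, [1, 0] or [0, 1]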
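The negative-sampling loop in __next__ can be read on its own. For every clicked item, it draws 5 items from mid_list_for_random and redraws whenever the draw equals the clicked item. mid_list_for_random holds one entry per line of reviews-info, so frequently reviewed items are drawn more often. Below is a standalone sketch of the same loop. sample_negatives and its n_neg and rng parameters are hypothetical, and rng.choice stands in for the original random.randint indexing, which is equivalent.

```python
import random

def sample_negatives(clicked_mids, candidate_pool, meta_id_map, n_neg=5, rng=random):
    """For each clicked item, draw n_neg items from candidate_pool that differ
    from it, and look up their categories in meta_id_map."""
    noclk_mids, noclk_cats = [], []
    for pos_mid in clicked_mids:
        mids, cats = [], []
        while len(mids) < n_neg:
            cand = rng.choice(candidate_pool)  # popularity-weighted: one entry per review
            if cand == pos_mid:
                continue  # redraw: a negative must not equal the clicked item
            mids.append(cand)
            cats.append(meta_id_map[cand])
        noclk_mids.append(mids)
        noclk_cats.append(cats)
    return noclk_mids, noclk_cats
```

Like the original loop, this never terminates if the pool contains nothing but the clicked item. Duplicates among the 5 negatives are allowed.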

Data preparation

After the training and test samples are fetched, they need further preparation before being fed to the model. The preparation code is:

for src, tgt in train_data:
    # src: user id, item id, item category, previously clicked items (n), their categories (n), non-clicked items (n*5), their categories (n*5)
    # tgt: labels (positive or negative sample)
    uids, mids, cats, mid_his, cat_his, mid_mask, target, sl, noclk_mids, noclk_cats = prepare_data(src, tgt, maxlen, return_neg=True)

The work is done mainly by the prepare_data function:

def prepare_data(input, target, maxlen = None, return_neg = False):
    # input: N training samples, each with the fields
    #   user id[0], item id[1], item category[2], previously clicked items (n)[3],
    #   categories of previously clicked items (n)[4], non-clicked items (n*5)[5],
    #   categories of non-clicked items (n*5)[6]
    # target: labels (positive or negative sample)
    lengths_x = [len(s[4]) for s in input]  # N, number of previously clicked items per sample
    seqs_mid = [inp[3] for inp in input]  # N * n, previously clicked items
    seqs_cat = [inp[4] for inp in input]  # N * n, categories of previously clicked items
    noclk_seqs_mid = [inp[5] for inp in input]  # N * n * 5, non-clicked (negative) items
    noclk_seqs_cat = [inp[6] for inp in input]  # N * n * 5, categories of non-clicked items

    if maxlen is not None:
        new_seqs_mid = []
        new_seqs_cat = []
        new_noclk_seqs_mid = []
        new_noclk_seqs_cat = []
        new_lengths_x = []
        for l_x, inp in zip(lengths_x, input):  # zip pairs elements and stops at the shorter list
            if l_x > maxlen:
                # keep only the last maxlen behaviours
                new_seqs_mid.append(inp[3][l_x - maxlen:])
                new_seqs_cat.append(inp[4][l_x - maxlen:])
                new_noclk_seqs_mid.append(inp[5][l_x - maxlen:])
                new_noclk_seqs_cat.append(inp[6][l_x - maxlen:])
                new_lengths_x.append(maxlen)
            else:
                new_seqs_mid.append(inp[3])
                new_seqs_cat.append(inp[4])
                new_noclk_seqs_mid.append(inp[5])
                new_noclk_seqs_cat.append(inp[6])
                new_lengths_x.append(l_x)
        lengths_x = new_lengths_x
        seqs_mid = new_seqs_mid
        seqs_cat = new_seqs_cat
        noclk_seqs_mid = new_noclk_seqs_mid
        noclk_seqs_cat = new_noclk_seqs_cat

        if len(lengths_x) < 1:
            return None, None, None, None

    n_samples = len(seqs_mid)  # number of samples N
    maxlen_x = numpy.max(lengths_x)  # longest click history in this batch
    if maxlen_x <= 1:
        maxlen_x = 2
    neg_samples = len(noclk_seqs_mid[0][0])  # number of negatives per historical click

    mid_his = numpy.zeros((n_samples, maxlen_x)).astype('int64')  # N * maxlen_x, clicked item ids
    cat_his = numpy.zeros((n_samples, maxlen_x)).astype('int64')  # N * maxlen_x, clicked item categories
    noclk_mid_his = numpy.zeros((n_samples, maxlen_x, neg_samples)).astype('int64')  # N * maxlen_x * neg_samples(5), negatives of each click
    noclk_cat_his = numpy.zeros((n_samples, maxlen_x, neg_samples)).astype('int64')  # N * maxlen_x * neg_samples(5), categories of those negatives
    mid_mask = numpy.zeros((n_samples, maxlen_x)).astype('float32')  # N * maxlen_x, 1 at real clicks, 0 at padding

    for idx, [s_x, s_y, no_sx, no_sy] in enumerate(zip(seqs_mid, seqs_cat, noclk_seqs_mid, noclk_seqs_cat)):
        mid_mask[idx, :lengths_x[idx]] = 1.  # set the first lengths_x[idx] positions (the real clicks) of sample idx to 1
        mid_his[idx, :lengths_x[idx]] = s_x  # clicked item ids of sample idx
        cat_his[idx, :lengths_x[idx]] = s_y  # clicked item categories of sample idx
        noclk_mid_his[idx, :lengths_x[idx], :] = no_sx  # negative item ids of sample idx
        noclk_cat_his[idx, :lengths_x[idx], :] = no_sy  # negative item categories of sample idx

    uids = numpy.array([inp[0] for inp in input])  # N, user ids
    mids = numpy.array([inp[1] for inp in input])  # N, item ids
    cats = numpy.array([inp[2] for inp in input])  # N, item categories

    if return_neg:
        # uids: N, user ids
        # mids: N, item ids
        # cats: N, item categories
        # mid_his: N * maxlen_x, previously clicked item ids
        # cat_his: N * maxlen_x, previously clicked item categories
        # mid_mask: N * maxlen_x, marks the actual history length (1 = real click)
        # numpy.array(target): N * 2, label: positive [1, 0] or negative [0, 1]
        # numpy.array(lengths_x): N, actual history length of each sample
        # noclk_mid_his: N * maxlen_x * neg_samples(5), negatives for each historical click
        # noclk_cat_his: N * maxlen_x * neg_samples(5), categories of those negatives
        return uids, mids, cats, mid_his, cat_his, mid_mask, numpy.array(target), numpy.array(lengths_x), noclk_mid_his, noclk_cat_his
    else:
        return uids, mids, cats, mid_his, cat_his, mid_mask, numpy.array(target), numpy.array(lengths_x)

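maxlen = 100 truncates each user's click history to its last 100 items, so the longest history sequence is 100. The code is again annotated in detail. It finally returns the variables uids, mids, cats, mid_his, cat_his, mid_mask, target, sl, noclk_mids and noclk_cats, defined as follows: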

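# uids: N, user ids
# mids: N, item ids
# cats: N, item categories
# mid_his: N * maxLen_x, previously clicked item ids
# cat_his: N * maxLen_x, previously clicked item categories
# mid_mask: N * maxLen_x, marks the actual history length (1 = real click, 0 = padding)
# target: N * 2, label: positive [1,0] or negative [0,1]
# sl: N, actual history length of each sample
# noclk_mids: N * maxLen_x * neg_samples(5), negatives for each historical click
# noclk_cats: N * maxLen_x * neg_samples(5), categories of those negatives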

These are all the inputs the model needs for training.
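A small sketch can help when reading the shapes above. It shows what prepare_data does to a click-history field: truncate to the last maxlen entries, right-pad with 0, and build the mask. pad_histories is a hypothetical helper that handles a single id field only.

```python
import numpy as np

def pad_histories(histories, maxlen):
    """Truncate, pad and mask one history field the way prepare_data does."""
    # Truncate from the left so that the last (most recent) entries survive.
    histories = [h[-maxlen:] if len(h) > maxlen else h for h in histories]
    lengths = np.array([len(h) for h in histories])
    width = max(int(lengths.max()), 2)  # prepare_data forces at least 2 columns
    his = np.zeros((len(histories), width), dtype=np.int64)
    mask = np.zeros((len(histories), width), dtype=np.float32)
    for i, h in enumerate(histories):
        his[i, :len(h)] = h      # real ids first, zeros as padding
        mask[i, :len(h)] = 1.0   # 1 marks a real click
    return his, mask, lengths
```

With maxlen=3, the histories [[5, 6, 7, 8], [9]] become [[6, 7, 8], [9, 0, 0]]. The mask is [[1, 1, 1], [1, 0, 0]] and the lengths are [3, 1], which corresponds to the sl vector.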

Model structure

The model is instantiated as follows:

model = Model_DIN_V2_Gru_Vec_attGru_Neg(n_uid, n_mid, n_cat, EMBEDDING_DIM, HIDDEN_SIZE, ATTENTION_SIZE)

Base network structure

The network class Model_DIN_V2_Gru_Vec_attGru_Neg is defined in model.py:

class Model_DIN_V2_Gru_Vec_attGru_Neg(Model):
    def __init__(self, n_uid, n_mid, n_cat, EMBEDDING_DIM, HIDDEN_SIZE, ATTENTION_SIZE, use_negsampling=True):
        # number of users, number of items, number of categories, 18, 18 * 2, 18 * 2
        super(Model_DIN_V2_Gru_Vec_attGru_Neg, self).__init__(n_uid, n_mid, n_cat,
                                                              EMBEDDING_DIM, HIDDEN_SIZE, ATTENTION_SIZE,
                                                              use_negsampling)

        # RNN layer(-s)
        with tf.name_scope('rnn_1'):
            # inputs: item_his_eb, clicked-item embeddings concatenated with category embeddings, [batch_size, n, EMBEDDING_DIM * 2]
            # sequence_length: seq_len_ph, actual history length of each sample, [batch_size]
            # HIDDEN_SIZE = 36
            rnn_outputs, _ = dynamic_rnn(GRUCell(HIDDEN_SIZE), inputs=self.item_his_eb,
                                         sequence_length=self.seq_len_ph, dtype=tf.float32,
                                         scope="gru1")
            # rnn_outputs: [batch_size, n, HIDDEN_SIZE]
            tf.summary.histogram('GRU_outputs', rnn_outputs)

        # rnn_outputs[:, :-1, :]: hidden states at steps 0..n-2, [batch_size, n - 1, HIDDEN_SIZE]
        # item_his_eb[:, 1:, :]: embeddings of the next clicked item (steps 1..n-1), [batch_size, n - 1, EMBEDDING_DIM * 2]
        # noclk_item_his_eb[:, 1:, :]: the first sampled negative at each of those steps, [batch_size, n - 1, EMBEDDING_DIM * 2]
        # mask[:, 1:]: which of those steps are real clicks, [batch_size, n - 1]
        aux_loss_1 = self.auxiliary_loss(rnn_outputs[:, :-1, :], self.item_his_eb[:, 1:, :],
                                         self.noclk_item_his_eb[:, 1:, :],
                                         self.mask[:, 1:], stag="gru")
        self.aux_loss = aux_loss_1

        # Attention layer
        with tf.name_scope('Attention_layer_1'):
            # item_eb: target item embedding concatenated with its category embedding, [batch_size, EMBEDDING_DIM * 2]
            # rnn_outputs: interest states produced by GRU1, [batch_size, n, HIDDEN_SIZE]
            att_outputs, alphas = din_fcn_attention(self.item_eb, rnn_outputs, ATTENTION_SIZE, self.mask,
                                                    softmax_stag=1, stag='1_1', mode='LIST', return_alphas=True)
            # att_outputs: [batch_size, n, HIDDEN_SIZE]
            # alphas: attention score between each historical interest state and the target item, [batch_size, n]
            tf.summary.histogram('alpha_outputs', alphas)

        with tf.name_scope('rnn_2'):
            # AUGRU: the attention scores modulate the GRU update gate
            rnn_outputs2, final_state2 = dynamic_rnn(VecAttGRUCell(HIDDEN_SIZE), inputs=rnn_outputs,
                                                     att_scores = tf.expand_dims(alphas, -1),
                                                     sequence_length=self.seq_len_ph, dtype=tf.float32,
                                                     scope="gru2")
            # final_state2: [batch_size, HIDDEN_SIZE]
            tf.summary.histogram('GRU2_Final_State', final_state2)

        inp = tf.concat([self.uid_batch_embedded, self.item_eb, self.item_his_eb_sum, self.item_eb * self.item_his_eb_sum, final_state2], 1)
        # uid_batch_embedded: user embedding, [batch_size, EMBEDDING_DIM]
        # item_eb: target item embedding concatenated with its category embedding, [batch_size, EMBEDDING_DIM * 2]
        # item_his_eb_sum: sum of the history embeddings, [batch_size, EMBEDDING_DIM * 2]
        # item_eb * item_his_eb_sum: element-wise product of the two, [batch_size, EMBEDDING_DIM * 2]
        # final_state2: final AUGRU state, i.e. the attention-weighted interest evolution, [batch_size, HIDDEN_SIZE]
        # All features are concatenated and fed to the fully connected network
        self.build_fcn_net(inp, use_dice=True)
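rnn_2 is the AUGRU (GRU with attentional update gate) from the DIEN paper. This section does not show the VecAttGRUCell class itself, so the numpy sketch below follows the paper's equations rather than that class, and the repository's exact gating may differ. augru_step and the params dict are hypothetical. att plays the role of tf.expand_dims(alphas, -1), with one score per sample at the current step.

```python
import numpy as np

def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))

def augru_step(x, h_prev, att, params):
    """One AUGRU step as written in the DIEN paper.

    x: [batch, in_dim] input (here a GRU1 interest state)
    h_prev: [batch, hidden] previous AUGRU state
    att: [batch, 1] attention score of this step
    params: hypothetical dict of weights W_* [in_dim, hidden], U_* [hidden, hidden], b_* [hidden]
    """
    u = sigmoid(x @ params["W_u"] + h_prev @ params["U_u"] + params["b_u"])  # update gate
    r = sigmoid(x @ params["W_r"] + h_prev @ params["U_r"] + params["b_r"])  # reset gate
    h_tilde = np.tanh(x @ params["W_h"] + r * (h_prev @ params["U_h"]) + params["b_h"])  # candidate state
    u_att = att * u  # attentional update gate
    return (1.0 - u_att) * h_prev + u_att * h_tilde
```

With att = 0 the state passes through unchanged, and with att = 1 the step reduces to an ordinary GRU update. This is how relevance to the target item controls how far each behaviour moves the interest state.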

Its parent class is Model, which is also defined in model.py:

class Model(object):
    def __init__(self, n_uid, n_mid, n_cat, EMBEDDING_DIM, HIDDEN_SIZE, ATTENTION_SIZE, use_negsampling = False):
        with tf.name_scope('Inputs'):
            self.mid_his_batch_ph = tf.placeholder(tf.int32, [None, None], name='mid_his_batch_ph')
            self.cat_his_batch_ph = tf.placeholder(tf.int32, [None, None], name='cat_his_batch_ph')
            self.uid_batch_ph = tf.placeholder(tf.int32, [None, ], name='uid_batch_ph')
            self.mid_batch_ph = tf.placeholder(tf.int32, [None, ], name='mid_batch_ph')
            self.cat_batch_ph = tf.placeholder(tf.int32, [None, ], name='cat_batch_ph')
            self.mask = tf.placeholder(tf.float32, [None, None], name='mask')
            self.seq_len_ph = tf.placeholder(tf.int32, [None], name='seq_len_ph')
            self.target_ph = tf.placeholder(tf.float32, [None, None], name='target_ph')
            self.lr = tf.placeholder(tf.float64, [])
            self.use_negsampling = use_negsampling
            if use_negsampling:
                # sampled negative item ids for every history position, [batch_size, n, neg_samples]
                # (DataIterator draws 5 per position)
                self.noclk_mid_batch_ph = tf.placeholder(tf.int32, [None, None, None], name='noclk_mid_batch_ph')
                self.noclk_cat_batch_ph = tf.placeholder(tf.int32, [None, None, None], name='noclk_cat_batch_ph')

        # Embedding layer
        with tf.name_scope('Embedding_layer'):
            # uid embedding layer
            self.uid_embeddings_var = tf.get_variable("uid_embedding_var", [n_uid, EMBEDDING_DIM])
            tf.summary.histogram('uid_embeddings_var', self.uid_embeddings_var)
            self.uid_batch_embedded = tf.nn.embedding_lookup(self.uid_embeddings_var, self.uid_batch_ph)

            # mid embedding layer
            self.mid_embeddings_var = tf.get_variable("mid_embedding_var", [n_mid, EMBEDDING_DIM])
            tf.summary.histogram('mid_embeddings_var', self.mid_embeddings_var)
            self.mid_batch_embedded = tf.nn.embedding_lookup(self.mid_embeddings_var, self.mid_batch_ph)
            self.mid_his_batch_embedded = tf.nn.embedding_lookup(self.mid_embeddings_var, self.mid_his_batch_ph)
            if self.use_negsampling:
                self.noclk_mid_his_batch_embedded = tf.nn.embedding_lookup(self.mid_embeddings_var, self.noclk_mid_batch_ph)
                # [batch_size, n, 5, EMBEDDING_DIM]

            # cat embedding layer
            self.cat_embeddings_var = tf.get_variable("cat_embedding_var", [n_cat, EMBEDDING_DIM])
            tf.summary.histogram('cat_embeddings_var', self.cat_embeddings_var)
            self.cat_batch_embedded = tf.nn.embedding_lookup(self.cat_embeddings_var, self.cat_batch_ph)
            self.cat_his_batch_embedded = tf.nn.embedding_lookup(self.cat_embeddings_var, self.cat_his_batch_ph)
            if self.use_negsampling:
                self.noclk_cat_his_batch_embedded = tf.nn.embedding_lookup(self.cat_embeddings_var, self.noclk_cat_batch_ph)
                # [batch_size, n, 5, EMBEDDING_DIM]

        self.item_eb = tf.concat([self.mid_batch_embedded, self.cat_batch_embedded], 1)
        # target item embedding concatenated with its category embedding, [batch_size, EMBEDDING_DIM * 2]
        self.item_his_eb = tf.concat([self.mid_his_batch_embedded, self.cat_his_batch_embedded], 2)
        # clicked-item embeddings concatenated with category embeddings, [batch_size, n, EMBEDDING_DIM * 2]
        self.item_his_eb_sum = tf.reduce_sum(self.item_his_eb, 1)  # sum over the history, [batch_size, EMBEDDING_DIM * 2]

        if self.use_negsampling:
            # index 0: only the first sampled negative of each history position is used
            self.noclk_item_his_eb = tf.concat(
                [self.noclk_mid_his_batch_embedded[:, :, 0, :], self.noclk_cat_his_batch_embedded[:, :, 0, :]], -1)
            # [batch_size, n, EMBEDDING_DIM * 2]
            self.noclk_item_his_eb = tf.reshape(self.noclk_item_his_eb,
                                                [-1, tf.shape(self.noclk_mid_his_batch_embedded)[1], EMBEDDING_DIM * 2])
            # item embedding (18) concatenated with category embedding (18); the original hard-coded 36 here
            self.noclk_his_eb = tf.concat([self.noclk_mid_his_batch_embedded, self.noclk_cat_his_batch_embedded], -1)
            # [batch_size, n, 5, EMBEDDING_DIM * 2]
            self.noclk_his_eb_sum_1 = tf.reduce_sum(self.noclk_his_eb, 2)
            # [batch_size, n, EMBEDDING_DIM * 2]
            self.noclk_his_eb_sum = tf.reduce_sum(self.noclk_his_eb_sum_1, 1)
            # [batch_size, EMBEDDING_DIM * 2]

    def build_fcn_net(self, inp, use_dice = False):
        bn1 = tf.layers.batch_normalization(inputs=inp, name='bn1')
        dnn1 = tf.layers.dense(bn1, 200, activation=None, name='f1')
        if use_dice:
            dnn1 = dice(dnn1, name='dice_1')
        else:
            dnn1 = prelu(dnn1, 'prelu1')
        dnn2 = tf.layers.dense(dnn1, 80, activation=None, name='f2')
        if use_dice:
            dnn2 = dice(dnn2, name='dice_2')
        else:
            dnn2 = prelu(dnn2, 'prelu2')
        dnn3 = tf.layers.dense(dnn2, 2, activation=None, name='f3')
        self.y_hat = tf.nn.softmax(dnn3) + 0.00000001  # small offset keeps tf.log finite

        with tf.name_scope('Metrics'):
            # Cross-entropy loss and optimizer initialization
            ctr_loss = - tf.reduce_mean(tf.log(self.y_hat) * self.target_ph)
            self.loss = ctr_loss
            if self.use_negsampling:
                self.loss += self.aux_loss
            tf.summary.scalar('loss', self.loss)
            self.optimizer = tf.train.AdamOptimizer(learning_rate=self.lr).minimize(self.loss)

            # Accuracy metric
            self.accuracy = tf.reduce_mean(tf.cast(tf.equal(tf.round(self.y_hat), self.target_ph), tf.float32))
            tf.summary.scalar('accuracy', self.accuracy)
        self.merged = tf.summary.merge_all()

    def auxiliary_loss(self, h_states, click_seq, noclick_seq, mask, stag = None):
        # h_states: GRU hidden states at steps 0..n-2, [batch_size, n - 1, HIDDEN_SIZE]
        # click_seq: embeddings of the actually clicked next items, [batch_size, n - 1, EMBEDDING_DIM * 2]
        # noclick_seq: embeddings of the first sampled negative at each step, [batch_size, n - 1, EMBEDDING_DIM * 2]
        # mask: 1 for valid (real) steps, 0 for padding, [batch_size, n - 1]
        mask = tf.cast(mask, tf.float32)
        click_input_ = tf.concat([h_states, click_seq], -1)  # [batch_size, n - 1, HIDDEN_SIZE + EMBEDDING_DIM * 2]
        noclick_input_ = tf.concat([h_states, noclick_seq], -1)  # [batch_size, n - 1, HIDDEN_SIZE + EMBEDDING_DIM * 2]
        click_prop_ = self.auxiliary_net(click_input_, stag = stag)[:, :, 0]  # [batch_size, n - 1], P(real next click)
        noclick_prop_ = self.auxiliary_net(noclick_input_, stag = stag)[:, :, 0]  # [batch_size, n - 1]
        click_loss_ = - tf.reshape(tf.log(click_prop_), [-1, tf.shape(click_seq)[1]]) * mask
        noclick_loss_ = - tf.reshape(tf.log(1.0 - noclick_prop_), [-1, tf.shape(noclick_seq)[1]]) * mask
        loss_ = tf.reduce_mean(click_loss_ + noclick_loss_)
        return loss_

    def auxiliary_net(self, in_, stag='auxiliary_net'):
        # in_: [batch_size, n - 1, HIDDEN_SIZE + EMBEDDING_DIM * 2]
        # AUTO_REUSE: the click and no-click inputs share the same weights
        bn1 = tf.layers.batch_normalization(inputs=in_, name='bn1' + stag, reuse=tf.AUTO_REUSE)
        dnn1 = tf.layers.dense(bn1, 100, activation=None, name='f1' + stag, reuse=tf.AUTO_REUSE)
        dnn1 = tf.nn.sigmoid(dnn1)
        dnn2 = tf.layers.dense(dnn1, 50, activation=None, name='f2' + stag, reuse=tf.AUTO_REUSE)
        dnn2 = tf.nn.sigmoid(dnn2)
        dnn3 = tf.layers.dense(dnn2, 2, activation=None, name='f3' + stag, reuse=tf.AUTO_REUSE)
        y_hat = tf.nn.softmax(dnn3) + 0.00000001
        return y_hat

    def train(self, sess, inps):
        # inps: [uids, mids, cats, mid_his, cat_his, mid_mask, target, sl, lr, noclk_mids, noclk_cats]
        if self.use_negsampling:
            loss, accuracy, aux_loss, _ = sess.run([self.loss, self.accuracy, self.aux_loss, self.optimizer], feed_dict={
                self.uid_batch_ph: inps[0],
                self.mid_batch_ph: inps[1],
                self.cat_batch_ph: inps[2],
                self.mid_his_batch_ph: inps[3],
                self.cat_his_batch_ph: inps[4],
                self.mask: inps[5],
                self.target_ph: inps[6],
                self.seq_len_ph: inps[7],
                self.lr: inps[8],
                self.noclk_mid_batch_ph: inps[9],
                self.noclk_cat_batch_ph: inps[10],
            })
            return loss, accuracy, aux_loss
        else:
            loss, accuracy, _ = sess.run([self.loss, self.accuracy, self.optimizer], feed_dict={
                self.uid_batch_ph: inps[0],
                self.mid_batch_ph: inps[1],
                self.cat_batch_ph: inps[2],
                self.mid_his_batch_ph: inps[3],
                self.cat_his_batch_ph: inps[4],
                self.mask: inps[5],
                self.target_ph: inps[6],
                self.seq_len_ph: inps[7],
                self.lr: inps[8],
            })
            return loss, accuracy, 0

    def calculate(self, sess, inps):
        # inps: [uids, mids, cats, mid_his, cat_his, mid_mask, target, sl, noclk_mids, noclk_cats] (no lr)
        if self.use_negsampling:
            probs, loss, accuracy, aux_loss = sess.run([self.y_hat, self.loss, self.accuracy, self.aux_loss], feed_dict={
                self.uid_batch_ph: inps[0],
                self.mid_batch_ph: inps[1],
                self.cat_batch_ph: inps[2],
                self.mid_his_batch_ph: inps[3],
                self.cat_his_batch_ph: inps[4],
                self.mask: inps[5],
                self.target_ph: inps[6],
                self.seq_len_ph: inps[7],
                self.noclk_mid_batch_ph: inps[8],
                self.noclk_cat_batch_ph: inps[9],
            })
            return probs, loss, accuracy, aux_loss
        else:
            probs, loss, accuracy = sess.run([self.y_hat, self.loss, self.accuracy], feed_dict={
                self.uid_batch_ph: inps[0],
                self.mid_batch_ph: inps[1],
                self.cat_batch_ph: inps[2],
                self.mid_his_batch_ph: inps[3],
                self.cat_his_batch_ph: inps[4],
                self.mask: inps[5],
                self.target_ph: inps[6],
                self.seq_len_ph: inps[7]
            })
            return probs, loss, accuracy, 0

    def save(self, sess, path):
        saver = tf.train.Saver()
        saver.save(sess, save_path=path)

    def restore(self, sess, path):
        saver = tf.train.Saver()
        saver.restore(sess, save_path=path)
        print('model restored from %s' % path)

Interest Extractor Layer

The Interest Extractor Layer is implemented as follows:

```python
# RNN layer(-s)
with tf.name_scope('rnn_1'):
    # item_his_eb: embeddings of previously clicked items (item embedding and category embedding concatenated), [batch_size, n, EMBEDDING_DIM * 2]
    # seq_len_ph: [batch_size], actual length of each user's click history
    # HIDDEN_SIZE = 36 (18 * 2)
    rnn_outputs, _ = dynamic_rnn(GRUCell(HIDDEN_SIZE), inputs=self.item_his_eb,
                                 sequence_length=self.seq_len_ph, dtype=tf.float32,
                                 scope="gru1")
    # rnn_outputs: [batch_size, n, HIDDEN_SIZE]
    tf.summary.histogram('GRU_outputs', rnn_outputs)

# rnn_outputs[:, :-1, :]: GRU hidden states at the previous step (steps 0..n-2), [batch_size, n - 1, HIDDEN_SIZE]
# item_his_eb[:, 1:, :]: embeddings of the items clicked at the current step (steps 1..n-1), [batch_size, n - 1, EMBEDDING_DIM * 2]
# noclk_item_his_eb[:, 1:, :]: negative samples; for each click the 0-th sampled negative is used, [batch_size, n - 1, EMBEDDING_DIM * 2]
# mask[:, 1:]: 0/1 mask marking the valid positions of each sample's click sequence, [batch_size, n - 1]
aux_loss_1 = self.auxiliary_loss(rnn_outputs[:, :-1, :], self.item_his_eb[:, 1:, :],
                                 self.noclk_item_his_eb[:, 1:, :],
                                 self.mask[:, 1:], stag="gru")
self.aux_loss = aux_loss_1
```

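First, the embeddings of the items the user clicked earlier are fed into an RNN built from GRU cells, which produces the interest state vector at every step, rnn_outputs.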
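To make the per-step interest states concrete, here is a minimal NumPy sketch of a GRU unrolled over a padded click history. It follows the GRU equations in the DIEN paper rather than the source of TensorFlow's GRUCell, which may differ in details such as where the reset gate is applied. The helper functions and parameter names (Wu, Uu, bu, ...) are hypothetical. Like dynamic_rnn with sequence_length, it zeroes outputs past each sequence's length and carries the last valid state through.

```python
import numpy as np


def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))


def gru_step(x, h, p):
    """One GRU step: x is [batch, input_dim], h is [batch, hidden]."""
    u = sigmoid(x @ p["Wu"] + h @ p["Uu"] + p["bu"])              # update gate
    r = sigmoid(x @ p["Wr"] + h @ p["Ur"] + p["br"])              # reset gate
    h_tilde = np.tanh(x @ p["Wh"] + r * (h @ p["Uh"]) + p["bh"])  # candidate state
    return (1.0 - u) * h + u * h_tilde                            # mix old and candidate states


def run_gru(inputs, seq_len, p, hidden):
    """Unroll over inputs [batch, n, input_dim] with per-row lengths seq_len [batch].

    Mirrors dynamic_rnn(sequence_length=...): outputs past a row's length are
    zero and that row's state is carried through unchanged.
    """
    batch, n, _ = inputs.shape
    h = np.zeros((batch, hidden))
    outputs = np.zeros((batch, n, hidden))
    for t in range(n):
        h_new = gru_step(inputs[:, t, :], h, p)
        valid = (t < seq_len)[:, None]   # [batch, 1], True on real clicks
        h = np.where(valid, h_new, h)
        outputs[:, t, :] = np.where(valid, h_new, 0.0)
    return outputs, h


def init_params(input_dim, hidden, rng):
    """Small random weights under hypothetical names."""
    p = {}
    for g in ("u", "r", "h"):
        p["W" + g] = rng.normal(scale=0.1, size=(input_dim, hidden))
        p["U" + g] = rng.normal(scale=0.1, size=(hidden, hidden))
        p["b" + g] = np.zeros(hidden)
    return p


rng = np.random.default_rng(0)
EMB, HID = 36, 36                            # EMBEDDING_DIM * 2 and HIDDEN_SIZE in train.py
item_his_eb = rng.normal(size=(2, 5, EMB))   # 2 users, history padded to n = 5
seq_len = np.array([5, 3])                   # the second user has only 3 clicks
params = init_params(EMB, HID, rng)
rnn_outputs, final_state = run_gru(item_his_eb, seq_len, params, HID)
print(rnn_outputs.shape)  # (2, 5, 36)
```

Each row of rnn_outputs is one user's interest trajectory. For the second user, positions 3 and 4 stay zero, and final_state equals the state at the last real click.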

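Next, four tensors are passed to the auxiliary loss function:

- the hidden states at steps 0 to n-2, rnn_outputs[:, :-1, :];
- the embeddings of the items clicked at steps 1 to n-1 (one step later), item_his_eb[:, 1:, :];
- the embeddings of the corresponding negative samples, noclk_item_his_eb[:, 1:, :];
- the mask marking the valid history positions, mask[:, 1:].

This one-step shift pairs each interest state h_t with the behavior that actually follows it. The auxiliary loss is implemented as follows: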

```python
def auxiliary_loss(self, h_states, click_seq, noclick_seq, mask, stag = None):
    # h_states: GRU hidden states at the previous step, [batch_size, n - 1, HIDDEN_SIZE]
    # click_seq: embeddings of the items clicked at the current step, [batch_size, n - 1, EMBEDDING_DIM * 2]
    # noclick_seq: negative sample for each click (the 0-th sampled negative), [batch_size, n - 1, EMBEDDING_DIM * 2]
    # mask: 0/1 mask marking the valid positions of each sample's click sequence, [batch_size, n - 1]
    mask = tf.cast(mask, tf.float32)
    # HIDDEN_SIZE + EMBEDDING_DIM * 2 == HIDDEN_SIZE * 2 here, because both equal 36
    click_input_ = tf.concat([h_states, click_seq], -1)  # [batch_size, n - 1, HIDDEN_SIZE * 2]
    noclick_input_ = tf.concat([h_states, noclick_seq], -1)  # [batch_size, n - 1, HIDDEN_SIZE * 2]
    # Column 0 of auxiliary_net's softmax output is used as the "clicked" probability
    click_prop_ = self.auxiliary_net(click_input_, stag = stag)[:, :, 0]  # [batch_size, n - 1]
    noclick_prop_ = self.auxiliary_net(noclick_input_, stag = stag)[:, :, 0]  # [batch_size, n - 1]
    # Binary cross-entropy: clicked items pushed toward 1, negatives toward 0; padded steps are masked out
    click_loss_ = - tf.reshape(tf.log(click_prop_), [-1, tf.shape(click_seq)[1]]) * mask
    noclick_loss_ = - tf.reshape(tf.log(1.0 - noclick_prop_), [-1, tf.shape(noclick_seq)[1]]) * mask
    loss_ = tf.reduce_mean(click_loss_ + noclick_loss_)
    return loss_
```

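The state sequence rnn_outputs returned by the first RNN layer is the interest sequence that models how the user's interests change over time. The auxiliary loss supervises each of these states with the click that follows it.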
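The auxiliary loss boils down to a masked binary cross-entropy in which each state h_t must tell the next clicked item apart from a sampled negative. Below is a NumPy sketch of that computation, including the one-step shift. The original's auxiliary_net (batch normalization plus a 100-50-2 MLP over the concatenation [h_t, e]) is swapped for a hypothetical inner-product scorer, which works here only because HIDDEN_SIZE and EMBEDDING_DIM * 2 are both 36.

```python
import numpy as np


def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))


def auxiliary_loss(h_states, click_seq, noclick_seq, mask):
    """h_states, click_seq, noclick_seq: [B, n-1, 36]; mask: [B, n-1] of 0/1.

    The scorer (sigmoid of an inner product) is a hypothetical stand-in for auxiliary_net.
    """
    mask = mask.astype(np.float64)
    click_prop = sigmoid(np.sum(h_states * click_seq, axis=-1))      # [B, n-1]
    noclick_prop = sigmoid(np.sum(h_states * noclick_seq, axis=-1))  # [B, n-1]
    click_loss = -np.log(click_prop) * mask
    noclick_loss = -np.log(1.0 - noclick_prop) * mask
    # Like tf.reduce_mean in the original: averaged over all B * (n-1) positions, padded ones included
    return np.mean(click_loss + noclick_loss)


rng = np.random.default_rng(1)
B, n, H = 2, 4, 36
rnn_outputs = rng.normal(scale=0.3, size=(B, n, H))        # stand-in GRU1 states
item_his_eb = rng.normal(scale=0.3, size=(B, n, H))        # clicked items
noclk_item_his_eb = rng.normal(scale=0.3, size=(B, n, H))  # sampled negatives
mask = np.array([[1, 1, 1, 1],
                 [1, 1, 0, 0]])
# Pair h_t (steps 0..n-2) with the item clicked at t+1 (steps 1..n-1)
loss = auxiliary_loss(rnn_outputs[:, :-1, :], item_his_eb[:, 1:, :],
                      noclk_item_his_eb[:, 1:, :], mask[:, 1:])
print(loss > 0)  # True
```

Because padded positions are multiplied by the mask, changing the embeddings at those positions leaves the loss unchanged.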

Interest Evolving Layer implementation

The Interest Evolving Layer is implemented as follows:

```python
# Attention layer
with tf.name_scope('Attention_layer_1'):
    # item_eb: candidate item, mid embedding and cat embedding concatenated, [batch_size, EMBEDDING_DIM * 2]
    # rnn_outputs: user interest states output by GRU1, [batch_size, n, HIDDEN_SIZE]
    att_outputs, alphas = din_fcn_attention(self.item_eb, rnn_outputs, ATTENTION_SIZE, self.mask,
                                            softmax_stag=1, stag='1_1', mode='LIST', return_alphas=True)
    # att_outputs: [batch_size, n, HIDDEN_SIZE]
    # alphas: attention score between each past interest state and the candidate item, [batch_size, n]
    tf.summary.histogram('alpha_outputs', alphas)

with tf.name_scope('rnn_2'):
    rnn_outputs2, final_state2 = dynamic_rnn(VecAttGRUCell(HIDDEN_SIZE), inputs=rnn_outputs,
                                             att_scores = tf.expand_dims(alphas, -1),
                                             sequence_length=self.seq_len_ph, dtype=tf.float32,
                                             scope="gru2")
    # AUGRU; final_state2: [batch_size, HIDDEN_SIZE]
    tf.summary.histogram('GRU2_Final_State', final_state2)

inp = tf.concat([self.uid_batch_embedded, self.item_eb, self.item_his_eb_sum, self.item_eb * self.item_his_eb_sum, final_state2], 1)
# uid_batch_embedded: user embedding, [batch_size, EMBEDDING_DIM]
# item_eb: mid embedding and cat embedding concatenated, [batch_size, EMBEDDING_DIM * 2]
# item_his_eb_sum: sum of the history behavior embeddings, [batch_size, EMBEDDING_DIM * 2]
# item_eb * item_his_eb_sum: element-wise product of the two, [batch_size, EMBEDDING_DIM * 2]
# final_state2: final AUGRU state, i.e. the interest evolved with respect to the candidate item, [batch_size, HIDDEN_SIZE]
# All features are concatenated and fed into the fully connected network
self.build_fcn_net(inp, use_dice=True)
```

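The attention layer takes each user's interest sequence rnn_outputs and the candidate item's embedding item_eb. It uses an attention mechanism to compute a weight between each past interest state and the current item, and returns these attention scores, scores: [batch_size, n]. The attention layer is implemented as follows: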

```python
def din_fcn_attention(query, facts, attention_size, mask, stag='null', mode='SUM', softmax_stag=1, time_major=False, return_alphas=False, forCnn=False):
    # query: mid embedding and cat embedding concatenated, [batch_size, EMBEDDING_DIM * 2]
    # facts: user interest states output by GRU1, [batch_size, n, HIDDEN_SIZE]
    # attention_size: ATTENTION_SIZE = 36 (not referenced below; the layer widths 80/40/1 are hard-coded)
    # mask: 0/1 mask marking the valid positions of each sample's click sequence, [batch_size, n]
    if isinstance(facts, tuple):
        # In case of Bi-RNN, concatenate the forward and the backward RNN outputs.
        facts = tf.concat(facts, 2)
    if len(facts.get_shape().as_list()) == 2:
        facts = tf.expand_dims(facts, 1)

    if time_major:
        # (T,B,D) => (B,T,D)
        facts = tf.transpose(facts, [1, 0, 2])
    # Trainable parameters
    mask = tf.equal(mask, tf.ones_like(mask))  # 0/1 mask -> boolean mask
    facts_size = facts.get_shape().as_list()[-1]  # D value - hidden size of the RNN layer
    # i.e. the size of GRU1's output states, which are also GRU2's inputs: HIDDEN_SIZE
    querry_size = query.get_shape().as_list()[-1]
    # embedding size of the candidate item: EMBEDDING_DIM * 2
    query = tf.layers.dense(query, facts_size, activation=None, name='f1' + stag)
    # fully connected projection, input [batch_size, EMBEDDING_DIM * 2], output [batch_size, HIDDEN_SIZE]
    query = prelu(query)
    # PReLU non-linearity
    queries = tf.tile(query, [1, tf.shape(facts)[1]])
    # axis 0 unchanged, axis 1 repeated n times: [batch_size, HIDDEN_SIZE * n],
    # i.e. n copies of the same candidate item vector
    queries = tf.reshape(queries, tf.shape(facts))
    # reshaped to [batch_size, n, HIDDEN_SIZE]
    din_all = tf.concat([queries, facts, queries-facts, queries*facts], axis=-1)
    # concatenated along the last axis: [batch_size, n, 4 * HIDDEN_SIZE]
    d_layer_1_all = tf.layers.dense(din_all, 80, activation=tf.nn.sigmoid, name='f1_att' + stag)
    # first layer, output [batch_size, n, 80]
    d_layer_2_all = tf.layers.dense(d_layer_1_all, 40, activation=tf.nn.sigmoid, name='f2_att' + stag)
    # second layer, output [batch_size, n, 40]
    d_layer_3_all = tf.layers.dense(d_layer_2_all, 1, activation=None, name='f3_att' + stag)
    # third layer, output [batch_size, n, 1]
    d_layer_3_all = tf.reshape(d_layer_3_all, [-1, 1, tf.shape(facts)[1]])
    scores = d_layer_3_all
    # scores: [batch_size, 1, n]
    # Mask
    # key_masks = tf.sequence_mask(facts_length, tf.shape(facts)[1])   # [B, T]
    key_masks = tf.expand_dims(mask, 1)  # [B, 1, T]
    # marks which of the B * T positions are True (a previously clicked item exists)
    # and which are False (padding, no clicked item)
    # e.g. tf.sequence_mask([1, 3, 2], 5) returns:
    # [[True, False, False, False, False],
    #  [True, True, True, False, False],
    #  [True, True, False, False, False]]
    paddings = tf.ones_like(scores) * (-2 ** 32 + 1)
    # padded positions get a huge negative score, so softmax assigns them ~0 weight
    if not forCnn:
        scores = tf.where(key_masks, scores, paddings)  # [B, 1, T]

    # Scale
    # scores = scores / (facts.get_shape().as_list()[-1] ** 0.5)

    # Activation
    if softmax_stag:
        scores = tf.nn.softmax(scores)  # [B, 1, T]
        # [batch_size, 1, n]

    # Weighted sum
    if mode == 'SUM':
        output = tf.matmul(scores, facts)  # [B, 1, H]
        # output = tf.reshape(output, [-1, tf.shape(facts)[-1]])
    else:
        scores = tf.reshape(scores, [-1, tf.shape(facts)[1]])
        # shape becomes [batch_size, n]: the weight of each behavior in each sample
        output = facts * tf.expand_dims(scores, -1)
        # [batch_size, n, HIDDEN_SIZE] * [batch_size, n, 1]
        output = tf.reshape(output, tf.shape(facts))
        # [batch_size, n, HIDDEN_SIZE]
    if return_alphas:
        return output, scores
    return output
```

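output holds each GRU1 interest state (one per previously clicked item) weighted by its attention score. scores holds this batch's attention scores over each user's click history. The code is similar to the attention layer in the DIN implementation introduced earlier. In DIEN, only scores (alphas) is used afterwards: it is passed to the AUGRU as att_scores.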
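Below is a minimal NumPy sketch of the masking and weighting steps in din_fcn_attention. It swaps the 80-40-1 MLP over [queries, facts, queries-facts, queries*facts] for a hypothetical inner-product scorer. It keeps the same -2**32 + 1 padding value, so padded history positions receive effectively zero weight after the softmax.

```python
import numpy as np


def masked_attention(query, facts, mask, mode="LIST"):
    """query: [B, H]; facts: [B, n, H]; mask: [B, n] of 0/1.

    Raw scores come from a hypothetical inner-product scorer, not the original MLP.
    """
    raw = np.einsum("bh,bnh->bn", query, facts)               # [B, n]
    paddings = np.full_like(raw, -2.0 ** 32 + 1)              # same padding value as the TF code
    raw = np.where(mask == 1, raw, paddings)
    raw = raw - raw.max(axis=-1, keepdims=True)               # numerically stable softmax
    scores = np.exp(raw) / np.exp(raw).sum(axis=-1, keepdims=True)  # [B, n]
    if mode == "SUM":
        output = np.einsum("bn,bnh->bh", scores, facts)       # one weighted-sum vector, [B, H]
    else:
        output = facts * scores[..., None]                    # per-step weighted states, [B, n, H]
    return output, scores


rng = np.random.default_rng(2)
query = rng.normal(size=(2, 36))      # stand-in for the projected item_eb
facts = rng.normal(size=(2, 5, 36))   # stand-in for rnn_outputs
mask = np.array([[1, 1, 1, 1, 1],
                 [1, 1, 1, 0, 0]])
att_outputs, alphas = masked_attention(query, facts, mask)
```

mode='LIST' returns the per-step weighted states, which is what DIEN requests here. mode='SUM' collapses them into a single weighted-sum vector, equal to summing the LIST output over the time axis.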

AUGRU layer implementation. See topic 1 for how AUGRU works. The code is as follows:

```python
with tf.name_scope('rnn_2'):
    rnn_outputs2, final_state2 = dynamic_rnn(VecAttGRUCell(HIDDEN_SIZE), inputs=rnn_outputs,
                                             att_scores = tf.expand_dims(alphas, -1),
                                             sequence_length=self.seq_len_ph, dtype=tf.float32,
                                             scope="gru2")
    # AUGRU; final_state2: [batch_size, HIDDEN_SIZE]
    tf.summary.histogram('GRU2_Final_State', final_state2)

inp = tf.concat([self.uid_batch_embedded, self.item_eb, self.item_his_eb_sum, self.item_eb * self.item_his_eb_sum, final_state2], 1)
# uid_batch_embedded: user embedding, [batch_size, EMBEDDING_DIM]
# item_eb: mid embedding and cat embedding concatenated, [batch_size, EMBEDDING_DIM * 2]
# item_his_eb_sum: sum of the history behavior embeddings, [batch_size, EMBEDDING_DIM * 2]
# final_state2: final AUGRU state, i.e. the interest evolved with respect to the candidate item, [batch_size, HIDDEN_SIZE]
# All features are concatenated and fed into the fully connected network
```
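The attention score enters the recurrence only through the update gate. The sketch below follows the AUGRU update as written in the DIEN paper: u'_t = a_t * u_t, then h_t = (1 - u'_t) * h_{t-1} + u'_t * h~_t. It is an illustration in NumPy, not the VecAttGRUCell source, whose gate convention may differ. The parameter names and helper functions are hypothetical.

```python
import numpy as np


def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))


def augru_step(x, h, a, p):
    """One AUGRU step. x: [batch, in_dim], h: [batch, hidden], a: [batch, 1] attention scores."""
    u = sigmoid(x @ p["Wu"] + h @ p["Uu"] + p["bu"])              # update gate
    r = sigmoid(x @ p["Wr"] + h @ p["Ur"] + p["br"])              # reset gate
    h_tilde = np.tanh(x @ p["Wh"] + r * (h @ p["Uh"]) + p["bh"])  # candidate state
    u_att = a * u                                                 # update gate scaled by attention
    return (1.0 - u_att) * h + u_att * h_tilde


def run_augru(inputs, alphas, seq_len, p, hidden):
    """inputs: GRU1 states [batch, n, in_dim]; alphas: attention scores [batch, n].

    Returns the final state [batch, hidden]; padded steps leave the state unchanged.
    """
    batch, n, _ = inputs.shape
    h = np.zeros((batch, hidden))
    for t in range(n):
        h_new = augru_step(inputs[:, t, :], h, alphas[:, t:t + 1], p)
        h = np.where((t < seq_len)[:, None], h_new, h)
    return h


rng = np.random.default_rng(3)
HID = 36  # HIDDEN_SIZE; GRU1 states are the AUGRU inputs, so in_dim == HID
params = {}
for g in ("u", "r", "h"):  # hypothetical parameter names
    params["W" + g] = rng.normal(scale=0.1, size=(HID, HID))
    params["U" + g] = rng.normal(scale=0.1, size=(HID, HID))
    params["b" + g] = np.zeros(HID)

rnn_outputs = rng.normal(size=(2, 4, HID))   # stand-in for GRU1 outputs
alphas = np.array([[0.1, 0.2, 0.3, 0.4],
                   [0.6, 0.4, 0.0, 0.0]])    # softmax-style scores, 0 on padding
seq_len = np.array([4, 2])
final_state2 = run_augru(rnn_outputs, alphas, seq_len, params, HID)
```

With a_t = 0 the previous state is carried over unchanged, so behaviors unrelated to the candidate item barely move final_state2. With a_t = 1 the step reduces to a plain GRU step.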

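dynamic_rnn is implemented in rnn.py. Unlike TensorFlow's built-in version, it accepts an att_scores argument. Together with VecAttGRUCell, it implements the core of AUGRU and returns final_state2, the final state after attention-guided interest evolution. Finally, all features are concatenated and fed into the fully connected layers: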

```python
def build_fcn_net(self, inp, use_dice = False):
    bn1 = tf.layers.batch_normalization(inputs=inp, name='bn1')
    dnn1 = tf.layers.dense(bn1, 200, activation=None, name='f1')
    if use_dice:
        dnn1 = dice(dnn1, name='dice_1')
    else:
        dnn1 = prelu(dnn1, 'prelu1')

    dnn2 = tf.layers.dense(dnn1, 80, activation=None, name='f2')
    if use_dice:
        dnn2 = dice(dnn2, name='dice_2')
    else:
        dnn2 = prelu(dnn2, 'prelu2')
    dnn3 = tf.layers.dense(dnn2, 2, activation=None, name='f3')
    self.y_hat = tf.nn.softmax(dnn3) + 0.00000001

    with tf.name_scope('Metrics'):
        # Cross-entropy loss and optimizer initialization
        ctr_loss = - tf.reduce_mean(tf.log(self.y_hat) * self.target_ph)
        self.loss = ctr_loss
        if self.use_negsampling:
            self.loss += self.aux_loss
        tf.summary.scalar('loss', self.loss)
        self.optimizer = tf.train.AdamOptimizer(learning_rate=self.lr).minimize(self.loss)

        # Accuracy metric
        self.accuracy = tf.reduce_mean(tf.cast(tf.equal(tf.round(self.y_hat), self.target_ph), tf.float32))
        tf.summary.scalar('accuracy', self.accuracy)
    self.merged = tf.summary.merge_all()
```

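The final loss is the sum of ctr_loss and aux_loss (aux_loss is added only when use_negsampling is enabled). Minimizing it with the optimizer (Adam here) is all that is needed to train the model.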
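As a quick numeric check, here is a NumPy transcription of the ctr_loss and accuracy expressions from build_fcn_net, with a made-up constant standing in for aux_loss. Because tf.reduce_mean averages over all batch_size * 2 entries of the [batch_size, 2] matrix, ctr_loss comes out as half the usual per-sample cross-entropy. This only rescales the CTR term relative to aux_loss.

```python
import numpy as np


def ctr_loss_and_accuracy(y_hat, target):
    """y_hat: softmax output [B, 2] (+1e-8 as in build_fcn_net); target: one-hot [B, 2]."""
    # Same expression as the TF code: the mean runs over B * 2 entries
    ctr_loss = -np.mean(np.log(y_hat) * target)
    # Accuracy compares both columns of the rounded prediction with the one-hot target
    accuracy = np.mean((np.round(y_hat) == target).astype(np.float32))
    return ctr_loss, accuracy


y_hat = np.array([[0.9, 0.1], [0.3, 0.7], [0.6, 0.4]]) + 1e-8
target = np.array([[1.0, 0.0], [0.0, 1.0], [0.0, 1.0]])
ctr_loss, accuracy = ctr_loss_and_accuracy(y_hat, target)
aux_loss = 0.5          # hypothetical value standing in for self.aux_loss
use_negsampling = True
loss = ctr_loss + aux_loss if use_negsampling else ctr_loss
```

Here the third sample is misclassified, so both of its columns mismatch and accuracy is 4/6.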

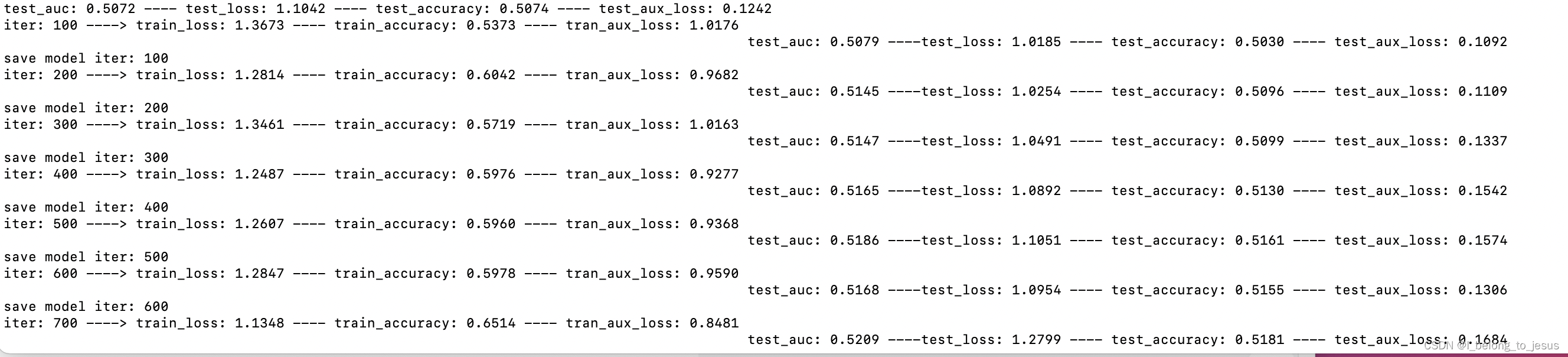

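Training the model

Run:

train.py train DIEN

This trains DIEN. On success, it prints the following output:

Of course, the code also implements other network structures. These are beyond the scope of this section and will be covered in other articles.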

Copyright belongs to the original author, I_belong_to_jesus. In case of infringement, please contact us for removal.