predict模式用于在新图像或视频上使用经过训练的

YOLOv8

模型进行预测,在此模式下,模型从

checkpoint

文件加载,用户可以提供图像或视频来执行推理。模型预测输入图像或视频中对象的类别和位置。

from ultralytics import YOLO

from PIL import Image

import cv2

model = YOLO("model.pt")# 接受所有格式-image/dir/Path/URL/video/PIL/ndarray。0用于网络摄像头

results = model.predict(source="0")

results = model.predict(source="folder", show=True)# 展示预测结果# from PIL

im1 = Image.open("bus.jpg")

results = model.predict(source=im1, save=True)# 保存绘制的图像# from ndarray

im2 = cv2.imread("bus.jpg")

results = model.predict(source=im2, save=True, save_txt=True)# 将预测保存为标签# from list of PIL/ndarray

results = model.predict(source=[im1, im2])

YOLOv8

预测模式可以为各种任务生成预测,在使用流模式时返回结果对象列表或结果对象的内存高效生成器。通过在预测器的调用方法中传递

stream=True

来启用流模式。

stream=True

的流媒体模式应用于长视频或大型预测源,否则结果将在内存中累积并最终导致内存不足错误。

inputs =[img, img]# list of numpy arrays

results = model(inputs, stream=True)# generator of Results objectsfor result in results:

boxes = result.boxes # Boxes object for bbox outputs

masks = result.masks # Masks object for segmentation masks outputs

probs = result.probs # Class probabilities for classification outputs

相关参数如下:

KeyValueDescription

source

'ultralytics/assets'

source directory for images or videos

conf

0.25

object confidence threshold for detection

iou

0.7

intersection over union (IoU) threshold for NMS

half

False

use half precision (FP16)

device

None

device to run on, i.e. cuda device=0/1/2/3 or device=cpu

show

False

show results if possible

save

False

save images with results

save_txt

False

save results as .txt file

save_conf

False

save results with confidence scores

save_crop

False

save cropped images with results

hide_labels

False

hide labels

hide_conf

False

hide confidence scores

max_det

300

maximum number of detections per image

vid_stride

False

video frame-rate stride

line_thickness

3

bounding box thickness (pixels)

visualize

False

visualize model features

augment

False

apply image augmentation to prediction sources

agnostic_nms

False

class-agnostic NMS

retina_masks

False

use high-resolution segmentation masks

classes

None

filter results by class, i.e. class=0, or class=[0,2,3]

boxes

True

Show boxes in segmentation predictions

YOLOv8

可以接受各种输入源,如下表所示。这包括图像、URL、PIL图像、OpenCV、numpy数组、torch张量、CSV文件、视频、目录、全局、YouTube视频和流。该表指示每个源是否可以在流模式下使用stream=True✅以及每个源的示例参数。

sourcemodel(arg)typenotesimage

'im.jpg'

str

,

Path

URL

'https://ultralytics.com/images/bus.jpg'

str

screenshot

'screen'

str

PIL

Image.open('im.jpg')

PIL.Image

HWC, RGBOpenCV

cv2.imread('im.jpg')[:,:,::-1]

np.ndarray

HWC, BGR to RGBnumpy

np.zeros((640,1280,3))

np.ndarray

HWCtorch

torch.zeros(16,3,320,640)

torch.Tensor

BCHW, RGBCSV

'sources.csv'

str

,

Path

RTSP, RTMP, HTTPvideo ✅

'vid.mp4'

str

,

Path

directory ✅

'path/'

str

,

Path

glob ✅

'path/*.jpg'

str

Use

*

operatorYouTube ✅

'https://youtu.be/Zgi9g1ksQHc'

str

stream ✅

'rtsp://example.com/media.mp4'

str

RTSP, RTMP, HTTP

图像类型

Image SuffixesExample Predict CommandReference.bmp

yolo predict source=image.bmp

Microsoft BMP File Format.dng

yolo predict source=image.dng

Adobe DNG.jpeg

yolo predict source=image.jpeg

JPEG.jpg

yolo predict source=image.jpg

JPEG.mpo

yolo predict source=image.mpo

Multi Picture Object.png

yolo predict source=image.png

Portable Network Graphics.tif

yolo predict source=image.tif

Tag Image File Format.tiff

yolo predict source=image.tiff

Tag Image File Format.webp

yolo predict source=image.webp

WebP.pfm

yolo predict source=image.pfm

Portable FloatMap

视频类型

Video SuffixesExample Predict CommandReference.asf

yolo predict source=video.asf

Advanced Systems Format.avi

yolo predict source=video.avi

Audio Video Interleave.gif

yolo predict source=video.gif

Graphics Interchange Format.m4v

yolo predict source=video.m4v

MPEG-4 Part 14.mkv

yolo predict source=video.mkv

Matroska.mov

yolo predict source=video.mov

QuickTime File Format.mp4

yolo predict source=video.mp4

MPEG-4 Part 14 - Wikipedia.mpeg

yolo predict source=video.mpeg

MPEG-1 Part 2.mpg

yolo predict source=video.mpg

MPEG-1 Part 2.ts

yolo predict source=video.ts

MPEG Transport Stream.wmv

yolo predict source=video.wmv

Windows Media Video.webm

yolo predict source=video.webm

WebM Project

预测结果对象包含以下组件:

Results.boxes:

— 具有用于操作边界框的属性和方法的boxes

Results.masks:

— 用于索引掩码或获取段坐标的掩码对象

Results.probs:

— 包含类概率或logits

Results.orig_img:

— 载入内存的原始图像

Results.path:

— 包含输入图像路径的路径

默认情况下,每个结果都由一个torch. Tensor组成,它允许轻松操作:

results = results.cuda()

results = results.cpu()

results = results.to('cpu')

results = results.numpy()

from ultralytics import YOLO

import cv2

from ultralytics.yolo.utils.benchmarks import benchmark

model = YOLO("yolov8-seg.yaml").load('yolov8n-seg.pt')

results = model.predict(r'E:\CS\DL\yolo\yolov8study\bus.jpg')

boxes = results[0].boxes

masks = results[0].masks

probs = results[0].probs

print(f"boxes:{boxes[0]}")print(f"masks:{masks.xy }")print(f"probs:{probs}")

output:

image 1/1 E:\CS\DL\yolo\yolov8study\bus.jpg: 640x480 4 0s, 15, 136, 25.9ms

Speed: 4.0ms preprocess, 25.9ms inference, 10.0ms postprocess per image at shape (1, 3, 640, 640)

WARNING 'Boxes.boxes' is deprecated. Use 'Boxes.data' instead.

boxes:ultralytics.yolo.engine.results.Boxes object with attributes:

boxes: tensor([[670.1221, 389.6674, 809.4929, 876.5032, 0.8875, 0.0000]], device='cuda:0')

cls: tensor([0.], device='cuda:0')

conf: tensor([0.8875], device='cuda:0')

data: tensor([[670.1221, 389.6674, 809.4929, 876.5032, 0.8875, 0.0000]], device='cuda:0')

id: None

is_track: False

orig_shape: tensor([1080, 810], device='cuda:0')

shape: torch.Size([1, 6])

xywh: tensor([[739.8075, 633.0853, 139.3708, 486.8358]], device='cuda:0')

xywhn: tensor([[0.9133, 0.5862, 0.1721, 0.4508]], device='cuda:0')

xyxy: tensor([[670.1221, 389.6674, 809.4929, 876.5032]], device='cuda:0')

xyxyn: tensor([[0.8273, 0.3608, 0.9994, 0.8116]], device='cuda:0')

masks:[array([[804.94, 391.5],

[794.81, 401.62],

[794.81, 403.31],

[791.44, 406.69],

......

probs:None

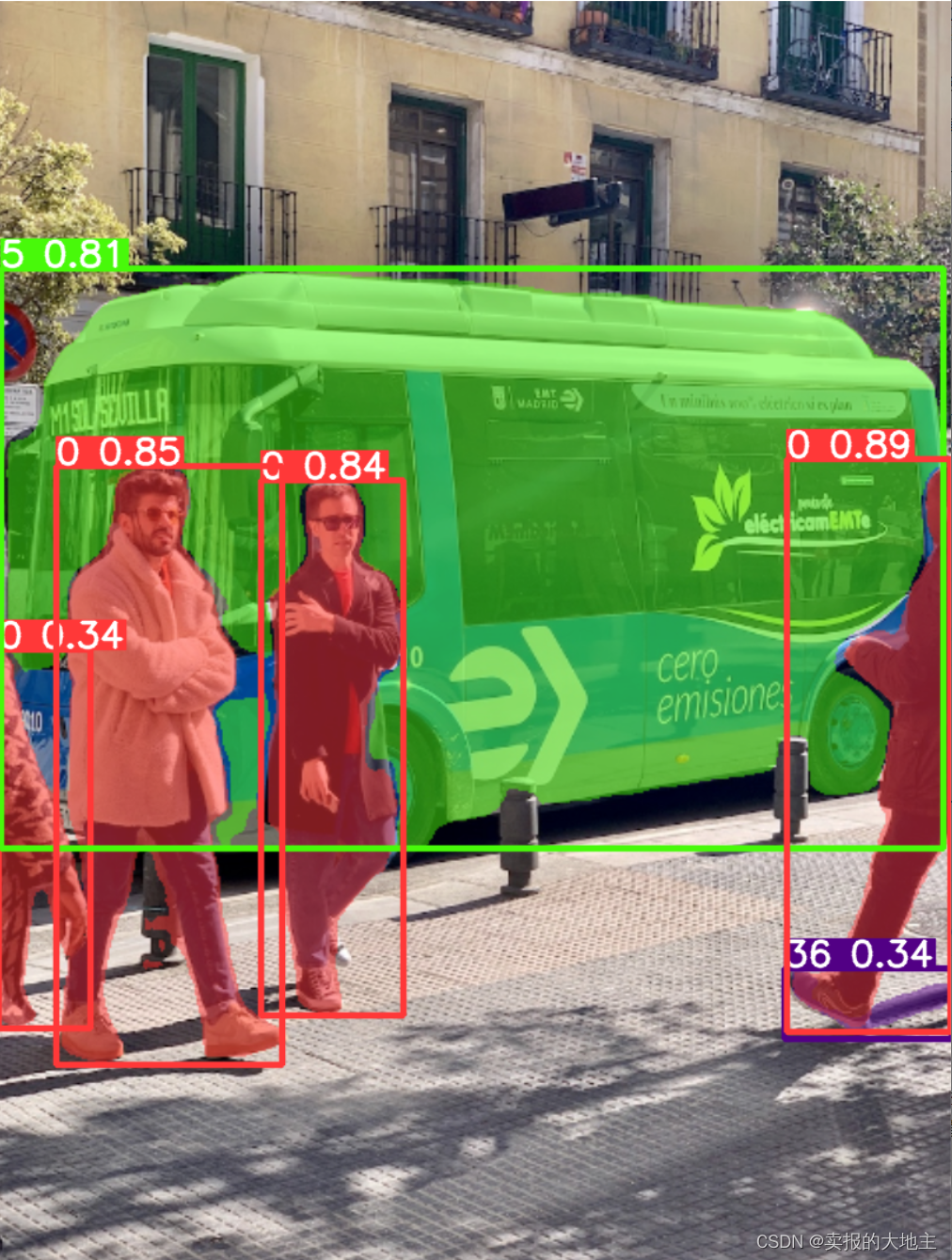

我们可以使用Result对象的

plot()

函数在图像对象中绘制结果。它绘制在结果对象中找到的所有组件(框、掩码、分类日志等)

annotated_frame = results[0].plot()# Display the annotated frame

cv2.imshow("YOLOv8 Inference", annotated_frame)

cv2.waitKey()

cv2.destroyAllWindows()

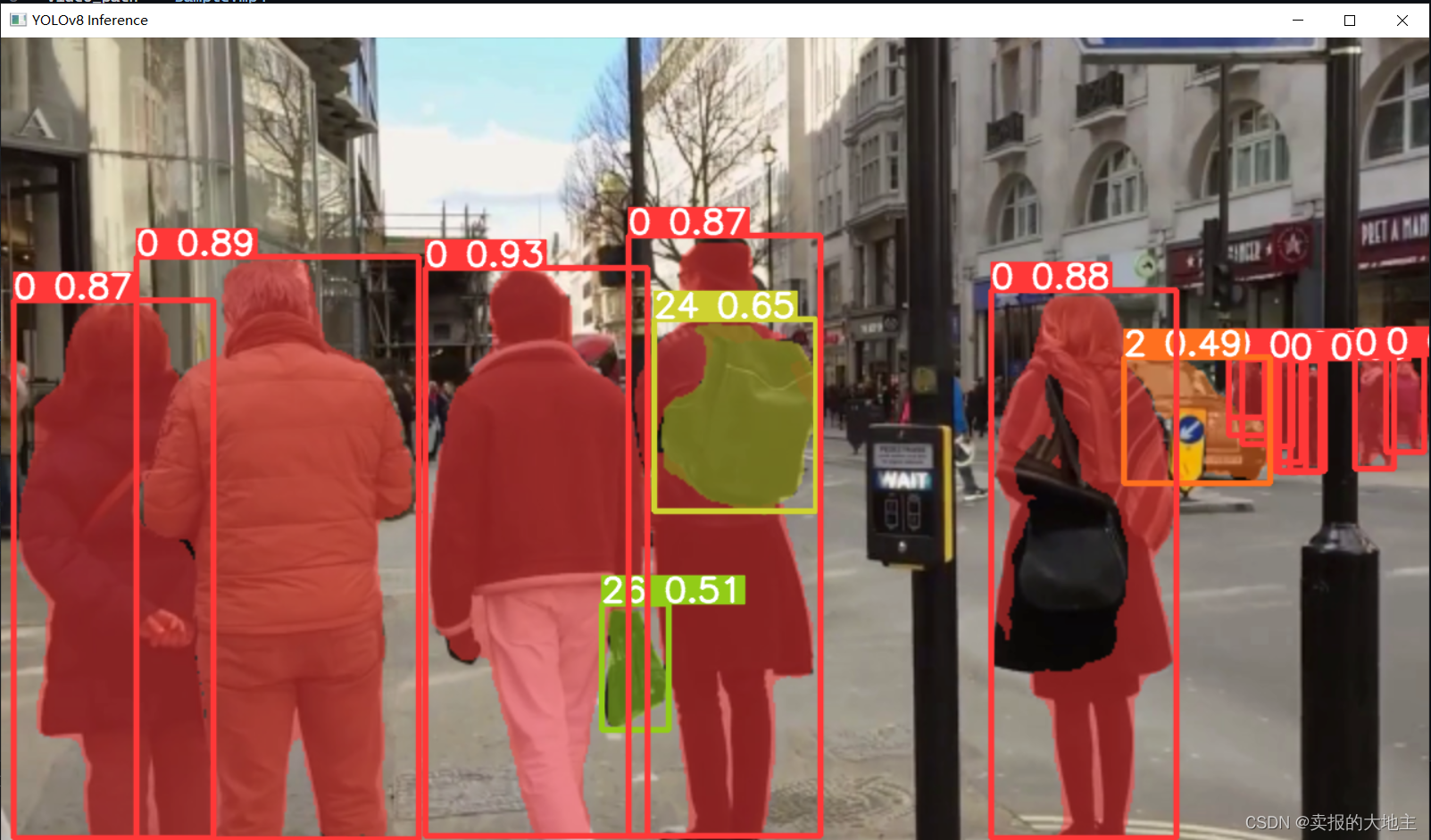

使用OpenCV(cv2)和YOLOv8对视频帧运行推理的Python脚本。

import cv2

from ultralytics import YOLO

# Load the YOLOv8 model

model = model = YOLO("yolov8-seg.yaml").load('yolov8n-seg.pt')# Open the video file

video_path ="sample.mp4"

cap = cv2.VideoCapture(video_path)# Loop through the video frameswhile cap.isOpened():# Read a frame from the video

success, frame = cap.read()if success:# Run YOLOv8 inference on the frame

results = model(frame)# Visualize the results on the frame

annotated_frame = results[0].plot()# Display the annotated frame

cv2.imshow("YOLOv8 Inference", annotated_frame)# Break the loop if 'q' is pressedif cv2.waitKey(1)&0xFF==ord("q"):breakelse:# Break the loop if the end of the video is reachedbreak# Release the video capture object and close the display window

cap.release()

cv2.destroyAllWindows()

版权归原作者 卖报的大地主 所有, 如有侵权,请联系我们删除。