1. Download locations for the required packages:

CentOS-7-x86_64-DVD-2207-02.iso:

https://mirrors.tuna.tsinghua.edu.cn/centos/7/isos/x86_64/

jdk-8u181-linux-x64.tar.gz:

https://repo.huaweicloud.com/java/jdk/8u181-b13/

Hadoop:

https://mirrors.tuna.tsinghua.edu.cn/apache/hadoop/common/hadoop-3.2.4/

MySQL (mysql-5.7.25-1.el7.x86_64.rpm-bundle.tar):

https://downloads.mysql.com/archives/community/

MySQL connector (mysql-connector-java-5.1.47.tar.gz):

https://downloads.mysql.com/archives/c-j/

Hive:

https://dlcdn.apache.org/hive/hive-3.1.2/

XSHELL:

https://www.xshell.com/zh/free-for-home-school/

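2. Prepare the files

Create a software folder under the root directory: mkdir /software

Transfer the downloaded archives into the /software folder.

Extraction commands:

.gz files: tar -zxvf <file name>

MySQL bundle: tar -xvf <file name>

Rename a folder: mv <source folder> <target folder>

The later sections assume the extracted folders have been renamed to /software/jdk, /software/hadoop and /software/hive.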

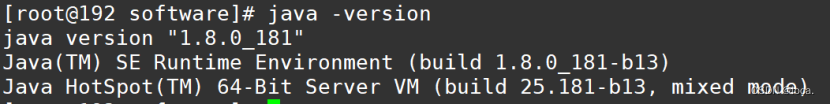
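The extract-then-rename step can be sketched as one small shell function. This is only a sketch: the function name unpack_as is hypothetical, and it assumes each archive contains a single top-level folder and that tar detects gzip compression on its own when reading (as GNU tar does).

```shell
# Extract ARCHIVE into DEST_DIR and rename its top-level folder to NEW_NAME.
# usage: unpack_as ARCHIVE DEST_DIR NEW_NAME
unpack_as() {
    archive=$1
    dest=$2
    new_name=$3

    # Top-level folder name, taken from the first entry listed in the archive.
    top=$(tar -tf "$archive" | awk -F/ 'NR == 1 { print $1 }')
    [ -n "$top" ] || return 1

    # -x extract, -f read from the given file, -C unpack into DEST_DIR.
    tar -xf "$archive" -C "$dest" || return 1

    # Give the folder the short, version-free name used in later paths.
    if [ "$top" != "$new_name" ]; then
        mv "$dest/$top" "$dest/$new_name"
    fi
}

# Example:
#   unpack_as /software/jdk-8u181-linux-x64.tar.gz /software jdk
```

Renaming to a version-free name keeps the paths in /etc/profile stable if a package is later replaced by another version.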

3. Deploy the JDK

Open the profile: vi /etc/profile

Append at the bottom of the file:

export JAVA_HOME=/software/jdk

export PATH=$PATH:$JAVA_HOME/bin

Save and exit, then apply the changes: source /etc/profile

Check the Java version: java -version

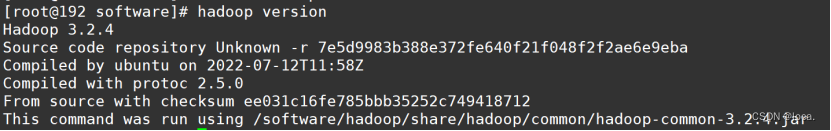
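Since /etc/profile is edited again in the Hadoop and Hive sections, appending lines by hand can leave duplicates if a step is repeated. A minimal sketch of an idempotent append follows; the helper name append_once is hypothetical and not part of any tool used here.

```shell
# Append LINE to FILE only if that exact line is not already present.
# usage: append_once FILE LINE
append_once() {
    file=$1
    line=$2
    touch "$file" || return 1
    # -q quiet, -x match the whole line, -F treat the pattern as a fixed string
    if ! grep -qxF -- "$line" "$file"; then
        printf '%s\n' "$line" >> "$file"
    fi
}

# Example (as root; single quotes keep $PATH and $JAVA_HOME unexpanded):
#   append_once /etc/profile 'export JAVA_HOME=/software/jdk'
#   append_once /etc/profile 'export PATH=$PATH:$JAVA_HOME/bin'
```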

4. Deploy Hadoop

Open the profile: vi /etc/profile

Append at the bottom of the file:

export HADOOP_HOME=/software/hadoop

export PATH=$PATH:$HADOOP_HOME/bin:$HADOOP_HOME/sbin

Save and exit, then apply the changes: source /etc/profile

Check the Hadoop version: hadoop version

Configure passwordless SSH:

ssh-keygen -t rsa -P '' -f ~/.ssh/id_rsa

cat ~/.ssh/id_rsa.pub >> ~/.ssh/authorized_keys

chmod 0600 ~/.ssh/authorized_keys
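The key-authorization step can also be wrapped in a function that is safe to run more than once. This is a sketch under assumptions: the name authorize_key is hypothetical, and the 700/600 permissions reflect what sshd usually accepts with its default strict mode checks.

```shell
# Add the public key in PUBKEY_FILE to SSH_DIR/authorized_keys (once)
# and tighten the permissions on the directory and the file.
# usage: authorize_key PUBKEY_FILE SSH_DIR
authorize_key() {
    pub=$1
    dir=$2
    auth=$dir/authorized_keys

    [ -r "$pub" ] || { echo "missing public key: $pub" >&2; return 1; }
    mkdir -p "$dir" || return 1
    chmod 700 "$dir" || return 1
    touch "$auth" || return 1

    # Append the key only if the identical line is not there yet.
    grep -qxF -- "$(cat "$pub")" "$auth" || cat "$pub" >> "$auth"
    chmod 600 "$auth"
}

# Example:
#   authorize_key ~/.ssh/id_rsa.pub ~/.ssh
# Afterwards, this should succeed without a password prompt:
#   ssh -o BatchMode=yes localhost true
```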

Go to the /software/hadoop/etc/hadoop folder.

Append to the end of hadoop-env.sh:

export JAVA_HOME=/software/jdk

export HDFS_NAMENODE_USER=root

export HDFS_SECONDARYNAMENODE_USER=root

export HDFS_DATANODE_USER=root

Append to the end of yarn-env.sh:

export YARN_REGISTRYDNS_SECURE_USER=root

export YARN_RESOURCEMANAGER_USER=root

export YARN_NODEMANAGER_USER=root

Edit core-site.xml:

<configuration>
  <property>
    <name>fs.defaultFS</name>
    <value>hdfs://localhost:9000</value>
  </property>
  <property>
    <name>hadoop.tmp.dir</name>
    <value>/opt/hadoop/tmp</value>
  </property>
  <property>
    <name>hadoop.proxyuser.root.hosts</name>
    <value>*</value>
  </property>
  <property>
    <name>hadoop.proxyuser.root.groups</name>
    <value>*</value>
  </property>
</configuration>

Edit hdfs-site.xml:

<configuration>
  <property>
    <name>dfs.replication</name>
    <value>1</value>
  </property>
</configuration>

Edit mapred-site.xml:

<configuration>
  <property>
    <name>mapreduce.framework.name</name>
    <value>yarn</value>
  </property>
  <property>
    <name>mapreduce.application.classpath</name>
    <value>$HADOOP_MAPRED_HOME/share/hadoop/mapreduce/*:$HADOOP_MAPRED_HOME/share/hadoop/mapreduce/lib/*</value>
  </property>
</configuration>

Edit yarn-site.xml:

<configuration>
  <property>
    <name>yarn.nodemanager.aux-services</name>
    <value>mapreduce_shuffle</value>
  </property>
  <property>
    <name>yarn.nodemanager.env-whitelist</name>
    <value>JAVA_HOME,HADOOP_COMMON_HOME,HADOOP_HDFS_HOME,HADOOP_CONF_DIR,CLASSPATH_PREPEND_DISTCACHE,HADOOP_YARN_HOME,HADOOP_MAPRED_HOME</value>
  </property>
</configuration>

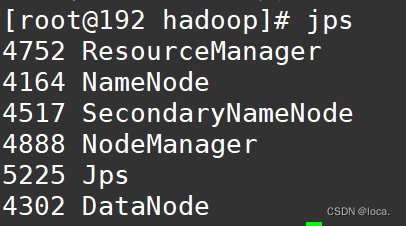
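All four site files share the same configuration/property/name/value layout, so they can be generated instead of typed. The sketch below shows one way to do that from name/value pairs; write_site_xml is a hypothetical helper, and it does not escape XML special characters, so it only suits simple values like the ones above.

```shell
# Write a Hadoop-style site file from NAME VALUE pairs.
# usage: write_site_xml FILE NAME VALUE [NAME VALUE ...]
write_site_xml() {
    out=$1
    shift
    {
        printf '<?xml version="1.0"?>\n'
        printf '<configuration>\n'
        # Consume the remaining arguments two at a time.
        while [ "$#" -ge 2 ]; do
            printf '  <property>\n'
            printf '    <name>%s</name>\n' "$1"
            printf '    <value>%s</value>\n' "$2"
            printf '  </property>\n'
            shift 2
        done
        printf '</configuration>\n'
    } > "$out"
}

# Example:
#   write_site_xml /software/hadoop/etc/hadoop/hdfs-site.xml dfs.replication 1
```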

Format HDFS:

hdfs namenode -format

Start all daemons: start-all.sh

Check the running processes: jps
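A quick way to confirm the start-up worked is to compare the jps listing against the daemons a single-node setup is expected to run. The sketch below assumes the usual five (NameNode, DataNode, SecondaryNameNode, ResourceManager, NodeManager); the function name missing_daemons is hypothetical.

```shell
# Read `jps` output ("PID Name" per line) on stdin and print each expected
# daemon that is absent. Returns 0 if all are present, 1 otherwise.
missing_daemons() {
    # Collect the process names into one space-delimited string.
    seen=" $(awk '{ printf "%s ", $2 }')"
    rc=0
    for d in NameNode DataNode SecondaryNameNode ResourceManager NodeManager; do
        # The surrounding spaces force a whole-word match, so NameNode
        # is not satisfied by SecondaryNameNode.
        case "$seen" in
            *" $d "*) ;;
            *) echo "$d"; rc=1 ;;
        esac
    done
    return $rc
}

# Example:
#   jps | missing_daemons
```

If anything is printed, the log files under /software/hadoop/logs are the usual place to look next.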

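5. Deploy MySQL

Check whether mariadb is installed:

[root@localhost ~]# rpm -aq | grep mariadb

The installed mariadb packages are listed.

Remove the mariadb packages:

[root@localhost ~]# rpm -e --nodeps mariadb-libs-<version shown above>

Install the dependencies:

yum install perl -y

yum install net-tools -y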

Run the following commands in order (the version in the file names must match the bundle you downloaded):

rpm -ivh mysql-community-common-5.7.26-1.el7.x86_64.rpm

rpm -ivh mysql-community-libs-5.7.26-1.el7.x86_64.rpm

rpm -ivh mysql-community-libs-compat-5.7.26-1.el7.x86_64.rpm

rpm -ivh mysql-community-client-5.7.26-1.el7.x86_64.rpm

rpm -ivh mysql-community-server-5.7.26-1.el7.x86_64.rpm
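The download list names a 5.7.25 bundle while the commands use 5.7.26, so it may help to derive the file names from a single version value. The sketch below only prints the commands in the same order as above; mysql_rpm_commands is a hypothetical helper, and the version passed to it is an assumption to be replaced with the one actually downloaded.

```shell
# Print the rpm install commands for one MySQL version, in dependency order.
# usage: mysql_rpm_commands VERSION
mysql_rpm_commands() {
    version=$1
    for pkg in common libs libs-compat client server; do
        echo "rpm -ivh mysql-community-$pkg-$version-1.el7.x86_64.rpm"
    done
}

# Example: review the output first, then pipe it to sh to run it.
#   mysql_rpm_commands 5.7.26
#   mysql_rpm_commands 5.7.26 | sh
```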

Start MySQL:

systemctl start mysqld

Check its status:

systemctl status mysqld

Look up the temporary password that was generated on first start:

grep "temporary password" /var/log/mysqld.log
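To pull out just the password rather than the whole log line, the last field of the matching line can be printed. This sketch assumes the MySQL 5.7 log format, where the line ends with "root@localhost: " followed by the password; the function name mysql_temp_password is hypothetical.

```shell
# Print the most recent temporary root password found in a mysqld log.
# usage: mysql_temp_password [LOGFILE]
mysql_temp_password() {
    log=${1:-/var/log/mysqld.log}
    # Keep the last matching line and print its final whitespace-separated field.
    grep 'temporary password' "$log" | tail -n 1 | awk '{ print $NF }'
}

# Example:
#   mysql_temp_password
```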

Log in to MySQL with the temporary password:

mysql -uroot -p

Set the password policy to low:

mysql> set global validate_password_policy=0;

Set the minimum password length to 6:

mysql> set global validate_password_length=6;

Change the local root password:

mysql> alter user 'root'@'localhost' identified by '123456';

Allow root to connect remotely from any host (otherwise Hive cannot connect to MySQL later):

mysql> grant all privileges on *.* to 'root'@'%' identified by '123456' with grant option;

Reload the privileges:

mysql> flush privileges;

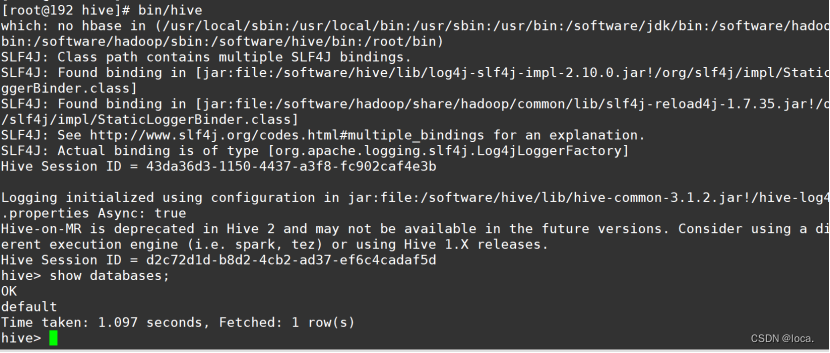

6. Deploy Hive

Append to the end of /etc/profile:

export HIVE_HOME=/software/hive

export PATH=$PATH:$HIVE_HOME/bin

Apply the changes:

source /etc/profile

Go to the configuration folder: /software/hive/conf

cp hive-env.sh.template hive-env.sh

Append to the end of hive-env.sh:

export JAVA_HOME=/software/jdk

export HADOOP_HOME=/software/hadoop

export HIVE_CONF_DIR=/software/hive/conf

vi hive-site.xml

Paste the following (replace the IP address with that of your virtual machine):

<configuration>
  <property>
    <name>javax.jdo.option.ConnectionURL</name>
    <value>jdbc:mysql://192.168.59.128:3306/hive?createDatabaseIfNotExist=true</value>
  </property>
  <property>
    <name>javax.jdo.option.ConnectionDriverName</name>
    <value>com.mysql.jdbc.Driver</value>
  </property>
  <property>
    <name>javax.jdo.option.ConnectionUserName</name>
    <value>root</value>
  </property>
  <property>
    <name>javax.jdo.option.ConnectionPassword</name>
    <value>123456</value>
  </property>
  <property>
    <name>hive.exec.local.scratchdir</name>
    <value>/tmp/hive</value>
  </property>
  <property>
    <name>hive.downloaded.resources.dir</name>
    <value>/tmp/${hive.session.id}_resources</value>
  </property>
  <property>
    <name>hive.querylog.location</name>
    <value>/tmp/hive</value>
  </property>
  <property>
    <name>hive.server2.logging.operation.log.location</name>
    <value>/tmp/hive/operation_logs</value>
  </property>
  <property>
    <name>hive.server2.enable.doAs</name>
    <value>FALSE</value>
  </property>
</configuration>

Dependency jars:

cp mysql-connector-java-5.1.47.jar /software/hive/lib/

mv /software/hive/lib/guava-19.0.jar{,.bak}

cp /software/hadoop/share/hadoop/hdfs/lib/guava-27.0-jre.jar /software/hive/lib/
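The Guava swap replaces the older jar bundled with Hive by the one Hadoop ships, so both load the same version. A sketch that does this without hard-coding version numbers follows; swap_guava is a hypothetical helper, and it assumes the jars follow the guava-*.jar naming seen above.

```shell
# Park Hive's Guava jar(s) as .bak and copy in the one(s) from Hadoop.
# usage: swap_guava HIVE_LIB_DIR HADOOP_LIB_DIR
swap_guava() {
    hive_lib=$1
    hadoop_lib=$2

    # Make sure Hadoop actually has a Guava jar before touching Hive's copy.
    set -- "$hadoop_lib"/guava-*.jar
    [ -e "$1" ] || { echo "no guava jar in $hadoop_lib" >&2; return 1; }

    # Rename Hive's current Guava jar(s) so they are no longer loaded.
    for old in "$hive_lib"/guava-*.jar; do
        [ -e "$old" ] || continue
        mv "$old" "$old.bak"
    done

    # "$@" now holds the Hadoop jar path(s) found above.
    cp "$@" "$hive_lib"/
}

# Example:
#   swap_guava /software/hive/lib /software/hadoop/share/hadoop/hdfs/lib
```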

Initialize the metastore schema:

schematool -dbType mysql -initSchema

Go to the hive folder and start Hive:

bin/hive

At the prompt, enter: show databases;

Copyright belongs to the original author, loca. If there is any infringement, please contact us for removal.