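Recently I had some free time and wanted to review how Kafka works. What I studied before was an older (pre-2.0) Kafka release, which needed ZooKeeper as its foundation, dedicated to storing Kafka's metadata such as brokers, consumers and topics. But when I went to download it, I found Kafka had already reached version 3.3. Time really flies, and the releases come fast! Then again, programmers are supposed to keep learning for life, right? Enough talk, let's get to it! By the way: KRaft mode, in which Kafka manages its own metadata without ZooKeeper, has been available as early access since 2.8 and was marked production-ready for new clusters in 3.3. This saves resources, and you no longer have to install ZooKeeper separately when you don't need it!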

Deploying a Kafka KRaft cluster with docker-compose

Environment

Programmers, you know the drill: an Alibaba Cloud server with 1 CPU core and 2 GB of RAM.

1. Server environment

[root@Genterator ~]# cat /proc/cpuinfo | grep name | cut -f2 -d: | uniq -c

1 Intel(R) Xeon(R) Platinum 8269CY CPU @ 2.50GHz

[root@Genterator ~]# cat /proc/meminfo | grep MemTotal

MemTotal: 1790344 kB

[root@Genterator ~]# free -h

total used free shared buff/cache available

Mem: 1.7Gi 1.4Gi 134Mi 0.0Ki 212Mi 204Mi

Swap: 2.5Gi 114Mi 2.4Gi

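By default each Kafka broker gets a 1 GB heap (kafka-server-start.sh falls back to -Xmx1G -Xms1G when KAFKA_HEAP_OPTS is not set), so three brokers would need 3 GB of heap alone. Broke programmers like us have to let the disk stand in for memory! Here I use Linux swap: ==when the VM runs short of memory, part of the disk is used as virtual memory to make up the shortfall.== It is much slower than real RAM, but good enough for learning and testing!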
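The post doesn't show how the swap space was created. A minimal sketch for adding a swap file follows; the path `/swapfile` and the 2G size are my assumptions (the `free -h` output above shows 2.5Gi of swap, however it was set up), and `DRY_RUN=1` only prints the commands instead of running them. The real run needs root.

```bash
#!/usr/bin/env bash
# Sketch: add a swap file so the brokers can spill over when RAM runs out.
# Assumptions: the SWAPFILE and SWAPSIZE defaults are examples, not the author's settings.
set -euo pipefail

SWAPFILE="${SWAPFILE:-/swapfile}"
SWAPSIZE="${SWAPSIZE:-2G}"

# Run a command, or just print it when DRY_RUN=1.
run() {
  if [ "${DRY_RUN:-0}" = "1" ]; then
    echo "$*"
  else
    "$@"
  fi
}

make_swap() {
  run fallocate -l "$SWAPSIZE" "$SWAPFILE"  # reserve the disk space
  run chmod 600 "$SWAPFILE"                 # only root may read swap contents
  run mkswap "$SWAPFILE"                    # write the swap signature
  run swapon "$SWAPFILE"                    # start using it right away
}
```

Run `make_swap` as root and check the result with `free -h`. To keep the swap after a reboot, add `/swapfile none swap sw 0 0` to `/etc/fstab`. If the filesystem doesn't support `fallocate`, `dd if=/dev/zero of=/swapfile bs=1M count=2048` is the usual fallback.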

2. Docker-compose

[root@Genterator ~]# docker-compose -v

docker-compose version 1.27.4, build 40524192

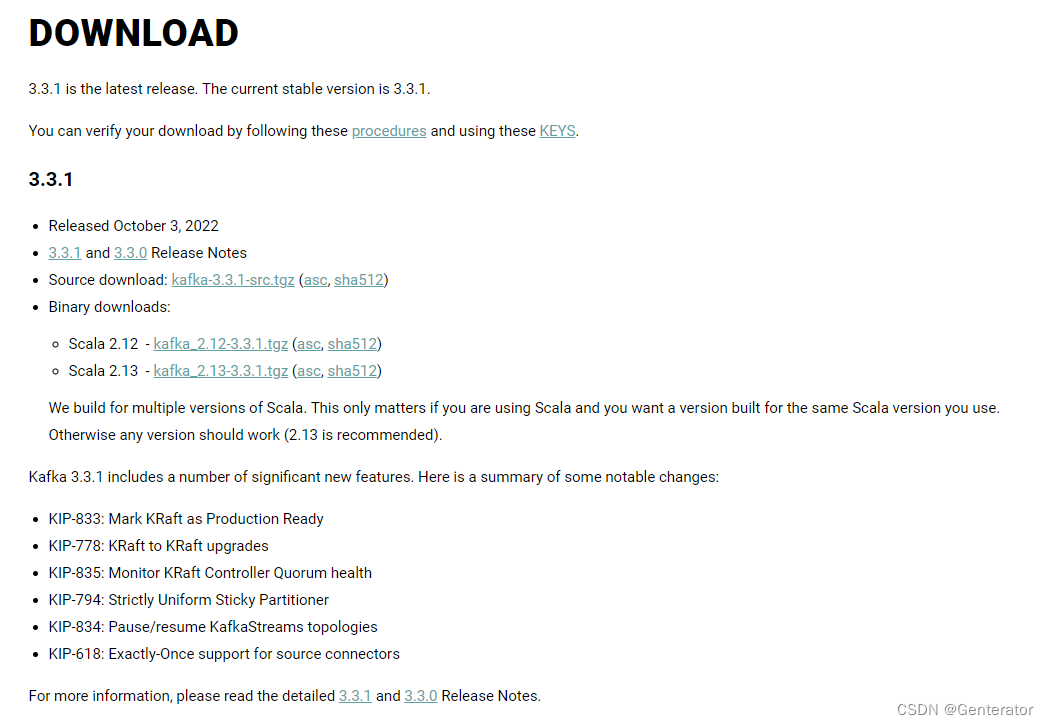

3. Kafka

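I'm using the latest Kafka, so while reviewing I can also try out the new version's performance and features. To repeat: the new version no longer needs ZooKeeper!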

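Since the Kafka project did not publish an official Docker image at the time of writing, I use bitnami/kafka from Docker Hub. It has a lot of users, so most of the pitfalls have probably been found already, which makes it comparatively safe and stable.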

Deployment files

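1. docker-compose.yml

No small talk, here is the config file. Save it as docker-compose.yml: `docker-compose up -d` looks for that file name in the current directory by default, and any other name has to be passed with `-f`.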

version: "3.6"
services:
  kafka1:
    container_name: kafka1
    image: 'bitnami/kafka:3.3.1'
    user: root
    ports:
      - '19092:9092'
      - '19093:9093'
    environment:
      # Enable KRaft mode
      - KAFKA_ENABLE_KRAFT=yes
      - KAFKA_CFG_PROCESS_ROLES=broker,controller
      - KAFKA_CFG_CONTROLLER_LISTENER_NAMES=CONTROLLER
      # Listener sockets inside the container (Docker-internal address and port)
      - KAFKA_CFG_LISTENERS=PLAINTEXT://:9092,CONTROLLER://:9093
      # Security protocol of each listener
      - KAFKA_CFG_LISTENER_SECURITY_PROTOCOL_MAP=CONTROLLER:PLAINTEXT,PLAINTEXT:PLAINTEXT
      # Address advertised to external clients (host IP and mapped port)
      - KAFKA_CFG_ADVERTISED_LISTENERS=PLAINTEXT://11.21.13.15:19092
      - KAFKA_BROKER_ID=1
      - KAFKA_KRAFT_CLUSTER_ID=iZWRiSqjZAlYwlKEqHFQWI
      - KAFKA_CFG_CONTROLLER_QUORUM_VOTERS=1@172.23.0.11:9093,2@172.23.0.12:9093,3@172.23.0.13:9093
      - ALLOW_PLAINTEXT_LISTENER=yes
      # Maximum and initial heap size of the broker
      - KAFKA_HEAP_OPTS=-Xmx512M -Xms256M
    volumes:
      - /opt/volume/kafka/broker01:/bitnami/kafka:rw
    networks:
      netkafka:
        ipv4_address: 172.23.0.11
  kafka2:
    container_name: kafka2
    image: 'bitnami/kafka:3.3.1'
    user: root
    ports:
      - '29092:9092'
      - '29093:9093'
    environment:
      - KAFKA_ENABLE_KRAFT=yes
      - KAFKA_CFG_PROCESS_ROLES=broker,controller
      - KAFKA_CFG_CONTROLLER_LISTENER_NAMES=CONTROLLER
      - KAFKA_CFG_LISTENERS=PLAINTEXT://:9092,CONTROLLER://:9093
      - KAFKA_CFG_LISTENER_SECURITY_PROTOCOL_MAP=CONTROLLER:PLAINTEXT,PLAINTEXT:PLAINTEXT
      - KAFKA_CFG_ADVERTISED_LISTENERS=PLAINTEXT://11.21.13.15:29092 # change to your host IP
      - KAFKA_BROKER_ID=2
      - KAFKA_KRAFT_CLUSTER_ID=iZWRiSqjZAlYwlKEqHFQWI # unique cluster ID, identical on all three nodes
      - KAFKA_CFG_CONTROLLER_QUORUM_VOTERS=1@172.23.0.11:9093,2@172.23.0.12:9093,3@172.23.0.13:9093
      - ALLOW_PLAINTEXT_LISTENER=yes
      - KAFKA_HEAP_OPTS=-Xmx512M -Xms256M
    volumes:
      - /opt/volume/kafka/broker02:/bitnami/kafka:rw
    networks:
      netkafka:
        ipv4_address: 172.23.0.12
  kafka3:
    container_name: kafka3
    image: 'bitnami/kafka:3.3.1'
    user: root
    ports:
      - '39092:9092'
      - '39093:9093'
    environment:
      - KAFKA_ENABLE_KRAFT=yes
      - KAFKA_CFG_PROCESS_ROLES=broker,controller
      - KAFKA_CFG_CONTROLLER_LISTENER_NAMES=CONTROLLER
      - KAFKA_CFG_LISTENERS=PLAINTEXT://:9092,CONTROLLER://:9093
      - KAFKA_CFG_LISTENER_SECURITY_PROTOCOL_MAP=CONTROLLER:PLAINTEXT,PLAINTEXT:PLAINTEXT
      - KAFKA_CFG_ADVERTISED_LISTENERS=PLAINTEXT://11.21.13.15:39092 # change to your host IP
      - KAFKA_BROKER_ID=3
      - KAFKA_KRAFT_CLUSTER_ID=iZWRiSqjZAlYwlKEqHFQWI
      - KAFKA_CFG_CONTROLLER_QUORUM_VOTERS=1@172.23.0.11:9093,2@172.23.0.12:9093,3@172.23.0.13:9093
      - ALLOW_PLAINTEXT_LISTENER=yes
      - KAFKA_HEAP_OPTS=-Xmx512M -Xms256M
    volumes:
      - /opt/volume/kafka/broker03:/bitnami/kafka:rw
    networks:
      netkafka:
        ipv4_address: 172.23.0.13
networks:
  netkafka:
    driver: bridge
    name: netkafka
    ipam:
      driver: default
      config:
        - subnet: 172.23.0.0/25
          gateway: 172.23.0.1

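**Note: the IP 11.21.13.15 is made up; it stands for my Alibaba Cloud server's public IP. Any resemblance is purely coincidental! Replace it with your own host's IP.**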
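Two values in the file must agree across all three nodes: the cluster ID and the controller quorum voter list (`id@host:port` for every controller). A hedged sketch of helpers for producing them: Kafka itself ships `kafka-storage.sh random-uuid` (in the container's `bin` directory) for a new cluster ID, and the pure-shell `new_cluster_id` below only imitates that format (16 random bytes, URL-safe Base64 without padding, 22 characters). The function names and the `172.23.0.1x` addressing in `quorum_voters` are my own assumptions, taken from this compose file.

```bash
#!/usr/bin/env bash
# Sketch: generate the KRaft values that must match on every node.
# Assumption: node i sits at ${prefix}$((10 + i)), i.e. 172.23.0.11-13 in this compose file.
set -euo pipefail

# Imitates the cluster ID format: 16 random bytes, URL-safe Base64, padding removed (22 chars).
# Inside a container, `kafka-storage.sh random-uuid` is the tool Kafka itself ships for this.
new_cluster_id() {
  local id
  while :; do
    id="$(head -c 16 /dev/urandom | base64 | tr '+/' '-_' | tr -d '=\n')"
    # retry on a leading '-', which a command-line parser could mistake for an option
    [ "${id#-}" = "$id" ] && break
  done
  printf '%s\n' "$id"
}

# Builds the controller.quorum.voters value: id@host:port for nodes 1..count.
quorum_voters() {
  local count="$1" prefix="${2:-172.23.0.}" port="${3:-9093}"
  local voters="" i
  for ((i = 1; i <= count; i++)); do
    voters+="${voters:+,}${i}@${prefix}$((10 + i)):${port}"
  done
  printf '%s\n' "$voters"
}
```

For example, `quorum_voters 3` prints `1@172.23.0.11:9093,2@172.23.0.12:9093,3@172.23.0.13:9093`. Generate the cluster ID once and paste the same value into all three services; a different ID on one node would stop it from joining the others.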

2. Run the command

[root@Genterator ~]# docker-compose up -d

kafka1...done

kafka2...done

kafka3...done

Check that Kafka is running properly

[root@Genterator ~]# docker ps

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

606a05866b2a bitnami/kafka:3.3.1 "/opt/bitnami/script…" 5 hours ago Up 5 hours 0.0.0.0:39092->9092/tcp, 0.0.0.0:39093->9093/tcp kafka3

57f0ff2fb97d bitnami/kafka:3.3.1 "/opt/bitnami/script…" 5 hours ago Up 5 hours 0.0.0.0:19092->9092/tcp, 0.0.0.0:19093->9093/tcp kafka1

98a8b542a8bb bitnami/kafka:3.3.1 "/opt/bitnami/script…" 5 hours ago Up 5 hours 0.0.0.0:29092->9092/tcp, 0.0.0.0:29093->9093/tcp kafka2
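Eyeballing `docker ps` works for three containers. A small sketch that does the check for you reads names from `docker ps --format '{{.Names}}'` (which lists running containers only) and reports every expected name that is missing; the function name and the default `kafka1`..`kafka3` list are my own choices.

```bash
#!/usr/bin/env bash
# Sketch: report expected containers that are absent from the running list on stdin.
# Usage: docker ps --format '{{.Names}}' | missing_containers [name...]
set -euo pipefail

missing_containers() {
  local running name missing=0
  running="$(cat)"                                   # running container names, one per line
  [ "$#" -gt 0 ] || set -- kafka1 kafka2 kafka3      # default: the three brokers of this post
  for name in "$@"; do
    if ! grep -qx -- "$name" <<<"$running"; then
      echo "not running: $name"
      missing=1
    fi
  done
  return "$missing"                                  # non-zero if anything is missing
}
```

For example, `docker ps --format '{{.Names}}' | missing_containers && echo "all brokers running"`. A running container doesn't prove the broker joined the quorum, so `docker logs kafka1` is still worth a look.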

Testing

Pick one Kafka node, enter its container, and go to the Kafka installation directory /opt/bitnami/kafka:

[root@Genterator ~]# docker exec -it kafka1 /bin/bash

root@57f0ff2fb97d:cd /opt/bitnami/kafka

root@57f0ff2fb97d:/opt/bitnami/kafka# ls

LICENSE NOTICE bin config libs licenses logs site-docs

root@57f0ff2fb97d:/opt/bitnami/kafka# cd bin

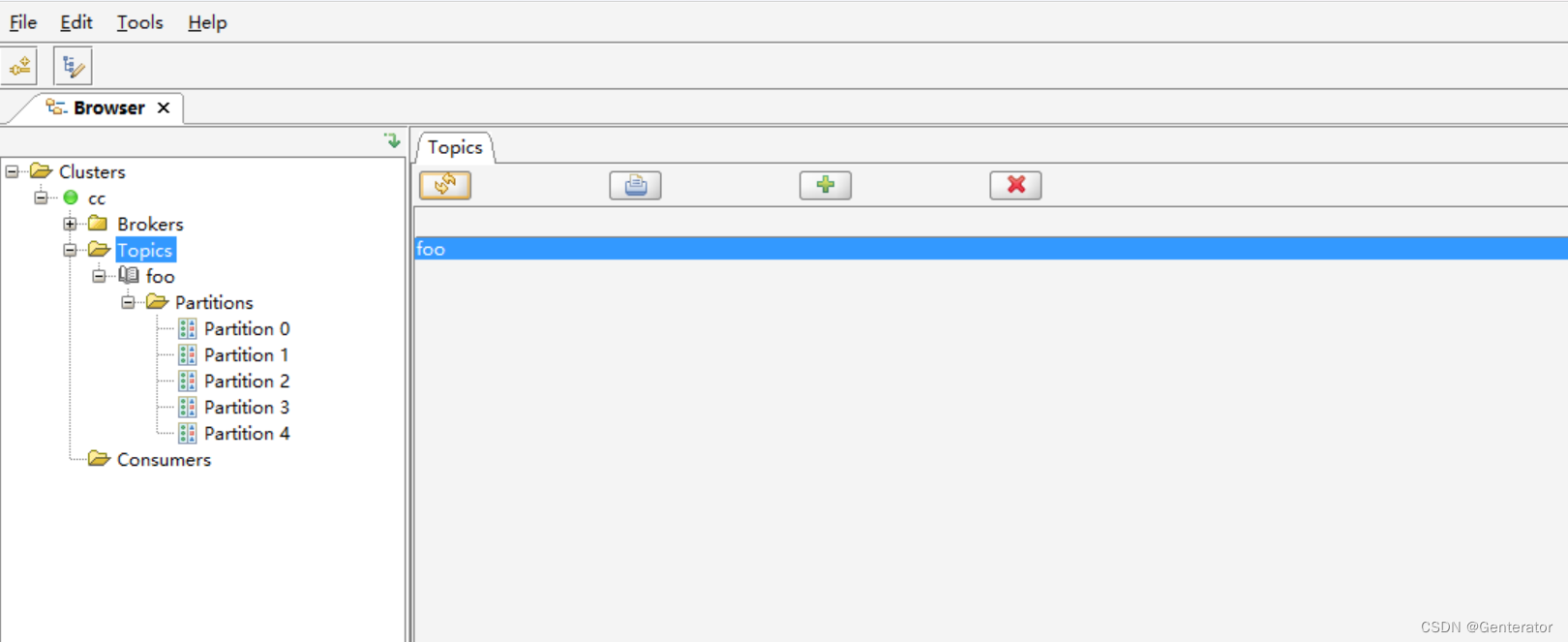

1. Create a topic

# Create a topic with replication factor 3 and 5 partitions

root@57f0ff2fb97d:/opt/bitnami/kafka/bin# ./kafka-topics.sh --create --topic foo --partitions 5 --replication-factor 3 --bootstrap-server kafka1:9092,kafka2:9092,kafka3:9092

Created topic foo.

# Show the topic details

root@57f0ff2fb97d:/opt/bitnami/kafka/bin# kafka-topics.sh --describe --topic foo --bootstrap-server kafka1:9092,kafka2:9092,kafka3:9092

Topic: foo TopicId: 83kWG4PUT9u4fOzG5WgFoA PartitionCount: 5 ReplicationFactor: 3 Configs:

Topic: foo Partition: 0 Leader: 3 Replicas: 3,1,2 Isr: 3,1,2

Topic: foo Partition: 1 Leader: 1 Replicas: 1,2,3 Isr: 1,2,3

Topic: foo Partition: 2 Leader: 2 Replicas: 2,3,1 Isr: 2,3,1

Topic: foo Partition: 3 Leader: 3 Replicas: 3,2,1 Isr: 3,2,1

Topic: foo Partition: 4 Leader: 2 Replicas: 2,1,3 Isr: 2,1,3
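Reading this output: every partition has 3 replicas, its Isr (in-sync replica set) equals its replica list, so nothing is under-replicated, and leadership is spread over brokers 1, 2 and 3. A minimal awk-based sketch that summarizes the same output; the function name is hypothetical, and kafka-topics.sh also has a built-in `--under-replicated-partitions` option for `--describe` if you only need the first part.

```bash
#!/usr/bin/env bash
# Sketch: summarize `kafka-topics.sh --describe` output read from stdin.
# Prints partitions whose ISR is smaller than the replica list, then leader counts per broker.
set -euo pipefail

partition_report() {
  awk '
    {
      part = ""; leader = ""; nrep = 0; nisr = 0
      for (i = 1; i < NF; i++) {
        if ($i == "Partition:") part = $(i + 1)
        else if ($i == "Leader:") leader = $(i + 1)
        else if ($i == "Replicas:") nrep = split($(i + 1), reps, ",")
        else if ($i == "Isr:") nisr = split($(i + 1), isr, ",")
      }
      if (part == "") next   # the topic summary line has no "Partition:" field
      leaders[leader]++
      if (nisr < nrep) print "under-replicated: partition " part
    }
    END {
      for (b in leaders) print "broker " b " leads " leaders[b] " partition(s)"
    }
  '
}
```

Usage: `kafka-topics.sh --describe --topic foo --bootstrap-server kafka1:9092 | partition_report | sort` (awk prints the leader counts in no particular order, hence the `sort`). For the output above it reports no under-replicated partitions, and brokers 1, 2 and 3 leading 1, 2 and 2 partitions respectively.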

In a GUI client you can see:

2. Produce and consume

Here I produce on kafka1 and consume on kafka2 and kafka3.

Producing on kafka1

root@57f0ff2fb97d:/opt/bitnami/kafka/bin# kafka-console-producer.sh --bootstrap-server 172.23.0.11:9092,172.23.0.12:9092,172.23.0.13:9092 --topic foo

>Hello

>Kafka

Consuming on kafka2 and kafka3

root@57f0ff2fb97d:/opt/bitnami/kafka/bin# kafka-console-consumer.sh --bootstrap-server 172.23.0.11:9092,172.23.0.12:9092,172.23.0.13:9092 --topic foo
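The console tools above are interactive. For a repeatable check, here is a sketch of a non-interactive round trip: pipe lines into the producer, then let the consumer read from the earliest offset (`--from-beginning`) and stop after `--max-messages` records or after `--timeout-ms` without new data. The function names and defaults are my own assumptions; the topic `foo` must exist and the Kafka scripts must be on `PATH`, as in the commands above.

```bash
#!/usr/bin/env bash
# Sketch: non-interactive produce/consume round trip for the "foo" topic.
# Assumptions: run inside a broker container with the Kafka scripts on PATH;
# BOOTSTRAP and TOPIC defaults mirror the commands in this post.
set -euo pipefail

BOOTSTRAP="${BOOTSTRAP:-172.23.0.11:9092,172.23.0.12:9092,172.23.0.13:9092}"
TOPIC="${TOPIC:-foo}"

# Every line on stdin becomes one record.
produce() {
  kafka-console-producer.sh --bootstrap-server "$BOOTSTRAP" --topic "$TOPIC"
}

# Read up to N records from the earliest offset; give up after 10 s without new data.
consume() {
  kafka-console-consumer.sh --bootstrap-server "$BOOTSTRAP" --topic "$TOPIC" \
    --from-beginning --max-messages "$1" --timeout-ms 10000
}

round_trip() {
  printf 'Hello\nKafka\n' | produce
  consume 2
}
```

Run `round_trip` inside any of the containers. With 5 partitions the two records may land in different partitions, and Kafka only guarantees ordering within a partition, so `Kafka` can print before `Hello`. If the topic already holds older records, `--from-beginning` returns those first.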

3. Delete the topic

root@57f0ff2fb97d:/opt/bitnami/kafka/bin# kafka-topics.sh --delete --topic foo --bootstrap-server kafka1:9092,kafka2:9092,kafka3:9092
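Brokers apply a topic deletion in the background, so a script that deletes `foo` and immediately recreates it can race with the cluster's metadata update. A hedged sketch that polls `kafka-topics.sh --list` until the topic no longer appears; the function name, retry count and one-second interval are arbitrary choices of mine.

```bash
#!/usr/bin/env bash
# Sketch: wait until a topic no longer appears in `kafka-topics.sh --list`.
# Assumptions: kafka-topics.sh is on PATH; BOOTSTRAP matches this post's brokers.
set -euo pipefail

BOOTSTRAP="${BOOTSTRAP:-kafka1:9092,kafka2:9092,kafka3:9092}"

wait_topic_deleted() {
  local topic="$1" tries="${2:-10}" list i
  for ((i = 0; i < tries; i++)); do
    # capture the list first so an early-exiting grep can't break the pipe
    list="$(kafka-topics.sh --list --bootstrap-server "$BOOTSTRAP")" || return 2
    if ! grep -qx -- "$topic" <<<"$list"; then
      return 0   # topic is gone
    fi
    sleep 1
  done
  return 1       # still listed after all retries
}
```

For example, `wait_topic_deleted foo && kafka-topics.sh --create --topic foo ...` recreates the topic only after the old one has disappeared from the listing.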

Copyright belongs to the original author, Genterator. In case of infringement, please contact us for removal.