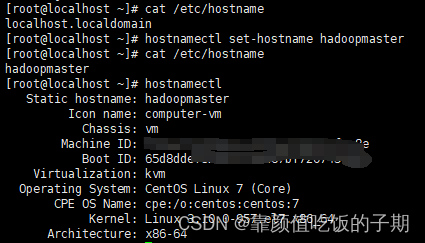

1.修改主机名称(需要在root用户下执行)

hostnamectl set-hostname 需要修改的主机名称

或者修改配置文件 vim /etc/hostname

2.如果主机没有固定IP,需要固定IP(这一步自行查询)

3.关闭防火墙

systemctl start firewalld.service #开启防火墙

systemctl restart firewalld.service #重启防火墙

systemctl stop firewalld.service #关闭防火墙

systemctl status firewalld.service # 防火墙状态

4.禁用selinux

永久关闭selinux 安全策略,可以修改/etc/selinux/config, 将SELINUX=enforcing 修改为SELINUX=disabled

# This file controls the state of SELinux on the system.# SELINUX= can take one of these three values:# enforcing - SELinux security policy is enforced.# permissive - SELinux prints warnings instead of enforcing.# disabled - No SELinux policy is loaded.SELINUX=disabled

# SELINUXTYPE= can take one of three values:# targeted - Targeted processes are protected,# minimum - Modification of targeted policy. Only selected processes are protected.# mls - Multi Level Security protection.SELINUXTYPE=targeted

5.设置ssh免密登录

进入/root/.ssh储存密钥文件夹,通过ls -l指令查看是否有旧密钥

cd /root/.ssh #进入秘钥存放目录

rm -rf * #删除旧秘钥

使用ssh-keygen -t dsa 命令生成秘钥,在这个过程中需要多次回车键选取默认配置

[root@localhost .ssh]# ssh-keygen -t dsa

Generating public/private dsa key pair.

Enter fileinwhich to save the key (/root/.ssh/id_dsa):

Enter passphrase (empty for no passphrase):

Enter same passphrase again:

Your identification has been saved in /root/.ssh/id_dsa.

Your public key has been saved in /root/.ssh/id_dsa.pub.

The key fingerprint is:

SHA256:QNHQYbzj9rWNmAItP5x root@hadoopmaster

The key's randomart image is:

+---[DSA 1024]----+

| +*oo ..||.| B * *

| + oo.oo|

+----[SHA256]-----+

将生成的密钥文件id_dsa.pub 复制到SSH指定的密钥文件中authorized_keys中

cat id_dsa.pub >>authorized_keys

测试秘钥是否登入成功

[root@localhost .ssh]# ssh hadoopmaster

The authenticity of host'hadoopmaster (fe80::7468:4a91:e381:bd03%eth0)' can't be established.

ECDSA key fingerprint is SHA256:SOi/rsJBsRn/zcHQ/gtT0Bg.

ECDSA key fingerprint is MD5:6a:0:88:38:fc:e0:bf:4b6:bf:59:b0.

Are you sure you want to continue connecting (yes/no)? yes

Warning: Permanently added 'hadoopmaster,f8a91:e3' (ECDSA) to the list of known hosts.

Last login: Fri Feb 210:17:45 2024 from 192.168

6.重启

修改主机名等相关配置,必须重启主机

[root@hadoopmaster ~]# reboot

7.安装jdk

将jdk-8u341-linux-x64.rpm上传到/user/local文件夹中,并执行

rpm -ivh jdk-8u341-linux-x64.rpm

这样安装的jdk路径为:/usr/java/jdk1.8.0_341-amd64

8.安装hadoop(hadoop用户下操作)

将hadoop-3.3.6.tar.gz文件上传到/home/hadoop文件夹,然后使用tar -xvf hadoop-3.3.6.tar.gz 解压文件,并使用mv hadoop-3.3.6 hadoop更改文件夹名

9.配置hadoop环境配置(root下操作)

vim /etc/profile

exportHADOOP_HOME=/home/hadoop/hadoop

exportPATH=$PATH:$HADOOP_HOME/bin:$HADOOP_HOME/sbin:$HADOOP_HOME/lib

exportHDFS_NAMENODE_USER=root

exportHDFS_DATANODE_USER=root

exportHDFS_SECONDARYNAMENODE_USER=root

exportHDFS_JOURNALNODE_USER=root

exportHDFS_ZKFC_USER=root

exportYARN_RESOURCEMANAGER_USER=root

exportYARN_NODEMANAGER_USER=root

exportHADOOP_MAPRED_HOME=$HADOOP_HOMEexportHADOOP_COMMON_HOME=$HADOOP_HOMEexportHADOOP_HDFS_HOME=$HADOOP_HOMEexportHADOOP_YARN_HOME=$HADOOP_HOMEexportHADOOP_INSTALL=$HADOOP_HOMEexportHADOOP_COMMON_LIB_NATIVE_DIR=$HADOOP_HOME/lib/native

exportHADOOP_LIBEXEC_DIR=$HADOOP_HOME/libexec

exportJAVA_LIBRARY_PATH=$HADOOP_HOME/lib/native

exportHADOOP_CONF_DIR=$HADOOP_HOME/etc/hadoop

修改完之后,执行source /etc/profile使变更环境变量生效

[root@hadoopmaster local]# source /etc/profile

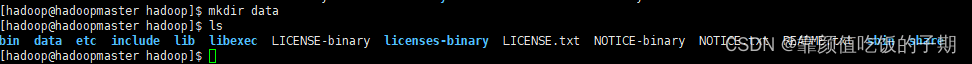

在hadoop目录创建data目录

mkdir ./data

11.修改配置文件

进入/home/hadoop/hadoop/etc/hadoop查看目录下的文件,配置几个必要的文件

(1)配置core-site.xml

vim ./core-site.xml

<configuration><property><name>fs.defaultFS</name><value>hdfs://hadoopmaster:9000</value><description>NameNode URI</description></property><property><name>hadoop.tmp.dir</name><value>/home/hadoop/hadoop/data</value></property><property><name>hadoop.http.staticuser.user</name><value>root</value></property><property><name>io.file.buffer.size</name><value>131073</value></property><property><name>hadoop.proxyuser.root.hosts</name><value>*</value></property><property><name>hadoop.proxyuser.root.groups</name><value>*</value></property></configuration>

(2)配置hdfs-site.xml

vim ./hdfs-site.xml

<configuration><property><name>dfs.replication</name><value>1</value></property><property><name>dfs.namenode.name.dir</name><value>/home/hadoop/hadoop/data/dfs/name</value></property><property><name>dfs.datanode.data.dir</name><value>/home/hadoop/hadoop/data/dfs/data</value></property><property><name>dfs.permissions.enabled</name><value>false</value></property></configuration>

(3)配置mapred-site.xml

vim ./mapred-site.xml

<configuration><property><name>mapreduce.framework.name</name><value>yarn</value></property><property><name>mapreduce.jobhistory.address</name><value>hadoopmaster:10020</value></property><property><name>mapreduce.jobhistory.webapp.address</name><value>hadoopmaster:19888</value></property><property><name>mapreduce.map.memory.mb</name><value>2048</value></property><property><name>mapreduce.reduce.memory.mb</name><value>2048</value></property><property><name>mapreduce.application.classpath</name><value>/home/hadoop/hadoop/etc/hadoop:/home/hadoop/hadoop/share/hadoop/common/*:/home/hadoop/hadoop/share/hadoop/common/lib/*:/home/hadoop/hadoop/share/hadoop/hdfs/*:/home/hadoop/hadoop/share/hadoop/hdfs/lib/*:/home/hadoop/hadoop/share/hadoop/mapreduce/*:/home/hadoop/hadoop/share/hadoop/mapreduce/lib/*:/home/hadoop/hadoop/share/hadoop/yarn/*:/home/hadoop/hadoop/share/hadoop/yarn/lib/*</value></property></configuration>

(4)配置yarn-site.xml

vim ./yarn-site.xml

<configuration><!-- Site specific YARN configuration properties --><property><name>yarn.resourcemanager.connect.retry-interval.ms</name><value>20000</value></property><property><name>yarn.resourcemanager.scheduler.class</name><value>org.apache.hadoop.yarn.server.resourcemanager.scheduler.fair.FairScheduler</value></property><property><name>yarn.nodemanager.localizer.address</name><value>hadoopmaster:8040</value></property><property><name>yarn.nodemanager.address</name><value>hadoopmaster:8050</value></property><property><name>yarn.nodemanager.webapp.address</name><value>hadoopmaster:8042</value></property><property><name>yarn.nodemanager.aux-services</name><value>mapreduce_shuffle</value></property><property><name>yarn.nodemanager.local-dirs</name><value>/home/hadoop/hadoop/yarndata/yarn</value></property><property><name>yarn.nodemanager.log-dirs</name><value>/home/hadoop/hadoop/yarndata/log</value></property><property><name>yarn.nodemanager.vmem-check-enabled</name><value>false</value></property></configuration>

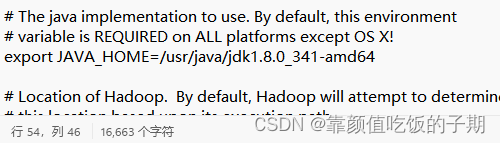

(5)配置hadoop-env.sh

vim ./hadoop-env.sh

修改第54行

export JAVA_HOME=/usr/java/jdk1.8.0_341-amd64

(6)配置workers

vim ./workers

[hadoop@hadoopmaster hadoop]$ vim ./workers

[hadoop@hadoopmaster hadoop]$ cat ./workers

hadoopmaster

11.初始化hadoop

进入/home/hadoop/hadoop/bin路径

执行:hadoop namenode -format

12.Hadoop3 验证

Hadoop 使用之前必须进行格式化,可以使用如下指令进行格式化:

hadoop namenode -format

如果在使用Hadoop的过程中出错,或者Hadoop 无法正常启动,可能需要重新格式化

重新格式化的流程步骤:

停止Hadoop

删除Hadoop 下的data和logs文件夹

重新格式化

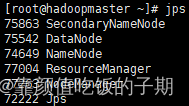

13.启动hadoop

start-all.sh

查看进程

jps

13.停止hadoop

stop-all.sh

版权归原作者 靠颜值吃饭的子期 所有, 如有侵权,请联系我们删除。