This walkthrough uses a MySQL -> Kafka Connect -> Oracle pipeline as the example, implementing a full-table sync with upsert writes:

After starting ZooKeeper, Kafka, and the other required components,

edit the kafka/config/connect-distributed.properties file:

##

# Licensed to the Apache Software Foundation (ASF) under one or more

# contributor license agreements. See the NOTICE file distributed with

# this work for additional information regarding copyright ownership.

# The ASF licenses this file to You under the Apache License, Version 2.0

# (the "License"); you may not use this file except in compliance with

# the License. You may obtain a copy of the License at

#

# http://www.apache.org/licenses/LICENSE-2.0

#

# Unless required by applicable law or agreed to in writing, software

# distributed under the License is distributed on an "AS IS" BASIS,

# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

# See the License for the specific language governing permissions and

# limitations under the License.

##

# This file contains some of the configurations for the Kafka Connect distributed worker. This file is intended

# to be used with the examples, and some settings may differ from those used in a production system, especially

# the `bootstrap.servers` and those specifying replication factors.

# A list of host/port pairs to use for establishing the initial connection to the Kafka cluster.

bootstrap.servers=localhost:9092

# unique name for the cluster, used in forming the Connect cluster group. Note that this must not conflict with consumer group IDs

group.id=connect-cluster

# The converters specify the format of data in Kafka and how to translate it into Connect data. Every Connect user will

# need to configure these based on the format they want their data in when loaded from or stored into Kafka

key.converter=org.apache.kafka.connect.json.JsonConverter

value.converter=org.apache.kafka.connect.json.JsonConverter

# Converter-specific settings can be passed in by prefixing the Converter's setting with the converter we want to apply

# it to

key.converter.schemas.enable=true

value.converter.schemas.enable=true

# Topic to use for storing offsets. This topic should have many partitions and be replicated and compacted.

# Kafka Connect will attempt to create the topic automatically when needed, but you can always manually create

# the topic before starting Kafka Connect if a specific topic configuration is needed.

# Most users will want to use the built-in default replication factor of 3 or in some cases even specify a larger value.

# Since this means there must be at least as many brokers as the maximum replication factor used, we'd like to be able

# to run this example on a single-broker cluster and so here we instead set the replication factor to 1.

offset.storage.topic=connect-offsets

offset.storage.replication.factor=1

#offset.storage.partitions=25

# Topic to use for storing connector and task configurations; note that this should be a single partition, highly replicated,

# and compacted topic. Kafka Connect will attempt to create the topic automatically when needed, but you can always manually create

# the topic before starting Kafka Connect if a specific topic configuration is needed.

# Most users will want to use the built-in default replication factor of 3 or in some cases even specify a larger value.

# Since this means there must be at least as many brokers as the maximum replication factor used, we'd like to be able

# to run this example on a single-broker cluster and so here we instead set the replication factor to 1.

config.storage.topic=connect-configs

config.storage.replication.factor=1

# Topic to use for storing statuses. This topic can have multiple partitions and should be replicated and compacted.

# Kafka Connect will attempt to create the topic automatically when needed, but you can always manually create

# the topic before starting Kafka Connect if a specific topic configuration is needed.

# Most users will want to use the built-in default replication factor of 3 or in some cases even specify a larger value.

# Since this means there must be at least as many brokers as the maximum replication factor used, we'd like to be able

# to run this example on a single-broker cluster and so here we instead set the replication factor to 1.

status.storage.topic=connect-status

status.storage.replication.factor=1

#status.storage.partitions=5

# Flush much faster than normal, which is useful for testing/debugging

offset.flush.interval.ms=10000

# List of comma-separated URIs the REST API will listen on. The supported protocols are HTTP and HTTPS.

# Specify hostname as 0.0.0.0 to bind to all interfaces.

# Leave hostname empty to bind to default interface.

# Examples of legal listener lists: HTTP://myhost:8083,HTTPS://myhost:8084"

listeners=HTTP://collector:8083

# The Hostname & Port that will be given out to other workers to connect to i.e. URLs that are routable from other servers.

# If not set, it uses the value for "listeners" if configured.

rest.advertised.host.name=my-connect-worker-host

rest.advertised.port=8083

# rest.advertised.listener=

# Set to a list of filesystem paths separated by commas (,) to enable class loading isolation for plugins

# (connectors, converters, transformations). The list should consist of top level directories that include

# any combination of:

# a) directories immediately containing jars with plugins and their dependencies

# b) uber-jars with plugins and their dependencies

# c) directories immediately containing the package directory structure of classes of plugins and their dependencies

# Examples:

# plugin.path=/usr/local/share/java,/usr/local/share/kafka/plugins,/opt/connectors,

plugin.path=/home/connect/confluentinc-kafka-connect-jdbc-10.7.4/lib/,/home/connect/debezium-connector-oracle

Note: make sure port 8083 is not already in use.
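One quick way to check the port before starting the worker is to try a TCP connection to it. The snippet below is a minimal sketch, not part of the original setup; the helper name `port_in_use` is hypothetical, and the host and port are the ones used in the `listeners` setting.

```python
import socket


def port_in_use(host: str, port: int, timeout: float = 1.0) -> bool:
    """Return True if something already accepts TCP connections on host:port."""
    with socket.socket(socket.AF_INET, socket.SOCK_STREAM) as sock:
        sock.settimeout(timeout)
        # connect_ex returns 0 on success instead of raising an exception
        return sock.connect_ex((host, port)) == 0
```

Calling `port_in_use("collector", 8083)` before startup should return `False`; if it returns `True`, another process is already listening there.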

**Start Connect**

./bin/connect-distributed.sh ./config/connect-distributed.properties

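Note: this command runs in the foreground and ties up the terminal window. To avoid that, start it with nohup and run it in the background.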

Write the mysql-source file (mysql-source.json)

    {
      "name": "mysql-source",
      "config": {
        "connector.class": "io.confluent.connect.jdbc.JdbcSourceConnector",
        "connection.url": "jdbc:mysql://collector:3306/test?user=root&password=123456",
        "mode": "bulk",
        "table.whitelist": "student",
        "topic.prefix": "student-"
      }
    }

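Note: mode must be set to bulk to get a full sync (the whole table is re-read on every poll); incrementing performs an incremental sync instead.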
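To make the difference between the two modes concrete, here is a small sketch that generates the source connector definition for either mode. The function name `build_source_config` is hypothetical, and the incrementing variant assumes the student table has a strictly increasing numeric column named `id` (the same column the sink uses as its key).

```python
import json


def build_source_config(mode: str = "bulk") -> dict:
    """Build the mysql-source connector definition for the given JDBC source mode."""
    config = {
        "connector.class": "io.confluent.connect.jdbc.JdbcSourceConnector",
        "connection.url": "jdbc:mysql://collector:3306/test?user=root&password=123456",
        "mode": mode,
        "table.whitelist": "student",
        "topic.prefix": "student-",
    }
    if mode == "incrementing":
        # Assumption: "id" is a strictly increasing numeric column in student.
        # Only rows with an id larger than the last one seen are fetched.
        config["incrementing.column.name"] = "id"
    return {"name": "mysql-source", "config": config}


def to_json(connector: dict) -> str:
    """Serialize the definition in the layout used by mysql-source.json."""
    return json.dumps(connector, indent=2)
```

Writing `to_json(build_source_config("bulk"))` to a file reproduces the configuration used in this article; switching the argument to `"incrementing"` gives the incremental variant.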

Write the oracle-sink file (oracle-sink.json)

    {
      "name": "oracle-sink",
      "config": {
        "connector.class": "io.confluent.connect.jdbc.JdbcSinkConnector",
        "connection.url": "jdbc:oracle:thin:@collector:1521:orcl",
        "db.hostname": "collector",
        "tasks.max": "1",
        "connection.user": "dbzuser",
        "connection.password": "dbz2023",
        "db.fetch.size": "1",
        "topics": "student-student",
        "multitenant": "false",
        "table.name.format": "t1",
        "dialect.name": "OracleDatabaseDialect",
        "auto.evolve": "true",
        "pk.mode": "record_value",
        "pk.fields": "id",
        "insert.mode": "upsert"
      }
    }

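Note: the topic used here, student-student, was created in advance. You can also skip creating it and let it be created automatically, but the topic name given to the sink must include the prefix: it is the source's topic.prefix followed by the table name.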
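The naming rule is easy to get wrong, so a small consistency check can help. The sketch below derives the topic names the source will write to and reports any that the sink does not subscribe to. Both function names are hypothetical, and the arguments are the `config` sections of the two connector files.

```python
def expected_topics(source_config: dict) -> list:
    """Topics the JDBC source writes to: topic.prefix followed by each table name."""
    prefix = source_config["topic.prefix"]
    tables = [t.strip() for t in source_config["table.whitelist"].split(",") if t.strip()]
    return [prefix + table for table in tables]


def missing_topics(source_config: dict, sink_config: dict) -> list:
    """Source topics that do not appear in the sink's comma-separated "topics" list."""
    subscribed = {t.strip() for t in sink_config["topics"].split(",")}
    return [topic for topic in expected_topics(source_config) if topic not in subscribed]
```

With the configurations in this article, the prefix `student-` and the table `student` give the topic `student-student`, which matches the sink's `topics` value, so `missing_topics` returns an empty list.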

The matching MySQL and Oracle JDBC drivers are also required. This setup uses MySQL 8.0.26 and ojdbc8-23.3.0.23.09.

For the connector classes, use

io.confluent.connect.jdbc.JdbcSourceConnector and io.confluent.connect.jdbc.JdbcSinkConnector

Register the connectors with the worker on port 8083 (curl requests)

curl -i -X POST -H "Accept: application/json" -H "Content-Type: application/json" http://collector:8083/connectors/ -d @/opt/installs/kafka/connector/mysql-source.json

curl -i -X POST -H "Accept: application/json" -H "Content-Type: application/json" http://collector:8083/connectors/ -d @/opt/installs/kafka/connector/oracle-sink.json
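The same registration can be scripted. The following is a minimal sketch using only the Python standard library; `build_register_request` and `register` are hypothetical helper names, and the request mirrors the headers and endpoint of the curl commands above.

```python
import json
import urllib.request


def build_register_request(base_url: str, connector: dict) -> urllib.request.Request:
    """Build the POST /connectors request that creates a connector."""
    return urllib.request.Request(
        url=f"{base_url.rstrip('/')}/connectors",
        data=json.dumps(connector).encode("utf-8"),
        headers={"Accept": "application/json", "Content-Type": "application/json"},
        method="POST",
    )


def register(base_url: str, connector: dict, timeout: float = 10.0) -> dict:
    """Send the request and return the worker's JSON response.

    urllib raises HTTPError on a non-2xx answer, for example when a
    connector with the same name already exists.
    """
    request = build_register_request(base_url, connector)
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return json.loads(response.read().decode("utf-8"))
```

For example, load mysql-source.json with `json.load` and pass the result to `register("http://collector:8083", connector)`.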

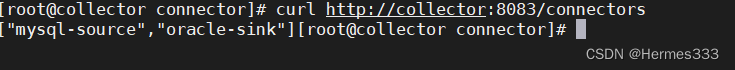

View the connectors currently registered
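Listing the connectors only shows their names; the status endpoint tells you whether they are actually running. The sketch below fetches both and picks out anything that is not in the RUNNING state. The helper names are hypothetical, and the status document layout (a `connector` object plus a `tasks` array, each with a `state`) is what the Kafka Connect REST API returns.

```python
import json
import urllib.request


def get_json(url: str, timeout: float = 10.0):
    """GET a URL and decode the JSON body."""
    with urllib.request.urlopen(url, timeout=timeout) as response:
        return json.loads(response.read().decode("utf-8"))


def list_connectors(base_url: str) -> list:
    """GET /connectors: names of the registered connectors."""
    return get_json(f"{base_url.rstrip('/')}/connectors")


def connector_status(base_url: str, name: str) -> dict:
    """GET /connectors/{name}/status."""
    return get_json(f"{base_url.rstrip('/')}/connectors/{name}/status")


def unhealthy_parts(status: dict) -> list:
    """Describe the connector and tasks in a status document that are not RUNNING."""
    problems = []
    if status["connector"]["state"] != "RUNNING":
        problems.append(f"connector {status['name']}: {status['connector']['state']}")
    for task in status.get("tasks", []):
        if task["state"] != "RUNNING":
            problems.append(f"task {task['id']}: {task['state']}")
    return problems
```

After both registrations succeed, `list_connectors("http://collector:8083")` should include mysql-source and oracle-sink, and `unhealthy_parts(connector_status(...))` should be empty for each.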

Testing

Switch to the oracle user and start Oracle

[root@collector connector]# su oracle

[oracle@collector connector]$ lsnrctl start

[oracle@collector connector]$ sqlplus /nolog

SQL> conn /as sysdba

SQL> startup

Add a record to the MySQL source table

Then query the Oracle target table
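Because the source runs in bulk mode, every poll re-sends the whole table, so the sink sees the same rows again and again. With insert.mode set to upsert and pk.fields set to id, a repeated row updates the existing target row instead of creating a duplicate. The snippet below is only an in-memory model of that behavior, not the connector's code; the function names and the `name` column are hypothetical.

```python
def apply_insert(target_rows: list, records: list) -> list:
    """Model of plain inserts: every incoming record becomes a new row."""
    target_rows.extend(dict(record) for record in records)
    return target_rows


def apply_upsert(target: dict, records: list, pk_field: str = "id") -> dict:
    """Model of upsert: rows are keyed by the primary key, so a repeat overwrites."""
    for record in records:
        target[record[pk_field]] = dict(record)
    return target


# Two consecutive bulk loads; the second one contains one changed row and one new row.
first_load = [{"id": 1, "name": "alice"}, {"id": 2, "name": "bob"}]
second_load = [{"id": 1, "name": "alice"}, {"id": 2, "name": "bobby"}, {"id": 3, "name": "carol"}]

upserted = apply_upsert(apply_upsert({}, first_load), second_load)
inserted = apply_insert(apply_insert([], first_load), second_load)
```

In this model `upserted` ends up with one row per id, while `inserted` accumulates every record from both loads, which is why upsert is the mode to pair with a bulk source.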

REST API operations (via curl)

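| REST API | Description |
| --- | --- |
| GET / | View version information for the Kafka Connect worker |
| GET /connectors | List the active connectors by name |
| POST /connectors | Create a new connector from the given configuration |
| GET /connectors/{name} | View information about the specified connector |
| GET /connectors/{name}/config | View the configuration of the specified connector |
| PUT /connectors/{name}/config | Update the configuration of the specified connector |
| GET /connectors/{name}/status | View the status of the specified connector |
| POST /connectors/{name}/restart | Restart the specified connector |
| PUT /connectors/{name}/pause | Pause the specified connector |
| GET /connectors/{name}/tasks | List the tasks currently running for the specified connector |
| POST /connectors/{name}/tasks | Modify the task configurations |
| GET /connectors/{name}/tasks/{taskId}/status | View the status of the specified task of the specified connector |
| POST /connectors/{name}/tasks/{taskId}/restart | Restart the specified task of the specified connector |
| DELETE /connectors/{name}/ | Delete the specified connector |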
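A few of the endpoints listed above can be wrapped in a small helper so that day-to-day operations do not require remembering which HTTP method goes with which path. This is a sketch under stated assumptions: the `ACTIONS` mapping and both function names are hypothetical, and only four of the endpoints are covered.

```python
import urllib.parse
import urllib.request

# (HTTP method, path template) for a few of the connector endpoints listed above
ACTIONS = {
    "status": ("GET", "/connectors/{name}/status"),
    "pause": ("PUT", "/connectors/{name}/pause"),
    "restart": ("POST", "/connectors/{name}/restart"),
    "delete": ("DELETE", "/connectors/{name}"),
}


def build_action_request(base_url: str, action: str, name: str) -> urllib.request.Request:
    """Build the request for one of the named actions on a connector."""
    method, template = ACTIONS[action]
    # Quote the connector name so unusual characters cannot alter the path
    path = template.format(name=urllib.parse.quote(name, safe=""))
    return urllib.request.Request(
        url=base_url.rstrip("/") + path,
        headers={"Accept": "application/json"},
        method=method,
    )


def send(request: urllib.request.Request, timeout: float = 10.0):
    """Send the request and return (HTTP status code, response body as text)."""
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return response.status, response.read().decode("utf-8")
```

For example, `send(build_action_request("http://collector:8083", "pause", "mysql-source"))` pauses the source connector, and the same call with `"delete"` removes it.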

Reference: "Kafka: Kafka Connect in Detail" (CSDN blog)

Copyright belongs to the original author, Hermes333.