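This program fetches news from the different sections of Xinhua Net (www.news.cn) according to a time requirement. The requirement consists of a cutoff date and a time interval in days; for example, fetch the news published within one day of 2023-04-20. It is built mainly on Selenium-based crawling techniques. The implementation is described below.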

1. Basic attributes of the SpiderXinhua class

The program is wrapped in a single class, SpiderXinhua. Its class-level attributes are as follows:

import datetime
import re
import time

from lxml import etree
from selenium import webdriver
from selenium.webdriver.common.by import By


class SpiderXinhua:
    xinhua_url_base0 = "http://www.news.cn"
    # Links to each section (section name -> section URL)
    xinhua_url_lists = {
    }
    # User-Agent information
    user_agent = "Mozilla/5.0 (Windows NT 10.0; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/103.0.0.0 Safari/537.36"
    headers = {
        "user-agent": user_agent
    }
    # Error messages
    Xinhua_errormessage = []
    # Usable URLs
    Need_url = []
    # Today's date (set per instance as self.Now_date in __init__)
    # "View more" button
    LookmoreBtn = "//div[contains(@class,'xpage-more-btn')]"
    # XPath expressions
    # Military
    Xpath_army = "//ul[contains(@class,'army_list')]//div[@class='tit']/a/@href"
    Xpath_army_spide = "//div[@class='swiper-wrapper']/div[not(contains(@class,'swiper-slide-duplicate'))]//p[@class='name']/a/@href"
    # List
    Xpath_t_start = "//div[@class='item item-style1']["
    Xpath_t_end = "]/div[@class='txt']/div[@class='info clearfix domPc']/div[contains(@class,'time')]/text()"
    Xpath_h_start = Xpath_t_start
    Xpath_h_end = "]//div[@class='tit']//a/@href"
    # Education
    Xpath_Jt_start = "//div["
    Xpath_Jt_end = "]/div/div[@class='item item-style1']/div[@class='txt']/div[@class='info clearfix domPc']/div[@class='time']/text()"
    Xpath_Jh_start = Xpath_Jt_start
    Xpath_Jh_end = "]/div/div[@class='item item-style1']/div[@class='txt']/div[@class='tit']/a/@href"
    # Special list
    Xpath_Ft_start = "//li[@class='item item-style2']["
    Xpath_Ft_end = "]/div[@class='info clearfix domPc']/div[@class='time']/text()"
    Xpath_Fh_start = Xpath_Ft_start
    Xpath_Fh_end = "]/div[@class='tit']//a/@href"
    # Carousel
    Xpath_info_spide = "//div[contains(@class,'swiper-slide')]/div[@class='tit']//a/@href"
    Xpath_spidenum = "//span[@class='swiper-pagination-total']/text()"
    # Sports
    Xpath_sports = "//div[@class='mcon']//a/@href"
    # Selenium
    option = webdriver.ChromeOptions()
    option.add_argument("headless")
    path = 'chromedriver.exe'
    # Selenium 3 style call; Selenium 4 takes service=Service(path) and options=option instead
    browswe = webdriver.Chrome(executable_path=path, chrome_options=option)

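The XPath expressions in the code were derived from the actual markup of each section page. If a page layout changes, you can construct new XPath expressions from the new page structure.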
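The list XPaths are stored as a prefix and a suffix so that a 1-based position can be spliced in between them. The sketch below shows only that string composition; the helper name indexed_xpath is hypothetical and not part of the original class, and the resulting string would then be handed to lxml's tree.xpath(...) as in the later sections.

```python
# Prefix and suffixes copied from the class attributes shown above
XPATH_T_START = "//div[@class='item item-style1']["
XPATH_T_END = ("]/div[@class='txt']/div[@class='info clearfix domPc']"
               "/div[contains(@class,'time')]/text()")
XPATH_H_END = "]//div[@class='tit']//a/@href"


def indexed_xpath(start: str, index: int, end: str) -> str:
    """Join an XPath prefix, a 1-based position, and a suffix (hypothetical helper)."""
    if index < 1:
        raise ValueError("XPath positions start at 1")
    return start + str(index) + end


# XPath for the date text and for the link of the first list item
time_xpath = indexed_xpath(XPATH_T_START, 1, XPATH_T_END)
href_xpath = indexed_xpath(XPATH_T_START, 1, XPATH_H_END)
print(time_xpath)
print(href_xpath)
```

Keeping the position outside the stored strings lets the same pair of attributes serve every item in the list.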

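2. The date extraction and format conversion function timeinhref

Looking at article links on Xinhua Net, the URLs end either in /c.html or /c.htm, or in the form /c_<10 digits>.htm. The date sits in a different position in each format. Since the date has to be extracted from the link itself, each URL style needs its own handling, as shown below: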

    '''Date extraction and format conversion'''
    def timeinhref(self, news_href):
        # Fallback date: links without a recognizable date are treated as very old
        new_date_date = datetime.datetime(1900, 1, 1).date()
        # Only proceed when news_href is non-empty, which reduces the chance of errors
        if news_href:
            # Check whether the link ends in /c.html or /c.htm, or has another form
            if str(news_href).endswith("/c.html") or str(news_href).endswith("/c.htm"):
                # The third path segment from the end is read as YYYYMMDD
                get_date = str(news_href).split("/")[-3]
                new_date_date = datetime.datetime(int(get_date[0:4]), int(get_date[4:6]), int(get_date[6:])).date()
            else:
                # c_<10 digits>.htm(l): the two preceding segments are read as YYYY-MM and DD
                if re.search(r'^c_[0-9]{10}\.html?$', str(news_href).split("/")[-1]):
                    smalllist = str(news_href).split("/")
                    get_ym = smalllist[-3]
                    get_d = smalllist[-2]
                    new_date_date = datetime.datetime(int(get_ym[0:4]), int(get_ym[5:]), int(get_d[0:])).date()
        return new_date_date
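To make the two URL shapes concrete, here is a standalone sketch of the same idea written with two regular expressions instead of string slicing. It is a variant, not the author's method: the function name date_from_href, the pattern names, and the sample URLs are all made up for illustration.

```python
import datetime
import re

# Same fallback idea as in timeinhref: unknown links count as very old
FALLBACK = datetime.date(1900, 1, 1)
# .../YYYYMMDD/<id>/c.html or c.htm
NEW_STYLE = re.compile(r"/(\d{4})(\d{2})(\d{2})/[^/]+/c\.html?$")
# .../YYYY-MM/DD/c_<10 digits>.htm or .html
OLD_STYLE = re.compile(r"/(\d{4})-(\d{2})/(\d{2})/c_\d{10}\.html?$")


def date_from_href(href):
    """Return the date encoded in a news link, or FALLBACK if none is found."""
    if not href:
        return FALLBACK
    for pattern in (NEW_STYLE, OLD_STYLE):
        match = pattern.search(str(href))
        if match:
            year, month, day = (int(part) for part in match.groups())
            try:
                return datetime.date(year, month, day)
            except ValueError:
                # Digits that do not form a real calendar date
                return FALLBACK
    return FALLBACK


# Hypothetical sample links in the two shapes
print(date_from_href("http://www.news.cn/20230420/0123456789abcdef/c.html"))
print(date_from_href("/politics/2023-04/20/c_1129541234.htm"))
print(date_from_href("http://www.news.cn/index.htm"))
```

Anchoring both patterns at the end of the string keeps unrelated links, such as section index pages, on the fallback date.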

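3. Collecting usable page links: need_hrefget

In the end we only need news that meets the time requirement, so only the links of qualifying articles are kept. The URLs extracted from the page via XPath may not be directly accessible, because some of them do not start with http://. Those must be prefixed with "http://www.news.cn" before they can be visited.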

    def need_hrefget(self, need_url, army_href):
        if army_href:
            for onehref in army_href:
                if str(onehref).endswith(".htm") or str(onehref).endswith(".html"):
                    new_date_date = self.timeinhref(onehref)
                    now_date = self.Now_date
                    # Same-day news gives daysub == 1
                    daysub = (now_date - new_date_date).days + 1
                    # Keep only news that meets the time requirement
                    if int(daysub) <= int(self.datesub):
                        # URLs that do not start with http:// need the "http://www.news.cn" prefix
                        if str(onehref)[0:1] == "/":
                            onehref = SpiderXinhua.xinhua_url_base0 + str(onehref)
                        need_url.append(onehref)
                        print(onehref)
                    else:
                        # Stop at the first link that is too old
                        break
                else:
                    continue
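The two decisions made in need_hrefget, whether a date falls inside the window and how to turn a relative link into an absolute one, can be tried in isolation. The sketch below is an illustration under assumptions: within_window and absolutize are hypothetical names, the sample links are invented, and urllib.parse.urljoin from the standard library is swapped in for the manual string prefixing used in the article.

```python
import datetime
from urllib.parse import urljoin

BASE_URL = "http://www.news.cn"


def within_window(news_date, cutoff, interval_days):
    """Same arithmetic as daysub above: news from the cutoff day itself counts as 1."""
    daysub = (cutoff - news_date).days + 1
    return daysub <= interval_days


def absolutize(href, page_url=BASE_URL):
    """Resolve href against the page it was found on; absolute URLs pass through."""
    return urljoin(page_url, href)


cutoff = datetime.date(2023, 4, 20)
print(within_window(datetime.date(2023, 4, 20), cutoff, 1))  # True
print(within_window(datetime.date(2023, 4, 19), cutoff, 1))  # False
print(absolutize("/politics/2023-04/20/c_1129541234.htm"))
print(absolutize("../world/a.htm", "http://www.news.cn/politics/index.htm"))
```

One design point: urljoin also resolves "../" links, which the article handles with a separate branch in the next section.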

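4. Fetching news from a single section: xinhua_onemokuai_urlsget

A section may contain both carousel news and list news.

For news presented as a list, links are collected one by one until the date of the current item no longer meets the requirement. If the "view more" button has to be clicked to load further items, it is clicked.

Carousel news is obtained with the configured XPath, but the number of items must be checked so that duplicates are avoided.

Special sections such as military and sports are handled separately.

For sections where crawling fails, an error message is recorded.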

    '''Single section'''
    def xinhua_onemokuai_urlsget(self, mokuaileibiename, mokuaileibieurl, need_url, xinhua_errormessage):
        try:
            # browswe = webdriver.Chrome(executable_path=path, chrome_options=option)
            print(mokuaileibiename)
            benciurl = mokuaileibieurl
            SpiderXinhua.browswe.get(benciurl)
            # Scheme and host of the current section, used to complete relative links
            xinhua_url_base = "http://" + benciurl.split("/")[2]
            content = SpiderXinhua.browswe.page_source
            tree = etree.HTML(content)
            # Military
            if mokuaileibiename == "军事":
                xpath_army = SpiderXinhua.Xpath_army
                army_href = tree.xpath(xpath_army)
                if army_href:
                    self.need_hrefget(need_url, army_href)
                else:
                    xinhua_errormessage.append("Xinhua Net -- military list data could not be fetched; check whether the XPath is correct!")
                xpath_army_spide = SpiderXinhua.Xpath_army_spide
                army_href_spide = tree.xpath(xpath_army_spide)
                if army_href_spide:
                    self.need_hrefget(need_url, army_href_spide)
                else:
                    xinhua_errormessage.append("Xinhua Net -- military carousel data could not be fetched; check whether the XPath is correct!")
                return
            # Sports
            if mokuaileibiename == "体育":
                xpath_sports = SpiderXinhua.Xpath_sports
                sports_href = tree.xpath(xpath_sports)
                if sports_href:
                    del (sports_href[0])
                    self.need_hrefget(need_url, sports_href)
                else:
                    xinhua_errormessage.append("Xinhua Net -- sports data could not be fetched; check whether the XPath is correct!")
                return
            # Carousel news
            xpath_info_spide = SpiderXinhua.Xpath_info_spide
            xpath_spidenum = SpiderXinhua.Xpath_spidenum
            news_href_duo = tree.xpath(xpath_info_spide)
            spidenum = tree.xpath(xpath_spidenum)
            news_href = []
            if spidenum:
                # Keep only the first N links (N = pagination total) so duplicated slides are skipped
                for i in range(min(int(spidenum[0]), len(news_href_duo))):
                    news_href.append(news_href_duo[i])
            for i in range(len(news_href)):
                if str(news_href[i]).endswith("/c.html") or str(news_href[i]).endswith("/c.htm"):
                    get_date = str(news_href[i]).split("/")[-3]
                    new_date_date = datetime.datetime(int(get_date[0:4]), int(get_date[4:6]),
                                                      int(get_date[6:])).date()
                else:
                    if re.search(r'^c_[0-9]{10}\.htm$', str(news_href[i]).split("/")[-1]):
                        smalllist = str(news_href[i]).split("/")
                        get_ym = smalllist[-3]
                        get_d = smalllist[-2]
                        new_date_date = datetime.datetime(int(get_ym[0:4]), int(get_ym[5:]), int(get_d[0:])).date()
                    else:
                        continue
                daysub = (self.Now_date - new_date_date).days + 1
                if int(daysub) <= int(self.datesub):
                    if str(news_href[i])[0:1] == "/":
                        news_href[i] = xinhua_url_base + str(news_href[i])
                    need_url.append(news_href[i])
                    print(news_href[i])
            # List news
            xpath_t_start = SpiderXinhua.Xpath_t_start
            xpath_t_end = SpiderXinhua.Xpath_t_end
            xpath_h_start = xpath_t_start
            xpath_h_end = SpiderXinhua.Xpath_h_end
            if mokuaileibiename == "教育":
                xpath_t_start = SpiderXinhua.Xpath_Jt_start
                xpath_t_end = SpiderXinhua.Xpath_Jt_end
                xpath_h_start = xpath_t_start
                xpath_h_end = SpiderXinhua.Xpath_Jh_end
            numid = 1
            tryerror = 0
            goflag = True
            # The news is a list: fetch links one by one until an item's date is out of range.
            # Click the "view more" button when more items have to be loaded.
            # goflag decides whether to keep reading further down the list.
            while goflag:
                xpath_info_item_t = xpath_t_start + str(numid) + xpath_t_end
                new_time = tree.xpath(xpath_info_item_t)
                if not new_time:
                    if numid == 1 and tryerror == 0:
                        # Nothing matched at all: retry once with the special-list XPath
                        xpath_t_start = SpiderXinhua.Xpath_Ft_start
                        xpath_t_end = SpiderXinhua.Xpath_Ft_end
                        xpath_h_start = xpath_t_start
                        xpath_h_end = SpiderXinhua.Xpath_Fh_end
                        tryerror = tryerror + 1
                        continue
                    # View more: find_elements returns an empty list when the button is absent
                    lookmore = SpiderXinhua.browswe.find_elements(By.XPATH, SpiderXinhua.LookmoreBtn)
                    if lookmore:
                        lookmore[0].click()
                        time.sleep(2)
                        content = SpiderXinhua.browswe.page_source
                        tree = etree.HTML(content)
                        continue
                    goflag = False
                    xinhua_errormessage.append(
                        "Xinhua Net -- " + str(mokuaileibiename) + " list data could not be fetched; check whether the XPath is correct!")
                    # Nothing left to read, so leave the loop before new_time[0] is accessed
                    break
                new_date_date = datetime.datetime.strptime(new_time[0], "%Y-%m-%d").date()
                daysub = (self.Now_date - new_date_date).days + 1
                if daysub <= self.datesub:
                    xpath_info_item_h = xpath_h_start + str(numid) + xpath_h_end
                    news_href = tree.xpath(xpath_info_item_h)
                    if news_href:
                        if str(news_href[0])[0:1] == "/":
                            news_href[0] = xinhua_url_base + str(news_href[0])
                        if str(news_href[0])[0:3] == "../":
                            news_href[0] = xinhua_url_base + str(news_href[0])[2:]
                        need_url.append(news_href[0])
                        print(news_href[0])
                    numid = numid + 1
                else:
                    goflag = False
        except Exception:
            xinhua_errormessage.append("Xinhua Net -- " + str(mokuaileibiename) + " data could not be fetched; the page format does not match, check it manually!")
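The list-walking logic can be separated from Selenium and studied on its own. The sketch below is a simplified model, not the crawler itself: load_page is a hypothetical callable that stands in for clicking "view more" and re-reading page_source, items are assumed to be (date, href) pairs sorted newest first, and max_loads is an added safeguard so a button that loads nothing new cannot keep the loop running.

```python
import datetime


def collect_until_stale(load_page, cutoff, interval_days, max_loads=5):
    """Collect hrefs in order and stop at the first item outside the time window.

    load_page(n) must return the full item list visible after n "view more" clicks.
    """
    collected = []
    loads = 0
    items = load_page(loads)
    index = 0
    while True:
        if index >= len(items):
            # List exhausted: try to load more, but only a bounded number of times
            if loads >= max_loads:
                break
            loads += 1
            new_items = load_page(loads)
            if len(new_items) <= len(items):
                break  # nothing new appeared, so stop instead of looping forever
            items = new_items
            continue
        news_date, href = items[index]
        if (cutoff - news_date).days + 1 > interval_days:
            break  # newest-first order assumed, so everything after this is older
        collected.append(href)
        index += 1
    return collected


# Fake two-step page: the second load reveals two older items
d = datetime.date
PAGES = [
    [(d(2023, 4, 20), "/a"), (d(2023, 4, 20), "/b")],
    [(d(2023, 4, 20), "/a"), (d(2023, 4, 20), "/b"),
     (d(2023, 4, 19), "/c"), (d(2023, 4, 18), "/d")],
]


def fake_load(n):
    return PAGES[min(n, len(PAGES) - 1)]


print(collect_until_stale(fake_load, d(2023, 4, 20), 2))  # ['/a', '/b', '/c']
```

With an interval of 2 days the walk loads the second page, keeps "/c", and stops at "/d".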

5. Looping over every section: xinhuawangurlsget

    '''Loop over every section'''
    def xinhuawangurlsget(self, need_url, xinhua_errormessage):
        SpiderXinhua.xinhua_threadlist = []
        # Each entry maps a section name to its URL
        for mokuaileibiename, mokuaileibieurl in SpiderXinhua.xinhua_url_lists.items():
            self.xinhua_onemokuai_urlsget(mokuaileibiename, mokuaileibieurl, need_url, xinhua_errormessage)
        return need_url, xinhua_errormessage

6. Remaining functions

    '''Main entry'''
    def mainmain(self):
        Need_url, Xinhua_errormessage = self.xinhuawangurlsget(SpiderXinhua.Need_url, SpiderXinhua.Xinhua_errormessage)
        self.Need_url = Need_url
        self.Xinhua_errormessage = Xinhua_errormessage

    def __init__(self, datesub, nowdate):
        # datesub: time interval in days; nowdate: cutoff date
        self.datesub = datesub
        self.Now_date = nowdate
        self.mainmain()
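One thing worth knowing about this design: mainmain passes the class-level lists Need_url and Xinhua_errormessage into the crawl, and they are filled with append, so a second instance created in the same process would start with the first instance's results. The toy classes below only illustrate that Python behavior; SharedCollector and PerInstanceCollector are made-up names, not part of the crawler.

```python
class SharedCollector:
    # Class-level list: a single object shared by every instance
    found = []

    def __init__(self, items):
        SharedCollector.found.extend(items)
        # The instance attribute still points at the shared list
        self.found = SharedCollector.found


class PerInstanceCollector:
    def __init__(self, items):
        # A fresh list per instance keeps separate runs independent
        self.found = list(items)


first = SharedCollector(["a"])
second = SharedCollector(["b"])
print(second.found)  # ['a', 'b']

third = PerInstanceCollector(["a"])
fourth = PerInstanceCollector(["b"])
print(fourth.found)  # ['b']
```

For a script that creates one SpiderXinhua per run, as in the example that follows, the sharing has no visible effect.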

7. Example run

Set the time interval to 1 day and the cutoff date to today's date:

if __name__ == "__main__":
    Now_date = datetime.date.today()
    axinhua = SpiderXinhua(1, Now_date)
    print(len(axinhua.Need_url))
    print(axinhua.Need_url)
    print(axinhua.Xinhua_errormessage)

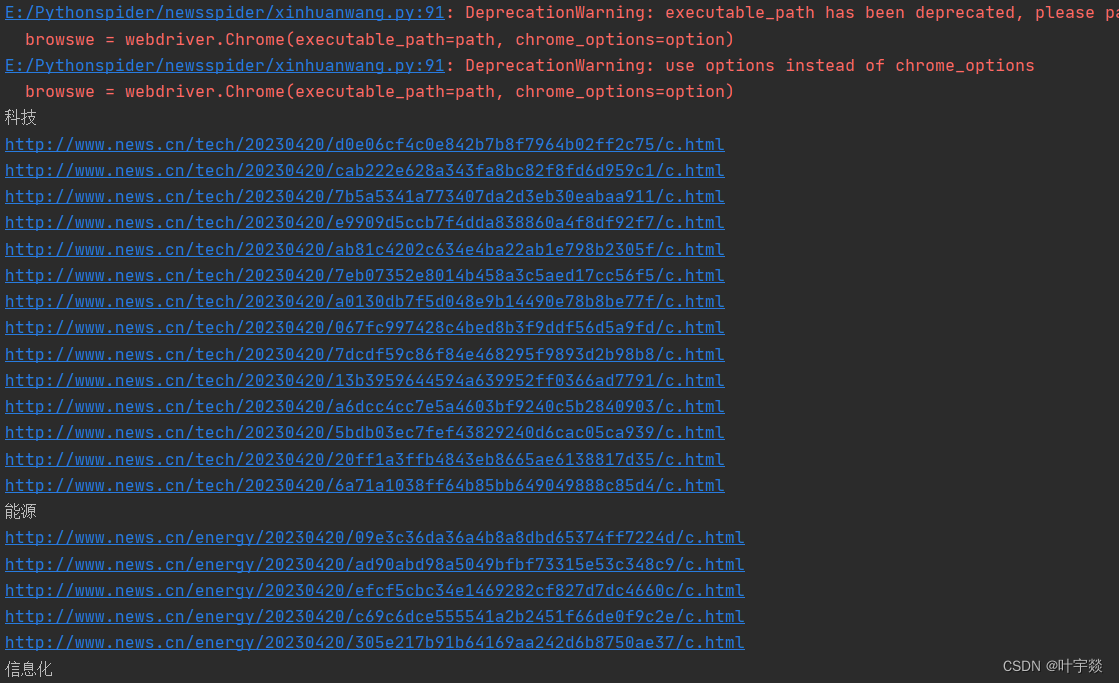

执行结果为:

Copyright belongs to the original author, 叶宇燚. If there is any infringement, please contact us for removal.