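VGGNet

VGGNet is a deep learning network developed by the University of Oxford and DeepMind in 2014. It evolved from AlexNet. Its best-known variants are VGG16 and VGG19, which are still widely used for image feature extraction.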

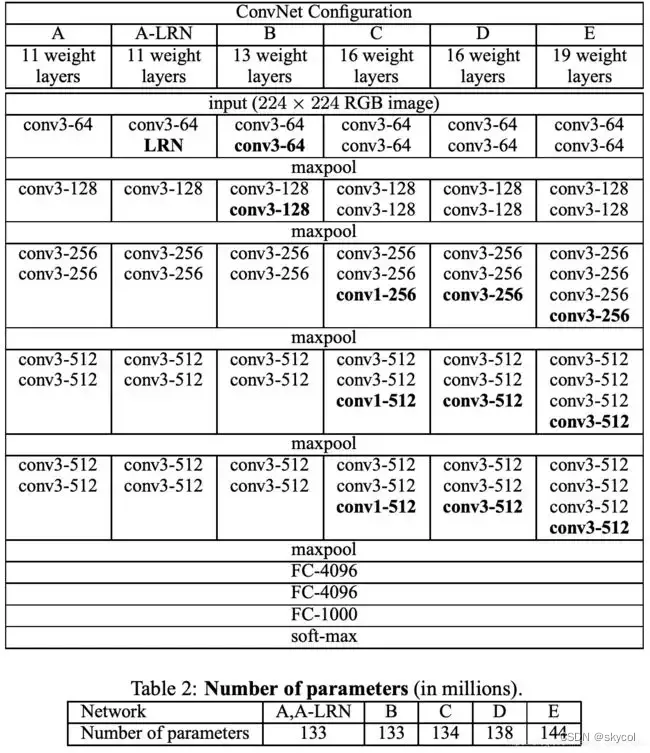

Below are the structures of VGG11 through VGG19. The number after "VGG" is the number of layers in the network.
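As a quick check of that naming rule, the sketch below counts the layers that carry weights (convolutional and fully connected; pooling layers are not counted) for the four all-3x3 configurations of the VGG paper. The dictionary and function names are illustrative only and do not come from Keras.

```python
# Number of 3x3 convolutional layers in each of the five blocks.
CONV_LAYERS_PER_BLOCK = {
    "vgg11": [1, 1, 2, 2, 2],
    "vgg13": [2, 2, 2, 2, 2],
    "vgg16": [2, 2, 3, 3, 3],
    "vgg19": [2, 2, 4, 4, 4],
}
# Two 4096-unit layers plus the 1000-way classifier.
FC_LAYERS = 3


def weight_layers(name):
    """Count the layers with trainable weights in a VGG variant."""
    return sum(CONV_LAYERS_PER_BLOCK[name]) + FC_LAYERS


for name in CONV_LAYERS_PER_BLOCK:
    print(name, weight_layers(name))
```

Each variant's count matches the number in its name.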

Defining VGG16 in Keras

VGG16 structure:

The input in the figure above is 224×224. It can be adjusted to suit your data.
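To see what changing the input size does, note that in a VGG-style network the 'same'-padded convolutions keep the height and width, while each of the five 2×2 max-pooling layers halves them. The helper below is a hypothetical sketch of that arithmetic, not part of Keras.

```python
def vgg_feature_map_size(input_size, num_pools=5):
    """Spatial size left after the pooling layers (each one halves it)."""
    size = input_size
    for _ in range(num_pools):
        size //= 2  # 2x2 max pooling with stride 2
    return size


def flatten_units(input_size, channels=512):
    """Number of values fed to the first dense layer after Flatten."""
    side = vgg_feature_map_size(input_size)
    return side * side * channels


print(vgg_feature_map_size(224), flatten_units(224))
print(vgg_feature_map_size(32), flatten_units(32))
```

A 224×224 input leaves a 7×7×512 feature map, while a 32×32 input (the CIFAR-10 size) leaves only 1×1×512, the smallest input for which five poolings still produce a non-empty map.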

Keras makes it easy to build deep learning models.

The VGG16 model defined according to the figure above:

from keras.models import Sequential
from keras.layers import Conv2D, Dense, Flatten, Dropout, MaxPool2D


def vgg16_model():
    model = Sequential()

    # Block 1: two 3x3 convolutions, 64 filters
    model.add(Conv2D(filters=64, kernel_size=(3, 3), strides=(1, 1), input_shape=(224, 224, 3), padding='same', activation='relu', kernel_initializer='glorot_uniform'))
    model.add(Conv2D(filters=64, kernel_size=(3, 3), strides=(1, 1), padding='same', activation='relu', kernel_initializer='glorot_uniform'))
    model.add(MaxPool2D())  # pool_size=(2, 2); strides default to pool_size

    # Block 2: two 3x3 convolutions, 128 filters
    model.add(Conv2D(128, (3, 3), strides=(1, 1), padding='same', activation='relu', kernel_initializer='glorot_uniform'))
    model.add(Conv2D(128, (3, 3), strides=(1, 1), padding='same', activation='relu', kernel_initializer='glorot_uniform'))
    model.add(MaxPool2D())

    # Block 3: 256 filters; the third convolution is 1x1 (configuration C of the VGG paper)
    model.add(Conv2D(256, (3, 3), strides=(1, 1), padding='same', activation='relu', kernel_initializer='glorot_uniform'))
    model.add(Conv2D(256, (3, 3), strides=(1, 1), padding='same', activation='relu', kernel_initializer='glorot_uniform'))
    model.add(Conv2D(256, (1, 1), strides=(1, 1), padding='same', activation='relu', kernel_initializer='glorot_uniform'))
    model.add(MaxPool2D())

    # Block 4: 512 filters
    model.add(Conv2D(512, (3, 3), strides=(1, 1), padding='same', activation='relu', kernel_initializer='glorot_uniform'))
    model.add(Conv2D(512, (3, 3), strides=(1, 1), padding='same', activation='relu', kernel_initializer='glorot_uniform'))
    model.add(Conv2D(512, (1, 1), strides=(1, 1), padding='same', activation='relu', kernel_initializer='glorot_uniform'))
    model.add(MaxPool2D())

    # Block 5: 512 filters
    model.add(Conv2D(512, (3, 3), strides=(1, 1), padding='same', activation='relu', kernel_initializer='glorot_uniform'))
    model.add(Conv2D(512, (3, 3), strides=(1, 1), padding='same', activation='relu', kernel_initializer='glorot_uniform'))
    model.add(Conv2D(512, (1, 1), strides=(1, 1), padding='same', activation='relu', kernel_initializer='glorot_uniform'))
    model.add(MaxPool2D())

    # Classifier head
    model.add(Flatten())
    model.add(Dense(4096, activation='relu'))
    model.add(Dropout(0.5))
    model.add(Dense(4096, activation='relu'))
    model.add(Dropout(0.5))
    # Extra 1000-unit layer: the VGG paper goes straight from the second
    # 4096-unit layer to the 1000-way softmax
    model.add(Dense(1000, activation='relu'))
    model.add(Dropout(0.5))
    model.add(Dense(1000, activation='softmax'))
    return model


model = vgg16_model()
model.summary()

_______________________________________________________________________
 Layer (type)                      Output Shape              Param #
=======================================================================
 conv2d_146 (Conv2D)               (None, 224, 224, 64)      1792
 conv2d_147 (Conv2D)               (None, 224, 224, 64)      36928
 max_pooling2d_56 (MaxPooling2D)   (None, 112, 112, 64)      0
 conv2d_148 (Conv2D)               (None, 112, 112, 128)     73856
 conv2d_149 (Conv2D)               (None, 112, 112, 128)     147584
 max_pooling2d_57 (MaxPooling2D)   (None, 56, 56, 128)       0
 conv2d_150 (Conv2D)               (None, 56, 56, 256)       295168
 conv2d_151 (Conv2D)               (None, 56, 56, 256)       590080
 conv2d_152 (Conv2D)               (None, 56, 56, 256)       65792
 max_pooling2d_58 (MaxPooling2D)   (None, 28, 28, 256)       0
 ...
Total params: 134,639,952
Trainable params: 134,639,952
Non-trainable params: 0
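The parameter counts in the summary can be checked by hand. A convolution with a k×k kernel has k·k·C_in·C_out weights plus C_out biases, and a dense layer has N_in·N_out weights plus N_out biases. The sketch below recomputes the numbers for the layers in `vgg16_model()` as defined above. The helper names and layer lists are written for this illustration only.

```python
def conv_params(kernel, c_in, c_out):
    """Weights plus biases of a square-kernel Conv2D layer."""
    return kernel * kernel * c_in * c_out + c_out


def dense_params(n_in, n_out):
    """Weights plus biases of a Dense layer."""
    return n_in * n_out + n_out


# (kernel size, input channels, output channels) in model order
CONV_LAYERS = [
    (3, 3, 64), (3, 64, 64),
    (3, 64, 128), (3, 128, 128),
    (3, 128, 256), (3, 256, 256), (1, 256, 256),
    (3, 256, 512), (3, 512, 512), (1, 512, 512),
    (3, 512, 512), (3, 512, 512), (1, 512, 512),
]
# (inputs, outputs); Flatten yields 7 * 7 * 512 = 25088 values
DENSE_LAYERS = [(25088, 4096), (4096, 4096), (4096, 1000), (1000, 1000)]

total = (sum(conv_params(*layer) for layer in CONV_LAYERS)
         + sum(dense_params(*layer) for layer in DENSE_LAYERS))
print(conv_params(3, 3, 64), conv_params(1, 256, 256), total)
```

The first dense layer alone accounts for more than 100 million of the parameters, which is why the fully connected head dominates the model size.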

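Transfer learning with VGG16 in Keras

Training data:

The CIFAR-10 dataset is a small dataset for recognizing everyday objects, compiled by Hinton's students Alex Krizhevsky and Ilya Sutskever. It contains RGB color images in 10 classes: airplane, automobile, bird, cat, deer, dog, frog, horse, ship, and truck. Each image is 32×32 pixels, and the dataset has 50,000 training images and 10,000 test images.

Keras.applications:

Provides many deep learning models with pretrained weights.

livelossplot:

A library for plotting loss curves that can draw training progress live while the model trains.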
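The training script below turns each integer class label into a 10-element one-hot vector with `to_categorical`. As a rough illustration of what that encoding produces, here is a plain-Python sketch. `one_hot` is a hypothetical stand-in, not the Keras function, and the label order is the one used by the `labels` list later in this post.

```python
LABELS = ["airplane", "automobile", "bird", "cat", "deer",
          "dog", "frog", "horse", "ship", "truck"]


def one_hot(label, num_classes=10):
    """Return a list with 1.0 at index `label` and 0.0 elsewhere."""
    vector = [0.0] * num_classes
    vector[label] = 1.0
    return vector


cat_index = LABELS.index("cat")
print(cat_index, one_hot(cat_index))
```

The `categorical_crossentropy` loss expects targets in this form, one row per image.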

from keras.datasets import cifar10
from keras.utils import to_categorical
from keras import applications
from keras.models import Sequential, Model
from keras.layers import Dense, Dropout, Flatten
from keras import optimizers

# Load the CIFAR-10 dataset and scale pixel values to [0, 1]
(x_train, y_train), (x_test, y_test) = cifar10.load_data()
x_train = x_train.astype('float32') / 255
x_test = x_test.astype('float32') / 255
y_train = to_categorical(y_train, 10)  # one-hot encode the 10 classes
y_test = to_categorical(y_test, 10)

# Load the VGG16 model pretrained by Keras, without its classifier head
vgg_model = applications.VGG16(include_top=False, input_shape=(32, 32, 3))
for layer in vgg_model.layers[:15]:  # freeze the first 15 layers
    layer.trainable = False
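To make it clearer which part of the network `layers[:15]` covers, the sketch below lists the layers of `VGG16(include_top=False)` in order and applies the same slice. The block names follow the Keras naming scheme. The input layer's name differs between Keras versions, so `"input"` here is only a placeholder.

```python
VGG16_NO_TOP_LAYERS = [
    "input",  # placeholder name for the InputLayer
    "block1_conv1", "block1_conv2", "block1_pool",
    "block2_conv1", "block2_conv2", "block2_pool",
    "block3_conv1", "block3_conv2", "block3_conv3", "block3_pool",
    "block4_conv1", "block4_conv2", "block4_conv3", "block4_pool",
    "block5_conv1", "block5_conv2", "block5_conv3", "block5_pool",
]

frozen = VGG16_NO_TOP_LAYERS[:15]      # same slice as in the training script
trainable = VGG16_NO_TOP_LAYERS[15:]   # layers that will be fine-tuned

print("last frozen layer:", frozen[-1])
print("fine-tuned layers:", trainable)
```

So blocks 1 to 4 keep their pretrained weights, and only block 5 is fine-tuned together with the new classifier layers.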

# Define the new layers for transfer learning
top_model = Sequential()
top_model.add(Flatten(input_shape=vgg_model.output_shape[1:]))
top_model.add(Dense(32, activation='relu'))
top_model.add(Dropout(0.5))
top_model.add(Dense(10, activation='softmax'))

# Combine the pretrained model and the new layers into one model
model = Model(
    inputs=vgg_model.input,
    outputs=top_model(vgg_model.output))
model.compile(
    loss='categorical_crossentropy',
    optimizer=optimizers.Adam(learning_rate=0.0001),
    metrics=['accuracy'])

# Plot the loss while training
from livelossplot import PlotLossesKeras
plotlosses = PlotLossesKeras()
# callbacks expects a list of callback objects
model.fit(x_train, y_train, epochs=5, verbose=1, validation_split=0.1, batch_size=32, callbacks=[plotlosses])
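With `validation_split=0.1`, `fit` holds out the last 10% of the training arrays for validation, taking them before any shuffling. The hypothetical helper below works out the resulting sample counts and the number of batches per epoch for the settings used here.

```python
import math


def fit_split(num_samples, validation_split, batch_size):
    """Return (training samples, validation samples, batches per epoch)."""
    num_train = math.floor(num_samples * (1 - validation_split))
    num_val = num_samples - num_train
    steps_per_epoch = math.ceil(num_train / batch_size)
    return num_train, num_val, steps_per_epoch


print(fit_split(50000, 0.1, 32))
```

For CIFAR-10 this means 45,000 images are used for training and 5,000 for validation, and the progress bar counts 1,407 batches per epoch.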

Loss and classification accuracy during training:

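# Loss and accuracy on the test set
test_loss, test_acc = model.evaluate(x_test, y_test)
print('test_loss:{:.2} test_acc:{}%'.format(test_loss, test_acc * 100))

# Save the model (create the output directory first if it is missing)
import os
os.makedirs('trained', exist_ok=True)
model.save('trained/cifar10.h5')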

Predicting a new image: recognizing a cat

Use the trained model to classify a photo of a cat:

from keras.models import load_model
from keras.utils import load_img, img_to_array
from PIL import Image
import matplotlib.pyplot as plt
import numpy as np

# Load the model
model = load_model("trained/cifar10.h5")

# Preprocess the image
path = 'data/my_cat.jpg'
img_height, img_width = 32, 32
x = load_img(path, target_size=(img_height, img_width))
x = img_to_array(x) / 255.0  # same [0, 1] scaling as the training data
# print(x.shape)  # (32, 32, 3)
x = x[None]  # add a leading batch dimension
# print(x.shape)  # (1, 32, 32, 3)

# Predict
y = model.predict(x)
labels = ["airplane", "automobile", "bird", "cat", "deer", "dog", "frog", "horse", "ship", "truck"]
# Softmax outputs are rarely exactly 1, so pick the most probable class
result = np.argmax(y, axis=1)
print("This is a {}".format(labels[result[0]]))

img = Image.open(path)
# img.show()  # would open the system image viewer
plt.figure(dpi=120)
plt.imshow(img)
plt.show()

My cat was recognized successfully!
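The model's output for one image is a softmax probability vector over the ten classes, so the predicted class is the index of the largest entry. A minimal plain-Python sketch of that step follows. The probabilities are made up for illustration and are not output from the trained model.

```python
LABELS = ["airplane", "automobile", "bird", "cat", "deer",
          "dog", "frog", "horse", "ship", "truck"]


def predicted_label(probabilities, labels=LABELS):
    """Return the label with the highest probability and that probability."""
    best = max(range(len(probabilities)), key=lambda i: probabilities[i])
    return labels[best], probabilities[best]


# Hypothetical softmax output for a single image
example = [0.01, 0.02, 0.05, 0.80, 0.02, 0.06, 0.01, 0.01, 0.01, 0.01]
print(predicted_label(example))
```

Returning the probability alongside the label also shows how confident the model is, which helps when a photo looks nothing like the training classes.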

Copyright belongs to the original author, skycol. If this content infringes your rights, please contact us and we will remove it.