CDH 6.2.1: Ranger and Atlas Integration Manual

Overview

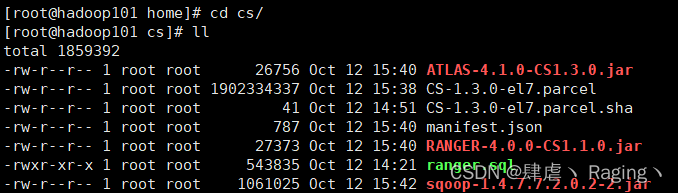

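This document describes how to install and configure Ranger and Atlas on CDH 6.2.1, as a reference for the people who operate the cluster. Installation package location: /root/productRelease/PDC/V2.0.0/数据中台/组件/lib/中台安装包/cs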

Configuration information

Software versions

| Software | Version |
| --- | --- |
| CDH | CDH6.2.1 |
| OS | CentOS 7.5 |

Access addresses

| Proxy access address | Notes |
| --- | --- |
|  | CM management console, available after installation |

Servers

| IP | Hostname |
| --- | --- |
| 10.10.1.9 | wyg01.xxxx.com |
| 10.10.1.8 | wyg02.xxxx.com |
| 10.10.1.7 | wyg03.xxxx.com |

Preparation

Initialize the metadata database

Ranger metadata database

- In MySQL, create the database ranger and the user rangeradmin with the password 2wsx#EDC

mysql -uroot -p2wsx#EDC

create database ranger DEFAULT CHARACTER SET utf8;

grant all privileges on ranger.* to 'rangeradmin'@'%' identified by '2wsx#EDC';

SET GLOBAL innodb_file_per_table = ON,

innodb_file_format = Barracuda,

innodb_large_prefix = ON;

FLUSH PRIVILEGES;

- Import the base data

use ranger;

source /home/cs/ranger.sql
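The database preparation above can also be scripted. The following is a minimal sketch rather than part of the original procedure: the function name `ranger_db_sql` and its parameters are hypothetical, and `IF NOT EXISTS` is added so the script can be re-run. The remaining statements are the ones shown above. Note that `innodb_file_format`, `innodb_large_prefix`, and `GRANT ... IDENTIFIED BY` exist in MySQL 5.7 but were removed in MySQL 8.0, so the sketch assumes a 5.x server.

```shell
#!/bin/sh
# Print the SQL that prepares the Ranger metadata database.
# Arguments: database name, user name, password (hypothetical helper).
ranger_db_sql() {
    db=$1
    user=$2
    pass=$3
    cat <<EOF
CREATE DATABASE IF NOT EXISTS ${db} DEFAULT CHARACTER SET utf8;
GRANT ALL PRIVILEGES ON ${db}.* TO '${user}'@'%' IDENTIFIED BY '${pass}';
SET GLOBAL innodb_file_per_table = ON,
           innodb_file_format = Barracuda,
           innodb_large_prefix = ON;
FLUSH PRIVILEGES;
EOF
}

# Print the statements so they can be reviewed before they are executed.
ranger_db_sql ranger rangeradmin '2wsx#EDC'
```

To apply the statements, the output could be piped into the client, for example `ranger_db_sql ranger rangeradmin '2wsx#EDC' | mysql -uroot -p`, followed by the ranger.sql import shown above.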

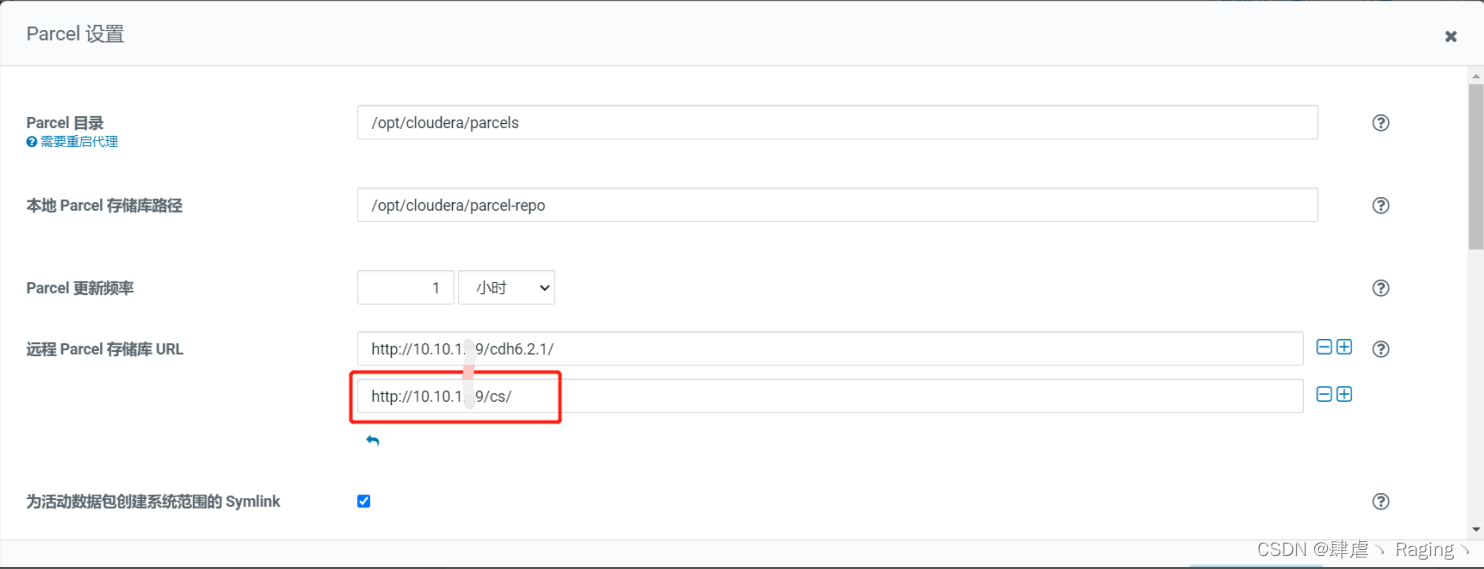

Configure the Ranger and Atlas parcel repository

- Create the parcel repository

ln -s /home/cs/ /var/www/html/

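- In CM, configure the Ranger and Atlas parcel repository and distribute the parcel to the nodes

The CS1.3.0 parcel then appears in the parcel list.

Click Download --> Distribute --> Activate (in this environment the parcel is already activated).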

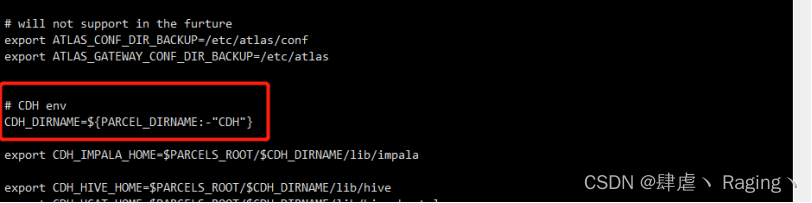
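Before adding the repository in CM, it can help to confirm that the linked directory is reachable over HTTP. The sketch below assumes that a web server on the host serves /var/www/html at the site root, so that the symlink created above is published as /cs/, and that the directory contains the manifest.json file a parcel repository normally carries. The original does not state either point. The helper names and the host 10.10.1.9 are illustrative assumptions.

```shell
#!/bin/sh
# Build the Remote Parcel Repository URL for a directory linked under the
# web server's document root (assumed here to be /var/www/html).
parcel_repo_url() {
    host=$1
    dir=$2
    printf 'http://%s/%s/\n' "$host" "$dir"
}

# Return 0 if the repository's manifest.json can be fetched (requires curl).
# Not called here because it needs the web server to be running.
check_parcel_repo() {
    curl -fsS -o /dev/null "$(parcel_repo_url "$1" "$2")manifest.json"
}

# The host below is an assumption; use the node that holds /home/cs.
parcel_repo_url 10.10.1.9 cs
```

The printed URL is the value that would be entered under Remote Parcel Repository URLs in the CM parcel settings.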

Modify the environment configuration file (all nodes)

This change is needed on CDH 5 only; CDH 6 requires no change.

vi /opt/cloudera/parcels/CS-1.3.0/meta/cs_env.sh

#CDH env

CDH_DIRNAME=${PARCEL_DIRNAME:-"CDH"}

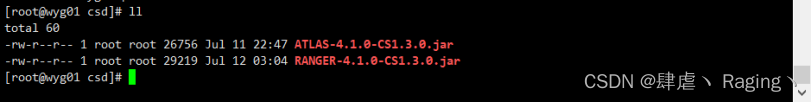

Place the Ranger and Atlas CSD files on the cm-server host and restart cm-server

cp /home/cs/RANGER-4.1.0-CS1.3.0.jar /opt/cloudera/csd/

cp /home/cs/ATLAS-4.1.0-CS1.3.0.jar /opt/cloudera/csd/

Restart cm-server:

systemctl restart cloudera-scm-server

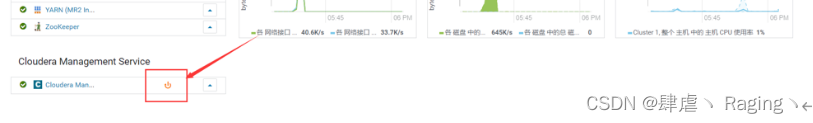

Once Cloudera Manager Server has restarted, log in to the CM page at http://10.10.1.9:7180/ (admin/admin).
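Cloudera Manager Server can take a while to come back after the restart. One way to wait for it, sketched below, is to poll the console URL until it answers. The helper `wait_for_http` and the attempt and delay values are assumptions made for illustration, and the sketch requires curl.

```shell
#!/bin/sh
# Poll a URL until it answers successfully or the attempts run out.
# Arguments: URL, number of attempts, seconds to sleep between attempts.
wait_for_http() {
    url=$1
    tries=$2
    delay=$3
    while [ "$tries" -gt 0 ]; do
        if curl -fs -o /dev/null --max-time 5 "$url"; then
            return 0
        fi
        tries=$((tries - 1))
        sleep "$delay"
    done
    return 1
}

# Example (not run here): wait up to about five minutes for the CM console.
# wait_for_http http://10.10.1.9:7180/ 30 10 && echo "CM is up"
```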

Ranger integration steps

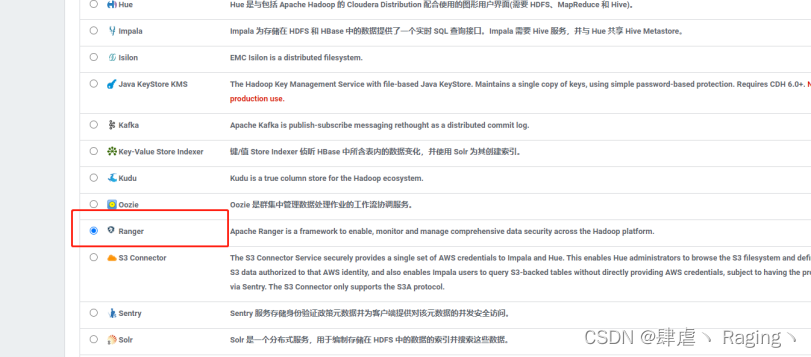

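Installation through the UI

- Log in to the CM page at http://10.10.1.9:7180/ (admin/admin), open Cloudera Manager, and go to the Ranger installation page

Select the nodes on which to install the service.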

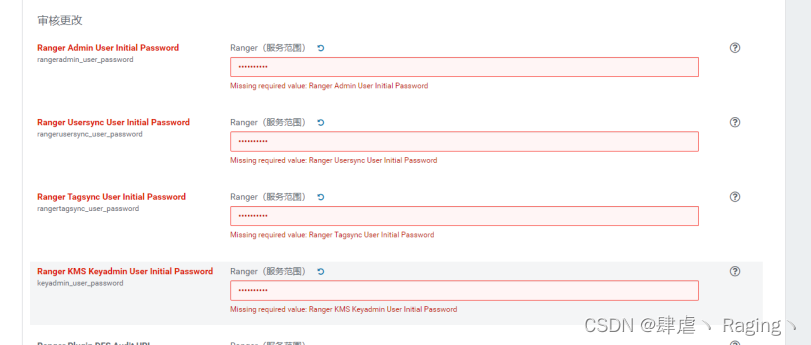

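Set the internal passwords.

In this example they are set to ranger@123.

Enter the database details (user name, password, database name, and database address).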

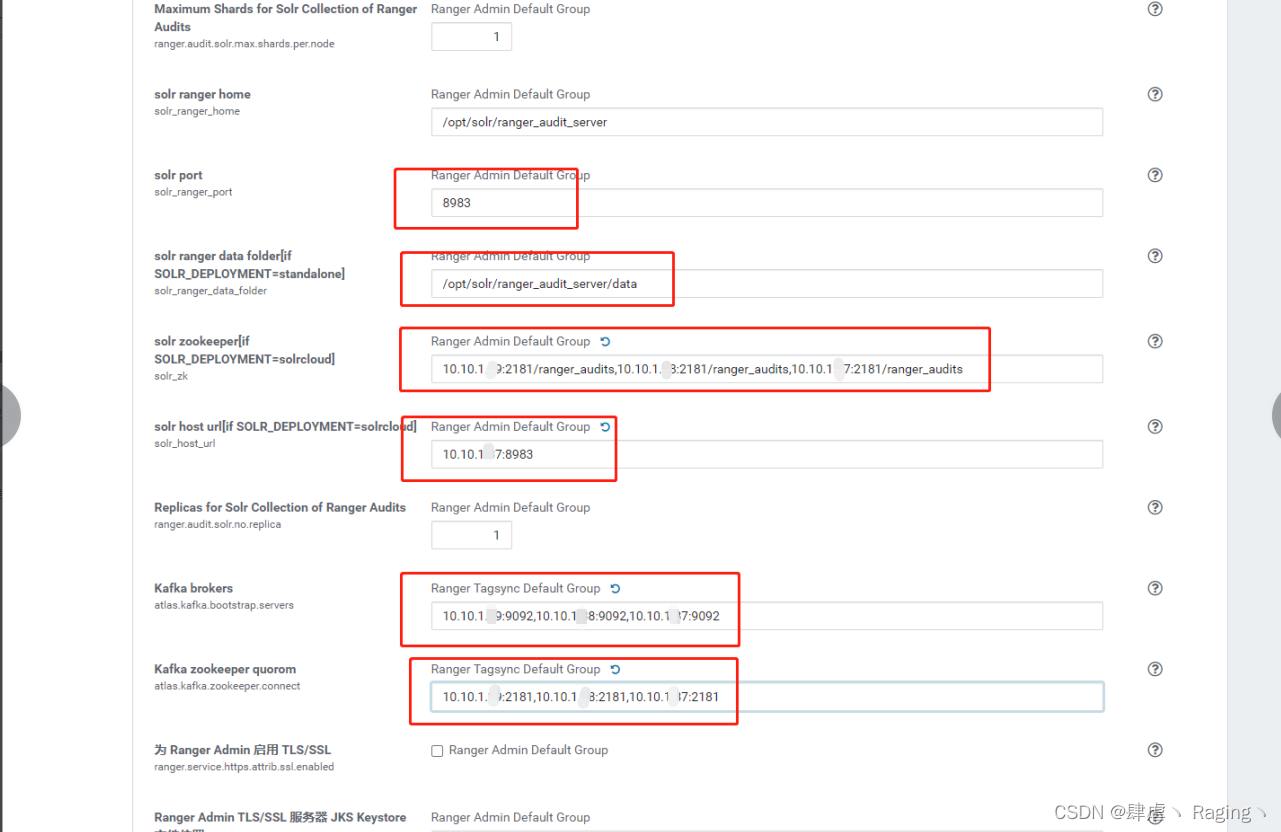

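Enter the Solr port used in CDH (8983), the SolrCloud ZooKeeper address, and solr_host_url (the address of the Solr server), then configure the Kafka and ZooKeeper addresses:

- SolrCloud ZooKeeper address: 10.10.1.9:2181/ranger_audits,10.10.1.8:2181/ranger_audits,10.10.1.7:2181/ranger_audits

- solr_host_url: 10.10.1.7:8983

- Kafka address: 10.10.1.9:9092,10.10.1.8:9092,10.10.1.7:9092

- ZooKeeper address: 10.10.1.9:2181,10.10.1.8:2181,10.10.1.7:2181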
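The comma-separated address lists above all follow one pattern: host, port, and an optional suffix, joined with commas. As a small sketch for building them without typing errors, the hypothetical helper `join_hosts` below reproduces the three lists from the node IPs in the server table.

```shell
#!/bin/sh
# Join hosts into "host:port<suffix>,host:port<suffix>,...".
# Arguments: port, per-host suffix (may be empty), then one or more hosts.
join_hosts() {
    port=$1
    suffix=$2
    shift 2
    out=""
    for h in "$@"; do
        # Prepend a comma only when something has already been collected.
        out="${out:+$out,}${h}:${port}${suffix}"
    done
    printf '%s\n' "$out"
}

join_hosts 2181 /ranger_audits 10.10.1.9 10.10.1.8 10.10.1.7   # SolrCloud ZooKeeper
join_hosts 9092 "" 10.10.1.9 10.10.1.8 10.10.1.7               # Kafka
join_hosts 2181 "" 10.10.1.9 10.10.1.8 10.10.1.7               # ZooKeeper
```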

Then click Continue through the remaining pages to finish the installation.

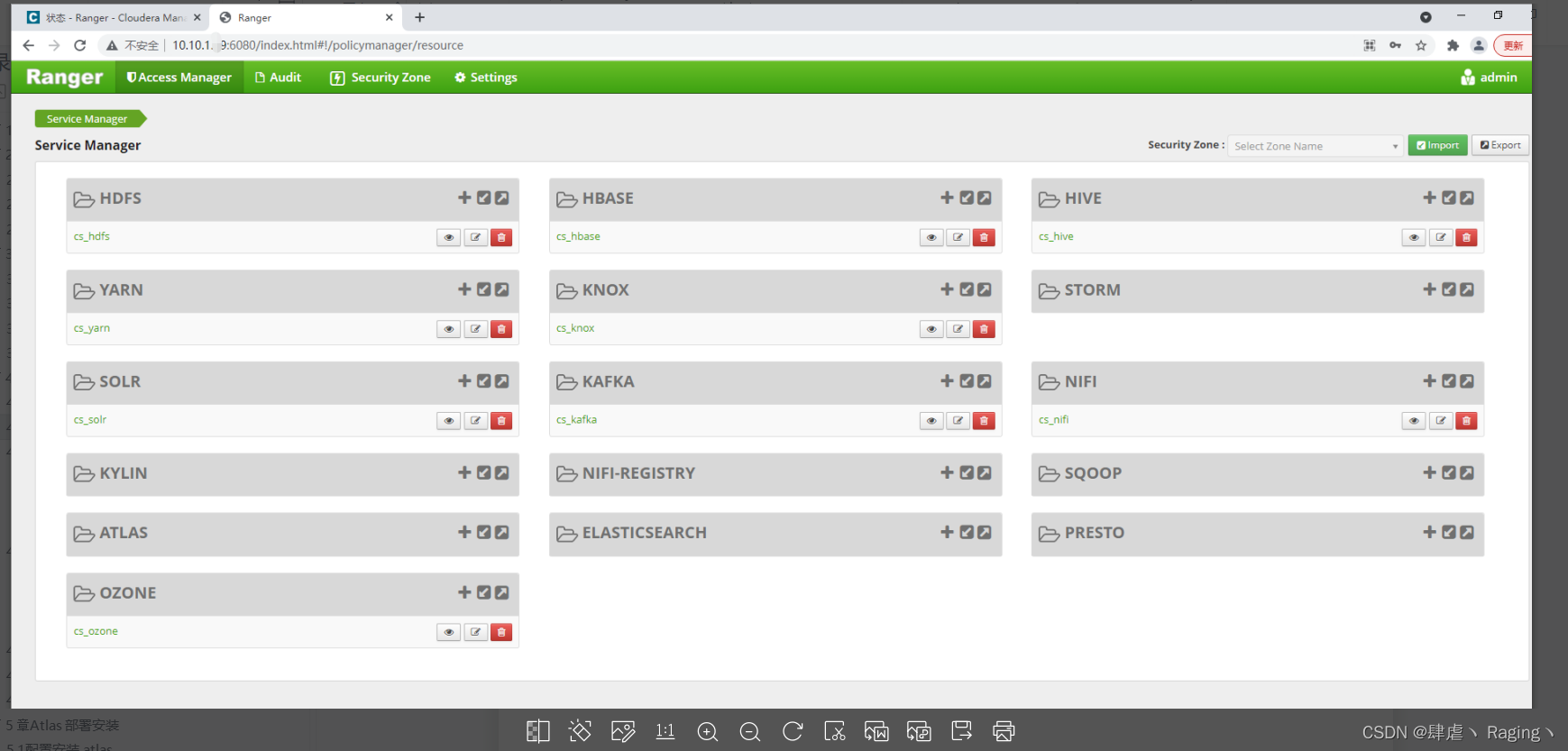

Open the Ranger web UI to check that it works:

http://10.10.1.9:6080 (user name/password: admin/ranger@123)

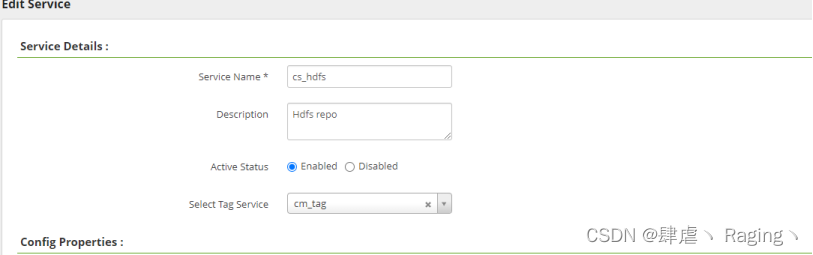

Configure and install the plugins

HDFS

vi /opt/cloudera/parcels/CS/lib/ranger-hdfs-plugin/install.properties

Master node:

#Tue Sep 28 19:39:20 CST 2021

#POLICY_MGR_URL=http://172.16.0.106:8020/clusterservice

#REPOSITORY_NAME=pds_hdfs_55

POLICY_MGR_URL=http://wyg01.xxxx.com:6080

REPOSITORY_NAME=cs_hdfs

COMPONENT_INSTALL_DIR_NAME=/opt/cloudera/parcels/CDH/lib/hadoop-hdfs

XAAUDIT.SOLR.ENABLE=true

XAAUDIT.SOLR.URL=http://wyg01.xxxx.com:8983/solr/ranger_audits

XAAUDIT.SOLR.USER=NONE

XAAUDIT.SOLR.PASSWORD=NONE

XAAUDIT.SOLR.ZOOKEEPER=NONE

XAAUDIT.SOLR.FILE_SPOOL_DIR=/var/log/hadoop/hdfs/audit/solr/spool

XAAUDIT.HDFS.ENABLE=false

XAAUDIT.HDFS.HDFS_DIR=hdfs://__REPLACE__NAME_NODE_HOST:8020/ranger/audit

XAAUDIT.HDFS.FILE_SPOOL_DIR=/var/log/hadoop/hdfs/audit/hdfs/spool

XAAUDIT.HDFS.AZURE_ACCOUNTNAME=__REPLACE_AZURE_ACCOUNT_NAME

XAAUDIT.HDFS.AZURE_ACCOUNTKEY=__REPLACE_AZURE_ACCOUNT_KEY

XAAUDIT.HDFS.AZURE_SHELL_KEY_PROVIDER=__REPLACE_AZURE_SHELL_KEY_PROVIDER

XAAUDIT.HDFS.AZURE_ACCOUNTKEY_PROVIDER=__REPLACE_AZURE_ACCOUNT_KEY_PROVIDER

XAAUDIT.HDFS.IS_ENABLED=false

XAAUDIT.HDFS.DESTINATION_DIRECTORY=hdfs://__REPLACE__NAME_NODE_HOST:8020/ranger/audit/%app-type%/%time:yyyyMMdd%

XAAUDIT.HDFS.LOCAL_BUFFER_DIRECTORY=__REPLACE__LOG_DIR/hadoop/%app-type%/audit

XAAUDIT.HDFS.LOCAL_ARCHIVE_DIRECTORY=__REPLACE__LOG_DIR/hadoop/%app-type%/audit/archive

XAAUDIT.HDFS.DESTINTATION_FILE=%hostname%-audit.log

XAAUDIT.HDFS.DESTINTATION_FLUSH_INTERVAL_SECONDS=900

XAAUDIT.HDFS.DESTINTATION_ROLLOVER_INTERVAL_SECONDS=86400

XAAUDIT.HDFS.DESTINTATION_OPEN_RETRY_INTERVAL_SECONDS=60

XAAUDIT.HDFS.LOCAL_BUFFER_FILE=%time:yyyyMMdd-HHmm.ss%.log

XAAUDIT.HDFS.LOCAL_BUFFER_FLUSH_INTERVAL_SECONDS=60

XAAUDIT.HDFS.LOCAL_BUFFER_ROLLOVER_INTERVAL_SECONDS=600

XAAUDIT.HDFS.LOCAL_ARCHIVE_MAX_FILE_COUNT=10

XAAUDIT.SOLR.IS_ENABLED=true

XAAUDIT.SOLR.MAX_QUEUE_SIZE=1

XAAUDIT.SOLR.MAX_FLUSH_INTERVAL_MS=1000

XAAUDIT.SOLR.SOLR_URL=http://wyg01.xxxx.com:8983/solr/ranger_audits

SSL_KEYSTORE_FILE_PATH=/etc/hadoop/conf/ranger-plugin-keystore.jks

SSL_KEYSTORE_PASSWORD=myKeyFilePassword

SSL_TRUSTSTORE_FILE_PATH=/etc/hadoop/conf/ranger-plugin-truststore.jks

SSL_TRUSTSTORE_PASSWORD=changeit

CUSTOM_USER=hdfs

CUSTOM_GROUP=hadoop
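Only a handful of keys in this file differ from the shipped defaults (POLICY_MGR_URL, REPOSITORY_NAME, COMPONENT_INSTALL_DIR_NAME, and the Solr audit settings). As an alternative to editing with vi, the sketch below sets individual keys with sed. The helper `set_prop` is hypothetical, it assumes that values contain neither `|` nor `&`, and the demonstration runs against a throwaway file rather than the real install.properties.

```shell
#!/bin/sh
# Set KEY=VALUE in a properties file: replace the active assignment if the
# key exists, otherwise append it. Commented lines (#KEY=...) are left alone.
# Limitation: values must not contain '|' or '&' (both are special to sed here).
set_prop() {
    file=$1
    key=$2
    value=$3
    if grep -q "^${key}=" "$file"; then
        # '|' is the sed delimiter because the values contain '/'.
        sed "s|^${key}=.*|${key}=${value}|" "$file" > "$file.tmp" && mv "$file.tmp" "$file"
    else
        printf '%s=%s\n' "$key" "$value" >> "$file"
    fi
}

# Demonstration on a temporary file with the same shape as install.properties.
demo=$(mktemp)
printf '%s\n' '#POLICY_MGR_URL=http://old:6080' 'POLICY_MGR_URL=' 'REPOSITORY_NAME=' > "$demo"
set_prop "$demo" POLICY_MGR_URL http://wyg01.xxxx.com:6080
set_prop "$demo" REPOSITORY_NAME cs_hdfs
set_prop "$demo" XAAUDIT.SOLR.ENABLE true
cat "$demo"
```

On a real node the first argument would be /opt/cloudera/parcels/CS/lib/ranger-hdfs-plugin/install.properties.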

Worker nodes:

#Licensed to the Apache Software Foundation (ASF) under one or more

#contributor license agreements. See the NOTICE file distributed with

#this work for additional information regarding copyright ownership.

#The ASF licenses this file to You under the Apache License, Version 2.0

#(the "License"); you may not use this file except in compliance with

#the License. You may obtain a copy of the License at

#http://www.apache.org/licenses/LICENSE-2.0

#Unless required by applicable law or agreed to in writing, software

#distributed under the License is distributed on an "AS IS" BASIS,

#WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

#See the License for the specific language governing permissions and

#limitations under the License.

#Location of Policy Manager URL

#Example:

#POLICY_MGR_URL=http://pds-devcdh-node2.paradev.com:6080

POLICY_MGR_URL=http://wyg01.xxxx.com:6080

#This is the repository name created within policy manager

#Example:

#REPOSITORY_NAME=cs_hdfs

REPOSITORY_NAME=cs_hdfs

#Set hadoop home when hadoop program and Ranger HDFS Plugin are not in the

#same path.

COMPONENT_INSTALL_DIR_NAME=/opt/cloudera/parcels/CDH/lib/hadoop-hdfs

#AUDIT configuration with V3 properties

#Enable audit logs to Solr

#Example

#XAAUDIT.SOLR.ENABLE=true

#XAAUDIT.SOLR.URL=http://pds-devcdh-node2.paradev.com:8983/solr/ranger_audits

#XAAUDIT.SOLR.ZOOKEEPER=

#XAAUDIT.SOLR.FILE_SPOOL_DIR=/var/log/hadoop/hdfs/audit/solr/spool

XAAUDIT.SOLR.ENABLE=true

XAAUDIT.SOLR.URL=http://wyg01.xxxx.com:8983/solr/ranger_audits

XAAUDIT.SOLR.USER=NONE

XAAUDIT.SOLR.PASSWORD=NONE

XAAUDIT.SOLR.ZOOKEEPER=NONE

XAAUDIT.SOLR.FILE_SPOOL_DIR=/var/log/hadoop/hdfs/audit/solr/spool

#Enable audit logs to HDFS

#Example

#XAAUDIT.HDFS.ENABLE=true

#XAAUDIT.HDFS.HDFS_DIR=hdfs://node-1.example.com:8020/ranger/audit

#XAAUDIT.HDFS.FILE_SPOOL_DIR=/var/log/hadoop/hdfs/audit/hdfs/spool

#If using Azure Blob Storage

#XAAUDIT.HDFS.HDFS_DIR=wasb[s]://<containername>@<accountname>.blob.core.windows.net/<path>

#XAAUDIT.HDFS.HDFS_DIR=wasb://ranger_audit_container@my-azure-account.blob.core.windows.net/ranger/audit

XAAUDIT.HDFS.ENABLE=false

XAAUDIT.HDFS.HDFS_DIR=hdfs://__REPLACE__NAME_NODE_HOST:8020/ranger/audit

XAAUDIT.HDFS.FILE_SPOOL_DIR=/var/log/hadoop/hdfs/audit/hdfs/spool

#The following additional properties are needed when auditing to Azure Blob Storage via HDFS

#Get these values from your /etc/hadoop/conf/core-site.xml

#XAAUDIT.HDFS.HDFS_DIR=wasb[s]://<containername>@<accountname>.blob.core.windows.net/<path>

XAAUDIT.HDFS.AZURE_ACCOUNTNAME=__REPLACE_AZURE_ACCOUNT_NAME

XAAUDIT.HDFS.AZURE_ACCOUNTKEY=__REPLACE_AZURE_ACCOUNT_KEY

XAAUDIT.HDFS.AZURE_SHELL_KEY_PROVIDER=__REPLACE_AZURE_SHELL_KEY_PROVIDER

XAAUDIT.HDFS.AZURE_ACCOUNTKEY_PROVIDER=__REPLACE_AZURE_ACCOUNT_KEY_PROVIDER

#End of V3 properties

#Audit to HDFS Configuration

#If XAAUDIT.HDFS.IS_ENABLED is set to true, please replace tokens

#that start with __REPLACE__ with appropriate values

#XAAUDIT.HDFS.IS_ENABLED=true

#XAAUDIT.HDFS.DESTINATION_DIRECTORY=hdfs://__REPLACE__NAME_NODE_HOST:8020/ranger/audit/%app-type%/%time:yyyyMMdd%

#XAAUDIT.HDFS.LOCAL_BUFFER_DIRECTORY=__REPLACE__LOG_DIR/hadoop/%app-type%/audit

#XAAUDIT.HDFS.LOCAL_ARCHIVE_DIRECTORY=__REPLACE__LOG_DIR/hadoop/%app-type%/audit/archive

#Example:

#XAAUDIT.HDFS.IS_ENABLED=true

#XAAUDIT.HDFS.DESTINATION_DIRECTORY=hdfs://namenode.example.com:8020/ranger/audit/%app-type%/%time:yyyyMMdd%

#XAAUDIT.HDFS.LOCAL_BUFFER_DIRECTORY=/var/log/hadoop/%app-type%/audit

#XAAUDIT.HDFS.LOCAL_ARCHIVE_DIRECTORY=/var/log/hadoop/%app-type%/audit/archive

XAAUDIT.HDFS.IS_ENABLED=false

XAAUDIT.HDFS.DESTINATION_DIRECTORY=hdfs://__REPLACE__NAME_NODE_HOST:8020/ranger/audit/%app-type%/%time:yyyyMMdd%

XAAUDIT.HDFS.LOCAL_BUFFER_DIRECTORY=__REPLACE__LOG_DIR/hadoop/%app-type%/audit

XAAUDIT.HDFS.LOCAL_ARCHIVE_DIRECTORY=__REPLACE__LOG_DIR/hadoop/%app-type%/audit/archive

XAAUDIT.HDFS.DESTINTATION_FILE=%hostname%-audit.log

XAAUDIT.HDFS.DESTINTATION_FLUSH_INTERVAL_SECONDS=900

XAAUDIT.HDFS.DESTINTATION_ROLLOVER_INTERVAL_SECONDS=86400

XAAUDIT.HDFS.DESTINTATION_OPEN_RETRY_INTERVAL_SECONDS=60

XAAUDIT.HDFS.LOCAL_BUFFER_FILE=%time:yyyyMMdd-HHmm.ss%.log

XAAUDIT.HDFS.LOCAL_BUFFER_FLUSH_INTERVAL_SECONDS=60

XAAUDIT.HDFS.LOCAL_BUFFER_ROLLOVER_INTERVAL_SECONDS=600

XAAUDIT.HDFS.LOCAL_ARCHIVE_MAX_FILE_COUNT=10

#Solr Audit Provider

XAAUDIT.SOLR.IS_ENABLED=true

XAAUDIT.SOLR.MAX_QUEUE_SIZE=1

XAAUDIT.SOLR.MAX_FLUSH_INTERVAL_MS=1000

XAAUDIT.SOLR.SOLR_URL=http://wyg01.xxxx.com:8983/solr/ranger_audits

#SSL Client Certificate Information

#Example:

#SSL_KEYSTORE_FILE_PATH=/etc/hadoop/conf/ranger-plugin-keystore.jks

#SSL_KEYSTORE_PASSWORD=none

#SSL_TRUSTSTORE_FILE_PATH=/etc/hadoop/conf/ranger-plugin-truststore.jks

#SSL_TRUSTSTORE_PASSWORD=none

#If you do not need SSL between the agent and the security admin tool, leave these sample values as they are.

SSL_KEYSTORE_FILE_PATH=/etc/hadoop/conf/ranger-plugin-keystore.jks

SSL_KEYSTORE_PASSWORD=myKeyFilePassword

SSL_TRUSTSTORE_FILE_PATH=/etc/hadoop/conf/ranger-plugin-truststore.jks

SSL_TRUSTSTORE_PASSWORD=changeit

#Custom component user

#CUSTOM_COMPONENT_USER=<custom-user>

#keep blank if component user is default

CUSTOM_USER=hdfs

#Custom component group

#CUSTOM_COMPONENT_GROUP=<custom-group>

#keep blank if component group is default

CUSTOM_GROUP=hadoop
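When editing install.properties it is easy to leave an example line uncommented, so that the same key ends up assigned twice and the effective value depends on how the file is parsed. A quick check is sketched below. The helper `duplicate_keys` is hypothetical, and the demonstration uses a throwaway file instead of the real one.

```shell
#!/bin/sh
# List keys that are assigned more than once in a properties file,
# ignoring comment lines (starting with '#') and lines without '='.
duplicate_keys() {
    grep -v '^[[:space:]]*#' "$1" | grep '=' | cut -d= -f1 | sort | uniq -d
}

# Demonstration: the second and third lines assign the same key.
sample=$(mktemp)
printf '%s\n' \
    '#XAAUDIT.HDFS.IS_ENABLED=true' \
    'XAAUDIT.HDFS.IS_ENABLED=true' \
    'XAAUDIT.HDFS.IS_ENABLED=false' \
    'CUSTOM_USER=hdfs' > "$sample"
duplicate_keys "$sample"
```

An empty result means that every active key is assigned exactly once. On a node, the argument would be the plugin's install.properties path shown earlier.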

HBase

vi /opt/cloudera/parcels/CS/lib/ranger-hbase-plugin/install.properties

Hive

vi /opt/cloudera/parcels/CS/lib/ranger-hive-plugin/install.properties

Configure the basic permission policies

HDFS policy configuration

HBase policy configuration

Hive policy configuration

Add the HDFS configuration

- Add the following property to the NameNode Advanced Configuration Snippet (Safety Valve) for hdfs-site.xml

dfs.namenode.inode.attributes.provider.class

org.apache.ranger.authorization.hadoop.RangerHdfsAuthorizer

- Run the following on the NameNode host

sh /opt/cloudera/parcels/CS/lib/ranger-hdfs-plugin/enable-hdfs-plugin.sh

- Restart HDFS to apply the configuration
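The name and value pair added to the NameNode safety valve above corresponds to one Hadoop `<property>` element. Assuming the safety valve is edited in its XML view, the sketch below prints that element so it can be pasted in. The helper `hadoop_property` is hypothetical and performs no XML escaping, which is sufficient for class names like the one used here.

```shell
#!/bin/sh
# Print one Hadoop-style <property> element.
# Arguments: property name, property value (no XML escaping is applied).
hadoop_property() {
    cat <<EOF
<property>
  <name>$1</name>
  <value>$2</value>
</property>
EOF
}

hadoop_property dfs.namenode.inode.attributes.provider.class \
    org.apache.ranger.authorization.hadoop.RangerHdfsAuthorizer
```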

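Add the HBase configuration

Adjust the HBase configuration parameters

Master Advanced Configuration Snippet (Safety Valve) for hbase-site.xml

If Atlas will be installed later, use the following configuration (adding this configuration last is recommended):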

hbase.coprocessor.master.classes

org.apache.atlas.hbase.hook.HBaseAtlasCoprocessor,org.apache.ranger.authorization.hbase.RangerAuthorizationCoprocessor

RegionServer Advanced Configuration Snippet (Safety Valve) for hbase-site.xml

hbase.coprocessor.region.classes

org.apache.ranger.authorization.hbase.RangerAuthorizationCoprocessor

If Atlas is not installed, configure the HBase Master and RegionServer as follows:

hbase.coprocessor.master.classes

org.apache.ranger.authorization.hbase.RangerAuthorizationCoprocessor

hbase.coprocessor.region.classes

org.apache.ranger.authorization.hbase.RangerAuthorizationCoprocessor

- Run the following on all HBase nodes

sh /opt/cloudera/parcels/CS/lib/ranger-hbase-plugin/enable-hbase-plugin.sh

- Restart HBase to apply the configuration
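The two HBase variants above differ only in the Master coprocessor list: with Atlas, the Atlas coprocessor is listed before the Ranger one. The sketch below captures that rule. The function names and the yes/no argument are illustrative assumptions, and the class names are the ones given above.

```shell
#!/bin/sh
RANGER_COPROC=org.apache.ranger.authorization.hbase.RangerAuthorizationCoprocessor
ATLAS_COPROC=org.apache.atlas.hbase.hook.HBaseAtlasCoprocessor

# Print the value for hbase.coprocessor.master.classes.
# Argument: "yes" if Atlas is (or will be) installed, anything else otherwise.
master_coprocessors() {
    if [ "$1" = "yes" ]; then
        printf '%s,%s\n' "$ATLAS_COPROC" "$RANGER_COPROC"
    else
        printf '%s\n' "$RANGER_COPROC"
    fi
}

# The RegionServer value is the Ranger coprocessor in both cases.
region_coprocessors() {
    printf '%s\n' "$RANGER_COPROC"
}

echo "hbase.coprocessor.master.classes=$(master_coprocessors yes)"
echo "hbase.coprocessor.region.classes=$(region_coprocessors)"
```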

Add the Hive configuration

Tick the checkbox.

If Hive fails to start with the following error:

Caused by: org.apache.thrift.transport.TTransportException: Unsupported mechanism type PLAIN

edit the Hive environment file hive-env.sh in /opt/cloudera/parcels/CDH/lib/hive/conf/ and comment out or delete the export HIVE_OPTS setting.

Run the following command on the machine where beeline is used:

sh /opt/cloudera/parcels/CS/lib/ranger-hive-plugin/enable-hive-plugin.sh
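The hive-env.sh change described above (commenting out export HIVE_OPTS) can be made with sed instead of by hand. This is a minimal sketch with a hypothetical helper, `comment_out_hive_opts`, which keeps a .bak copy of the file. The demonstration runs on a temporary file, not on the real hive-env.sh.

```shell
#!/bin/sh
# Comment out every active "export HIVE_OPTS" line in the given file,
# keeping a .bak copy of the original next to it.
comment_out_hive_opts() {
    file=$1
    cp "$file" "$file.bak" &&
    sed 's/^\([[:space:]]*\)\(export[[:space:]]\{1,\}HIVE_OPTS\)/\1#\2/' "$file.bak" > "$file"
}

# Demonstration on a throwaway file; the real file is
# /opt/cloudera/parcels/CDH/lib/hive/conf/hive-env.sh.
demo=$(mktemp)
printf '%s\n' 'export HIVE_OPTS="${HIVE_OPTS} -Dfoo=bar"' 'export OTHER=1' > "$demo"
comment_out_hive_opts "$demo"
cat "$demo"
```

Lines that are already commented are left unchanged because the pattern only matches lines that begin with export.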

Atlas deployment and installation

Configure and install Atlas

Log in to the CM page at http://192.168.132.101:7180/ (admin/admin) and open Cloudera Manager.

@1. Add a service.

@2. Install the Atlas service: select the Atlas service nodes, then configure the Kafka and ZooKeeper node addresses.

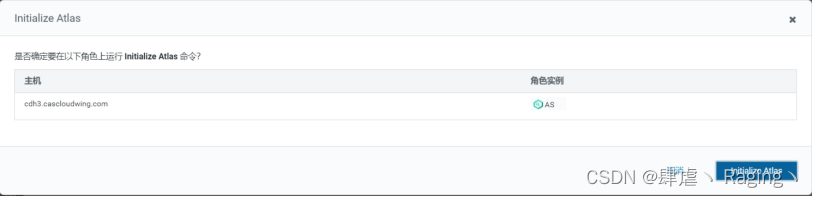

@3. After the installation completes, initialize the metadata.

@4. Start Atlas and import the Hive, HBase, and Kafka metadata.

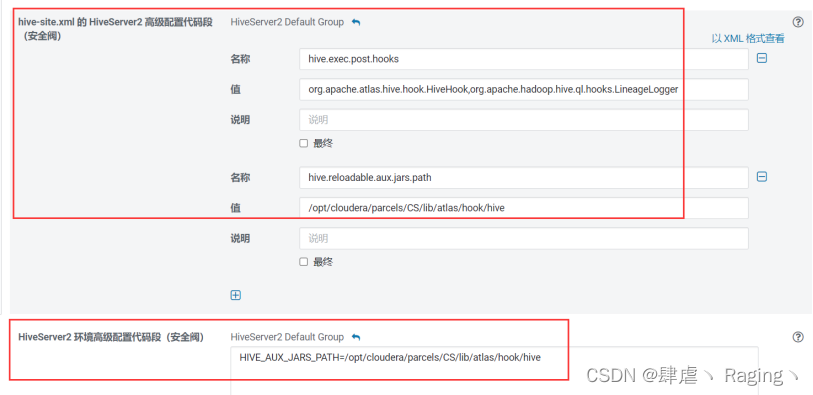

@5. Configure Hive:

hive.exec.post.hooks

org.apache.atlas.hive.hook.HiveHook

/opt/cloudera/parcels/CS/lib/atlas/hook/hive

HIVE_AUX_JARS_PATH=/opt/cloudera/parcels/CS/lib/atlas/hook/hive

hive.exec.post.hooks

org.apache.atlas.hive.hook.HiveHook,org.apache.hadoop.hive.ql.hooks.LineageLogger

hive.reloadable.aux.jars.path

/opt/cloudera/parcels/CS/lib/atlas/hook/hive

HIVE_AUX_JARS_PATH=/opt/cloudera/parcels/CS/lib/atlas/hook/hive

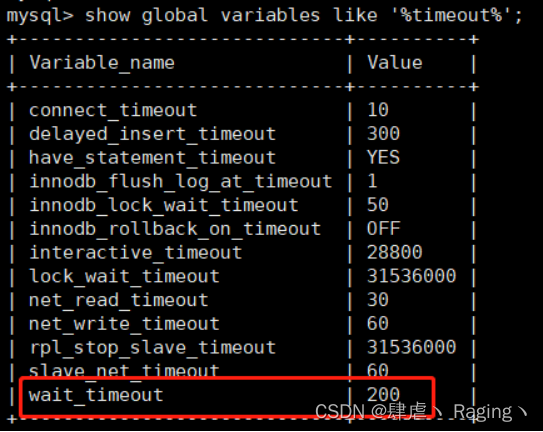

@6. Adjust the MySQL configuration:

show global variables like '%timeout%';

set global wait_timeout = 600;

(Increase the value as needed. The unit is seconds; the maximum is 31536000 on Linux and 2147483 on Windows.)
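A value set with set global applies only to the running server: it is picked up by new connections and is lost when MySQL restarts. To keep it across restarts, the same setting could also be placed in the server's option file. The sketch below prints such a fragment. The helper `wait_timeout_cnf` is hypothetical, and the option file location (commonly /etc/my.cnf on CentOS) is an assumption about this environment.

```shell
#!/bin/sh
# Print a my.cnf fragment that sets wait_timeout for the MySQL server.
# Argument: timeout in seconds (must be a positive integer).
wait_timeout_cnf() {
    case $1 in
        ''|*[!0-9]*)
            echo "wait_timeout must be a positive integer" >&2
            return 1
            ;;
    esac
    if [ "$1" -le 0 ]; then
        echo "wait_timeout must be a positive integer" >&2
        return 1
    fi
    printf '[mysqld]\nwait_timeout = %s\n' "$1"
}

wait_timeout_cnf 600
```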

After the configuration is complete, restart Hive and then restart Atlas.

@7. Log in to the Atlas web UI to check the result:

admin/Admin123

Copyright belongs to the original author, Wangyg0902. If there is any infringement, please contact us for removal.