Version overview

| Service | Version |
| --- | --- |
| centos | 7.9 |
| kubernetes | v1.20.15 |
| helm | v3.10.1 |
| zookeeper | 3.8.1 |
| kafka | 3.4.0 |

I. Add the Helm repository

# Add the Bitnami Helm repository:

helm repo add bitnami https://charts.bitnami.com/bitnami

# List the configured repositories

helm repo list
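Before pulling or installing charts, it can help to refresh the local index so that the chart versions you see match what the repository currently serves. A minimal sketch, assuming the `helm` binary is on the PATH; the function name `ensure_bitnami_repo` is made up for illustration:

```shell
# Hypothetical helper: register the Bitnami repo idempotently and refresh the index.
ensure_bitnami_repo() {
  # --force-update overwrites an existing entry with the same name instead of failing
  helm repo add bitnami https://charts.bitnami.com/bitnami --force-update || return 1
  # download the latest chart index for all configured repositories
  helm repo update || return 1
  # show the ZooKeeper and Kafka charts that are now visible locally
  helm search repo bitnami/zookeeper
  helm search repo bitnami/kafka
}
```

Running `ensure_bitnami_repo` once before the install steps should be enough; it is safe to run again later.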

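II. Install and deploy the cluster

There are two ways to install: online and offline. Online installation is quick and convenient, but settings can only be overridden on the command line rather than edited in the chart's values.yaml. Because the configuration needs to be changed, this article uses the offline method.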

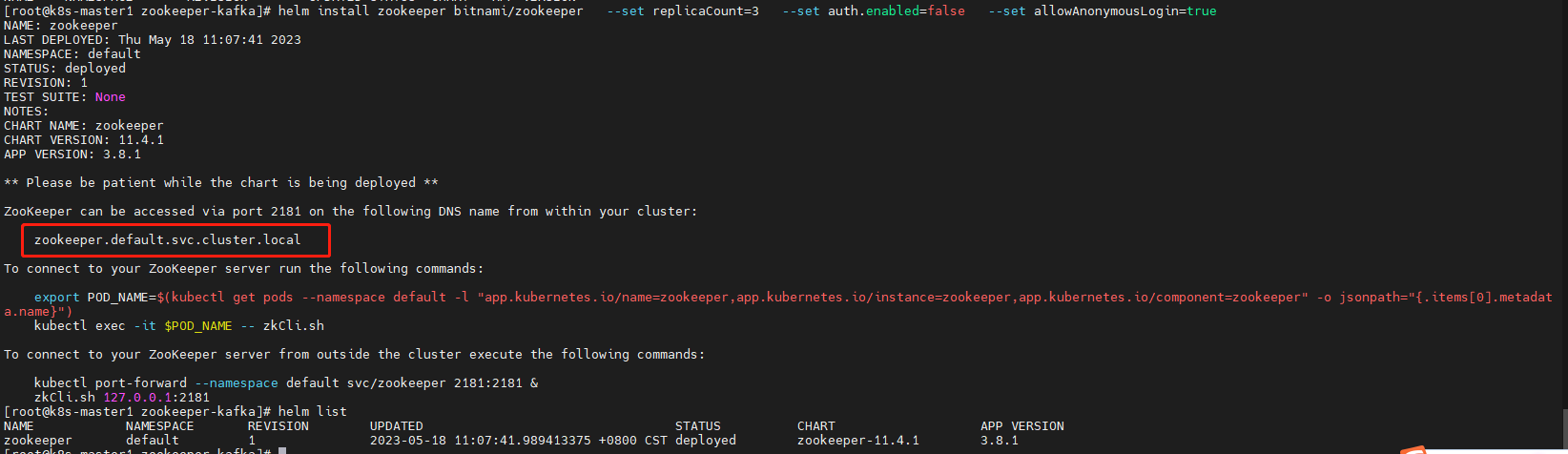

2.1 Online installation of the ZooKeeper + Kafka cluster

1. Deploy the ZooKeeper cluster

# Deploy the ZooKeeper cluster

helm install zookeeper bitnami/zookeeper \
  --set replicaCount=3 \
  --set auth.enabled=false \
  --set allowAnonymousLogin=true

# Check the release

helm list

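Note:

Because this Apache ZooKeeper cluster will not be exposed publicly, authentication is disabled at deployment. For production environments, consider using the production configuration.

Production reference: https://github.com/bitnami/charts/tree/main/bitnami/zookeeper#production-configuration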

2. Deploy the Kafka cluster

# Deploy the Kafka cluster
# Replace ZOOKEEPER-SERVICE-NAME with the Apache ZooKeeper service name obtained at the end of the previous step

helm install kafka bitnami/kafka \
  --set zookeeper.enabled=false \
  --set replicaCount=3 \
  --set externalZookeeper.servers=ZOOKEEPER-SERVICE-NAME
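The value for `externalZookeeper.servers` is the name of the ZooKeeper Service. A minimal sketch for looking it up, assuming the chart puts the common `app.kubernetes.io/instance=<release>` label on its Services (an assumption worth confirming with `kubectl get svc --show-labels`). Both function names are hypothetical, and `zk_fqdn` only formats the standard in-cluster DNS name:

```shell
# List the Services that belong to a Helm release (the label is an assumption, see above).
list_release_services() {
  # $1 = release name, $2 = namespace (defaults to "default")
  kubectl get svc -n "${2:-default}" -l "app.kubernetes.io/instance=$1" -o name
}

# Build the cluster-internal DNS name <service>.<namespace>.svc.cluster.local.
# cluster.local is the default cluster domain; your cluster may use another one.
zk_fqdn() {
  # $1 = Service name, $2 = namespace (defaults to "default")
  printf '%s.%s.svc.cluster.local\n' "$1" "${2:-default}"
}
```

For a Service called `zookeeper` in the default namespace, `zk_fqdn zookeeper` prints `zookeeper.default.svc.cluster.local`. When Kafka runs in the same namespace, the short Service name is normally sufficient.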

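2.2 Offline installation of the ZooKeeper + Kafka cluster

With the online installation, the ZooKeeper pods failed to start and stayed in the Pending state because of a problem with mounting the PVC storage volumes. For that reason, the ZooKeeper and Kafka charts are downloaded here, their configuration files are edited, and the installation is then done offline.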
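If you want to confirm that a Pending pod really is waiting on storage, the following sketch gathers the usual evidence. It assumes `kubectl` is configured for the cluster; `diagnose_pending` is a hypothetical name, and the comments describe what these commands typically show, not guaranteed output:

```shell
# Hypothetical helper: collect the usual evidence for a pod stuck in Pending.
diagnose_pending() {
  # $1 = pod name, $2 = namespace (defaults to "default")
  ns="${2:-default}"
  # the Events section at the end usually names the unbound PersistentVolumeClaim
  kubectl describe pod "$1" -n "$ns"
  # a PVC in status Pending means no PersistentVolume was bound or provisioned
  kubectl get pvc -n "$ns"
  # an empty list, or no class marked "(default)", suggests nothing can provision volumes
  kubectl get storageclass
}
```

For the case described here, the call would be `diagnose_pending zookeeper-0`.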

Here ZooKeeper and Kafka are installed in the default namespace. To install them into a specific namespace, append -n [namespace] to the commands.

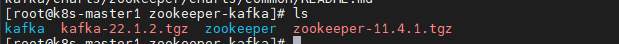

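1. Download the chart archives

# Create a directory for the archives (-p also creates /bsm if it does not exist)

mkdir -p /bsm/zookeeper-kafka && cd /bsm/zookeeper-kafka

# Pull the chart archives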

helm pull bitnami/zookeeper

helm pull bitnami/kafka

# Unpack

tar -zvxf kafka-22.1.2.tgz

tar -zvxf zookeeper-11.4.1.tgz
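`helm pull` without a version fetches whatever is latest, so the archive names on your machine may differ from the ones above. A sketch that pins the chart versions used in this article and lets Helm unpack them, provided those versions are still served by the repository. `--version` and `--untar` are standard `helm pull` flags; `pull_chart` is a made-up name:

```shell
# Hypothetical helper: download one Bitnami chart at a fixed version and unpack it in place.
pull_chart() {
  # $1 = chart name (e.g. kafka), $2 = chart version (e.g. 22.1.2)
  helm pull "bitnami/$1" --version "$2" --untar
}
```

Calling `pull_chart zookeeper 11.4.1` and `pull_chart kafka 22.1.2` inside /bsm/zookeeper-kafka should leave a `zookeeper` and a `kafka` directory there, which makes the separate `tar` step unnecessary.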

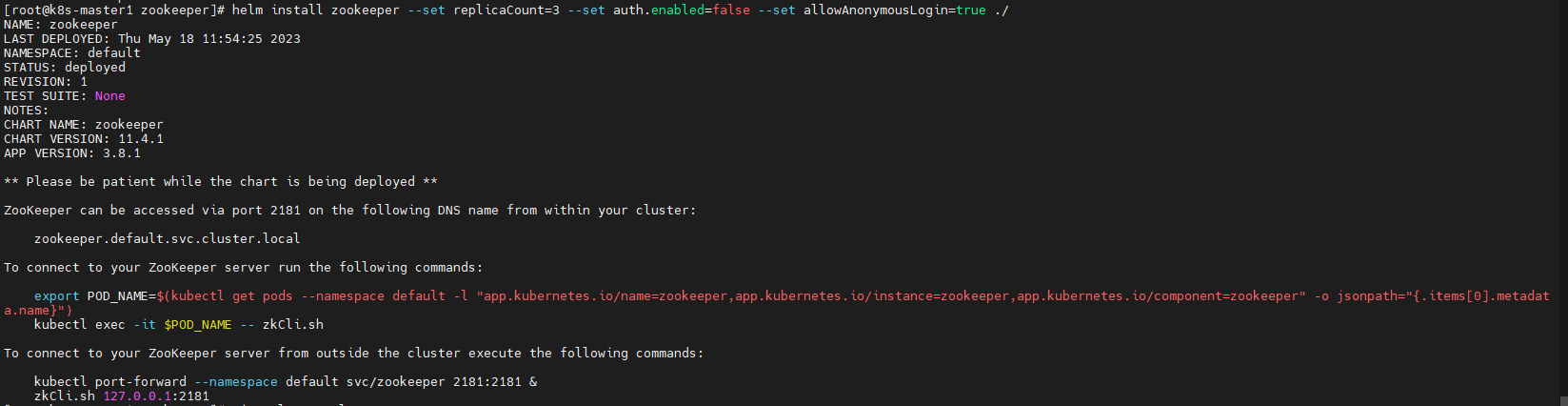

2. Deploy the ZooKeeper cluster

- Edit the configuration file

Main changes to values.yaml: set persistence.enabled to false, and comment out storageClass: "" and existingClaim: "".

cd /bsm/zookeeper-kafka/zookeeper

vim values.yaml

# Edit the configuration file; the main change is the storage class (the Kafka configuration file is similar)

persistence:
  ## @param persistence.enabled Enable ZooKeeper data persistence using PVC. If false, use emptyDir
  ##
  enabled: false  # can be set to false in a test environment
  ## @param persistence.existingClaim Name of an existing PVC to use (only when deploying a single replica)
  ##
  # existingClaim: ""
  ## @param persistence.storageClass PVC Storage Class for ZooKeeper data volume
  ## If defined, storageClassName: <storageClass>
  ## If set to "-", storageClassName: "", which disables dynamic provisioning
  ## If undefined (the default) or set to null, no storageClassName spec is
  ##   set, choosing the default provisioner. (gp2 on AWS, standard on
  ##   GKE, AWS & OpenStack)
  ##
  # storageClass: ""  # commented out; once a StorageClass exists, uncomment this line and enter its name

- Install the ZooKeeper cluster

# Install ZooKeeper

cd /bsm/zookeeper-kafka/zookeeper

helm install zookeeper --set replicaCount=3 --set auth.enabled=false --set allowAnonymousLogin=true ./  # ./ is the path containing the ZooKeeper values.yaml

# Check the pod status:

[root@k8s-master1 rabbitmq]# kubectl get pods

NAME READY STATUS RESTARTS AGE

zookeeper-0 1/1 Running 0 85s

zookeeper-1 1/1 Running 0 85s

zookeeper-2 1/1 Running 0 84s
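The values.yaml edit for ZooKeeper can also be kept in a small override file, so the unpacked chart stays untouched. A sketch, assuming `persistence.enabled` and `replicaCount` are the only settings you need to change, as in this step. The file name `zk-overrides.yaml` and the function name are arbitrary:

```shell
# Hypothetical helper: write a minimal override file for the ZooKeeper chart.
write_zk_overrides() {
  # $1 = output path (defaults to zk-overrides.yaml in the current directory)
  cat > "${1:-zk-overrides.yaml}" <<'EOF'
replicaCount: 3
persistence:
  enabled: false   # emptyDir instead of a PVC; data is lost when a pod is removed
EOF
}
```

After `write_zk_overrides`, the file can be passed with `helm install zookeeper ./ -f zk-overrides.yaml`. Values given with `-f` take precedence over the chart's own values.yaml.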

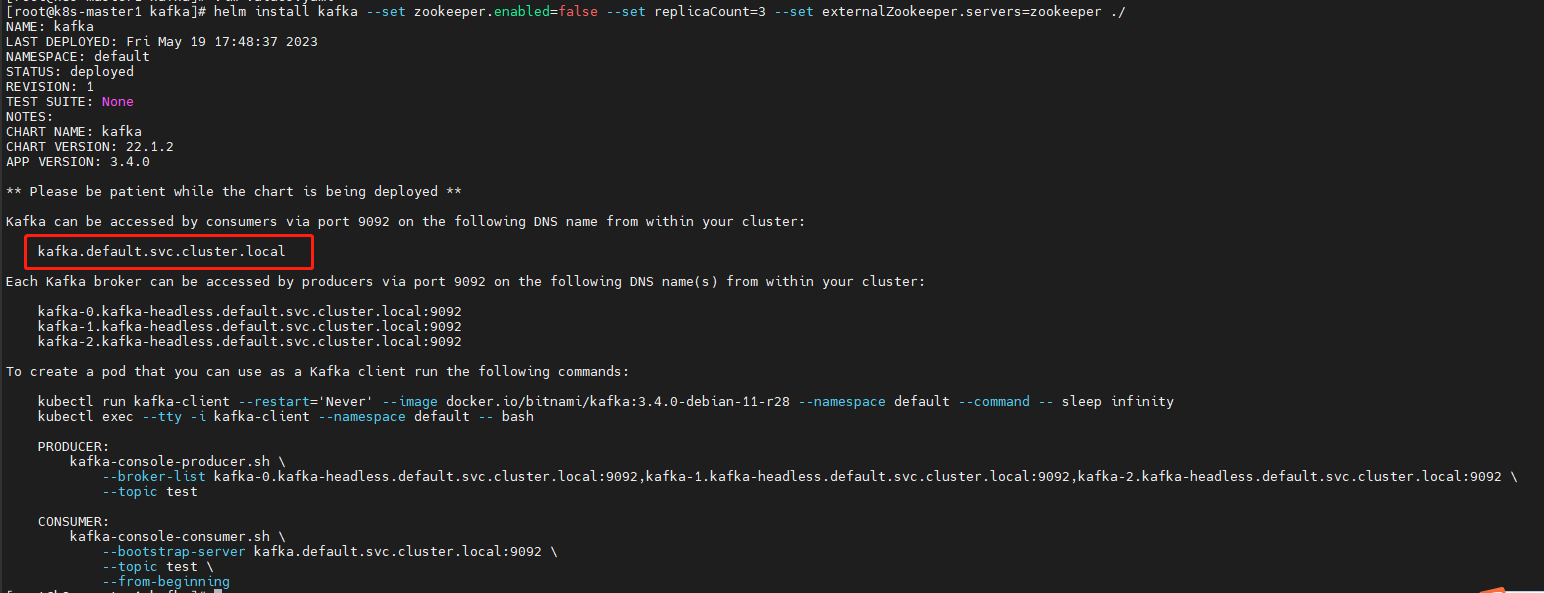

3. Install the Kafka cluster

- Edit the configuration file

Main changes to values.yaml: set persistence.enabled to false; comment out storageClass: "" and existingClaim: ""; set kraft.enabled to false.

cd /bsm/zookeeper-kafka/kafka

vim values.yaml

Change 1:

persistence:
  ## @param persistence.enabled Enable Kafka data persistence using PVC, note that ZooKeeper persistence is unaffected
  ##
  enabled: false  # changed to false
  ## @param persistence.existingClaim A manually managed Persistent Volume and Claim
  ## If defined, PVC must be created manually before volume will be bound
  ## The value is evaluated as a template
  ##
  # existingClaim: ""
  ## @param persistence.storageClass PVC Storage Class for Kafka data volume
  ## If defined, storageClassName: <storageClass>
  ## If set to "-", storageClassName: "", which disables dynamic provisioning
  ## If undefined (the default) or set to null, no storageClassName spec is
  ## set, choosing the default provisioner.
  ##
  # storageClass: ""
  ## @param persistence.accessModes Persistent Volume Access Modes

Change 2:

kraft:
  ## @param kraft.enabled Switch to enable or disable the Kraft mode for Kafka
  ##
  enabled: false  # changed to false
  ## @param kraft.processRoles Roles of your Kafka nodes. Nodes can have 'broker', 'controller' roles or both of them.
  ##
  processRoles: broker,controller

- Install the Kafka cluster

# Install Kafka

cd /bsm/zookeeper-kafka/kafka

# Replace ZOOKEEPER-SERVICE-NAME with the Apache ZooKeeper service name obtained at the end of the previous step
# ./ is the path containing the Kafka values.yaml

helm install kafka --set zookeeper.enabled=false --set replicaCount=3 --set externalZookeeper.servers=ZOOKEEPER-SERVICE-NAME ./

# Check:

[root@k8s-master1 kafka]# kubectl get pods

NAME READY STATUS RESTARTS AGE

kafka-0 1/1 Running 0 3m58s

kafka-1 1/1 Running 0 3m58s

kafka-2 1/1 Running 0 3m58s
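Instead of polling `kubectl get pods` by hand, you can let kubectl block until all replicas are ready. A sketch, assuming the StatefulSets are named after the releases (`zookeeper` and `kafka`) and use the rolling update strategy; `wait_for_statefulset` is a hypothetical name:

```shell
# Hypothetical helper: block until a StatefulSet has all replicas ready, or time out.
wait_for_statefulset() {
  # $1 = StatefulSet name, $2 = namespace (default "default"), $3 = timeout (default 300s)
  kubectl rollout status "statefulset/$1" -n "${2:-default}" --timeout="${3:-300s}"
}
```

`wait_for_statefulset kafka` returns a non-zero exit status if the rollout does not finish within the timeout, which makes it usable in scripts.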

Possible error:

Error: INSTALLATION FAILED: execution error at (kafka/templates/NOTES.txt:314:4):

VALUES VALIDATION:

kafka: Kraft mode

You cannot use Kraft mode and Zookeeper at the same time. They are mutually exclusive. Disable zookeeper in '.Values.zookeeper.enabled' and delete values from '.Values.externalZookeeper.servers' if you want to use Kraft mode

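Cause:

Newer versions of Kafka add a KRaft mode, which conflicts with ZooKeeper; the two cannot be used together. When Kafka is pointed at a specified ZooKeeper, KRaft mode must therefore be disabled.

Solution:

Edit the Kafka configuration file values.yaml and set kraft.enabled to false.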
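The same fix can be applied on the command line instead of editing values.yaml. A sketch that uses only the parameters already mentioned in this article; `install_kafka_with_zk` is a hypothetical wrapper, and the ZooKeeper Service name is whatever your ZooKeeper release exposes:

```shell
# Hypothetical helper: install the unpacked Kafka chart against an external ZooKeeper.
install_kafka_with_zk() {
  # $1 = ZooKeeper Service name, $2 = path to the unpacked chart (defaults to ./)
  helm install kafka "${2:-./}" \
    --set kraft.enabled=false \
    --set zookeeper.enabled=false \
    --set externalZookeeper.servers="$1" \
    --set persistence.enabled=false \
    --set replicaCount=3
}
```

If the ZooKeeper Service is called `zookeeper`, run `install_kafka_with_zk zookeeper` from inside /bsm/zookeeper-kafka/kafka. Values passed with `--set` override the chart's values.yaml, so the file can stay as downloaded.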

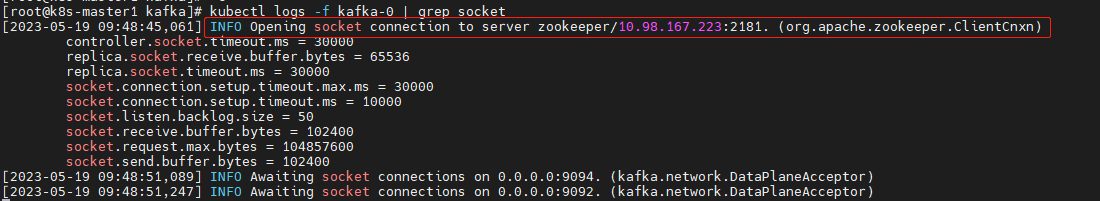

III. Verify that Kafka is connected to ZooKeeper

Look for the ZooKeeper socket connection messages in the Kafka log:

kubectl logs -f kafka-0 | grep socket
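A second check is to ask ZooKeeper directly which brokers have registered. In ZooKeeper mode, Kafka brokers create an entry under `/brokers/ids`. The sketch below assumes that `zkCli.sh` is on the PATH inside the ZooKeeper container and that ZooKeeper listens on port 2181 without authentication; `list_registered_brokers` is a made-up name:

```shell
# Hypothetical helper: ask ZooKeeper which broker IDs have registered themselves.
list_registered_brokers() {
  # $1 = ZooKeeper pod name (defaults to zookeeper-0)
  kubectl exec "${1:-zookeeper-0}" -- zkCli.sh -server localhost:2181 ls /brokers/ids
}
```

With three healthy brokers, the last line of the output is expected to list three broker IDs.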

IV. Test the cluster

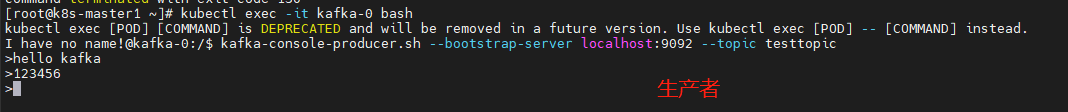

# Enter the Kafka pod and create a topic

[root@k8s-master1 kafka]# kubectl exec -it kafka-0 -- bash

I have no name!@kafka-0:/$ kafka-topics.sh --create --bootstrap-server localhost:9092 --replication-factor 1 --partitions 1 --topic testtopic

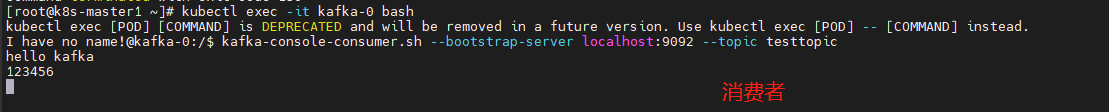

Created topic testtopic.

# Start a consumer

[root@k8s-master1 ~]# kubectl exec -it kafka-0 -- bash

I have no name!@kafka-0:/$ kafka-console-consumer.sh --bootstrap-server localhost:9092 --topic testtopic

# Open a new terminal, enter the Kafka pod, start a producer and type some messages; they should arrive on the consumer side

[root@k8s-master1 ~]# kubectl exec -it kafka-0 -- bash

I have no name!@kafka-0:/$ kafka-console-producer.sh --bootstrap-server localhost:9092 --topic testtopic

Messages typed in the producer terminal show up in the consumer terminal.

The deployment succeeded.
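The manual test above can also be run without interactive shells, which is handy for scripts. A sketch, assuming the pod is named `kafka-0` and the broker listens on localhost:9092 inside it, as in the commands above; `smoke_test` is a hypothetical name and the 20 second timeout is an arbitrary choice:

```shell
# Hypothetical helper: send one message and read it back without interactive shells.
smoke_test() {
  # $1 = topic name (defaults to testtopic)
  topic="${1:-testtopic}"
  msg="hello-$(date +%s)"
  # -i forwards stdin, so the echoed line becomes the produced record
  echo "$msg" | kubectl exec -i kafka-0 -- kafka-console-producer.sh \
    --bootstrap-server localhost:9092 --topic "$topic" || return 1
  # read the topic from the beginning; the consumer exits after 20 s without new records
  kubectl exec kafka-0 -- kafka-console-consumer.sh \
    --bootstrap-server localhost:9092 --topic "$topic" \
    --from-beginning --timeout-ms 20000 | grep -q "$msg"
}
```

`smoke_test testtopic` exits with status 0 only if the message it produced is found in the consumer output.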

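Appendix: possible improvements

Persistent storage is not configured: the storageClass parameter in values.yaml is left unset, and affinity is not configured either. A test environment does not need these, but in production you should configure them as required.

Uninstalling the applications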

helm uninstall kafka -n [namespace]

helm uninstall zookeeper -n [namespace]
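`helm uninstall` removes the workloads, but PersistentVolumeClaims created from a StatefulSet's volume claim templates are normally left behind. With persistence disabled as in this article there should be none. If you enabled persistence, the sketch below lists the leftovers. It relies on the Kubernetes naming scheme `<template>-<statefulset>-<ordinal>` and assumes the StatefulSet is named after the release; `leftover_pvcs` is a hypothetical name:

```shell
# Hypothetical helper: list PVCs whose names end in -<statefulset>-<ordinal>.
leftover_pvcs() {
  # $1 = StatefulSet name (e.g. kafka), $2 = namespace (defaults to "default")
  kubectl get pvc -n "${2:-default}" -o name | grep -- "-$1-[0-9][0-9]*\$"
}
```

Review the printed names before passing any of them to `kubectl delete`, because deleting a PVC may also delete the data behind it.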

References:

Bitnami documentation: https://docs.bitnami.com/tutorials/deploy-scalable-kafka-zookeeper-cluster-kubernetes

CSDN article: https://blog.csdn.net/heian_99/article/details/114840056

Copyright belongs to the original author, 我是小bā吖. In case of infringement, please contact us for removal.