前言:

对于任何基础设施或后端服务系统,日志都是极其重要的。对于受Google内部容器管理系统Borg启发而催生出的Kubernetes项目来说,自然少不了对Logging的支持。

efk就是目前比较受欢迎的日志管理系统。kubernetes可以实现efk的快速部署和使用,通过statefulset控制器部署elasticsearch组件,用来存储日志数据,还可通过volumenclaimtemplate动态生成pv实现es数据的持久化。通过deployment部署kibana组件,实现日志的可视化管理。通过daemonset控制器部署fluentd组件,来收集各节点和k8s集群的日志。

实践流程:

K8s中比较流行的日志收集解决方案是Elasticsearch、Fluentd和Kibana(EFK)技术栈,也是官方推荐的一种方案。

本次实践主要就是配置启动一个可扩展的 Elasticsearch 集群,然后在Kubernetes集群中创建一个Kibana应用,最后通过DaemonSet来运行Fluentd,以便它在每个Kubernetes工作节点上都可以运行一个 Pod,此pod挂载本地的docker日志目录到容器内部(k8s集群的日志都在这个目录下),fluentd将日志收集处理后推送到elasticsearch,kibana进行一个完整的展示。

**EFK 利用部署在每个节点上的 Fluentd 采集 Kubernetes 节点服务器的

/var/log

和

/var/lib/docker/container

两个目录下的日志,然后传到 Elasticsearch 中。最后,用户通过访问 Kibana 来查询日志(如果docker没有使用默认的目录

/var/lib/docker/container

,请根据实际情况更改)。**

具体过程如下:

- 创建 Fluentd 并且将 Kubernetes 节点服务器 log 目录挂载进容器。

- Fluentd 采集节点服务器 log 目录下的 containers 里面的日志文件。

- Fluentd 将收集的日志转换成 JSON 格式。

- Fluentd 利用 Exception Plugin 检测日志是否为容器抛出的异常日志,如果是就将异常栈的多行日志合并。

- Fluentd 将换行多行日志 JSON 合并。

- Fluentd 使用 Kubernetes Metadata Plugin 检测出 Kubernetes 的 Metadata 数据进行过滤,如 Namespace、Pod Name 等。

- Fluentd 使用 ElasticSearch Plugin 将整理完的 JSON 日志输出到 ElasticSearch 中。

- ElasticSearch 建立对应索引,持久化日志信息。

- Kibana 检索 ElasticSearch 中 Kubernetes 日志相关信息进行展示。

相关组件介绍:

- Elasticsearch

Elasticsearch 是一个实时的、分布式的可扩展的搜索引擎,允许进行全文、结构化搜索,它通常用于索引和搜索大量日志数据,也可用于搜索许多不同类型的文档。

- Kibana

Elasticsearch 通常与 Kibana 一起部署,Kibana 是 Elasticsearch 的一个功能强大的数据可视化 Dashboard,Kibana 允许你通过 web 界面来浏览 Elasticsearch 日志数据。

- Fluentd

Fluentd是一个流行的开源数据收集器,我们将在 Kubernetes 集群节点上安装 Fluentd,通过获取容器日志文件、过滤和转换日志数据,然后将数据传递到 Elasticsearch 集群,在该集群中对其进行索引和存储。

正式的部署步骤:

一,

关于volume存储插件的问题

由于elasticsearch这个组件是计划部署为一个可扩展的集群,因此,使用了volumenclaimtemplate模板动态生成pv,而volumenclaimtemplate必须要有一个可用的StorageClass,因此,部署一个nfs-client-provisioner插件,然后借由此插件实现一个可用的StorageClass。因前面写过关于此类部署的文章,就不在此重复了,以免本文篇幅过长,下面是部署方案:

kubernetes学习之持久化存储StorageClass(4)_晚风_END的博客-CSDN博客_kubernetes中用于持久化存储的组件

二,

关于kubernetes内部使用的DNS---COREDNS的功能

云原生|kubernetes|kubernetes-1.18 二进制安装教程单master(其它的版本也基本一样)_晚风_END的博客-CSDN博客_二进制安装kubelet 这个里面关于coredns做了一个比较详细的介绍,不太会的可以看这里部署coredns,以保证es集群的成功部署。

测试coredns的功能是否正常:

kubectl run -it --image busybox:1.28.3 -n web dns-test --restart=Never --rm

测试了解析域名 kubernetes,kubernetes-default,baidu.com ,elasticsearch.kube-logging.svc.cluster.local 这么几个域名(elasticsearch-cluster我已经部署好才测试成功了elasticsearch.kube-logging.svc.cluster.local 这个域名啦),并查看了容器内的dns相关文件。

总之,一句话,要保证coredns是可用的,正常的,否则es集群是部署不好的哦。

DNS测试用例:

/ # nslookup kubernetes

Server: 10.0.0.2

Address 1: 10.0.0.2 kube-dns.kube-system.svc.cluster.local

Name: kubernetes

Address 1: 10.0.0.1 kubernetes.default.svc.cluster.local

/ # nslookup kubernetes.default

Server: 10.0.0.2

Address 1: 10.0.0.2 kube-dns.kube-system.svc.cluster.local

Name: kubernetes.default

Address 1: 10.0.0.1 kubernetes.default.svc.cluster.local

/ # nslookup baidu.com

Server: 10.0.0.2

Address 1: 10.0.0.2 kube-dns.kube-system.svc.cluster.local

Name: baidu.com

Address 1: 110.242.68.66

Address 2: 39.156.66.10

/ # nslookup elasticsearch.kube-logging.svc.cluster.local

Server: 10.0.0.2

Address 1: 10.0.0.2 kube-dns.kube-system.svc.cluster.local

Name: elasticsearch.kube-logging.svc.cluster.local

Address 1: 10.244.1.20 es-cluster-1.elasticsearch.kube-logging.svc.cluster.local

Address 2: 10.244.1.21 es-cluster-0.elasticsearch.kube-logging.svc.cluster.local

Address 3: 10.244.2.20 es-cluster-2.elasticsearch.kube-logging.svc.cluster.local

/ # cat /etc/resolv.conf

nameserver 10.0.0.2

search web.svc.cluster.local svc.cluster.local cluster.local localdomain default.svc.cluster.local

options ndots:5

三,

es集群的部署

建立相关的namespace:

cat << EOF > es-ns.yaml

apiVersion: v1

kind: Namespace

metadata:

name: kube-logging

EOF

headless service

es-svc.yaml里的headless service:

使用无头service的原因是,headless service不具备负载均衡也没有IP,而headless service可以提供一个稳定的域名elasticsearch.kube-logging.svc.cluster.local(service的名字是elasticsearch嘛),而es的部署方式是StateFulSet,是有三个pod的,也就是DNS的测试内容

在kube-logging名称空间定义了一个名为 elasticsearch 的 Service服务,带有app=elasticsearch标签,当我们将 ElasticsearchStatefulSet 与此服务关联时,服务将返回带有标签app=elasticsearch的 Elasticsearch Pods的DNS A记录。最后,我们分别定义端口9200、9300,分别用于与 REST API 交互,以及用于节点间通信(9300是节点之间es集群选举通信用的)

DNS测试用例:

/ # nslookup elasticsearch.kube-logging.svc.cluster.local

Server: 10.0.0.2

Address 1: 10.0.0.2 kube-dns.kube-system.svc.cluster.local

Name: elasticsearch.kube-logging.svc.cluster.local

Address 1: 10.244.1.20 es-cluster-1.elasticsearch.kube-logging.svc.cluster.local

Address 2: 10.244.1.21 es-cluster-0.elasticsearch.kube-logging.svc.cluster.local

Address 3: 10.244.2.20 es-cluster-2.elasticsearch.kube-logging.svc.cluster.local

**es-svc.yaml 集群的service部署清单: **** **

cat << EOF >es-svc.yaml

kind: Service

apiVersion: v1

metadata:

name: elasticsearch

namespace: kube-logging

labels:

app: elasticsearch

spec:

selector:

app: elasticsearch

clusterIP: None

ports:

- port: 9200

name: rest

- port: 9300

name: inter-node

EOF

es-sts-deploy.yaml 部署清单详解:

【整体关键字段介绍】:

在kube-logging的名称空间中定义了一个es-cluster的StatefulSet。容器的名字是elasticsearch,镜像是elasticsearch:7.8.0。使用resources字段来指定容器需要保证至少有0.1个vCPU,并且容器最多可以使用1个vCPU(这在执行初始的大量提取或处理负载高峰时限制了Pod的资源使用)。了解有关资源请求和限制,可参考https://kubernetes.io/docs/concepts/configuration/manage-resources-containers/。暴漏了9200和9300两个端口,名称要和上面定义的 Service 保持一致,通过volumeMount声明了数据持久化目录,定义了一个data数据卷,通过volumeMount把它挂载到容器里的/usr/share/elasticsearch/data目录。我们将在以后的YAML块中为此StatefulSet定义VolumeClaims。

然后,我们使用serviceName 字段与我们之前创建的ElasticSearch服务相关联。这样可以确保可以使用以下DNS地址访问StatefulSet中的每个Pod:,es-cluster-[0,1,2].elasticsearch.kube-logging.svc.cluster.local,其中[0,1,2]与Pod分配的序号数相对应。我们指定3个replicas(3个Pod副本),将matchLabels selector 设置为app: elasticseach,然后在该.spec.template.metadata中指定pod需要的镜像。该.spec.selector.matchLabels和.spec.template.metadata.labels字段必须匹配。

【部分关键变量介绍】:

a,cluster.name

**Elasticsearch 集群的名称,我们这里是 k8s-logs,此变量非常重要。 **** b,node.name**

节点的名称,通过metadata.name来获取。这将解析为 es-cluster-[0,1,2],取决于节点的指定顺序。

c,discovery.zen.ping.unicast.hosts

**此字段用于设置在Elasticsearch集群中节点相互连接的发现方法。 我们使用 unicastdiscovery方式,它为我们的集群指定了一个静态主机列表。 由于我们之前配置的无头服务,我们的 Pod 具有唯一的DNS域es-cluster-[0,1,2].elasticsearch.logging.svc.cluster.local, 因此我们相应地设置此变量。由于都在同一个 namespace 下面,所以我们可以将其缩短为es-cluster-[0,1,2]**d,discovery.zen.minimum_master_nodes

**我们将其设置为(N/2) + 1,N是我们的群集中符合主节点的节点的数量。 我们有3个Elasticsearch 节点,因此我们将此值设置为2(向下舍入到最接近的整数)。**e,ES_JAVA_OPTS

**这里我们设置为-Xms512m -Xmx512m,告诉JVM使用512MB的最小和最大堆。 你应该根据群集的资源可用性和需求调整这些参数。**f,

initcontainer内容

**. . . initContainers: - name: fix-permissions image: busybox command: ["sh", "-c", "chown -R 1000:1000 /usr/share/elasticsearch/data"] securityContext: privileged: true volumeMounts: - name: data mountPath: /usr/share/elasticsearch/data - name: increase-vm-max-map image: busybox command: ["sysctl", "-w", "vm.max_map_count=262144"] securityContext: privileged: true - name: increase-fd-ulimit image: busybox command: ["sh", "-c", "ulimit -n 65536"] securityContext: privileged: true **这里我们定义了几个在主应用程序之前运行的Init 容器,这些初始容器按照定义的顺序依次执行,执行完成后才会启动主应用容器。第一个名为 fix-permissions 的容器用来运行 chown 命令,将 Elasticsearch 数据目录的用户和组更改为1000:1000(Elasticsearch 用户的 UID)。因为默认情况下,Kubernetes 用 root 用户挂载数据目录,这会使得 Elasticsearch 无法方法该数据目录,可以参考 Elasticsearch 生产中的一些默认注意事项相关文档说明:https://www.elastic.co/guide/en/elasticsearch/reference/current/docker.html#_notes_for_production_use_and_defaults。

第二个名为increase-vm-max-map 的容器用来增加操作系统对mmap计数的限制,默认情况下该值可能太低,导致内存不足的错误,要了解更多关于该设置的信息,可以查看 Elasticsearch 官方文档说明:https://www.elastic.co/guide/en/elasticsearch/reference/current/vm-max-map-count.html。最后一个初始化容器是用来执行ulimit命令增加打开文件描述符的最大数量的。

g,

在 StatefulSet 中,使用volumeClaimTemplates来定义volume 模板即可:

**. . . volumeClaimTemplates: - metadata: name: data labels: app: elasticsearch spec: accessModes: [ "ReadWriteOnce" ] storageClassName: managed-nfs-storage resources: requests: storage: 10Gi **我们这里使用 volumeClaimTemplates 来定义持久化模板,Kubernetes 会使用它为 Pod 创建 PersistentVolume,设置访问模式为ReadWriteOnce,这意味着它只能被 mount到单个节点上进行读写,然后最重要的是使用了一个名为do-block-storage的 StorageClass 对象,所以我们需要提前创建该对象,我们这里使用的 NFS 作为存储后端,所以需要安装一个对应的 nfs-client-provisioner驱动。

es-sts-deploy.yaml 集群部署清单:

cat << EOF > es-sts-deploy.yaml

apiVersion: apps/v1

kind: StatefulSet

metadata:

name: es-cluster

namespace: kube-logging

spec:

serviceName: elasticsearch

replicas: 3

selector:

matchLabels:

app: elasticsearch

template:

metadata:

labels:

app: elasticsearch

spec:

containers:

- name: elasticsearch

image: elasticsearch:7.8.0

imagePullPolicy: IfNotPresent

resources:

limits:

cpu: 1000m

requests:

cpu: 100m

ports:

- containerPort: 9200

name: rest

protocol: TCP

- containerPort: 9300

name: inter-node

protocol: TCP

volumeMounts:

- name: data

mountPath: /usr/share/elasticsearch/data

env:

- name: cluster.name

value: k8s-logs

- name: node.name

valueFrom:

fieldRef:

fieldPath: metadata.name

- name: discovery.seed_hosts

value: "es-cluster-0.elasticsearch,es-cluster-1.elasticsearch,es-cluster-2.elasticsearch"

- name: cluster.initial_master_nodes

value: "es-cluster-0,es-cluster-1,es-cluster-2"

- name: ES_JAVA_OPTS

value: "-Xms512m -Xmx512m"

initContainers:

- name: fix-permissions

image: busybox

imagePullPolicy: IfNotPresent

command: ["sh", "-c", "chown -R 1000:1000 /usr/share/elasticsearch/data"]

securityContext:

privileged: true

volumeMounts:

- name: data

mountPath: /usr/share/elasticsearch/data

- name: increase-vm-max-map

image: busybox

imagePullPolicy: IfNotPresent

command: ["sysctl", "-w", "vm.max_map_count=262144"]

securityContext:

privileged: true

- name: increase-fd-ulimit

image: busybox

imagePullPolicy: IfNotPresent

command: ["sh", "-c", "ulimit -n 65536"]

securityContext:

privileged: true

volumeClaimTemplates:

- metadata:

name: data

labels:

app: elasticsearch

spec:

accessModes: [ "ReadWriteOnce" ]

storageClassName: managed-nfs-storage

resources:

requests:

storage: 10Gi

EOF

OK,稍等几分钟后,es集群基本就部署好了,看看pod和svc是否正常吧:

[root@k8s-master ~]# k get po,svc -n kube-logging

NAME READY STATUS RESTARTS AGE

pod/es-cluster-0 1/1 Running 0 6m44s

pod/es-cluster-1 1/1 Running 0 6m37s

pod/es-cluster-2 1/1 Running 0 6m30s

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

service/elasticsearch ClusterIP None <none> 9200/TCP,9300/TCP 3m51s

四,

**kibana的部署 **

这个没什么好说的,干就完了,需要注意的是,镜像和上面的es版本一致,都是7.8.0哦。

总共两个清单文件,一个是service,该service是暴露节点端口的,如果有安装ingress,那么,此service可以设置为headless service不用设置为NodePort。

第二个文件是部署pod的文件,其中的value: http://elasticsearch:9200 是指的headless service的9200端口,假如啊,注意我这是假如,headless service名字是myes,那么,这里value就应该是 http://myes:9200,总之,此环境变量把kibana和elasticsearch集群联系起来了。

kibana-svc.yaml

cat << EOF > kibana-svc.yaml

apiVersion: v1

kind: Service

metadata:

name: kibana

namespace: kube-logging

labels:

app: kibana

spec:

type: NodePort

ports:

- port: 5601

selector:

app: kibana

EOF

kibana-deploy.yaml

cat << EOF > kibana-deploy.yaml

apiVersion: apps/v1

kind: Deployment

metadata:

name: kibana

namespace: kube-logging

labels:

app: kibana

spec:

replicas: 1

selector:

matchLabels:

app: kibana

template:

metadata:

labels:

app: kibana

spec:

containers:

- name: kibana

image: docker.elastic.co/kibana/kibana:7.8.0

imagePullPolicy: IfNotPresent

resources:

limits:

cpu: 1000m

requests:

cpu: 100m

env:

- name: ELASTICSEARCH_URL

value: http://elasticsearch:9200

ports:

- containerPort: 5601

EOF

稍等大概5分钟,查看一哈kibana的日志 ,直到有这个出现:http server running at http://0:5601 表示kibana部署完成。

","prevMsg":"uninitialized"}

{"type":"log","@timestamp":"2022-10-15T11:45:36Z","tags":["info","plugins","taskManager","taskManager"],"pid":6,"message":"TaskManager is identified by the Kibana UUID: dd9bcb6f-4353-4861-81e5-fe3ac42bb157"}

{"type":"log","@timestamp":"2022-10-15T11:45:36Z","tags":["status","plugin:[email protected]","info"],"pid":6,"state":"green","message":"Status changed from uninitialized to green - Ready","prevState":"uninitialized","prevMsg":"uninitialized"}

{"type":"log","@timestamp":"2022-10-15T11:45:36Z","tags":["status","plugin:[email protected]","info"],"pid":6,"state":"green","message":"Status changed from uninitialized to green - Ready","prevState":"uninitialized","prevMsg":"uninitialized"}

{"type":"log","@timestamp":"2022-10-15T11:45:36Z","tags":["status","plugin:[email protected]","info"],"pid":6,"state":"green","message":"Status changed from uninitialized to green - Ready","prevState":"uninitialized","prevMsg":"uninitialized"}

{"type":"log","@timestamp":"2022-10-15T11:45:36Z","tags":["status","plugin:[email protected]","info"],"pid":6,"state":"green","message":"Status changed from uninitialized to green - Ready","prevState":"uninitialized","prevMsg":"uninitialized"}

{"type":"log","@timestamp":"2022-10-15T11:45:36Z","tags":["status","plugin:[email protected]","info"],"pid":6,"state":"green","message":"Status changed from uninitialized to green - Ready","prevState":"uninitialized","prevMsg":"uninitialized"}

{"type":"log","@timestamp":"2022-10-15T11:45:36Z","tags":["status","plugin:[email protected]","info"],"pid":6,"state":"green","message":"Status changed from uninitialized to green - Ready","prevState":"uninitialized","prevMsg":"uninitialized"}

{"type":"log","@timestamp":"2022-10-15T11:45:36Z","tags":["listening","info"],"pid":6,"message":"Server running at http://0:5601"}

{"type":"log","@timestamp":"2022-10-15T11:45:38Z","tags":["info","http","server","Kibana"],"pid":6,"message":"http server running at http://0:5601"}

看一下kibana相关的pod和service是否正常:

[root@k8s-master ~]# k get po,svc -n kube-logging

NAME READY STATUS RESTARTS AGE

pod/es-cluster-0 1/1 Running 0 12m

pod/es-cluster-1 1/1 Running 0 12m

pod/es-cluster-2 1/1 Running 0 12m

pod/kibana-588d597485-wljbr 1/1 Running 0 49s

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

service/elasticsearch ClusterIP None <none> 9200/TCP,9300/TCP 9m21s

service/kibana NodePort 10.0.132.94 <none> 5601:32042/TCP 49s

** 打开浏览器,任意一个节点IP+32042就可以登录kibana了。**

五,

采集器fluentd的部署

ServiceAccent清单文件:

cat << EOF > fluentd-sa.yaml

apiVersion: v1

kind: ServiceAccount

metadata:

name: fluentd

namespace: kube-logging

labels:

app: fluentd

EOF

fluentd的rbac:

cat << EOF > fluentd-rbac.yaml

apiVersion: rbac.authorization.k8s.io/v1

kind: ClusterRole

metadata:

name: fluentd

labels:

app: fluentd

rules:

- apiGroups:

- ""

resources:

- pods

- namespaces

verbs:

- get

- list

- watch

---

kind: ClusterRoleBinding

apiVersion: rbac.authorization.k8s.io/v1

metadata:

name: fluentd

roleRef:

kind: ClusterRole

name: fluentd

apiGroup: rbac.authorization.k8s.io

subjects:

- kind: ServiceAccount

name: fluentd

namespace: kube-logging

EOF

fluentd的DaemonSet部署清单 :

配置说明:

将宿主机Node的/var/log和/var/lib/docker/containers目录挂载到 fluentd容器中,用于读取容器输出到stdout和stderr的日志,以及kubernetes组件的日志。

资源限制根据实际情况进行调整,避免Fluentd占用太多资源。

利用环境变量,设置了elasticsarch服务的访问地址,此处使用了service名称,也就是这个:elasticsearch.kube-logging.svc.cluster.local。

cat << EOF > fluentd-deploy.yaml

apiVersion: apps/v1

kind: DaemonSet

metadata:

name: fluentd

namespace: kube-logging

labels:

app: fluentd

spec:

selector:

matchLabels:

app: fluentd

template:

metadata:

labels:

app: fluentd

spec:

serviceAccount: fluentd

serviceAccountName: fluentd

tolerations:

- key: node-role.kubernetes.io/master

effect: NoSchedule

containers:

- name: fluentd

image: fluent/fluentd-kubernetes-daemonset:v1.4.2-debian-elasticsearch-1.1

imagePullPolicy: IfNotPresent

env:

- name: FLUENT_ELASTICSEARCH_HOST

value: "elasticsearch.kube-logging.svc.cluster.local"

- name: FLUENT_ELASTICSEARCH_PORT

value: "9200"

- name: FLUENT_ELASTICSEARCH_SCHEME

value: "http"

- name: FLUENTD_SYSTEMD_CONF

value: disable

resources:

limits:

memory: 512Mi

requests:

cpu: 100m

memory: 200Mi

volumeMounts:

- name: varlog

mountPath: /var/log

- name: varlibdockercontainers

mountPath: /var/lib/docker/containers

readOnly: true

terminationGracePeriodSeconds: 30

volumes:

- name: varlog

hostPath:

path: /var/log

- name: varlibdockercontainers

hostPath:

path: /var/lib/docker/containers

EOF

那么,fluent的变量有哪些呢?进入pod里,查看fluent的配置文件,里面有比如 FLUENT_ELASTICSEARCH_HOST,ENV['FLUENT_ELASTICSEARCH_PORT以及日志等级log_level 这里是info等等变量。

root@fluentd-d58br:/fluentd/etc# cat fluent.conf

# AUTOMATICALLY GENERATED

# DO NOT EDIT THIS FILE DIRECTLY, USE /templates/conf/fluent.conf.erb

@include "#{ENV['FLUENTD_SYSTEMD_CONF'] || 'systemd'}.conf"

@include "#{ENV['FLUENTD_PROMETHEUS_CONF'] || 'prometheus'}.conf"

@include kubernetes.conf

@include conf.d/*.conf

<match **>

@type elasticsearch

@id out_es

@log_level info

include_tag_key true

host "#{ENV['FLUENT_ELASTICSEARCH_HOST']}"

port "#{ENV['FLUENT_ELASTICSEARCH_PORT']}"

path "#{ENV['FLUENT_ELASTICSEARCH_PATH']}"

scheme "#{ENV['FLUENT_ELASTICSEARCH_SCHEME'] || 'http'}"

ssl_verify "#{ENV['FLUENT_ELASTICSEARCH_SSL_VERIFY'] || 'true'}"

ssl_version "#{ENV['FLUENT_ELASTICSEARCH_SSL_VERSION'] || 'TLSv1'}"

reload_connections "#{ENV['FLUENT_ELASTICSEARCH_RELOAD_CONNECTIONS'] || 'false'}"

reconnect_on_error "#{ENV['FLUENT_ELASTICSEARCH_RECONNECT_ON_ERROR'] || 'true'}"

reload_on_failure "#{ENV['FLUENT_ELASTICSEARCH_RELOAD_ON_FAILURE'] || 'true'}"

log_es_400_reason "#{ENV['FLUENT_ELASTICSEARCH_LOG_ES_400_REASON'] || 'false'}"

logstash_prefix "#{ENV['FLUENT_ELASTICSEARCH_LOGSTASH_PREFIX'] || 'logstash'}"

logstash_format "#{ENV['FLUENT_ELASTICSEARCH_LOGSTASH_FORMAT'] || 'true'}"

index_name "#{ENV['FLUENT_ELASTICSEARCH_LOGSTASH_INDEX_NAME'] || 'logstash'}"

type_name "#{ENV['FLUENT_ELASTICSEARCH_LOGSTASH_TYPE_NAME'] || 'fluentd'}"

<buffer>

flush_thread_count "#{ENV['FLUENT_ELASTICSEARCH_BUFFER_FLUSH_THREAD_COUNT'] || '8'}"

flush_interval "#{ENV['FLUENT_ELASTICSEARCH_BUFFER_FLUSH_INTERVAL'] || '5s'}"

chunk_limit_size "#{ENV['FLUENT_ELASTICSEARCH_BUFFER_CHUNK_LIMIT_SIZE'] || '2M'}"

queue_limit_length "#{ENV['FLUENT_ELASTICSEARCH_BUFFER_QUEUE_LIMIT_LENGTH'] || '32'}"

retry_max_interval "#{ENV['FLUENT_ELASTICSEARCH_BUFFER_RETRY_MAX_INTERVAL'] || '30'}"

retry_forever true

</buffer>

</match>

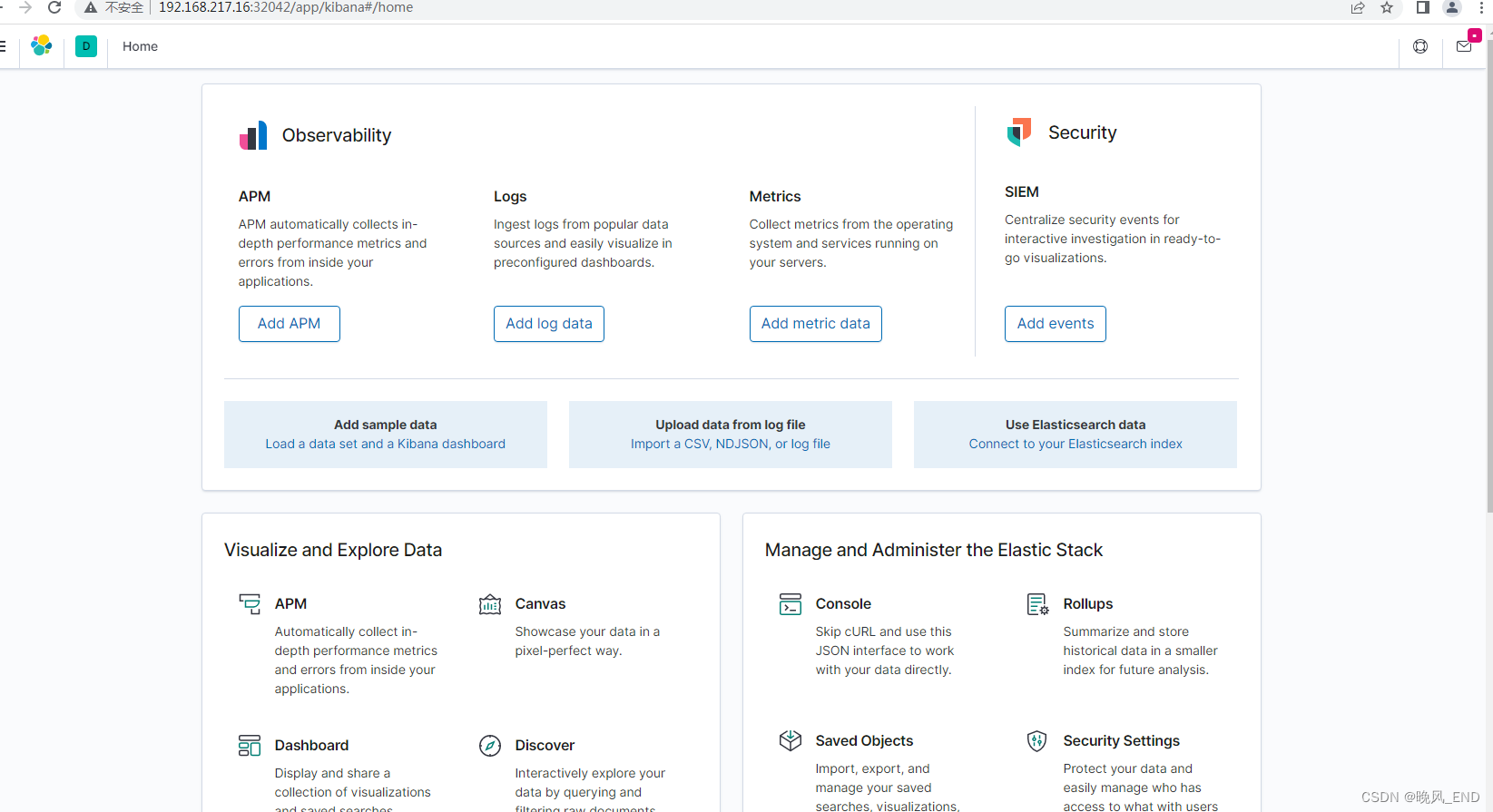

OK,现在的efk基本就是搭建好了,浏览器登录kibana:

登录前先查询一哈kibana的service暴露的端口,30180是目前的端口:

[root@k8s-master ~]# k get svc -n kube-logging

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

elasticsearch ClusterIP None <none> 9200/TCP,9300/TCP 12h

kibana NodePort 10.0.132.94 <none> 5601:30180/TCP 12h

不使用测试数据,我们用自己的数据

默认页面是这样的哈

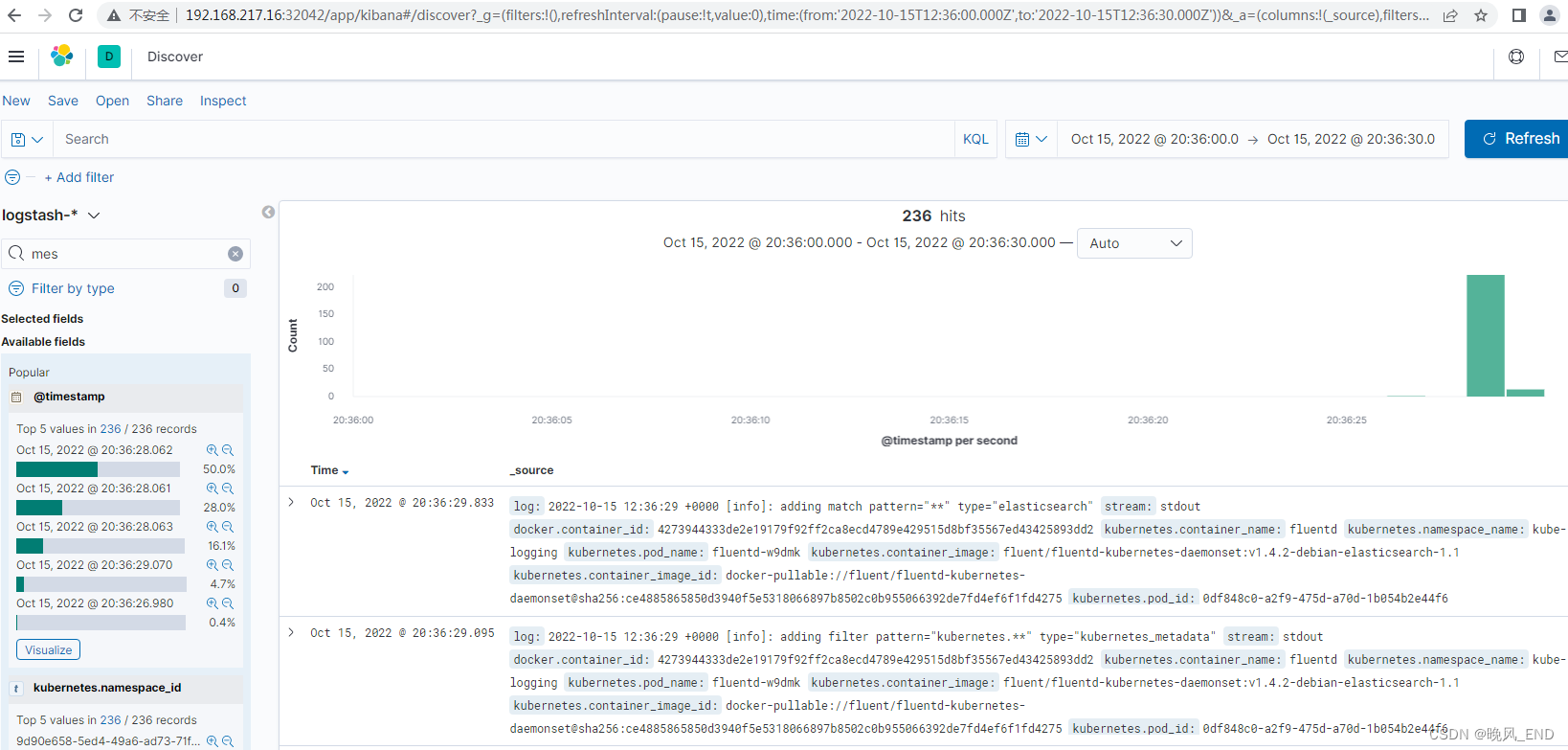

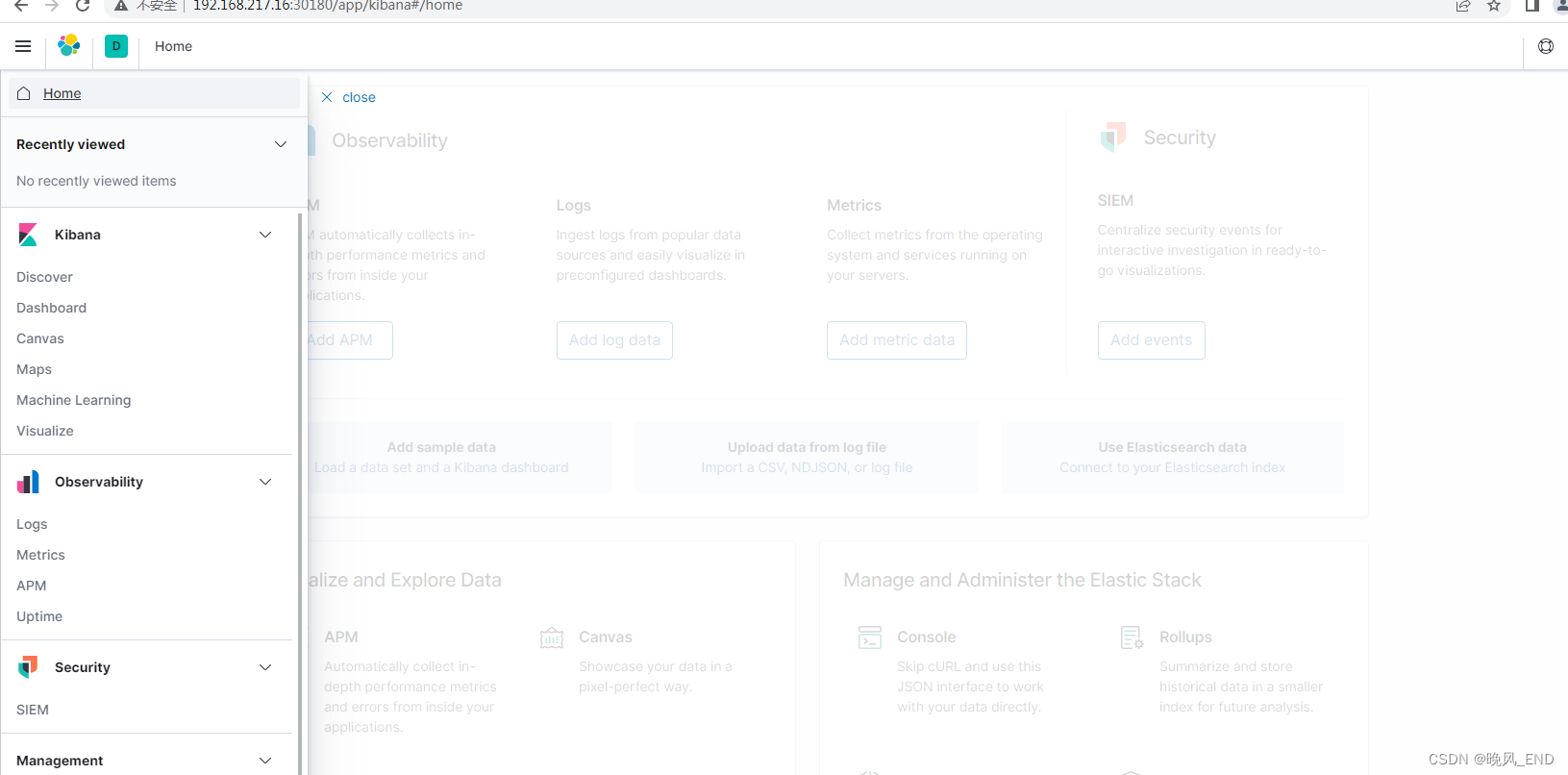

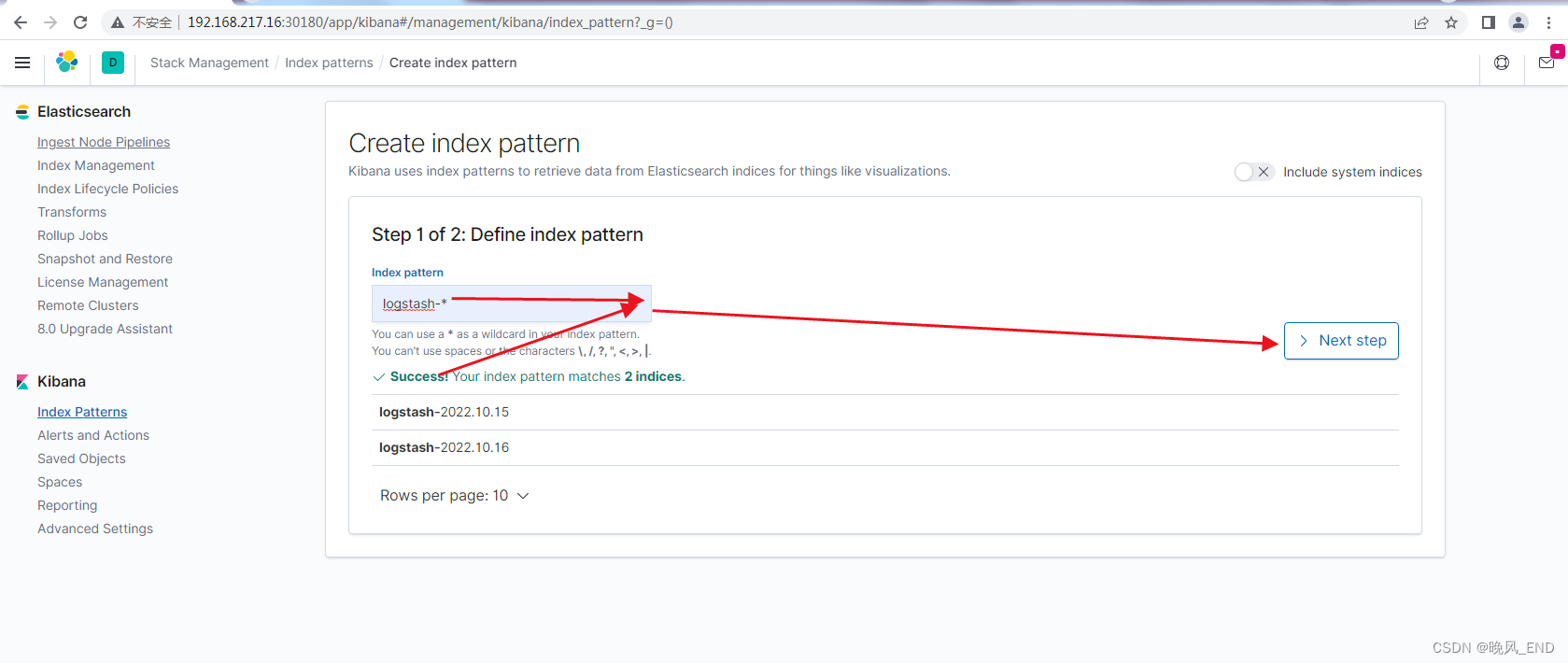

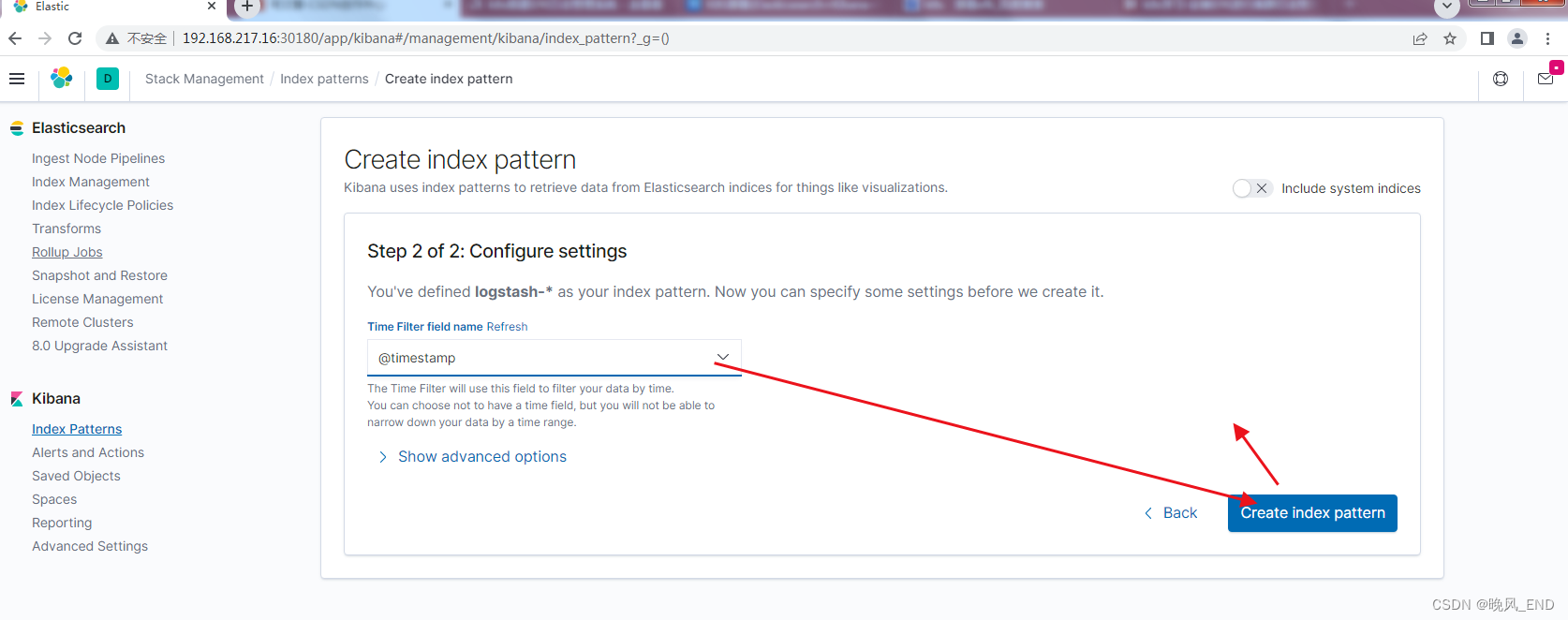

选择上面那个菜单的kibana下面的Discover,进入新建索引页面,输入logstash-*:

这里选择自带的时间戳,下拉框可以选择到的

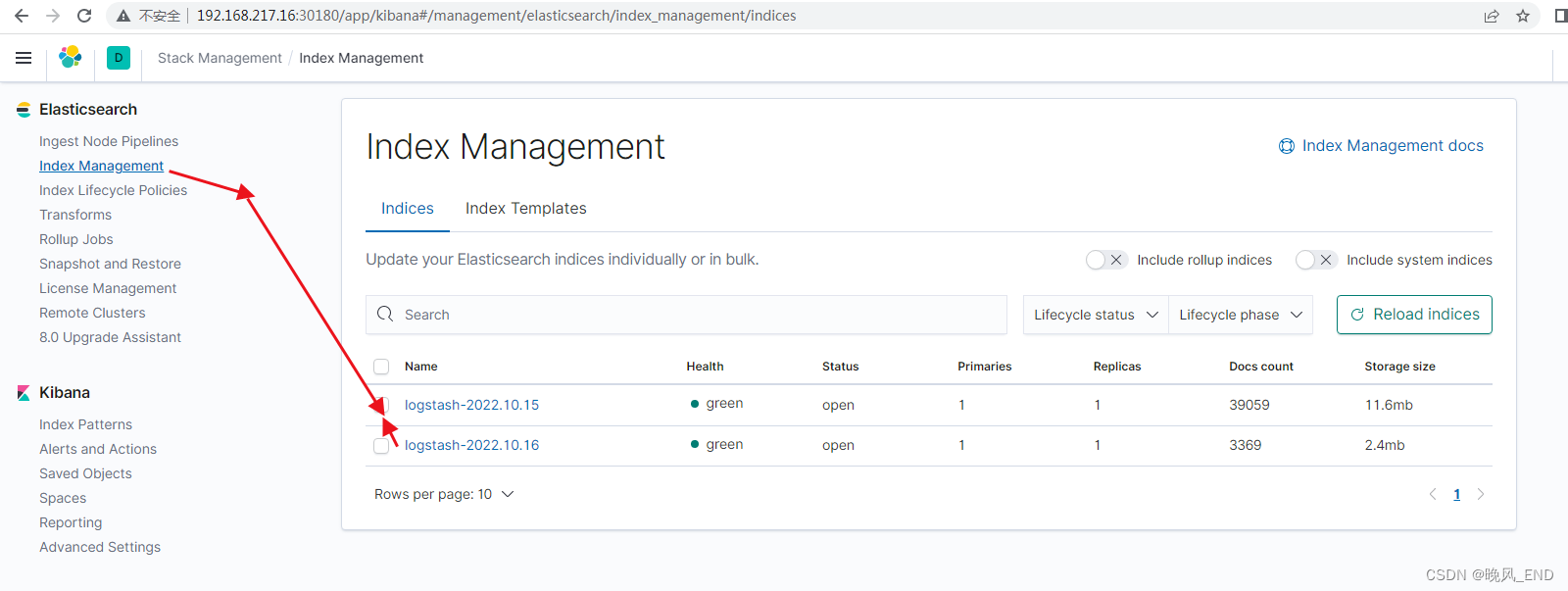

可以看一下索引是否正常,绿色表示正常的啦:

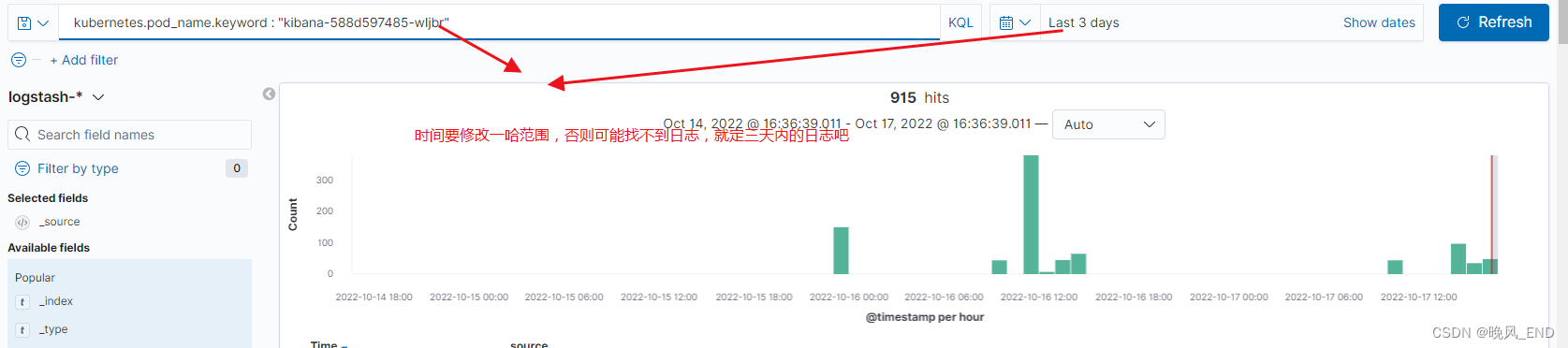

kibana下的Discover,可以看到详细的数据了

测试日志是否正确的收集:

现有这么多个pod,一哈随机挑选个pod的日志查看

[root@k8s-master ~]# kk

NAMESPACE NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

ingress-nginx ingress-nginx-admission-create-7bg96 0/1 Completed 0 44h 10.244.0.27 k8s-master <none> <none>

ingress-nginx ingress-nginx-admission-patch-rpbnw 0/1 Completed 0 44h 10.244.1.15 k8s-node1 <none> <none>

ingress-nginx ingress-nginx-controller-75sqz 1/1 Running 3 44h 192.168.217.16 k8s-master <none> <none>

ingress-nginx ingress-nginx-controller-lkc24 1/1 Running 4 44h 192.168.217.17 k8s-node1 <none> <none>

ingress-nginx ingress-nginx-controller-xjg6s 1/1 Running 3 44h 192.168.217.18 k8s-node2 <none> <none>

kube-logging es-cluster-0 1/1 Running 2 41h 10.244.1.33 k8s-node1 <none> <none>

kube-logging es-cluster-1 1/1 Running 0 6h1m 10.244.2.29 k8s-node2 <none> <none>

kube-logging es-cluster-2 1/1 Running 2 41h 10.244.1.34 k8s-node1 <none> <none>

kube-logging fluentd-d58br 1/1 Running 1 29h 10.244.1.32 k8s-node1 <none> <none>

kube-logging fluentd-lrpgc 1/1 Running 1 29h 10.244.0.43 k8s-master <none> <none>

kube-logging fluentd-mvpsq 1/1 Running 1 29h 10.244.2.27 k8s-node2 <none> <none>

kube-logging kibana-588d597485-wljbr 1/1 Running 2 41h 10.244.0.45 k8s-master <none> <none>

kube-system coredns-59864d888b-bpzj6 1/1 Running 3 46h 10.244.0.44 k8s-master <none> <none>

kube-system kube-flannel-ds-4bxpd 1/1 Running 6 3d17h 192.168.217.16 k8s-master <none> <none>

kube-system kube-flannel-ds-5stwc 1/1 Running 8 3d17h 192.168.217.18 k8s-node2 <none> <none>

kube-system kube-flannel-ds-pg6kq 1/1 Running 7 3d17h 192.168.217.17 k8s-node1 <none> <none>

kube-system nfs-client-provisioner-9c9f9bd86-tz9lk 1/1 Running 5 3d5h 10.244.2.28 k8s-node2 <none> <none>

查看kibana这个pod的日志,查询前时间改大一些

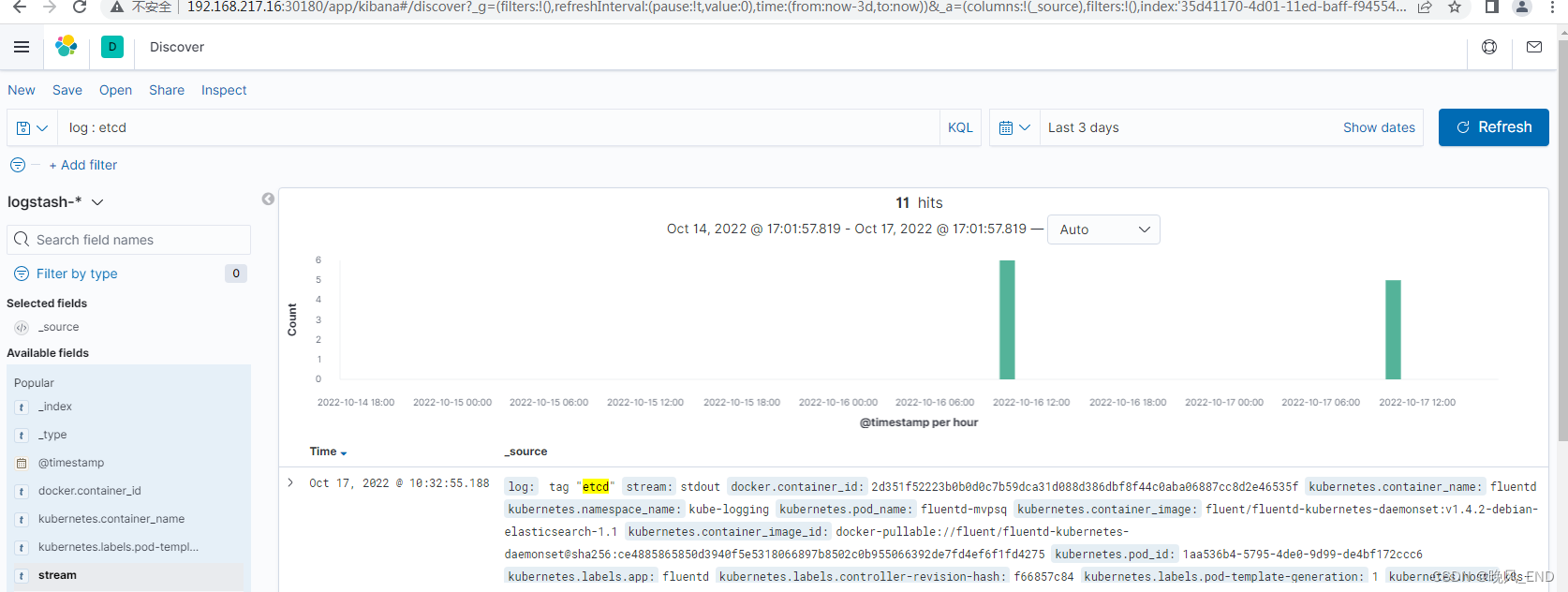

查看elasticsearch集群的日志:

查看etcd相关的日志:

OK,kubernetes搭建EFK日志系统圆满完成。

版权归原作者 晚风_END 所有, 如有侵权,请联系我们删除。