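I recently came across Apache Flink and want to study it properly. I plan to work through it step by step, from the very beginning up to trying it in a real project, and to record the process as a blog series. Writing it up is also a way to keep myself studying and not give up halfway.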

The steps in this post largely follow the official documentation.

Test environment: Ubuntu 22.04, JDK 8, Flink 1.18.0.

1. Download Flink

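1.1 The latest release is 1.18.0. It can be downloaded from the official download page or from the Tencent mirror. I recommend the mirror, because downloads from the official site are painfully slow.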

1.2 Download flink-1.18.0-bin-scala_2.12.tgz and extract it:

$ tar -xzf flink-1.18.0-bin-scala_2.12.tgz

$ cd flink-1.18.0
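The download and extraction can also be scripted. The sketch below assumes `curl` and `tar` are installed and builds the tarball URL on the Apache archive (archive.apache.org), which keeps past Flink releases. `FLINK_MIRROR`, `flink_dist_url` and `download_flink` are hypothetical names introduced here; to use a mirror such as the Tencent one, point `FLINK_MIRROR` at that mirror's Flink directory (its exact path is not given here and should be checked on the mirror site).

```bash
#!/usr/bin/env bash
# Sketch: build the download URL for a Flink binary release, then fetch and extract it.
# FLINK_MIRROR is a hypothetical override; by default the Apache archive is used.

# flink_dist_url VERSION [SCALA_VERSION]: print the URL of the binary tarball
flink_dist_url() {
  local version="$1" scala="${2:-2.12}"
  local base="${FLINK_MIRROR:-https://archive.apache.org/dist/flink}"
  printf '%s/flink-%s/flink-%s-bin-scala_%s.tgz\n' "$base" "$version" "$version" "$scala"
}

# download_flink VERSION [SCALA_VERSION]: download the tarball into the current
# directory and extract it (this creates ./flink-VERSION)
download_flink() {
  local version="$1" scala="${2:-2.12}"
  local url
  url="$(flink_dist_url "$version" "$scala")" || return 1
  curl -fLO "$url" || return 1
  tar -xzf "flink-${version}-bin-scala_${scala}.tgz"
}
```

Usage: `download_flink 1.18.0 && cd flink-1.18.0`. Keeping the URL builder separate from the download makes it easy to print and check the URL before fetching anything.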

2. Start the cluster

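2.1 First make sure Java is installed correctly. The official docs mention Java 11; in my tests the cluster also started normally with JDK 8 and with JDK 17.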
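Since several JDK versions are in play here, it can help to check which major version is on the PATH before starting the cluster. A minimal sketch (the function names are hypothetical): `java -version` writes to stderr, and older releases use the `1.x` scheme (for example `1.8.0_392` for Java 8), which the parser maps to the plain major number.

```bash
#!/usr/bin/env bash
# Sketch: report the major Java version, handling both "1.8.0_392" and "17.0.9" styles.

# java_major_version VERSION_STRING: print the major version number
java_major_version() {
  local v="$1"
  local major="${v%%.*}"          # text before the first dot
  if [ "$major" = "1" ]; then
    v="${v#1.}"                   # legacy scheme: 1.8.0_392 -> 8.0_392
    major="${v%%.*}"
  fi
  major="${major%%[!0-9]*}"       # drop suffixes such as "-ea"
  printf '%s\n' "$major"
}

# installed_java_major: parse the quoted version from `java -version` (printed on stderr)
installed_java_major() {
  command -v java >/dev/null 2>&1 || { echo "java not found on PATH" >&2; return 1; }
  local raw
  raw="$(java -version 2>&1 | sed -n 's/.*version "\([^"]*\)".*/\1/p' | head -n 1)"
  [ -n "$raw" ] || return 1
  java_major_version "$raw"
}
```

Usage: `echo "Java major version: $(installed_java_major)"`.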

2.2 Run the following command to start Flink:

$ ./bin/start-cluster.sh

Starting cluster.

Starting standalonesession daemon on host DESKTOP-B2S657C.

Starting taskexecutor daemon on host DESKTOP-B2S657C.

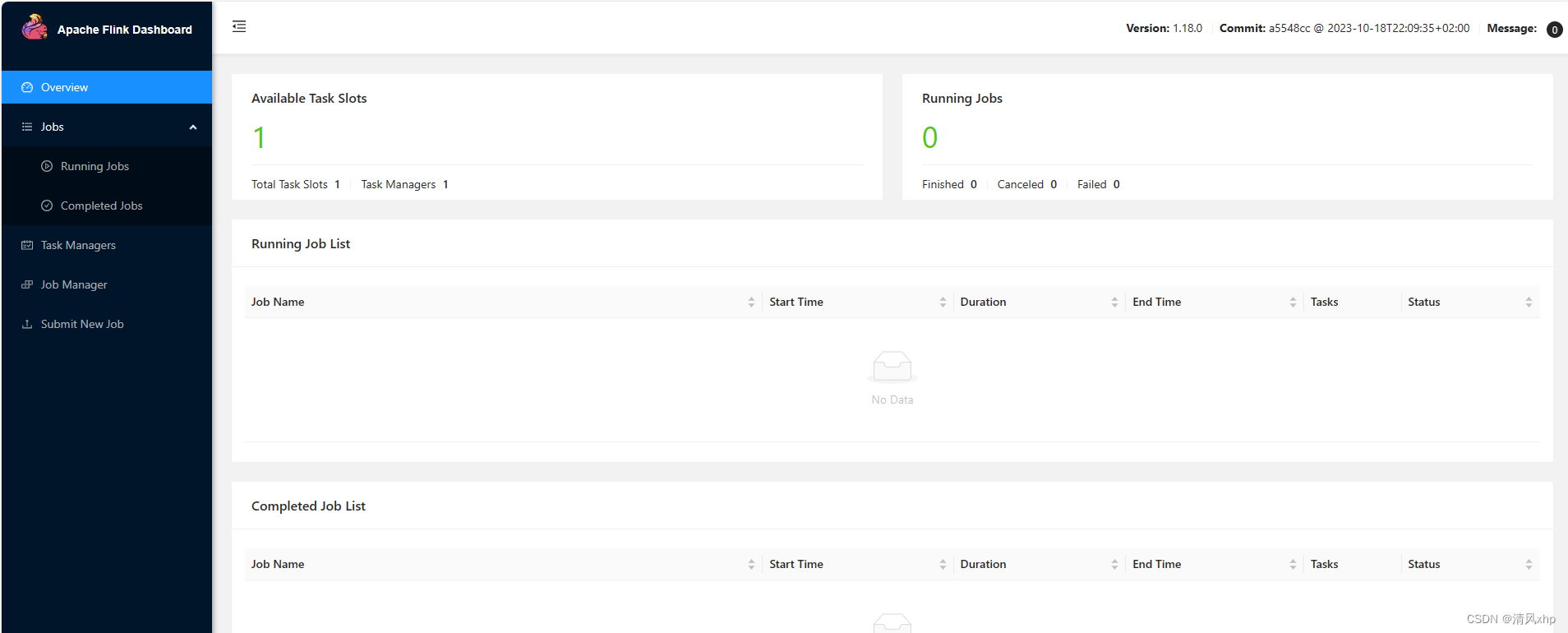

2.3 To check that it is running, open http://localhost:8081 in a browser. If everything is fine, the Flink Web UI dashboard is displayed.

If the page cannot be reached, look at the log files in the flink-1.18.0/log/ directory to find the cause.
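Instead of refreshing the browser, the same check can be done from the shell. The sketch assumes `curl` is installed and polls `/overview`, an endpoint of Flink's REST API served on the same port as the Web UI (8081 by default); `wait_for_flink` is a hypothetical helper name. If it gives up, the files in log/ are the place to look.

```bash
#!/usr/bin/env bash
# Sketch: poll Flink's REST API until it answers or the attempts run out.

# wait_for_flink [BASE_URL] [ATTEMPTS] [INTERVAL_SECONDS]
wait_for_flink() {
  local url="${1:-http://localhost:8081}"
  local attempts="${2:-30}"
  local interval="${3:-1}"
  local i
  for ((i = 1; i <= attempts; i++)); do
    # /overview returns a small JSON cluster summary once the JobManager is up
    if curl -sf --max-time 2 "${url}/overview" >/dev/null 2>&1; then
      echo "Flink is up at ${url}"
      return 0
    fi
    sleep "$interval"
  done
  echo "No response from ${url} after ${attempts} attempts; check the files in log/" >&2
  return 1
}
```

Usage: `./bin/start-cluster.sh && wait_for_flink http://localhost:8081 30 1`.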

3. Submit a job

3.1 The installation directory comes with a number of example jobs. Pick any one and deploy it to the running cluster to try it out:

$ ./bin/flink run examples/streaming/WordCount.jar

Executing example with default input data.

Use --input to specify file input.

Printing result to stdout. Use --output to specify output path.

Job has been submitted with JobID 7c7fa8cc747f03af44a1681662f2780f

Program execution finished

Job with JobID 7c7fa8cc747f03af44a1681662f2780f has finished.

Job Runtime: 737 ms

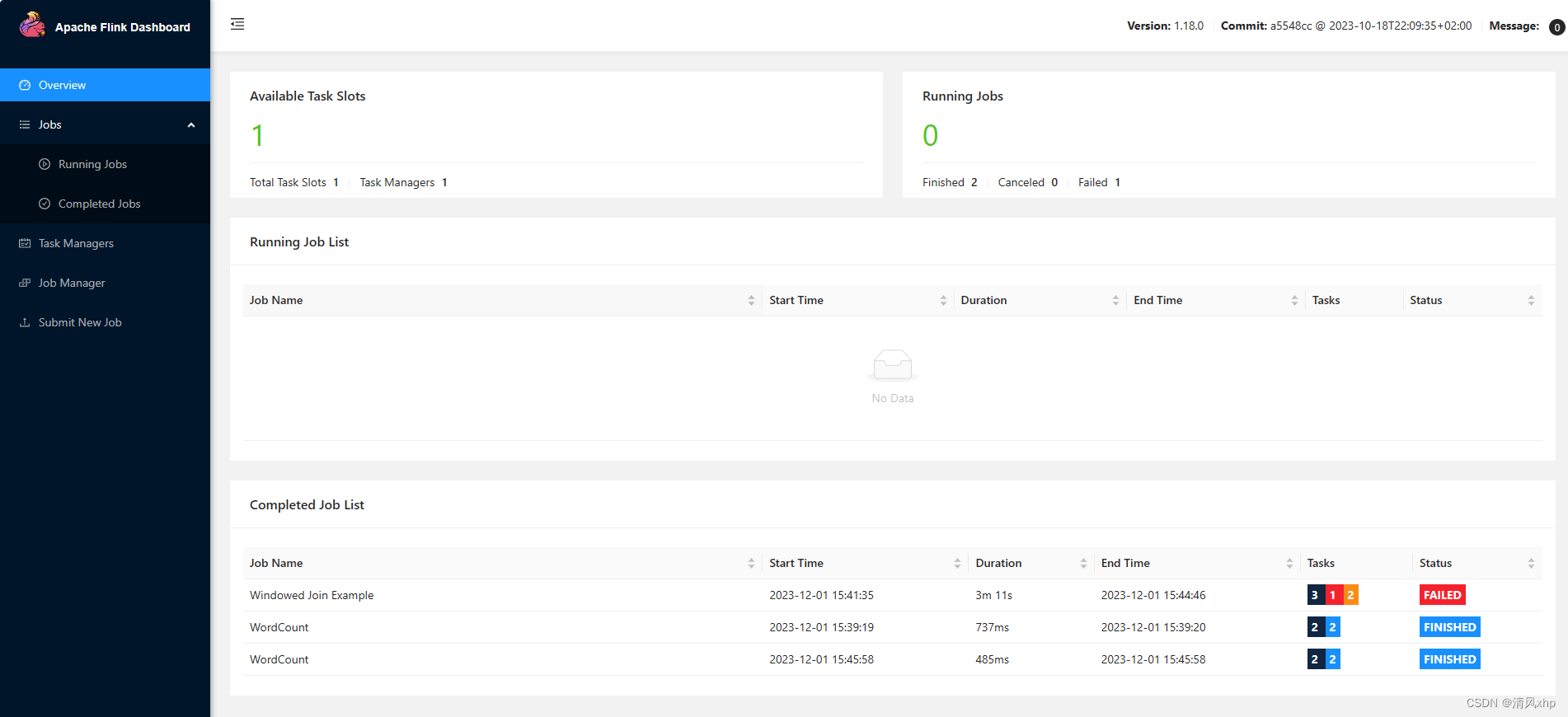
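The job output above notes that the example uses built-in input data unless `--input` is given and prints to stdout unless `--output` is given. Below is a hedged sketch of a wrapper that passes both options. `run_wordcount` and the `FLINK_HOME` fallback are hypothetical. The paths are made absolute on the assumption that the TaskManager process may resolve relative paths against a different working directory. Depending on the example's sink, the output path may end up as a directory of part files rather than a single file.

```bash
#!/usr/bin/env bash
# Sketch: run the bundled streaming WordCount example on a file instead of the built-in text.
# FLINK_HOME is an assumed variable; it falls back to the current directory (the Flink root).

# run_wordcount INPUT_FILE OUTPUT_PATH
run_wordcount() {
  local input="$1" output="$2"
  local flink_home="${FLINK_HOME:-.}"
  if [ ! -f "$input" ]; then
    echo "input file not found: $input" >&2
    return 1
  fi
  # Make both paths absolute so they do not depend on the TaskManager's working directory
  input="$(cd "$(dirname "$input")" && pwd)/$(basename "$input")"
  case "$output" in
    /*) ;;
    *) output="$(pwd)/$output" ;;
  esac
  "$flink_home/bin/flink" run "$flink_home/examples/streaming/WordCount.jar" \
    --input "$input" --output "$output"
}
```

Usage, with a hypothetical text file: `run_wordcount words.txt wc-out`.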

3.2 The job prints its result to stdout, which ends up in the TaskManager's .out log file. View it with:

$ tail log/flink-u-xhp-taskexecutor-0-DESKTOP-B2S657C.out

(nymph,1)
(in,3)
(thy,1)
(orisons,1)
(be,4)
(all,2)
(my,1)
(sins,1)
(remember,1)
(d,4)
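The `.out` file name embeds the user and host name, so the exact name differs between machines and runs. The small sketch below picks the most recently modified TaskManager `.out` file instead of hard-coding the name. `latest_taskexecutor_out` is a hypothetical helper, and the glob assumes the `flink-*-taskexecutor-*.out` naming seen above.

```bash
#!/usr/bin/env bash
# Sketch: print the newest TaskManager stdout file in a Flink log directory.

# latest_taskexecutor_out [LOG_DIR]: print the path, or return 1 if none exists
latest_taskexecutor_out() {
  local dir="${1:-log}"
  local f latest=""
  for f in "$dir"/flink-*-taskexecutor-*.out; do
    [ -e "$f" ] || continue              # an unmatched glob stays literal; skip it
    if [ -z "$latest" ] || [ "$f" -nt "$latest" ]; then
      latest="$f"                        # keep the most recently modified file
    fi
  done
  [ -n "$latest" ] || return 1
  printf '%s\n' "$latest"
}
```

Usage, from the Flink root: `tail "$(latest_taskexecutor_out log)"`.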

3.3 You can also check the job and the cluster status in the Web UI.

4. Stop the cluster

4.1 Run the following command to quickly stop the cluster and all running components:

$ ./bin/stop-cluster.sh

Stopping taskexecutor daemon (pid: 861) on host DESKTOP-B2S657C.

No standalonesession daemon (pid: 504) is running anymore on DESKTOP-B2S657C.
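To confirm that nothing is left running after stop-cluster.sh, one option is to look for the Flink JVMs. The sketch assumes `pgrep` (procps) is available and that the standalone JobManager and TaskManager run with main classes `StandaloneSessionClusterEntrypoint` and `TaskManagerRunner` (the latter also appears in the stack trace of the Cygwin attempt below). `jps`, which ships with the JDK, lists the same JVMs by class name. `flink_processes_running` is a hypothetical name.

```bash
#!/usr/bin/env bash
# Sketch: after stop-cluster.sh, check whether any standalone Flink JVM is still alive.
# The bracket in each alternative ([t], [r]) still matches the real class name but keeps
# the regex from matching a command line that merely contains this pattern text.
FLINK_PROC_PATTERN='StandaloneSessionClusterEntrypoin[t]|TaskManagerRunne[r]'

# flink_processes_running: print matching PIDs and return 0 if any JVM matches;
# return 1 if none match, 2 if pgrep is not installed
flink_processes_running() {
  if ! command -v pgrep >/dev/null 2>&1; then
    echo "pgrep not available; try jps from the JDK instead" >&2
    return 2
  fi
  pgrep -f "$FLINK_PROC_PATTERN"
}
```

Usage: `./bin/stop-cluster.sh; flink_processes_running || echo "no Flink JVMs found"`.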

5. Installation notes

5.1 According to the official docs, Flink runs in any UNIX-like environment, such as Linux, Mac OS X and Cygwin (for Windows). So I tried both Linux and Cygwin.

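5.2 Some blog posts run Flink directly from the Windows CMD prompt. That no longer seems to be supported in the current latest release, 1.18, because the bin/ directory contains no .bat files.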

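5.3 My work computer runs Windows, so I tried both Ubuntu in VMware and Ubuntu in WSL. I had always used Linux inside VMware and was used to working through the graphical interface. Having now tried WSL, I find it uses fewer resources and lets you work with Linux just like a CMD window, which is more convenient.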

5.4 Installing and using Flink in VMware-Ubuntu by following the official docs went smoothly, with essentially no problems.

5.5 Installing and using Flink in WSL-Ubuntu ran into two small problems; once they were solved, everything went smoothly:

a) Because this was a fresh environment without Java, I installed a JDK and checked its version with the following commands:

$ sudo apt install openjdk-17-jdk

$ java -version

openjdk version "17.0.9" 2023-10-17

OpenJDK Runtime Environment (build 17.0.9+9-Ubuntu-122.04)

OpenJDK 64-Bit Server VM (build 17.0.9+9-Ubuntu-122.04, mixed mode, sharing)

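b) I didn't know how to get to the C/D/E drives, so I couldn't find my local Windows files. Searching around, I learned that the df command shows the mounted disks: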

$ df

Filesystem     1K-blocks      Used  Available Use% Mounted on

rootfs         332505340  69191028  263314312  21% /

none           332505340  69191028  263314312  21% /dev

none           332505340  69191028  263314312  21% /run

none           332505340  69191028  263314312  21% /run/lock

none           332505340  69191028  263314312  21% /run/shm

none           332505340  69191028  263314312  21% /run/user

tmpfs          332505340  69191028  263314312  21% /sys/fs/cgroup

C:\            154829012  79164540   75664472  52% /mnt/c

D:\            332505340  69191028  263314312  21% /mnt/d

E:\            421713256  12108452  409604804   3% /mnt/e

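Once I knew the mount paths, the problem was solved.

A bit embarrassing: I keep picking up Linux commands and forgetting them again, so I still have to look them up whenever I need them.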
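The mapping in the df output is mechanical: drive `X:` appears as `/mnt/x` in WSL (and as `/cygdrive/x` in Cygwin). WSL ships `wslpath` and Cygwin ships `cygpath` for exactly this conversion. The pure-bash function below (`win_to_posix`, a hypothetical name) only illustrates the rule for plain drive-letter paths. It does not handle UNC paths or custom mount points.

```bash
#!/usr/bin/env bash
# Sketch: map a Windows drive-letter path to its WSL (/mnt) or Cygwin (/cygdrive) form.
# Real tools: `wslpath -u` in WSL, `cygpath -u` in Cygwin; this only illustrates the rule.

# win_to_posix WINDOWS_PATH [PREFIX]   (PREFIX defaults to /mnt; use /cygdrive for Cygwin)
win_to_posix() {
  local winpath="$1" prefix="${2:-/mnt}"
  case "$winpath" in
    [A-Za-z]:*) ;;
    *) echo "not a drive-letter path: $winpath" >&2; return 1 ;;
  esac
  local drive rest
  drive="$(printf '%s' "${winpath:0:1}" | tr '[:upper:]' '[:lower:]')"
  rest="${winpath:2}"            # everything after "X:"
  rest=${rest//\\//}             # backslashes -> forward slashes
  printf '%s/%s%s\n' "$prefix" "$drive" "$rest"
}
```

Usage: `cd "$(win_to_posix 'D:\ProgramData\Cygwin\flink-1.18.0')"` in WSL, or pass `/cygdrive` as the second argument under Cygwin.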

5.6 Installing Flink with Cygwin:

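a) This was the first time I had heard of this software, and from its description it is quite impressive. Downloading it from the official site and installing it needs no explanation.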

b) To start, again use the df command to check the paths:

$ df

Filesystem                1K-blocks      Used  Available Use% Mounted on

D:/ProgramFiles/cygwin64  332505340  69191460  263313880  21% /

C:                        154829012  79152340   75676672  52% /cygdrive/c

E:                        421713256  12108452  409604804   3% /cygdrive/e

c) Step 1: install Java.

d) Step 2: change to the Flink root directory and run the start command:

$ cd /cygdrive/d/ProgramData/Cygwin/flink-1.18.0

$ ./bin/start-cluster.sh

Starting cluster.

Starting standalonesession daemon on host DESKTOP-B2S657C.

Starting taskexecutor daemon on host DESKTOP-B2S657C.

e) I tried to check the status in the Web UI, but the page could not be reached. The log showed the following error:

Improperly specified VM option 'MaxMetaspaceSize=268435456'

Error: Could not create the Java Virtual Machine.

Error: A fatal exception has occurred. Program will exit.

After some searching, I edited bin/config.sh under the Flink root directory and commented out the following line (around line 597):

export JVM_ARGS="${JVM_ARGS} ${jvm_params}"

After a restart, the Web UI could be reached normally.

f) Submit the job:

$ ./bin/flink run examples/streaming/WordCount.jar

Executing example with default input data.

Use --input to specify file input.

Printing result to stdout. Use --output to specify output path.

Job has been submitted with JobID 8fb0e1574bf0e78d1b7203c32e44d7bf

Program execution finished

Job with JobID 8fb0e1574bf0e78d1b7203c32e44d7bf has finished.

Job Runtime: 208 ms

Checking the output with the following command showed nothing at all:

$ tail log/flink-xhp-taskexecutor-4-DESKTOP-B2S657C.out

I then checked the log file log/flink-xhp-taskexecutor-4-DESKTOP-B2S657C.log and found the following error:

org.apache.flink.util.FlinkException: Failed to start the TaskManagerRunner.

at org.apache.flink.runtime.taskexecutor.TaskManagerRunner.runTaskManager(TaskManagerRunner.java:493) ~[flink-dist-1.18.0.jar:1.18.0]

at org.apache.flink.runtime.taskexecutor.TaskManagerRunner.lambda$runTaskManagerProcessSecurely$5(TaskManagerRunner.java:535) ~[flink-dist-1.18.0.jar:1.18.0]

at org.apache.flink.runtime.security.contexts.NoOpSecurityContext.runSecured(NoOpSecurityContext.java:28) ~[flink-dist-1.18.0.jar:1.18.0]

at org.apache.flink.runtime.taskexecutor.TaskManagerRunner.runTaskManagerProcessSecurely(TaskManagerRunner.java:535) [flink-dist-1.18.0.jar:1.18.0]

at org.apache.flink.runtime.taskexecutor.TaskManagerRunner.runTaskManagerProcessSecurely(TaskManagerRunner.java:515) [flink-dist-1.18.0.jar:1.18.0]

at org.apache.flink.runtime.taskexecutor.TaskManagerRunner.main(TaskManagerRunner.java:473) [flink-dist-1.18.0.jar:1.18.0]

Caused by: java.io.IOException: Could not create the working directory D:\ProgramFiles\cygwin64\tmp\tm_localhost:53407-df920d.

at org.apache.flink.runtime.entrypoint.WorkingDirectory.createDirectory(WorkingDirectory.java:58) ~[flink-dist-1.18.0.jar:1.18.0]

at org.apache.flink.runtime.entrypoint.WorkingDirectory.<init>(WorkingDirectory.java:39) ~[flink-dist-1.18.0.jar:1.18.0]

at org.apache.flink.runtime.entrypoint.WorkingDirectory.create(WorkingDirectory.java:88) ~[flink-dist-1.18.0.jar:1.18.0]

at org.apache.flink.runtime.entrypoint.ClusterEntrypointUtils.lambda$createTaskManagerWorkingDirectory$0(ClusterEntrypointUtils.java:152) ~[flink-dist-1.18.0.jar:1.18.0]

at org.apache.flink.runtime.entrypoint.DeterminismEnvelope.map(DeterminismEnvelope.java:49) ~[flink-dist-1.18.0.jar:1.18.0]

at org.apache.flink.runtime.entrypoint.ClusterEntrypointUtils.createTaskManagerWorkingDirectory(ClusterEntrypointUtils.java:150) ~[flink-dist-1.18.0.jar:1.18.0]

at org.apache.flink.runtime.taskexecutor.TaskManagerRunner.startTaskManagerRunnerServices(TaskManagerRunner.java:211) ~[flink-dist-1.18.0.jar:1.18.0]

at org.apache.flink.runtime.taskexecutor.TaskManagerRunner.start(TaskManagerRunner.java:293) ~[flink-dist-1.18.0.jar:1.18.0]

at org.apache.flink.runtime.taskexecutor.TaskManagerRunner.runTaskManager(TaskManagerRunner.java:491) ~[flink-dist-1.18.0.jar:1.18.0]

... 5 more

I searched online for solutions and tried them one by one, but none of them worked, and that is where I got stuck.

g) At this point, my attempt to install Flink with Cygwin came to an end.
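The manual edit in step e) can also be scripted. This is a hedged sketch, not an official fix. It comments out the same `export JVM_ARGS=...` line by matching its text rather than its line number, which may shift between Flink versions, and keeps a `.bak` copy. Note that this line exports the JVM parameters Flink computes from its configuration (the rejected `MaxMetaspaceSize` option is one of them). Commenting it out presumably makes the processes start with JVM defaults for those settings, so treat it as a workaround. `comment_out_jvm_args_export` is a hypothetical name, and `sed -i` assumes GNU sed, which is what Cygwin provides.

```bash
#!/usr/bin/env bash
# Sketch of the workaround from step e): comment out the JVM_ARGS export in Flink's bin/config.sh.
# Matches the line by content (not by line number). Assumes GNU sed for -i.

# comment_out_jvm_args_export [CONFIG_SH]
comment_out_jvm_args_export() {
  local file="${1:-bin/config.sh}"
  [ -f "$file" ] || { echo "not found: $file" >&2; return 1; }
  # Back up the untouched file only once, so a second run does not overwrite the original
  [ -e "$file.bak" ] || cp "$file" "$file.bak" || return 1
  # Keep the indentation (\1) and put "# " before the export statement (\2);
  # an already commented line no longer matches, so a second run adds no extra "#"
  sed -i 's|^\([[:space:]]*\)\(export JVM_ARGS="${JVM_ARGS} ${jvm_params}"\)|\1# \2|' "$file"
}
```

Usage, from the Flink root: `comment_out_jvm_args_export bin/config.sh && ./bin/start-cluster.sh`.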

5.7 To sum up: of the three approaches (Cygwin, VMware-Linux, WSL-Linux), my personal order of preference is WSL-Linux > VMware-Linux > Cygwin.

Copyright belongs to the original author, -XHP-. If this infringes your rights, please contact us and it will be removed.