Fixing a Missing ResourceManager After Starting a Hadoop Cluster

Problem

I installed Hadoop 3.3.4 running on Java 17. When I started Hadoop, I ran into the following problem:

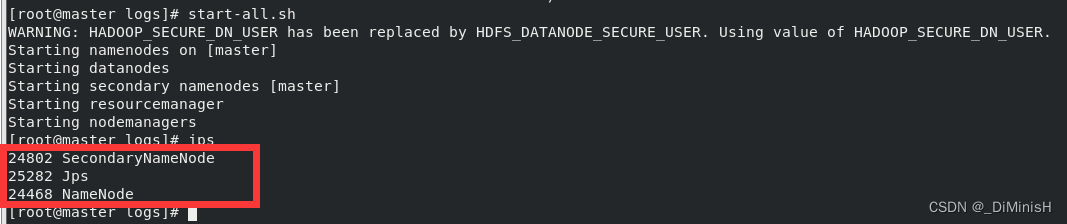

1. After startup finished, running the jps command did not show a ResourceManager process.

2. The ResourceManager log contains a java.lang.reflect.InaccessibleObjectException:

    2023-03-18 11:14:47,513 ERROR org.apache.hadoop.yarn.server.resourcemanager.ResourceManager: Error starting ResourceManager
    java.lang.ExceptionInInitializerError
        at com.google.inject.internal.cglib.reflect.$FastClassEmitter.<init>(FastClassEmitter.java:67)
        at com.google.inject.internal.cglib.reflect.$FastClass$Generator.generateClass(FastClass.java:72)
        at com.google.inject.internal.cglib.core.$DefaultGeneratorStrategy.generate(DefaultGeneratorStrategy.java:25)
        at com.google.inject.internal.cglib.core.$AbstractClassGenerator.create(AbstractClassGenerator.java:216)
        at com.google.inject.internal.cglib.reflect.$FastClass$Generator.create(FastClass.java:64)
        at com.google.inject.internal.BytecodeGen.newFastClass(BytecodeGen.java:204)
        at com.google.inject.internal.ProviderMethod$FastClassProviderMethod.<init>(ProviderMethod.java:256)
        at com.google.inject.internal.ProviderMethod.create(ProviderMethod.java:71)
        at com.google.inject.internal.ProviderMethodsModule.createProviderMethod(ProviderMethodsModule.java:275)
        at com.google.inject.internal.ProviderMethodsModule.getProviderMethods(ProviderMethodsModule.java:144)
        at com.google.inject.internal.ProviderMethodsModule.configure(ProviderMethodsModule.java:123)
        at com.google.inject.spi.Elements$RecordingBinder.install(Elements.java:340)
        at com.google.inject.spi.Elements$RecordingBinder.install(Elements.java:349)
        at com.google.inject.AbstractModule.install(AbstractModule.java:122)
        at com.google.inject.servlet.ServletModule.configure(ServletModule.java:52)
        at com.google.inject.AbstractModule.configure(AbstractModule.java:62)
        at com.google.inject.spi.Elements$RecordingBinder.install(Elements.java:340)
        at com.google.inject.spi.Elements.getElements(Elements.java:110)
        at com.google.inject.internal.InjectorShell$Builder.build(InjectorShell.java:138)
        at com.google.inject.internal.InternalInjectorCreator.build(InternalInjectorCreator.java:104)
        at com.google.inject.Guice.createInjector(Guice.java:96)
        at com.google.inject.Guice.createInjector(Guice.java:73)
        at com.google.inject.Guice.createInjector(Guice.java:62)
        at org.apache.hadoop.yarn.webapp.WebApps$Builder.build(WebApps.java:417)
        at org.apache.hadoop.yarn.webapp.WebApps$Builder.start(WebApps.java:465)
        at org.apache.hadoop.yarn.server.resourcemanager.ResourceManager.startWepApp(ResourceManager.java:1389)
        at org.apache.hadoop.yarn.server.resourcemanager.ResourceManager.serviceStart(ResourceManager.java:1498)
        at org.apache.hadoop.service.AbstractService.start(AbstractService.java:194)
        at org.apache.hadoop.yarn.server.resourcemanager.ResourceManager.main(ResourceManager.java:1699)
    Caused by: java.lang.reflect.InaccessibleObjectException: Unable to make protected final java.lang.Class java.lang.ClassLoader.defineClass(java.lang.String,byte[],int,int,java.security.ProtectionDomain) throws java.lang.ClassFormatError accessible: module java.base does not "opens java.lang" to unnamed module @5e922278
        at java.base/java.lang.reflect.AccessibleObject.checkCanSetAccessible(AccessibleObject.java:354)
        at java.base/java.lang.reflect.AccessibleObject.checkCanSetAccessible(AccessibleObject.java:297)
        at java.base/java.lang.reflect.Method.checkCanSetAccessible(Method.java:199)
        at java.base/java.lang.reflect.Method.setAccessible(Method.java:193)
        at com.google.inject.internal.cglib.core.$ReflectUtils$2.run(ReflectUtils.java:56)
        at java.base/java.security.AccessController.doPrivileged(AccessController.java:318)
        at com.google.inject.internal.cglib.core.$ReflectUtils.<clinit>(ReflectUtils.java:46)
        ... 29 more
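
If the same symptom appears on another machine, a quick way to confirm it is this exact failure is to check the jps output and then search the ResourceManager log for the Caused by line. This is a minimal sketch, assuming the default log directory ($HADOOP_HOME/logs) and a log file name containing "resourcemanager" (in Hadoop 3 it is typically hadoop-<user>-resourcemanager-<host>.log); both helper names are hypothetical.

```shell
#!/usr/bin/env bash
# Sketch: helpers for diagnosing a missing ResourceManager.
# Assumption: RM logs are in $HADOOP_HOME/logs and their names contain
# "resourcemanager". Both function names are hypothetical.

# Prints the first "Caused by: ...InaccessibleObjectException" line from each
# given log file; returns non-zero if nothing matched (or a file was unreadable).
rm_failure_reason() {
  grep -h -s -m 1 'Caused by: java.lang.reflect.InaccessibleObjectException' "$@"
}

# Returns 0 if jps output (read from stdin) lists a ResourceManager process.
has_resourcemanager() {
  awk '$2 == "ResourceManager" { found = 1 } END { exit !found }'
}

# Usage on the master node:
#   if ! jps | has_resourcemanager; then
#     rm_failure_reason "${HADOOP_HOME:-/opt/hadoop-3.3.4}"/logs/*resourcemanager*.log
#   fi
```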

Solution

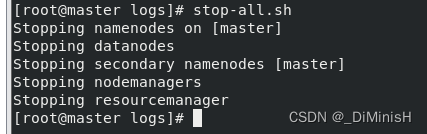

1. Stop Hadoop

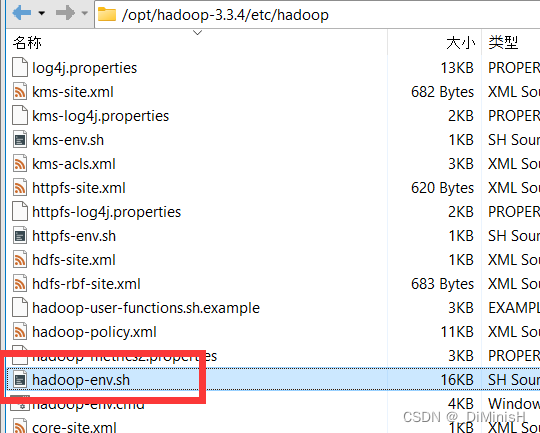
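
Stopping can be done with stop-all.sh from $HADOOP_HOME/sbin (the counterpart of the start-all.sh used in step 5). Before changing any configuration, it helps to confirm on each node that nothing is still running. The hypothetical helper below filters jps output for the usual HDFS and YARN daemon names; that name list is an assumption about a typical deployment.

```shell
#!/usr/bin/env bash
# Sketch: print Hadoop daemons still present in jps output (read from stdin).
# Assumption: the names below cover a typical HDFS + YARN cluster.
# remaining_hadoop_daemons is a hypothetical helper name.
remaining_hadoop_daemons() {
  awk '$2 ~ /^(NameNode|SecondaryNameNode|DataNode|ResourceManager|NodeManager|JobHistoryServer)$/ { print $2 }'
}

# Usage on a node after stopping the cluster:
#   stop-all.sh
#   left=$(jps | remaining_hadoop_daemons)
#   [ -z "$left" ] && echo "all stopped" || echo "still running: $left"
```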

2. Edit hadoop-env.sh on every machine

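Mine is in this directory:

/opt/hadoop-3.3.4/etc/hadoop

Find the section shown in the screenshot below and add this line:

export HADOOP_OPTS="--add-opens java.base/java.lang=ALL-UNNAMED"

This passes an extra option to the Java runtime: it opens the java.lang package of the java.base module to classpath (unnamed-module) code, which is exactly the access the Caused by line in the log reports as missing. Note, though, that the comments above this spot in the file say that setting options this way is not recommended.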

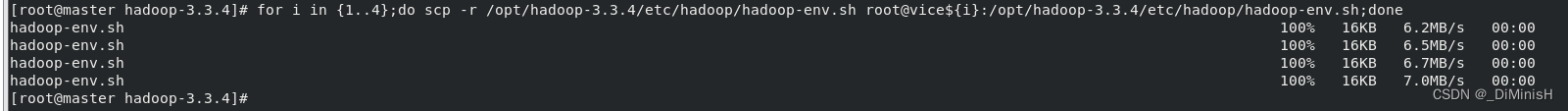
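
Because the same line has to end up in hadoop-env.sh on every machine, an idempotent helper avoids adding it twice if the step is repeated. This is a sketch under assumptions: the default path is the author's install location, appending at the end of the file is assumed to be equivalent to placing the line next to the commented HADOOP_OPTS examples (the start scripts simply source the file), and ensure_add_opens is a hypothetical name. For context, the Hadoop 3.3 line officially supports Java 8 and Java 11 at runtime, so running on one of those avoids needing this flag.

```shell
#!/usr/bin/env bash
# Sketch: append the author's HADOOP_OPTS line to hadoop-env.sh only if it is
# not already present, so repeating the step never duplicates it.
# ensure_add_opens is a hypothetical helper; the default path is the author's.
ADD_OPENS_LINE='export HADOOP_OPTS="--add-opens java.base/java.lang=ALL-UNNAMED"'

ensure_add_opens() {
  local env_file="${1:-/opt/hadoop-3.3.4/etc/hadoop/hadoop-env.sh}"
  # -x: match the whole line; -F: treat the pattern as a fixed string.
  if ! grep -qxF -- "$ADD_OPENS_LINE" "$env_file"; then
    # If the file does not end with a newline, add one first.
    if [ -s "$env_file" ] && [ -n "$(tail -c 1 "$env_file")" ]; then
      printf '\n' >> "$env_file"
    fi
    printf '%s\n' "$ADD_OPENS_LINE" >> "$env_file"
  fi
}

# Usage (on the master, before distributing the file):
#   ensure_add_opens /opt/hadoop-3.3.4/etc/hadoop/hadoop-env.sh
```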

Then distribute the file to the other machines:

for i in {1..4}; do scp -r /opt/hadoop-3.3.4/etc/hadoop/hadoop-env.sh root@vice${i}:/opt/hadoop-3.3.4/etc/hadoop/hadoop-env.sh; done

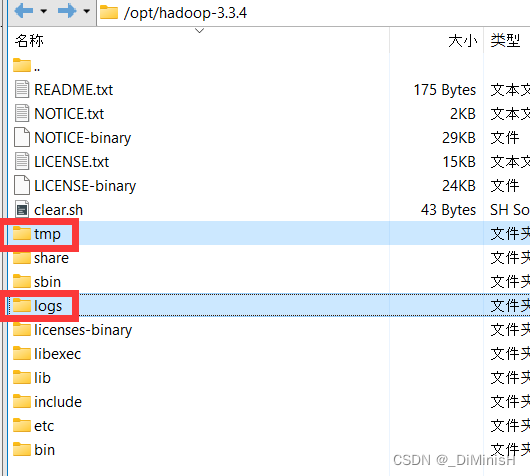
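
The distribution loop above can also be wrapped in a function that checks over ssh that each copy really contains the new option. A sketch only: the host names vice1 to vice4, the root user and the install path come from the author's setup, passwordless SSH is assumed, and DRY_RUN, run and distribute_hadoop_env are hypothetical names. With DRY_RUN=1 it prints the commands instead of executing them, which is handy for a preview.

```shell
#!/usr/bin/env bash
# Sketch: copy hadoop-env.sh to each worker and confirm the --add-opens option
# arrived. Hosts, user and path follow the author's setup (assumptions).
# Set DRY_RUN=1 to print the commands instead of executing them.
ENV_FILE=/opt/hadoop-3.3.4/etc/hadoop/hadoop-env.sh

run() {
  if [ -n "${DRY_RUN:-}" ]; then
    echo "$*"
  else
    "$@"
  fi
}

distribute_hadoop_env() {
  local host
  for host in "$@"; do
    run scp "$ENV_FILE" "root@${host}:${ENV_FILE}" || return 1
    # The pattern has no spaces, so it survives ssh's remote word splitting;
    # grep exits non-zero on the remote side if the option is missing.
    run ssh "root@${host}" grep -qF 'java.base/java.lang=ALL-UNNAMED' "$ENV_FILE" || return 1
  done
}

# Usage from the master:
#   distribute_hadoop_env vice1 vice2 vice3 vice4
```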

3. Delete the contents of the tmp and logs directories on all machines

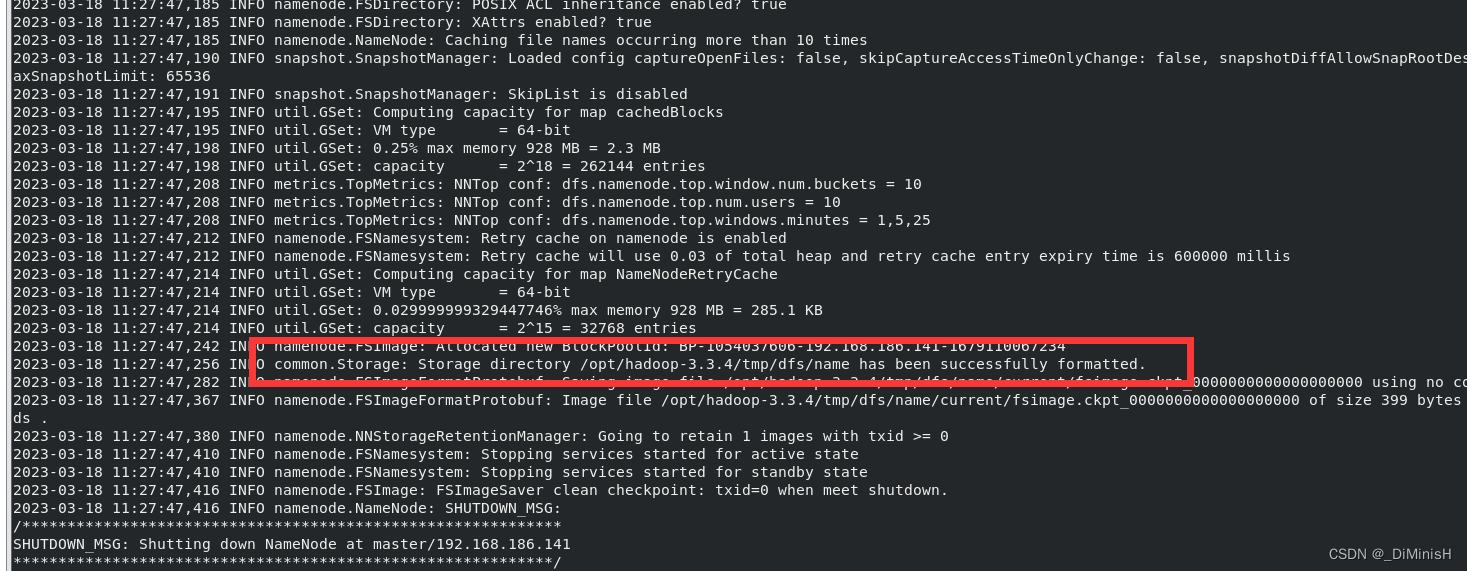
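
This step can be scripted per node. The tmp directory is whatever hadoop.tmp.dir points to in core-site.xml (hdfs getconf -confKey hadoop.tmp.dir prints it), so the paths in the usage line are placeholders to replace with your own. With default settings the NameNode metadata and DataNode blocks live under hadoop.tmp.dir, so clearing it erases everything stored in HDFS, which is why the NameNode is reformatted in the next step. The hypothetical helper clear_dir_contents empties each directory but keeps the directory itself and refuses an empty argument or /.

```shell
#!/usr/bin/env bash
# Sketch: delete everything inside the given directories, keeping the
# directories themselves. Refuses "" and "/" as a basic safety check.
# clear_dir_contents is a hypothetical helper; pass your own tmp/logs paths.
clear_dir_contents() {
  local dir
  for dir in "$@"; do
    if [ -z "$dir" ] || [ "$dir" = "/" ]; then
      echo "refusing to clear '$dir'" >&2
      return 1
    fi
    [ -d "$dir" ] || continue          # nothing to clear on this node
    find "$dir" -mindepth 1 -delete    # remove contents, not the dir itself
  done
}

# Usage on every node (placeholder paths for hadoop.tmp.dir and the logs dir):
#   clear_dir_contents /opt/hadoop-3.3.4/tmp /opt/hadoop-3.3.4/logs
```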

4. Reformat the NameNode on the master

hdfs namenode -format

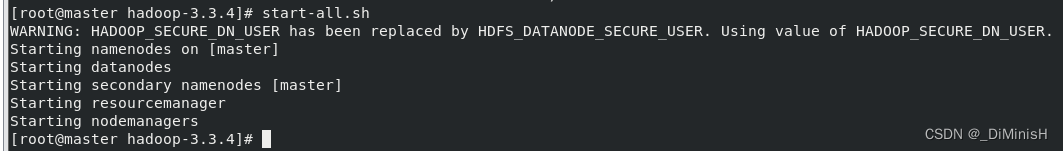

5. Start Hadoop

start-all.sh

6. Check whether the problem is fixed

(1) Run jps on the master server

ResourceManager now appears:

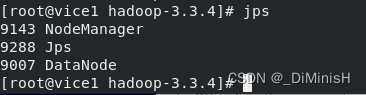

(2) Run jps on the worker nodes

Everything is running normally.

(3) Open http://192.168.186.141:8088/

It loads normally:

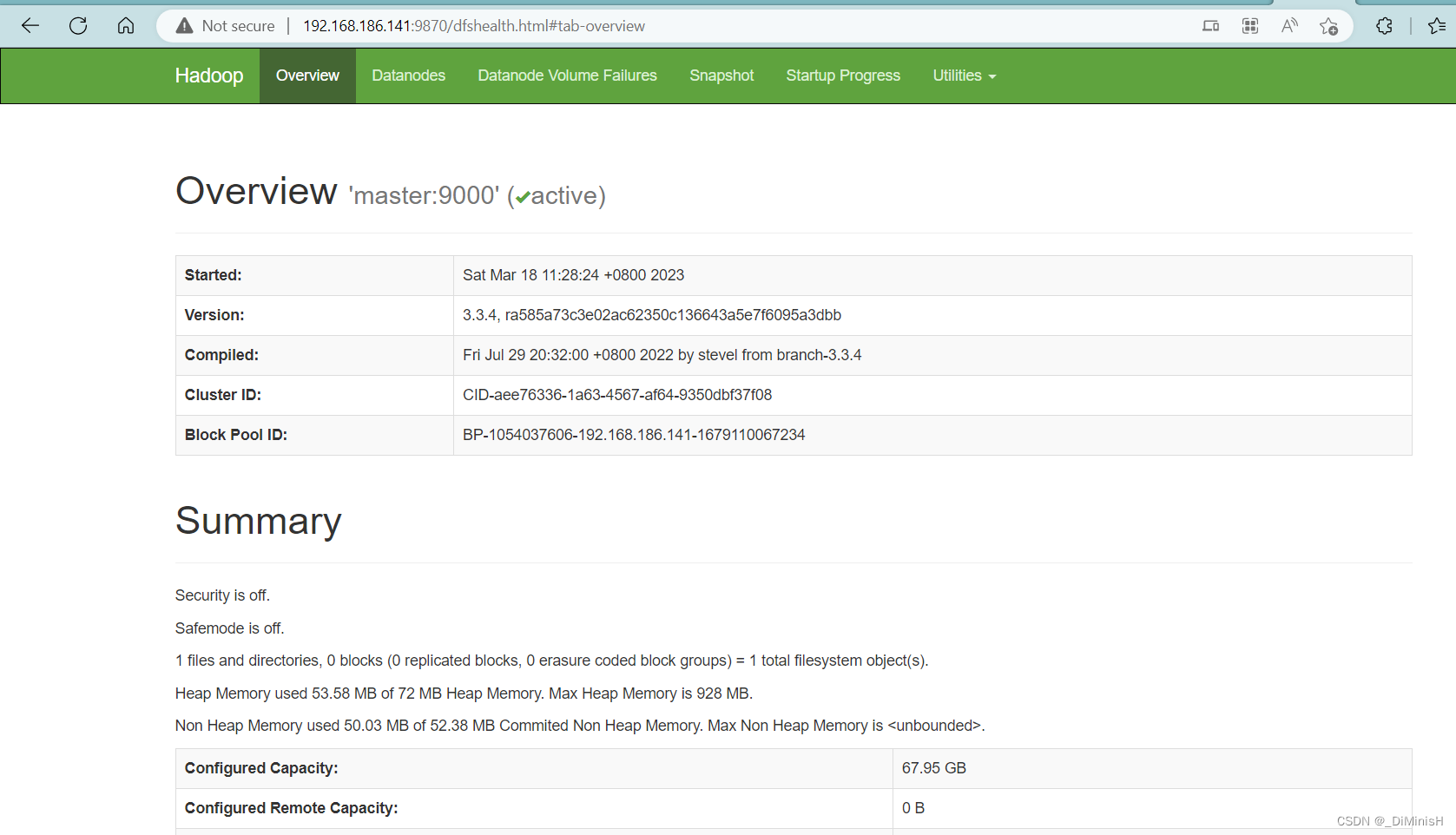

(4) Open http://192.168.186.141:9870/

Everything works; the problem is solved.
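
The checks in step 6 can be bundled into one script run from the master. A sketch under assumptions: passwordless SSH as root to workers vice1 to vice4, jps available on the remote PATH (non-interactive SSH sessions may not load the same environment), the master running NameNode and ResourceManager, and each worker running DataNode and NodeManager. Ports 8088 and 9870 are the Hadoop 3 default web UI ports for the ResourceManager and NameNode, matching the URLs above. All function names are hypothetical.

```shell
#!/usr/bin/env bash
# Sketch: post-start checks. Hosts, user and expected daemons are
# assumptions based on the author's cluster; adjust them to yours.

# Returns 0 if every daemon name passed as an argument appears in jps output on stdin.
has_daemons() {
  local listing name
  listing=$(awk '{ print $2 }')
  for name in "$@"; do
    printf '%s\n' "$listing" | grep -qx "$name" || return 1
  done
}

# Returns 0 if the URL answers with a non-error HTTP status within 5 seconds.
ui_ok() {
  curl -fsSL -o /dev/null --max-time 5 "$1"
}

check_cluster() {
  local host
  jps | has_daemons NameNode ResourceManager || echo "master: daemon missing"
  for host in "$@"; do
    ssh "root@${host}" jps | has_daemons DataNode NodeManager || echo "${host}: daemon missing"
  done
  ui_ok http://192.168.186.141:8088/ || echo "ResourceManager UI unreachable"
  ui_ok http://192.168.186.141:9870/ || echo "NameNode UI unreachable"
}

# Usage from the master:
#   check_cluster vice1 vice2 vice3 vice4
```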

Copyright belongs to the original author, _DiMinisH.