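I. Docker Compose Overview

Compose is Docker's official open-source project for quickly orchestrating groups of Docker containers. With a Dockerfile, as introduced earlier, it is easy to define a single application container. In day-to-day development, however, a task often needs several containers working together. A web project, for example, usually needs a database container in addition to the web service container itself; a distributed application typically consists of several services, each deployed as multiple instances. Starting and stopping every service by hand is slow and hard to maintain. What is needed is a tool that manages a group of related application containers, and that tool is Docker Compose.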

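Compose has two key concepts:

Project: a complete business unit made up of a group of related application containers, defined in a docker-compose.yml file.

Service: the container definition for one application, which can in practice run as several container instances of the same image.

By default, Compose reads the docker-compose.yml file in the directory where it is run and treats it as one project. A project contains one or more services, and each service defines the image, parameters, and dependencies its containers run with. A single service can have multiple container instances. In short, docker-compose is an orchestration tool for Docker containers whose main job is managing multiple containers that depend on one another.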

II. Installing docker-compose

1. Install the docker-compose binary from GitHub

- Download the docker-compose binary (v2.5.0 is used here)

Official documentation: Install Docker Compose | Docker Documentation

https://github.com/docker/compose/releases/download/v2.5.0/docker-compose-linux-x86_64

- Rename the file and make it executable

[root@offline-client bin]# mv docker-compose-linux-x86_64 docker-compose

[root@offline-client bin]# chmod +x docker-compose

[root@offline-client bin]# docker-compose version

Docker Compose version v2.5.0
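
The download and permission steps above can be scripted. The following is a minimal sketch, not an official installer: the function names `compose_download_url` and `install_compose` and the default target path are illustrative assumptions, and the asset naming is only assumed to follow the pattern of the v2.5.0 Linux x86_64 URL shown above.

```shell
#!/bin/bash
# Build the GitHub release URL for a given Compose version.
# OS and ARCH default to the current machine (lowercased `uname -s`, `uname -m`).
compose_download_url() {
    local version="$1"
    local os="${2:-$(uname -s | tr '[:upper:]' '[:lower:]')}"
    local arch="${3:-$(uname -m)}"
    echo "https://github.com/docker/compose/releases/download/${version}/docker-compose-${os}-${arch}"
}

# Hypothetical helper: download the binary, mark it executable, print its version.
install_compose() {
    local version="$1"
    local target="${2:-/usr/local/bin/docker-compose}"
    curl -fSL "$(compose_download_url "$version")" -o "$target" || return 1
    chmod +x "$target"
    "$target" version
}

# Example (not executed here):
#   install_compose v2.5.0
```

Keeping the URL construction in its own function makes it easy to print and review the URL before anything is downloaded.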

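2. Install with pip

pip install docker-compose

Note that the docker-compose package on PyPI is the legacy Python-based Compose v1, not the v2 binary installed above.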

III. Docker Compose in Practice

1. MySQL example

1.1 Running MySQL with docker run

    docker run -itd -p 3306:3306 -m="1024M" --privileged=true \
        -v /data/software/mysql/conf/:/etc/mysql/conf.d \
        -v /data/software/mysql/data:/var/lib/mysql \
        -v /data/software/mysql/log/:/var/log/mysql/ \
        -e MYSQL_ROOT_PASSWORD=winner@001 --name=mysql mysql:5.7.19

1.2 docker-compose-mysql.yml

The same container expressed as a Compose file. The memory limit and the privileged flag from the docker run command are not carried over, and the passwords differ.

    version: "3"
    services:
      mysql:
        image: mysql:5.7.19
        restart: always
        container_name: mysql
        ports:
          - "3306:3306"
        volumes:
          - /data/software/mysql/conf/:/etc/mysql/conf.d
          - /data/software/mysql/data:/var/lib/mysql
          - /data/software/mysql/log/:/var/log/mysql
        environment:
          MYSQL_ROOT_PASSWORD: "123456"
          MYSQL_DATABASE: kangll
          MYSQL_USER: kangll
          MYSQL_PASSWORD: "123456"

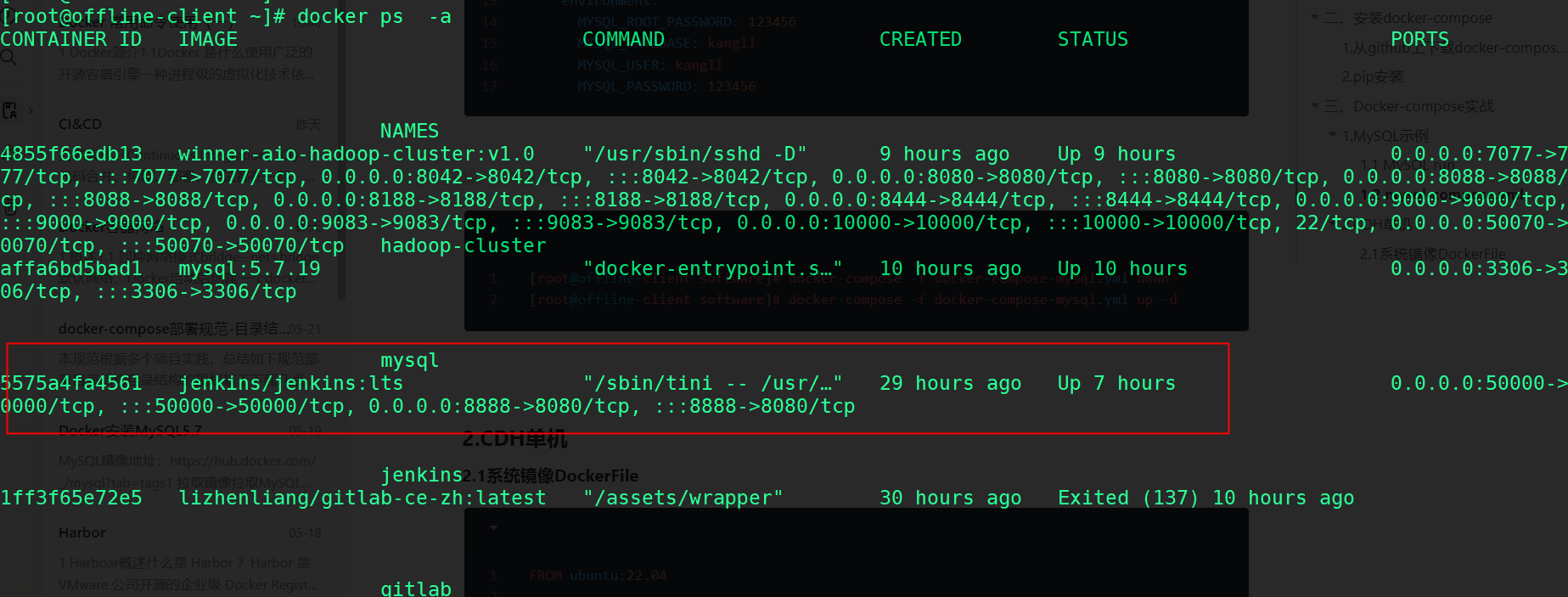

Stopping and starting the MySQL container

[root@offline-client software]# docker-compose -f docker-compose-mysql.yml down

[root@offline-client software]# docker-compose -f docker-compose-mysql.yml up -d

Run docker ps to confirm that the MySQL container is up.
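
A container that shows as running in docker ps may still be initializing its database, so scripts that depend on MySQL usually poll until the server answers. A minimal sketch of such a polling helper follows; the function name `retry` is hypothetical, and the commented usage line assumes the container name and root password from the Compose file above.

```shell
#!/bin/bash
# retry ATTEMPTS DELAY_SECONDS CMD...
# Runs CMD until it succeeds; returns 0 on success, 1 once ATTEMPTS is exhausted.
retry() {
    local attempts="$1"
    local delay="$2"
    shift 2
    local i=1
    while :; do
        "$@" && return 0
        [ "$i" -ge "$attempts" ] && return 1
        i=$((i + 1))
        sleep "$delay"
    done
}

# Example (not executed here): wait up to about 60 seconds for MySQL to respond.
#   retry 30 2 docker exec mysql mysqladmin ping -uroot -p123456 --silent
```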

2. Single-node CDH

2.1 Base system image Dockerfile

    FROM ubuntu:20.04
    # Author information
    LABEL maintainer="kangll"
    # Install dependencies, allow root login over SSH, and set the time zone
    RUN apt-get clean && apt-get -y update \
        && apt-get install -y openssh-server lrzsz vim net-tools openssl gcc openssh-client inetutils-ping \
        && sed -ri 's/^#?PermitRootLogin\s+.*/PermitRootLogin yes/' /etc/ssh/sshd_config \
        && sed -ri 's/UsePAM yes/#UsePAM yes/g' /etc/ssh/sshd_config \
        && ln -sf /usr/share/zoneinfo/Asia/Shanghai /etc/localtime \
        # Add the winner user and set the root and winner passwords
        && useradd winner \
        && echo "root:kangll" | chpasswd \
        && echo "winner:kangll" | chpasswd \
        && echo "root ALL=(ALL) ALL" >> /etc/sudoers \
        && echo "winner ALL=(ALL) ALL" >> /etc/sudoers
    # Generate host keys (disabled)
    #&& ssh-keygen -t dsa -f /etc/ssh/ssh_host_dsa_key \
    #&& ssh-keygen -t rsa -f /etc/ssh/ssh_host_rsa_key
    # Create the sshd runtime directory and expose port 22
    RUN mkdir -p /var/run/sshd
    EXPOSE 22
    # Run sshd in the foreground as the container's main process
    CMD ["/usr/sbin/sshd", "-D"]

2.2 CDH image Dockerfile

    # Base system image built in section 2.1
    FROM ubuntu20-ssh-base:v1.0
    LABEL maintainer="kangll"
    # Set up key-based SSH login for root and relax host key checking
    RUN ssh-keygen -t rsa -f ~/.ssh/id_rsa -P '' && \
        cat /root/.ssh/id_rsa.pub >> /root/.ssh/authorized_keys && \
        sed -i 's/PermitEmptyPasswords yes/PermitEmptyPasswords no /' /etc/ssh/sshd_config && \
        sed -i 's/PermitRootLogin without-password/PermitRootLogin yes /' /etc/ssh/sshd_config && \
        echo " StrictHostKeyChecking no" >> /etc/ssh/ssh_config && \
        echo " UserKnownHostsFile /dev/null" >> /etc/ssh/ssh_config
    # JDK (ADD unpacks local tar.gz archives automatically)
    ADD jdk-8u162-linux-x64.tar.gz /hadoop/software/
    ENV JAVA_HOME=/hadoop/software/jdk1.8.0_162
    ENV CLASSPATH=.:$JAVA_HOME/lib/dt.jar:$JAVA_HOME/lib/tools.jar
    ENV PATH=$JAVA_HOME/bin:$PATH
    # hadoop
    ADD hadoop-2.6.0-cdh5.15.0.tar.gz /hadoop/software/
    ENV HADOOP_HOME=/hadoop/software/hadoop
    ENV HADOOP_CONF_DIR=/hadoop/software/hadoop/etc/hadoop
    ENV PATH=$PATH:$HADOOP_HOME/bin:$HADOOP_HOME/sbin
    # spark
    ADD spark-1.6.0-cdh5.15.0.tar.gz /hadoop/software/
    ENV SPARK_HOME=/hadoop/software/spark
    ENV PATH=$PATH:$SPARK_HOME/bin:$SPARK_HOME/sbin
    # hbase
    ADD hbase-1.2.0-cdh5.15.0.tar.gz /hadoop/software/
    ENV HBASE_HOME=/hadoop/software/hbase
    ENV PATH=$PATH:$HBASE_HOME/bin
    # hive
    ADD hive-1.1.0-cdh5.15.0.tar.gz /hadoop/software/
    ENV HIVE_HOME=/hadoop/software/hive
    ENV HIVE_CONF_DIR=/hadoop/software/hive/conf
    ENV PATH=$HIVE_HOME/bin:$PATH
    # scala
    ADD scala-2.10.5.tar.gz /hadoop/software/
    ENV SCALA_HOME=/hadoop/software/scala
    ENV PATH=$PATH:$SCALA_HOME/bin
    # azkaban
    ADD azkaban.3.30.1.tar.gz /hadoop/software/
    # Create version-independent symlinks that the *_HOME variables point to
    WORKDIR /hadoop/software/
    RUN ln -s jdk1.8.0_162 jdk \
        && ln -s spark-1.6.0-cdh5.15.0 spark \
        && ln -s scala-2.10.5 scala \
        && ln -s hadoop-2.6.0-cdh5.15.0 hadoop \
        && ln -s hbase-1.2.0-cdh5.15.0 hbase \
        && ln -s hive-1.1.0-cdh5.15.0 hive
    WORKDIR /hadoop/software/hadoop/share/hadoop
    RUN ln -s mapreduce2 mapreduce
    WORKDIR /hadoop/software/
    # Give the winner user ownership of the software directory
    RUN chown -R winner:winner /hadoop/software/ && \
        chmod -R +x /hadoop/software/
    # Cluster startup script
    COPY start-cluster.sh /bin/
    RUN chmod +x /bin/start-cluster.sh
    # Start the cluster, then keep the container alive
    CMD ["bash","-c","/bin/start-cluster.sh && tail -f /dev/null"]

The start-cluster.sh script makes repeated starts safe and lets you follow the startup progress in the container log.

    #!/bin/bash
    #########################################################################
    #                                                                       #
    # Purpose:   winner-aio-docker, docker-compose install, env init        #
    # Author:    kangll                                                     #
    # Created:   2022-02-28                                                 #
    # Modified:  2022-04-07                                                 #
    # Version:   2.0v                                                       #
    # Schedule:  run once as an initialization task                         #
    # Arguments: none                                                       #
    #########################################################################
    source /etc/profile
    source ~/.bash_profile
    set -e
    rm -rf /tmp/*
    ### Format the NameNode only if its metadata directory does not exist yet
    NNPath=/hadoop/software/hadoop/data/dfs/name/current
    if [ -d "$NNPath" ]; then
        echo "--------------------> NameNode already formatted <--------------------"
    else
        echo "--------------------> Formatting NameNode <--------------------"
        /hadoop/software/hadoop/bin/hdfs namenode -format
    fi

    # NameNode and SecondaryNameNode monitor (both match "NameNode", so 2 are expected)
    namenodeCount=$(ps -ef | grep NameNode | grep -v "grep" | wc -l)
    if [ "$namenodeCount" -le 1 ]; then
        /usr/sbin/sshd
        echo "--------------------> Starting NameNode <--------------------"
        start-dfs.sh
    else
        echo "--------------------> NameNode and SecondaryNameNode are running <--------------------"
    fi
    # DataNode
    DataNodeCount=$(ps -ef | grep DataNode | grep -v "grep" | wc -l)
    if [ "$DataNodeCount" -eq 0 ]; then
        /usr/sbin/sshd
        echo "--------------------> Starting DataNode <--------------------"
        start-dfs.sh
    else
        echo "--------------------> DataNode is running <--------------------"
    fi

    # HBase HMaster monitor
    hmasterCount=$(ps -ef | grep HMaster | grep -v "grep" | wc -l)
    if [ "$hmasterCount" -eq 0 ]; then
        /usr/sbin/sshd
        echo "--------------------> Starting HMaster <--------------------"
        start-hbase.sh
    else
        echo "--------------------> HMaster is running <--------------------"
    fi
    # HBase RegionServer monitor
    regionCount=$(ps -ef | grep HRegionServer | grep -v "grep" | wc -l)
    if [ "$regionCount" -eq 0 ]; then
        echo "--------------------> Starting RegionServer <--------------------"
        /usr/sbin/sshd
        start-hbase.sh
    else
        echo "--------------------> RegionServer is running <--------------------"
    fi

    # YARN ResourceManager
    ResourceManagerCount=$(ps -ef | grep ResourceManager | grep -v "grep" | wc -l)
    if [ "$ResourceManagerCount" -eq 0 ]; then
        /usr/sbin/sshd
        echo "--------------------> Starting ResourceManager <--------------------"
        start-yarn.sh
    else
        echo "--------------------> ResourceManager is running <--------------------"
    fi
    # YARN NodeManager
    NodeManagerCount=$(ps -ef | grep NodeManager | grep -v "grep" | wc -l)
    if [ "$NodeManagerCount" -eq 0 ]; then
        /usr/sbin/sshd
        echo "--------------------> Starting NodeManager <--------------------"
        start-yarn.sh
    else
        echo "--------------------> NodeManager is running <--------------------"
    fi

    # Hive metastore (runs as a RunJar process)
    runJar=$(ps -ef | grep RunJar | grep -v "grep" | wc -l)
    if [ "$runJar" -eq 0 ]; then
        /usr/sbin/sshd
        echo "--------------------> Starting Hive metastore <--------------------"
        hive --service metastore &
    else
        echo "--------------------> Hive metastore is running <--------------------"
    fi

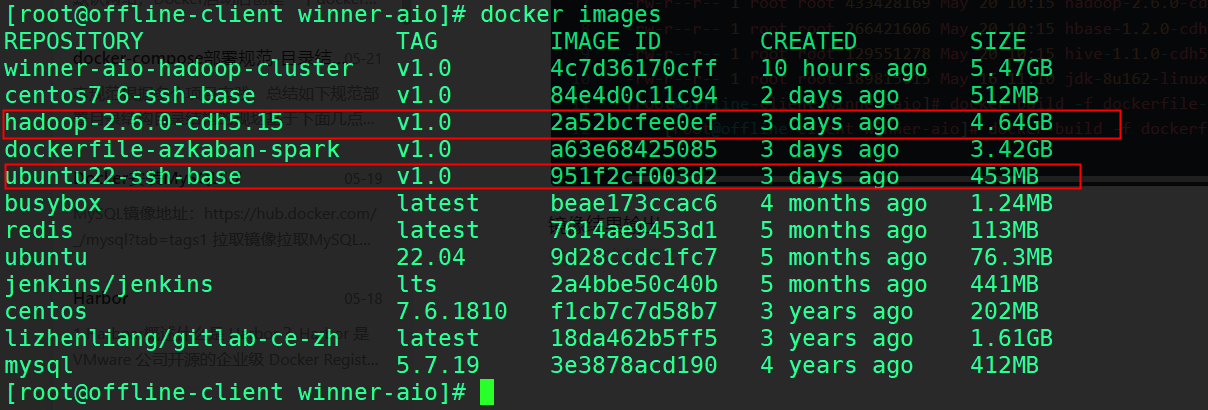
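
The start-cluster script repeats one pattern for every service: count the matching processes, then start the service if too few are found. That pattern could be factored into a helper. The sketch below is an assumption about how such a refactor might look, not the author's script; `count_procs` and `ensure_running` are hypothetical names.

```shell
#!/bin/bash
# Count running processes whose command line contains PATTERN
# (the same ps/grep approach the script uses).
count_procs() {
    ps -ef | grep -- "$1" | grep -v grep | wc -l
}

# ensure_running LABEL PATTERN MIN_COUNT START_CMD...
# Runs START_CMD only when fewer than MIN_COUNT matching processes exist.
ensure_running() {
    local label="$1"
    local pattern="$2"
    local min_count="$3"
    shift 3
    if [ "$(count_procs "$pattern")" -lt "$min_count" ]; then
        echo "-----> starting $label <-----"
        "$@"
    else
        echo "-----> $label is running <-----"
    fi
}

# Example calls mirroring the script (not executed here):
#   ensure_running "NameNode/SecondaryNameNode" NameNode 2 start-dfs.sh
#   ensure_running "HMaster" HMaster 1 start-hbase.sh
```

The NameNode call uses a minimum of 2 because the pattern matches both NameNode and SecondaryNameNode, which corresponds to the `-le 1` test in the script.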

2.3 Building the images

    [root@offline-client winner-aio]# ll
    total 1147020
    -rw-r--r-- 1 root root       906 May 21 17:24 dockerfile-centos
    -rw-r--r-- 1 root root      1700 May 20 18:44 dockerfile-hadoop
    -rw-r--r-- 1 root root        42 May 18 16:23 dockerfile-mysql5.7
    -rw-r--r-- 1 root root       971 May 19 15:00 dockerfile-ubuntu
    -rw-r--r-- 1 root root 433428169 May 20 10:15 hadoop-2.6.0-cdh5.15.0.tar.gz
    -rw-r--r-- 1 root root 266421606 May 20 10:15 hbase-1.2.0-cdh5.15.0.tar.gz
    -rw-r--r-- 1 root root 129551278 May 20 10:15 hive-1.1.0-cdh5.15.0.tar.gz
    -rw-r--r-- 1 root root 189815615 May 16 11:10 jdk-8u162-linux-x64.tar.gz
    ## Build the images (the tags must match the FROM line in 2.2 and the image in 2.5)
    docker build -f dockerfile-ubuntu -t ubuntu20-ssh-base:v1.0 .
    docker build -f dockerfile-hadoop -t hadoop-cluster:v1.0 .

Image build output
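
An ADD instruction fails the build when its archive is missing from the build context, and the directory listing in this section does not show every archive the CDH Dockerfile adds (the Spark and Scala tarballs, for example). A quick check before running docker build can catch this early. A minimal sketch; the function name `check_build_context` is hypothetical.

```shell
#!/bin/bash
# check_build_context CONTEXT_DIR FILE...
# Prints each file missing from CONTEXT_DIR to stderr; returns 1 if any is missing.
check_build_context() {
    local context="$1"
    shift
    local missing=0
    local f
    for f in "$@"; do
        if [ ! -f "$context/$f" ]; then
            echo "missing: $f" >&2
            missing=1
        fi
    done
    return "$missing"
}

# Example (not executed here), using archive names from the CDH Dockerfile:
#   check_build_context . jdk-8u162-linux-x64.tar.gz hadoop-2.6.0-cdh5.15.0.tar.gz \
#       spark-1.6.0-cdh5.15.0.tar.gz scala-2.10.5.tar.gz start-cluster.sh \
#     && docker build -f dockerfile-hadoop -t hadoop-cluster:v1.0 .
```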

2.4 Creating the bridge network

docker network create -d bridge hadoop-network
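
`docker network create` returns an error if a network with that name already exists, so a setup script that may run more than once can check first. A minimal sketch; the function name `ensure_network` is hypothetical.

```shell
#!/bin/bash
# ensure_network NAME
# Creates the bridge network NAME only if `docker network inspect` cannot find it.
ensure_network() {
    local name="$1"
    docker network inspect "$name" >/dev/null 2>&1 \
        || docker network create -d bridge "$name"
}

# Example (not executed here):
#   ensure_network hadoop-network
```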

2.5 CDH Compose file

    version: "3"
    services:
      cdh:
        image: hadoop-cluster:v1.0
        container_name: hadoop-cluster
        hostname: hadoop
        networks:
          - hadoop-network
        ports:
          - "9000:9000"
          - "8088:8088"
          - "50070:50070"
          - "8188:8188"
          - "8042:8042"
          - "10000:10000"
          - "9083:9083"
          - "8080:8080"
          - "7077:7077"
          - "8444:8444"
        # Map data, log, and config paths to the local data disk
        volumes:
          - /data/software/hadoop/data:/hadoop/software/hadoop/data
          - /data/software/hadoop/logs:/hadoop/software/hadoop/logs
          - /data/software/hadoop/conf:/hadoop/software/hadoop/etc/hadoop
          - /data/software/hbase/conf:/hadoop/software/hbase/conf
          - /data/software/hbase/logs:/hadoop/software/hbase/logs
          - /data/software/hive/conf:/hadoop/software/hive/conf
    networks:
      hadoop-network:
        # Reuse the bridge network created in section 2.4 instead of a new project-scoped one
        external: true

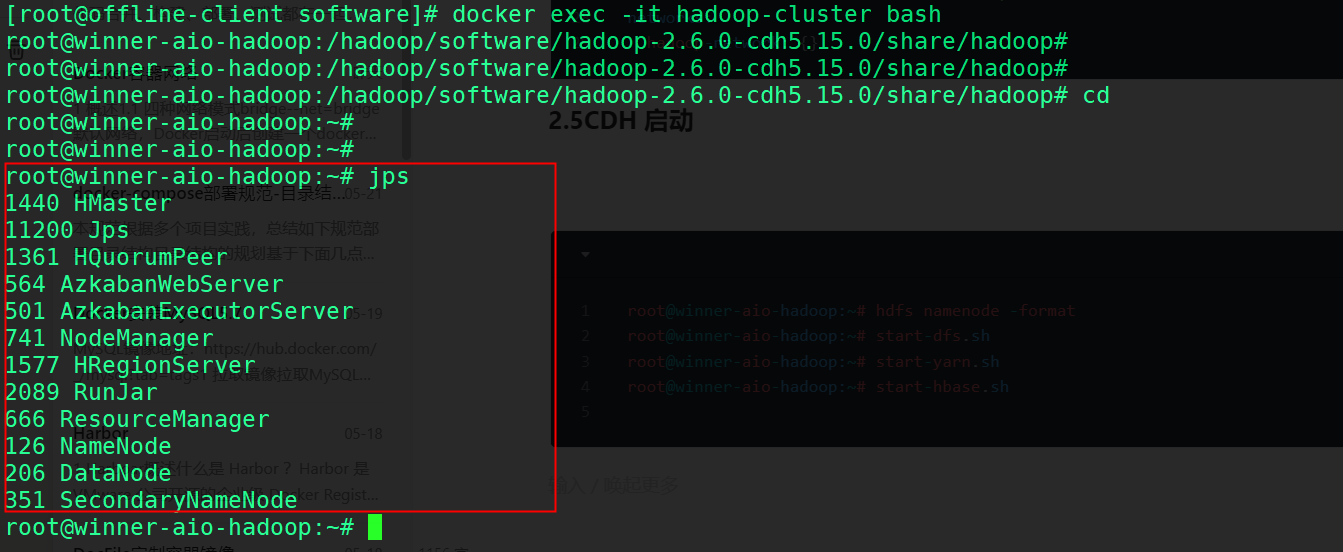
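
The Compose file bind-mounts several host directories. A bind mount hides whatever the image holds at the target path, so the conf directories in particular need to contain the configuration files before the container starts. The sketch below only creates the host directories in advance; the function name `prepare_host_dirs` and its base-directory parameter are illustrative assumptions.

```shell
#!/bin/bash
# prepare_host_dirs [BASE_DIR]
# Creates the host-side directories used by the volume mappings above.
prepare_host_dirs() {
    local base="${1:-/data/software}"
    local d
    for d in hadoop/data hadoop/logs hadoop/conf hbase/conf hbase/logs hive/conf; do
        mkdir -p "$base/$d" || return 1
    done
}

# Example (not executed here):
#   prepare_host_dirs /data/software
```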

2.6 Starting CDH

docker-compose -f dockerfile-hadoop-cluster up -d

Use jps to check the service processes

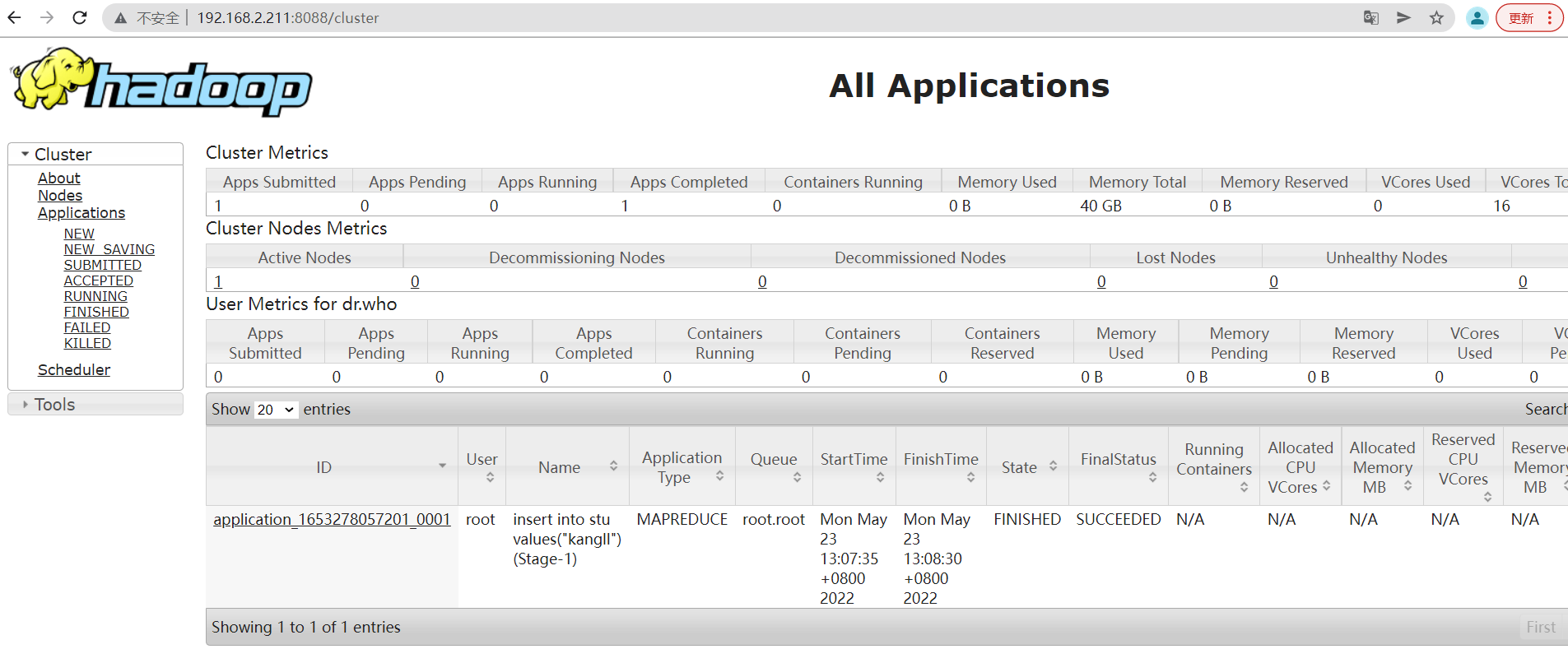

Access the Hadoop web UI (NameNode, port 50070)

Access the YARN web UI (ResourceManager, port 8088)


Copyright belongs to the original author, 开着拖拉机回家. In case of infringement, please contact us for removal.