1 canal basics

1.1 What is canal

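canal is an open-source project from Alibaba, written in Java. It parses a database's incremental log and lets clients subscribe to and consume the incremental data changes, which makes it a handy database synchronization tool. Currently it only supports MySQL.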

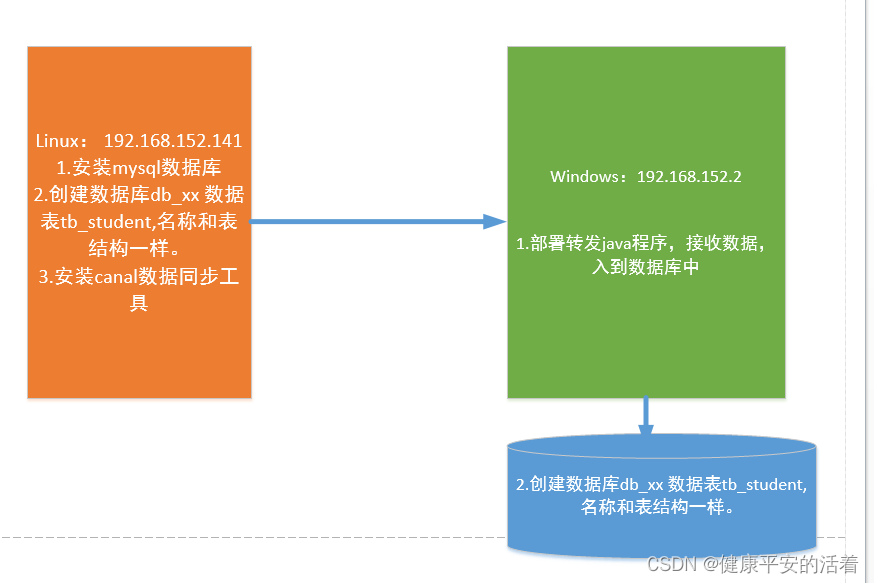

2 Setting up canal

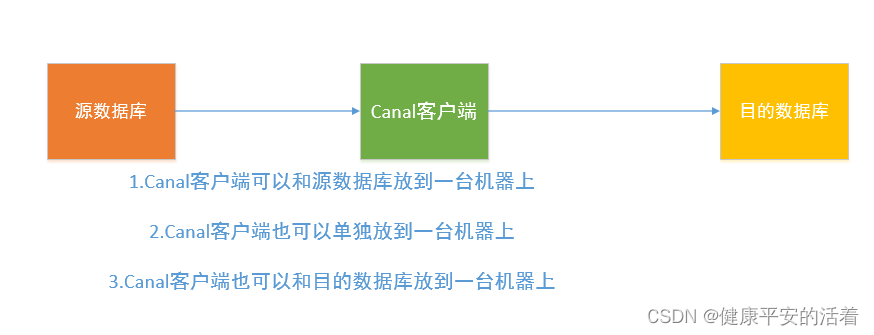

2.1 Architecture and flow

2.2 Configure MySQL on the server

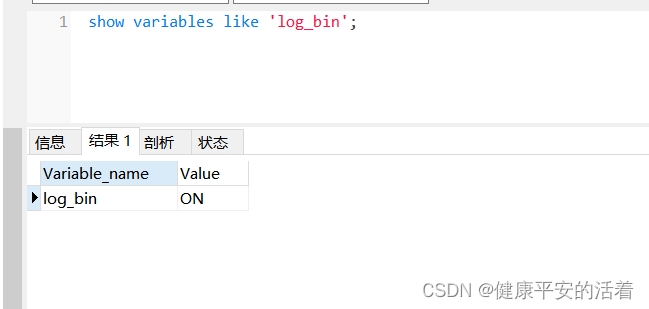

canal works by reading MySQL's binlog, so binary logging must be enabled on MySQL.

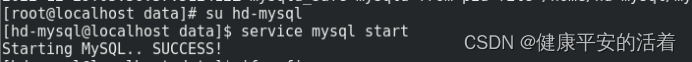

1. Start the MySQL service: `service mysqld start` or `service mysql start`

2. Check whether binary logging is enabled: `OFF` means it is disabled, `ON` means it is enabled.

```sql
show variables like 'log_bin';
```

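3. If `log_bin` shows `OFF`, enable it in the configuration file my.cnf, under the `[mysqld]` section:

```shell
vi /etc/my.cnf
```

```ini
[mysqld]
# master-slave replication settings
server_id = 86             # server ID
log_bin = master-bin       # enable binary logging and set the binlog file-name prefix
expire_logs_days = 7       # purge binlog files older than 7 days; adjust as needed
binlog_format = ROW        # use ROW format
```

On MySQL 8.0, `expire_logs_days` is deprecated in favor of `binlog_expire_logs_seconds`.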

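4. Restart the MySQL database

Switch to the user that owns MySQL, hd-mysql, and start the service. If MySQL is already running, use `service mysql restart` instead so that the new my.cnf settings are picked up.

```shell
[root@localhost local]# su hd-mysql
[hd-mysql@localhost etc]$ service mysql start
Starting MySQL. SUCCESS!
```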

After the restart, check `log_bin` again; if it shows `ON`, binary logging is enabled.
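Besides `log_bin`, canal also depends on `binlog_format` being `ROW` and on `server_id` being set to a non-zero value. The sketch below checks all three at once. `BinlogCheck` is a hypothetical helper, not part of canal or MySQL: `readVariables` runs `SHOW VARIABLES` over a JDBC `Connection` you supply, while `problems` is a pure function, so `main` can demonstrate it on hard-coded sample values without a database.

```java
import java.sql.Connection;
import java.sql.ResultSet;
import java.sql.SQLException;
import java.sql.Statement;
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Locale;
import java.util.Map;

// Hypothetical helper (not part of canal): checks the MySQL settings canal relies on
class BinlogCheck {

    // Reads log_bin, binlog_format and server_id via SHOW VARIABLES on a live connection
    static Map<String, String> readVariables(Connection con) throws SQLException {
        Map<String, String> vars = new HashMap<>();
        try (Statement st = con.createStatement();
             ResultSet rs = st.executeQuery("SHOW VARIABLES WHERE Variable_name IN "
                     + "('log_bin', 'binlog_format', 'server_id')")) {
            while (rs.next()) {
                // Column 1 is Variable_name, column 2 is Value
                vars.put(rs.getString(1).toLowerCase(Locale.ROOT), rs.getString(2));
            }
        }
        return vars;
    }

    // Returns human-readable problems; an empty list means the settings look fine
    static List<String> problems(Map<String, String> vars) {
        List<String> result = new ArrayList<>();
        if (!"ON".equalsIgnoreCase(vars.get("log_bin"))) {
            result.add("log_bin is not ON");
        }
        if (!"ROW".equalsIgnoreCase(vars.get("binlog_format"))) {
            result.add("binlog_format is not ROW");
        }
        String serverId = vars.get("server_id");
        if (serverId == null || serverId.trim().equals("0")) {
            result.add("server_id is missing or 0");
        }
        return result;
    }

    public static void main(String[] args) {
        // Sample values matching the my.cnf settings above; no database needed
        Map<String, String> sample = new HashMap<>();
        sample.put("log_bin", "ON");
        sample.put("binlog_format", "ROW");
        sample.put("server_id", "86");
        System.out.println(problems(sample)); // prints []
    }
}
```

With a real connection the call would be `BinlogCheck.problems(BinlogCheck.readVariables(conn))`, assuming a MySQL JDBC driver is on the classpath.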

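5. Create a user for remote access and grant it privileges

Enter the mysql command line and run:

```sql
create user 'canal'@'%' IDENTIFIED BY 'boc123';
grant all on *.* to 'canal'@'%';
flush privileges;
```

Granting `all` is convenient for a demo; canal itself only needs `SELECT, REPLICATION SLAVE, REPLICATION CLIENT` on `*.*`.

Session output:

```
mysql> create user 'canal'@'%' IDENTIFIED BY 'boc123';
Query OK, 0 rows affected (0.01 sec)

mysql> grant all on *.* to 'canal'@'%';
Query OK, 0 rows affected (0.01 sec)

mysql> flush privileges;
Query OK, 0 rows affected (0.02 sec)

mysql>
```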
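To confirm that the account really has what canal needs, you can run `SHOW GRANTS FOR 'canal'@'%';` and inspect the result. The hypothetical helper below checks one line of that output under simplifying assumptions: it accepts a global (`ON *.*`) grant that is either `ALL`/`ALL PRIVILEGES` or includes `SELECT`, `REPLICATION SLAVE` and `REPLICATION CLIENT`. It is a plain text check; it ignores roles and privileges spread over several grant lines.

```java
import java.util.Arrays;
import java.util.HashSet;
import java.util.Locale;
import java.util.Set;

// Hypothetical helper: does one line of SHOW GRANTS output give canal what it needs?
class CanalGrantCheck {

    // Global privileges canal needs to read the binlog
    static final Set<String> REQUIRED = new HashSet<>(
            Arrays.asList("SELECT", "REPLICATION SLAVE", "REPLICATION CLIENT"));

    static boolean coversCanal(String grantLine) {
        String line = grantLine.trim().toUpperCase(Locale.ROOT);
        int on = line.indexOf(" ON ");
        // Only global grants (ON *.*) count, because replication privileges are global
        if (!line.startsWith("GRANT ") || on < 0 || !line.startsWith("*.*", on + 4)) {
            return false;
        }
        // Privilege list sits between "GRANT " and " ON "
        Set<String> granted = new HashSet<>();
        for (String p : line.substring("GRANT ".length(), on).split(",")) {
            granted.add(p.trim());
        }
        return granted.contains("ALL PRIVILEGES") || granted.contains("ALL")
                || granted.containsAll(REQUIRED);
    }

    public static void main(String[] args) {
        System.out.println(coversCanal("GRANT ALL PRIVILEGES ON *.* TO 'canal'@'%'")); // true
        System.out.println(coversCanal("GRANT SELECT ON *.* TO `canal`@`%`"));         // false
    }
}
```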

2.3 Install and configure canal

1. Download the package

Releases · alibaba/canal · GitHub (https://github.com/alibaba/canal/releases)

2. Upload the package to the server

3. Extract it and edit the configuration

Install canal under **/usr/local/**; the full path is **/usr/local/canal**.

```shell
[root@localhost local]# mkdir -p canal
[root@localhost local]# cd canal/
[root@localhost canal]# ls
[root@localhost canal]# pwd
/usr/local/canal
[root@localhost canal]# tar -zxvf /root/export/servers/canal.deployer-1.1.6.tar.gz -C .
bin/startup.bat
bin/restart.sh
bin/startup.sh
bin/stop.sh
conf/metrics/
conf/example/
```

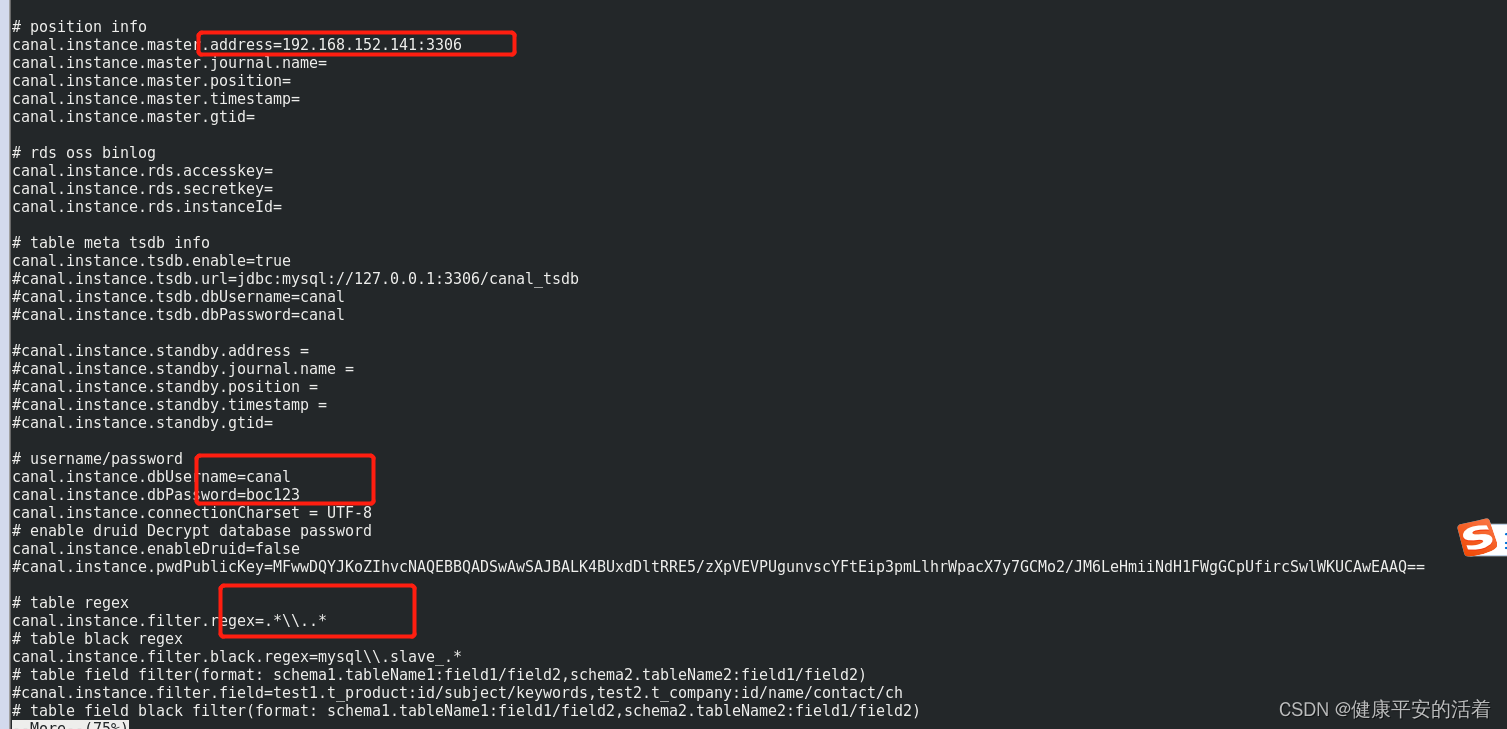

4. Edit the configuration file

```shell
vi conf/example/instance.properties
```

```shell
[root@localhost example]# pwd
/usr/local/canal/conf/example
[root@localhost example]# vi instance.properties
```

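Change the settings as follows (see the screenshot below). The table filter, `canal.instance.filter.regex`, selects which MySQL tables canal parses and is a Perl regular expression.

Separate multiple regexes with a comma (`,`); a backslash must be written doubled (`\\`).

Common examples:

- All tables: `.*` or `.*\\..*`

- All tables in the `canal` schema: `canal\\..*`

- Tables in `canal` whose names start with `canal`: `canal\\.canal.*`

- A single table in the `canal` schema (here `canal` is the database name and `test1` the table name): `canal.test1`

- Several rules combined: `canal\\..*,mysql.test1,mysql.test2` (comma-separated)

Note: this filter only applies to ROW-format data (with mixed/statement formats canal does not parse the SQL, so it cannot reliably extract the table name to filter on).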
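A quick way to sanity-check a filter before putting it into `instance.properties` is to try it against a few `schema.table` names. The sketch below only approximates canal's behavior with `java.util.regex`; it assumes each comma-separated rule must match the whole `schema.table` name, case-insensitively, and that the doubled backslash is just properties-file escaping for a single regex backslash. canal's own filter implementation may differ in details, and `TableFilterDemo` is a hypothetical name.

```java
import java.util.regex.Pattern;

// Hypothetical approximation of a canal table filter using java.util.regex
class TableFilterDemo {

    // filter: the regex as the regex engine sees it, i.e. with single backslashes
    static boolean matches(String filter, String schema, String table) {
        String name = schema + "." + table;
        for (String rule : filter.split(",")) {
            // Assumption: a rule must match the whole schema.table name, ignoring case
            if (Pattern.compile(rule.trim(), Pattern.CASE_INSENSITIVE).matcher(name).matches()) {
                return true;
            }
        }
        return false;
    }

    public static void main(String[] args) {
        // In Java source "\\." is the regex \. (a literal dot), same as canal\\..* in instance.properties
        String filter = "canal\\..*,mysql.test1";
        System.out.println(matches(filter, "canal", "orders"));      // true
        System.out.println(matches(filter, "mysql", "test1"));       // true
        System.out.println(matches(filter, "mysql", "test2"));       // false
        System.out.println(matches(filter, "xx_db", "tb_student"));  // false
    }
}
```

An unescaped dot, as in `mysql.test1`, matches any character, so escape it when an exact schema/table boundary matters. The Java client in section 2.5 also passes a filter to `connector.subscribe(...)`; as far as I know a client-side filter takes precedence over the server-side setting, so it is worth checking both.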

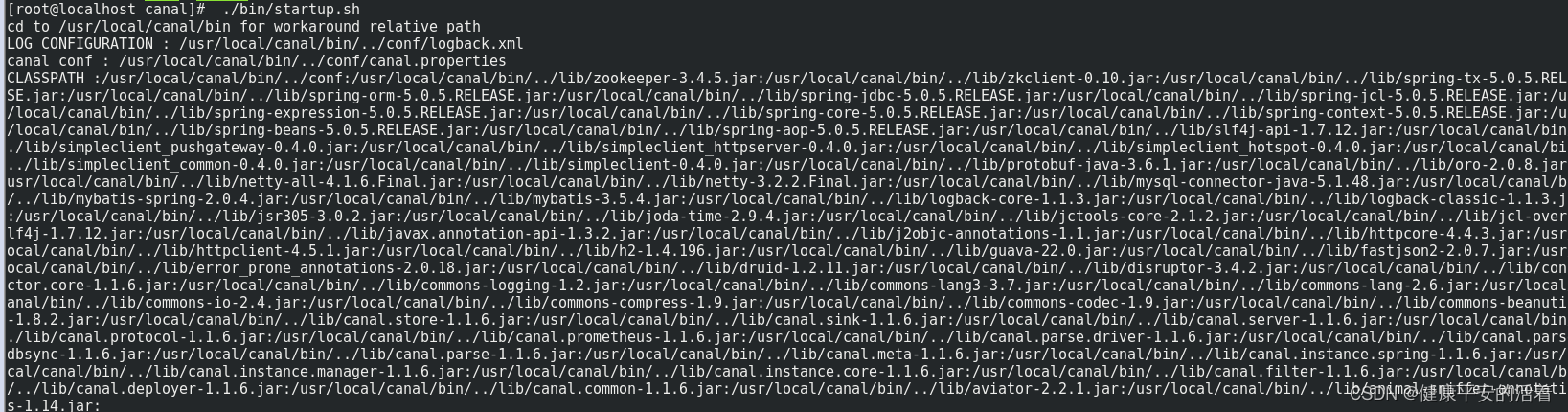

5. Start canal from the bin directory

1. Go to the installation directory: /usr/local/canal

2. Start command: `sh bin/startup.sh`

```shell
[root@localhost canal]# ./bin/startup.sh
cd to /usr/local/canal/bin for workaround relative path
LOG CONFIGURATION : /usr/local/canal/bin/../conf/logback.xml
canal conf : /usr/local/canal/bin/../conf/canal.properties
```

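Screenshot below. If startup fails, the details are usually in logs/canal/canal.log and logs/example/example.log.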

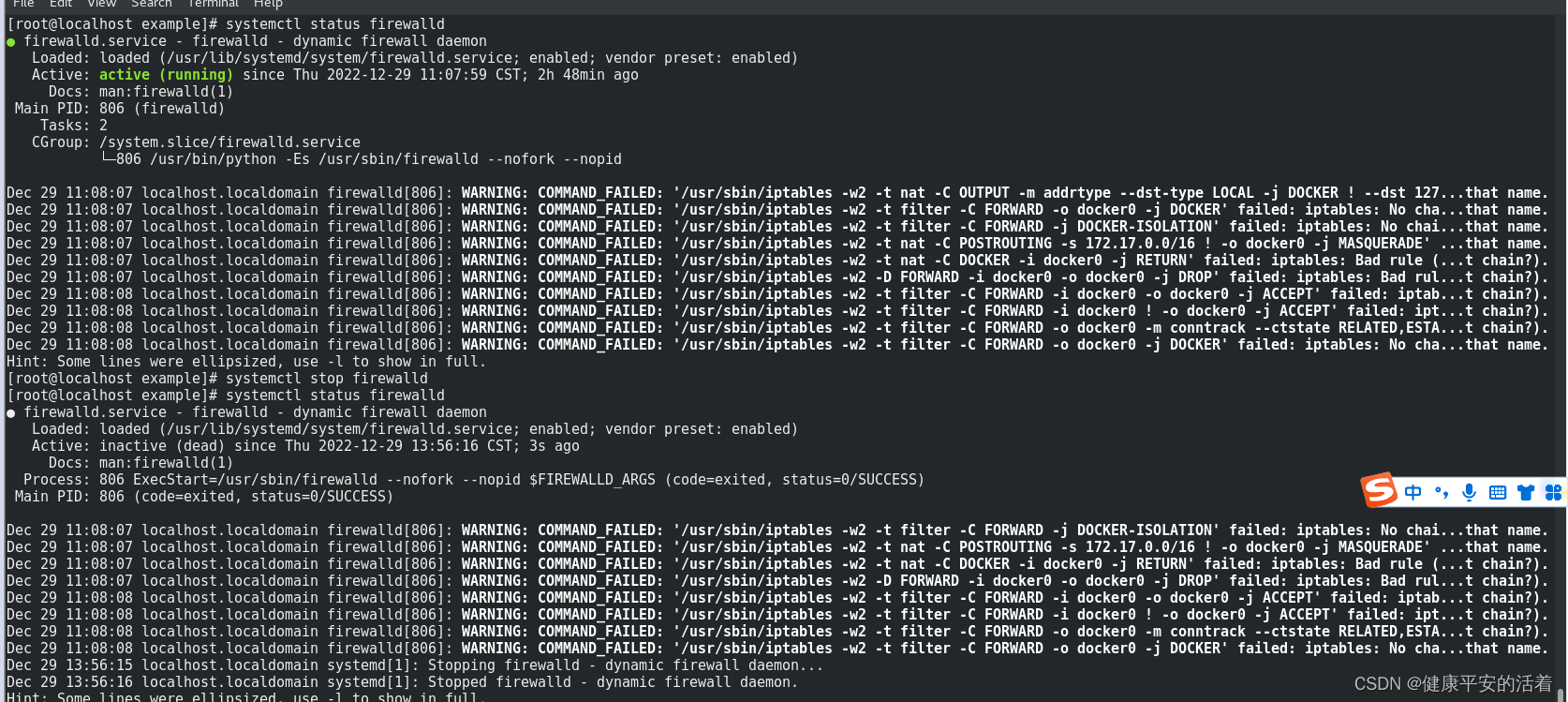

2.4 Turn off the firewall

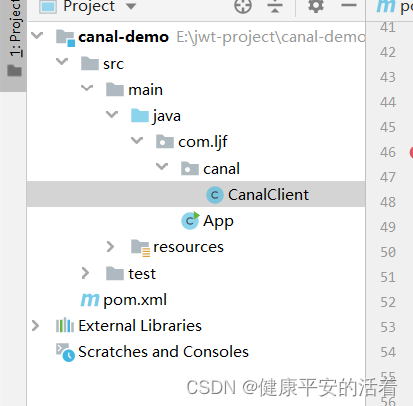
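With the firewall off, it is worth confirming from the machine that will run the Java client that the canal server port is reachable before debugging anything else. A minimal sketch with a hypothetical `PortCheck` class: 11111 is canal's default `canal.port`, and the host defaults to the canal server address used in the client code of section 2.5.

```java
import java.io.IOException;
import java.net.InetSocketAddress;
import java.net.Socket;

// Hypothetical helper: can we open a TCP connection to host:port within the timeout?
class PortCheck {

    static boolean isReachable(String host, int port, int timeoutMillis) {
        try (Socket socket = new Socket()) {
            socket.connect(new InetSocketAddress(host, port), timeoutMillis);
            return true;
        } catch (IOException e) {
            // Connection refused, timed out, or host could not be resolved
            return false;
        }
    }

    public static void main(String[] args) {
        // Pass another host as the first argument if your canal server lives elsewhere
        String host = args.length > 0 ? args[0] : "192.168.152.141";
        System.out.println(host + ":11111 reachable = " + isReachable(host, 11111, 3000));
    }
}
```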

2.5 Write the Java client

1. Project structure

2. pom file

```xml
<?xml version="1.0" encoding="UTF-8"?>
<project xmlns="http://maven.apache.org/POM/4.0.0" xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"
         xsi:schemaLocation="http://maven.apache.org/POM/4.0.0 http://maven.apache.org/xsd/maven-4.0.0.xsd">
    <modelVersion>4.0.0</modelVersion>

    <parent>
        <groupId>org.springframework.boot</groupId>
        <artifactId>spring-boot-starter-parent</artifactId>
        <version>2.2.1.RELEASE</version>
        <relativePath/> <!-- lookup parent from repository -->
    </parent>

    <groupId>com.ljf</groupId>
    <artifactId>canal-demo</artifactId>
    <version>1.0-SNAPSHOT</version>

    <name>canal-demo</name>
    <!-- FIXME change it to the project's website -->
    <url>http://www.example.com</url>

    <properties>
        <project.build.sourceEncoding>UTF-8</project.build.sourceEncoding>
        <maven.compiler.source>1.8</maven.compiler.source>
        <maven.compiler.target>1.8</maven.compiler.target>
    </properties>

    <dependencies>
        <dependency>
            <groupId>org.springframework.boot</groupId>
            <artifactId>spring-boot-starter-web</artifactId>
        </dependency>
        <!--mysql-->
        <dependency>
            <groupId>mysql</groupId>
            <artifactId>mysql-connector-java</artifactId>
        </dependency>
        <dependency>
            <groupId>commons-dbutils</groupId>
            <artifactId>commons-dbutils</artifactId>
            <version>1.7</version>
        </dependency>
        <dependency>
            <groupId>org.springframework.boot</groupId>
            <artifactId>spring-boot-starter-jdbc</artifactId>
        </dependency>
        <dependency>
            <groupId>com.alibaba.otter</groupId>
            <artifactId>canal.client</artifactId>
            <version>1.1.0</version>
        </dependency>
    </dependencies>

    <build>
    </build>
</project>
```

3. Configuration file (`application.properties`)

```properties
# Server port
server.port=10010
# Application name
spring.application.name=canal-client-t14
# Active profile: dev, test, prod
spring.profiles.active=dev
# MySQL connection (the target database the client writes to)
spring.datasource.driver-class-name=com.mysql.cj.jdbc.Driver
spring.datasource.url=jdbc:mysql://localhost:3306/xx_db?serverTimezone=GMT%2B8
spring.datasource.username=root
spring.datasource.password=cloudiip
```

4. Handler class

```java
package com.ljf.canal;

import com.alibaba.otter.canal.client.CanalConnector;
import com.alibaba.otter.canal.client.CanalConnectors;
import com.alibaba.otter.canal.protocol.CanalEntry.*;
import com.alibaba.otter.canal.protocol.Message;
import com.google.protobuf.InvalidProtocolBufferException;
import org.apache.commons.dbutils.DbUtils;
import org.apache.commons.dbutils.QueryRunner;
import org.springframework.stereotype.Component;

import javax.annotation.Resource;
import javax.sql.DataSource;
import java.net.InetSocketAddress;
import java.sql.Connection;
import java.sql.SQLException;
import java.util.List;
import java.util.Queue;
import java.util.concurrent.ConcurrentLinkedQueue;

@Component
public class CanalClient {

    // SQL statements generated from the current batch, waiting to be executed
    private final Queue<String> SQL_QUEUE = new ConcurrentLinkedQueue<>();

    // Target database, configured through spring.datasource.*
    @Resource
    private DataSource dataSource;

    /**
     * Pull row changes from the canal server and replay them on the target database.
     */
    public void run() {
        // canal server address and port, destination (instance) name, and credentials sent to the canal server
        CanalConnector connector = CanalConnectors.newSingleConnector(
                new InetSocketAddress("192.168.152.141", 11111), "example", "canal", "boc123");
        int batchSize = 1000;
        try {
            connector.connect();
            // Subscribe to every table in every schema
            connector.subscribe(".*\\..*");
            // Go back to the last acknowledged position so unacknowledged batches are redelivered
            connector.rollback();
            while (true) {
                // Fetch up to batchSize entries (as many as are available) without acknowledging them
                Message message = connector.getWithoutAck(batchSize);
                long batchId = message.getId();
                int size = message.getEntries().size();
                if (batchId == -1 || size == 0) {
                    Thread.sleep(1000);
                } else {
                    dataHandle(message.getEntries());
                    // Execute the generated SQL before acknowledging, so that if the client dies
                    // in between, the unacknowledged batch is redelivered instead of lost
                    executeQueueSql();
                }
                connector.ack(batchId);
            }
        } catch (InterruptedException e) {
            // Restore the interrupt flag and stop consuming
            Thread.currentThread().interrupt();
        } catch (InvalidProtocolBufferException e) {
            // The batch stays unacknowledged and is redelivered after the next connect + rollback()
            e.printStackTrace();
        } finally {
            connector.disconnect();
        }
    }

    /**
     * Execute the SQL statements queued from the current batch.
     */
    public void executeQueueSql() {
        String sql;
        while ((sql = SQL_QUEUE.poll()) != null) {
            System.out.println("[sql]----> " + sql);
            this.execute(sql);
        }
    }

    /**
     * Turn each row-change entry into SQL statements.
     *
     * @param entrys binlog entries of one batch
     */
    private void dataHandle(List<Entry> entrys) throws InvalidProtocolBufferException {
        for (Entry entry : entrys) {
            // Only ROWDATA entries carry row changes (skips transaction begin/end markers)
            if (EntryType.ROWDATA == entry.getEntryType()) {
                RowChange rowChange = RowChange.parseFrom(entry.getStoreValue());
                String table = entry.getHeader().getTableName();
                EventType eventType = rowChange.getEventType();
                if (eventType == EventType.DELETE) {
                    saveDeleteSql(table, rowChange);
                } else if (eventType == EventType.UPDATE) {
                    saveUpdateSql(table, rowChange);
                } else if (eventType == EventType.INSERT) {
                    saveInsertSql(table, rowChange);
                }
            }
        }
    }

    /**
     * Queue an UPDATE per row: new values from the after image, WHERE on the before-image key.
     */
    private void saveUpdateSql(String table, RowChange rowChange) {
        for (RowData rowData : rowChange.getRowDatasList()) {
            String where = keyCondition(rowData.getBeforeColumnsList());
            if (where.isEmpty()) {
                System.out.println("[skip] table without primary key: " + table);
                continue;
            }
            StringBuilder set = new StringBuilder();
            for (Column column : rowData.getAfterColumnsList()) {
                if (set.length() > 0) {
                    set.append(", ");
                }
                set.append('`').append(column.getName()).append("` = ").append(toLiteral(column));
            }
            SQL_QUEUE.add("update `" + table + "` set " + set + " where " + where);
        }
    }

    /**
     * Queue a DELETE per row, matching on the before-image key.
     */
    private void saveDeleteSql(String table, RowChange rowChange) {
        for (RowData rowData : rowChange.getRowDatasList()) {
            String where = keyCondition(rowData.getBeforeColumnsList());
            if (where.isEmpty()) {
                System.out.println("[skip] table without primary key: " + table);
                continue;
            }
            SQL_QUEUE.add("delete from `" + table + "` where " + where);
        }
    }

    /**
     * Queue an INSERT per row from the after image.
     */
    private void saveInsertSql(String table, RowChange rowChange) {
        for (RowData rowData : rowChange.getRowDatasList()) {
            StringBuilder names = new StringBuilder();
            StringBuilder values = new StringBuilder();
            for (Column column : rowData.getAfterColumnsList()) {
                if (names.length() > 0) {
                    names.append(", ");
                    values.append(", ");
                }
                names.append('`').append(column.getName()).append('`');
                values.append(toLiteral(column));
            }
            SQL_QUEUE.add("insert into `" + table + "` (" + names + ") values (" + values + ")");
        }
    }

    /**
     * Build "`k1` = v1 and `k2` = v2" from all primary-key columns (composite keys included).
     */
    private static String keyCondition(List<Column> columns) {
        StringBuilder where = new StringBuilder();
        for (Column column : columns) {
            if (column.getIsKey()) {
                if (where.length() > 0) {
                    where.append(" and ");
                }
                where.append('`').append(column.getName()).append("` = ").append(toLiteral(column));
            }
        }
        return where.toString();
    }

    /**
     * Render a column value as a SQL literal: NULL, or a quoted string with ' and \ escaped.
     * Assumes the default sql_mode (NO_BACKSLASH_ESCAPES off).
     */
    private static String toLiteral(Column column) {
        if (column.getIsNull()) {
            return "NULL";
        }
        String value = column.getValue().replace("\\", "\\\\").replace("'", "''");
        return "'" + value + "'";
    }

    /**
     * Execute one statement on the target database.
     *
     * @param sql statement to execute
     */
    public void execute(String sql) {
        if (sql == null) {
            return;
        }
        Connection con = null;
        try {
            con = dataSource.getConnection();
            QueryRunner qr = new QueryRunner();
            int row = qr.execute(con, sql);
            System.out.println("update: " + row);
        } catch (SQLException e) {
            e.printStackTrace();
        } finally {
            DbUtils.closeQuietly(con);
        }
    }
}
```
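Building SQL by string concatenation, even with quoting and escaping, stays fragile and is easy to get wrong in ways that open the door to SQL injection. A sketch of the parameterized alternative: build the statement with `?` placeholders plus an ordered parameter list. To stay self-contained and runnable without canal on the classpath, it uses a hypothetical `Col` class as a stand-in for `CanalEntry.Column` (name, value, isNull, isKey); `ParamSql` and `Stmt` are hypothetical names too.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;
import java.util.StringJoiner;

// Hypothetical sketch: build parameterized INSERT/DELETE statements from row images
class ParamSql {

    // Stand-in for CanalEntry.Column (hypothetical; only the fields used here)
    static final class Col {
        final String name; final String value; final boolean isNull; final boolean isKey;
        Col(String name, String value, boolean isNull, boolean isKey) {
            this.name = name; this.value = value; this.isNull = isNull; this.isKey = isKey;
        }
    }

    // SQL text plus the values to bind to its "?" placeholders, in order
    static final class Stmt {
        final String sql; final List<Object> params;
        Stmt(String sql, List<Object> params) { this.sql = sql; this.params = params; }
    }

    static Stmt insert(String table, List<Col> after) {
        StringJoiner names = new StringJoiner(", ");
        StringJoiner marks = new StringJoiner(", ");
        List<Object> params = new ArrayList<>();
        for (Col c : after) {
            names.add("`" + c.name + "`");
            marks.add("?");
            params.add(c.isNull ? null : c.value); // a null parameter is bound as SQL NULL
        }
        return new Stmt("insert into `" + table + "` (" + names + ") values (" + marks + ")", params);
    }

    static Stmt delete(String table, List<Col> before) {
        StringJoiner where = new StringJoiner(" and ");
        List<Object> params = new ArrayList<>();
        for (Col c : before) {
            if (c.isKey) { // every key column, so composite keys work too
                where.add("`" + c.name + "` = ?");
                params.add(c.value);
            }
        }
        if (where.length() == 0) {
            throw new IllegalArgumentException("no key column for table " + table);
        }
        return new Stmt("delete from `" + table + "` where " + where, params);
    }

    public static void main(String[] args) {
        List<Col> row = Arrays.asList(
                new Col("id", "1", false, true),
                new Col("name", "O'Brien", false, false));
        Stmt ins = insert("tb_student", row);
        System.out.println(ins.sql + " " + ins.params); // insert into `tb_student` (`id`, `name`) values (?, ?) [1, O'Brien]
        Stmt del = delete("tb_student", row);
        System.out.println(del.sql + " " + del.params); // delete from `tb_student` where `id` = ? [1]
    }
}
```

With DbUtils, such a statement could be run as `new QueryRunner().update(con, stmt.sql, stmt.params.toArray())`. An UPDATE follows the same pattern: placeholders in the SET list first, then the key columns in the WHERE clause.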

5. Startup class

```java
package com.ljf;

import com.ljf.canal.CanalClient;
import org.springframework.boot.CommandLineRunner;
import org.springframework.boot.SpringApplication;
import org.springframework.boot.autoconfigure.SpringBootApplication;

import javax.annotation.Resource;

/**
 * Hello world!
 *
 */
@SpringBootApplication
public class App implements CommandLineRunner
{
    @Resource
    private CanalClient canalClient;

    public static void main( String[] args )
    {
        System.out.println( "Hello World!" );
        SpringApplication.run(App.class, args);
    }

    @Override
    public void run(String... strings) throws Exception {
        // On startup, start the canal client listener; this call loops and does not return
        canalClient.run();
    }
}
```
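`canalClient.run()` loops forever, so calling it directly from `CommandLineRunner.run` keeps `SpringApplication.run` from ever returning. One common alternative, sketched here in plain Java with a hypothetical `PollingWorker` class, is to run the loop on its own thread and stop it through a flag. In the real application the loop body would be one `getWithoutAck`/handle/`ack` round and `stop()` could be called from a Spring shutdown hook such as a `@PreDestroy` method; both are assumptions, not the original author's code.

```java
import java.util.concurrent.atomic.AtomicBoolean;
import java.util.concurrent.atomic.AtomicInteger;

// Hypothetical sketch: run a polling loop on a dedicated thread with a clean stop
class PollingWorker {

    private final AtomicBoolean running = new AtomicBoolean(false);
    private final AtomicInteger rounds = new AtomicInteger();
    private Thread thread;

    // Start the loop in the background; returns immediately
    synchronized void start() {
        if (running.compareAndSet(false, true)) {
            thread = new Thread(this::loop, "canal-client");
            thread.start();
        }
    }

    private void loop() {
        while (running.get()) {
            rounds.incrementAndGet(); // placeholder for one fetch / handle / ack round
            try {
                Thread.sleep(10);      // stands in for the back-off when a batch is empty
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
                return;
            }
        }
    }

    // Ask the loop to finish and wait for the thread to exit
    synchronized void stop() throws InterruptedException {
        running.set(false);
        if (thread != null) {
            thread.interrupt(); // wake it up if it is sleeping
            thread.join();
        }
    }

    int rounds() {
        return rounds.get();
    }

    public static void main(String[] args) throws InterruptedException {
        PollingWorker worker = new PollingWorker();
        worker.start();
        Thread.sleep(100);
        worker.stop();
        System.out.println("rounds completed: " + worker.rounds());
    }
}
```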

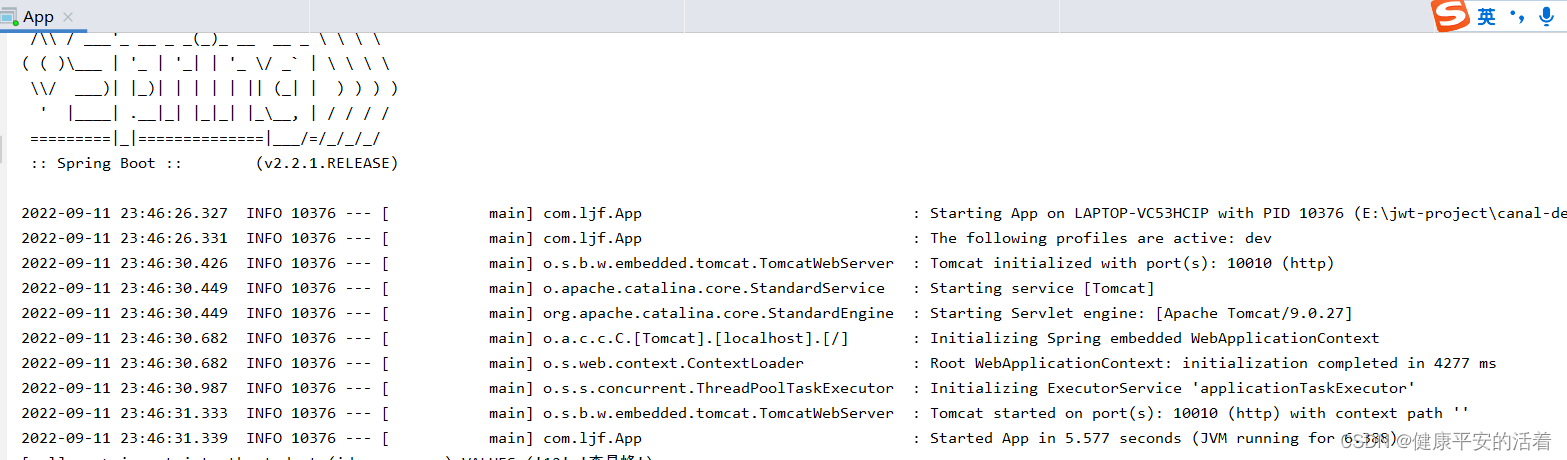

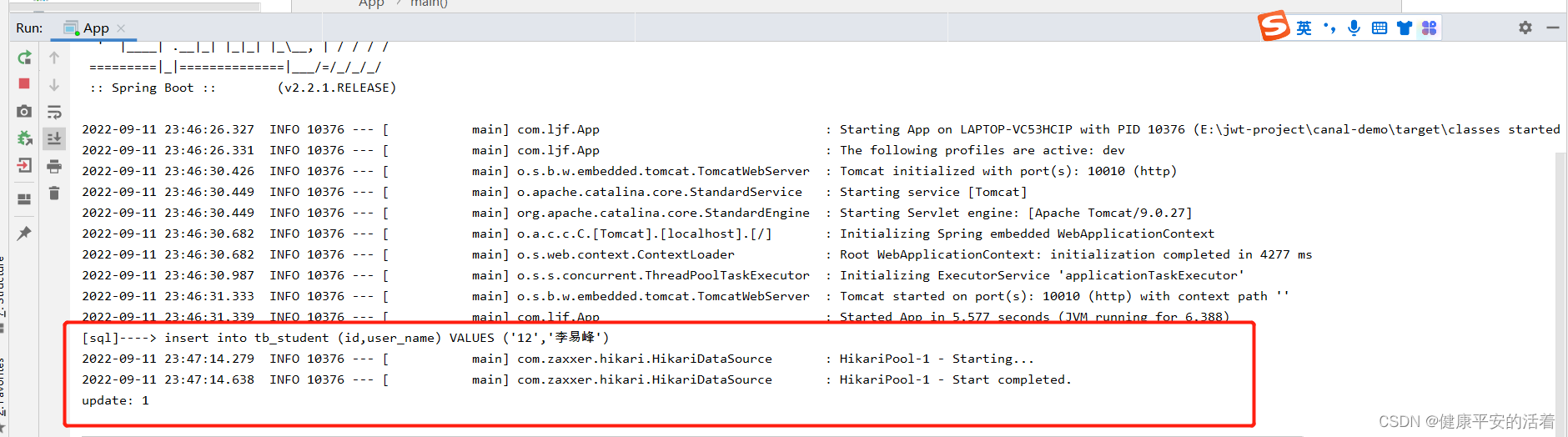

6. Start the service

2.6 Test and verify

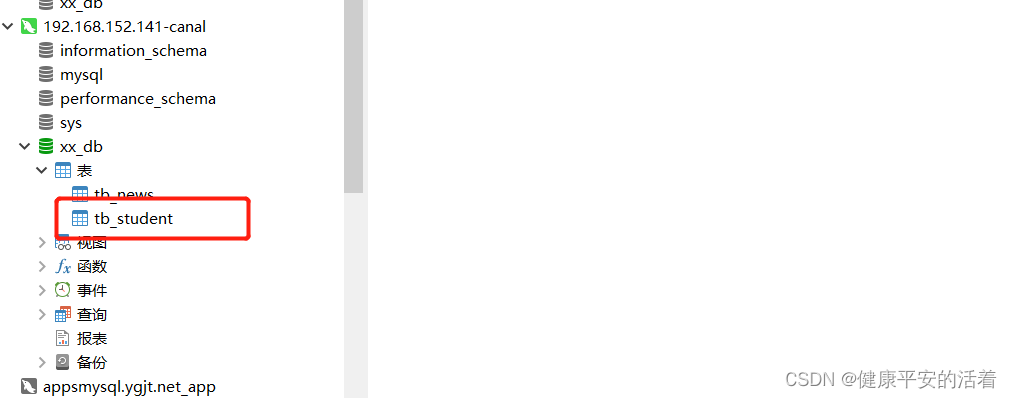

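1. On the target database, create a database and a table with the same names as on the source.

For example, here the source database is xx_db and the table is tb_student.

Target side: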

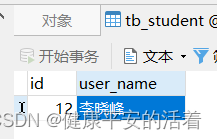

2. Insert a row into the source table

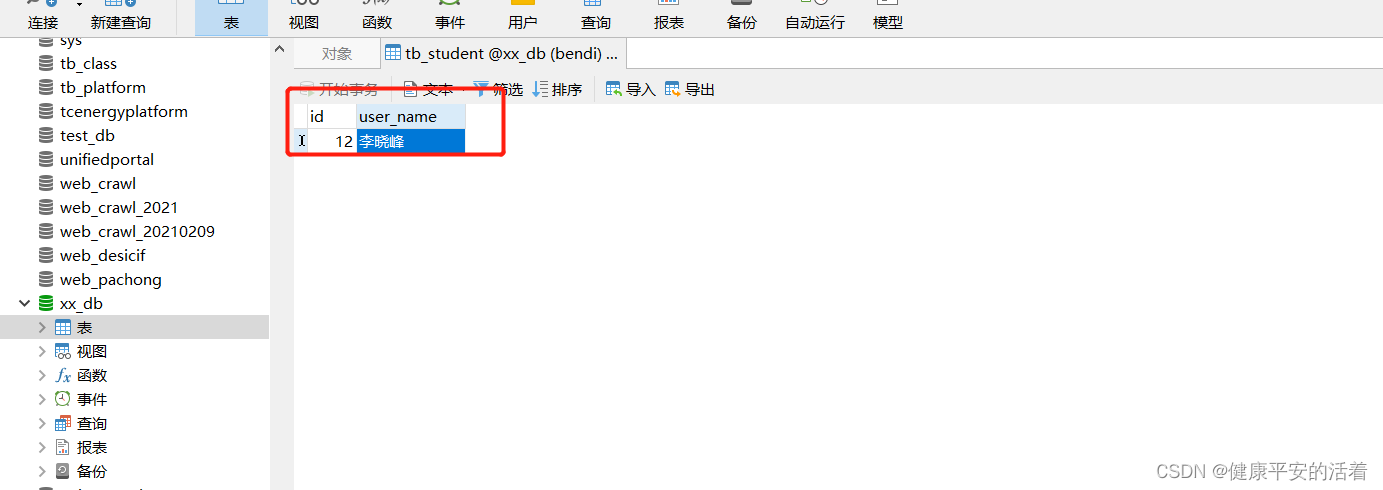

3. Check the target database

4. Check the console output

Summary: the newly inserted data has been synchronized to the target.

Copyright belongs to the original author, 健康平安的活着. If this infringes any rights, please contact us and it will be removed.