Connecting to Hive from Java via the JDBC Interface

1. Version information

Hive version: 3.1.2

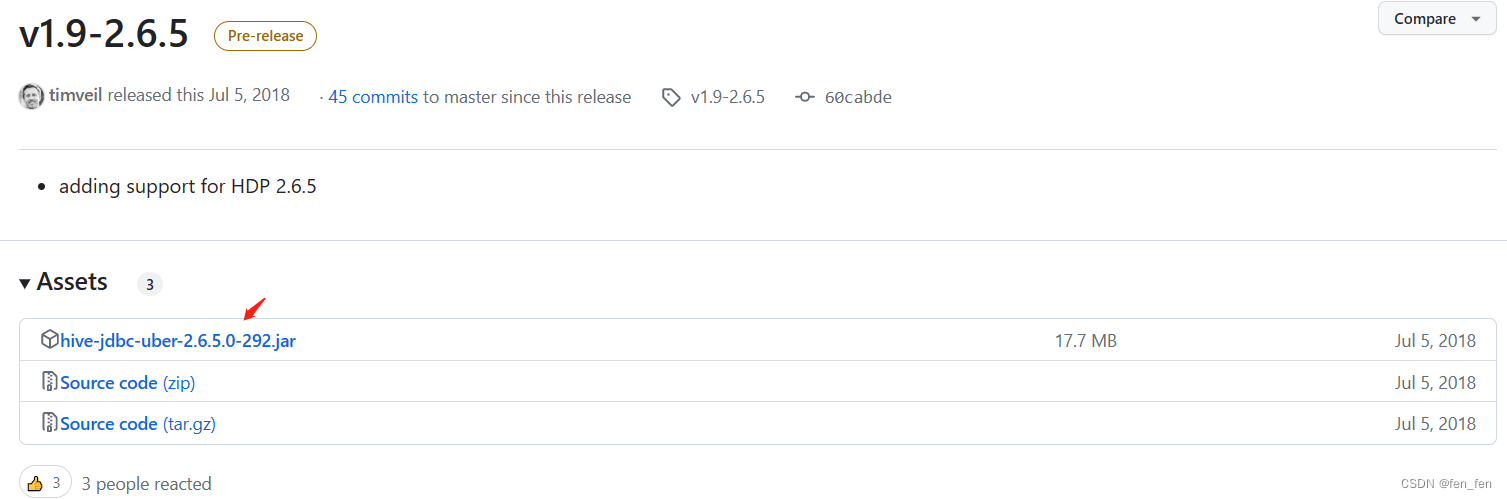

JDBC driver: hive-jdbc-uber-2.6.5.0-292.jar

Driver download: https://github.com/timveil/hive-jdbc-uber-jar/releases/tag/v1.9-2.6.5

2. pom.xml dependency

    <dependency>
        <groupId>org.apache.hive</groupId>
        <artifactId>hive-jdbc-uber</artifactId>
        <version>2.6.5.0-292</version>
        <scope>system</scope>
        <systemPath>${project.basedir}/src/main/resources/lib/hive-jdbc-uber-2.6.5.0-292.jar</systemPath>
    </dependency>
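A `system`-scoped jar is resolved from a fixed file path, so a misplaced file tends to surface only at runtime as a `ClassNotFoundException`. As a rough sanity check, the sketch below tests whether a driver class is loadable from the classpath before any connection is attempted. `DriverCheck` and `isDriverAvailable` are hypothetical names introduced for illustration; `org.apache.hive.jdbc.HiveDriver` is the standard Hive driver class name that appears (commented out) in the Java code in section 4.

```java
// Hypothetical helper (not from the original article): reports whether a
// JDBC driver class can be loaded from the current classpath.
final class DriverCheck {

    // Returns true if the named class loads, false if it is missing
    // or fails to link/initialize.
    static boolean isDriverAvailable(String driverClassName) {
        try {
            Class.forName(driverClassName);
            return true;
        } catch (ClassNotFoundException | LinkageError e) {
            return false;
        }
    }

    public static void main(String[] args) {
        String driver = "org.apache.hive.jdbc.HiveDriver";
        System.out.println(driver + " available: " + isDriverAvailable(driver));
    }
}
```

If the check prints `false`, the `systemPath` entry and the jar location are the first things to verify.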

3. The corresponding database table

    CREATE TABLE regre_one.hive2_varchar(
        ID int,
        aes varchar(1000),
        sm4 varchar(1000),
        sm4_a varchar(1000),
        email varchar(1000),
        phone varchar(1000),
        ssn varchar(1000),
        military varchar(1000),
        passport varchar(1000),
        intelssn varchar(1000),
        intelpassport varchar(1000),
        intelmilitary varchar(1000),
        intelganghui varchar(1000),
        inteltaitonei varchar(1000),
        credit_card_short varchar(1000),
        credit_card_long varchar(1000),
        job varchar(1000));
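With seventeen columns, a hand-written INSERT statement can easily drift out of step with the table definition. One possible approach, sketched below, is to keep the column list in a single place and generate a parameterized statement from it. `InsertSqlBuilder`, `COLUMNS`, and `buildInsert` are illustrative names that do not appear in the original article; the table and column names are copied from the DDL above.

```java
import java.util.Arrays;
import java.util.Collections;
import java.util.List;

// Hypothetical helper (not from the original article): builds a
// parameterized INSERT statement from a list of column names.
final class InsertSqlBuilder {

    // Column names copied from the CREATE TABLE statement above.
    static final List<String> COLUMNS = Arrays.asList(
            "ID", "aes", "sm4", "sm4_a", "email", "phone", "ssn", "military",
            "passport", "intelssn", "intelpassport", "intelmilitary",
            "intelganghui", "inteltaitonei", "credit_card_short",
            "credit_card_long", "job");

    // Produces "INSERT INTO <table> (c1, c2, ...) VALUES (?, ?, ...)".
    static String buildInsert(String table, List<String> columns) {
        if (columns.isEmpty()) {
            throw new IllegalArgumentException("at least one column is required");
        }
        String names = String.join(", ", columns);
        String placeholders = String.join(", ", Collections.nCopies(columns.size(), "?"));
        return "INSERT INTO " + table + " (" + names + ") VALUES (" + placeholders + ")";
    }

    public static void main(String[] args) {
        System.out.println(buildInsert("regre_one.hive2_varchar", COLUMNS));
    }
}
```

The generated string could then be passed to `Connection.prepareStatement`, with the values bound through `setInt` and `setString`, which avoids quoting each value by hand.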

4. Java code for connecting to Hive via the JDBC interface

    package utils;

    import java.sql.Connection;
    import java.sql.DriverManager;
    import java.sql.ResultSet;
    import java.sql.Statement;

    /**
     * Description : Connects to Hive over JDBC, inserts one row, and prints the table contents.
     *
     * @author : HMF
     * Date : Created in 15:28 2023/3/21
     * @version :
     */

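    public class dbTest_hive {

        public Connection conn;

        public dbTest_hive() {
            // Standard Hive JDBC driver (its URLs take the form jdbc:hive2://host:port/database):
            //String driver="org.apache.hive.jdbc.HiveDriver";
            // Driver class and URL prefix actually used in this example:
            String driver = "com.ciphergateway.aoe.plugin.engine.AOEDriver";
            String url = "jdbc:hive2:aoe://10.1.1.242:10000/default";
            String user = "xxx";
            String password = "xxx";
            try {
                // Load the driver class, then open the connection.
                Class.forName(driver);
                conn = DriverManager.getConnection(url, user, password);
            } catch (Exception e) {
                // Nothing else can run without a connection, so report the error and exit.
                e.printStackTrace();
                System.exit(1);
            }
        }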

        public static void main(String[] args) {
            dbTest_hive db = new dbTest_hive();
            // Note: these statements target default.hive_char, while the DDL in section 3
            // creates regre_one.hive2_varchar; point them at the table you actually created.
            String insertSql = "INSERT INTO default.hive_char (id, aes, sm4, sm4_a, email, phone, ssn, military, "
                    + "passport, intelssn, intelpassport, intelmilitary, intelganghui, inteltaitonei, "
                    + "credit_card_short, credit_card_long, job) VALUES ("
                    + "1, '小芬', '北京xx网络技术有限公司', '北京市', '[email protected]', '15652996964', "
                    + "'210302199608124861', '武水电字第3632734号', 'BWP018930705', '210302199608124861', "
                    + "'BWP018930705', '武水电字第3632734号', 'H21157232', '9839487602', '117', "
                    + "'6227612145830440', '高级测试开发工程师')";
            String selectSql = "select id, aes, sm4, sm4_a, email, phone, ssn, military, passport, intelssn, "
                    + "intelpassport, intelmilitary, intelganghui, inteltaitonei, credit_card_short, "
                    + "credit_card_long, job from default.hive_char";
            //String deleteSql="truncate table regre_one.hivetest_varchar";
            //db.DBExecute(deleteSql);
            db.DBExecute(insertSql);
            db.DBQuery(selectSql);
            // Release the connection before exiting.
            try {
                db.conn.close();
            } catch (Exception e) {
                e.printStackTrace();
            }
            System.exit(0);
        }

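        // Executes a statement that returns no rows (INSERT, TRUNCATE, ...).
        void DBExecute(String sqlStr) {
            // try-with-resources closes the statement even if execute() throws.
            try (Statement stmt = conn.createStatement()) {
                stmt.execute(sqlStr);
                System.out.println("+++++sqlStr:" + sqlStr);
            } catch (Exception e) {
                e.printStackTrace();
            }
        }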

        // Runs a query and prints each row with a tab before every column value.
        void DBQuery(String sqlStr) {
            // Both the statement and the result set are closed automatically.
            try (Statement statement = conn.createStatement();
                 ResultSet rs = statement.executeQuery(sqlStr)) {
                int columnCount = rs.getMetaData().getColumnCount();
                System.out.println("+++++sqlStr:" + sqlStr);
                while (rs.next()) {
                    StringBuilder result = new StringBuilder();
                    // JDBC column indexes start at 1.
                    for (int i = 1; i <= columnCount; i++) {
                        result.append('\t').append(rs.getString(i));
                    }
                    System.out.println(result);
                }
            } catch (Exception e) {
                e.printStackTrace();
            }
        }
    }
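The class above uses a vendor-specific driver class and URL prefix. For comparison, here is a minimal sketch of how the same query might look with the standard Hive driver that is commented out in the constructor, assuming HiveServer2 accepts direct connections on the same port. `HiveQuerySketch`, `buildUrl`, and `printRows` are hypothetical names, and the host is taken from the command line rather than hard-coded; `jdbc:hive2://host:port/database` is the standard HiveServer2 URL form. The credentials are placeholders, as in the original.

```java
import java.sql.Connection;
import java.sql.DriverManager;
import java.sql.ResultSet;
import java.sql.SQLException;
import java.sql.Statement;

// Hypothetical sketch (not from the original article): the same query flow
// using the standard Hive JDBC driver and try-with-resources throughout.
final class HiveQuerySketch {

    // Builds a standard HiveServer2 JDBC URL.
    static String buildUrl(String host, int port, String database) {
        return "jdbc:hive2://" + host + ":" + port + "/" + database;
    }

    // Prints every row of the query result as tab-separated values.
    static void printRows(Connection conn, String sql) throws SQLException {
        try (Statement stmt = conn.createStatement();
             ResultSet rs = stmt.executeQuery(sql)) {
            int columnCount = rs.getMetaData().getColumnCount();
            while (rs.next()) {
                StringBuilder row = new StringBuilder();
                for (int i = 1; i <= columnCount; i++) {
                    if (i > 1) {
                        row.append('\t');
                    }
                    row.append(rs.getString(i));
                }
                System.out.println(row);
            }
        }
    }

    public static void main(String[] args) throws Exception {
        if (args.length < 1) {
            // No host given: show the URL shape instead of connecting.
            System.out.println("usage: HiveQuerySketch <host>");
            System.out.println("example URL: " + buildUrl("10.1.1.242", 10000, "default"));
            return;
        }
        Class.forName("org.apache.hive.jdbc.HiveDriver");
        String url = buildUrl(args[0], 10000, "default");
        // The connection is closed automatically when the block ends.
        try (Connection conn = DriverManager.getConnection(url, "xxx", "xxx")) {
            printRows(conn, "select id, aes from default.hive_char");
        }
    }
}
```

Passing the `Connection` into `printRows` rather than holding it in a field keeps its lifetime visible at the call site, so it is released even when the query fails.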

Copyright belongs to the original author, fen_fen. In case of infringement, please contact us for removal.