Introduction to OpenShift

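Red Hat OpenShift is a leading enterprise Kubernetes container platform that provides a foundation for on-premises, hybrid, and multi-cloud deployments. Through automated operations and streamlined lifecycle management, OpenShift lets development teams build and deploy new applications and helps operations teams provision, manage, and scale the Kubernetes platform. OpenShift also ships a CLI that supports a superset of the operations offered by the Kubernetes CLI.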

OpenShift comes in several editions; the two main ones are:

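- OKD (The Origin Community Distribution of Kubernetes, also expanded as OpenShift Kubernetes Distribution; formerly OpenShift Origin) is the open-source community edition of Red Hat OpenShift. It is the upstream, community-supported version of Red Hat OpenShift Container Platform (OCP).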

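- OCP (Red Hat OpenShift Container Platform) is the enterprise edition of Red Hat OpenShift, its private-cloud product. It can be installed and used without buying a subscription, but in that case no technical support is provided.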

OpenShift supports two installation methods:

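- IPI (Installer Provisioned Infrastructure): a cluster on installer-provisioned infrastructure. Bootstrapping and provisioning the infrastructure are delegated to the installer instead of being done by hand; the installer creates all the networking, machines, and operating systems the cluster needs.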

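- UPI (User Provisioned Infrastructure): a cluster on user-provisioned infrastructure. The user must supply all of the cluster infrastructure and resources, including the bootstrap machine, networking, load balancing, storage, and every cluster node.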

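This article creates several virtual machines on VMware vSphere 7.0.3 and manually deploys an OpenShift OKD 4.10 cluster on them in UPI mode, which the official documentation calls the Bare Metal (UPI) method.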

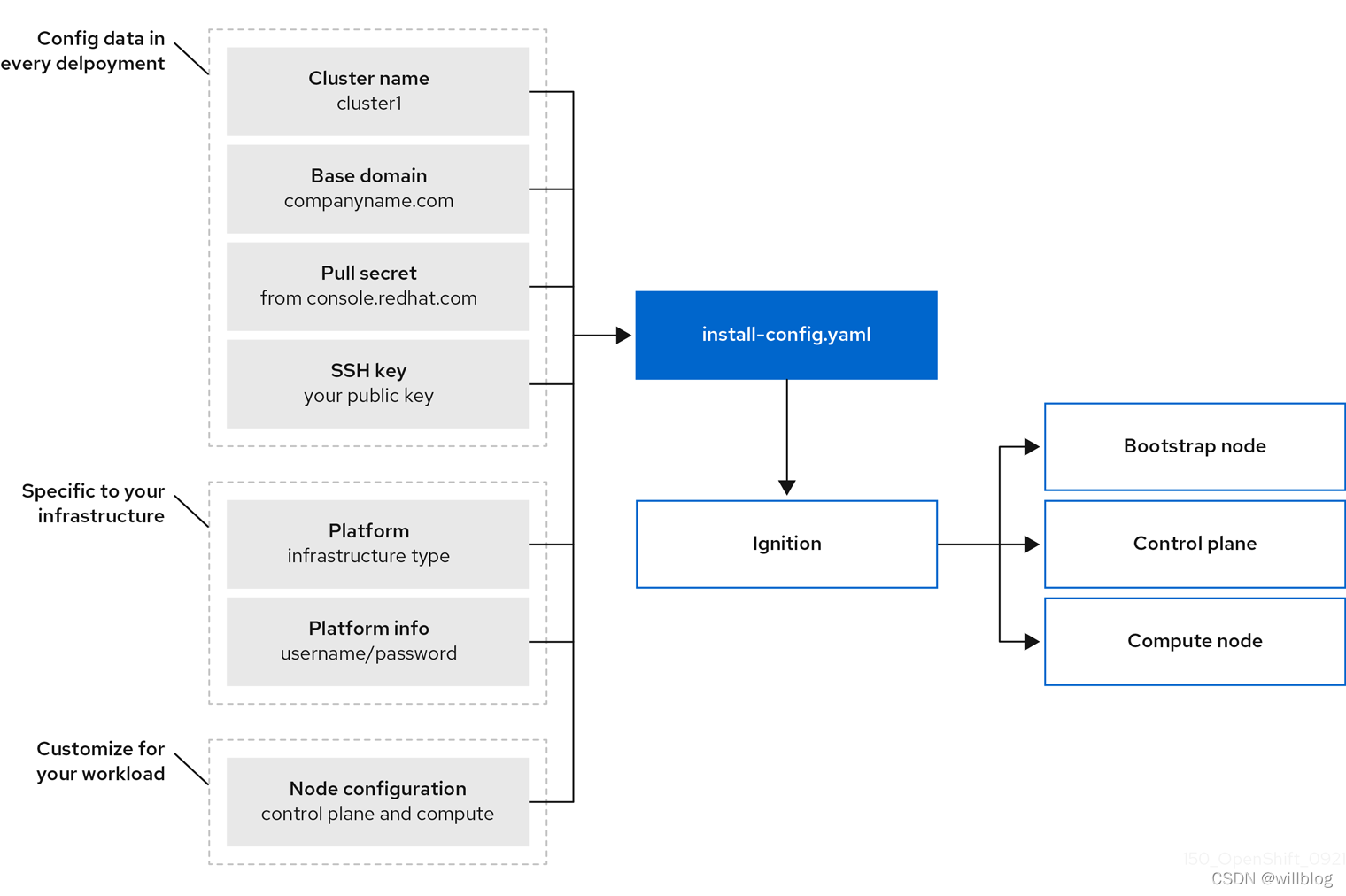

Installation architecture diagram:

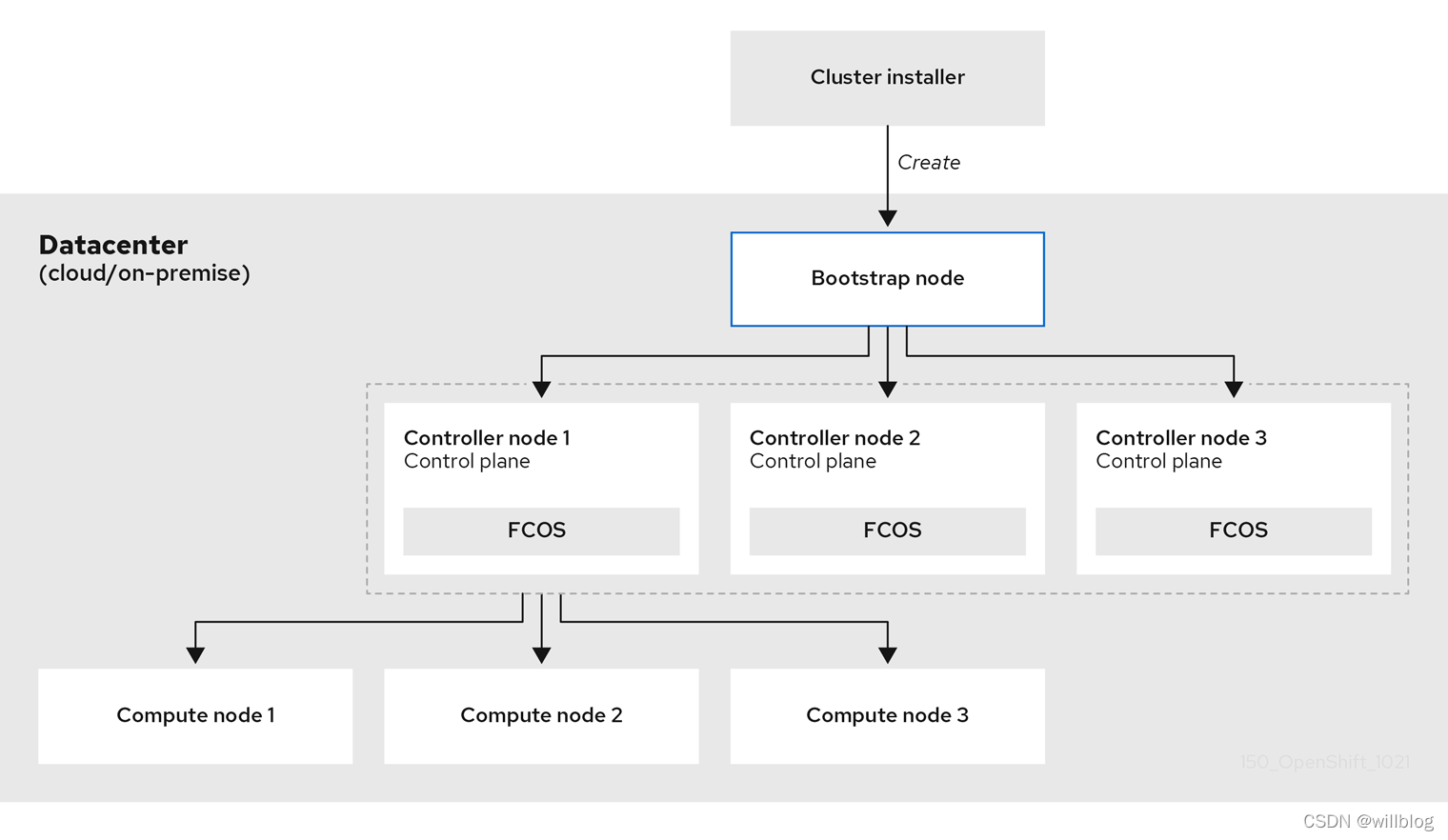

Installation workflow diagram:

Installing OKD (community edition)

Official documentation: https://docs.okd.io/latest/installing/installing_bare_metal/installing-bare-metal.html

Note: most of the content in this article is taken from the examples in the official documentation.

Cluster basics

- Cluster name: okd4

- Base domain: example.com

- Cluster size: 3 master nodes, 2 worker nodes

Node inventory:

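Only the bastion node has to be created up front. Once it is ready, the other nodes are booted manually one at a time, so they do not need to be created in advance.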

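| Hostname | FQDN | IP address | Node type | CPU | Mem | Disk | OS |
|---|---|---|---|---|---|---|---|
| bastion | bastion.okd4.example.com | 192.168.72.20 | Bastion (base) node | 2C | 4G | 100G | Ubuntu 20.04.4 LTS |
| bootstrap | bootstrap.okd4.example.com | 192.168.72.21 | Bootstrap node | 4C | 16G | 100G | Fedora CoreOS 35 |
| master0 | master0.okd4.example.com | 192.168.72.22 | Control plane (master) node | 4C | 16G | 100G | Fedora CoreOS 35 |
| master1 | master1.okd4.example.com | 192.168.72.23 | Control plane (master) node | 4C | 16G | 100G | Fedora CoreOS 35 |
| master2 | master2.okd4.example.com | 192.168.72.24 | Control plane (master) node | 4C | 16G | 100G | Fedora CoreOS 35 |
| worker0 | worker0.okd4.example.com | 192.168.72.25 | Worker node | 2C | 8G | 100G | Fedora CoreOS 35 |
| worker1 | worker1.okd4.example.com | 192.168.72.26 | Worker node | 2C | 8G | 100G | Fedora CoreOS 35 |
| api server | api.okd4.example.com | 192.168.72.20 | Kubernetes API | | | | |
| api-int | api-int.okd4.example.com | 192.168.72.20 | Kubernetes API | | | | |
| apps | *.apps.okd4.example.com | 192.168.72.20 | Apps | | | | |
| registry | registry.example.com | 192.168.72.20 | Image registry | | | | |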

Node types:

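- Bastion node: the base (jump host) node. It provides the HTTP service and the local registry used during installation, and it generates all the Ignition (.ign) files, the ssh-rsa key required by CoreOS, and so on. Any operating system can be used.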

- Bootstrap node: used only for bootstrapping and can be removed once bootstrapping has finished. The OS must be Fedora CoreOS.

- Master nodes: the OpenShift control plane nodes. The OS must be Fedora CoreOS.

- Worker nodes: the OpenShift compute nodes. Fedora CoreOS is used here; the corresponding OCP documentation also allows RHEL 8.4 or RHEL 8.5 for compute machines.

The following components are installed on the bastion node:

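| Component | Description |
|---|---|
| Docker | Container runtime |
| Bind9 | DNS server |
| Haproxy | Load balancer |
| Nginx | Web server |
| Harbor | Container image registry |
| OpenShift CLI | oc command-line client |
| OpenShift-Install | OpenShift installer |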

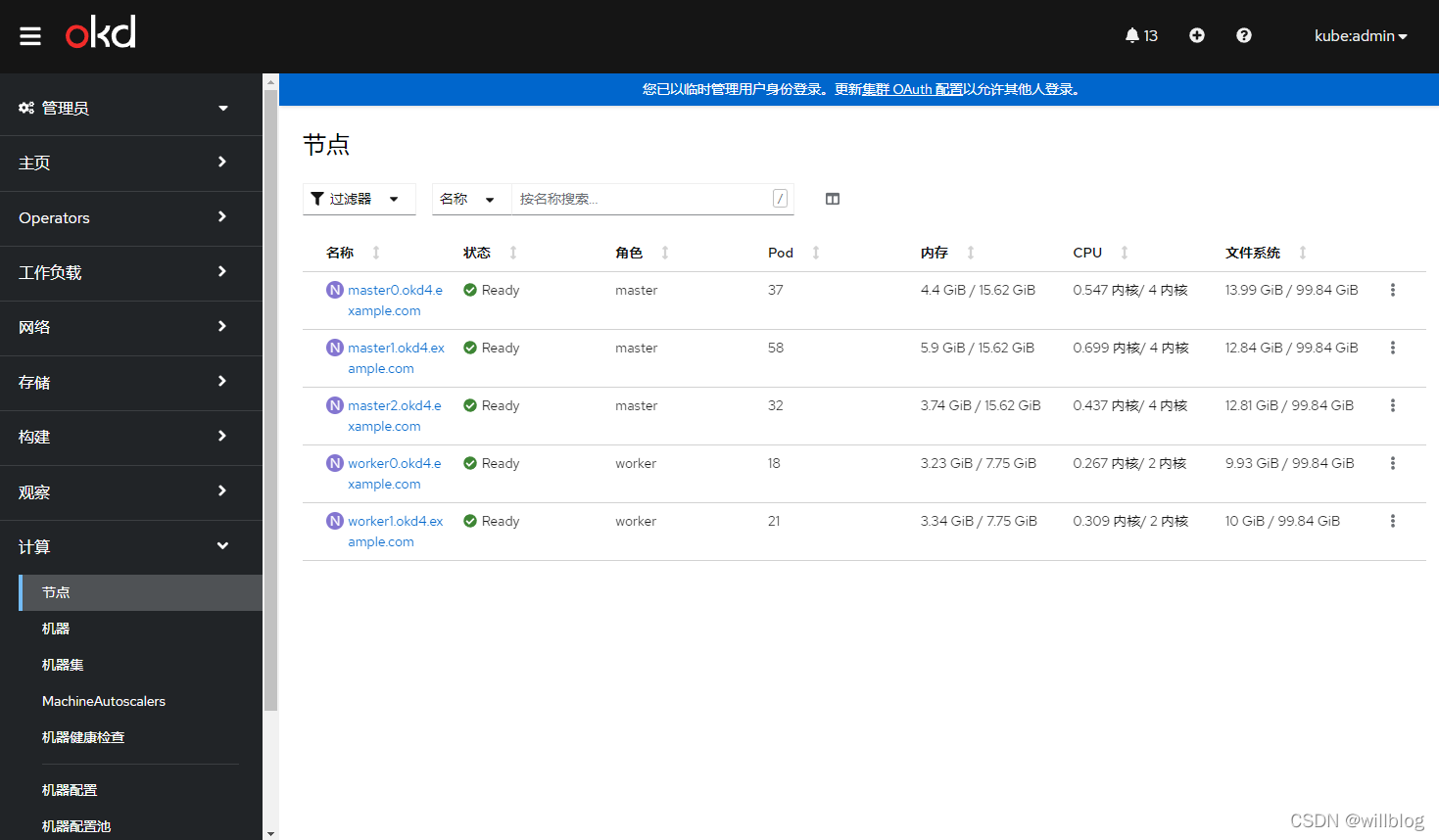

Base resources after deployment:

OpenShift nodes after deployment:

Preparing the bastion node

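First create the bastion node and give it a static IP address; it serves as the base node for the deployment. Any operating system will do, and Ubuntu is used here. Unless stated otherwise, all of the following steps are run on this node.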

1. Set the hostname

hostnamectl set-hostname bastion.okd4.example.com

2. Install Docker

curl -fsSL https://get.docker.com |bash -s docker --mirror Aliyun

systemctl status docker

docker version

3. Check the node's IP configuration

root@bastion:~# ip a

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: ens160: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc mq state UP group default qlen 1000

link/ether 00:50:56:99:0d:57 brd ff:ff:ff:ff:ff:ff

inet 192.168.72.20/24 brd 192.168.72.255 scope global ens160

valid_lft forever preferred_lft forever

inet6 fe80::250:56ff:fe99:d57/64 scope link

valid_lft forever preferred_lft forever

4. Check the node's OS information

root@bastion:~# cat /etc/os-release
NAME="Ubuntu"
VERSION="20.04.4 LTS (Focal Fossa)"
ID=ubuntu
ID_LIKE=debian
PRETTY_NAME="Ubuntu 20.04.4 LTS"
VERSION_ID="20.04"
HOME_URL="https://www.ubuntu.com/"
SUPPORT_URL="https://help.ubuntu.com/"
BUG_REPORT_URL="https://bugs.launchpad.net/ubuntu/"
PRIVACY_POLICY_URL="https://www.ubuntu.com/legal/terms-and-policies/privacy-policy"
VERSION_CODENAME=focal
UBUNTU_CODENAME=focal

Installing Bind

In an OKD deployment, the following components require DNS name resolution:

- Kubernetes API

- The OKD application ingress

- The bootstrap, control plane, and compute nodes

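Reverse DNS resolution is also required for the Kubernetes API, the bootstrap machine, the control plane machines, and the compute nodes. DNS A/AAAA or CNAME records are used for name resolution, and PTR records for reverse name resolution. The reverse records matter because Fedora CoreOS (FCOS) uses them to set the hostname of every node, unless the hostname is provided by DHCP. The reverse records are also used to generate the certificate signing requests (CSRs) that OKD needs in order to operate.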

In each record, <cluster_name> is the cluster name and <base_domain> is the base domain specified in the install-config.yaml file. A complete DNS record takes the form <component>.<cluster_name>.<base_domain>.
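As a small illustration of this naming rule, the two shell helpers below build a forward name and the in-addr.arpa owner name used by the matching PTR record. This is a sketch only: okd_fqdn and ptr_owner are hypothetical names, and ptr_owner assumes a plain dotted IPv4 address.

```shell
#!/bin/sh
# Hypothetical helpers illustrating the OKD DNS naming rule.

# okd_fqdn COMPONENT CLUSTER_NAME BASE_DOMAIN
# Prints <component>.<cluster_name>.<base_domain>. including the trailing root dot.
okd_fqdn() {
    printf '%s.%s.%s.\n' "$1" "$2" "$3"
}

# ptr_owner IPV4
# Prints the reverse owner name for an IPv4 address (octets reversed + in-addr.arpa.).
# Input that does not have four dot-separated fields produces no output.
ptr_owner() {
    printf '%s\n' "$1" | awk -F. 'NF == 4 { printf "%s.%s.%s.%s.in-addr.arpa.\n", $4, $3, $2, $1 }'
}

okd_fqdn api okd4 example.com       # api.okd4.example.com.
okd_fqdn '*.apps' okd4 example.com  # *.apps.okd4.example.com.
ptr_owner 192.168.72.21             # 21.72.168.192.in-addr.arpa.
```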

1. Create the Bind configuration directories

mkdir -p /etc/bind

mkdir -p /var/lib/bind

mkdir -p /var/cache/bind

2. Create the main Bind configuration file

cat>/etc/bind/named.conf<<'EOF'

options {

directory "/var/cache/bind";

listen-on { any;};

listen-on-v6 { any;};

allow-query { any;};

allow-query-cache { any;};

recursion yes;

allow-recursion { any;};

allow-transfer { none;};

allow-update { none;};

auth-nxdomain no;

dnssec-validation no;

forward first;

forwarders {114.114.114.114;8.8.8.8;};};

zone "example.com" IN { type master; file "/var/lib/bind/example.com.zone"; };

zone "72.168.192.in-addr.arpa" IN { type master; file "/var/lib/bind/72.168.192.in-addr.arpa"; };

EOF

3. Create the forward zone file

cat>/var/lib/bind/example.com.zone<<'EOF'

$TTL 1W

@ IN SOA ns1.example.com. root (

2019070700 ; serial

3H ; refresh (3 hours)

30M ; retry (30 minutes)

2W ; expiry (2 weeks)

1W ) ; minimum (1 week)

  IN NS ns1.example.com.

  IN MX 10 smtp.example.com.

;

ns1.example.com. IN A 192.168.72.20

smtp.example.com. IN A 192.168.72.20

;

registry.example.com. IN A 192.168.72.20

api.okd4.example.com. IN A 192.168.72.20

api-int.okd4.example.com. IN A 192.168.72.20

;

*.apps.okd4.example.com. IN A 192.168.72.20

;

bastion.okd4.example.com. IN A 192.168.72.20

bootstrap.okd4.example.com. IN A 192.168.72.21

;

master0.okd4.example.com. IN A 192.168.72.22

master1.okd4.example.com. IN A 192.168.72.23

master2.okd4.example.com. IN A 192.168.72.24

;

worker0.okd4.example.com. IN A 192.168.72.25

worker1.okd4.example.com. IN A 192.168.72.26

EOF
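Typing each host record by hand makes it easy for the zone file to drift from the node inventory table. A minimal sketch of one alternative, assuming a plain "hostname ip" list as input (gen_a_records is a hypothetical helper, not a Bind tool), prints the host A records in the same layout as the zone file above:

```shell
#!/bin/sh
# gen_a_records is a hypothetical helper: it reads "hostname ip" lines on stdin
# and prints one A record per line for the given cluster domain.
# Lines that do not have exactly two fields are ignored.
gen_a_records() {
    awk -v dom="$1" 'NF == 2 { printf "%s.%s. IN A %s\n", $1, dom, $2 }'
}

# The first output line is "bastion.okd4.example.com. IN A 192.168.72.20".
gen_a_records okd4.example.com <<'EOF'
bastion   192.168.72.20
bootstrap 192.168.72.21
master0   192.168.72.22
master1   192.168.72.23
master2   192.168.72.24
worker0   192.168.72.25
worker1   192.168.72.26
EOF
```

The output covers only the node records; the SOA, NS, MX, registry, api, api-int and *.apps entries still have to be written by hand.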

4. Create the reverse zone file

cat>/var/lib/bind/72.168.192.in-addr.arpa<<'EOF'

$TTL 1W

@ IN SOA ns1.example.com. root (

2019070700 ; serial

3H ; refresh (3 hours)

30M ; retry (30 minutes)

2W ; expiry (2 weeks)

1W ) ; minimum (1 week)

  IN NS ns1.example.com.

;

20.72.168.192.in-addr.arpa. IN PTR api.okd4.example.com.

20.72.168.192.in-addr.arpa. IN PTR api-int.okd4.example.com.

;

20.72.168.192.in-addr.arpa. IN PTR bastion.okd4.example.com.

21.72.168.192.in-addr.arpa. IN PTR bootstrap.okd4.example.com.

;

22.72.168.192.in-addr.arpa. IN PTR master0.okd4.example.com.

23.72.168.192.in-addr.arpa. IN PTR master1.okd4.example.com.

24.72.168.192.in-addr.arpa. IN PTR master2.okd4.example.com.

;

25.72.168.192.in-addr.arpa. IN PTR worker0.okd4.example.com.

26.72.168.192.in-addr.arpa. IN PTR worker1.okd4.example.com.

EOF
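The PTR records can be derived from the same "hostname ip" list. The sketch below is hypothetical (gen_ptr_records is not a Bind tool) and handles IPv4 only; note that the file above deliberately maps 192.168.72.20 to three names (api, api-int, bastion), which a one-line-per-host list does not express.

```shell
#!/bin/sh
# gen_ptr_records is a hypothetical helper: it reads "hostname ip" lines on stdin
# and prints the matching PTR record. Lines whose second field is not a
# four-octet IPv4 address are skipped.
gen_ptr_records() {
    awk -v dom="$1" 'NF == 2 {
        if (split($2, o, ".") == 4)
            printf "%s.%s.%s.%s.in-addr.arpa. IN PTR %s.%s.\n", o[4], o[3], o[2], o[1], $1, dom
    }'
}

# Prints the bootstrap and master0 PTR records, one per line.
printf '%s\n' 'bootstrap 192.168.72.21' 'master0 192.168.72.22' | gen_ptr_records okd4.example.com
```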

Set permissions on the configuration files so the container can read and write them

chmod -R a+rwx /etc/bind

chmod -R a+rwx /var/lib/bind/

chmod -R a+rwx /var/cache/bind/

5. On Ubuntu, DNS is managed by systemd-resolved. Change the following setting so that the local DNS server is used:

root@ubuntu:~# cat /etc/systemd/resolved.conf
[Resolve]
DNS=192.168.72.20

Restart the systemd-resolved service

systemctl restart systemd-resolved.service

Create the resolv.conf symlink:

ln -sf /run/systemd/resolve/resolv.conf /etc/resolv.conf

Check resolv.conf and confirm that it contains the following:

root@ubuntu:~# cat /etc/resolv.conf
......
# operation for /etc/resolv.conf.

nameserver 192.168.72.20

nameserver 114.114.114.114

6. Start Bind as a container. Publish its ports on the host IP only, so they do not clash with port 53 used by Ubuntu's default DNS service:

docker run -d --name bind9 \

--restart always \


-e TZ=Asia/Shanghai \

--publish 192.168.72.20:53:53/udp \

--publish 192.168.72.20:53:53/tcp \

--publish 192.168.72.20:953:953/tcp \

--volume /etc/bind:/etc/bind \

--volume /var/cache/bind:/var/cache/bind \

--volume /var/lib/bind:/var/lib/bind \

--volume /var/log/bind:/var/log \

internetsystemsconsortium/bind9:9.18

7. Verify forward resolution with dig

dig +noall +answer @192.168.72.20 registry.example.com

dig +noall +answer @192.168.72.20 api.okd4.example.com

dig +noall +answer @192.168.72.20 api-int.okd4.example.com

dig +noall +answer @192.168.72.20 console-openshift-console.apps.okd4.example.com

dig +noall +answer @192.168.72.20 bootstrap.okd4.example.com

dig +noall +answer @192.168.72.20 master0.okd4.example.com

dig +noall +answer @192.168.72.20 master1.okd4.example.com

dig +noall +answer @192.168.72.20 master2.okd4.example.com

dig +noall +answer @192.168.72.20 worker0.okd4.example.com

dig +noall +answer @192.168.72.20 worker1.okd4.example.com

The forward lookup results are shown below; confirm that every name resolves correctly

root@bastion:~# dig +noall +answer @192.168.72.20 registry.example.com

registry.example.com. 604800 IN A 192.168.72.20

root@bastion:~# dig +noall +answer @192.168.72.20 api.okd4.example.com

api.okd4.example.com. 604800 IN A 192.168.72.20

root@bastion:~# dig +noall +answer @192.168.72.20 api-int.okd4.example.com

api-int.okd4.example.com. 604800 IN A 192.168.72.20

root@bastion:~# dig +noall +answer @192.168.72.20 console-openshift-console.apps.okd4.example.com

console-openshift-console.apps.okd4.example.com. 604800 IN A 192.168.72.20

root@bastion:~# dig +noall +answer @192.168.72.20 bootstrap.okd4.example.com

bootstrap.okd4.example.com. 604800 IN A 192.168.72.21

root@bastion:~# dig +noall +answer @192.168.72.20 master0.okd4.example.com

master0.okd4.example.com. 604800 IN A 192.168.72.22

root@bastion:~# dig +noall +answer @192.168.72.20 master1.okd4.example.com

master1.okd4.example.com. 604800 IN A 192.168.72.23

root@bastion:~# dig +noall +answer @192.168.72.20 master2.okd4.example.com

master2.okd4.example.com. 604800 IN A 192.168.72.24

root@bastion:~# dig +noall +answer @192.168.72.20 worker0.okd4.example.com

worker0.okd4.example.com. 604800 IN A 192.168.72.25

root@bastion:~# dig +noall +answer @192.168.72.20 worker1.okd4.example.com

worker1.okd4.example.com. 604800 IN A 192.168.72.26

Verify reverse resolution

dig +noall +answer @192.168.72.20 -x 192.168.72.21

dig +noall +answer @192.168.72.20 -x 192.168.72.22

dig +noall +answer @192.168.72.20 -x 192.168.72.23

dig +noall +answer @192.168.72.20 -x 192.168.72.24

dig +noall +answer @192.168.72.20 -x 192.168.72.25

dig +noall +answer @192.168.72.20 -x 192.168.72.26

The reverse lookup results are shown below; again, confirm that every address resolves correctly

root@bastion:~# dig +noall +answer @192.168.72.20 -x 192.168.72.21
21.72.168.192.in-addr.arpa. 604800 IN PTR bootstrap.okd4.example.com.

root@bastion:~# dig +noall +answer @192.168.72.20 -x 192.168.72.22
22.72.168.192.in-addr.arpa. 604800 IN PTR master0.okd4.example.com.

root@bastion:~# dig +noall +answer @192.168.72.20 -x 192.168.72.23
23.72.168.192.in-addr.arpa. 604800 IN PTR master1.okd4.example.com.

root@bastion:~# dig +noall +answer @192.168.72.20 -x 192.168.72.24
24.72.168.192.in-addr.arpa. 604800 IN PTR master2.okd4.example.com.

root@bastion:~# dig +noall +answer @192.168.72.20 -x 192.168.72.25
25.72.168.192.in-addr.arpa. 604800 IN PTR worker0.okd4.example.com.

root@bastion:~# dig +noall +answer @192.168.72.20 -x 192.168.72.26
26.72.168.192.in-addr.arpa. 604800 IN PTR worker1.okd4.example.com.
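Reading a dozen dig outputs by eye is error-prone. The following sketch checks that each node's A record and PTR record agree and returns a non-zero status if any node fails. It assumes dig is installed on the bastion node and that the DNS server is 192.168.72.20 (overridable through a DNS_SERVER variable); check_dns and check_all_nodes are hypothetical names. Because 192.168.72.20 carries several PTR records, the PTR check searches all answers rather than only the first one.

```shell
#!/bin/sh
# Hypothetical helpers that compare forward (A) and reverse (PTR) answers.
# Assumes dig is installed; the server defaults to the bastion's Bind container.
DNS_SERVER=${DNS_SERVER:-192.168.72.20}

# check_dns FQDN IP -> prints OK/FAIL and returns non-zero on a mismatch
check_dns() {
    fqdn=$1; ip=$2
    if ! dig +short @"$DNS_SERVER" "$fqdn" A | grep -qFx "$ip"; then
        echo "FAIL A   $fqdn (expected $ip)"; return 1
    fi
    # -x may return several PTR names, so look for the expected one among all of them
    if ! dig +short @"$DNS_SERVER" -x "$ip" | grep -qFx "$fqdn."; then
        echo "FAIL PTR $ip (expected $fqdn.)"; return 1
    fi
    echo "OK   $fqdn <-> $ip"
}

# check_all_nodes -> checks the six cluster nodes from the inventory table
check_all_nodes() {
    rc=0
    for pair in bootstrap:192.168.72.21 master0:192.168.72.22 master1:192.168.72.23 \
                master2:192.168.72.24 worker0:192.168.72.25 worker1:192.168.72.26; do
        check_dns "${pair%%:*}.okd4.example.com" "${pair#*:}" || rc=1
    done
    return "$rc"
}

# Usage on the bastion node:
#   check_all_nodes && echo "forward and reverse DNS look consistent"
```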

Installing HAProxy

HAProxy is used as the load balancer in front of the Machine Config Server, kube-apiserver, and the cluster Ingress Controller.

1. Create the HAProxy configuration directory

mkdir -p /etc/haproxy

2. Create the HAProxy configuration file

cat>/etc/haproxy/haproxy.cfg<<EOF

global

log 127.0.0.1 local2

maxconn 4000

daemon

defaults

mode http

log global

option dontlognull

option http-server-close

option redispatch

retries 3

timeout http-request 10s

timeout queue 1m

timeout connect 10s

timeout client 1m

timeout server 1m

timeout http-keep-alive 10s

timeout check 10s

maxconn 3000

frontend stats

bind *:1936

mode http

log global

maxconn 10

stats enable

stats hide-version

stats refresh 30s

stats show-node

stats show-desc Stats for openshift cluster

stats auth admin:openshift

stats uri /stats

frontend openshift-api-server

bind *:6443

default_backend openshift-api-server

mode tcp

option tcplog

backend openshift-api-server

balance source

mode tcp

server bootstrap 192.168.72.21:6443 check

server master0 192.168.72.22:6443 check

server master1 192.168.72.23:6443 check

server master2 192.168.72.24:6443 check

frontend machine-config-server

bind *:22623

default_backend machine-config-server

mode tcp

option tcplog

backend machine-config-server

balance source

mode tcp

server bootstrap 192.168.72.21:22623 check

server master0 192.168.72.22:22623 check

server master1 192.168.72.23:22623 check

server master2 192.168.72.24:22623 check

frontend ingress-http

bind *:80

default_backend ingress-http

mode tcp

option tcplog

backend ingress-http

balance source

mode tcp

server worker0 192.168.72.25:80 check

server worker1 192.168.72.26:80 check

frontend ingress-https

bind *:443

default_backend ingress-https

mode tcp

option tcplog

backend ingress-https

balance source

mode tcp

server worker0 192.168.72.25:443 check

server worker1 192.168.72.26:443 check

EOF
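The four backends above differ only in their name, port, and server list. As a sketch (haproxy_backend is a hypothetical helper, not an HAProxy feature), a backend section in the same form can be generated from "name:ip" pairs, so that adding or removing a node only touches one list:

```shell
#!/bin/sh
# haproxy_backend is a hypothetical helper: it prints one TCP backend section in
# the same form as the backends above.
# Arguments: BACKEND_NAME PORT name:ip [name:ip ...]
haproxy_backend() {
    name=$1; port=$2; shift 2
    printf 'backend %s\n  balance source\n  mode tcp\n' "$name"
    for node in "$@"; do
        # ${node%%:*} is the server name, ${node#*:} is the IP address
        printf '  server %s %s:%s check\n' "${node%%:*}" "${node#*:}" "$port"
    done
}

haproxy_backend openshift-api-server 6443 \
    bootstrap:192.168.72.21 master0:192.168.72.22 \
    master1:192.168.72.23 master2:192.168.72.24
```

Regenerating the API and machine-config backends without the bootstrap entry is one way to handle the later step, described in the OKD documentation, of removing the bootstrap machine from the load balancer once bootstrapping has finished.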

Start HAProxy as a container

docker run -d --name haproxy \

--restart always \

-p 1936:1936 \

-p 6443:6443 \

-p 22623:22623 \

-p 80:80 -p 443:443 \

--sysctl net.ipv4.ip_unprivileged_port_start=0 \

-v /etc/haproxy/:/usr/local/etc/haproxy:ro \

haproxy:2.5.5-alpine3.15

Installing Nginx

During deployment, the OpenShift nodes download the CoreOS image and the Ignition files from a web server; Nginx serves these files here.

1. Create the Nginx directories

mkdir -p /etc/nginx/templates

mkdir -p /usr/share/nginx/html/{ignition,install}

2. Create the Nginx configuration file with directory listing enabled (optional)

cat>/etc/nginx/templates/default.conf.template<<EOF

server {

listen 80;

listen [::]:80;

server_name localhost;

location / {

root /usr/share/nginx/html;

index index.html index.htm;

autoindex on;

autoindex_exact_size off;

autoindex_format html;

autoindex_localtime on;

}

error_page 500 502 503 504 /50x.html;

location = /50x.html {

root /usr/share/nginx/html;

}

}

EOF

Change the file permissions so the container can read and write them

chmod -R a+rwx /etc/nginx/

chmod -R a+rwx /usr/share/nginx/

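3. Start Nginx as a container. Note that it is published on port 8088 to avoid a port conflict, because HAProxy already listens on port 80: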

docker run -d --name nginx-okd \

--restart always \

-p 8088:80 \

-v /etc/nginx/templates:/etc/nginx/templates \

-v /usr/share/nginx/html:/usr/share/nginx/html:ro \

nginx:1.21.6-alpine

Verify in a browser:
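Besides the browser check, a scripted probe is handy to re-run before booting each node. A minimal sketch, assuming curl is available on the bastion node (http_ok is a hypothetical helper); it treats anything other than HTTP 200 as a failure:

```shell
#!/bin/sh
# http_ok is a hypothetical helper: it succeeds only when the URL returns HTTP 200.
http_ok() {
    # -s: silent, -o /dev/null: discard the body, -w: print only the status code
    code=$(curl -s -o /dev/null -w '%{http_code}' "$1")
    [ "$code" = "200" ]
}

# Usage on the bastion node (port 8088 and the ignition/ directory come from the steps above):
#   http_ok http://192.168.72.20:8088/ignition/ && echo "nginx is serving files"
```

With autoindex enabled, a directory URL such as /ignition/ returns 200 even before any .ign file exists, so later checks can point at a specific file instead.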

Installing the OpenShift CLI

The OpenShift CLI (oc) is used to interact with OKD from the command line; oc can be installed on Linux, Windows, or macOS.

Download: https://github.com/openshift/okd/releases

1. Download openshift-client. If the network connection is poor, download it in a browser and then upload it to the bastion node

wget https://github.com/openshift/okd/releases/download/4.10.0-0.okd-2022-03-07-131213/openshift-client-linux-4.10.0-0.okd-2022-03-07-131213.tar.gz

2. Extract the archive and copy the binaries to /usr/local/bin

tar -zxvf openshift-client-linux-4.10.0-0.okd-2022-03-07-131213.tar.gz

cp oc /usr/local/bin/

cp kubectl /usr/local/bin/

3. Check the version; this version string is needed later when mirroring the images

[root@bastion ~]# oc version

Client Version: 4.10.0-0.okd-2022-03-07-131213

Installing the OpenShift installer

openshift-install is the installer for OpenShift 4.x clusters, the tool used to deploy an OpenShift cluster.

Download: https://github.com/openshift/okd/releases

1. Download openshift-install; its version must match the OpenShift CLI:

wget https://github.com/openshift/okd/releases/download/4.10.0-0.okd-2022-03-07-131213/openshift-install-linux-4.10.0-0.okd-2022-03-07-131213.tar.gz

2. Extract the archive and copy the binary to /usr/local/bin

tar -zxvf openshift-install-linux-4.10.0-0.okd-2022-03-07-131213.tar.gz

cp openshift-install /usr/local/bin/

3. Check the version

[root@bastion ~]# openshift-install version

openshift-install 4.10.0-0.okd-2022-03-07-131213

built from commit 3b701903d96b6375f6c3852a02b4b70fea01d694

release image quay.io/openshift/okd@sha256:2eee0db9818e22deb4fa99737eb87d6e9afcf68b4e455f42bdc3424c0b0d0896

release architecture amd64
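Since the client and the installer must be the same release, the comparison can be scripted. This is a sketch under the assumption that the output formats are the ones shown above ("Client Version: ..." and "openshift-install <version>"); oc_client_version and installer_version are hypothetical helpers that only parse text passed to them.

```shell
#!/bin/sh
# Hypothetical helpers: extract the release string from the output of
# `oc version --client` and `openshift-install version` so the two can be compared.

# oc_client_version TEXT -> prints the value after "Client Version: "
oc_client_version() {
    printf '%s\n' "$1" | awk -F': ' '/^Client Version:/ { print $2; exit }'
}

# installer_version TEXT -> prints the second field of the "openshift-install <version>" line
installer_version() {
    printf '%s\n' "$1" | awk '$1 == "openshift-install" { print $2; exit }'
}

# Usage on the bastion node:
#   c=$(oc_client_version "$(oc version --client)")
#   i=$(installer_version "$(openshift-install version)")
#   if [ -n "$c" ] && [ "$c" = "$i" ]; then echo "versions match: $c"; else echo "mismatch: oc=$c installer=$i"; fi
```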

Installing the Harbor image registry

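Harbor serves as the OpenShift image registry. The images for the target release are mirrored to this local registry in advance to speed up the installation.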

1. Install docker-compose

curl -L "https://get.daocloud.io/docker/compose/releases/download/1.29.2/docker-compose-$(uname -s)-$(uname -m)" -o /usr/local/bin/docker-compose

chmod +x /usr/local/bin/docker-compose

docker-compose version

2. Download and extract Harbor

curl -L https://github.com/goharbor/harbor/releases/download/v2.4.2/harbor-offline-installer-v2.4.2.tgz -o ./harbor-offline-installer-v2.4.2.tgz

tar -zxf harbor-offline-installer-v2.4.2.tgz -C /opt/

If the download is slow, consider the Tsinghua University mirror in China:

https://mirrors.tuna.tsinghua.edu.cn/github-release/goharbor/harbor/v2.4.2/harbor-offline-installer-v2.4.2.tgz

3. Generate the HTTPS certificate for Harbor (adapted from the Harbor documentation); adjust the domain name to match your environment

mkdir -p /opt/harbor/cert

cd /opt/harbor/cert

openssl genrsa -out ca.key 4096

openssl req -x509 -new -nodes -sha512 -days 3650 \

-subj "/C=CN/ST=Beijing/L=Beijing/O=example/OU=Personal/CN=registry.example.com" \

-key ca.key \

-out ca.crt

openssl genrsa -out registry.example.com.key 4096

openssl req -sha512 -new \

-subj "/C=CN/ST=Beijing/L=Beijing/O=example/OU=Personal/CN=registry.example.com" \

-key registry.example.com.key \

-out registry.example.com.csr

cat> v3.ext <<-EOF

authorityKeyIdentifier=keyid,issuer

basicConstraints=CA:FALSE

keyUsage = digitalSignature, nonRepudiation, keyEncipherment, dataEncipherment

extendedKeyUsage = serverAuth

subjectAltName = @alt_names

[alt_names]

DNS.1=registry.example.com

DNS.2=registry.example

DNS.3=registry

EOF

openssl x509 -req -sha512 -days 3650 \

-extfile v3.ext \

-CA ca.crt -CAkey ca.key -CAcreateserial \

-in registry.example.com.csr \

-out registry.example.com.crt

Inspect the generated certificate files

root@bastion:/opt/harbor/cert# ll

total 28

drwxr-xr-x 2 root root  158 Apr  3 21:51 ./
drwxr-xr-x 3 root root   19 Apr  3 21:39 ../
-rw-r--r-- 1 root root 2069 Apr  3 21:49 ca.crt
-rw------- 1 root root 3243 Apr  3 21:49 ca.key
-rw-r--r-- 1 root root   41 Apr  3 21:51 ca.srl
-rw-r--r-- 1 root root 2151 Apr  3 21:51 registry.example.com.crt
-rw-r--r-- 1 root root 1716 Apr  3 21:50 registry.example.com.csr
-rw------- 1 root root 3243 Apr  3 21:50 registry.example.com.key
-rw-r--r-- 1 root root  277 Apr  3 21:50 v3.ext
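Listing the files shows they exist but not that they fit together. As a sketch (verify_registry_cert is a hypothetical helper), openssl can confirm that the server certificate chains to the self-signed CA and that its subjectAltName contains the registry name. The name check is a plain substring match on openssl's text output, so it is a quick sanity check rather than a full hostname validation.

```shell
#!/bin/sh
# verify_registry_cert is a hypothetical helper.
# verify_registry_cert CA_CRT SERVER_CRT DNS_NAME
verify_registry_cert() {
    # 1. the server certificate must be signed by the given CA
    openssl verify -CAfile "$1" "$2" >/dev/null 2>&1 || return 1
    # 2. the decoded certificate must mention DNS:<name> (subjectAltName entry)
    openssl x509 -in "$2" -noout -text | grep -qF "DNS:$3" || return 1
}

# Usage in /opt/harbor/cert:
#   verify_registry_cert ca.crt registry.example.com.crt registry.example.com && echo "certificate OK"
```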

Copy the certificates into the operating system's trust store

cp ca.crt registry.example.com.crt /usr/local/share/ca-certificates/

update-ca-certificates

Copy the certificate and key to the Harbor runtime directory

mkdir -p /data/cert/

cp registry.example.com.crt /data/cert/

cp registry.example.com.key /data/cert/

Provide the certificates to Docker

openssl x509 -inform PEM -in registry.example.com.crt -out registry.example.com.cert

mkdir -p /etc/docker/certs.d/registry.example.com:8443

cp registry.example.com.cert /etc/docker/certs.d/registry.example.com:8443/

cp registry.example.com.key /etc/docker/certs.d/registry.example.com:8443/

cp ca.crt /etc/docker/certs.d/registry.example.com:8443/

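4. Edit the Harbor configuration file and adjust the following settings. Use the ports shown below so they do not conflict with HAProxy, which already uses ports 80 and 443: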

cd /opt/harbor

cp harbor.yml.tmpl harbor.yml

# vi harbor.yml
hostname: registry.example.com
http:
  port: 8080
https:
  port: 8443
  certificate: /data/cert/registry.example.com.crt
  private_key: /data/cert/registry.example.com.key

5. Install and start Harbor

./install.sh

Configure Harbor to start at boot

cat>/etc/systemd/system/harbor.service<<EOF

[Unit]

Description=Harbor

After=docker.service systemd-networkd.service systemd-resolved.service

Requires=docker.service

Documentation=http://github.com/goharbor/harbor

[Service]

Type=simple

Restart=on-failure

RestartSec=5

ExecStart=/usr/local/bin/docker-compose -f /opt/harbor/docker-compose.yml up

ExecStop=/usr/local/bin/docker-compose -f /opt/harbor/docker-compose.yml down

[Install]

WantedBy=multi-user.target

EOF

systemctl enable harbor

Confirm that all Harbor containers are running and healthy

root@bastion:/opt/harbor# docker-compose ps

Name Command State Ports

------------------------------------------------------------------------------------------------------------------------------------------------------

harbor-core /harbor/entrypoint.sh Up (healthy)

harbor-db /docker-entrypoint.sh 9613 Up (healthy)

harbor-jobservice /harbor/entrypoint.sh Up (healthy)

harbor-log /bin/sh -c /usr/local/bin/ ... Up (healthy)127.0.0.1:1514->10514/tcp

harbor-portal nginx -g daemon off; Up (healthy)

nginx nginx -g daemon off; Up (healthy)0.0.0.0:8080->8080/tcp,:::8080->8080/tcp, 0.0.0.0:8443->8443/tcp,:::8443->8443/tcp

redis redis-server /etc/redis.conf Up (healthy)

registry /home/harbor/entrypoint.sh Up (healthy)

registryctl /home/harbor/start.sh Up (healthy)

Log in to Harbor to verify access; the user name is admin and the default password is Harbor12345

docker login registry.example.com:8443

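Open Harbor in a browser. The client machine must be able to resolve registry.example.com, either through a local hosts entry or by using this DNS server: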

https://registry.example.com:8443

Manually create a project named openshift

Mirroring the OKD images to Harbor

Once Harbor is ready, mirror the OpenShift OKD container images from quay.io to the local registry.

1. Create a temporary OpenShift installation directory

mkdir -p /opt/okd-install/4.10.0/

cd /opt/okd-install/4.10.0/

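2. Register a Red Hat account and download the pull secret from https://console.redhat.com/openshift/install/pull-secret (in principle this is optional). The pull-secret content below is for demonstration only and does not work; download your own.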

root@bastion:/opt/okd-install/4.10.0# cat pull-secret.txt
{"auths":{"cloud.openshift.com":{"auth":"b3BlbnNoaWZ0LXJlbGVhc2UtZGV2K29jbV9hY2Nlc3Nf...","email":"..."},"quay.io":{"auth":"b3BlbnNoaWZ0LXJlbGVhc2UtZGV2K29jbV9hY2Nl...","email":"..."},"registry.connect.redhat.com":{"auth":"fHVoYy1wb29sLWExMWJiZjQ5...","email":"..."},"registry.redhat.io":{"auth":"fHVoYy1wb29sLWExMWJiZjQ5...","email":"..."}}}

Convert it to formatted JSON

apt install -y jq

cat ./pull-secret.txt | jq . > pull-secret.json

Generate the base64-encoded credentials for the local Harbor registry (this is an encoding, not encryption)

echo -n 'admin:Harbor12345'| base64 -w0

Create the Harbor registry login file

cat >pull-secret-local.json<<EOF
{"auths":{"registry.example.com:8443":{"auth":"YWRtaW46SGFyYm9yMTIzNDU=","email":""}}}
EOF
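The "auth" value is simply base64("user:password"), which is what the echo | base64 command above produces. A small sketch that builds the whole entry from a user name and password (harbor_auth_json is a hypothetical helper; it does no JSON escaping, so it assumes the password contains no double quotes or backslashes):

```shell
#!/bin/sh
# harbor_auth_json is a hypothetical helper.
# harbor_auth_json REGISTRY USER PASSWORD -> prints a one-line pull-secret JSON
harbor_auth_json() {
    # base64 of "user:password"; tr removes the newline (and any line wrapping)
    auth=$(printf '%s:%s' "$2" "$3" | base64 | tr -d '\n')
    printf '{"auths":{"%s":{"auth":"%s","email":""}}}\n' "$1" "$auth"
}

harbor_auth_json registry.example.com:8443 admin Harbor12345
# {"auths":{"registry.example.com:8443":{"auth":"YWRtaW46SGFyYm9yMTIzNDU=","email":""}}}
```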

Append the entry from the Harbor login file to pull-secret.json; the final result looks like this:

root@bastion:~# cat pull-secret.json
{"auths":{"cloud.openshift.com":{"auth":"...","email":"..."},"quay.io":{"auth":"...","email":"..."},"registry.connect.redhat.com":{"auth":"...","email":"..."},"registry.redhat.io":{"auth":"...","email":"..."},"registry.example.com:8443":{"auth":"YWRtaW46SGFyYm9yMTIzNDU=","email":""}}}
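Editing the JSON by hand is where a missing comma or brace usually creeps in. Since jq is already installed, the merge can be done with it instead. A sketch, assuming both files are valid JSON with a top-level "auths" object (merge_pull_secrets is a hypothetical helper):

```shell
#!/bin/sh
# merge_pull_secrets is a hypothetical helper. jq -s reads both files into an array;
# `.[0] * .[1]` merges the two objects recursively, so the "auths" maps are combined
# and, if the same registry appears in both, the entry from the second file wins.
# -c prints the result on one line, the form later pasted into install-config.yaml.
merge_pull_secrets() {
    jq -c -s '.[0] * .[1]' "$1" "$2"
}

# Usage in /opt/okd-install/4.10.0 (write to a new file first: redirecting straight
# into pull-secret.json would truncate it before jq reads it):
#   merge_pull_secrets pull-secret.json pull-secret-local.json > pull-secret.merged.json &&
#       mv pull-secret.merged.json pull-secret.json
```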

3. Check the oc version

root@bastion:/opt/okd-install/4.10.0# oc version

Client Version: 4.10.0-0.okd-2022-03-07-131213

Set the following variables

export OKD_RELEASE="4.10.0-0.okd-2022-03-07-131213"
export LOCAL_REGISTRY='registry.example.com:8443'
export LOCAL_REPOSITORY='openshift/okd'
export PRODUCT_REPO='openshift'
export LOCAL_SECRET_JSON='/opt/okd-install/4.10.0/pull-secret.json'
export RELEASE_NAME="okd"
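If one of these variables is empty (for example in a new shell session), the mirror command is run with references such as "quay.io//:". A sketch of a pre-flight guard (require_vars is a hypothetical helper; it expands names with eval, so only pass trusted variable names):

```shell
#!/bin/sh
# require_vars is a hypothetical helper: it reports every listed variable that is
# unset or empty and returns 1 if any are missing.
require_vars() {
    missing=0
    for v in "$@"; do
        # indirect expansion: val becomes the value of the variable named by $v
        eval "val=\${$v:-}"
        if [ -z "$val" ]; then
            echo "variable $v is not set" >&2
            missing=1
        fi
    done
    return "$missing"
}

# Usage, after the exports above and before the mirror command:
#   require_vars OKD_RELEASE LOCAL_REGISTRY LOCAL_REPOSITORY PRODUCT_REPO LOCAL_SECRET_JSON RELEASE_NAME &&
#       [ -f "$LOCAL_SECRET_JSON" ] && echo "ready to mirror"
```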

Pull the OKD images from quay.io and mirror them to the local Harbor registry:

oc adm release mirror -a ${LOCAL_SECRET_JSON} \

--from=quay.io/${PRODUCT_REPO}/${RELEASE_NAME}:${OKD_RELEASE} \

--to=${LOCAL_REGISTRY}/${LOCAL_REPOSITORY} \

--to-release-image=${LOCAL_REGISTRY}/${LOCAL_REPOSITORY}:${OKD_RELEASE}

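Watch the progress. When mirroring finishes, the output ends as shown below. Record the imageContentSources section at the end; it has to be added to the installation configuration file later.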

sha256:f70bce9d6de9c5e3cb5d94ef9745629ef0fb13a8873b815499bba55dd7b8f04b registry.example.com:8443/openshift/okd:4.10.0-0.okd-2022-03-07-131213-x86_64-multus-whereabouts-ipam-cni

sha256:d4e2220f04f6073844155a68cc53b93badfad700bf3da2da1a9240dff8ba4984 registry.example.com:8443/openshift/okd:4.10.0-0.okd-2022-03-07-131213-x86_64-csi-external-attacher

info: Mirroring completed in 6m5.16s (2.9MB/s)

Success

Update image: registry.example.com:8443/openshift/okd:4.10.0-0.okd-2022-03-07-131213

Mirror prefix: registry.example.com:8443/openshift/okd

Mirror prefix: registry.example.com:8443/openshift/okd:4.10.0-0.okd-2022-03-07-131213

To use the new mirrored repository to install, add the following section to the install-config.yaml:

imageContentSources:
- mirrors:
  - registry.example.com:8443/openshift/okd
  source: quay.io/openshift/okd
- mirrors:
  - registry.example.com:8443/openshift/okd
  source: quay.io/openshift/okd-content

To use the new mirrored repository for upgrades, use the following to create an ImageContentSourcePolicy:

apiVersion: operator.openshift.io/v1alpha1
kind: ImageContentSourcePolicy
metadata:
  name: example
spec:
  repositoryDigestMirrors:
  - mirrors:
    - registry.example.com:8443/openshift/okd
    source: quay.io/openshift/okd
  - mirrors:
    - registry.example.com:8443/openshift/okd
    source: quay.io/openshift/okd-content
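Instead of copying the imageContentSources section by hand, it can be cut out of a saved log. This sketch assumes the mirror command was run with its output saved to a file (the name mirror.log and the tee step are assumptions) and that the section starts at the beginning of a line, as in the output above; extract_ics is a hypothetical helper.

```shell
#!/bin/sh
# extract_ics is a hypothetical helper: it prints the lines from "imageContentSources:"
# up to (not including) the "To use the new mirrored repository for upgrades" line,
# skipping blank lines.
extract_ics() {
    awk '/^imageContentSources:/ { p = 1 }
         /^To use the new mirrored repository for upgrades/ { p = 0 }
         p && NF' "$1"
}

# Usage, assuming the output was saved while mirroring, e.g.:
#   oc adm release mirror ... 2>&1 | tee mirror.log
#   extract_ics mirror.log
```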

4. Log in to Harbor and confirm that the images are present. This release contains 169 images in total, taking up about 12.5 GB of disk space:

Creating the OpenShift installation configuration file

1. Generate an SSH key pair for access to the cluster nodes.

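During the OKD installation you can provide an SSH public key to the installer. The key is passed to the Fedora CoreOS (FCOS) nodes through their Ignition config files and is used to authenticate SSH access to the nodes. Once the key has been passed to the nodes, you can use the key pair to SSH into the FCOS nodes as the core user.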

[root@bastion ~]# ssh-keygen -t rsa -b 4096 -N '' -f ~/.ssh/id_rsa

Start the ssh-agent process in the background

[root@bastion ~]# eval "$(ssh-agent -s)"

Add the SSH private key to ssh-agent

[root@bastion ~]# ssh-add ~/.ssh/id_rsa

View the generated SSH public key; it goes into the sshKey field of install-config.yaml:

[root@bastion ~]# cat /root/.ssh/id_rsa.pub

2. View the Harbor CA certificate; it goes into the additionalTrustBundle field of install-config.yaml:

[root@bastion ~]# cat /opt/harbor/cert/ca.crt

3. View the registry pull secret, compacted onto a single line with jq; it goes into the pullSecret field of install-config.yaml:

root@bastion:~# cat /opt/okd-install/4.10.0/pull-secret.json|jq -c
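When pasting the CA certificate under additionalTrustBundle, every certificate line has to be indented under the "|" block scalar, which is easy to get wrong by hand. A sketch that prints the key and the indented file contents ready to paste (yaml_block_scalar is a hypothetical helper; two-space indentation is assumed, matching a top-level key):

```shell
#!/bin/sh
# yaml_block_scalar is a hypothetical helper: it prints "KEY: |" followed by every
# line of FILE indented by two spaces, the layout a multi-line value such as a
# PEM certificate needs under a top-level key in install-config.yaml.
yaml_block_scalar() {
    printf '%s: |\n' "$1"
    sed 's/^/  /' "$2"
}

# Usage on the bastion node:
#   yaml_block_scalar additionalTrustBundle /opt/harbor/cert/ca.crt
```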

4. Create the installation configuration file by hand; it must be named install-config.yaml:

[root@bastion ~]# vi /opt/okd-install/4.10.0/install-config.yaml

Add the following content:

apiVersion: v1

baseDomain: example.com

compute:
- hyperthreading: Enabled
  name: worker
  replicas: 0
controlPlane:
  hyperthreading: Enabled
  name: master
  replicas: 3
metadata:
  name: okd4
networking:
  clusterNetwork:
  - cidr: 10.128.0.0/14
    hostPrefix: 23
  networkType: OVNKubernetes
  serviceNetwork:
  - 172.30.0.0/16
platform:
  none: {}
fips: false
pullSecret: '{"auths":{"cloud.openshift.com":{"auth":"...","email":"..."},"quay.io":{"auth":"...","email":"..."},"registry.connect.redhat.com":{"auth":"...","email":"..."},"registry.redhat.io":{"auth":"...","email":"..."},"registry.example.com:8443":{"auth":"YWRtaW46SGFyYm9yMTIzNDU=","email":""}}}'
sshKey: 'ssh-rsa AAAAB3NzaC1yc2EAAAADAQABAAACAQCviB7Wuuzwfdv5Ax81bYpbTFNHu9ZIHF9VflnFcYxoV7clzP5YNRYkZ4wi0CMTIWCO/wVG2Vi5EkuhrUwJpAKtY0z/...'
additionalTrustBundle: |
  -----BEGIN CERTIFICATE-----
  MIIFyzCCA7OgAwIBAgIUTnWem/2tfnp3D1iHVG80CJ1NS6UwDQYJKoZIhvcNAQEN
  BQAwdTELMAkGA1UEBhMCQ04xEDAOBgNVBAgMB0JlaWppbmcxEDAOBgNVBAcMB0Jl
  aWppbmcxEDAOBgNVBAoMB2V4YW1wbGUxETAPBgNVBAsMCFBlcnNvbmFsMR0wGwYD
  VQQDDBRyZWdpc3RyeS5leGFtcGxlLmNvbTAeFw0yMjA0MDMxMzQ5NDlaFw0zMjAz
  MzExMzQ5NDlaMHUxCzAJBgNVBAYTAkNOMRAwDgYDVQQIDAdCZWlqaW5nMRAwDgYD
  VQQHDAdCZWlqaW5nMRAwDgYDVQQKDAdleGFtcGxlMREwDwYDVQQLDAhQZXJzb25h
  bDEdMBsGA1UEAwwUcmVnaXN0cnkuZXhhbXBsZS5jb20wggIiMA0GCSqGSIb3DQEB
  AQUAA4ICDwAwggIQWREWEQE5HrJrtWERQWQRQWERWREQ3OEDQrGWO5zXYBbeMgDw
  6wTPrQXErApKT28eLzDbgSQlNqzooYcq3SF93TWUMyDZpA8iIKiUChayueymcSA4
  AYpOOJGDGIDC3mFXUSuc8Lflm7snj7OjqEEsyP9NX3DuNfxzhf1/OaXKX0KPgQck
  xTZNiddeb+PAg8fWXuw2mWpVLbijGAK2bE4Y0Gs3LTp1AbeI5uRrJeRe6WLElXmy
  QS9kwVFqto8qzRwVnXZw0YC6AiiDwIGsQ0NZWnfABy6qG4f350NwsF7pSCY2Dw5N
  z46lrlIWPy1ZhXCiHpPI9A1dQRphHqTPJXRoOso/H+2QW9TjBxeWflXiXF9GwMvr
  nFM0bJe/6WMBVHmo5r8cV09SdjJyd42+Ufd56KRBpQOeZBOMrE0+6HrpZj7OKwAj
  dgWiwlzKlyyP6YcAbw2tPqKiwA6O9sDw4szyrAr6PvpgCE+HnJYli24s1d1QofYE
  12J+c1wbq/g3uQLw6nGYVH5RQrrXu1XghkTfqBaHsW6l1lFHchS0E7NHulf37SpS
  sgh814sQ8hGTsElu5jZaDC+74lG63SlZuDBBFFZpIwUWnhpq2LmGQRFtrbCinelj
  EhnK/ngjmuXtIvOxKB7BaYX0DOUtG7AK2mNrkjNBY89mLXz5WbeZS2/cyyz1y+2Z
  swIDAQABo1MwUTAdBgNVHQ4EFgQUvYXyYIJcm1IdXJEyQp+7jvvKHNowHwYDVR0j
  BBgwFoAUvYXyYIJcm1IdXJEyQp+7jvvKHNowDwYDVR0TAQH/BAUwAwEB/zANBgkq
  hkiG9w0BAQ0FAAOCAgEAFPbMq6lq34uq2Oav2fhjeV6h/C5QCUhs/7+n4RAgO1+s
  8QrRwLBRyyZ3K0Uqkw64kUq3k4QxeSC9svOA7pyt14Z2KJRlZ2bNZ1vYJVDd9ZUQ
  lQGdXgGg1l/PloQWERWQWEQRQWRQWREQWWQRWQEErgZHlVueJvuxl/e//D1XQ/Et
  JEQpisUQR+Knhp85kQpg91GBXBkSaX5z76HiSkFHopEaUXORO13rdIqg/QeixVtG
  5WXU14QLkYYoIyalbZ0oPawsFicAbBPEDQzfoWl/g2Jk+r+AMpYA2s2Q9UwMpc7H
  96s2q7sDRQKrzBL//ypo4wRAEwd8mSmg756ZuDcltbYvl4roLw7VYlfvUvUSsaQT
  EoE7cMarQZrss48nKRtCUoLbrjcPFG7AbwiU8J/Uz52IV7EdaJ1/2s07G7sclAgX
  TQNA0E/wDMPZSHat+RaLDBjHGhLINF6ey/J2rJ+bHbKq49CT07RshOfs0a287RqM
  U00XNCi+ujyIQmfiI0Lg6vgm6lm6HLw1B66jzdC9K07J6LNPE1125hdxFr04UH6b
  CP/oiH6v/aJenQe+E0EypdK15dA2ozRD0zXcEZcVbEgr+jXSK3rXDi3UlQ21IAN6
  WPmLWepuCyQLI6bKSPxWC/a8FL7WJiBnSS1bzzh9TYGHw7iS0EqdHdtLVzWWzM4=
  -----END CERTIFICATE-----
imageContentSources:
- mirrors:
  - registry.example.com:8443/openshift/okd
  source: quay.io/openshift/okd
- mirrors:
  - registry.example.com:8443/openshift/okd
  source: quay.io/openshift/okd-content

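Configuration parameters:

- baseDomain: example.com ## Base domain. All OpenShift DNS records must be subdomains of this base domain and include the cluster name

- compute.hyperthreading: ## Whether simultaneous multithreading (hyperthreading) is enabled. It is enabled by default, which improves the performance of the machine's cores

- compute.replicas: ## Number of worker nodes. In UPI mode the worker nodes are created manually, so this must be set to 0

- controlPlane.replicas: ## Number of master nodes in the cluster

- metadata.name: ## Cluster name; must match the cluster name used in the DNS records

- networking.clusterNetwork: ## IP address pool for Pods; must not overlap with the physical network

- networking.serviceNetwork: ## IP address pool for Services; must not overlap with the physical network

- platform: ## Platform type; for a bare-metal installation set it to none

- pullSecret: 'text' ## text is the content in the registry login secret format described earlier

- sshKey: 'text' ## text is the RSA public key obtained earlier for remote login

- additionalTrustBundle: ## CA certificate of the image registry; note that every certificate line is indented by two spaces

- imageContentSources: ## Mirror addresses in the self-hosted registry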
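The auth value in each pullSecret entry is the Base64 encoding of username:password; the registry.example.com:8443 entry above, for instance, decodes to admin:Harbor12345. A minimal sketch for generating such an entry for your own mirror registry, assuming GNU coreutils base64 on the bastion node. The function names are hypothetical helpers, not part of any OKD tool:

```shell
#!/usr/bin/env bash
# Hypothetical helper: Base64-encode "username:password" for a pullSecret auth field.
# -w0 (GNU base64) disables line wrapping so the result stays on one line.
registry_auth() {
  user="$1"; pass="$2"
  printf '%s:%s' "$user" "$pass" | base64 -w0
}

# Hypothetical helper: print a single-registry pullSecret JSON document.
# Usage: registry_auth_entry <registry-host:port> <username> <password>
registry_auth_entry() {
  printf '{"auths":{"%s":{"auth":"%s","email":""}}}\n' \
    "$1" "$(registry_auth "$2" "$3")"
}
```

For example, registry_auth_entry registry.example.com:8443 admin Harbor12345 prints {"auths":{"registry.example.com:8443":{"auth":"YWRtaW46SGFyYm9yMTIzNDU=","email":""}}}, and that registry entry can then be merged into the pullSecret alongside the other registries.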

Create the Kubernetes manifests and Ignition config files

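1. Create the installation directory and copy the configuration file into it. openshift-install consumes install-config.yaml when it generates the manifests, so keeping the original under /opt/okd-install/4.10.0/ lets you start over later.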

mkdir -p /opt/openshift/

cp /opt/okd-install/4.10.0/install-config.yaml /opt/openshift/

2. Change to the installation directory and generate the Kubernetes manifests for the cluster:

cd /opt/openshift/

openshift-install create manifests --dir=/opt/openshift

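3. Edit the manifests/cluster-scheduler-02-config.yml file and set mastersSchedulable to false so that Pods are not scheduled onto the master nodes. If you are installing a three-node cluster, skip this step so that the control plane nodes remain schedulable.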

sed -i 's/mastersSchedulable: true/mastersSchedulable: false/' /opt/openshift/manifests/cluster-scheduler-02-config.yml
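A slightly more defensive version of the sed step, sketched as a hypothetical shell function: it fails if the manifest is missing and then confirms that the file really contains mastersSchedulable: false (GNU sed -i assumed):

```shell
#!/usr/bin/env bash
# Hypothetical helper: make masters unschedulable and verify the edit.
# Defaults to the manifest path used in this article; pass another path to override.
disable_master_scheduling() {
  f="${1:-/opt/openshift/manifests/cluster-scheduler-02-config.yml}"
  if [ ! -f "$f" ]; then
    echo "not found: $f" >&2
    return 1
  fi
  sed -i 's/mastersSchedulable: true/mastersSchedulable: false/' "$f"
  # The function's exit status is that of this check.
  grep -q 'mastersSchedulable: false' "$f"
}
```

Run it once after openshift-install create manifests; a non-zero exit status means the setting was not applied.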

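4. Create the Ignition config files. The Ignition config files generated by the OKD installer contain certificates that expire after 24 hours, so finish installing the cluster before they expire; otherwise the installation fails.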

openshift-install create ignition-configs --dir=/opt/openshift
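Because the certificates embedded in the Ignition files expire after 24 hours, it can help to check how old the files are before booting nodes. A rough sketch, assuming GNU stat and date; it uses the file modification time as a stand-in for the certificate creation time, and the 20-hour warning threshold is an arbitrary assumption. Check the files in /opt/openshift rather than the nginx copies, since cp without -p resets the modification time:

```shell
#!/usr/bin/env bash
# Hypothetical helper: age of a file in whole hours (GNU stat -c %Y = mtime in epoch seconds).
ign_age_hours() {
  now=$(date +%s)
  mtime=$(stat -c %Y "$1") || return 1
  echo $(( (now - mtime) / 3600 ))
}

# Hypothetical helper: warn about *.ign files older than <limit> hours (default 20).
# Returns 1 if any file is too old, so you know to regenerate the Ignition configs.
check_ign_age() {
  dir="${1:-/opt/openshift}"; limit="${2:-20}"; rc=0
  for f in "$dir"/*.ign; do
    [ -e "$f" ] || continue   # no .ign files: nothing to check
    age=$(ign_age_hours "$f") || return 1
    if [ "$age" -ge "$limit" ]; then
      echo "WARN: $f is ${age}h old; regenerate the Ignition configs" >&2
      rc=1
    fi
  done
  return "$rc"
}
```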

Check the generated files:

root@bastion:/opt/openshift# tree .

├── auth

│ ├── kubeadmin-password

│ └── kubeconfig

├── bootstrap.ign

├── master.ign

├── metadata.json

└── worker.ign

Copy the Ignition files to the nginx directory:

cp /opt/openshift/*.ign /usr/share/nginx/html/ignition

chmod -R a+rwx /usr/share/nginx/html/ignition

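Configure oc and kubectl on the bastion node. Whenever you install a new version of oc on the bastion or generate new Ignition files, update this kubeconfig copy so that oc and kubectl keep working: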

mkdir -p /root/.kube

cp /opt/openshift/auth/kubeconfig ~/.kube/config

If the deployment fails later, clean up the following on the bastion node, repeat the steps above, and then boot the nodes again:

rm -rf /opt/openshift/*

rm -rf /opt/openshift/.openshift_install*

rm -rf /usr/share/nginx/html/ignition/*

rm -rf ~/.kube/config

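Download the Fedora CoreOS boot ISO

A UPI deployment can boot the nodes in one of two ways; this article uses method 1, manual booting:

- Method 1: boot from fedora-coreos-live.iso. This is not the officially recommended approach for production deployments.

- Method 2: PXE-based automated installation, which is the officially recommended approach for large-scale installations. It requires many reverse DNS entries plus DHCP so that the cluster can identify the nodes and set their hostnames automatically.

1. Download the Fedora CoreOS image into the nginx directory. Download page: https://getfedora.org/coreos/download/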

cd /usr/share/nginx/html/install

wget https://builds.coreos.fedoraproject.org/prod/streams/stable/builds/35.20220313.3.1/x86_64/fedora-coreos-35.20220313.3.1-metal.x86_64.raw.xz

wget https://builds.coreos.fedoraproject.org/prod/streams/stable/builds/35.20220313.3.1/x86_64/fedora-coreos-35.20220313.3.1-metal.x86_64.raw.xz.sig

mv fedora-coreos-35.20220313.3.1-metal.x86_64.raw.xz fcos.raw.xz

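mv fedora-coreos-35.20220313.3.1-metal.x86_64.raw.xz.sig fcos.raw.xz.sig

chmod -R a+rwx /usr/share/nginx/html/install

raw.xz is the CoreOS installation image and raw.xz.sig is its signature file. The signature must be served from the same HTTP directory as the image, under the image file name with .sig appended (here fcos.raw.xz.sig), because that is where the installer looks for it.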
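Before booting any node, you can confirm that everything the nodes fetch is reachable over HTTP. A minimal sketch, assuming the nginx base URL http://192.168.72.20:8088 used in the kernel parameters of this article and the signature served as fcos.raw.xz.sig; asset_urls and check_assets are hypothetical names:

```shell
#!/usr/bin/env bash
# Hypothetical helper: print every URL the nodes download during installation.
asset_urls() {
  base="${1:-http://192.168.72.20:8088}"
  printf '%s\n' \
    "$base/install/fcos.raw.xz" \
    "$base/install/fcos.raw.xz.sig" \
    "$base/ignition/bootstrap.ign" \
    "$base/ignition/master.ign" \
    "$base/ignition/worker.ign"
}

# Hypothetical helper: probe each URL; returns 1 if any of them fails.
check_assets() {
  rc=0
  for url in $(asset_urls "$1"); do
    # -f: treat HTTP errors such as 404 as failures; -I: send a HEAD request only.
    if curl -fsSI -o /dev/null "$url"; then
      echo "OK   $url"
    else
      echo "FAIL $url"
      rc=1
    fi
  done
  return "$rc"
}
```

Running check_assets on the bastion should print OK for all five files; a FAIL on the .sig line usually means the signature file name does not match the image name plus .sig.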

2. Download the Fedora CoreOS live ISO:

wget https://builds.coreos.fedoraproject.org/prod/streams/stable/builds/35.20220313.3.1/x86_64/fedora-coreos-35.20220313.3.1-live.x86_64.iso

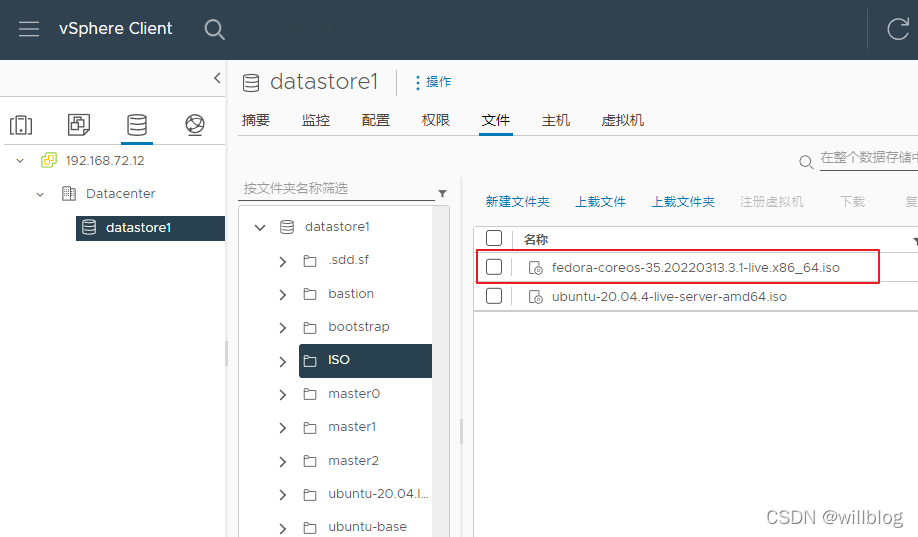

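Write the Fedora CoreOS ISO to a bootable USB drive, or attach it to the virtual machine's DVD drive as a boot device. This deployment runs on VMware vSphere, so upload the downloaded ISO to a datastore: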

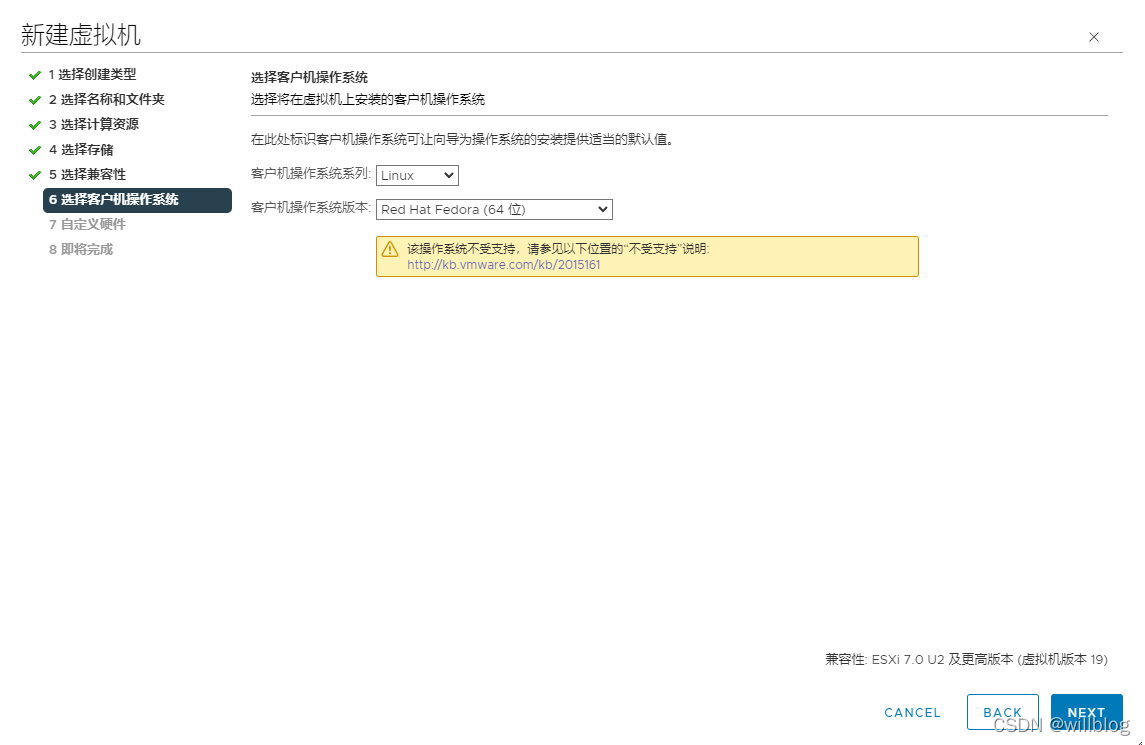

3、开始创建虚拟机,需要1个bootstrap节点,3个master节点及2个worker节点,以bootstrap节点为例,选择客户机操作系统类型为Fedora

4、配置虚拟光驱挂载iso镜像为fedora-coreos,所有节点创建完成后暂不需要启动

引导boostrap节点

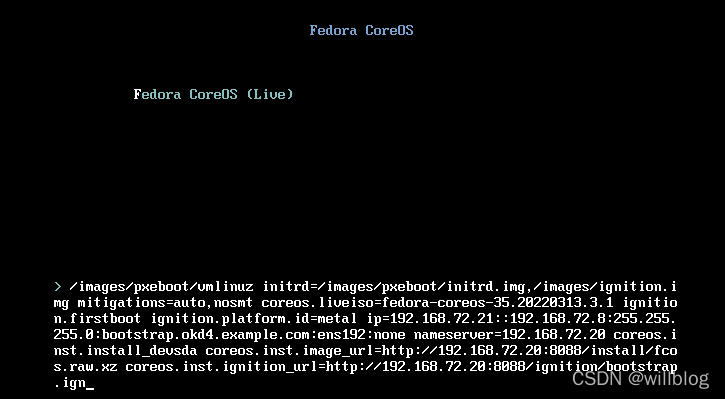

开始启动boostrap节点,看到启动画面后快速按下 tab 键,进入 Kernel 参数配置页面,填写引导信息。

备注:vmware workstation支持对接vcenter并管理其中的虚拟机,vmware workstation编辑选项中支持粘贴文本功能,可以省略手动输入的繁琐操作。

ip=192.168.72.21::192.168.72.8:255.255.255.0:bootstrap.okd4.example.com:ens192:none nameserver=192.168.72.20 coreos.inst.install_dev=/dev/sda coreos.inst.image_url=http://192.168.72.20:8088/install/fcos.raw.xz coreos.inst.ignition_url=http://192.168.72.20:8088/ignition/bootstrap.ign

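Parameters:

- ip=<node IP>::<gateway>:<netmask>:<node hostname>:<NIC name>:none (static configuration, no DHCP)

- nameserver=<DNS server address>

- coreos.inst.install_dev=/dev/sda (the disk to install Fedora CoreOS onto)

- coreos.inst.image_url=<HTTP URL of fcos.raw.xz>

- coreos.inst.ignition_url=<HTTP URL of bootstrap.ign>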
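Typing these parameters for six nodes is error-prone. A sketch that prints the parameter line for one node from its IP, short hostname, and role. The defaults come from this article's lab network (gateway 192.168.72.8, DNS and nginx on 192.168.72.20, NIC ens192, disk /dev/sda); fcos_kargs and the OKD_* environment-variable overrides are hypothetical:

```shell
#!/usr/bin/env bash
# Lab defaults from this article; override via environment variables (hypothetical names).
OKD_GATEWAY="${OKD_GATEWAY:-192.168.72.8}"
OKD_NETMASK="${OKD_NETMASK:-255.255.255.0}"
OKD_NIC="${OKD_NIC:-ens192}"
OKD_DNS="${OKD_DNS:-192.168.72.20}"
OKD_HTTP="${OKD_HTTP:-http://192.168.72.20:8088}"
OKD_DOMAIN="${OKD_DOMAIN:-okd4.example.com}"
OKD_DISK="${OKD_DISK:-/dev/sda}"

# Usage: fcos_kargs <ip> <short-hostname> <bootstrap|master|worker>
# Prints: ip=<IP>::<gateway>:<netmask>:<FQDN>:<NIC>:none nameserver=... coreos.inst.*=...
fcos_kargs() {
  ip="$1"; host="$2"; role="$3"
  case "$role" in
    bootstrap|master|worker) ;;
    *) echo "unknown role: $role" >&2; return 1 ;;
  esac
  printf 'ip=%s::%s:%s:%s.%s:%s:none nameserver=%s coreos.inst.install_dev=%s coreos.inst.image_url=%s/install/fcos.raw.xz coreos.inst.ignition_url=%s/ignition/%s.ign\n' \
    "$ip" "$OKD_GATEWAY" "$OKD_NETMASK" "$host" "$OKD_DOMAIN" "$OKD_NIC" \
    "$OKD_DNS" "$OKD_DISK" "$OKD_HTTP" "$OKD_HTTP" "$role"
}
```

For example, fcos_kargs 192.168.72.21 bootstrap bootstrap reproduces the bootstrap line above, and for i in 0 1 2; do fcos_kargs "192.168.72.$((22 + i))" "master$i" master; done prints the three master lines used later; the output can be pasted through VMware Workstation's paste-text feature.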

Double-check the parameters, then press Enter to boot the bootstrap node.

Watch the bootstrap progress from the bastion node:

root@bastion:~# openshift-install --dir=/opt/openshift wait-for bootstrap-complete --log-level=debug

DEBUG OpenShift Installer 4.10.0-0.okd-2022-03-07-131213

......

SSH from the bastion node to the bootstrap node:

root@bastion:~# ssh -i ~/.ssh/id_rsa [email protected]

[core@bootstrap ~]$ sudo -i

View the logs of the bootstrap services:

[root@bootstrap ~]# journalctl -b -f -u release-image.service -u bootkube.service

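After a while, the following containers should be up and in the Running state. This means the bootstrap node is ready, and you can move on to booting the master nodes: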

[root@bootstrap ~]# crictl ps

CONTAINER IMAGE CREATED STATE NAME ATTEMPT POD ID

6d6934f426cea 57cb23b4dd54b86edec76c373b275d336d22752d2269d438bd96fbb1676641bc 10 minutes ago Running kube-controller-manager 2 d6574a4a417e4

e2bd2c3e23c54 57cb23b4dd54b86edec76c373b275d336d22752d2269d438bd96fbb1676641bc 10 minutes ago Running kube-scheduler 2 da8455e61b32d

92f06a438fc25 2dcba596e247eb8940ba59e48dd079facb3a17beae00b3a7b1b75acb1c782048 10 minutes ago Running kube-apiserver-insecure-readyz 18 d47dd0ff6e586

b066e0b17df23 57cb23b4dd54b86edec76c373b275d336d22752d2269d438bd96fbb1676641bc 10 minutes ago Running kube-apiserver 18 d47dd0ff6e586

7ab95cc1d0ed8 quay.io/openshift/okd@sha256:2eee0db9818e22deb4fa99737eb87d6e9afcf68b4e455f42bdc3424c0b0d0896 11 minutes ago Running cluster-version-operator 1 a63ac0c9a1e72

f4443fc71a580 ae99f186ae09868748a605e0e5cc1bee0daf931a4b072aafd55faa4bc0d918df 11 minutes ago Running cluster-policy-controller 1 d6574a4a417e4

75dfba68b1c74 194a3e4cff36cd53d443e209ca379da2017766e6c8d676ead8e232c4361a41ed 11 minutes ago Running cloud-credential-operator 1 03305d772455d

ea634f3723b20 f4c2fcf0b6e255c7b96298ca39b3c08f60d3fef095a1b88ffaa9495b8b301f13 6 hours ago Running machine-config-server 0 1dbe3ff66be8a

ad902a32559ec 4e1485364a88b0d4dab5949b0330936aa9863fe5f7aa77917e85f72be6cea3ad 6 hours ago Running etcd 0 af73d75d8da46

6cce15d64f5fb 4e1485364a88b0d4dab5949b0330936aa9863fe5f7aa77917e85f72be6cea3ad 6 hours ago Running etcdctl 0 af73d75d8da46
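Instead of eyeballing the crictl output, a small filter can report which expected containers are not yet running. A sketch, assuming the container names shown in the sample output above (this list is an illustration, not an official readiness check); missing_containers is a hypothetical name:

```shell
#!/usr/bin/env bash
# Containers taken from the sample `crictl ps` output in this article (assumption).
EXPECTED_CONTAINERS="etcd kube-apiserver kube-controller-manager kube-scheduler cluster-version-operator machine-config-server"

# Read `crictl ps` output on stdin and print each expected container that is not Running.
missing_containers() {
  # The CREATED column ("10 minutes ago") contains spaces, so instead of a fixed
  # column index, find the "Running" field and take the NAME field right after it.
  running=$(awk '{ for (i = 1; i < NF; i++) if ($i == "Running") { print $(i + 1); break } }')
  for name in $EXPECTED_CONTAINERS; do
    printf '%s\n' "$running" | grep -qx -- "$name" || echo "$name"
  done
}
```

Run it as root on the bootstrap node with crictl ps | missing_containers; empty output means every listed container is Running.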

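Boot the master nodes

Boot the three master nodes from fedora-coreos-live.iso in the same way, press Tab to open the kernel parameter editor, and enter the following. Note that ignition_url now points to the master.ign Ignition file:

master0 boot parameters: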

ip=192.168.72.22::192.168.72.8:255.255.255.0:master0.okd4.example.com:ens192:none nameserver=192.168.72.20 coreos.inst.install_dev=/dev/sda coreos.inst.image_url=http://192.168.72.20:8088/install/fcos.raw.xz coreos.inst.ignition_url=http://192.168.72.20:8088/ignition/master.ign

master1 boot parameters:

ip=192.168.72.23::192.168.72.8:255.255.255.0:master1.okd4.example.com:ens192:none nameserver=192.168.72.20 coreos.inst.install_dev=/dev/sda coreos.inst.image_url=http://192.168.72.20:8088/install/fcos.raw.xz coreos.inst.ignition_url=http://192.168.72.20:8088/ignition/master.ign

master2 boot parameters:

ip=192.168.72.24::192.168.72.8:255.255.255.0:master2.okd4.example.com:ens192:none nameserver=192.168.72.20 coreos.inst.install_dev=/dev/sda coreos.inst.image_url=http://192.168.72.20:8088/install/fcos.raw.xz coreos.inst.ignition_url=http://192.168.72.20:8088/ignition/master.ign

Check the bootstrap log again:

root@bastion:~# openshift-install --dir=/opt/openshift wait-for bootstrap-complete --log-level=debug

DEBUG OpenShift Installer 4.10.0-0.okd-2022-03-07-131213

DEBUG Built from commit 3b701903d96b6375f6c3852a02b4b70fea01d694

INFO Waiting up to 20m0s (until 1:08PM) for the Kubernetes API at https://api.okd4.example.com:6443...

INFO API v1.23.3-2003+e419edff267ffa-dirty up

INFO Waiting up to 30m0s (until 1:18PM) for bootstrapping to complete...

DEBUG Bootstrap status: complete

INFO It is now safe to remove the bootstrap resources

INFO Time elapsed: 0s

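Log in to the bootstrap node and view the bootstrap service log. Once the bootstrap node has finished bringing up the masters, the installer reports that the bootstrap resources can be removed safely. The bootstrap node is no longer needed after this point; the next stage is to add the worker nodes manually.

[root@bootstrap ~]# journalctl -b -f -u bootkube.service

......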

Apr 05 04:41:26 bootstrap.okd4.example.com wait-for-ceo[10441]: I0405 04:41:26.409405 1 waitforceo.go:64] Cluster etcd operator bootstrapped successfully

Apr 05 04:41:26 bootstrap.okd4.example.com bootkube.sh[10366]: I0405 04:41:26.409405 1 waitforceo.go:64] Cluster etcd operator bootstrapped successfully

Apr 05 04:41:26 bootstrap.okd4.example.com wait-for-ceo[10441]: I0405 04:41:26.410461 1 waitforceo.go:58] cluster-etcd-operator bootstrap etcd

Apr 05 04:41:26 bootstrap.okd4.example.com bootkube.sh[10366]: I0405 04:41:26.410461 1 waitforceo.go:58] cluster-etcd-operator bootstrap etcd

Apr 05 04:41:26 bootstrap.okd4.example.com podman[10366]: 2022-04-05 04:41:26.442815213 +0000 UTC m=+0.510491821 container died 6efbafe4f46b53e24cb6f387606d48e61ec2799ecff31a9ff7d2237590be4299 (image=quay.io/openshift/okd-content@sha256:95b40765f68115a467555be8dfe7a59242883c1d6f1430dfbbf9f7cf0d4a464c, name=wait-for-ceo)

Apr 05 04:41:26 bootstrap.okd4.example.com bootkube.sh[6969]: bootkube.service complete

Apr 05 04:41:26 bootstrap.okd4.example.com podman[10453]: 2022-04-05 04:41:26.541427752 +0000 UTC m=+0.111583623 container cleanup 6efbafe4f46b53e24cb6f387606d48e61ec2799ecff31a9ff7d2237590be4299 (image=quay.io/openshift/okd-content@sha256:95b40765f68115a467555be8dfe7a59242883c1d6f1430dfbbf9f7cf0d4a464c, name=wait-for-ceo, vcs-ref=9619a078840a25e131ac0dcee19fc3602e47e271, io.openshift.build.commit.ref=release-4.10, io.k8s.description=ART equivalent image openshift-4.10-openshift-enterprise-base - rhel-8/base-repos, io.openshift.build.namespace=, io.openshift.build.commit.author=, url=https://access.redhat.com/containers/#/registry.access.redhat.com/openshift/ose-base/images/v4.10.0-202202160023.p0.g544601e.assembly.stream, vcs-type=git, io.k8s.display-name=4.10-base, io.openshift.release.operator=true, io.openshift.build.commit.url=https://github.com/openshift/images/commit/544601e82413bc549bfe2eb8b54a7ff9f8c7c42e, io.openshift.maintainer.component=Release, description=This is the base image from which all OpenShift Container Platform images inherit., vendor=Red Hat, Inc., architecture=x86_64, io.openshift.build.commit.message=, io.openshift.expose-services=, io.openshift.build.commit.id=9619a078840a25e131ac0dcee19fc3602e47e271, name=openshift/ose-base, License=GPLv2+, io.openshift.tags=openshift,base, summary=Provides the latest release of Red Hat Universal Base Image 8., build-date=2022-02-16T05:12:16.796162, com.redhat.license_terms=https://www.redhat.com/agreements, io.openshift.build.name=, io.buildah.version=1.22.3, com.redhat.build-host=cpt-1006.osbs.prod.upshift.rdu2.redhat.com, release=202202160023.p0.g544601e.assembly.stream, maintainer=Red Hat, Inc., io.openshift.build.source-context-dir=, io.openshift.maintainer.product=OpenShift Container Platform, version=v4.10.0, io.openshift.ci.from.base=sha256:cf46506104eadcdfd9cb7f7113840fca2a52f4b97c5085048cb118dfc611a594, distribution-scope=public, io.openshift.build.commit.date=, 
vcs-url=https://github.com/openshift/cluster-etcd-operator, com.redhat.component=openshift-enterprise-base-container, io.openshift.build.source-location=https://github.com/openshift/cluster-etcd-operator)

Apr 05 04:41:26 bootstrap.okd4.example.com systemd[1]: bootkube.service: Deactivated successfully.

Apr 05 04:41:26 bootstrap.okd4.example.com systemd[1]: bootkube.service: Consumed 6.508s CPU time.

On the bastion node, check that all master nodes have started:

root@bastion:~# oc get nodes

NAME STATUS ROLES AGE VERSION

master0.okd4.example.com Ready master 18m v1.23.3+759c22b

master1.okd4.example.com Ready master 16m v1.23.3+759c22b

master2.okd4.example.com Ready master 10m v1.23.3+759c22b
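To avoid re-running oc get nodes by hand, a sketch that counts Ready nodes (optionally filtered by role) and polls until the expected number appears. It assumes the default column layout NAME STATUS ROLES AGE VERSION and counts only an exact Ready status (so Ready,SchedulingDisabled is not counted); the 30-second interval and both function names are hypothetical:

```shell
#!/usr/bin/env bash
# Count nodes whose STATUS is exactly "Ready" in `oc get nodes --no-headers` output (stdin).
# Optional $1: role substring to match in the ROLES column, e.g. master or worker.
count_ready_nodes() {
  awk -v role="${1:-}" '$2 == "Ready" && (role == "" || index($3, role) > 0) { n++ } END { print n + 0 }'
}

# Poll until at least <want> Ready nodes with <role> exist; needs a working kubeconfig.
# Usage: wait_for_nodes <want> [role] [tries]
wait_for_nodes() {
  want="$1"; role="$2"; tries="${3:-60}"
  while [ "$tries" -gt 0 ]; do
    have=$(oc get nodes --no-headers 2>/dev/null | count_ready_nodes "$role")
    [ "$have" -ge "$want" ] && return 0
    sleep 30
    tries=$((tries - 1))
  done
  return 1
}
```

For example, wait_for_nodes 3 master returns once all three masters are Ready, or fails after about 30 minutes.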

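Boot the worker nodes

Once the master nodes are ready, boot the two worker nodes from fedora-coreos-live.iso, press Tab to open the kernel parameter editor, and enter the following. Note that ignition_url now points to the worker.ign Ignition file:

worker0 boot parameters: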

ip=192.168.72.25::192.168.72.8:255.255.255.0:worker0.okd4.example.com:ens192:none nameserver=192.168.72.20 coreos.inst.install_dev=/dev/sda coreos.inst.image_url=http://192.168.72.20:8088/install/fcos.raw.xz coreos.inst.ignition_url=http://192.168.72.20:8088/ignition/worker.ign

worker1 boot parameters:

ip=192.168.72.26::192.168.72.8:255.255.255.0:worker1.okd4.example.com:ens192:none nameserver=192.168.72.20 coreos.inst.install_dev=/dev/sda coreos.inst.image_url=http://192.168.72.20:8088/install/fcos.raw.xz coreos.inst.ignition_url=http://192.168.72.20:8088/ignition/worker.ign

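Watch the VM console until the workers have fully booted.

Approve the certificate signing requests for the machines

When you add machines to the cluster, two pending certificate signing requests (CSRs) are generated for each machine you add. You must confirm that these CSRs are approved or, if necessary, approve them yourself. The client requests must be approved first, followed by the server requests.

Until some of the CSRs are approved, the output may not include the compute nodes, also known as worker nodes: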

root@bastion:~# oc get nodes

NAME STATUS ROLES AGE VERSION

master0.okd4.example.com Ready master,worker 33m v1.23.3+759c22b

master1.okd4.example.com Ready master,worker 32m v1.23.3+759c22b

master2.okd4.example.com Ready master,worker 3m19s v1.23.3+759c22b

Review the pending CSRs and make sure that the client request of each machine you added to the cluster is in Pending or Approved status:

root@bastion:~# oc get csr

NAME AGE SIGNERNAME REQUESTOR REQUESTEDDURATION CONDITION

csr-5mklm 33m kubernetes.io/kube-apiserver-client-kubelet system:serviceaccount:openshift-machine-config-operator:node-bootstrapper <none> Approved,Issued

csr-9dh5q 33m kubernetes.io/kube-apiserver-client-kubelet system:serviceaccount:openshift-machine-config-operator:node-bootstrapper <none> Approved,Issued

csr-bdmjm 28m kubernetes.io/kube-apiserver-client-kubelet system:serviceaccount:openshift-machine-config-operator:node-bootstrapper <none> Approved,Issued

csr-bsdwv 35m kubernetes.io/kubelet-serving system:node:master0.okd4.example.com <none> Approved,Issued

csr-ddgpl 113s kubernetes.io/kube-apiserver-client-kubelet system:serviceaccount:openshift-machine-config-operator:node-bootstrapper <none> Pending

csr-ld97n 36m kubernetes.io/kube-apiserver-client-kubelet system:serviceaccount:openshift-machine-config-operator:node-bootstrapper <none> Approved,Issued

csr-nbqqm 27m kubernetes.io/kubelet-serving system:node:master2.okd4.example.com <none> Approved,Issued

csr-nrd89 2m32s kubernetes.io/kube-apiserver-client-kubelet system:serviceaccount:openshift-machine-config-operator:node-bootstrapper <none> Pending

csr-qnv96 33m kubernetes.io/kubelet-serving system:node:master1.okd4.example.com <none> Approved,Issued

csr-slqcv 97s kubernetes.io/kube-apiserver-client-kubelet system:serviceaccount:openshift-machine-config-operator:node-bootstrapper <none> Pending

csr-tccqf 36m kubernetes.io/kube-apiserver-client-kubelet system:serviceaccount:openshift-machine-config-operator:node-bootstrapper <none> Approved,Issued

csr-vp8j5 28m kubernetes.io/kube-apiserver-client-kubelet system:serviceaccount:openshift-machine-config-operator:node-bootstrapper <none> Approved,Issued

csr-wbhdk 2m16s kubernetes.io/kube-apiserver-client-kubelet system:serviceaccount:openshift-machine-config-operator:node-bootstrapper <none> Pending

system:openshift:openshift-authenticator-48cmp 27m kubernetes.io/kube-apiserver-client system:serviceaccount:openshift-authentication-operator:authentication-operator <none> Approved,Issued

system:openshift:openshift-monitoring-x6l9v 24m kubernetes.io/kube-apiserver-client system:serviceaccount:openshift-monitoring:cluster-monitoring-operator <none> Approved,Issued

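In this example, two machines are joining the cluster, and you may see more approved CSRs in the list. If the CSRs have not been approved, approve them once all pending CSRs for the machines you added are in Pending status:

oc get csr -o go-template='{{range .items}}{{if not .status}}{{.metadata.name}}{{"\n"}}{{end}}{{end}}'|xargs --no-run-if-empty oc adm certificate approve

Output: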

certificatesigningrequest.certificates.k8s.io/csr-ddgpl approved

certificatesigningrequest.certificates.k8s.io/csr-nrd89 approved

certificatesigningrequest.certificates.k8s.io/csr-slqcv approved

certificatesigningrequest.certificates.k8s.io/csr-wbhdk approved
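The go-template above prints the names of CSRs whose status is still empty, that is, neither approved nor denied. Because the server (kubelet-serving) CSRs only appear after the client CSRs are approved, approval usually has to be repeated. A sketch of a table-based alternative that approves in several rounds, assuming the CONDITION column is the last field of oc get csr --no-headers; the round count, interval, and function names are hypothetical:

```shell
#!/usr/bin/env bash
# Print the names of CSRs whose CONDITION (last column) is Pending,
# reading `oc get csr --no-headers` output on stdin.
pending_csrs() {
  awk '$NF == "Pending" { print $1 }'
}

# Approve pending CSRs in several rounds, sleeping 30s between rounds, so that
# server CSRs created after the client approvals are picked up as well.
approve_pending_csrs() {
  rounds="${1:-10}"
  while [ "$rounds" -gt 0 ]; do
    names=$(oc get csr --no-headers | pending_csrs)
    if [ -n "$names" ]; then
      # Unquoted on purpose: one argument per CSR name.
      oc adm certificate approve $names
    fi
    sleep 30
    rounds=$((rounds - 1))
  done
}
```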

Check the node status. After a short wait, the two new worker nodes are Ready:

root@bastion:~# oc get nodes

NAME STATUS ROLES AGE VERSION

master0.okd4.example.com Ready master 40m v1.23.3+759c22b

master1.okd4.example.com Ready master 37m v1.23.3+759c22b

master2.okd4.example.com Ready master 31m v1.23.3+759c22b

worker0.okd4.example.com Ready worker 2m57s v1.23.3+759c22b

worker1.okd4.example.com Ready worker 3m v1.23.3+759c22b

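At this point the server CSRs of the two worker nodes are in Pending status. Run the following command again to approve all pending CSRs:

oc get csr -o go-template='{{range .items}}{{if not .status}}{{.metadata.name}}{{"\n"}}{{end}}{{end}}'|xargs --no-run-if-empty oc adm certificate approve

Confirm that all client and server CSRs have been approved: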

root@bastion:~# oc get csr

NAME AGE SIGNERNAME REQUESTOR REQUESTEDDURATION CONDITION

csr-5mklm 39m kubernetes.io/kube-apiserver-client-kubelet system:serviceaccount:openshift-machine-config-operator:node-bootstrapper <none> Approved,Issued

csr-9dh5q 39m kubernetes.io/kube-apiserver-client-kubelet system:serviceaccount:openshift-machine-config-operator:node-bootstrapper <none> Approved,Issued

csr-bdmjm 34m kubernetes.io/kube-apiserver-client-kubelet system:serviceaccount:openshift-machine-config-operator:node-bootstrapper <none> Approved,Issued

csr-bsdwv 41m kubernetes.io/kubelet-serving system:node:master0.okd4.example.com <none> Approved,Issued

csr-c6kjg 4m50s kubernetes.io/kubelet-serving system:node:worker1.okd4.example.com <none> Approved,Issued

csr-ddgpl 7m51s kubernetes.io/kube-apiserver-client-kubelet system:serviceaccount:openshift-machine-config-operator:node-bootstrapper <none> Approved,Issued

csr-hxjck 4m47s kubernetes.io/kubelet-serving system:node:worker0.okd4.example.com <none> Approved,Issued

csr-ld97n 42m kubernetes.io/kube-apiserver-client-kubelet system:serviceaccount:openshift-machine-config-operator:node-bootstrapper <none> Approved,Issued

csr-nbqqm 33m kubernetes.io/kubelet-serving system:node:master2.okd4.example.com <none> Approved,Issued

csr-nrd89 8m30s kubernetes.io/kube-apiserver-client-kubelet system:serviceaccount:openshift-machine-config-operator:node-bootstrapper <none> Approved,Issued

csr-qnv96 39m kubernetes.io/kubelet-serving system:node:master1.okd4.example.com <none> Approved,Issued

csr-slqcv 7m35s kubernetes.io/kube-apiserver-client-kubelet system:serviceaccount:openshift-machine-config-operator:node-bootstrapper <none> Approved,Issued

csr-tccqf 42m kubernetes.io/kube-apiserver-client-kubelet system:serviceaccount:openshift-machine-config-operator:node-bootstrapper <none> Approved,Issued

csr-vp8j5 34m kubernetes.io/kube-apiserver-client-kubelet system:serviceaccount:openshift-machine-config-operator:node-bootstrapper <none> Approved,Issued

csr-wbhdk 8m14s kubernetes.io/kube-apiserver-client-kubelet system:serviceaccount:openshift-machine-config-operator:node-bootstrapper <none> Approved,Issued

system:openshift:openshift-authenticator-48cmp 33m kubernetes.io/kube-apiserver-client system:serviceaccount:openshift-authentication-operator:authentication-operator <none> Approved,Issued

system:openshift:openshift-monitoring-x6l9v 30m kubernetes.io/kube-apiserver-client system:serviceaccount:openshift-monitoring:cluster-monitoring-operator <none> Approved,Issued

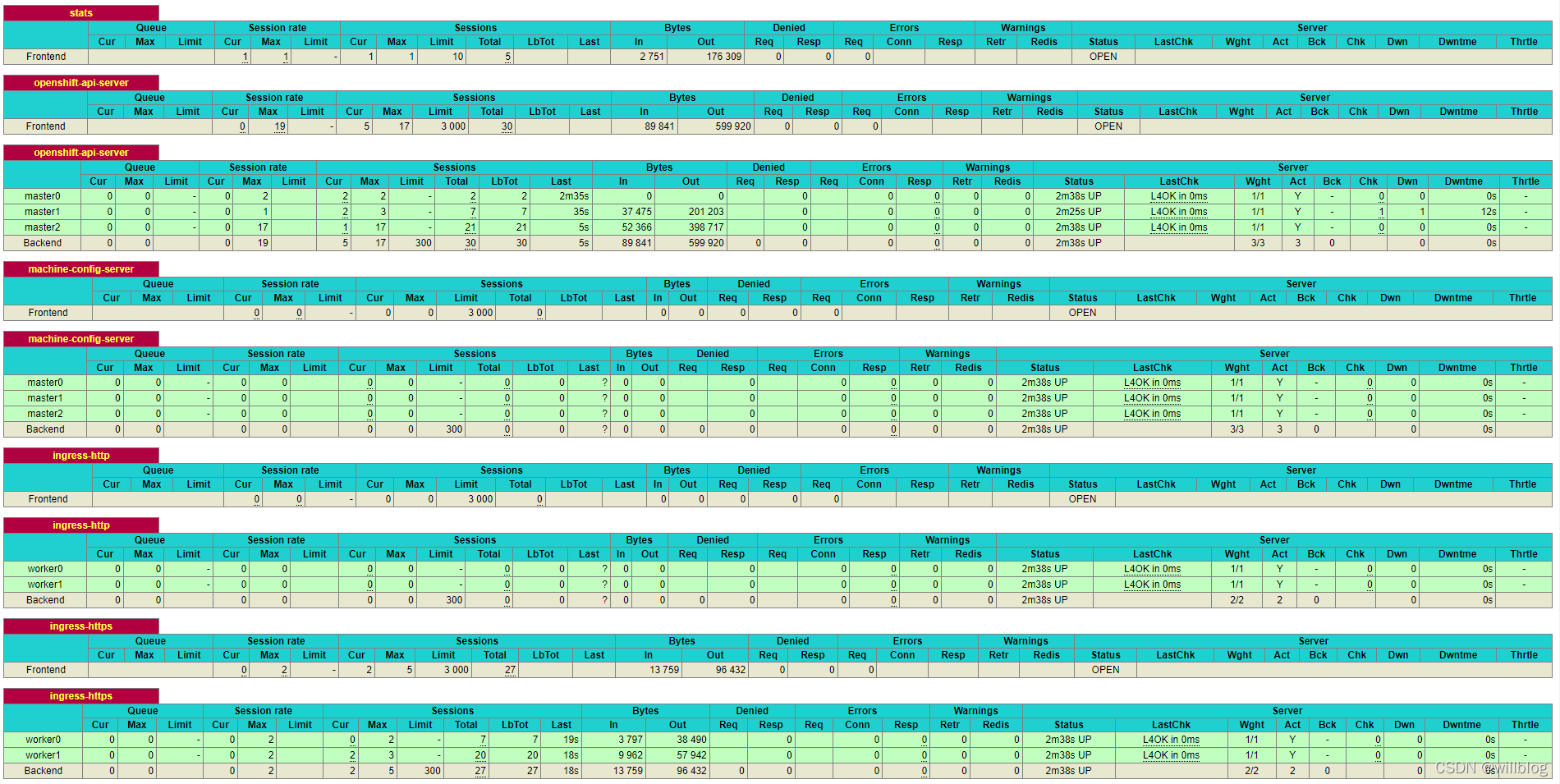

Clean up the HAProxy configuration

The bootstrap node can now be removed. Clean up the HAProxy configuration by deleting the following two lines:

root@bastion:~# cat /etc/haproxy/haproxy.cfg |grep bootstrap

server bootstrap 192.168.72.21:6443 check

server bootstrap 192.168.72.21:22623 check

Reload the configuration:

docker kill -s HUP haproxy
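Editing haproxy.cfg by hand works fine; a sketch that does the same with sed, keeps a backup, and fails if any bootstrap server line remains. It assumes the server lines look like the two shown above (GNU sed and grep), and the function name is hypothetical. Before sending HUP, you may also want to validate the file with haproxy -c -f <cfg>, run inside the container when HAProxy runs in Docker as it does here:

```shell
#!/usr/bin/env bash
# Hypothetical helper: delete "server bootstrap ..." lines from haproxy.cfg.
# A copy of the original is kept as <cfg>.bak.
drop_bootstrap_servers() {
  cfg="${1:-/etc/haproxy/haproxy.cfg}"
  cp -p "$cfg" "$cfg.bak" || return 1
  sed -i '/^[[:space:]]*server[[:space:]]\+bootstrap[[:space:]]/d' "$cfg"
  # Succeed only if no bootstrap server line is left.
  ! grep -q 'server[[:space:]]\+bootstrap[[:space:]]' "$cfg"
}
```

After it succeeds, reload HAProxy with docker kill -s HUP haproxy as above.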

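Log in to the HAProxy stats page; the default username and password are set in the haproxy.cfg configuration file:

http://192.168.72.20:1936/stats

Check the health status of all load-balanced ports: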

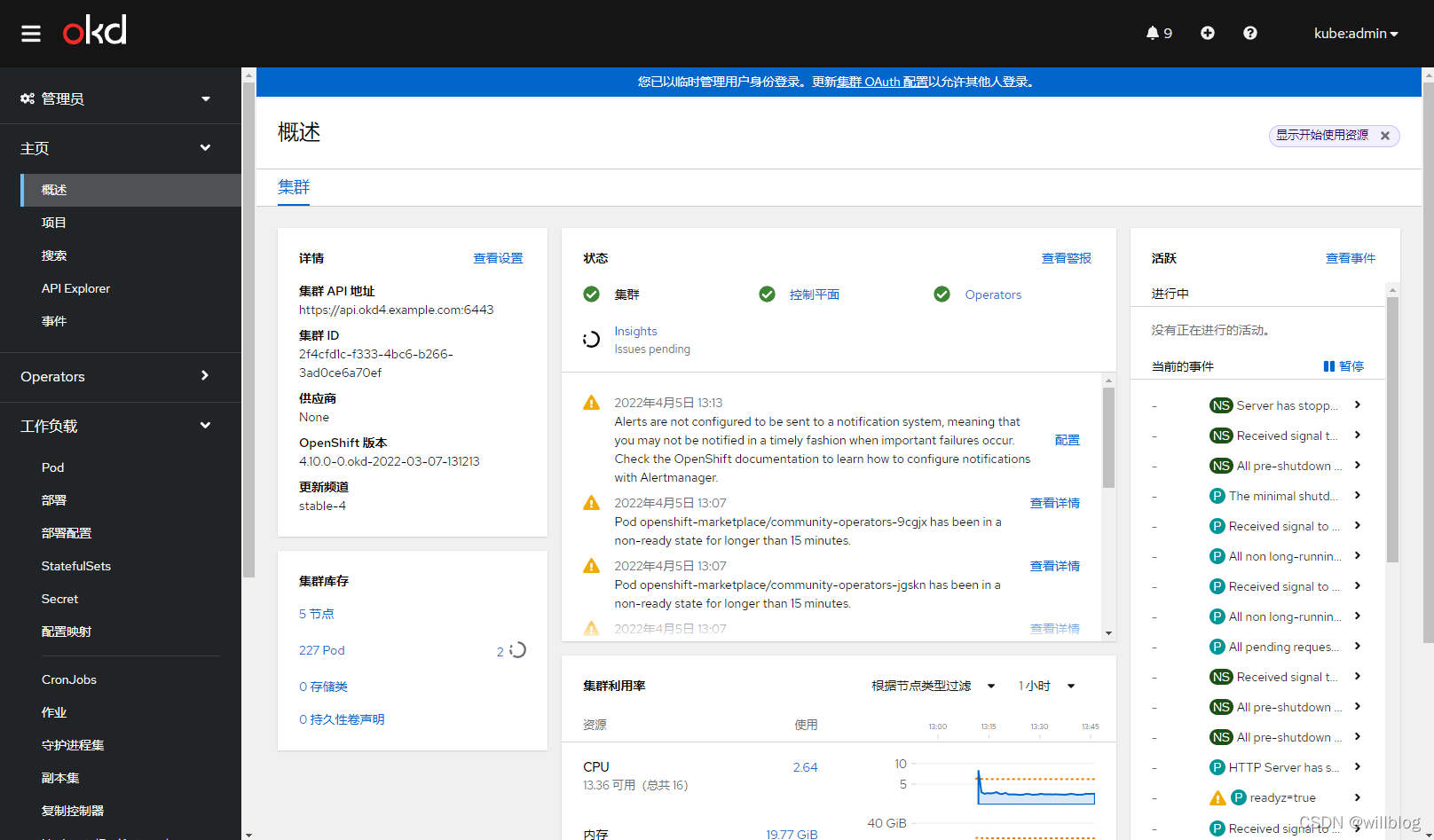

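Check the Operator status

After the control plane initializes, you must immediately configure some Operators so that they all become available. List the Operators running in the cluster. The output includes each Operator's version, availability, and uptime; confirm that the AVAILABLE column is True for all of them: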

root@bastion:~# oc get clusteroperators

NAME VERSION AVAILABLE PROGRESSING DEGRADED SINCE MESSAGE

authentication 4.10.0-0.okd-2022-03-07-131213 True False False 26m

baremetal 4.10.0-0.okd-2022-03-07-131213 True False False 63m

cloud-controller-manager 4.10.0-0.okd-2022-03-07-131213 True False False 74m

cloud-credential 4.10.0-0.okd-2022-03-07-131213 True False False 86m

cluster-autoscaler 4.10.0-0.okd-2022-03-07-131213 True False False 63m

config-operator 4.10.0-0.okd-2022-03-07-131213 True False False 67m

console 4.10.0-0.okd-2022-03-07-131213 True False False 31m

csi-snapshot-controller 4.10.0-0.okd-2022-03-07-131213 True False False 65m

dns 4.10.0-0.okd-2022-03-07-131213 True False False 63m

etcd 4.10.0-0.okd-2022-03-07-131213 True False False 64m

image-registry 4.10.0-0.okd-2022-03-07-131213 True False False 57m

ingress 4.10.0-0.okd-2022-03-07-131213 True False False 35m

insights 4.10.0-0.okd-2022-03-07-131213 True False False 58m

kube-apiserver 4.10.0-0.okd-2022-03-07-131213 True False False 61m

kube-controller-manager 4.10.0-0.okd-2022-03-07-131213 True False False 64m

kube-scheduler 4.10.0-0.okd-2022-03-07-131213 True False False 61m

kube-storage-version-migrator 4.10.0-0.okd-2022-03-07-131213 True False False 58m

machine-api 4.10.0-0.okd-2022-03-07-131213 True False False 63m

machine-approver 4.10.0-0.okd-2022-03-07-131213 True False False 66m

machine-config 4.10.0-0.okd-2022-03-07-131213 True False False 63m

marketplace 4.10.0-0.okd-2022-03-07-131213 True False False 63m

monitoring 4.10.0-0.okd-2022-03-07-131213 True False False 29m

network 4.10.0-0.okd-2022-03-07-131213 True False False 64m

node-tuning 4.10.0-0.okd-2022-03-07-131213 True False False 63m

openshift-apiserver 4.10.0-0.okd-2022-03-07-131213 True False False 58m

openshift-controller-manager 4.10.0-0.okd-2022-03-07-131213 True False False 63m

openshift-samples 4.10.0-0.okd-2022-03-07-131213 True False False 53m

operator-lifecycle-manager 4.10.0-0.okd-2022-03-07-131213 True False False 64m

operator-lifecycle-manager-catalog 4.10.0-0.okd-2022-03-07-131213 True False False 63m

operator-lifecycle-manager-packageserver 4.10.0-0.okd-2022-03-07-131213 True False False 58m

service-ca 4.10.0-0.okd-2022-03-07-131213 True False False 67m

storage 4.10.0-0.okd-2022-03-07-131213 True False False 67m
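With more than thirty Operators, a filter that prints only the problem rows is easier to read. A sketch that reads oc get clusteroperators --no-headers and prints every Operator whose AVAILABLE is not True or whose DEGRADED is not False. It assumes the VERSION column is populated (early in the installation it can be empty, which shifts the columns); the function name is hypothetical:

```shell
#!/usr/bin/env bash
# Columns: NAME VERSION AVAILABLE PROGRESSING DEGRADED SINCE [MESSAGE...]
# Print the NAME of each Operator that is not Available or is Degraded.
unhealthy_operators() {
  awk '$3 != "True" || $5 != "False" { print $1 }'
}
```

Use it as oc get clusteroperators --no-headers | unhealthy_operators; empty output means every Operator is available and none is degraded. As an alternative, oc wait clusteroperators --all --for=condition=Available=True --timeout=30m should block until every Operator reports Available.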

Check the status of all Pods and confirm that each one is either Running or Completed:

root@bastion:~# oc get pods -A

NAMESPACE NAME READY STATUS RESTARTS AGE

demo example-tomcat-548b6678f9-lvbsd 1/1 Running 0 3h34m

openshift-apiserver-operator openshift-apiserver-operator-fbbcfffdb-txthk 1/1 Running 1(12h ago) 12h

openshift-apiserver apiserver-76666f747-b699f 2/2 Running 0 12h

openshift-apiserver apiserver-76666f747-f2k79 2/2 Running 0 12h

openshift-apiserver apiserver-76666f747-jc2j9 2/2 Running 0 12h

openshift-authentication-operator authentication-operator-56585c6d7f-zk4vx 1/1 Running 1(12h ago) 12h

openshift-authentication oauth-openshift-69b4944495-d5g5m 1/1 Running 0 12h

openshift-authentication oauth-openshift-69b4944495-qt9dd 1/1 Running 0 12h

openshift-authentication oauth-openshift-69b4944495-zhqmw 1/1 Running 0 12h

openshift-cloud-controller-manager-operator cluster-cloud-controller-manager-operator-b6ccd5ff4-nm6cz 2/2 Running 2(12h ago) 12h

openshift-cloud-credential-operator cloud-credential-operator-66699d5d4c-p8rfk 2/2 Running 0 12h

openshift-cluster-machine-approver machine-approver-7f6ff596f8-rn82z 2/2 Running 0 12h

openshift-cluster-node-tuning-operator cluster-node-tuning-operator-6764cd7b84-wjghr 1/1 Running 0 12h

openshift-cluster-node-tuning-operator tuned-25q7w 1/1 Running 0 12h

openshift-cluster-node-tuning-operator tuned-8frvm 1/1 Running 0 12h

openshift-cluster-node-tuning-operator tuned-bpqn6 1/1 Running 0 12h

openshift-cluster-node-tuning-operator tuned-nzdws 1/1 Running 0 12h

openshift-cluster-node-tuning-operator tuned-tcrkc 1/1 Running 0 12h

openshift-cluster-samples-operator cluster-samples-operator-5dbddd7dfc-rb2w2 2/2 Running 0 12h

openshift-cluster-storage-operator cluster-storage-operator-75d44d7bcf-x5vhq 1/1 Running 1(12h ago) 12h

openshift-cluster-storage-operator csi-snapshot-controller-6b56df796f-wsccc 1/1 Running 0 12h

openshift-cluster-storage-operator csi-snapshot-controller-6b56df796f-x6q8f 1/1 Running 0 12h

openshift-cluster-storage-operator csi-snapshot-controller-operator-6b5776c58f-wlzb7 1/1 Running 0 12h

openshift-cluster-storage-operator csi-snapshot-webhook-556df58965-7pt5w 1/1 Running 0 12h

openshift-cluster-storage-operator csi-snapshot-webhook-556df58965-gvvqv 1/1 Running 0 12h

openshift-cluster-version cluster-version-operator-6f57fcb854-r6wrm 1/1 Running 0 12h

openshift-config-operator openshift-config-operator-95d566bb9-kctj6 1/1 Running 1(12h ago) 12h

openshift-console-operator console-operator-568fcf9d45-94nlm 1/1 Running 0 12h

openshift-console console-76c6d59bfb-vvwhl 1/1 Running 1(11h ago) 12h

openshift-console console-76c6d59bfb-zkvl4 1/1 Running 1(11h ago) 12h

openshift-console downloads-58b68d9689-795l2 1/1 Running 0 12h

openshift-console downloads-58b68d9689-k9hr4 1/1 Running 0 12h

openshift-controller-manager-operator openshift-controller-manager-operator-d9c574d99-6r9nv 1/1 Running 1(12h ago) 12h

openshift-controller-manager controller-manager-km925 1/1 Running 0 122m

openshift-controller-manager controller-manager-ndlmq 1/1 Running 0 122m

openshift-controller-manager controller-manager-rjbdw 1/1 Running 0 122m

openshift-dns-operator dns-operator-f57cc4d6f-7l64v 2/2 Running 0 12h

openshift-dns dns-default-9wzs4 2/2 Running 0 12h

openshift-dns dns-default-htchk 2/2 Running 0 12h

openshift-dns dns-default-swtd9 2/2 Running 0 12h

openshift-dns dns-default-wxv99 2/2 Running 0 12h

openshift-dns dns-default-xhd5q 2/2 Running 0 12h

openshift-dns node-resolver-48mwj 1/1 Running 0 12h

openshift-dns node-resolver-7zpxr 1/1 Running 0 12h

openshift-dns node-resolver-gbrqz 1/1 Running 0 12h

openshift-dns node-resolver-ntlfj 1/1 Running 0 12h

openshift-dns node-resolver-xdcrv 1/1 Running 0 12h

openshift-etcd-operator etcd-operator-7cdf659f76-2llmq 1/1 Running 1(12h ago) 12h

openshift-etcd etcd-master0.okd4.example.com 4/4 Running 0 12h

openshift-etcd etcd-master1.okd4.example.com 4/4 Running 0 12h

openshift-etcd etcd-master2.okd4.example.com 4/4 Running 0 12h

openshift-etcd etcd-quorum-guard-55c858456b-mvv4d 1/1 Running 0 12h

openshift-etcd etcd-quorum-guard-55c858456b-rbxw7 1/1 Running 0 12h

openshift-etcd etcd-quorum-guard-55c858456b-vz2p5 1/1 Running 0 12h

openshift-etcd installer-3-master1.okd4.example.com 0/1 Completed 0 12h

openshift-etcd installer-5-master2.okd4.example.com 0/1 Completed 0 12h

openshift-etcd installer-7-master1.okd4.example.com 0/1 Completed 0 12h

openshift-etcd installer-7-master2.okd4.example.com 0/1 Completed 0 12h

openshift-etcd installer-7-retry-1-master0.okd4.example.com 0/1 Completed 0 12h

openshift-etcd installer-8-master0.okd4.example.com 0/1 Completed 0 12h

openshift-etcd installer-8-master1.okd4.example.com 0/1 Completed 0 12h

openshift-etcd installer-8-master2.okd4.example.com 0/1 Completed 0 12h

openshift-etcd revision-pruner-7-master0.okd4.example.com 0/1 Completed 0 12h

openshift-etcd revision-pruner-7-master1.okd4.example.com 0/1 Completed 0 12h

openshift-etcd revision-pruner-7-master2.okd4.example.com 0/1 Completed 0 12h

openshift-etcd revision-pruner-8-master0.okd4.example.com 0/1 Completed 0 12h

openshift-etcd revision-pruner-8-master1.okd4.example.com 0/1 Completed 0 12h

openshift-etcd revision-pruner-8-master2.okd4.example.com 0/1 Completed 0 12h

openshift-image-registry cluster-image-registry-operator-ddd96d697-p4fdx 1/1 Running 0 12h

openshift-image-registry node-ca-7zt48 1/1 Running 0 12h

openshift-image-registry node-ca-8fb9j 1/1 Running 0 12h

openshift-image-registry node-ca-dtsrl 1/1 Running 0 12h

openshift-image-registry node-ca-kn4pl 1/1 Running 0 12h

openshift-image-registry node-ca-vt6fm 1/1 Running 0 12h

openshift-ingress-canary ingress-canary-kr74s 1/1 Running 0 12h

openshift-ingress-canary ingress-canary-x4ggt 1/1 Running 0 12h

openshift-ingress-operator ingress-operator-848cb57596-hjmqz 2/2 Running 1(12h ago) 12h

openshift-ingress router-default-df465c48f-jvbpc 1/1 Running 0 12h

openshift-ingress router-default-df465c48f-l982j 1/1 Running 0 12h

openshift-insights insights-operator-6c98b65bd-frsfv 1/1 Running 1(12h ago) 12h

openshift-kube-apiserver-operator kube-apiserver-operator-c5b54866c-892jt 1/1 Running 1(12h ago) 12h

openshift-kube-apiserver installer-10-master0.okd4.example.com 0/1 Completed 0 4h10m

openshift-kube-apiserver installer-10-master1.okd4.example.com 0/1 Completed 0 4h13m

openshift-kube-apiserver installer-10-master2.okd4.example.com 0/1 Completed 0 4h8m

openshift-kube-apiserver installer-11-master0.okd4.example.com 0/1 Completed 0 146m

openshift-kube-apiserver installer-11-master1.okd4.example.com 0/1 Completed 0 149m

openshift-kube-apiserver installer-11-master2.okd4.example.com 0/1 Completed 0 143m

openshift-kube-apiserver installer-12-master0.okd4.example.com 0/1 Completed 0 119m

openshift-kube-apiserver installer-12-master1.okd4.example.com 0/1 Completed 0 122m

openshift-kube-apiserver installer-12-master2.okd4.example.com 0/1 Completed 0 116m

openshift-kube-apiserver installer-13-master0.okd4.example.com 0/1 Completed 0 6m7s

openshift-kube-apiserver installer-13-master1.okd4.example.com 0/1 Completed 0 9m4s

openshift-kube-apiserver installer-13-master2.okd4.example.com 0/1 Completed 0 3m7s

openshift-kube-apiserver installer-9-master0.okd4.example.com 0/1 Completed 0 11h

openshift-kube-apiserver installer-9-master1.okd4.example.com 0/1 Completed 0 11h

openshift-kube-apiserver installer-9-master2.okd4.example.com 0/1 Completed 0 11h

openshift-kube-apiserver kube-apiserver-guard-master0.okd4.example.com 1/1 Running 0 12h

openshift-kube-apiserver kube-apiserver-guard-master1.okd4.example.com 1/1 Running 0 12h

openshift-kube-apiserver kube-apiserver-guard-master2.okd4.example.com 1/1 Running 0 12h

openshift-kube-apiserver kube-apiserver-master0.okd4.example.com 5/5 Running 0 4m7s

openshift-kube-apiserver kube-apiserver-master1.okd4.example.com 5/5 Running 0 7m4s

openshift-kube-apiserver kube-apiserver-master2.okd4.example.com 5/5 Running 0 68s

openshift-kube-apiserver revision-pruner-10-master0.okd4.example.com 0/1 Completed 0 4h13m

openshift-kube-apiserver revision-pruner-10-master1.okd4.example.com 0/1 Completed 0 4h13m

openshift-kube-apiserver revision-pruner-10-master2.okd4.example.com 0/1 Completed 0 4h13m

openshift-kube-apiserver revision-pruner-11-master0.okd4.example.com 0/1 Completed 0 149m

openshift-kube-apiserver revision-pruner-11-master1.okd4.example.com 0/1 Completed 0 149m

openshift-kube-apiserver revision-pruner-11-master2.okd4.example.com 0/1 Completed 0 149m

openshift-kube-apiserver revision-pruner-12-master0.okd4.example.com 0/1 Completed 0 122m

openshift-kube-apiserver revision-pruner-12-master1.okd4.example.com 0/1 Completed 0 123m

openshift-kube-apiserver revision-pruner-12-master2.okd4.example.com 0/1 Completed 0 122m

openshift-kube-apiserver revision-pruner-13-master0.okd4.example.com 0/1 Completed 0 9m18s

openshift-kube-apiserver revision-pruner-13-master1.okd4.example.com 0/1 Completed 0 9m20s

openshift-kube-apiserver revision-pruner-13-master2.okd4.example.com 0/1 Completed 0 9m15s

openshift-kube-apiserver revision-pruner-9-master0.okd4.example.com 0/1 Completed 0 12h

openshift-kube-apiserver revision-pruner-9-master1.okd4.example.com 0/1 Completed 0 12h

openshift-kube-apiserver revision-pruner-9-master2.okd4.example.com 0/1 Completed 0 12h

openshift-kube-controller-manager-operator kube-controller-manager-operator-57bc446b77-mfkwt 1/1 Running 1 (12h ago) 12h

openshift-kube-controller-manager installer-3-master0.okd4.example.com 0/1 Completed 0 12h

openshift-kube-controller-manager installer-5-master1.okd4.example.com 0/1 Completed 0 12h

openshift-kube-controller-manager installer-5-master2.okd4.example.com 0/1 Completed 0 12h

openshift-kube-controller-manager installer-6-master0.okd4.example.com 0/1 Completed 0 12h

openshift-kube-controller-manager installer-6-master1.okd4.example.com 0/1 Completed 0 12h

openshift-kube-controller-manager installer-6-master2.okd4.example.com 0/1 Completed 0 12h

openshift-kube-controller-manager installer-7-master0.okd4.example.com 0/1 Completed 0 12h

openshift-kube-controller-manager installer-7-master1.okd4.example.com 0/1 Completed 0 12h

openshift-kube-controller-manager installer-7-master2.okd4.example.com 0/1 Completed 0 12h

openshift-kube-controller-manager kube-controller-manager-guard-master0.okd4.example.com 1/1 Running 0 12h

openshift-kube-controller-manager kube-controller-manager-guard-master1.okd4.example.com 1/1 Running 0 12h

openshift-kube-controller-manager kube-controller-manager-guard-master2.okd4.example.com 1/1 Running 0 12h

openshift-kube-controller-manager kube-controller-manager-master0.okd4.example.com 4/4 Running 0 12h

openshift-kube-controller-manager kube-controller-manager-master1.okd4.example.com 4/4 Running 0 12h

openshift-kube-controller-manager kube-controller-manager-master2.okd4.example.com 4/4 Running 0 12h

openshift-kube-controller-manager revision-pruner-6-master0.okd4.example.com 0/1 Completed 0 12h

openshift-kube-controller-manager revision-pruner-6-master1.okd4.example.com 0/1 Completed 0 12h

openshift-kube-controller-manager revision-pruner-6-master2.okd4.example.com 0/1 Completed 0 12h

openshift-kube-controller-manager revision-pruner-7-master0.okd4.example.com 0/1 Completed 0 12h

openshift-kube-controller-manager revision-pruner-7-master1.okd4.example.com 0/1 Completed 0 12h

openshift-kube-controller-manager revision-pruner-7-master2.okd4.example.com 0/1 Completed 0 12h

openshift-kube-scheduler-operator openshift-kube-scheduler-operator-67cbb8d86f-nqbnd 1/1 Running 1 (12h ago) 12h

openshift-kube-scheduler installer-4-master0.okd4.example.com 0/1 Completed 0 12h

openshift-kube-scheduler installer-5-master0.okd4.example.com 0/1 Completed 0 12h

openshift-kube-scheduler installer-6-master0.okd4.example.com 0/1 Completed 0 12h

openshift-kube-scheduler installer-6-master1.okd4.example.com 0/1 Completed 0 12h

openshift-kube-scheduler installer-7-master0.okd4.example.com 0/1 Completed 0 12h

openshift-kube-scheduler installer-7-master1.okd4.example.com 0/1 Completed 0 12h

openshift-kube-scheduler installer-7-master2.okd4.example.com 0/1 Completed 0 12h

openshift-kube-scheduler openshift-kube-scheduler-guard-master0.okd4.example.com 1/1 Running 0 12h

openshift-kube-scheduler openshift-kube-scheduler-guard-master1.okd4.example.com 1/1 Running 0 12h

openshift-kube-scheduler openshift-kube-scheduler-guard-master2.okd4.example.com 1/1 Running 0 12h

openshift-kube-scheduler openshift-kube-scheduler-master0.okd4.example.com 3/3 Running 0 12h

openshift-kube-scheduler openshift-kube-scheduler-master1.okd4.example.com 3/3 Running 0 12h

openshift-kube-scheduler openshift-kube-scheduler-master2.okd4.example.com 3/3 Running 0 12h

openshift-kube-scheduler revision-pruner-6-master0.okd4.example.com 0/1 Completed 0 12h

openshift-kube-scheduler revision-pruner-6-master1.okd4.example.com 0/1 Completed 0 12h

openshift-kube-scheduler revision-pruner-6-master2.okd4.example.com 0/1 Completed 0 12h

openshift-kube-scheduler revision-pruner-7-master0.okd4.example.com 0/1 Completed 0 12h

openshift-kube-scheduler revision-pruner-7-master1.okd4.example.com 0/1 Completed 0 12h

openshift-kube-scheduler revision-pruner-7-master2.okd4.example.com 0/1 Completed 0 12h

openshift-kube-storage-version-migrator-operator kube-storage-version-migrator-operator-85c88fcbcd-b24q5 1/1 Running 1 (12h ago) 12h

openshift-kube-storage-version-migrator migrator-85976b4574-b2v8q 1/1 Running 0 12h

openshift-machine-api cluster-autoscaler-operator-6c6ffd9948-f7h62 2/2 Running 0 12h

openshift-machine-api cluster-baremetal-operator-76fd6798b6-hpsgr 2/2 Running 0 12h

openshift-machine-api machine-api-operator-74f4fbdcc9-vfft6 2/2 Running 0 12h

openshift-machine-config-operator machine-config-controller-bbc954c9c-spsnj 1/1 Running 0 12h

openshift-machine-config-operator machine-config-daemon-dzz9t 2/2 Running 0 12h

openshift-machine-config-operator machine-config-daemon-j5lvj 2/2 Running 0 12h

openshift-machine-config-operator machine-config-daemon-nkm9p 2/2 Running 0 12h

openshift-machine-config-operator machine-config-daemon-pbxbh 2/2 Running 0 12h

openshift-machine-config-operator machine-config-daemon-r4cml 2/2 Running 0 12h

openshift-machine-config-operator machine-config-operator-9c6d9dd78-q55n9 1/1 Running 0 12h

openshift-machine-config-operator machine-config-server-29slp 1/1 Running 0 12h

openshift-machine-config-operator machine-config-server-ps4zb 1/1 Running 0 12h

openshift-machine-config-operator machine-config-server-wt7kv 1/1 Running 0 12h

openshift-marketplace community-operators-z789f 1/1 Running 0 5m35s

openshift-marketplace marketplace-operator-7d44654db-xdcl8 1/1 Running 1 (12h ago) 12h

openshift-monitoring alertmanager-main-0 6/6 Running 0 11h

openshift-monitoring alertmanager-main-1 6/6 Running 0 11h

openshift-monitoring cluster-monitoring-operator-7f57cd7fb-z4dwd 2/2 Running 0 12h

openshift-monitoring grafana-6cd855f567-thstn 3/3 Running 0 12h

openshift-monitoring kube-state-metrics-7dd5fcf48b-dbrxt 3/3 Running 0 12h

openshift-monitoring node-exporter-2xcfq 2/2 Running 0 12h

openshift-monitoring node-exporter-gzckr 2/2 Running 0 12h

openshift-monitoring node-exporter-js44v 2/2 Running 0 12h

openshift-monitoring node-exporter-kxcbk 2/2 Running 0 12h

openshift-monitoring node-exporter-zv4k6 2/2 Running 0 12h

openshift-monitoring openshift-state-metrics-57c84995c9-wmt7h 3/3 Running 0 12h

openshift-monitoring prometheus-adapter-667f7b6644-5w9gd 1/1 Running 0 123m

openshift-monitoring prometheus-adapter-667f7b6644-8std4 1/1 Running 0 123m

openshift-monitoring prometheus-k8s-0 6/6 Running 0 12h

openshift-monitoring prometheus-k8s-1 6/6 Running 0 12h

openshift-monitoring prometheus-operator-674f47f9f6-h6dwf 2/2 Running 0 12h

openshift-monitoring telemeter-client-78dcb6486c-bqmgc 3/3 Running 0 12h

openshift-monitoring thanos-querier-9847d5d6b-585qt 6/6 Running 0 12h

openshift-monitoring thanos-querier-9847d5d6b-hcxbg 6/6 Running 0 12h

openshift-multus multus-878zq 1/1 Running 0 12h

openshift-multus multus-additional-cni-plugins-bws8n 1/1 Running 0 12h

openshift-multus multus-additional-cni-plugins-clzcs 1/1 Running 0 12h

openshift-multus multus-additional-cni-plugins-mj84t 1/1 Running 0 12h

openshift-multus multus-additional-cni-plugins-qcf24 1/1 Running 0 12h

openshift-multus multus-additional-cni-plugins-xg6m9 1/1 Running 0 12h

openshift-multus multus-admission-controller-k5fsl 2/2 Running 0 12h

openshift-multus multus-admission-controller-nc6m4 2/2 Running 0 12h

openshift-multus multus-admission-controller-ngtd2 2/2 Running 0 12h

openshift-multus multus-rfpw4 1/1 Running 0 12h

openshift-multus multus-scttv 1/1 Running 0 12h

openshift-multus multus-wkrrs 1/1 Running 1 12h

openshift-multus multus-x4jml 1/1 Running 0 12h

openshift-multus network-metrics-daemon-b9jrt 2/2 Running 0 12h

openshift-multus network-metrics-daemon-cz4w9 2/2 Running 0 12h

openshift-multus network-metrics-daemon-d2wp5 2/2 Running 0 12h

openshift-multus network-metrics-daemon-gpb4z 2/2 Running 0 12h

openshift-multus network-metrics-daemon-tbs2j 2/2 Running 0 12h

openshift-network-diagnostics network-check-source-74645d55dd-qcs4x 1/1 Running 0 12h

openshift-network-diagnostics network-check-target-4j772 1/1 Running 0 12h

openshift-network-diagnostics network-check-target-5dwkn 1/1 Running 0 12h

openshift-network-diagnostics network-check-target-bgg8d 1/1 Running 0 12h

openshift-network-diagnostics network-check-target-bncc2 1/1 Running 0 12h

openshift-network-diagnostics network-check-target-qsn7j 1/1 Running 0 12h

openshift-network-operator network-operator-686ffb9ff7-982dw 1/1 Running 1 (12h ago) 12h

openshift-oauth-apiserver apiserver-8577cd55d9-lknkj 1/1 Running 0 12h

openshift-oauth-apiserver apiserver-8577cd55d9-rnppv 1/1 Running 0 12h

openshift-oauth-apiserver apiserver-8577cd55d9-wp7rp 1/1 Running 0 12h

openshift-operator-lifecycle-manager catalog-operator-8567dd948-bv6bs 1/1 Running 0 12h

openshift-operator-lifecycle-manager collect-profiles-27486270-7pllk 0/1 Completed 0 37m

openshift-operator-lifecycle-manager collect-profiles-27486285-cxkjj 0/1 Completed 0 22m

openshift-operator-lifecycle-manager collect-profiles-27486300-s4nzf 0/1 Completed 0 7m36s

openshift-operator-lifecycle-manager olm-operator-5664cc68b5-zh2sk 1/1 Running 0 12h

openshift-operator-lifecycle-manager package-server-manager-54bf5b8858-jrnw9 1/1 Running 1 (12h ago) 12h