文章目录

一、概述

Hadoop是Apache软件基金会下一个开源分布式计算平台,以HDFS(Hadoop Distributed File System)、MapReduce(Hadoop2.0加入了YARN,Yarn是资源调度框架,能够细粒度的管理和调度任务,还能够支持其他的计算框架,比如spark)为核心的Hadoop为用户提供了系统底层细节透明的分布式基础架构。hdfs的高容错性、高伸缩性、高效性等优点让用户可以将Hadoop部署在低廉的硬件上,形成分布式系统。目前最新版本已经是3.x了,官方文档。也可以参考我之前的文章:大数据Hadoop原理介绍+安装+实战操作(HDFS+YARN+MapReduce)

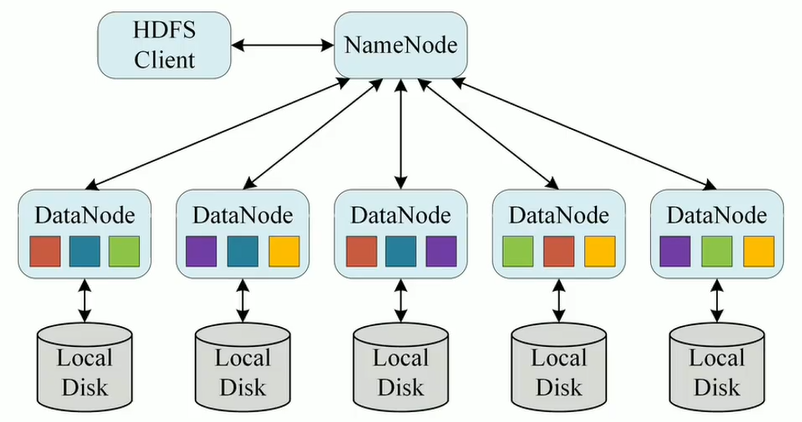

HDFS

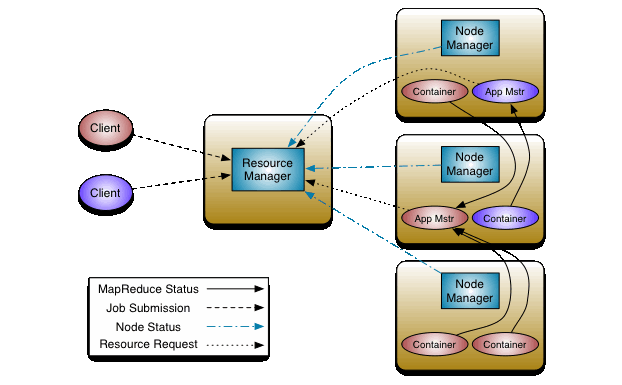

YARN

二、开始部署

1)添加源

地址:https://artifacthub.io/packages/helm/apache-hadoop-helm/hadoop

helm repo add apache-hadoop-helm https://pfisterer.github.io/apache-hadoop-helm/

helm pull apache-hadoop-helm/hadoop --version 1.2.0

tar -xf hadoop-1.2.0.tgz

2)构建镜像 Dockerfile

FROM myharbor.com/bigdata/centos:7.9.2009

RUN rm -f /etc/localtime &&ln -sv /usr/share/zoneinfo/Asia/Shanghai /etc/localtime &&echo"Asia/Shanghai"> /etc/timezone

RUN exportLANG=zh_CN.UTF-8

# 创建用户和用户组,跟yaml编排里的spec.template.spec.containers. securityContext.runAsUser: 9999

RUN groupadd --system --gid=9999 admin &&useradd --system --home-dir /home/admin --uid=9999 --gid=admin admin

# 安装sudo

RUN yum -y installsudo;chmod640 /etc/sudoers

# 给admin添加sudo权限

RUN echo"admin ALL=(ALL) NOPASSWD: ALL">> /etc/sudoers

RUN yum -y installinstall net-tools telnet wget

RUN mkdir /opt/apache/

ADD jdk-8u212-linux-x64.tar.gz /opt/apache/

ENV JAVA_HOME=/opt/apache/jdk1.8.0_212

ENV PATH=$JAVA_HOME/bin:$PATH

ENV HADOOP_VERSION 3.3.2

ENV HADOOP_HOME=/opt/apache/hadoop

ENV HADOOP_COMMON_HOME=${HADOOP_HOME}\HADOOP_HDFS_HOME=${HADOOP_HOME}\HADOOP_MAPRED_HOME=${HADOOP_HOME}\HADOOP_YARN_HOME=${HADOOP_HOME}\HADOOP_CONF_DIR=${HADOOP_HOME}/etc/hadoop \PATH=${PATH}:${HADOOP_HOME}/bin

#RUN curl --silent --output /tmp/hadoop.tgz https://ftp-stud.hs-esslingen.de/pub/Mirrors/ftp.apache.org/dist/hadoop/common/hadoop-${HADOOP_VERSION}/hadoop-${HADOOP_VERSION}.tar.gz && tar --directory /opt/apache -xzf /tmp/hadoop.tgz && rm /tmp/hadoop.tgz

ADD hadoop-${HADOOP_VERSION}.tar.gz /opt/apache

RUN ln -s /opt/apache/hadoop-${HADOOP_VERSION}${HADOOP_HOME}

RUN chown -R admin:admin /opt/apache

WORKDIR $HADOOP_HOME# Hdfs ports

EXPOSE 500105002050070500755009080209000# Mapred ports

EXPOSE 19888#Yarn ports

EXPOSE 8030803180328033804080428088#Other ports

EXPOSE 497072122

开始构建镜像

docker build -t myharbor.com/bigdata/hadoop:3.3.2 . --no-cache

### 参数解释# -t:指定镜像名称# . :当前目录Dockerfile# -f:指定Dockerfile路径# --no-cache:不缓存

推送到镜像仓库

docker push myharbor.com/bigdata/hadoop:3.3.2

调整目录结构

mkdir hadoop/templates/hdfs hadoop/templates/yarn

mv hadoop/templates/hdfs-* hadoop/templates/hdfs/

mv hadoop/templates/yarn-* hadoop/templates/yarn/

3)修改配置

hadoop/values.yaml

image:repository: myharbor.com/bigdata/hadoop

tag: 3.3.2

pullPolicy: IfNotPresent

...persistence:nameNode:enabled:truestorageClass:"hadoop-nn-local-storage"accessMode: ReadWriteOnce

size: 10Gi

local:-name: hadoop-nn-0host:"local-168-182-110"path:"/opt/bigdata/servers/hadoop/nn/data/data1"dataNode:enabled:truestorageClass:"hadoop-dn-local-storage"accessMode: ReadWriteOnce

size: 20Gi

local:-name: hadoop-dn-0host:"local-168-182-110"path:"/opt/bigdata/servers/hadoop/dn/data/data1"-name: hadoop-dn-1host:"local-168-182-110"path:"/opt/bigdata/servers/hadoop/dn/data/data2"-name: hadoop-dn-2host:"local-168-182-110"path:"/opt/bigdata/servers/hadoop/dn/data/data3"-name: hadoop-dn-3host:"local-168-182-111"path:"/opt/bigdata/servers/hadoop/dn/data/data1"-name: hadoop-dn-4host:"local-168-182-111"path:"/opt/bigdata/servers/hadoop/dn/data/data2"-name: hadoop-dn-5host:"local-168-182-111"path:"/opt/bigdata/servers/hadoop/dn/data/data3"-name: hadoop-dn-6host:"local-168-182-112"path:"/opt/bigdata/servers/hadoop/dn/data/data1"-name: hadoop-dn-7host:"local-168-182-112"path:"/opt/bigdata/servers/hadoop/dn/data/data2"-name: hadoop-dn-8host:"local-168-182-112"path:"/opt/bigdata/servers/hadoop/dn/data/data3"...service:nameNode:type: NodePort

ports:dfs:9000webhdfs:9870nodePorts:dfs:30900webhdfs:30870dataNode:type: NodePort

ports:dfs:9000webhdfs:9864nodePorts:dfs:30901webhdfs:30864resourceManager:type: NodePort

ports:web:8088nodePorts:web:30088...securityContext:runAsUser:9999privileged:true

hadoop/templates/hdfs/hdfs-nn-pv.yaml

{{- range .Values.persistence.nameNode.local }}---apiVersion: v1

kind: PersistentVolume

metadata:name:{{ .name }}labels:name:{{ .name }}spec:storageClassName:{{ $.Values.persistence.nameNode.storageClass }}capacity:storage:{{ $.Values.persistence.nameNode.size }}accessModes:- ReadWriteOnce

local:path:{{ .path }}nodeAffinity:required:nodeSelectorTerms:-matchExpressions:-key: kubernetes.io/hostname

operator: In

values:-{{ .host }}---{{- end }}

hadoop/templates/hdfs/hdfs-dn-pv.yaml

{{- range .Values.persistence.dataNode.local }}---apiVersion: v1

kind: PersistentVolume

metadata:name:{{ .name }}labels:name:{{ .name }}spec:storageClassName:{{ $.Values.persistence.dataNode.storageClass }}capacity:storage:{{ $.Values.persistence.dataNode.size }}accessModes:- ReadWriteOnce

local:path:{{ .path }}nodeAffinity:required:nodeSelectorTerms:-matchExpressions:-key: kubernetes.io/hostname

operator: In

values:-{{ .host }}---{{- end }}

- 修改hdfs service

mv hadoop/templates/hdfs/hdfs-nn-svc.yaml hadoop/templates/hdfs/hdfs-nn-svc-headless.yaml

mv hadoop/templates/hdfs/hdfs-dn-svc.yaml hadoop/templates/hdfs/hdfs-dn-svc-headless.yaml

# 注意修改名称,不要重复

hadoop/templates/hdfs/hdfs-nn-svc.yaml

# A headless service to create DNS recordsapiVersion: v1

kind: Service

metadata:name:{{ include "hadoop.fullname" . }}-hdfs-nn

labels:app.kubernetes.io/name:{{ include "hadoop.name" . }}helm.sh/chart:{{ include "hadoop.chart" . }}app.kubernetes.io/instance:{{ .Release.Name }}app.kubernetes.io/component: hdfs-nn

spec:ports:-name: dfs

port:{{ .Values.service.nameNode.ports.dfs }}protocol: TCP

nodePort:{{ .Values.service.nameNode.nodePorts.dfs }}-name: webhdfs

port:{{ .Values.service.nameNode.ports.webhdfs }}nodePort:{{ .Values.service.nameNode.nodePorts.webhdfs }}type:{{ .Values.service.nameNode.type }}selector:app.kubernetes.io/name:{{ include "hadoop.name" . }}app.kubernetes.io/instance:{{ .Release.Name }}app.kubernetes.io/component: hdfs-nn

hadoop/templates/hdfs/hdfs-dn-svc.yaml

# A headless service to create DNS recordsapiVersion: v1

kind: Service

metadata:name:{{ include "hadoop.fullname" . }}-hdfs-dn

labels:app.kubernetes.io/name:{{ include "hadoop.name" . }}helm.sh/chart:{{ include "hadoop.chart" . }}app.kubernetes.io/instance:{{ .Release.Name }}app.kubernetes.io/component: hdfs-nn

spec:ports:-name: dfs

port:{{ .Values.service.dataNode.ports.dfs }}protocol: TCP

nodePort:{{ .Values.service.dataNode.nodePorts.dfs }}-name: webhdfs

port:{{ .Values.service.dataNode.ports.webhdfs }}nodePort:{{ .Values.service.dataNode.nodePorts.webhdfs }}type:{{ .Values.service.dataNode.type }}selector:app.kubernetes.io/name:{{ include "hadoop.name" . }}app.kubernetes.io/instance:{{ .Release.Name }}app.kubernetes.io/component: hdfs-dn

- 修改yarn service

mv hadoop/templates/yarn/yarn-nm-svc.yaml hadoop/templates/yarn/yarn-nm-svc-headless.yaml

mv hadoop/templates/yarn/yarn-rm-svc.yaml hadoop/templates/yarn/yarn-rm-svc-headless.yaml

mv hadoop/templates/yarn/yarn-ui-svc.yaml hadoop/templates/yarn/yarn-rm-svc.yaml

# 注意修改名称,不要重复

hadoop/templates/yarn/yarn-rm-svc.yaml

# Service to access the yarn web uiapiVersion: v1

kind: Service

metadata:name:{{ include "hadoop.fullname" . }}-yarn-rm

labels:app.kubernetes.io/name:{{ include "hadoop.name" . }}helm.sh/chart:{{ include "hadoop.chart" . }}app.kubernetes.io/instance:{{ .Release.Name }}app.kubernetes.io/component: yarn-rm

spec:ports:-port:{{ .Values.service.resourceManager.ports.web }}name: web

nodePort:{{ .Values.service.resourceManager.nodePorts.web }}type:{{ .Values.service.resourceManager.type }}selector:app.kubernetes.io/name:{{ include "hadoop.name" . }}app.kubernetes.io/instance:{{ .Release.Name }}app.kubernetes.io/component: yarn-rm

- 修改控制器

在所有控制中新增如下内容:

containers:...securityContext:runAsUser:{{ .Values.securityContext.runAsUser }}privileged:{{ .Values.securityContext.privileged }}

hadoop/templates/hadoop-configmap.yaml

### 1、将/root换成/opt/apache### 2、TMP_URL="http://{{ include "hadoop.fullname" . }}-yarn-rm-headless:8088/ws/v1/cluster/info"

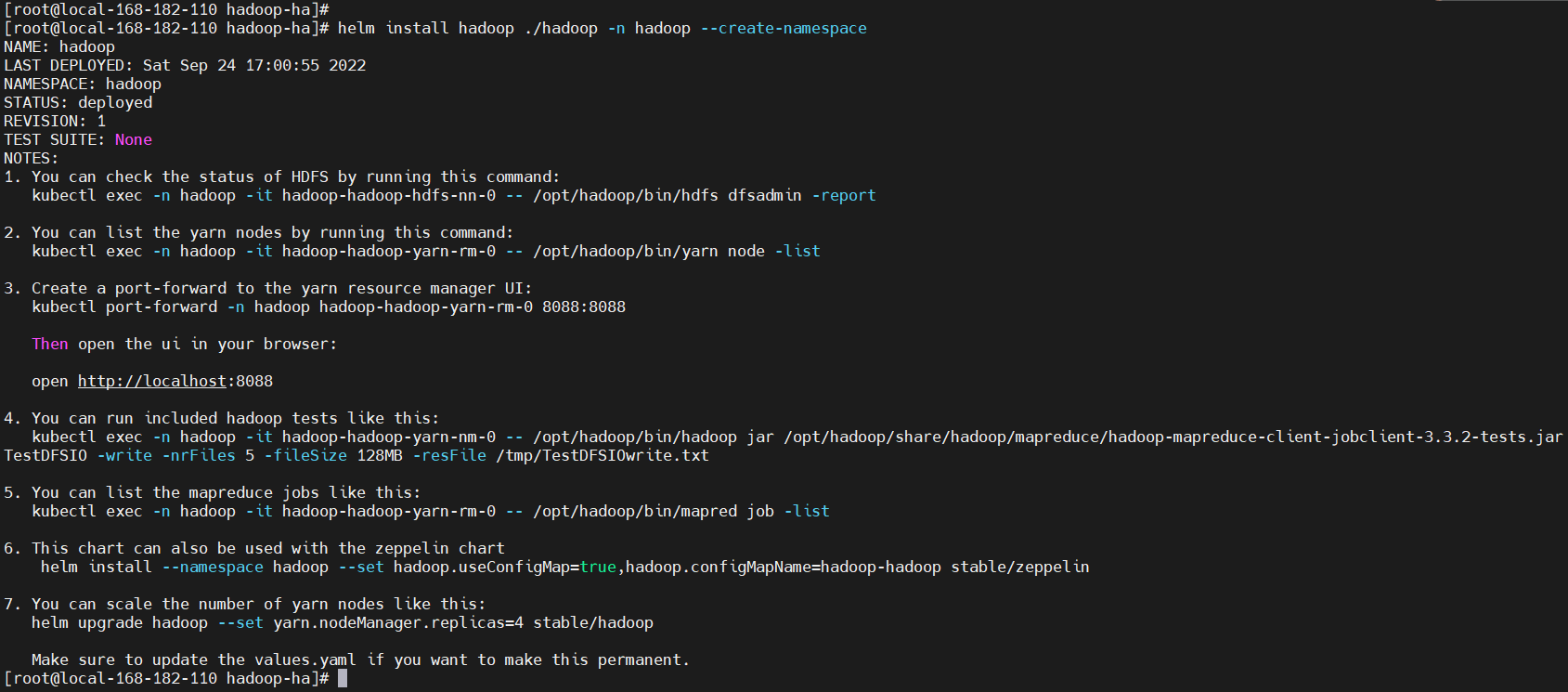

4)开始安装

# 创建存储目录mkdir -p /opt/bigdata/servers/hadoop/{nn,dn}/data/data{1..3}

helm install hadoop ./hadoop -n hadoop --create-namespace

NOTES

NAME: hadoop

LAST DEPLOYED: Sat Sep 2417:00:55 2022

NAMESPACE: hadoop

STATUS: deployed

REVISION: 1

TEST SUITE: None

NOTES:

1. You can check the status of HDFS by running this command:

kubectl exec -n hadoop -it hadoop-hadoop-hdfs-nn-0 -- /opt/hadoop/bin/hdfs dfsadmin -report

2. You can list the yarn nodes by running this command:

kubectl exec -n hadoop -it hadoop-hadoop-yarn-rm-0 -- /opt/hadoop/bin/yarn node -list

3. Create a port-forward to the yarn resource manager UI:

kubectl port-forward -n hadoop hadoop-hadoop-yarn-rm-0 8088:8088

Then open the ui in your browser:

open http://localhost:8088

4. You can run included hadoop tests like this:

kubectl exec -n hadoop -it hadoop-hadoop-yarn-nm-0 -- /opt/hadoop/bin/hadoop jar /opt/hadoop/share/hadoop/mapreduce/hadoop-mapreduce-client-jobclient-3.3.2-tests.jar TestDFSIO -write -nrFiles 5 -fileSize 128MB -resFile /tmp/TestDFSIOwrite.txt

5. You can list the mapreduce jobs like this:

kubectl exec -n hadoop -it hadoop-hadoop-yarn-rm-0 -- /opt/hadoop/bin/mapred job -list

6. This chart can also be used with the zeppelin chart

helm install --namespace hadoop --set hadoop.useConfigMap=true,hadoop.configMapName=hadoop-hadoop stable/zeppelin

7. You can scale the number of yarn nodes like this:

helm upgrade hadoop --set yarn.nodeManager.replicas=4 stable/hadoop

Make sure to update the values.yaml if you want to make this permanent.

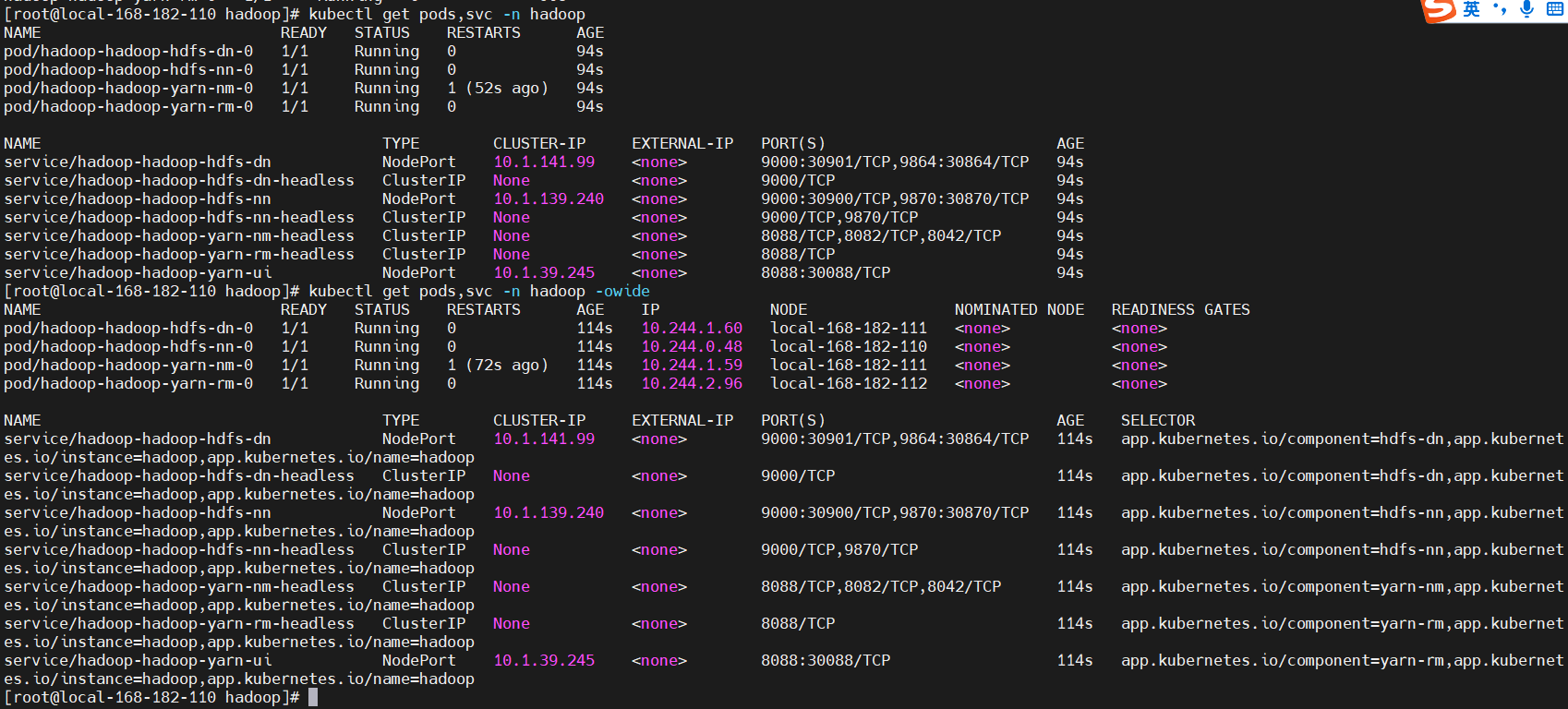

查看

kubectl get pods,svc -n hadoop -owide

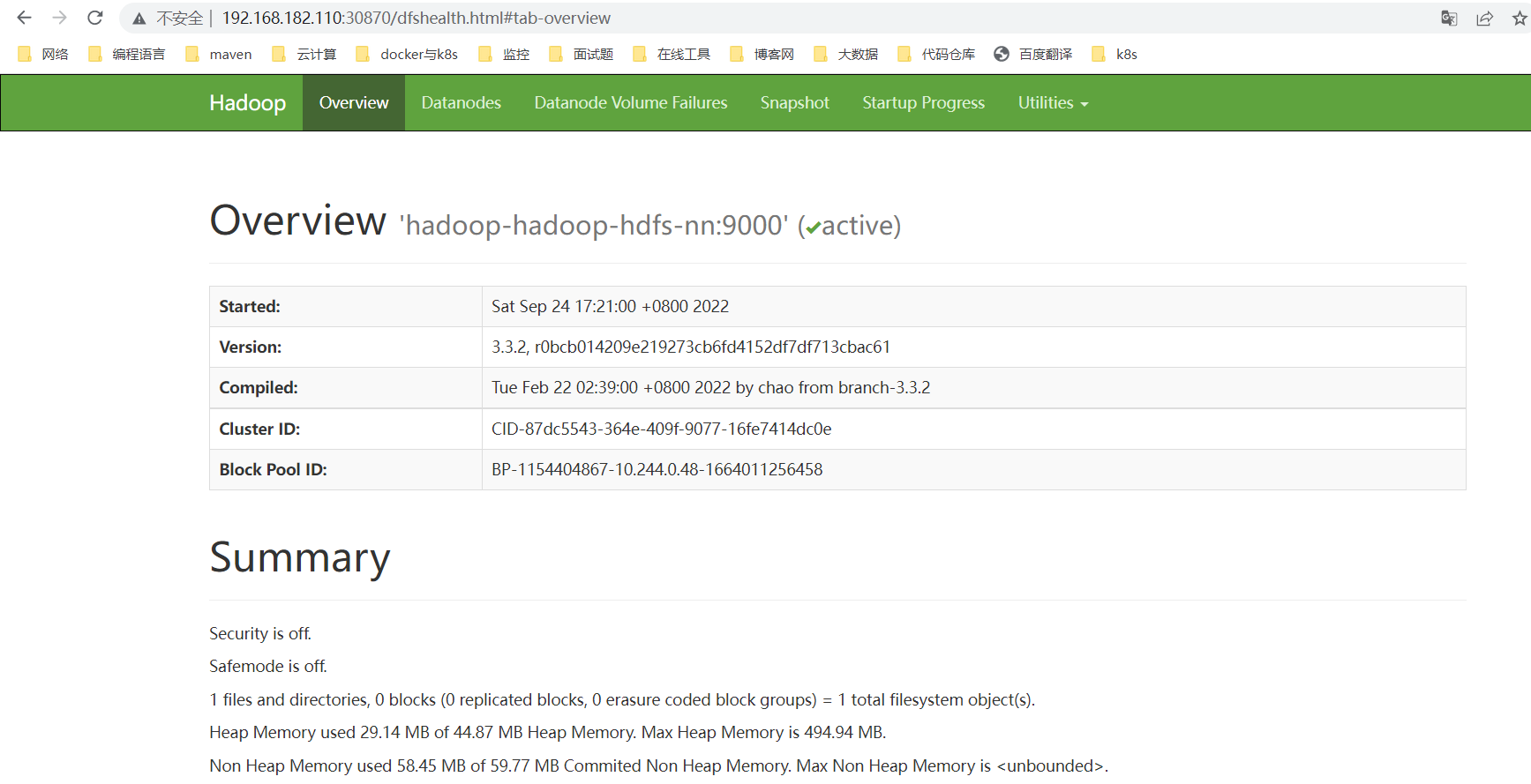

hdfs web:http://192.168.182.110:30870/

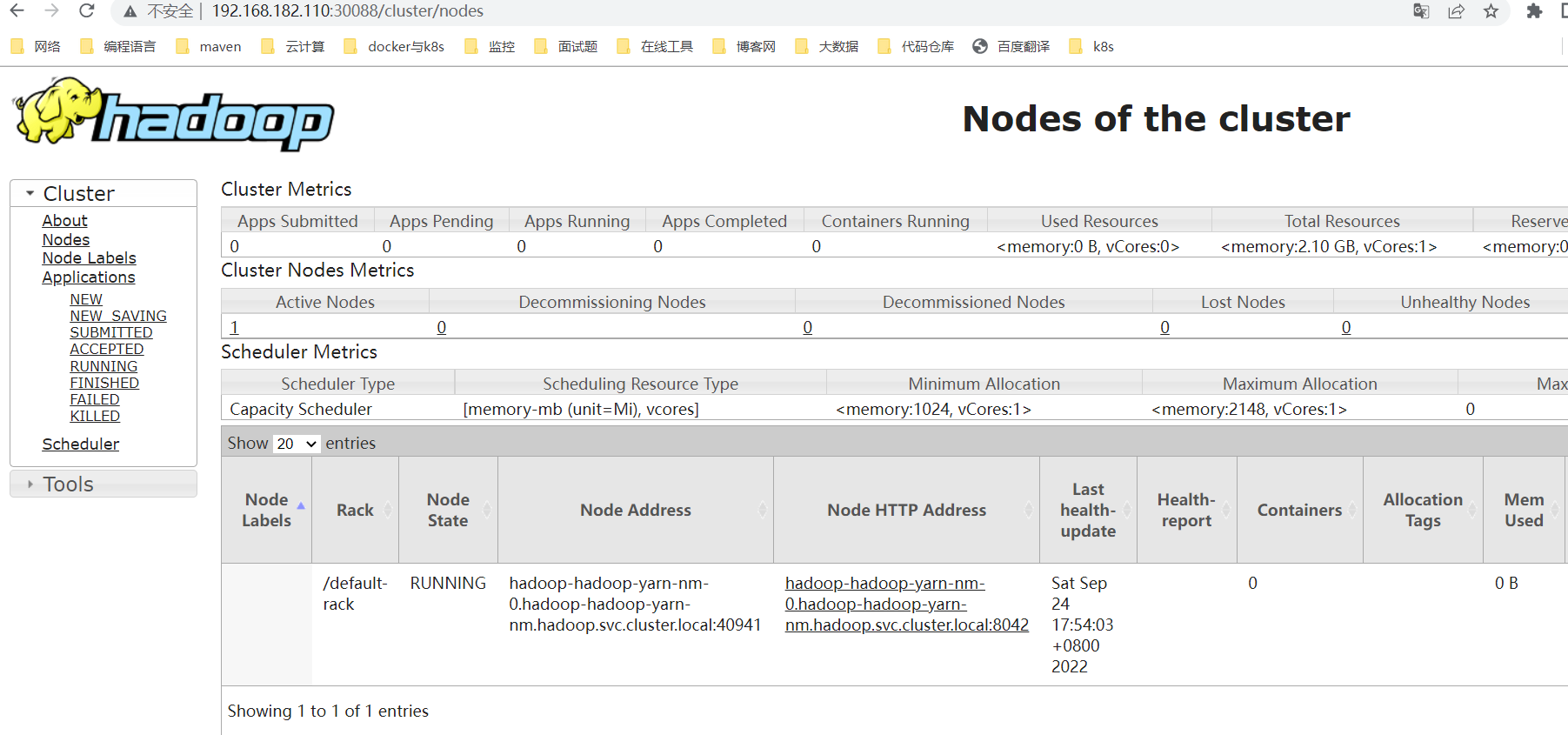

yarn web:http://192.168.182.110:30088/

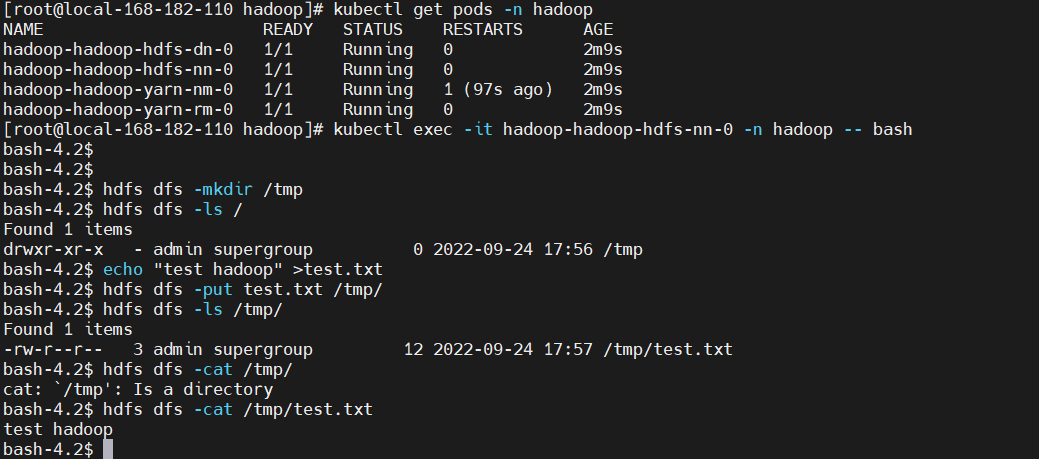

5)测试验证

HDFS 测试验证

kubectl exec -it hadoop-hadoop-hdfs-nn-0 -n hadoop -- bash[root@local-168-182-110 hadoop]# kubectl exec -it hadoop-hadoop-hdfs-nn-0 -n hadoop -- bash

bash-4.2$

bash-4.2$

bash-4.2$ hdfs dfs -mkdir /tmp

bash-4.2$ hdfs dfs -ls /

Found 1 items

drwxr-xr-x - admin supergroup 02022-09-24 17:56 /tmp

bash-4.2$ echo"test hadoop">test.txt

bash-4.2$ hdfs dfs -put test.txt /tmp/

bash-4.2$ hdfs dfs -ls /tmp/

Found 1 items

-rw-r--r-- 3 admin supergroup 122022-09-24 17:57 /tmp/test.txt

bash-4.2$ hdfs dfs -cat /tmp/

cat: `/tmp': Is a directory

bash-4.2$ hdfs dfs -cat /tmp/test.txt

test hadoop

bash-4.2$

Yarn 的测试验证等后面讲到hive on k8s再来测试验证。

6)卸载

helm uninstall hadoop -n hadoop

kubectl delete pod -n hadoop `kubectl get pod -n hadoop|awk'NR>1{print $1}'` --force

kubectl patch ns hadoop -p '{"metadata":{"finalizers":null}}'

kubectl delete ns hadoop --force

这里也提供git下载地址,有需要的小伙伴可以下载部署玩玩,https://gitee.com/hadoop-bigdata/hadoop-on-k8s

在k8s集群中yarn会慢慢被弱化,直接使用k8s资源调度,而不再使用yarn去调度资源了,这里只是部署了单点,仅限于测试环境使用,下一篇文章会讲Hadoop 高可用 on k8s 实现,请小伙伴耐心等待,有任何疑问欢迎给我留言~

版权归原作者 大数据老司机 所有, 如有侵权,请联系我们删除。