基于Binlog、FlinkCDC、Doris实现数据实时同步

Docker部署MySQL

docker pull mysql:5.7.32

- 通过挂载的方式开启一个mysql镜像

docker run -p 3307:3306 --name myMysql -v /usr/docker/mysql/data:/var/lib/mysql -e MYSQL_ROOT_PASSWORD=root -d mysql:5.7.32

备注:需提前在宿主机目录下创建一个文件用于保存mysql的数据集,我这里创建的目录是 /usr/docker/mysql/data

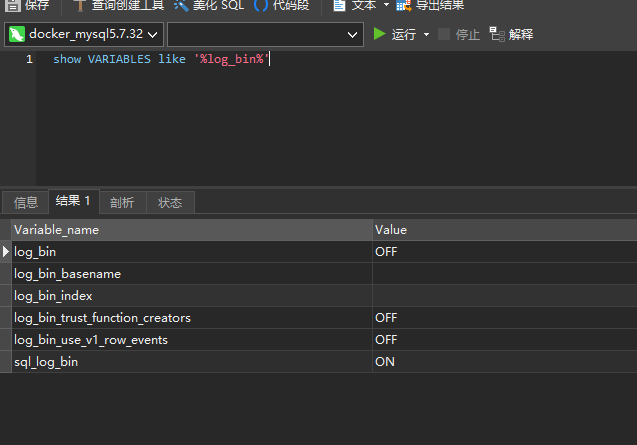

- 使用客户端连接工具连接上mysql,观察一下mysql_binlog的开启情况

- 开启bin_log

dockerexec myMysql bash -c "echo 'log-bin=/var/lib/mysql/mysql-bin' >> /etc/mysql/mysql.conf.d/mysqld.cnf"

dockerexec myMysql bash -c "echo 'server-id=123454' >> /etc/mysql/mysql.conf.d/mysqld.cnf"

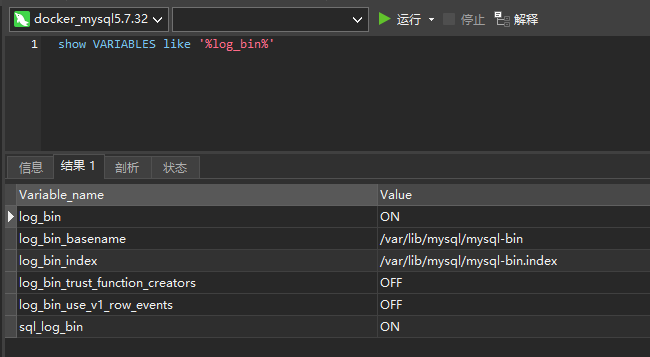

- 重启mysql镜像

docker restart myMysql

- **再次查看 **

show VARIABLES like '%log_bin%'-

- 准备数据-

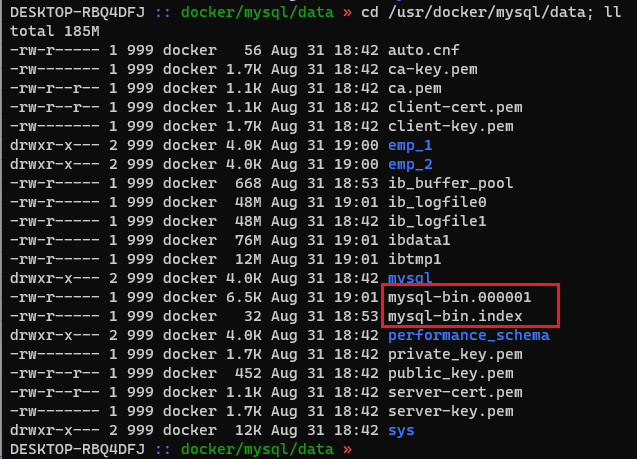

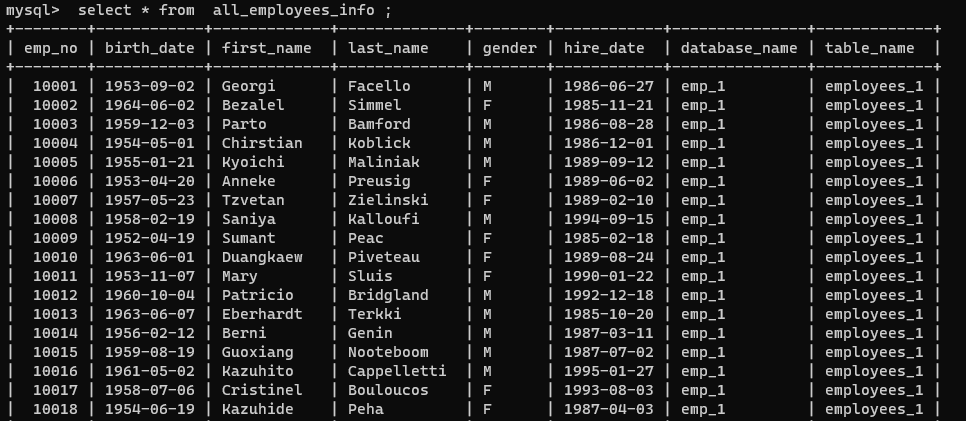

CREATE DATABASE emp_1; USE emp_1;CREATE TABLE employees_1 ( emp_no INT NOT NULL, birth_date DATE NOT NULL, first_name VARCHAR(14) NOT NULL, last_name VARCHAR(16) NOT NULL, gender ENUM ('M','F') NOT NULL, hire_date DATE NOT NULL, PRIMARY KEY (emp_no));INSERT INTO `employees_1` VALUES (10001,'1953-09-02','Georgi','Facello','M','1986-06-27'),(10002,'1964-06-02','Bezalel','Simmel','F','1985-11-21'),(10003,'1959-12-03','Parto','Bamford','M','1986-08-28'),(10004,'1954-05-01','Chirstian','Koblick','M','1986-12-01'),(10005,'1955-01-21','Kyoichi','Maliniak','M','1989-09-12'),(10006,'1953-04-20','Anneke','Preusig','F','1989-06-02'),(10007,'1957-05-23','Tzvetan','Zielinski','F','1989-02-10'),(10008,'1958-02-19','Saniya','Kalloufi','M','1994-09-15'),(10009,'1952-04-19','Sumant','Peac','F','1985-02-18'),(10010,'1963-06-01','Duangkaew','Piveteau','F','1989-08-24'),(10011,'1953-11-07','Mary','Sluis','F','1990-01-22'),(10012,'1960-10-04','Patricio','Bridgland','M','1992-12-18'),(10013,'1963-06-07','Eberhardt','Terkki','M','1985-10-20'),(10014,'1956-02-12','Berni','Genin','M','1987-03-11'),(10015,'1959-08-19','Guoxiang','Nooteboom','M','1987-07-02'),(10016,'1961-05-02','Kazuhito','Cappelletti','M','1995-01-27'),(10017,'1958-07-06','Cristinel','Bouloucos','F','1993-08-03'),(10018,'1954-06-19','Kazuhide','Peha','F','1987-04-03'),(10019,'1953-01-23','Lillian','Haddadi','M','1999-04-30'),(10020,'1952-12-24','Mayuko','Warwick','M','1991-01-26'),(10021,'1960-02-20','Ramzi','Erde','M','1988-02-10'),(10022,'1952-07-08','Shahaf','Famili','M','1995-08-22'),(10023,'1953-09-29','Bojan','Montemayor','F','1989-12-17'),(10024,'1958-09-05','Suzette','Pettey','F','1997-05-19'),(10025,'1958-10-31','Prasadram','Heyers','M','1987-08-17'),(10026,'1953-04-03','Yongqiao','Berztiss','M','1995-03-20'),(10027,'1962-07-10','Divier','Reistad','F','1989-07-07'),(10028,'1963-11-26','Domenick','Tempesti','M','1991-10-22'),(10029,'1956-12-13','Otmar','Herbst','M','1985-11-20'),(10030,'1958-07-14','Elvis','Demeyer','M','1994-02-17'),(10031,'1959-01-27','Karsten','Joslin','M','1991-09-01'),(10032,'1960-08-09','Jeong','Reistad','F','1990-06-20'),(10033,'1956-11-14','Arif','Merlo','M','1987-03-18'),(10034,'1962-12-29','Bader','Swan','M','1988-09-21'),(10035,'1953-02-08','Alain','Chappelet','M','1988-09-05'),(10036,'1959-08-10','Adamantios','Portugali','M','1992-01-03');CREATE TABLE employees_2 ( emp_no INT NOT NULL, birth_date DATE NOT NULL, first_name VARCHAR(14) NOT NULL, last_name VARCHAR(16) NOT NULL, gender ENUM ('M','F') NOT NULL, hire_date DATE NOT NULL, PRIMARY KEY (emp_no));INSERT INTO `employees_2` VALUES (10037,'1963-07-22','Pradeep','Makrucki','M','1990-12-05'),(10038,'1960-07-20','Huan','Lortz','M','1989-09-20'),(10039,'1959-10-01','Alejandro','Brender','M','1988-01-19'),(10040,'1959-09-13','Weiyi','Meriste','F','1993-02-14'),(10041,'1959-08-27','Uri','Lenart','F','1989-11-12'),(10042,'1956-02-26','Magy','Stamatiou','F','1993-03-21'),(10043,'1960-09-19','Yishay','Tzvieli','M','1990-10-20'),(10044,'1961-09-21','Mingsen','Casley','F','1994-05-21'),(10045,'1957-08-14','Moss','Shanbhogue','M','1989-09-02'),(10046,'1960-07-23','Lucien','Rosenbaum','M','1992-06-20'),(10047,'1952-06-29','Zvonko','Nyanchama','M','1989-03-31'),(10048,'1963-07-11','Florian','Syrotiuk','M','1985-02-24'),(10049,'1961-04-24','Basil','Tramer','F','1992-05-04'),(10050,'1958-05-21','Yinghua','Dredge','M','1990-12-25'),(10051,'1953-07-28','Hidefumi','Caine','M','1992-10-15'),(10052,'1961-02-26','Heping','Nitsch','M','1988-05-21'),(10053,'1954-09-13','Sanjiv','Zschoche','F','1986-02-04'),(10054,'1957-04-04','Mayumi','Schueller','M','1995-03-13');CREATE DATABASE emp_2;USE emp_2;CREATE TABLE employees_1 ( emp_no INT NOT NULL, birth_date DATE NOT NULL, first_name VARCHAR(14) NOT NULL, last_name VARCHAR(16) NOT NULL, gender ENUM ('M','F') NOT NULL, hire_date DATE NOT NULL, PRIMARY KEY (emp_no));INSERT INTO `employees_1` VALUES (10055,'1956-06-06','Georgy','Dredge','M','1992-04-27'),(10056,'1961-09-01','Brendon','Bernini','F','1990-02-01'),(10057,'1954-05-30','Ebbe','Callaway','F','1992-01-15'),(10058,'1954-10-01','Berhard','McFarlin','M','1987-04-13'),(10059,'1953-09-19','Alejandro','McAlpine','F','1991-06-26'),(10060,'1961-10-15','Breannda','Billingsley','M','1987-11-02'),(10061,'1962-10-19','Tse','Herber','M','1985-09-17'),(10062,'1961-11-02','Anoosh','Peyn','M','1991-08-30'),(10063,'1952-08-06','Gino','Leonhardt','F','1989-04-08'),(10064,'1959-04-07','Udi','Jansch','M','1985-11-20'),(10065,'1963-04-14','Satosi','Awdeh','M','1988-05-18'),(10066,'1952-11-13','Kwee','Schusler','M','1986-02-26'),(10067,'1953-01-07','Claudi','Stavenow','M','1987-03-04'),(10068,'1962-11-26','Charlene','Brattka','M','1987-08-07'),(10069,'1960-09-06','Margareta','Bierman','F','1989-11-05'),(10070,'1955-08-20','Reuven','Garigliano','M','1985-10-14'),(10071,'1958-01-21','Hisao','Lipner','M','1987-10-01'),(10072,'1952-05-15','Hironoby','Sidou','F','1988-07-21'),(10073,'1954-02-23','Shir','McClurg','M','1991-12-01'),(10074,'1955-08-28','Mokhtar','Bernatsky','F','1990-08-13'),(10075,'1960-03-09','Gao','Dolinsky','F','1987-03-19'),(10076,'1952-06-13','Erez','Ritzmann','F','1985-07-09'),(10077,'1964-04-18','Mona','Azuma','M','1990-03-02'),(10078,'1959-12-25','Danel','Mondadori','F','1987-05-26'),(10079,'1961-10-05','Kshitij','Gils','F','1986-03-27'),(10080,'1957-12-03','Premal','Baek','M','1985-11-19'),(10081,'1960-12-17','Zhongwei','Rosen','M','1986-10-30'),(10082,'1963-09-09','Parviz','Lortz','M','1990-01-03'),(10083,'1959-07-23','Vishv','Zockler','M','1987-03-31'),(10084,'1960-05-25','Tuval','Kalloufi','M','1995-12-15');CREATE TABLE employees_2( emp_no INT NOT NULL, birth_date DATE NOT NULL, first_name VARCHAR(14) NOT NULL, last_name VARCHAR(16) NOT NULL, gender ENUM ('M','F') NOT NULL, hire_date DATE NOT NULL, PRIMARY KEY (emp_no));INSERT INTO `employees_2` VALUES (10085,'1962-11-07','Kenroku','Malabarba','M','1994-04-09'),(10086,'1962-11-19','Somnath','Foote','M','1990-02-16'),(10087,'1959-07-23','Xinglin','Eugenio','F','1986-09-08'),(10088,'1954-02-25','Jungsoon','Syrzycki','F','1988-09-02'),(10089,'1963-03-21','Sudharsan','Flasterstein','F','1986-08-12'),(10090,'1961-05-30','Kendra','Hofting','M','1986-03-14'),(10091,'1955-10-04','Amabile','Gomatam','M','1992-11-18'),(10092,'1964-10-18','Valdiodio','Niizuma','F','1989-09-22'),(10093,'1964-06-11','Sailaja','Desikan','M','1996-11-05'),(10094,'1957-05-25','Arumugam','Ossenbruggen','F','1987-04-18'),(10095,'1965-01-03','Hilari','Morton','M','1986-07-15'),(10096,'1954-09-16','Jayson','Mandell','M','1990-01-14'),(10097,'1952-02-27','Remzi','Waschkowski','M','1990-09-15'),(10098,'1961-09-23','Sreekrishna','Servieres','F','1985-05-13'),(10099,'1956-05-25','Valter','Sullins','F','1988-10-18'),(10100,'1953-04-21','Hironobu','Haraldson','F','1987-09-21'),(10101,'1952-04-15','Perla','Heyers','F','1992-12-28'),(10102,'1959-11-04','Paraskevi','Luby','F','1994-01-26'),(10103,'1953-11-26','Akemi','Birch','M','1986-12-02'),(10104,'1961-11-19','Xinyu','Warwick','M','1987-04-16'),(10105,'1962-02-05','Hironoby','Piveteau','M','1999-03-23'),(10106,'1952-08-29','Eben','Aingworth','M','1990-12-19'),(10107,'1956-06-13','Dung','Baca','F','1994-03-22'),(10108,'1952-04-07','Lunjin','Giveon','M','1986-10-02'),(10109,'1958-11-25','Mariusz','Prampolini','F','1993-06-16'),(10110,'1957-03-07','Xuejia','Ullian','F','1986-08-22'),(10111,'1963-08-29','Hugo','Rosis','F','1988-06-19'),(10112,'1963-08-13','Yuichiro','Swick','F','1985-10-08'),(10113,'1963-11-13','Jaewon','Syrzycki','M','1989-12-24'),(10114,'1957-02-16','Munir','Demeyer','F','1992-07-17'),(10115,'1964-12-25','Chikara','Rissland','M','1986-01-23'),(10116,'1955-08-26','Dayanand','Czap','F','1985-05-28'); - 这时在宿主机下已经产生了日志文件-

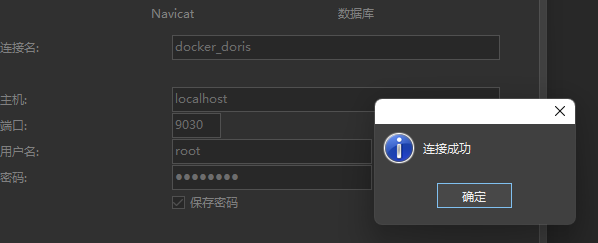

Docker 部署 Doris

docker pull ashuang123/doris-fe:11.111

docker pull ashuang123/doris-be:11.111

创建挂载的文件夹

mkdir -p /opt/doris/doris-meta

mkdir -p /opt/doris/log/doris-fe

mkdir -p /opt/doris/doris-storage

mkdir -p /opt/doris/log/doris-be

运行Fe

docker run --name doris-fe -p 8030:8030 -p 9030:9030 -d -v /opt/doris/doris-meta:/doris-meta -v /opt/doris/log/doris-fe:/code/log ashuang123/doris-fe:11.111

运行Be

docker run --name doris-be -p 8040:8040 -v /opt/doris/doris-storage:/doris-storage -v /opt/doris/log/doris-be:/code/log -d ashuang123/doris-be:11.111

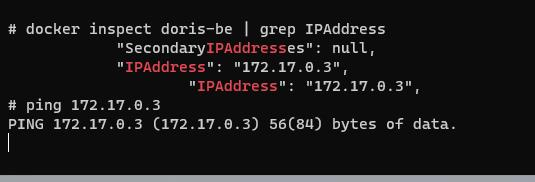

查看容器IP

DESKTOP-RBQ4DFJ :: /opt/doris » docker inspect doris-fe |grep IPAddress

"SecondaryIPAddresses": null,

"IPAddress":"172.17.0.2",

"IPAddress":"172.17.0.2",

DESKTOP-RBQ4DFJ :: /opt/doris »

DESKTOP-RBQ4DFJ :: /opt/doris » docker inspect doris-be |grep IPAddress

"SecondaryIPAddresses": null,

"IPAddress":"172.17.0.3",

"IPAddress":"172.17.0.3",

DESKTOP-RBQ4DFJ :: /opt/doris »

测试服务是否正常

PS C:\Users\Lenovo> mysql -h 172.17.0.2 -P 9030 -uroot

Welcome to the MySQL monitor. Commands end with ; or \g.

Your MySQL connection id is 0

Server version: 5.7.37 Doris version trunk-Unknown

Copyright (c)2000, 2020, Oracle and/or its affiliates. All rights reserved.

Oracle is a registered trademark of Oracle Corporation and/or its

affiliates. Other names may be trademarks of their respective

owners.

Type 'help;' or '\h'for help. Type '\c' to clear the current input statement.

mysql>

添加BE至FE中

mysql> alter system add backend "172.17.0.3:9050";

Query OK, 0 rows affected (0.05 sec)

mysql>

查看Be状态

mysql> show proc '/backends' \G

*************************** 1. row ***************************

BackendId: 10002

Cluster: default_cluster

IP: 172.17.0.3

HostName: 172.17.0.3

HeartbeatPort: 9050

BePort: 9060

HttpPort: 8040

BrpcPort: 8060

LastStartTime: 2022-09-02 04:27:22

LastHeartbeat: 2022-09-02 04:31:49

Alive: true

SystemDecommissioned: false

ClusterDecommissioned: false

TabletNum: 0

DataUsedCapacity: 0.000

AvailCapacity: 230.809 GB

TotalCapacity: 250.982 GB

UsedPct: 8.04 %

MaxDiskUsedPct: 8.04 %

Tag: {"location" : "default"}

ErrMsg:

Version: trunk-Unknown

Status: {"lastSuccessReportTabletsTime":"2022-09-02 04:31:28","lastStreamLoadTime":-1,"isQueryDisabled":false,"isLoadDisabled":false}

1 row in set (13.09 sec)

mysql>

修改数据库root密码

mysql> set PASSWORD FOR 'root' = PASSWORD('ofbiz@123');

Query OK, 0 rows affected (0.02 sec)

mysql>

Flink安装配置

**准备Flink安装包 **

flink-1.14.4-bin-scala_2.12.tgz

tar -zxvf flink-1.14.4-bin-scala_2.12.tgz -C .

准备两个Jar放到Flink/lib目录下

wget https://jiafeng-1308700295.cos.ap-hongkong.myqcloud.com/flink-doris-connector-1.14_2.12-1.0.0-SNAPSHOT.jar

wget https://repo1.maven.org/maven2/com/ververica/flink-sql-connector-mysql-cdc/2.2.1/flink-sql-connector-mysql-cdc-2.2.1.jar

启动Flink

bin/start-cluster.sh

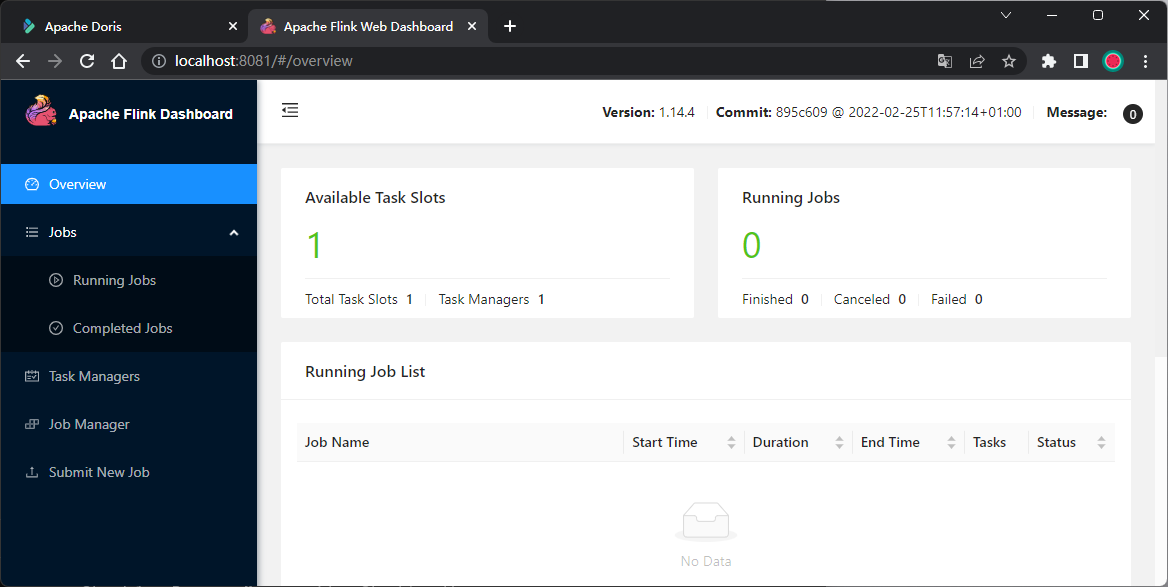

进入页面

同步数据到Doris

创建Doris数据库及表

createdatabase demo;use demo;CREATETABLE all_employees_info (

emp_no intNOTNULL,

birth_date date,

first_name varchar(20),

last_name varchar(20),

gender char(2),

hire_date date,

database_name varchar(50),

table_name varchar(200))UNIQUEKEY(`emp_no`,`birth_date`)DISTRIBUTEDBYHASH(`birth_date`) BUCKETS 1

PROPERTIES ("replication_allocation"="tag.location.default: 1");

进入Flink SQL Client

# bin/sql-client.sh embedded

Command history file path: /root/.flink-sql-history

▒▓██▓██▒

▓████▒▒█▓▒▓███▓▒

▓███▓░░ ▒▒▒▓██▒ ▒

░██▒ ▒▒▓▓█▓▓▒░ ▒████

██▒ ░▒▓███▒ ▒█▒█▒

░▓█ ███ ▓░▒██

▓█ ▒▒▒▒▒▓██▓░▒░▓▓█

█░ █ ▒▒░ ███▓▓█ ▒█▒▒▒

████░ ▒▓█▓ ██▒▒▒ ▓███▒

░▒█▓▓██ ▓█▒ ▓█▒▓██▓ ░█░

▓░▒▓████▒ ██ ▒█ █▓░▒█▒░▒█▒

███▓░██▓ ▓█ █ █▓ ▒▓█▓▓█▒

░██▓ ░█░ █ █▒ ▒█████▓▒ ██▓░▒

███░ ░ █░ ▓ ░█ █████▒░░ ░█░▓ ▓░

██▓█ ▒▒▓▒ ▓███████▓░ ▒█▒ ▒▓ ▓██▓

▒██▓ ▓█ █▓█ ░▒█████▓▓▒░ ██▒▒ █ ▒ ▓█▒

▓█▓ ▓█ ██▓ ░▓▓▓▓▓▓▓▒ ▒██▓ ░█▒

▓█ █ ▓███▓▒░ ░▓▓▓███▓ ░▒░ ▓█

██▓ ██▒ ░▒▓▓███▓▓▓▓▓██████▓▒ ▓███ █

▓███▒ ███ ░▓▓▒░░ ░▓████▓░ ░▒▓▒ █▓

█▓▒▒▓▓██ ░▒▒░░░▒▒▒▒▓██▓░ █▓

██ ▓░▒█ ▓▓▓▓▒░░ ▒█▓ ▒▓▓██▓ ▓▒ ▒▒▓

▓█▓ ▓▒█ █▓░ ░▒▓▓██▒ ░▓█▒ ▒▒▒░▒▒▓█████▒

██░ ▓█▒█▒ ▒▓▓▒ ▓█ █░ ░░░░ ░█▒

▓█ ▒█▓ ░ █░ ▒█ █▓

█▓ ██ █░ ▓▓ ▒█▓▓▓▒█░

█▓ ░▓██░ ▓▒ ▓█▓▒░░░▒▓█░ ▒█

██ ▓█▓░ ▒ ░▒█▒██▒ ▓▓

▓█▒ ▒█▓▒░ ▒▒ █▒█▓▒▒░░▒██

░██▒ ▒▓▓▒ ▓██▓▒█▒ ░▓▓▓▓▒█▓

░▓██▒ ▓░ ▒█▓█ ░░▒▒▒

▒▓▓▓▓▓▒▒▒▒▒▒▒▒▒▒▒▒▒▒▒▒▒▒▒▒▒▒▒░░▓▓ ▓░▒█░

______ _ _ _ _____ ____ _ _____ _ _ _ BETA

| ____| (_) | | / ____|/ __ \| | / ____| (_) | |

| |__ | |_ _ __ | | __ | (___ | | | | | | | | |_ ___ _ __ | |_

| __| | | | '_ \| |/ / \___ \| | | | | | | | | |/ _ \ '_ \| __|

| | | | | | | | < ____) | |__| | |____ | |____| | | __/ | | | |_

|_| |_|_|_| |_|_|\_\ |_____/ \___\_\______| \_____|_|_|\___|_| |_|\__|

Welcome! Enter 'HELP;' to list all available commands. 'QUIT;' to exit.

Flink SQL>

开启 checkpoint,每隔10秒做一次 checkpoint , Checkpoint 默认是不开启的,我们需要开启 Checkpoint 来让 Iceberg 可以提交事务。Source在启动时会扫描全表,将表按照主键分成多个chunk。并使用增量快照算法逐个读取每个chunk的数据。作业会周期性执行Checkpoint,记录下已经完成的chunk。当发生Failover时,只需要继续读取未完成的chunk。当chunk全部读取完后,会从之前获取的Binlog位点读取增量的变更记录。Flink作业会继续周期性执行Checkpoint,记录下Binlog位点,当作业发生Failover,便会从之前记录的Binlog位点继续处理,从而实现Exactly Once语义

注意: 这里是演示,生产环境建议checkpoint间隔60秒

SET execution.checkpointing.interval = 10s;

创建MySQL CDC表

在Flink SQL Client 下执行下面的 SQL

CREATETABLE employees_source (

database_name STRING METADATA VIRTUAL,

table_name STRING METADATA VIRTUAL,

emp_no intNOTNULL,

birth_date date,

first_name STRING,

last_name STRING,

gender STRING,

hire_date date,PRIMARYKEY(`emp_no`)NOT ENFORCED

)WITH('connector'='mysql-cdc','hostname'='localhost','port'='3307','username'='root','password'='root','database-name'='emp_[0-9]+','table-name'='employees_[0-9]+');

- ‘database-name’ = ‘emp_[0-9]+’: 这里是使用了正则表达式,同时连接多个库

- ‘table-name’ = ‘employees_[0-9]+’:这里是使用了正则表达式,同时连接多个表

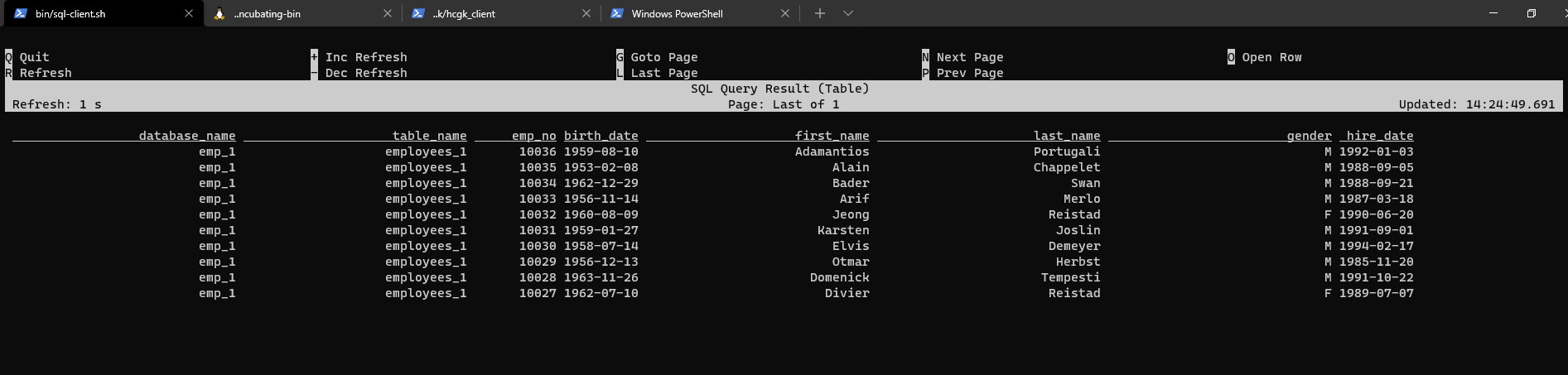

查询CDC表

select*from employees_source limit10;

创建 Doris Sink 表

CREATE TABLE cdc_doris_sink (

emp_no int ,

birth_date STRING,

first_name STRING,

last_name STRING,

gender STRING,

hire_date STRING,

database_name STRING,

table_name STRING

)

WITH (

'connector' = 'doris',

'fenodes' = '172.17.0.3:8030',

'table.identifier' = 'demo.all_employees_info',

'username' = 'root',

'password' = 'mysql密码',

'sink.properties.two_phase_commit'='true',

'sink.label-prefix'='doris_demo_emp_001'

);

参数说明:

- connector : 指定连接器是doris

- fenodes:doris FE节点IP地址及http port

- table.identifier : Doris对应的数据库及表名

- username:doris用户名

- password:doris用户密码

- sink.properties.two_phase_commit:指定使用两阶段提交,这样在stream load的时候,会在http header里加上

two_phase_commit:true,不然会失败- sink.label-prefix : 这个是在两阶段提交的时候必须要加的一个参数,才能保证两端数据一致性,否则会失败

- 其他参数参考官方文档 https://doris.apache.org/zh-CN/

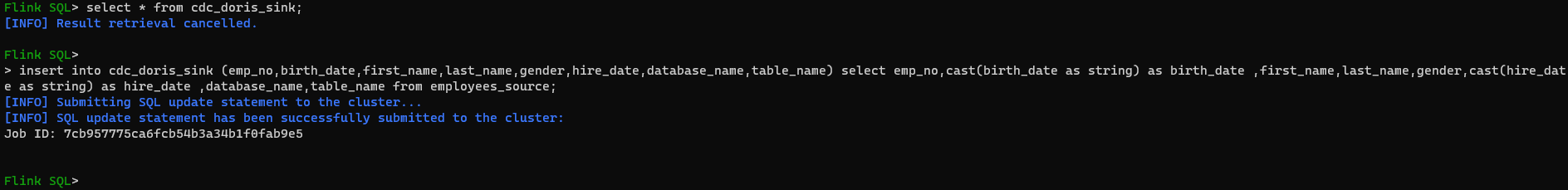

查询Doris sink表 这时候还没有数据

select*from cdc_doris_sink;

将数据插入到Doris表里

insertinto cdc_doris_sink (emp_no,birth_date,first_name,last_name,gender,hire_date,database_name,table_name)select emp_no,cast(birth_date as string)as birth_date ,first_name,last_name,gender,cast(hire_date as string)as hire_date ,database_name,table_name from employees_source;

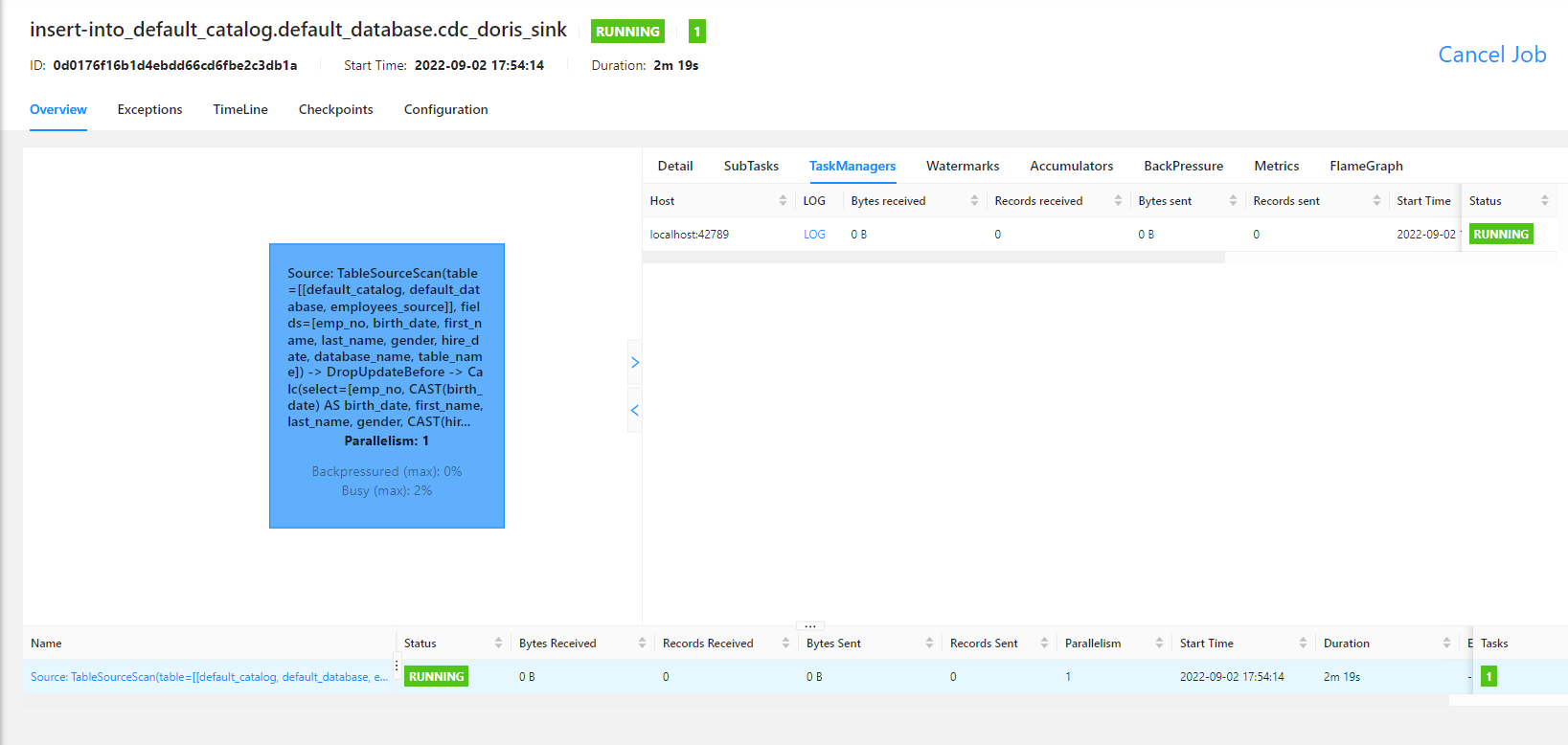

可以看到Flink WEB UI上的任务运行信息

查看Doris数据

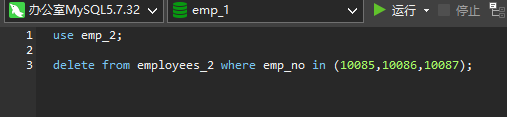

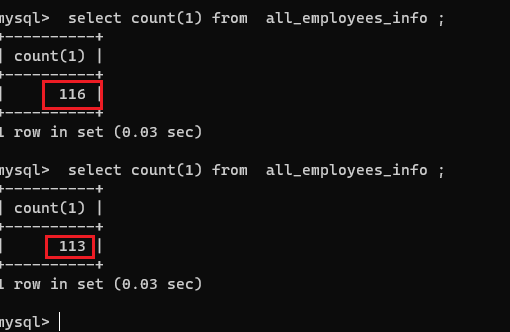

测试MySQL删除

Doris同步更新

验证Doris数据删除 , mysql更新数据, doris中也会更新

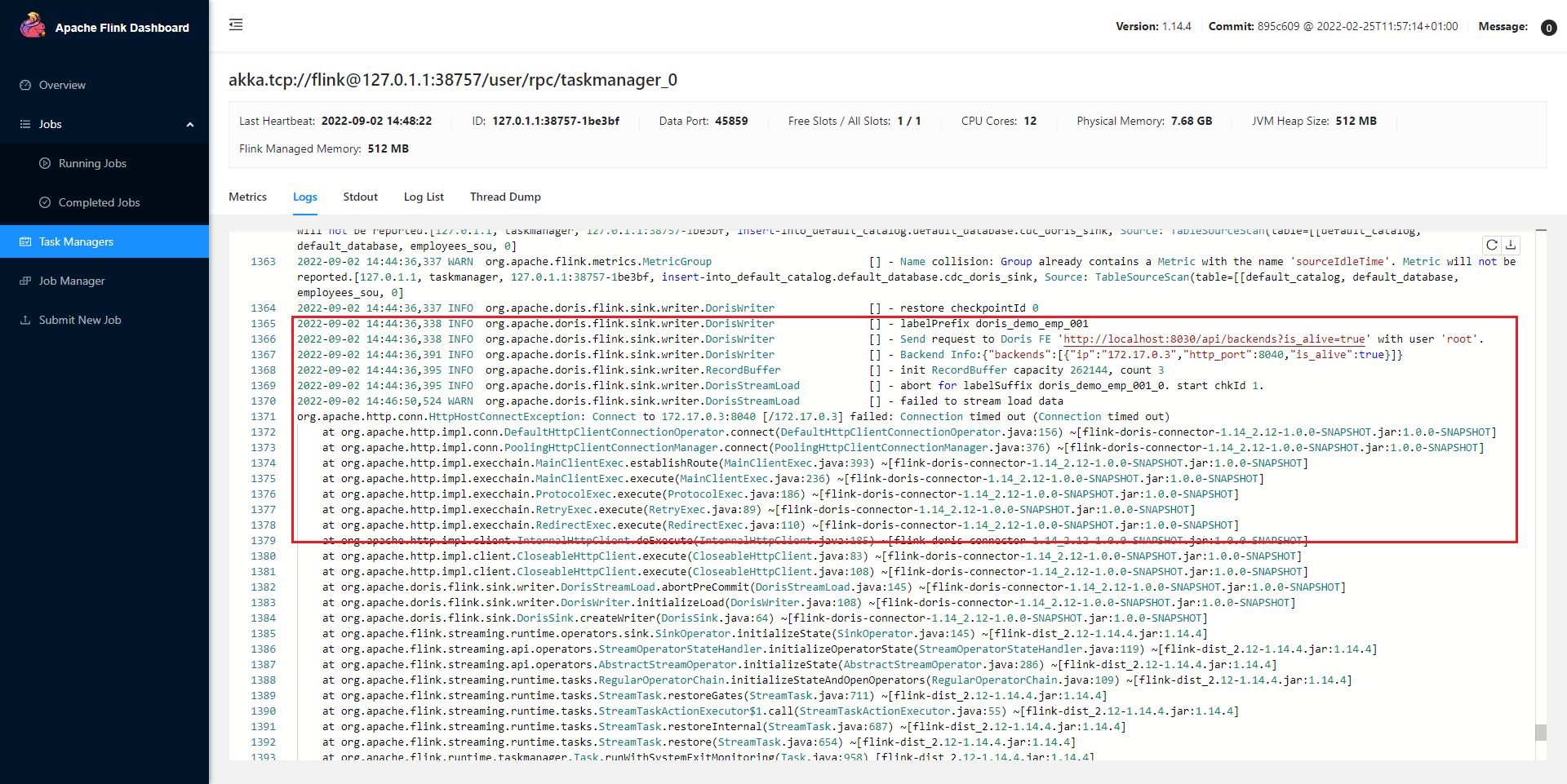

踩坑

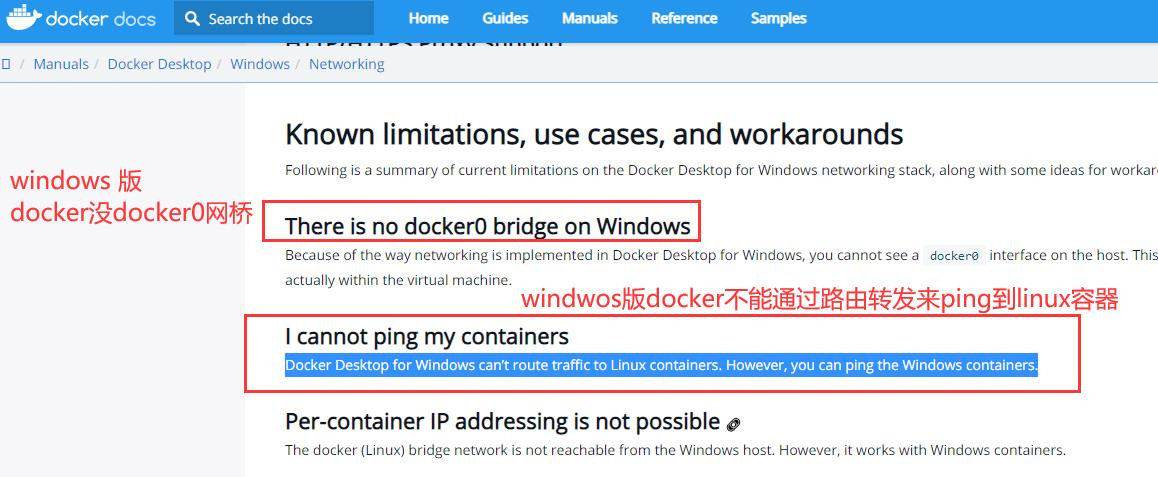

- Flink 连接 Doris Be 超时-

- 原因 : flink和be的网不通 , 因为我这里是WSL , 还没找到WSL的方案, 我暂时是放到了Linux系统上-

- 原因 : flink和be的网不通 , 因为我这里是WSL , 还没找到WSL的方案, 我暂时是放到了Linux系统上-  -

-

版权归原作者 何以问_ 所有, 如有侵权,请联系我们删除。