Deploying ELKF + Kafka

Basics

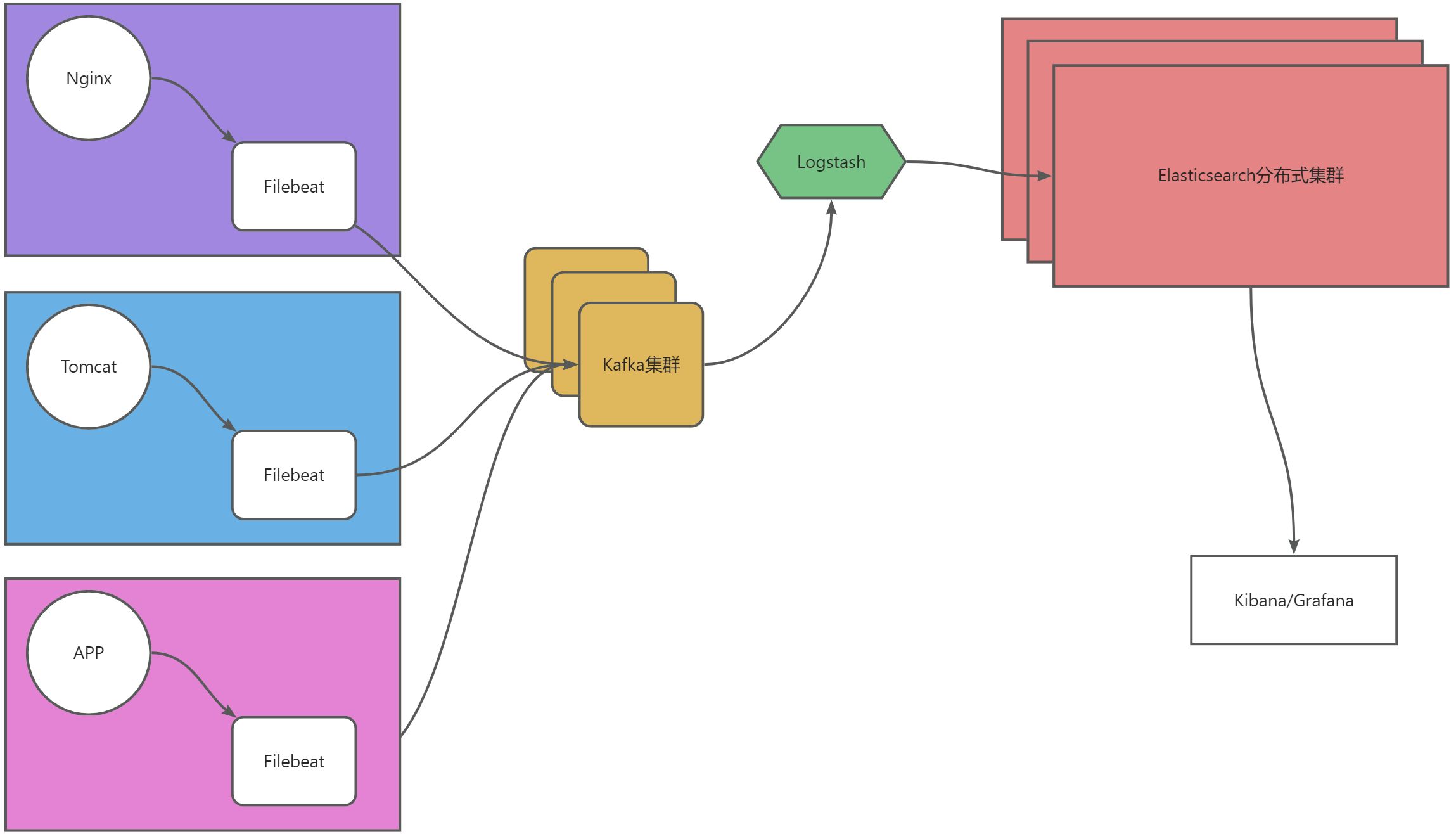

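System architecture

Host planning

| Host IP | Hostname | CPU/MEM | Role |
|---|---|---|---|
| 192.168.23.141 | elk-node-01 | 4C/8G | JDK + ZooKeeper + Kafka; Elasticsearch + Filebeat + Nginx |
| 192.168.23.142 | elk-node-02 | 4C/8G | JDK + ZooKeeper + Kafka; Elasticsearch + Filebeat + Tomcat |
| 192.168.23.143 | elk-node-03 | 4C/8G | JDK + ZooKeeper + Kafka; Elasticsearch + Logstash + Kibana |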

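Base configuration

Run the following on all three nodes.

- Set the hostname

Run the matching command on each node:

```shell
# Run only the line for the current host
hostnamectl set-hostname elk-node-01   # on 192.168.23.141
hostnamectl set-hostname elk-node-02   # on 192.168.23.142
hostnamectl set-hostname elk-node-03   # on 192.168.23.143
```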

- Configure /etc/hosts

```shell
cat >> /etc/hosts <<EOF
# for zookeeper
192.168.23.141 elk-node-01
192.168.23.142 elk-node-02
192.168.23.143 elk-node-03
EOF
```
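Appending with `cat >>` adds the same entries again every time the step is re-run. Below is a minimal sketch of an idempotent alternative. It assumes bash and the three host entries above, and the function name `add_cluster_hosts` is hypothetical:

```shell
#!/usr/bin/env bash
# Hypothetical helper: add each cluster host entry to a hosts file only if that exact
# line is not there yet, so re-running the setup does not create duplicates.
add_cluster_hosts() {
  local hosts_file="${1:-/etc/hosts}"   # target file, /etc/hosts by default
  local entry
  for entry in "192.168.23.141 elk-node-01" \
               "192.168.23.142 elk-node-02" \
               "192.168.23.143 elk-node-03"; do
    # -q: quiet, -x: whole-line match, -F: fixed string (dots are not regex wildcards)
    if ! grep -qxF "$entry" "$hosts_file"; then
      echo "$entry" >> "$hosts_file"
    fi
  done
}
# Example (as root): add_cluster_hosts && getent hosts elk-node-02
```

After running it, `getent hosts elk-node-02` should resolve to 192.168.23.142 through /etc/hosts.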

- Disable the firewall

```shell
# Run on all nodes (alternatively, keep firewalld running and add rules for the ports used below)
systemctl stop firewalld
systemctl disable firewalld
```

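- Disable SELinux

```shell
# Run on all nodes
setenforce 0   # switch to permissive mode for the current boot
sed -i "s/SELINUX=enforcing/SELINUX=disabled/g" /etc/selinux/config   # takes effect after a reboot
```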

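- Disable swap

```shell
swapoff -a && sysctl -w vm.swappiness=0
# Comment out swap entries in /etc/fstab so swap stays off after a reboot
sed -ri '/^[^#]*swap/s@^@#@' /etc/fstab
```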

- Configure yum repositories

```shell
# Run on all nodes
wget -O /etc/yum.repos.d/Centos-7.repo http://mirrors.aliyun.com/repo/Centos-7.repo
wget -O /etc/yum.repos.d/epel.repo http://mirrors.aliyun.com/repo/epel-7.repo
yum clean all
yum makecache
```

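- Tune the sshd service

```shell
# Run on all nodes: skip reverse DNS and GSSAPI lookups to speed up SSH logins
sed -ri 's/^#UseDNS yes/UseDNS no/g' /etc/ssh/sshd_config
sed -ri 's/^GSSAPIAuthentication yes/GSSAPIAuthentication no/g' /etc/ssh/sshd_config
# Restart sshd so the changes take effect
systemctl restart sshd
```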

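- Synchronize cluster time

```shell
# Run on all nodes
yum -y install ntpdate chrony
# Comment out the default NTP servers and use Alibaba Cloud's NTP server instead
sed -ri 's/^server/#server/g' /etc/chrony.conf
echo "server ntp.aliyun.com iburst" >> /etc/chrony.conf
# restart (not just start) so the new configuration is loaded if chronyd was already running
systemctl restart chronyd
systemctl enable chronyd
# Show detailed status of the NTP sources chronyd is using
chronyc sourcestats -v
```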

- Raise resource limits

```shell
# Applies to new login sessions
cat >> /etc/security/limits.conf <<EOF
# change for kafka
* soft nofile 65536
* hard nofile 131072
* soft nproc 65535
* hard nproc 655350
* soft memlock unlimited
* hard memlock unlimited
EOF
```

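- Raise limits: memory map count

```shell
# Elasticsearch requires vm.max_map_count >= 262144; sysctl -w does not persist across reboots
sysctl -w vm.max_map_count=262144
```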
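`sysctl -w` only changes the running kernel, so the value is lost on reboot. Below is a minimal sketch that persists it in a sysctl drop-in file. The file name `99-elk.conf` and the function name are assumptions, not part of the original setup:

```shell
#!/usr/bin/env bash
# Hypothetical helper: write the kernel settings used in this guide to a sysctl drop-in
# file so they are re-applied at boot. The file name 99-elk.conf is an assumption.
write_elk_sysctl() {
  local target="${1:-/etc/sysctl.d/99-elk.conf}"
  cat > "$target" <<'EOF'
# Required by Elasticsearch's bootstrap checks
vm.max_map_count = 262144
# Avoid swapping (swap is also disabled in /etc/fstab)
vm.swappiness = 0
EOF
}
# Example (as root): write_elk_sysctl && sysctl --system
```

`sysctl --system` reloads all drop-in files immediately, so no reboot is needed to apply them.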

Kafka

Install services

Install on all three nodes.

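- JDK

- Download the JDK

Downloading the JDK from Oracle requires logging in every time, so a JDK 1.8 package (jdk-8u401-linux-x64.tar.gz) was uploaded to the nodes instead.

- Extract the JDK

```shell
tar -zxvf jdk-8u401-linux-x64.tar.gz -C /usr/local/
```

- Environment variables

```shell
cat >> /etc/profile <<'EOF'
# for jdk
export HISTTIMEFORMAT="%F %T "
export JAVA_HOME=/usr/local/jdk1.8.0_401/
export PATH=$JAVA_HOME/bin:$PATH
export CLASSPATH=.:$JAVA_HOME/lib/dt.jar:$JAVA_HOME/lib/tools.jar
EOF
source /etc/profile
```

- Check the version

```shell
java -version
```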

- ZooKeeper

- Download ZooKeeper

```shell
wget https://archive.apache.org/dist/zookeeper/zookeeper-3.8.3/apache-zookeeper-3.8.3-bin.tar.gz
```

- Extract ZooKeeper

```shell
tar -zxvf apache-zookeeper-3.8.3-bin.tar.gz -C /usr/local/
```

- Environment variables

```shell
cat >> /etc/profile <<'EOF'
# for zookeeper
export ZOOKEEPER_HOME=/usr/local/apache-zookeeper-3.8.3-bin
export PATH=$ZOOKEEPER_HOME/bin:$JAVA_HOME/bin:$PATH
EOF
source /etc/profile
```

- Check the version

```shell
zkServer.sh version
```

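- Kafka

- Download Kafka

```shell
# downloads.apache.org only keeps current releases; if this link is gone, use
# https://archive.apache.org/dist/kafka/3.7.0/kafka_2.12-3.7.0.tgz
wget https://downloads.apache.org/kafka/3.7.0/kafka_2.12-3.7.0.tgz
```

- Extract Kafka

```shell
tar -zxvf kafka_2.12-3.7.0.tgz -C /usr/local/
```

- Environment variables

```shell
cat >> /etc/profile <<'EOF'
# for kafka
export KAFKA_HOME=/usr/local/kafka_2.12-3.7.0
export PATH=$KAFKA_HOME/bin:$JAVA_HOME/bin:$PATH
EOF
source /etc/profile
```

- Check the version

```shell
kafka-server-start.sh --version
```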

Configure services

Run on all three nodes.

- ZooKeeper

- Create directories

```shell
mkdir -p /usr/local/apache-zookeeper-3.8.3-bin/{logs,data}
```

- Edit the configuration

```shell
cat > /usr/local/apache-zookeeper-3.8.3-bin/conf/zoo.cfg <<EOF
tickTime=2000
initLimit=10
syncLimit=5
dataDir=/usr/local/apache-zookeeper-3.8.3-bin/data
dataLogDir=/usr/local/apache-zookeeper-3.8.3-bin/logs
clientPort=2181
# move the AdminServer off its default port 8080
admin.serverPort=8888
# Cluster members: server.<myid>=<host>:<quorum port>:<leader election port>
server.141=elk-node-01:2888:3888
server.142=elk-node-02:2888:3888
server.143=elk-node-03:2888:3888
EOF
```

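- Write myid

Run the matching command on each node. The value must equal the N of that host's server.N line in zoo.cfg:

```shell
# elk-node-01
echo "141" > /usr/local/apache-zookeeper-3.8.3-bin/data/myid
# elk-node-02
echo "142" > /usr/local/apache-zookeeper-3.8.3-bin/data/myid
# elk-node-03
echo "143" > /usr/local/apache-zookeeper-3.8.3-bin/data/myid
```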
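In this guide each ZooKeeper myid, and later each Kafka broker.id, equals the last octet of the node's IP address. Below is a sketch that derives that value instead of typing it on each node. It assumes /etc/hosts resolves the hostname to its 192.168.23.x address as configured earlier, and `node_id_from_ip` is a hypothetical helper name:

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the last octet of an IPv4 address,
# e.g. 192.168.23.141 -> 141 (this guide's myid / broker.id convention).
node_id_from_ip() {
  local ip="$1"
  local id="${ip##*.}"              # drop everything up to and including the last dot
  case "$id" in
    ''|*[!0-9]*)                    # empty or non-numeric: not an IPv4 address
      echo "invalid IPv4 address: $ip" >&2
      return 1 ;;
  esac
  echo "$id"
}
# Example, on each node:
#   ip=$(getent hosts "$(hostname)" | awk '{print $1}')
#   node_id_from_ip "$ip" > /usr/local/apache-zookeeper-3.8.3-bin/data/myid
```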

- Kafka

- Create directories

```shell
mkdir -p /usr/local/kafka_2.12-3.7.0/data
```

- Edit the configuration

```shell
cat > /usr/local/kafka_2.12-3.7.0/config/server.properties <<EOF
# broker.id must be unique within the cluster: every node needs a different value (set per node below)
broker.id=0
num.network.threads=3
num.io.threads=8
socket.send.buffer.bytes=102400
socket.receive.buffer.bytes=102400
socket.request.max.bytes=104857600
# Port settings: listeners is not set, so the broker uses its default PLAINTEXT listener on port 9092
# Data storage path
log.dirs=/usr/local/kafka_2.12-3.7.0/data
num.partitions=1
num.recovery.threads.per.data.dir=1
offsets.topic.replication.factor=1
transaction.state.log.replication.factor=1
transaction.state.log.min.isr=1
log.retention.hours=168
log.retention.check.interval.ms=300000
# ZooKeeper connection for the cluster (the /kafka chroot keeps Kafka's znodes under /kafka)
zookeeper.connect=elk-node-01:2181,elk-node-02:2181,elk-node-03:2181/kafka
zookeeper.connection.timeout.ms=18000
group.initial.rebalance.delay.ms=0
EOF
```

- Set broker.id

Run the matching command on each node:

```shell
# elk-node-01
sed -i 's/broker.id=0/broker.id=141/g' /usr/local/kafka_2.12-3.7.0/config/server.properties
# elk-node-02
sed -i 's/broker.id=0/broker.id=142/g' /usr/local/kafka_2.12-3.7.0/config/server.properties
# elk-node-03
sed -i 's/broker.id=0/broker.id=143/g' /usr/local/kafka_2.12-3.7.0/config/server.properties
```
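The `s/broker.id=0/.../` substitution only works while the file still contains the placeholder `broker.id=0`. Below is a minimal sketch of a setter that can be re-run safely. It assumes GNU sed (as on CentOS 7), and `set_broker_id` is a hypothetical name:

```shell
#!/usr/bin/env bash
# Hypothetical helper: set broker.id in a server.properties file. The pattern is anchored
# to the whole line, so running it again (or with a new id) replaces the value cleanly.
set_broker_id() {
  local file="$1" id="$2"
  case "$id" in
    ''|*[!0-9]*) echo "broker.id must be a non-negative integer: $id" >&2; return 1 ;;
  esac
  sed -i "s/^broker\.id=.*/broker.id=${id}/" "$file"
  # Succeed only if exactly that line is now present
  grep -qx "broker\.id=${id}" "$file"
}
# Example: set_broker_id /usr/local/kafka_2.12-3.7.0/config/server.properties 141
```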

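Start services

Run on all three nodes. Start ZooKeeper on all three nodes first, then Kafka.

- ZooKeeper

```shell
# Start the service
zkServer.sh start
```

- Kafka

```shell
# Start the service in the background
kafka-server-start.sh -daemon /usr/local/kafka_2.12-3.7.0/config/server.properties
```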

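Check services

Run on all three nodes.

- ZooKeeper

- Check the ZooKeeper cluster status

```shell
# One node should report "Mode: leader", the other two "Mode: follower"
zkServer.sh status
```

- Check the ZooKeeper ports

```shell
# Expect 2181 (clients), 3888 (leader election), 8888 (AdminServer); 2888 listens only on the leader
ss -ntl
```

- Check the ZooKeeper process

```shell
# Expect org.apache.zookeeper.server.quorum.QuorumPeerMain
jps -l
```

- Kafka

- Check the Kafka ports

```shell
# Expect 9092
ss -ntl
```

- Check the Kafka process

```shell
# Expect kafka.Kafka
jps -l
```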
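To check the ensemble at a glance, the role can be pulled out of the `zkServer.sh status` output on each node. Below is a minimal sketch with a hypothetical `zk_mode` helper. It assumes the status output contains a `Mode: ...` line as described above:

```shell
#!/usr/bin/env bash
# Hypothetical helper: read `zkServer.sh status` output on stdin and print the node's role
# (leader, follower or standalone) from its "Mode:" line.
zk_mode() {
  sed -n 's/^Mode: //p'
}
# Example, on each node: zkServer.sh status 2>&1 | zk_mode
# A healthy 3-node ensemble has exactly one leader and two followers.
```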

ELKF

Install services

- Elasticsearch

Run on all three nodes.

- Create the user

```shell
useradd elasticstack
```

- Download the package

```shell
wget https://artifacts.elastic.co/downloads/elasticsearch/elasticsearch-7.17.22-linux-x86_64.tar.gz
```

- Install Elasticsearch

```shell
tar -zxvf elasticsearch-7.17.22-linux-x86_64.tar.gz -C /usr/local/
mkdir -p /usr/local/elasticsearch-7.17.22/{data,logs}
chown -R elasticstack:elasticstack /usr/local/elasticsearch-7.17.22/
```

- Environment variables

```shell
cat >> /etc/profile <<'EOF'
# for elasticsearch
export ELASTICSEARCH_HOME=/usr/local/elasticsearch-7.17.22
export PATH=$ELASTICSEARCH_HOME/bin:$PATH
EOF
source /etc/profile
```

- Logstash

Install on elk-node-03.

- Download the package

```shell
wget https://artifacts.elastic.co/downloads/logstash/logstash-7.17.22-linux-x86_64.tar.gz
```

- Install Logstash

```shell
tar -zxvf logstash-7.17.22-linux-x86_64.tar.gz -C /usr/local/
mkdir -p /usr/local/logstash-7.17.22/{data,logs}
chown -R elasticstack:elasticstack /usr/local/logstash-7.17.22/
```

- Environment variables

```shell
cat >> /etc/profile <<'EOF'
# for logstash
export LOGSTASH_HOME=/usr/local/logstash-7.17.22
export PATH=$LOGSTASH_HOME/bin:$PATH
EOF
source /etc/profile
```

- Kibana

Install on elk-node-03.

- Download the package

```shell
wget https://artifacts.elastic.co/downloads/kibana/kibana-7.17.22-linux-x86_64.tar.gz
```

- Install Kibana

```shell
tar -zxvf kibana-7.17.22-linux-x86_64.tar.gz -C /usr/local/
mkdir -p /usr/local/kibana-7.17.22-linux-x86_64/{data,logs}
chown -R elasticstack:elasticstack /usr/local/kibana-7.17.22-linux-x86_64/
```

- Environment variables

```shell
cat >> /etc/profile <<'EOF'
# for kibana
export KIBANA_HOME=/usr/local/kibana-7.17.22-linux-x86_64
export PATH=$KIBANA_HOME/bin:$PATH
EOF
source /etc/profile
```

- Filebeat

Install on elk-node-01 and elk-node-02.

- Download the package

```shell
wget https://artifacts.elastic.co/downloads/beats/filebeat/filebeat-7.17.22-linux-x86_64.tar.gz
```

- Install Filebeat

```shell
tar -zxvf filebeat-7.17.22-linux-x86_64.tar.gz -C /usr/local/
chown -R elasticstack:elasticstack /usr/local/filebeat-7.17.22-linux-x86_64/
```

- Environment variables

```shell
cat >> /etc/profile <<'EOF'
# for filebeat (the filebeat binary sits in the top-level directory, not in bin/)
export FILEBEAT_HOME=/usr/local/filebeat-7.17.22-linux-x86_64
export PATH=$FILEBEAT_HOME:$PATH
EOF
source /etc/profile
```

Configure services

- Elasticsearch

- Edit the configuration file

Run on all three nodes.

```shell
# Back up the default configuration first
cp /usr/local/elasticsearch-7.17.22/config/elasticsearch.yml /usr/local/elasticsearch-7.17.22/config/elasticsearch.yml_`date +%F`
cat > /usr/local/elasticsearch-7.17.22/config/elasticsearch.yml <<EOF
cluster.name: ELK-learning
# node.name and network.host are placeholders, replaced per node in the next step
node.name: elk-node
path.data: /usr/local/elasticsearch-7.17.22/data
path.logs: /usr/local/elasticsearch-7.17.22/logs
network.host: 192.168.23.1
http.port: 9200
discovery.seed_hosts: ["192.168.23.141", "192.168.23.142", "192.168.23.143"]
cluster.initial_master_nodes: ["192.168.23.141", "192.168.23.142", "192.168.23.143"]
EOF
```

- Adjust the configuration file per node

Run the matching pair of commands on each node. The patterns match the whole value, so re-running them does not corrupt it. By contrast, the substitution `s/192.168.23.1/192.168.23.141/` applied a second time would turn 192.168.23.141 into 192.168.23.14141.

```shell
# elk-node-01
sed -i 's/^node.name: .*/node.name: elk-node-01/' /usr/local/elasticsearch-7.17.22/config/elasticsearch.yml
sed -i 's/^network.host: .*/network.host: 192.168.23.141/' /usr/local/elasticsearch-7.17.22/config/elasticsearch.yml
# elk-node-02
sed -i 's/^node.name: .*/node.name: elk-node-02/' /usr/local/elasticsearch-7.17.22/config/elasticsearch.yml
sed -i 's/^network.host: .*/network.host: 192.168.23.142/' /usr/local/elasticsearch-7.17.22/config/elasticsearch.yml
# elk-node-03
sed -i 's/^node.name: .*/node.name: elk-node-03/' /usr/local/elasticsearch-7.17.22/config/elasticsearch.yml
sed -i 's/^network.host: .*/network.host: 192.168.23.143/' /usr/local/elasticsearch-7.17.22/config/elasticsearch.yml
```
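Because network.host is now a non-loopback address, Elasticsearch enforces its bootstrap checks and refuses to start if they fail. Two of those checks are the ones the base configuration prepares for. Below is a minimal pre-flight sketch. `check_es_prereqs` is a hypothetical helper, and its optional arguments exist only to make it testable:

```shell
#!/usr/bin/env bash
# Hypothetical pre-flight check for two Elasticsearch bootstrap checks that the base
# configuration prepares for: vm.max_map_count >= 262144 and open files >= 65535.
# Run it as elasticstack (after `su - elasticstack`) so `ulimit -n` shows that user's limit.
check_es_prereqs() {
  local map_count_file="${1:-/proc/sys/vm/max_map_count}"
  local nofile="${2:-$(ulimit -n)}"
  local map_count rc=0
  map_count=$(cat "$map_count_file") || return 1
  if [ "$map_count" -lt 262144 ]; then
    echo "vm.max_map_count is $map_count, Elasticsearch needs at least 262144" >&2
    rc=1
  fi
  if [ "$nofile" != "unlimited" ] && [ "$nofile" -lt 65535 ]; then
    echo "open files limit is $nofile, Elasticsearch needs at least 65535" >&2
    rc=1
  fi
  return "$rc"
}
# Example: check_es_prereqs && echo "ready to start Elasticsearch"
```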

- Logstash

- Edit the configuration file

Run on elk-node-03.

```shell
# Back up the default configuration first
cp /usr/local/logstash-7.17.22/config/logstash.yml /usr/local/logstash-7.17.22/config/logstash.yml_`date +%F`
cat > /usr/local/logstash-7.17.22/config/logstash.yml <<EOF
# Node name, usually the hostname
node.name: elk-node-03
# Directory where Logstash stores plugin and other data
path.data: /usr/local/logstash-7.17.22/data
# Address the Logstash API listens on
http.host: "0.0.0.0"
# Port the Logstash API listens on: a single port or a range (default 9600-9700)
http.port: 9600
# Logstash log directory
path.logs: /usr/local/logstash-7.17.22/logs
EOF
```

- Kibana

- Edit the configuration file

Run on elk-node-03.

```shell
# Back up the default configuration first
cp /usr/local/kibana-7.17.22-linux-x86_64/config/kibana.yml /usr/local/kibana-7.17.22-linux-x86_64/config/kibana.yml_`date +%F`
cat > /usr/local/kibana-7.17.22-linux-x86_64/config/kibana.yml <<EOF
server.port: 5601
server.host: "192.168.23.143"
server.maxPayload: 1048576
server.name: "ELK-Kibana"
elasticsearch.hosts: ["http://192.168.23.141:9200", "http://192.168.23.142:9200", "http://192.168.23.143:9200"]
# Chinese UI
i18n.locale: "zh-CN"
EOF
```

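- Filebeat

Run on elk-node-01 and elk-node-02.

- Edit the configuration

```shell
# Test config: read lines from stdin and print the events to the console
cat > /usr/local/filebeat-7.17.22-linux-x86_64/filebeat-stdin-to-console.yml <<EOF
filebeat.inputs:
- type: stdin
output.console:
  pretty: true
EOF
```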

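Start and check services

- Elasticsearch

Run on all three nodes.

- Start the service

```shell
su - elasticstack
nohup elasticsearch &
```

- Check the ports

```shell
# Expect 9200 (HTTP) and 9300 (transport)
ss -ntl
```

- Verify the service

```shell
curl -iv 192.168.23.141:9200
curl -iv 192.168.23.142:9200
curl -iv 192.168.23.143:9200
```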
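Each node answering on port 9200 does not yet prove that the three nodes formed one cluster. `GET /_cluster/health` reports the overall status, which should be green with number_of_nodes 3. Below is a minimal sketch that extracts the status without jq, which this guide does not install. `es_health_status` is a hypothetical helper:

```shell
#!/usr/bin/env bash
# Hypothetical helper: read the JSON returned by GET /_cluster/health on stdin and print
# the value of "status" (green, yellow or red). grep/sed are used because jq is not
# installed anywhere in this guide.
es_health_status() {
  grep -o '"status" *: *"[a-z]*"' | sed 's/.*"\([a-z]*\)"$/\1/'
}
# Example: curl -s http://192.168.23.141:9200/_cluster/health | es_health_status
```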

- Logstash

Run on elk-node-03.

- Edit the pipeline config (for testing)

```shell
# Back up the sample pipeline first
cp /usr/local/logstash-7.17.22/config/logstash-sample.conf /usr/local/logstash-7.17.22/config/logstash-sample.conf_`date +%F`
cat > /usr/local/logstash-7.17.22/config/logstash-sample.conf <<EOF
input {
  stdin { }
}
output {
  stdout { }
}
EOF
chown elasticstack:elasticstack /usr/local/logstash-7.17.22/config/logstash-sample.conf
```

- Start the service

Type a line and Logstash prints it back as an event; press Ctrl+C to stop.

```shell
su - elasticstack
logstash -f /usr/local/logstash-7.17.22/config/logstash-sample.conf
```

- Kibana

Run on elk-node-03.

- Start the service

```shell
su - elasticstack
nohup kibana &
```

- Verify the service

```shell
curl -iv http://192.168.23.143:5601
```

- Filebeat

Run on elk-node-01 and elk-node-02.

- Edit the input config (for testing)

```shell
cat > /usr/local/filebeat-7.17.22-linux-x86_64/filebeat-stdin-to-console.yml <<EOF
filebeat.inputs:
- type: stdin
output.console:
  pretty: true
EOF
chmod go-w /usr/local/filebeat-7.17.22-linux-x86_64/filebeat-stdin-to-console.yml
chown elasticstack:elasticstack /usr/local/filebeat-7.17.22-linux-x86_64/filebeat-stdin-to-console.yml
```

- Start the service

Type a line and Filebeat prints the resulting event to the console; press Ctrl+C to stop.

```shell
su - elasticstack
filebeat -e -c /usr/local/filebeat-7.17.22-linux-x86_64/filebeat-stdin-to-console.yml
```
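Filebeat refuses to load a config file that is writable by group or others, which is why `chmod go-w` is used above. It also requires the file to be owned by root or by the user running Filebeat. Below is a sketch of the write-bit part of that check, useful before starting Filebeat. `config_perms_ok` is hypothetical, it does not check ownership, and it assumes GNU `stat`:

```shell
#!/usr/bin/env bash
# Hypothetical helper: succeed only if the file is not writable by group or others,
# the same condition Filebeat's strict permission check applies to its config files.
config_perms_ok() {
  local mode
  mode=$(stat -c '%a' "$1") || return 1   # octal permission bits, e.g. 644 (GNU stat)
  # 022 = group-write | other-write; both bits must be clear
  (( (8#$mode & 8#022) == 0 ))
}
# Example: config_perms_ok /usr/local/filebeat-7.17.22-linux-x86_64/filebeat-stdin-to-console.yml
```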

Integrating ELKF with Kafka

Kafka adjustments

- Create a topic

```shell
# Topic for testing
kafka-topics.sh --bootstrap-server "192.168.23.141:9092,192.168.23.142:9092,192.168.23.143:9092" --create --topic "test" --partitions 3 --replication-factor 3
```

- List topics

```shell
kafka-topics.sh --bootstrap-server "192.168.23.141:9092,192.168.23.142:9092,192.168.23.143:9092" --list
```
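The same broker list is repeated in every Kafka command. Below is a small sketch that builds it once. `kafka_brokers` and `BOOTSTRAP` are hypothetical names, and the helper assumes every broker uses port 9092 unless `KAFKA_PORT` is set:

```shell
#!/usr/bin/env bash
# Hypothetical helper: join broker hosts into the comma-separated list that
# --bootstrap-server expects. Assumes every broker uses port 9092 unless KAFKA_PORT is set.
kafka_brokers() {
  local port="${KAFKA_PORT:-9092}" list="" host
  for host in "$@"; do
    list="${list:+$list,}$host:$port"   # add a comma only after the first entry
  done
  echo "$list"
}
BOOTSTRAP=$(kafka_brokers 192.168.23.141 192.168.23.142 192.168.23.143)
# Examples:
#   kafka-topics.sh --bootstrap-server "$BOOTSTRAP" --describe --topic test
#   kafka-console-consumer.sh --bootstrap-server "$BOOTSTRAP" --topic test --from-beginning
```

`--describe` shows the leader and replicas of each of the three partitions. The console consumer is a quick way to see whether messages actually arrive in the topic.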

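Logstash adjustments

- Edit the pipeline file

In the Kafka input, `bootstrap_servers` takes a single comma-separated string and `topics` takes an array.

```shell
cat > /usr/local/logstash-7.17.22/config/logstash-kafka.conf <<EOF
input {
  kafka {
    bootstrap_servers => "192.168.23.141:9092,192.168.23.142:9092,192.168.23.143:9092"
    topics => ["test"]
  }
}
output {
  elasticsearch {
    hosts => ["http://192.168.23.141:9200", "http://192.168.23.142:9200", "http://192.168.23.143:9200"]
    index => "app-logs-%{+YYYY.MM.dd}"
    # user/password are only needed when Elasticsearch security is enabled
    #user => "elastic"
    #password => "changeme"
  }
}
EOF
chown elasticstack:elasticstack /usr/local/logstash-7.17.22/config/logstash-kafka.conf
```

- Start Logstash

Stop the stdin test instance from the previous step first, because two Logstash instances cannot share the same path.data directory.

```shell
su - elasticstack
nohup logstash -f /usr/local/logstash-7.17.22/config/logstash-kafka.conf &
```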
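To confirm that events reach Elasticsearch, look for the daily index. Logstash formats `%{+YYYY.MM.dd}` from the event's @timestamp, which is in UTC. On a UTC+8 host, events sent between 00:00 and 08:00 local time therefore still land in the previous day's index. Below is a minimal sketch that computes the expected name. `index_for_day` is hypothetical and assumes GNU date, as on CentOS 7:

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the index name that index => "app-logs-%{+YYYY.MM.dd}"
# yields for a given moment (default: now). The date is computed in UTC to match
# how Logstash formats @timestamp. Uses GNU date's -d option.
index_for_day() {
  date -u -d "${1:-now}" +app-logs-%Y.%m.%d
}
# Example: curl -s "http://192.168.23.141:9200/_cat/indices/$(index_for_day)?v"
```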

Filebeat adjustments (for testing)

- Edit the configuration file

```shell
cat > /usr/local/filebeat-7.17.22-linux-x86_64/filebeat-kafka.yml <<EOF
filebeat.inputs:
- type: stdin
# Output
output.kafka:
  enabled: true
  hosts: ["192.168.23.141:9092","192.168.23.142:9092","192.168.23.143:9092"]
  topic: "test"
EOF
chown elasticstack:elasticstack /usr/local/filebeat-7.17.22-linux-x86_64/filebeat-kafka.yml
```

- Start Filebeat

```shell
filebeat -e -c /usr/local/filebeat-7.17.22-linux-x86_64/filebeat-kafka.yml
```

Collecting logs with ELKF + Kafka

Kafka topics

- Create the topics

```shell
# Create the Nginx topic
kafka-topics.sh --bootstrap-server "192.168.23.141:9092,192.168.23.142:9092,192.168.23.143:9092" --create --topic "nginx" --partitions 3 --replication-factor 3
# Create the Tomcat topic
kafka-topics.sh --bootstrap-server "192.168.23.141:9092,192.168.23.142:9092,192.168.23.143:9092" --create --topic "tomcat" --partitions 3 --replication-factor 3
```

- List the topics

```shell
kafka-topics.sh --bootstrap-server "192.168.23.141:9092,192.168.23.142:9092,192.168.23.143:9092" --list
```
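When scripting these steps, the `--list` output can be checked for the topics the Filebeat configs below expect. Below is a minimal sketch with a hypothetical `missing_topics` helper:

```shell
#!/usr/bin/env bash
# Hypothetical helper: read the output of `kafka-topics.sh --list` on stdin and print
# every expected topic (given as arguments) that is not in it. Returns 1 if any is missing.
missing_topics() {
  local listed topic rc=0
  listed=$(cat)
  for topic in "$@"; do
    if ! printf '%s\n' "$listed" | grep -qxF "$topic"; then
      echo "missing topic: $topic"
      rc=1
    fi
  done
  return "$rc"
}
# Example:
#   kafka-topics.sh --bootstrap-server "192.168.23.141:9092,192.168.23.142:9092,192.168.23.143:9092" --list \
#     | missing_topics nginx tomcat
```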

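Nginx environment

Nginx runs on elk-node-01 (see the host plan).

Install Nginx

```shell
# Build dependencies for the modules enabled below (PCRE, zlib, OpenSSL)
yum -y install gcc make pcre-devel zlib-devel openssl-devel
useradd nginx
wget https://nginx.org/download/nginx-1.22.1.tar.gz
tar -zxvf nginx-1.22.1.tar.gz -C /usr/local/
cd /usr/local/nginx-1.22.1
./configure --prefix=/usr/local/nginx \
  --user=nginx --group=nginx \
  --with-http_realip_module \
  --with-http_v2_module \
  --with-http_stub_status_module \
  --with-http_ssl_module \
  --with-http_gzip_static_module \
  --with-stream \
  --with-stream_ssl_module \
  --with-http_sub_module
# Compile and install into /usr/local/nginx
make && make install
chown -R nginx:nginx /usr/local/nginx
```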

Configure Nginx

```shell
mkdir -p /data/html/
cat > /data/html/index.html <<EOF
test learning for EFK
EFK 的学习测试
EOF
chown -R nginx:nginx /data/html/

cat > /usr/local/nginx/conf/nginx.conf <<'EOF'
worker_processes 1;
error_log logs/error.log info;
pid logs/nginx.pid;

events {
    worker_connections 1024;
}

http {
    include mime.types;
    default_type application/octet-stream;
    log_format main '$remote_addr - $remote_user [$time_local] "$request" '
                    '$status $body_bytes_sent "$http_referer" '
                    '"$http_user_agent" "$http_x_forwarded_for"';
    access_log logs/access.log main;
    sendfile on;
    keepalive_timeout 65;

    server {
        listen 80;
        server_name localhost;
        charset utf-8;

        location / {
            root /data/html/;
            index index.html index.htm;
        }

        error_page 500 502 503 504 /50x.html;
        location = /50x.html {
            root html;
        }
    }
}
EOF
```
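Before shipping the access log, it helps to confirm that the `main` log_format produces what you expect. With that format and a normal `METHOD URI PROTOCOL` request line, the status code is the 9th whitespace-separated field. Below is a minimal sketch that counts requests per status. `status_counts` is hypothetical, and the field position is an assumption that breaks if `$remote_user` contains spaces:

```shell
#!/usr/bin/env bash
# Hypothetical helper: count requests per HTTP status in an access log written with the
# `main` log_format above. Fields: 1=addr 2=- 3=user 4-5=[time] 6-8="request" 9=status.
status_counts() {
  awk '{ count[$9]++ } END { for (s in count) print s, count[s] }' \
    "${1:-/usr/local/nginx/logs/access.log}" | sort
}
# Example: curl -s http://192.168.23.141/ >/dev/null; status_counts
```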

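Start Nginx

```shell
# As root: allow the nginx binary to bind port 80 without running as root
setcap cap_net_bind_service=+ep /usr/local/nginx/sbin/nginx
su - nginx
# Check the configuration syntax, then start
/usr/local/nginx/sbin/nginx -t
/usr/local/nginx/sbin/nginx
```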

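Log collection: raw logs

- Edit the Filebeat configuration file

```shell
cat > /usr/local/filebeat-7.17.22-linux-x86_64/filebeat-kafka.yml <<EOF
filebeat.inputs:
# Collect the Nginx access log
- type: log
  enabled: true
  paths:
    - /usr/local/nginx/logs/access.log*
  # Custom tags
  tags: ["Nginx", "logs", "access"]
# Collect the Nginx error log
- type: log
  enabled: true
  paths:
    - /usr/local/nginx/logs/error.log*
  # Custom tags
  tags: ["Nginx", "logs", "error"]
# Elasticsearch index template settings
setup.template.settings:
  # Number of primary shards per index
  index.number_of_shards: 3
  # Number of replica shards per index (must be smaller than the number of Elasticsearch nodes)
  index.number_of_replicas: 2
  # Index refresh interval, in seconds
  index.refresh_interval: 5s
# Disable index lifecycle management (otherwise the custom index name does not take effect)
setup.ilm.enabled: false
# Custom template name
setup.template.name: "app-logs"
# Custom template match pattern
setup.template.pattern: "app-logs*"
# Output
output.kafka:
  enabled: true
  hosts: ["192.168.23.141:9092","192.168.23.142:9092","192.168.23.143:9092"]
  topic: "nginx"
EOF
```

Note: Filebeat only loads the index template and ILM settings when it connects to Elasticsearch directly. With output.kafka, the setup.template.* and setup.ilm.* settings are not applied. The index name is decided by the Logstash elasticsearch output instead.

- Start Filebeat

```shell
filebeat -e -c /usr/local/filebeat-7.17.22-linux-x86_64/filebeat-kafka.yml
```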

Tomcat environment

Tomcat runs on elk-node-02 (see the host plan).

Install Tomcat

```shell
# Install the JDK. It was already installed on all nodes in the Kafka part;
# only repeat this if it is missing. Upload the JDK package first.
tar -zxvf jdk-8u401-linux-x64.tar.gz -C /usr/local/
cat >> /etc/profile <<'EOF'
export HISTTIMEFORMAT="%F %T "
export JAVA_HOME=/usr/local/jdk1.8.0_401/
export PATH=$JAVA_HOME/bin:$PATH
export CLASSPATH=.:$JAVA_HOME/lib/dt.jar:$JAVA_HOME/lib/tools.jar
EOF

# Install Tomcat
wget https://archive.apache.org/dist/tomcat/tomcat-7/v7.0.109/bin/apache-tomcat-7.0.109.tar.gz
tar -zxvf apache-tomcat-7.0.109.tar.gz -C /usr/local/
useradd tomcat
chown -R tomcat:tomcat /usr/local/apache-tomcat-7.0.109/
```

Start Tomcat

```shell
su - tomcat
/usr/local/apache-tomcat-7.0.109/bin/startup.sh
```

Log collection: raw logs

- Edit the Filebeat configuration file

```shell
cat > /usr/local/filebeat-7.17.22-linux-x86_64/filebeat-kafka.yml <<EOF
filebeat.inputs:
# Collect the Tomcat catalina logs
- type: log
  enabled: true
  paths:
    - /usr/local/apache-tomcat-7.0.109/logs/catalina*
  # Custom tags
  tags: ["Tomcat", "logs", "catalina"]
# Collect the Tomcat host-manager logs
- type: log
  enabled: true
  paths:
    - /usr/local/apache-tomcat-7.0.109/logs/host-manager*
  tags: ["Tomcat", "logs", "host-manager"]
# Collect the Tomcat localhost logs
- type: log
  enabled: true
  paths:
    - /usr/local/apache-tomcat-7.0.109/logs/localhost*
  tags: ["Tomcat", "logs", "localhost"]
# Collect the Tomcat manager logs
- type: log
  enabled: true
  paths:
    - /usr/local/apache-tomcat-7.0.109/logs/manager*
  tags: ["Tomcat", "logs", "manager"]
# Elasticsearch index template settings
setup.template.settings:
  # Number of primary shards per index
  index.number_of_shards: 3
  # Number of replica shards per index (must be smaller than the number of Elasticsearch nodes)
  index.number_of_replicas: 2
  # Index refresh interval, in seconds
  index.refresh_interval: 5s
# Disable index lifecycle management (otherwise the custom index name does not take effect)
setup.ilm.enabled: false
# Custom template name
setup.template.name: "app-logs"
# Custom template match pattern
setup.template.pattern: "app-logs*"
# Output
output.kafka:
  enabled: true
  hosts: ["192.168.23.141:9092","192.168.23.142:9092","192.168.23.143:9092"]
  topic: "tomcat"
EOF
```

- Start Filebeat

```shell
filebeat -e -c /usr/local/filebeat-7.17.22-linux-x86_64/filebeat-kafka.yml
```
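The nginx and tomcat topics now receive events, but the Logstash pipeline set up earlier only reads the test topic, so these logs stay in Kafka until something consumes them. Below is a minimal sketch of such a pipeline, assuming the same cluster addresses. It is not part of the original walkthrough. The file name `logstash-app-logs.conf` and the function name are hypothetical. `codec => "json"` is an added assumption: Filebeat's Kafka output writes each event as JSON, and with the default plain codec the whole JSON document would land in the message field.

```shell
#!/usr/bin/env bash
# Hypothetical helper: write a Logstash pipeline that consumes the nginx and tomcat topics
# and indexes them into the daily app-logs-* indices.
write_app_logs_pipeline() {
  local target="${1:-/usr/local/logstash-7.17.22/config/logstash-app-logs.conf}"
  cat > "$target" <<'EOF'
input {
  kafka {
    bootstrap_servers => "192.168.23.141:9092,192.168.23.142:9092,192.168.23.143:9092"
    topics => ["nginx", "tomcat"]
    # Filebeat's Kafka output sends JSON-encoded events
    codec => "json"
  }
}
output {
  elasticsearch {
    hosts => ["http://192.168.23.141:9200", "http://192.168.23.142:9200", "http://192.168.23.143:9200"]
    index => "app-logs-%{+YYYY.MM.dd}"
  }
}
EOF
}
# Example, on elk-node-03 as root:
#   write_app_logs_pipeline && chown elasticstack:elasticstack /usr/local/logstash-7.17.22/config/logstash-app-logs.conf
#   su - elasticstack -c 'logstash -f /usr/local/logstash-7.17.22/config/logstash-app-logs.conf --config.test_and_exit'
```

`--config.test_and_exit` only validates the pipeline. Start it the same way as the earlier Kafka pipeline, after stopping any other Logstash instance that uses the same data directory.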

Copyright belongs to the original author 王茗渠. If anything here infringes your rights, please contact us and it will be removed.