背景

最近在做

Spark 3.1

升级

Spark 3.5

的过程中,遇到了一批SQL在运行的过程中 Driver OOM的情况,排查到是AQE开启导致的问题,再次分析记录一下,顺便了解一下Spark中指标的事件处理情况

结论

SQLAppStatusListener

类在内存中存放着 一个整个SQL查询链的所有stage以及stage的指标信息,在AQE中 一个job会被拆分成很多job,甚至几百上千的job,这个时候 stageMetrics的数据就会成百上倍的被存储在内存中,从而导致

Driver OOM

。

解决方法:

- 关闭AQE

spark.sql.adaptive.enabled false - 合并对应的PR-SPARK-45439

分析

背景知识:对于一个完整链接的sql语句来说(比如说从 读取数据源,到 数据处理操作,再到插入hive表),这可以称其为一个最小的SQL执行单元,这最小的数据执行单元在Spark内部是可以跟踪的,也就是用

executionId

来进行跟踪的。

对于一个sql,举例来说 :

insert into TableA select * from TableB;

在生成 物理计划的过程中会调用 QueryExecution.assertOptimized 方法,该方法会触发eagerlyExecuteCommands调用,最终会到

SQLExecution.withNewExecutionId

方法:

def assertOptimized(): Unit = optimizedPlan

...

lazy val commandExecuted: LogicalPlan = mode match {

case CommandExecutionMode.NON_ROOT => analyzed.mapChildren(eagerlyExecuteCommands)

case CommandExecutionMode.ALL => eagerlyExecuteCommands(analyzed)

case CommandExecutionMode.SKIP => analyzed

}

...

lazy val optimizedPlan: LogicalPlan = {

// We need to materialize the commandExecuted here because optimizedPlan is also tracked under

// the optimizing phase

assertCommandExecuted()

executePhase(QueryPlanningTracker.OPTIMIZATION) {

// clone the plan to avoid sharing the plan instance between different stages like analyzing,

// optimizing and planning.

val plan =

sparkSession.sessionState.optimizer.executeAndTrack(withCachedData.clone(), tracker)

// We do not want optimized plans to be re-analyzed as literals that have been constant

// folded and such can cause issues during analysis. While `clone` should maintain the

// `analyzed` state of the LogicalPlan, we set the plan as analyzed here as well out of

// paranoia.

plan.setAnalyzed()

plan

}

def assertCommandExecuted(): Unit = commandExecuted

...

private def eagerlyExecuteCommands(p: LogicalPlan) = p transformDown {

case c: Command =>

// Since Command execution will eagerly take place here,

// and in most cases be the bulk of time and effort,

// with the rest of processing of the root plan being just outputting command results,

// for eagerly executed commands we mark this place as beginning of execution.

tracker.setReadyForExecution()

val qe = sparkSession.sessionState.executePlan(c, CommandExecutionMode.NON_ROOT)

val name = commandExecutionName(c)

val result = QueryExecution.withInternalError(s"Eagerly executed $name failed.") {

SQLExecution.withNewExecutionId(qe, Some(name)) {

qe.executedPlan.executeCollect()

}

}

而

SQLExecution.withNewExecutionId

主要的作用是设置当前计划的所属的

executionId

:

val executionId = SQLExecution.nextExecutionId

sc.setLocalProperty(EXECUTION_ID_KEY, executionId.toString)

该

EXECUTION_ID_KEY

的值会在JobStart的时候传递给Event,以便记录跟踪整个执行过程中的指标信息。

同时我们在方法中

eagerlyExecuteCommands

看到

qe.executedPlan.executeCollect()

这是具体的执行方法,针对于

insert into

操作来说,物理计划就是

InsertIntoHadoopFsRelationCommand

,这里的run方法最终会流转到

DAGScheduler.submitJob

方法:

eventProcessLoop.post(JobSubmitted(

jobId, rdd, func2, partitions.toArray, callSite, waiter,

JobArtifactSet.getActiveOrDefault(sc),

Utils.cloneProperties(properties)))

最终会被

DAGScheduler.handleJobSubmitted

处理,其中会发送

SparkListenerJobStart

事件:

listenerBus.post(

SparkListenerJobStart(job.jobId, jobSubmissionTime, stageInfos,

Utils.cloneProperties(properties)))

该事件会被

SQLAppStatusListener

捕获,从而转到

onJobStart

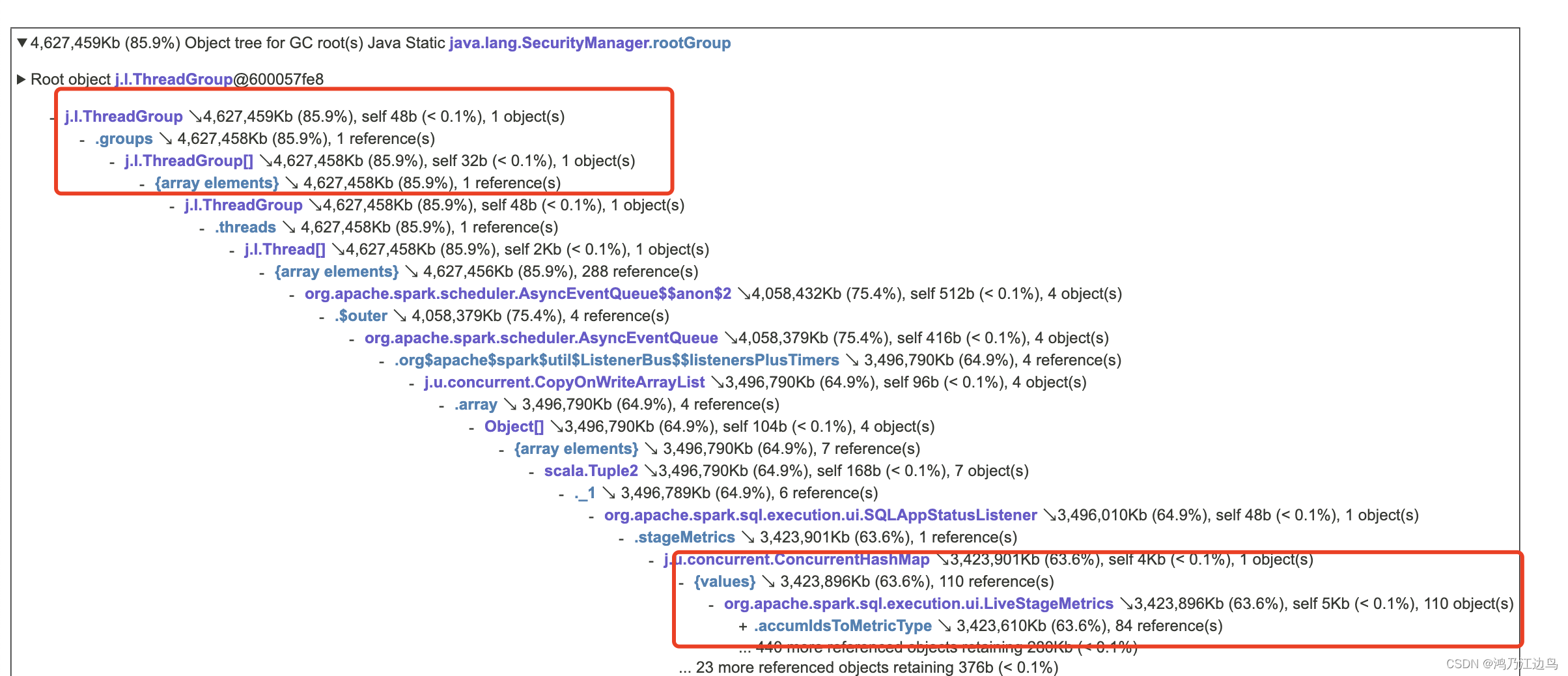

处理,这里有会涉及到指标信息的存储,这里我们截图出dump的内存占用情况:

可以看到 SQLAppStatusListener 的 LiveStageMetrics 占用很大,也就是 accumIdsToMetricType占用很大

那在AQE中是怎么回事呢?

我们知道再AQE中,任务会从source节点按照shuffle进行分割,从而形成单独的job,从而生成对应的shuffle指标,具体的分割以及执行代码在

AdaptiveSparkPlanExec.getFinalPhysicalPlan

中,如下:

var result = createQueryStages(currentPhysicalPlan)

val events = new LinkedBlockingQueue[StageMaterializationEvent]()

val errors = new mutable.ArrayBuffer[Throwable]()

var stagesToReplace = Seq.empty[QueryStageExec]

while (!result.allChildStagesMaterialized) {

currentPhysicalPlan = result.newPlan

if (result.newStages.nonEmpty) {

stagesToReplace = result.newStages ++ stagesToReplace

executionId.foreach(onUpdatePlan(_, result.newStages.map(_.plan)))

// SPARK-33933: we should submit tasks of broadcast stages first, to avoid waiting

// for tasks to be scheduled and leading to broadcast timeout.

// This partial fix only guarantees the start of materialization for BroadcastQueryStage

// is prior to others, but because the submission of collect job for broadcasting is

// running in another thread, the issue is not completely resolved.

val reorderedNewStages = result.newStages

.sortWith {

case (_: BroadcastQueryStageExec, _: BroadcastQueryStageExec) => false

case (_: BroadcastQueryStageExec, _) => true

case _ => false

}

// Start materialization of all new stages and fail fast if any stages failed eagerly

reorderedNewStages.foreach { stage =>

try {

stage.materialize().onComplete { res =>

if (res.isSuccess) {

events.offer(StageSuccess(stage, res.get))

} else {

events.offer(StageFailure(stage, res.failed.get))

}

// explicitly clean up the resources in this stage

stage.cleanupResources()

}(AdaptiveSparkPlanExec.executionContext)

这里就是得看

stage.materialize()

这个方法,这两个stage只有两类:

BroadcastQueryStageExec 和 ShuffleQueryStageExec

,

这两个物理计划稍微分析一下如下:

- BroadcastQueryStageExec 数据流如下:

broadcast.submitBroadcastJob || \/promise.future || \/relationFuture || \/child.executeCollectIterator()其中promise的设置在relationFuture方法中,而relationFuture会被doPrepare调用,而submitBroadcastJob会调用executeQuery,从而调用doPrepare,executeCollectIterator()最终也会发送JobSubmitted事件,分析和上面的一样 - ShuffleQueryStageExec

shuffle.submitShuffleJob || \/ sparkContext.submitMapStage(shuffleDependency) || \/ dagScheduler.submitMapStage

该

submitMapStage

会发送

MapStageSubmitted

事件:

eventProcessLoop.post(MapStageSubmitted(

jobId, dependency, callSite, waiter, JobArtifactSet.getActiveOrDefault(sc),

Utils.cloneProperties(properties)))

最终会被

DAGScheduler.handleMapStageSubmitted

处理,其中会发送

SparkListenerJobStart

事件:

listenerBus.post(

SparkListenerJobStart(job.jobId, jobSubmissionTime, stageInfos,

Utils.cloneProperties(properties)))

该事件会被

SQLAppStatusListener

捕获,从而转到

onJobStart

处理:

private val liveExecutions = new ConcurrentHashMap[Long, LiveExecutionData]()

private val stageMetrics = new ConcurrentHashMap[Int, LiveStageMetrics]()

...

override def onJobStart(event: SparkListenerJobStart): Unit = {

val executionIdString = event.properties.getProperty(SQLExecution.EXECUTION_ID_KEY)

if (executionIdString == null) {

// This is not a job created by SQL

return

}

val executionId = executionIdString.toLong

val jobId = event.jobId

val exec = Option(liveExecutions.get(executionId))

该方法会获取事件中的

executionId

,在AQE中,同一个执行单元的

executionId

是一样的,所以

stageMetrics

内存占用会越来越大。

而这里指标的更新是在

AdaptiveSparkPlanExec.onUpdatePlan

等方法中。

这样整个事件的数据流以及问题的产生原因就应该很清楚了。

其他

为啥AQE以后多个Job还是共享一个executionId呢?因为原则上来说,如果没有开启AQE之前,一个SQL执行单元的是属于同一个Job的,开启了AQE之后,因为AQE的原因,一个Job被拆成了了多个Job,但是从逻辑上来说,还是属于同一个SQL处理单元的所以还是得归属到一次执行中。

版权归原作者 鸿乃江边鸟 所有, 如有侵权,请联系我们删除。