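Flink series articles

1. Flink 1.12.7 or 1.13.5: detailed introduction, local installation, deployment, and verification

2. Flink 1.13.5: two deployment modes (Standalone, Standalone HA) and four job submission modes (the former two plus session and per-job), with detailed verification steps

3. Key Flink concepts (API layers, roles, execution flow, execution graph, and programming model), introductory DataSet and DataStream examples, and submitting jobs to run on YARN

4. Flink's unified stream and batch processing, a detailed introduction to the 18 transformation operators, and Kafka sources and sinks in Flink

5. Detailed examples of Flink sources, transformations, and sinks (Part 1)

5. Detailed examples of Flink sources, transformations, and sinks (Part 2): source and transformation examples

5. Detailed examples of Flink sources, transformations, and sinks (Part 3): sink examples

6. Flink's four cornerstones: Window in depth with detailed examples (Part 1)

6. Flink's four cornerstones: Window in depth with detailed examples (Part 2)

7. Flink's four cornerstones: Time and Watermark in depth with detailed examples (basic watermark usage, a watermark example with Kafka as the data source, and receiving data that exceeds the maximum allowed lateness)

8. Flink's four cornerstones: State concepts, use cases, persistence, and batch processing in depth, plus usage and detailed examples of keyed state, operator state, and broadcast state

9. Flink's four cornerstones: the Checkpoint fault-tolerance mechanism in depth with examples (checkpoint configuration, restart strategies, and manual recovery from checkpoints and savepoints)

10. Detailed examples of Flink sources, transformations, and sinks (Part 2): source and transformation examples [supplementary examples]

11. Flink configuration: flink-conf.yaml explained in detail (HA configuration, checkpoint, web, security, ZooKeeper, history server, workers, zoo.cfg)

12. Detailed ClickHouse examples for Flink sources and sinks

13. Links to the comprehensive series on Flink's Table API and SQL (introduction, examples, and more)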


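This article introduces Flink windows and describes the commonly used window types in detail. Concrete examples follow in the next article.

Some of the images in this article come from the Internet.

The articles before this one covered the basic operations of Flink. With that material you can use Flink's basic building blocks (source, transformation, and sink) fluently. Starting with this article, the series turns to Flink's four cornerstones: Window, State, Time/Watermark, and Checkpoint. This is the first of those articles and covers Window.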

I. Flink windows

1. Introduction to windows

Official documentation: https://nightlies.apache.org/flink/flink-docs-release-1.12/dev/stream/operators/windows.html

Windows are at the heart of processing infinite streams. Windows split the stream into “buckets” of finite size, over which we can apply computations. This document focuses on how windowing is performed in Flink and how the programmer can benefit to the maximum from its offered functionality.

The general structure of a windowed Flink program is presented below. The first snippet refers to keyed streams, while the second to non-keyed ones. As one can see, the only difference is the keyBy(…) call for the keyed streams and the window(…) which becomes windowAll(…) for non-keyed streams. This is also going to serve as a roadmap for the rest of the page.

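In stream processing, you usually define a window before operating on the stream data, that is, you decide over which window the computation runs. Flink provides a range of ready-to-use windows, such as sliding windows, tumbling windows, and session windows, as well as very flexible custom windows.

In a streaming application the data never ends, yet you sometimes need aggregations over it, for example how many users clicked on our web page during the past minute.

In that case you must define a window that collects the data of the most recent minute and then run the computation over the data inside that window.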

2. Window API

Keyed windows:

    stream
           .keyBy(...)               <-  keyed versus non-keyed windows
           .window(...)              <-  required: "assigner"
          [.trigger(...)]            <-  optional: "trigger" (else default trigger)
          [.evictor(...)]            <-  optional: "evictor" (else no evictor)
          [.allowedLateness(...)]    <-  optional: "lateness" (else zero)
          [.sideOutputLateData(...)] <-  optional: "output tag" (else no side output for late data)
           .reduce/aggregate/fold/apply()      <-  required: "function"
          [.getSideOutput(...)]      <-  optional: "output tag"

Non-keyed windows:

    stream
           .windowAll(...)           <-  required: "assigner"
          [.trigger(...)]            <-  optional: "trigger" (else default trigger)
          [.evictor(...)]            <-  optional: "evictor" (else no evictor)
          [.allowedLateness(...)]    <-  optional: "lateness" (else zero)
          [.sideOutputLateData(...)] <-  optional: "output tag" (else no side output for late data)
           .reduce/aggregate/fold/apply()      <-  required: "function"
          [.getSideOutput(...)]      <-  optional: "output tag"

In the above, the commands in square brackets ([…]) are optional.

This reveals that Flink allows you to customize your windowing logic in many different ways so that it best fits your needs.

A stream that has been keyed with keyBy should call the window method.

A stream that has not been keyed should call the windowAll method.

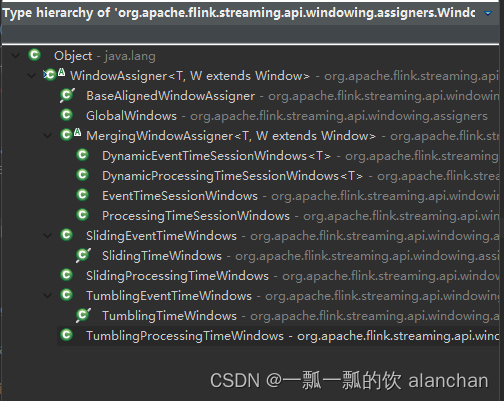

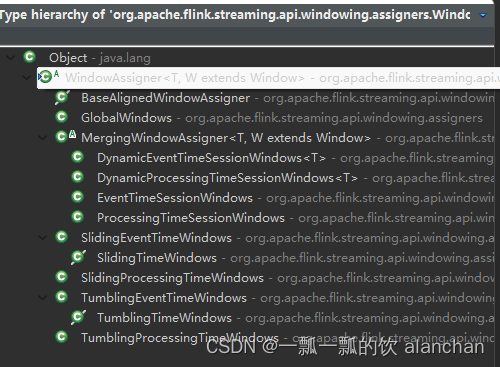

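1) WindowAssigner

The window/windowAll method takes a WindowAssigner as its argument. The WindowAssigner is responsible for routing every incoming element to the correct window(s). Flink ships WindowAssigners for many scenarios, including the tumbling, sliding, session, and global windows described later in this article.

If you need your own assignment strategy, you can implement a class that extends WindowAssigner.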

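2) Trigger

A trigger decides whether a window is ready to be evaluated. Every WindowAssigner comes with a default trigger. If the default trigger does not meet your needs, you can write a custom class that extends Trigger. The abstract class declares three callback methods:

- onElement()

- onEventTime()

- onProcessingTime()

Each of these three methods returns a TriggerResult, which can take one of the following values:

- CONTINUE: do nothing

- FIRE: trigger the computation of the window

- PURGE: clear the elements in the window (the window metadata is kept, so new data can still be added)

- FIRE_AND_PURGE: trigger the computation, then clear the elements in the window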
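To make the four results concrete, here is a minimal plain-Java sketch. It is not Flink code: the names `CountTriggerSketch`, `Result`, and `run` are hypothetical and only mimic the behavior described above, using a count-based trigger that returns FIRE_AND_PURGE after every N elements and CONTINUE otherwise.

```java
import java.util.ArrayList;
import java.util.List;

/** Plain-Java illustration only; these are NOT Flink's Trigger/TriggerResult classes. */
final class CountTriggerSketch {

    /** Hypothetical stand-in for the four trigger results described above. */
    enum Result {
        CONTINUE(false, false),
        FIRE(true, false),
        PURGE(false, true),
        FIRE_AND_PURGE(true, true);

        final boolean fire;
        final boolean purge;

        Result(boolean fire, boolean purge) {
            this.fire = fire;
            this.purge = purge;
        }
    }

    private final int maxCount;
    // Kept in a field for brevity; a real Flink trigger should keep such a
    // counter in state obtained from the TriggerContext instead.
    private int seen = 0;

    CountTriggerSketch(int maxCount) {
        this.maxCount = maxCount;
    }

    /** Called once per element; fires and purges after every maxCount elements. */
    Result onElement() {
        seen++;
        if (seen >= maxCount) {
            seen = 0;
            return Result.FIRE_AND_PURGE;
        }
        return Result.CONTINUE;
    }

    /** Feeds the elements into one window buffer and returns the sum emitted at each firing. */
    static List<Integer> run(int[] elements, int maxCount) {
        CountTriggerSketch trigger = new CountTriggerSketch(maxCount);
        List<Integer> buffer = new ArrayList<>();
        List<Integer> emitted = new ArrayList<>();
        for (int element : elements) {
            buffer.add(element);
            Result result = trigger.onElement();
            if (result.fire) {
                int sum = 0;
                for (int value : buffer) {
                    sum += value;
                }
                emitted.add(sum); // the "window function" is a simple sum here
            }
            if (result.purge) {
                buffer.clear(); // only the contents are dropped, the buffer stays usable
            }
        }
        return emitted;
    }

    public static void main(String[] args) {
        System.out.println(run(new int[] {1, 2, 3, 4, 5, 6, 7}, 3)); // prints [6, 15]
    }
}
```

With a count of 3 and the inputs 1 to 7, the sketch emits 6 and 15. The trailing 7 stays in the buffer because the trigger has not fired for it yet.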

The source code of the abstract class Trigger is shown below.

    package org.apache.flink.streaming.api.windowing.triggers;

    import org.apache.flink.annotation.PublicEvolving;
    import org.apache.flink.api.common.functions.RuntimeContext;
    import org.apache.flink.api.common.state.MergingState;
    import org.apache.flink.api.common.state.State;
    import org.apache.flink.api.common.state.StateDescriptor;
    import org.apache.flink.api.common.state.ValueState;
    import org.apache.flink.api.common.typeinfo.TypeInformation;
    import org.apache.flink.metrics.MetricGroup;
    import org.apache.flink.streaming.api.windowing.windows.Window;

    import java.io.Serializable;

    /**
     * A {@code Trigger} determines when a pane of a window should be evaluated to emit the
     * results for that part of the window.
     *
     * <p>A pane is the bucket of elements that have the same key (assigned by the
     * {@link org.apache.flink.api.java.functions.KeySelector}) and same {@link Window}. An element can
     * be in multiple panes if it was assigned to multiple windows by the
     * {@link org.apache.flink.streaming.api.windowing.assigners.WindowAssigner}. These panes all
     * have their own instance of the {@code Trigger}.
     *
     * <p>Triggers must not maintain state internally since they can be re-created or reused for
     * different keys. All necessary state should be persisted using the state abstraction
     * available on the {@link TriggerContext}.
     *
     * <p>When used with a {@link org.apache.flink.streaming.api.windowing.assigners.MergingWindowAssigner}
     * the {@code Trigger} must return {@code true} from {@link #canMerge()} and
     * {@link #onMerge(Window, OnMergeContext)} must be properly implemented.
     *
     * @param <T> The type of elements on which this {@code Trigger} works.
     * @param <W> The type of {@link Window Windows} on which this {@code Trigger} can operate.
     */
    @PublicEvolving
    public abstract class Trigger<T, W extends Window> implements Serializable {

        /**
         * Called for every element that gets added to a pane. The result of this will determine
         * whether the pane is evaluated to emit results.
         * Note: invoked every time an element is added to the window.
         *
         * @param element The element that arrived.
         * @param timestamp The timestamp of the element that arrived.
         * @param window The window to which the element is being added.
         * @param ctx A context object that can be used to register timer callbacks.
         */
        public abstract TriggerResult onElement(T element, long timestamp, W window, TriggerContext ctx) throws Exception;

        /**
         * Called when a processing-time timer that was set using the trigger context fires.
         * Note: invoked when a processing-time timer fires.
         *
         * @param time The timestamp at which the timer fired.
         * @param window The window for which the timer fired.
         * @param ctx A context object that can be used to register timer callbacks.
         */
        public abstract TriggerResult onProcessingTime(long time, W window, TriggerContext ctx) throws Exception;

        /**
         * Called when an event-time timer that was set using the trigger context fires.
         * Note: invoked when an event-time timer fires.
         *
         * @param time The timestamp at which the timer fired.
         * @param window The window for which the timer fired.
         * @param ctx A context object that can be used to register timer callbacks.
         */
        public abstract TriggerResult onEventTime(long time, W window, TriggerContext ctx) throws Exception;

        /**
         * Returns true if this trigger supports merging of trigger state and can therefore
         * be used with a
         * {@link org.apache.flink.streaming.api.windowing.assigners.MergingWindowAssigner}.
         *
         * <p>If this returns {@code true} you must properly implement
         * {@link #onMerge(Window, OnMergeContext)}
         */
        public boolean canMerge() {
            return false;
        }

        /**
         * Called when several windows have been merged into one window by the
         * {@link org.apache.flink.streaming.api.windowing.assigners.WindowAssigner}.
         * Note: merges the trigger state of the windows being merged.
         *
         * @param window The new window that results from the merge.
         * @param ctx A context object that can be used to register timer callbacks and access state.
         */
        public void onMerge(W window, OnMergeContext ctx) throws Exception {
            throw new UnsupportedOperationException("This trigger does not support merging.");
        }

        /**
         * Clears any state that the trigger might still hold for the given window. This is called
         * when a window is purged. Timers set using {@link TriggerContext#registerEventTimeTimer(long)}
         * and {@link TriggerContext#registerProcessingTimeTimer(long)} should be deleted here as
         * well as state acquired using {@link TriggerContext#getPartitionedState(StateDescriptor)}.
         * Note: invoked when the window is destroyed.
         */
        public abstract void clear(W window, TriggerContext ctx) throws Exception;

        // ------------------------------------------------------------------------

        /**
         * A context object that is given to {@link Trigger} methods to allow them to register timer
         * callbacks and deal with state.
         */
        public interface TriggerContext {

            /**
             * Returns the current processing time.
             */
            long getCurrentProcessingTime();

            /**
             * Returns the metric group for this {@link Trigger}. This is the same metric
             * group that would be returned from {@link RuntimeContext#getMetricGroup()} in a user
             * function.
             *
             * <p>You must not call methods that create metric objects
             * (such as {@link MetricGroup#counter(int)}) multiple times but instead call once
             * and store the metric object in a field.
             */
            MetricGroup getMetricGroup();

            /**
             * Returns the current watermark time.
             */
            long getCurrentWatermark();

            /**
             * Register a system time callback. When the current system time passes the specified
             * time {@link Trigger#onProcessingTime(long, Window, TriggerContext)} is called with the time specified here.
             *
             * @param time The time at which to invoke {@link Trigger#onProcessingTime(long, Window, TriggerContext)}
             */
            void registerProcessingTimeTimer(long time);

            /**
             * Register an event-time callback. When the current watermark passes the specified
             * time {@link Trigger#onEventTime(long, Window, TriggerContext)} is called with the time specified here.
             *
             * @param time The watermark at which to invoke {@link Trigger#onEventTime(long, Window, TriggerContext)}
             * @see org.apache.flink.streaming.api.watermark.Watermark
             */
            void registerEventTimeTimer(long time);

            /**
             * Delete the processing time trigger for the given time.
             */
            void deleteProcessingTimeTimer(long time);

            /**
             * Delete the event-time trigger for the given time.
             */
            void deleteEventTimeTimer(long time);

            /**
             * Retrieves a {@link State} object that can be used to interact with
             * fault-tolerant state that is scoped to the window and key of the current
             * trigger invocation.
             *
             * @param stateDescriptor The StateDescriptor that contains the name and type of the
             *                        state that is being accessed.
             * @param <S> The type of the state.
             * @return The partitioned state object.
             * @throws UnsupportedOperationException Thrown, if no partitioned state is available for the
             *                                       function (function is not part of a KeyedStream).
             */
            <S extends State> S getPartitionedState(StateDescriptor<S, ?> stateDescriptor);

            /**
             * Retrieves a {@link ValueState} object that can be used to interact with
             * fault-tolerant state that is scoped to the window and key of the current
             * trigger invocation.
             *
             * @param name The name of the key/value state.
             * @param stateType The class of the type that is stored in the state. Used to generate
             *                  serializers for managed memory and checkpointing.
             * @param defaultState The default state value, returned when the state is accessed and
             *                     no value has yet been set for the key. May be null.
             *
             * @param <S> The type of the state.
             * @return The partitioned state object.
             * @throws UnsupportedOperationException Thrown, if no partitioned state is available for the
             *                                       function (function is not part of a KeyedStream).
             * @deprecated Use {@link #getPartitionedState(StateDescriptor)}.
             */
            @Deprecated
            <S extends Serializable> ValueState<S> getKeyValueState(String name, Class<S> stateType, S defaultState);

            /**
             * Retrieves a {@link ValueState} object that can be used to interact with
             * fault-tolerant state that is scoped to the window and key of the current
             * trigger invocation.
             *
             * @param name The name of the key/value state.
             * @param stateType The type information for the type that is stored in the state.
             *                  Used to create serializers for managed memory and checkpoints.
             * @param defaultState The default state value, returned when the state is accessed and
             *                     no value has yet been set for the key. May be null.
             *
             * @param <S> The type of the state.
             * @return The partitioned state object.
             * @throws UnsupportedOperationException Thrown, if no partitioned state is available for the
             *                                       function (function is not part of a KeyedStream).
             * @deprecated Use {@link #getPartitionedState(StateDescriptor)}.
             */
            @Deprecated
            <S extends Serializable> ValueState<S> getKeyValueState(String name, TypeInformation<S> stateType, S defaultState);
        }

        /**
         * Extension of {@link TriggerContext} that is given to
         * {@link Trigger#onMerge(Window, OnMergeContext)}.
         */
        public interface OnMergeContext extends TriggerContext {
            <S extends MergingState<?, ?>> void mergePartitionedState(StateDescriptor<S, ?> stateDescriptor);
        }
    }

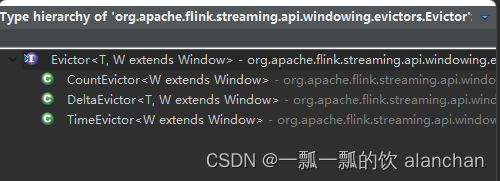

3) Evictor

An evictor removes elements from a window according to custom logic. It can run before the user's window function is applied, after it, or both.

The evictBefore() contains the eviction logic to be applied before the window function, while the evictAfter() contains the one to be applied after the window function. Elements evicted before the application of the window function will not be processed by it.

CountEvictor: keeps up to a user-specified number of elements from the window and discards the remaining ones from the beginning of the window buffer.

DeltaEvictor: takes a DeltaFunction and a threshold, computes the delta between the last element in the window buffer and each of the remaining ones, and removes the ones with a delta greater or equal to the threshold.

TimeEvictor: takes as argument an interval in milliseconds and for a given window, it finds the maximum timestamp max_ts among its elements and removes all the elements with timestamps smaller than max_ts - interval.

The Evictor interface provides two key methods, evictBefore and evictAfter, as shown below:

    @PublicEvolving
    public interface Evictor<T, W extends Window> extends Serializable {

        /**
         * Optionally evicts elements. Called before windowing function.
         *
         * @param elements The elements currently in the pane.
         * @param size The current number of elements in the pane.
         * @param window The {@link Window}
         * @param evictorContext The context for the Evictor
         */
        void evictBefore(Iterable<TimestampedValue<T>> elements, int size, W window, EvictorContext evictorContext);

        /**
         * Optionally evicts elements. Called after windowing function.
         *
         * @param elements The elements currently in the pane.
         * @param size The current number of elements in the pane.
         * @param window The {@link Window}
         * @param evictorContext The context for the Evictor
         */
        void evictAfter(Iterable<TimestampedValue<T>> elements, int size, W window, EvictorContext evictorContext);

        /**
         * A context object that is given to {@link Evictor} methods.
         */
        interface EvictorContext {

            /**
             * Returns the current processing time.
             */
            long getCurrentProcessingTime();

            /**
             * Returns the metric group for this {@link Evictor}. This is the same metric
             * group that would be returned from {@link RuntimeContext#getMetricGroup()} in a user
             * function.
             *
             * <p>You must not call methods that create metric objects
             * (such as {@link MetricGroup#counter(int)}) multiple times but instead call once
             * and store the metric object in a field.
             */
            MetricGroup getMetricGroup();

            /**
             * Returns the current watermark time.
             */
            long getCurrentWatermark();
        }
    }

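In summary, Flink provides the following three general-purpose evictors:

- CountEvictor: keeps a specified number of elements

- TimeEvictor: takes an interval as its threshold and removes every element whose timestamp is smaller than max_ts - interval, where max_ts is the largest timestamp in the window

- DeltaEvictor: uses a user-supplied DeltaFunction and a preset threshold to decide whether an element is removed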
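The count-based and time-based eviction rules above can be sketched in plain Java. This is an illustration only and does not use the Flink `Evictor` interface: `EvictorSketch`, `evictByCount`, and `evictByTime` are hypothetical names, and the elements are reduced to plain values or timestamps in milliseconds.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;

/** Plain-Java illustration of two eviction rules; not Flink's Evictor implementations. */
final class EvictorSketch {

    /** Count-style eviction: keep at most maxCount elements, dropping from the start of the buffer. */
    static <T> List<T> evictByCount(List<T> buffer, int maxCount) {
        int from = Math.max(0, buffer.size() - maxCount);
        return new ArrayList<>(buffer.subList(from, buffer.size()));
    }

    /** Time-style eviction: drop every timestamp smaller than (max timestamp - interval). */
    static List<Long> evictByTime(List<Long> timestamps, long intervalMillis) {
        long maxTs = Long.MIN_VALUE;
        for (long ts : timestamps) {
            maxTs = Math.max(maxTs, ts);
        }
        List<Long> kept = new ArrayList<>();
        for (long ts : timestamps) {
            if (ts >= maxTs - intervalMillis) {
                kept.add(ts);
            }
        }
        return kept;
    }

    public static void main(String[] args) {
        // Keep the last 3 of 5 elements.
        System.out.println(evictByCount(Arrays.asList("a", "b", "c", "d", "e"), 3)); // prints [c, d, e]

        // max_ts is 9000, the interval is 3000, so everything below 6000 is evicted.
        System.out.println(evictByTime(Arrays.asList(1000L, 4000L, 7000L, 9000L), 3000L)); // prints [7000, 9000]
    }
}
```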

3. Window lifecycle

In a nutshell, a window is created as soon as the first element that should belong to this window arrives, and the window is completely removed when the time (event or processing time) passes its end timestamp plus the user-specified allowed lateness (see Allowed Lateness). Flink guarantees removal only for time-based windows and not for other types, e.g. global windows (see Window Assigners). For example, with an event-time-based windowing strategy that creates non-overlapping (or tumbling) windows every 5 minutes and has an allowed lateness of 1 min, Flink will create a new window for the interval between 12:00 and 12:05 when the first element with a timestamp that falls into this interval arrives, and it will remove it when the watermark passes the 12:06 timestamp.

In addition, each window will have a Trigger (see Triggers) and a function (ProcessWindowFunction, ReduceFunction, or AggregateFunction) (see Window Functions) attached to it. The function will contain the computation to be applied to the contents of the window, while the Trigger specifies the conditions under which the window is considered ready for the function to be applied. A triggering policy might be something like “when the number of elements in the window is more than 4”, or “when the watermark passes the end of the window”. A trigger can also decide to purge a window’s contents any time between its creation and removal. Purging in this case only refers to the elements in the window, and not the window metadata. This means that new data can still be added to that window.

Apart from the above, you can specify an Evictor (see Evictors) which will be able to remove elements from the window after the trigger fires and before and/or after the function is applied.

In the following we go into more detail for each of the components above. We start with the required parts in the above snippet (see Keyed vs Non-Keyed Windows, Window Assigner, and Window Function) before moving to the optional ones.

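In short, a window is created when the first element that belongs to it arrives, and it is removed once the time (event time or processing time) passes the window's end timestamp plus the allowed lateness. Every window has a Trigger and a function: the function holds the computation, and the Trigger decides when the window is ready for that computation. An Evictor can additionally remove elements from the window after the trigger fires, before and/or after the function is applied.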
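The 12:00 to 12:05 example can be checked with a few lines of plain Java. This is a simplified reading of the description above, not Flink's internal bookkeeping: `WindowLifecycleSketch`, `cleanupTime`, and `isRemoved` are hypothetical names, and times are expressed as milliseconds since midnight.

```java
/** Plain-Java illustration of the window lifecycle; not Flink code. */
final class WindowLifecycleSketch {

    static final long MINUTE = 60_000L;

    /** Point in time after which the window is removed: window end plus allowed lateness. */
    static long cleanupTime(long windowEnd, long allowedLateness) {
        return windowEnd + allowedLateness;
    }

    /** True once the watermark has reached the cleanup time of the window. */
    static boolean isRemoved(long watermark, long windowEnd, long allowedLateness) {
        return watermark >= cleanupTime(windowEnd, allowedLateness);
    }

    public static void main(String[] args) {
        long windowEnd = (12 * 60 + 5) * MINUTE; // 12:05, as milliseconds since midnight
        long lateness = MINUTE;                  // allowed lateness of 1 minute

        // Watermark at 12:05:30: the window still exists and accepts late elements.
        System.out.println(isRemoved(windowEnd + 30_000L, windowEnd, lateness)); // prints false

        // Watermark at 12:06:00: the window is removed.
        System.out.println(isRemoved(windowEnd + MINUTE, windowEnd, lateness)); // prints true
    }
}
```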

4. Types of windows

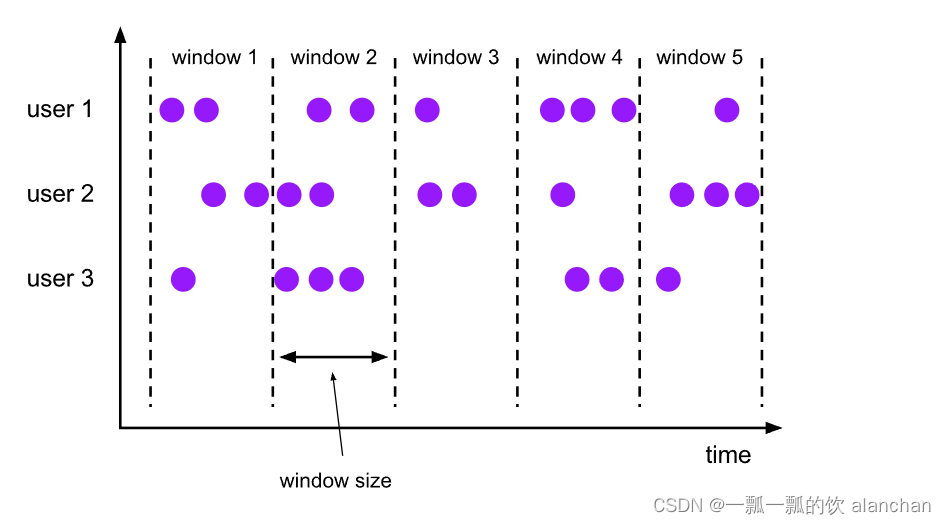

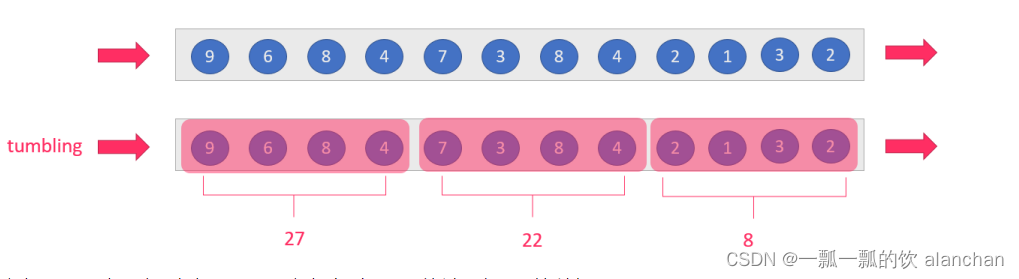

1) Tumbling Windows

A tumbling windows assigner assigns each element to a window of a specified window size. Tumbling windows have a fixed size and do not overlap. For example, if you specify a tumbling window with a size of 5 minutes, the current window is evaluated and a new window is started every 5 minutes, as illustrated by the figure below.

Example code

    DataStream<T> input = ...;

    // tumbling event-time windows
    input
        .keyBy(<key selector>)
        .window(TumblingEventTimeWindows.of(Time.seconds(5)))
        .<windowed transformation>(<window function>);

    // tumbling processing-time windows
    input
        .keyBy(<key selector>)
        .window(TumblingProcessingTimeWindows.of(Time.seconds(5)))
        .<windowed transformation>(<window function>);

    // daily tumbling event-time windows offset by -8 hours.
    input
        .keyBy(<key selector>)
        .window(TumblingEventTimeWindows.of(Time.days(1), Time.hours(-8)))
        .<windowed transformation>(<window function>);

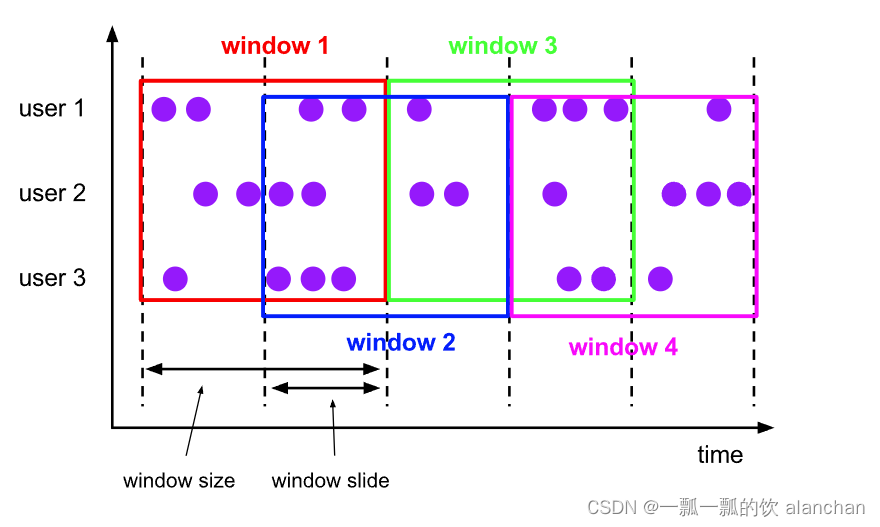

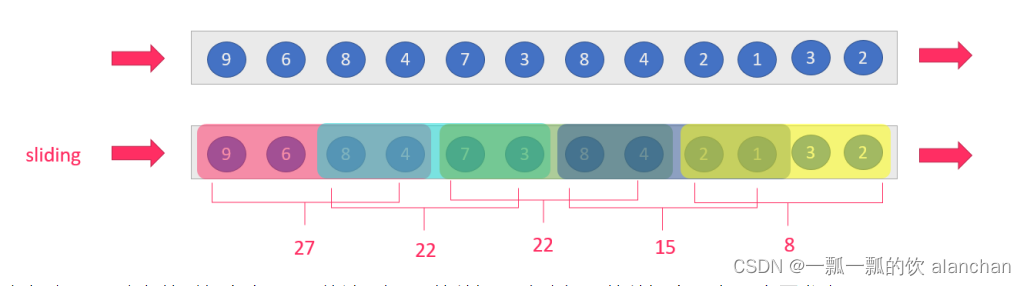
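How an element's timestamp maps to exactly one tumbling window, and what the -8 hour offset does, can be sketched in plain Java. This is not Flink's implementation: `TumblingWindowSketch` and `windowStart` are hypothetical names, and the formula assumes that windows are aligned to the epoch and then shifted by the offset.

```java
/** Plain-Java illustration of tumbling window assignment; not Flink code. */
final class TumblingWindowSketch {

    /** Start (inclusive) of the tumbling window that contains the timestamp; all values in milliseconds. */
    static long windowStart(long timestamp, long size, long offset) {
        return offset + Math.floorDiv(timestamp - offset, size) * size;
    }

    public static void main(String[] args) {
        // 5-second windows without offset: 12_345 ms falls into [10_000, 15_000).
        long start = windowStart(12_345L, 5_000L, 0L);
        System.out.println(start + " .. " + (start + 5_000L)); // prints 10000 .. 15000

        // Daily windows offset by -8 hours: windows start at 16:00 UTC, i.e. midnight in UTC+8.
        long day = 24 * 60 * 60 * 1000L;
        long offset = -8 * 60 * 60 * 1000L;
        long ts = 1_609_434_000_000L; // 2020-12-31T17:00:00Z
        System.out.println(windowStart(ts, day, offset)); // prints 1609430400000 (2020-12-31T16:00:00Z)
    }
}
```

Because every timestamp yields exactly one window start, tumbling windows never overlap and never leave gaps.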

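2) Sliding Windows

The sliding windows assigner assigns elements to windows of fixed length. As with the tumbling windows assigner, the size of the windows is configured by the window size parameter. An additional window slide parameter controls how frequently a sliding window is started. Hence, sliding windows can overlap if the slide is smaller than the window size, in which case elements are assigned to multiple windows. For example, you could have windows of size 10 minutes that slide by 5 minutes. With this you get, every 5 minutes, a window that contains the events that arrived during the last 10 minutes, as illustrated by the figure below.

Example code

    DataStream<T> input = ...;

    // sliding event-time windows
    input
        .keyBy(<key selector>)
        .window(SlidingEventTimeWindows.of(Time.seconds(10), Time.seconds(5)))
        .<windowed transformation>(<window function>);

    // sliding processing-time windows
    input
        .keyBy(<key selector>)
        .window(SlidingProcessingTimeWindows.of(Time.seconds(10), Time.seconds(5)))
        .<windowed transformation>(<window function>);

    // sliding processing-time windows offset by -8 hours
    input
        .keyBy(<key selector>)
        .window(SlidingProcessingTimeWindows.of(Time.hours(12), Time.hours(1), Time.hours(-8)))
        .<windowed transformation>(<window function>);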

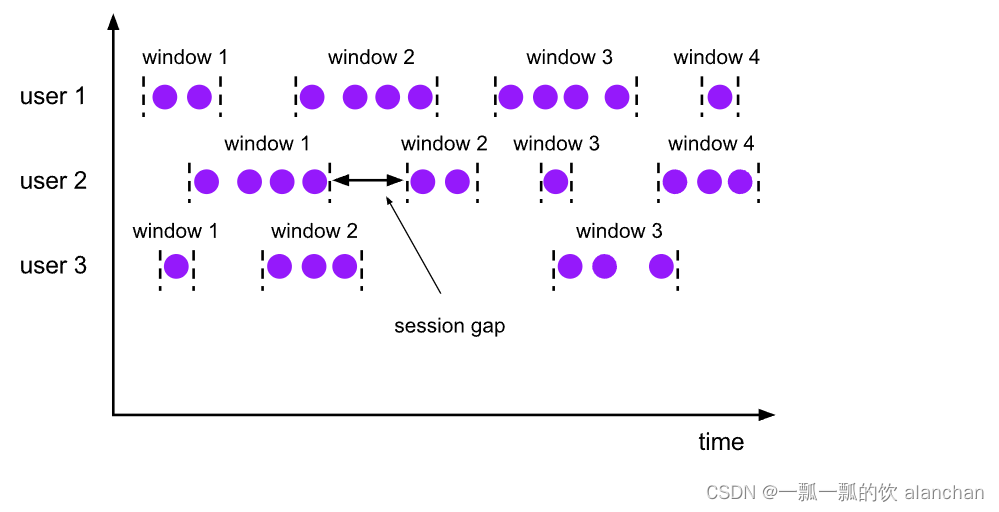

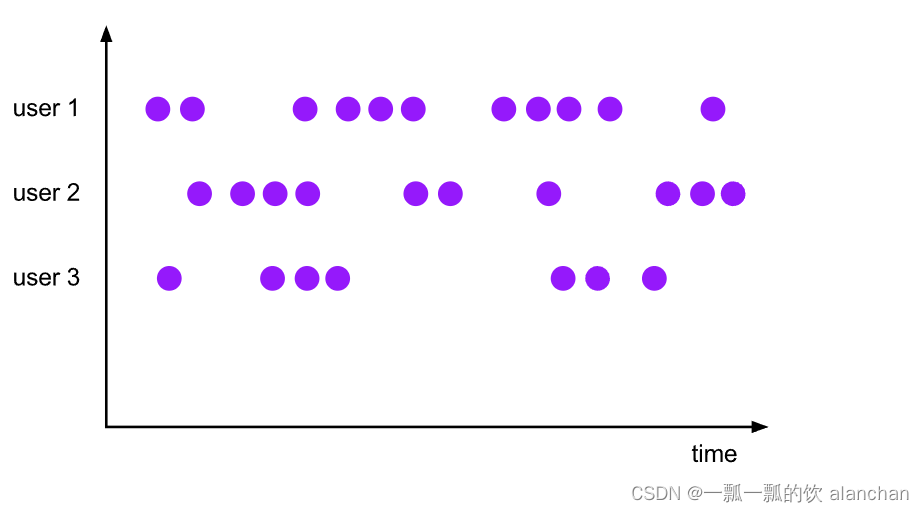
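The statement that one element can belong to several sliding windows can be illustrated with a plain-Java sketch. It is not Flink's assigner: `SlidingWindowSketch` and `windowStarts` are hypothetical names, and the sketch assumes that windows start at multiples of the slide (offset 0).

```java
import java.util.ArrayList;
import java.util.List;

/** Plain-Java illustration of sliding window assignment; not Flink code. */
final class SlidingWindowSketch {

    /** Start timestamps of all windows [start, start + size) containing the timestamp, latest window first. */
    static List<Long> windowStarts(long timestamp, long size, long slide) {
        List<Long> starts = new ArrayList<>();
        // Latest window start that is not after the timestamp.
        long lastStart = Math.floorDiv(timestamp, slide) * slide;
        // Walk backwards while the window still covers the timestamp.
        for (long start = lastStart; start > timestamp - size; start -= slide) {
            starts.add(start);
        }
        return starts;
    }

    public static void main(String[] args) {
        // size = 10 s, slide = 5 s: the element at 12 s belongs to [10 s, 20 s) and [5 s, 15 s).
        System.out.println(windowStarts(12_000L, 10_000L, 5_000L)); // prints [10000, 5000]
    }
}
```

With size 10 s and slide 5 s every element lands in size / slide = 2 windows, which is why sliding windows cost more state than tumbling windows of the same size.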

3) Session Windows

The session windows assigner groups elements by sessions of activity. Session windows do not overlap and do not have a fixed start and end time, in contrast to tumbling windows and sliding windows. Instead a session window closes when it does not receive elements for a certain period of time, i.e., when a gap of inactivity occurred. A session window assigner can be configured with either a static session gap or with a session gap extractor function which defines how long the period of inactivity is. When this period expires, the current session closes and subsequent elements are assigned to a new session window.

Example code

    DataStream<T> input = ...;

    // event-time session windows with static gap
    input
        .keyBy(<key selector>)
        .window(EventTimeSessionWindows.withGap(Time.minutes(10)))
        .<windowed transformation>(<window function>);

    // event-time session windows with dynamic gap
    input
        .keyBy(<key selector>)
        .window(EventTimeSessionWindows.withDynamicGap((element) -> {
            // determine and return session gap
        }))
        .<windowed transformation>(<window function>);

    // processing-time session windows with static gap
    input
        .keyBy(<key selector>)
        .window(ProcessingTimeSessionWindows.withGap(Time.minutes(10)))
        .<windowed transformation>(<window function>);

    // processing-time session windows with dynamic gap
    input
        .keyBy(<key selector>)
        .window(ProcessingTimeSessionWindows.withDynamicGap((element) -> {
            // determine and return session gap
        }))
        .<windowed transformation>(<window function>);

Since session windows do not have a fixed start and end, they are evaluated differently from tumbling and sliding windows. Internally, a session window operator creates a new window for each arriving record and merges windows together if they are closer to each other than the defined gap. In order to be mergeable, a session window operator requires a merging Trigger and a merging window function, such as ReduceFunction, AggregateFunction, or ProcessWindowFunction.
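The "one window per record, then merge" idea can be sketched in plain Java. This is an illustration, not Flink's merging logic: `SessionWindowSketch` and `sessions` are hypothetical names, a static gap is assumed, and the sketch assumes that windows whose boundaries touch are merged as well.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;

/** Plain-Java illustration of session window merging; not Flink code. */
final class SessionWindowSketch {

    /** Returns merged sessions as {start, end} pairs; each record first gets its own window [ts, ts + gap). */
    static List<long[]> sessions(long[] timestamps, long gap) {
        long[] sorted = timestamps.clone();
        Arrays.sort(sorted);
        List<long[]> merged = new ArrayList<>();
        for (long ts : sorted) {
            long start = ts;
            long end = ts + gap;
            if (!merged.isEmpty()) {
                long[] last = merged.get(merged.size() - 1);
                if (start <= last[1]) {
                    // The new window overlaps (or touches) the previous session: extend it.
                    last[1] = Math.max(last[1], end);
                    continue;
                }
            }
            merged.add(new long[] {start, end});
        }
        return merged;
    }

    public static void main(String[] args) {
        // Gap of 10 s: the pause between 5 s and 20 s is longer than the gap, so two sessions result.
        for (long[] session : sessions(new long[] {0L, 3_000L, 5_000L, 20_000L, 22_000L}, 10_000L)) {
            System.out.println("[" + session[0] + ", " + session[1] + ")");
        }
        // prints [0, 15000) and then [20000, 32000)
    }
}
```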

4) Global Windows

A global windows assigner assigns all elements with the same key to the same single global window. This windowing scheme is only useful if you also specify a custom trigger. Otherwise, no computation will be performed, as the global window does not have a natural end at which we could process the aggregated elements.

Example code

    DataStream<T> input = ...;

    input
        .keyBy(<key selector>)
        .window(GlobalWindows.create())
        .<windowed transformation>(<window function>);

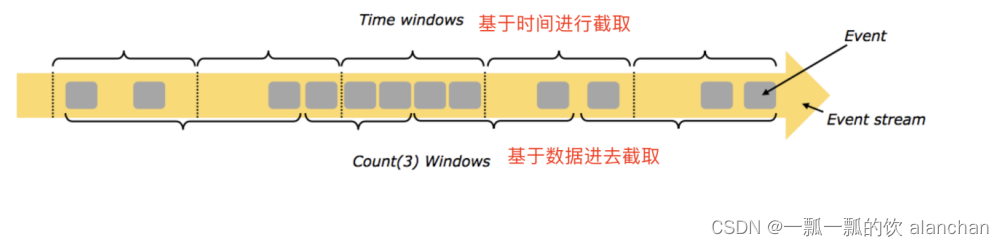

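5) Classification by time and count

- time-window: windows are defined by time, e.g. every xx hours, compute over the data of the last xx hours

- count-window: windows are defined by element count, e.g. every xx records/rows, compute over the last xx records/rows

6) Classification by slide interval and window size

- tumbling-window: size = slide, e.g. every 10 s, compute over the data of the last 10 s

- sliding-window: size > slide, e.g. every 5 s, compute over the data of the last 10 s

When size < slide, e.g. computing over the last 10 s of data every 15 s, some elements fall into no window and are lost. Whether that is acceptable depends on the use case.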
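The data loss for size < slide can be quantified with a small plain-Java sketch. The names `SlideLargerThanSizeSketch`, `isCovered`, and `countDropped` are hypothetical, and the sketch assumes that windows start at multiples of the slide (offset 0).

```java
/** Plain-Java illustration of the gaps that appear when size < slide; not Flink code. */
final class SlideLargerThanSizeSketch {

    /** True if the timestamp falls into some window [k * slide, k * slide + size). */
    static boolean isCovered(long timestamp, long size, long slide) {
        return Math.floorMod(timestamp, slide) < size;
    }

    /** Counts the timestamps from, from + step, ... (below to) that fall into no window. */
    static int countDropped(long from, long to, long step, long size, long slide) {
        int dropped = 0;
        for (long ts = from; ts < to; ts += step) {
            if (!isCovered(ts, size, slide)) {
                dropped++;
            }
        }
        return dropped;
    }

    public static void main(String[] args) {
        // One event per second for 30 seconds, 10-second windows started every 15 seconds.
        System.out.println(countDropped(0L, 30_000L, 1_000L, 10_000L, 15_000L)); // prints 10
    }
}
```

In this setup the events at seconds 10 to 14 and 25 to 29 belong to no window, so one third of the input never reaches a window function.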

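Choosing the assigner that fits the actual business requirement matters. In general, the tumbling and sliding time windows (TumblingEventTimeWindows/TumblingProcessingTimeWindows and SlidingEventTimeWindows/SlidingProcessingTimeWindows) deserve the most attention. EventTimeSessionWindows and ProcessingTimeSessionWindows are Flink's session windows: they require a session gap (timeout), and the window computation is triggered once that gap has elapsed.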

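5. Window functions

After defining the window assigner, you need to specify the computation to perform on each window. This is the responsibility of the window function, which processes the elements of each (possibly keyed) window once the system determines that the window is ready for processing.

The window function can be a ReduceFunction, an AggregateFunction, or a ProcessWindowFunction. The first two can be executed more efficiently because Flink can incrementally aggregate the elements of each window as they arrive. A ProcessWindowFunction receives an Iterable over all the elements contained in a window, plus additional meta information about the window to which the elements belong.

A windowed transformation with a ProcessWindowFunction cannot be executed as efficiently as the other cases, because Flink has to buffer all elements of a window internally before invoking the function. This can be mitigated by combining a ProcessWindowFunction with a ReduceFunction or AggregateFunction, which gives you both incremental aggregation of the window elements and the additional window metadata that the ProcessWindowFunction receives.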

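1) ReduceFunction

A ReduceFunction specifies how two elements from the input are combined to produce an output element of the same type. Flink uses a ReduceFunction to incrementally aggregate the elements of a window.

Code example: sum the second field of the tuples in a window

    DataStream<Tuple2<String, Long>> input = ...;

    input
        .keyBy(<key selector>)
        .window(<window assigner>)
        .reduce(new ReduceFunction<Tuple2<String, Long>>() {
            public Tuple2<String, Long> reduce(Tuple2<String, Long> v1, Tuple2<String, Long> v2) {
                // keep the key, add up the second field
                return new Tuple2<>(v1.f0, v1.f1 + v2.f1);
            }
        });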
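What "incremental aggregation" means for a reduce can be shown without Flink. The sketch below is a plain-Java stand-in in which `IncrementalReduceSketch` and `reducePerKey` are hypothetical names: it keeps a single running value per key and folds each arriving element into it, instead of buffering all elements.

```java
import java.util.LinkedHashMap;
import java.util.Map;

/** Plain-Java illustration of incremental reduction per key; not Flink code. */
final class IncrementalReduceSketch {

    /** Folds each (key, value) pair into one running sum per key as it "arrives". */
    static Map<String, Long> reducePerKey(String[] keys, long[] values) {
        Map<String, Long> state = new LinkedHashMap<>();
        for (int i = 0; i < keys.length; i++) {
            // Same shape as a reduce: (running value, new value) -> new running value.
            state.merge(keys[i], values[i], Long::sum);
        }
        return state;
    }

    public static void main(String[] args) {
        String[] keys = {"a", "b", "a"};
        long[] values = {1L, 2L, 3L};
        System.out.println(reducePerKey(keys, values)); // prints {a=4, b=2}
    }
}
```

The state per key is one value regardless of how many elements arrive, which is the reason a ReduceFunction is cheaper than buffering the whole window.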

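2) AggregateFunction

An AggregateFunction is a generalized version of a ReduceFunction with three types: an input type (IN), an accumulator type (ACC), and an output type (OUT). The input type is the type of the elements in the input stream, and the AggregateFunction has a method for adding one input element to an accumulator. The interface also has methods for creating an initial accumulator, for merging two accumulators into one, and for extracting an output (of type OUT) from an accumulator. As with ReduceFunction, Flink incrementally aggregates the input elements of a window as they arrive.

Code example: compute the average of the second field of the elements in a window

    private static class AverageAggregate
            implements AggregateFunction<Tuple2<String, Long>, Tuple2<Long, Long>, Double> {

        @Override
        public Tuple2<Long, Long> createAccumulator() {
            // accumulator = (running sum, element count)
            return new Tuple2<>(0L, 0L);
        }

        @Override
        public Tuple2<Long, Long> add(Tuple2<String, Long> value, Tuple2<Long, Long> accumulator) {
            return new Tuple2<>(accumulator.f0 + value.f1, accumulator.f1 + 1L);
        }

        @Override
        public Double getResult(Tuple2<Long, Long> accumulator) {
            return ((double) accumulator.f0) / accumulator.f1;
        }

        @Override
        public Tuple2<Long, Long> merge(Tuple2<Long, Long> a, Tuple2<Long, Long> b) {
            return new Tuple2<>(a.f0 + b.f0, a.f1 + b.f1);
        }
    }

    DataStream<Tuple2<String, Long>> input = ...;

    input
        .keyBy(<key selector>)
        .window(<window assigner>)
        .aggregate(new AverageAggregate());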
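The four accumulator operations can be exercised without Flink to see why `merge` must produce the same result as adding all elements to one accumulator. In the plain-Java sketch below, `AverageAccumulatorSketch` and its method names mirror the example above but are hypothetical stand-ins, and the accumulator is simplified to a `long[] {sum, count}`.

```java
/** Plain-Java illustration of the accumulator pattern; not Flink's AggregateFunction. */
final class AverageAccumulatorSketch {

    /** Initial accumulator: {running sum, element count}. */
    static long[] createAccumulator() {
        return new long[] {0L, 0L};
    }

    /** Adds one input value to the accumulator. */
    static long[] add(long value, long[] acc) {
        return new long[] {acc[0] + value, acc[1] + 1L};
    }

    /** Merges two partial accumulators into one. */
    static long[] merge(long[] a, long[] b) {
        return new long[] {a[0] + b[0], a[1] + b[1]};
    }

    /** Extracts the average from the accumulator. */
    static double getResult(long[] acc) {
        return ((double) acc[0]) / acc[1];
    }

    /** Builds an accumulator from a sequence of values. */
    static long[] accumulate(long... values) {
        long[] acc = createAccumulator();
        for (long value : values) {
            acc = add(value, acc);
        }
        return acc;
    }

    public static void main(String[] args) {
        // All elements in one accumulator.
        System.out.println(getResult(accumulate(1L, 2L, 3L, 4L))); // prints 2.5

        // Two partial accumulators merged, as happens when windows are merged.
        System.out.println(getResult(merge(accumulate(1L, 2L), accumulate(3L, 4L)))); // prints 2.5
    }
}
```

Keeping sum and count separately, rather than a running average, is what makes the merge exact.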

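3) ProcessWindowFunction

A ProcessWindowFunction gets an Iterable containing all the elements of the window, and a Context object with access to time and state information, which gives it more flexibility than the other window functions. This comes at the cost of performance and resource consumption, because elements cannot be incrementally aggregated and instead need to be buffered internally until the window is considered ready for processing.

Code example: count the elements in a window

    DataStream<Tuple2<String, Long>> input = ...;

    input
        .keyBy(t -> t.f0)
        .window(TumblingEventTimeWindows.of(Time.minutes(5)))
        .process(new MyProcessWindowFunction());

    /* ... */

    public class MyProcessWindowFunction
            extends ProcessWindowFunction<Tuple2<String, Long>, String, String, TimeWindow> {

        @Override
        public void process(String key, Context context, Iterable<Tuple2<String, Long>> input, Collector<String> out) {
            // iterate over every buffered element of the window
            long count = 0;
            for (Tuple2<String, Long> in : input) {
                count++;
            }
            out.collect("Window: " + context.window() + " count: " + count);
        }
    }

Using a ProcessWindowFunction for simple aggregates such as a count is very inefficient. The usual approach is to combine a ReduceFunction or AggregateFunction with a ProcessWindowFunction, to get both incremental aggregation and the additional information available to the ProcessWindowFunction.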

4) ProcessWindowFunction with Incremental Aggregation

A ProcessWindowFunction can be combined with either a ReduceFunction or an AggregateFunction to incrementally aggregate elements as they arrive in the window. When the window is closed, the ProcessWindowFunction is provided with the aggregated result. This allows it to compute windows incrementally while still having access to the additional window meta information of the ProcessWindowFunction.

1. Incremental Window Aggregation with ReduceFunction

The following example shows how an incremental ReduceFunction can be combined with a ProcessWindowFunction to return the smallest event in a window along with the start time of the window.

    DataStream<SensorReading> input = ...;

    input
        .keyBy(<key selector>)
        .window(<window assigner>)
        .reduce(new MyReduceFunction(), new MyProcessWindowFunction());

    // Function definitions

    private static class MyReduceFunction implements ReduceFunction<SensorReading> {

        public SensorReading reduce(SensorReading r1, SensorReading r2) {
            // keep the reading with the smaller value
            return r1.value() > r2.value() ? r2 : r1;
        }
    }

    private static class MyProcessWindowFunction
            extends ProcessWindowFunction<SensorReading, Tuple2<Long, SensorReading>, String, TimeWindow> {

        public void process(String key,
                            Context context,
                            Iterable<SensorReading> minReadings,
                            Collector<Tuple2<Long, SensorReading>> out) {
            // the Iterable holds exactly one element: the pre-aggregated minimum
            SensorReading min = minReadings.iterator().next();
            out.collect(new Tuple2<Long, SensorReading>(context.window().getStart(), min));
        }
    }

2. Incremental Window Aggregation with AggregateFunction

The following example shows how an incremental AggregateFunction can be combined with a ProcessWindowFunction to compute the average and emit the key together with the average.

    DataStream<Tuple2<String, Long>> input = ...;

    input
        .keyBy(<key selector>)
        .window(<window assigner>)
        .aggregate(new AverageAggregate(), new MyProcessWindowFunction());

    // Function definitions

    /**
     * The accumulator is used to keep a running sum and a count. The {@code getResult} method
     * computes the average.
     */
    private static class AverageAggregate
            implements AggregateFunction<Tuple2<String, Long>, Tuple2<Long, Long>, Double> {

        @Override
        public Tuple2<Long, Long> createAccumulator() {
            return new Tuple2<>(0L, 0L);
        }

        @Override
        public Tuple2<Long, Long> add(Tuple2<String, Long> value, Tuple2<Long, Long> accumulator) {
            return new Tuple2<>(accumulator.f0 + value.f1, accumulator.f1 + 1L);
        }

        @Override
        public Double getResult(Tuple2<Long, Long> accumulator) {
            return ((double) accumulator.f0) / accumulator.f1;
        }

        @Override
        public Tuple2<Long, Long> merge(Tuple2<Long, Long> a, Tuple2<Long, Long> b) {
            return new Tuple2<>(a.f0 + b.f0, a.f1 + b.f1);
        }
    }

    private static class MyProcessWindowFunction
            extends ProcessWindowFunction<Double, Tuple2<String, Double>, String, TimeWindow> {

        public void process(String key,
                            Context context,
                            Iterable<Double> averages,
                            Collector<Tuple2<String, Double>> out) {
            // the Iterable holds exactly one element: the pre-aggregated average
            Double average = averages.iterator().next();
            out.collect(new Tuple2<>(key, average));
        }
    }

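This article has covered the concepts behind Flink windows together with code examples. The next article shows how to use each type of window in detail.

Copyright belongs to the original author, 一瓢一瓢的饮 alanchan. If there is any infringement, please contact us for removal.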