Hadoop课设蔬菜统计

题目要求

蔬菜统计

根据“蔬菜.txt”的数据,利用Hadoop平台,实现价格统计与可视化显示。

要求:通过MapReduce分析列表中的蔬菜数据。

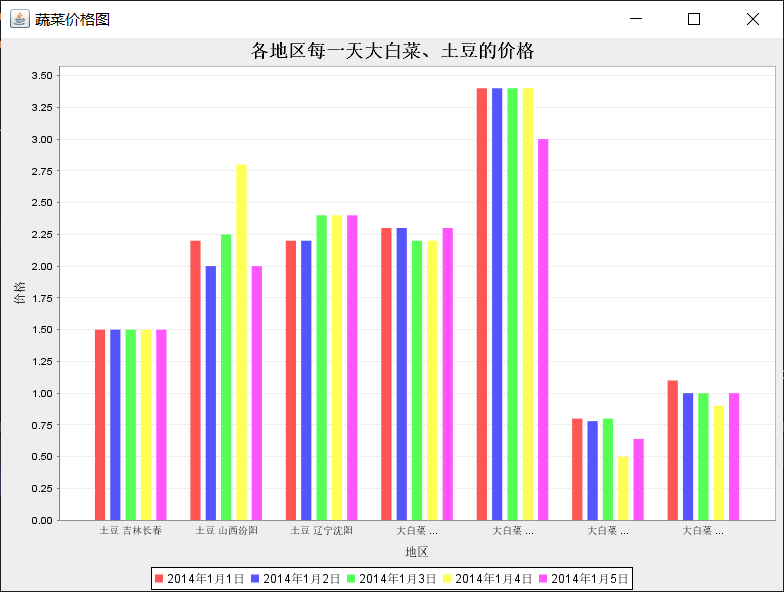

(1)统计各地区每一天大白菜、土豆的价格(柱状图)

(2)选取一个城市,统计各个蔬菜价格变化曲线(折线图)

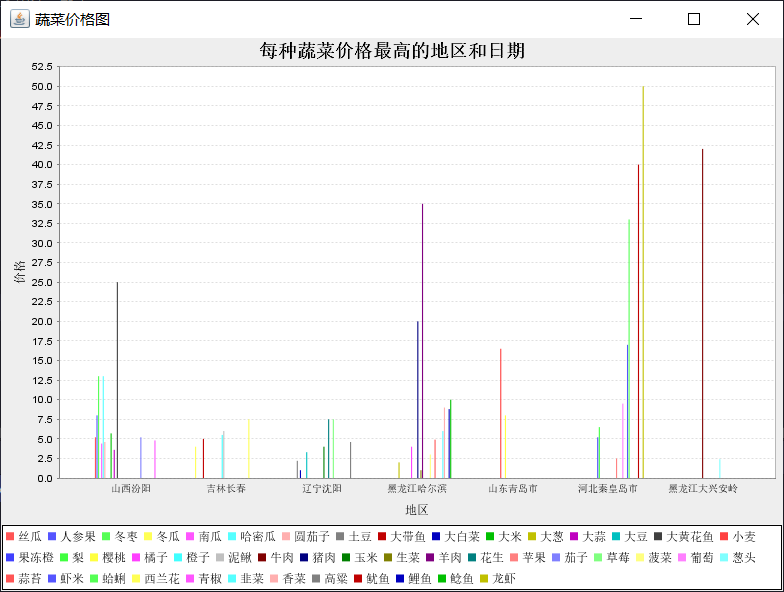

(3)统计每种蔬菜价格最高的地区和日期 (柱状图)

(4)加载Hbase、Hive等软件,并说明

API

(1)map:按列表进行分片分区

(2)reduce:按照要求进行统计

题目分析

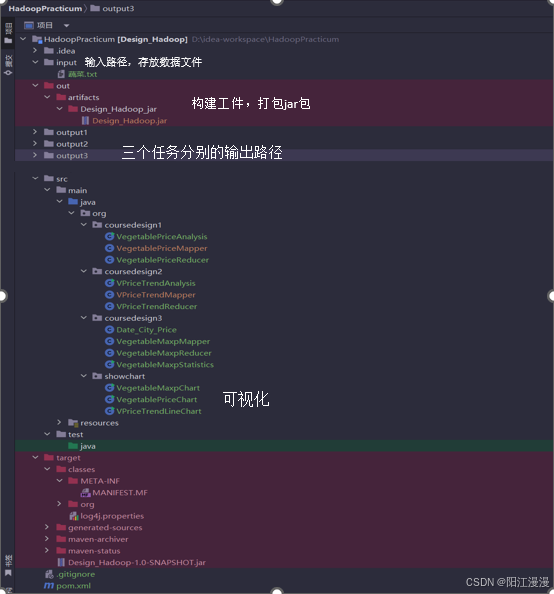

- 可以将整体分为三部分:- 数据分析与清理 理解数据格式- map reduce作业- 数据可视化

- 前置准备:我使用的是idea+maven框架 配置Hadoop2.8.3版本(推荐2x 不推荐3x)- 上传数据文件- 环境配置这里不过多赘述,大家随便搜都能找到很好的配置教程- 导入的Maven依赖 pom.xml> 这是我调整过很多次最终导入成功的依赖,如果不成功可以多刷新几次,或者可以换一换版本号,hadoop版本和依赖的版本有时需要一致

<?xml version="1.0" encoding="UTF-8"?><projectxmlns="http://maven.apache.org/POM/4.0.0"xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"xsi:schemaLocation="http://maven.apache.org/POM/4.0.0 http://maven.apache.org/xsd/maven-4.0.0.xsd"><modelVersion>4.0.0</modelVersion><groupId>java_Hadoop</groupId><artifactId>Design_Hadoop</artifactId><version>1.0-SNAPSHOT</version><dependencies><dependency><groupId>org.apache.hadoop</groupId><artifactId>hadoop-common</artifactId><version>2.8.3</version></dependency><dependency><groupId>org.apache.hadoop</groupId><artifactId>hadoop-hdfs</artifactId><version>2.8.3</version></dependency><dependency><groupId>org.apache.hadoop</groupId><artifactId>hadoop-mapreduce-client-core</artifactId><version>2.8.3</version></dependency><dependency><groupId>org.apache.hadoop</groupId><artifactId>hadoop-mapreduce-client-jobclient</artifactId><version>2.8.3</version></dependency><dependency><groupId>log4j</groupId><artifactId>log4j</artifactId><version>1.2.17</version></dependency><dependency><groupId>org.apache.hbase</groupId><artifactId>hbase-server</artifactId>

<version>2.3.5</version></dependency><dependency><groupId>org.apache.hbase</groupId><artifactId>hbase-client</artifactId><version>2.3.5</version></dependency><dependency><groupId>org.apache.hive</groupId><artifactId>hive-exec</artifactId><version>3.1.2</version></dependency><dependency><groupId>org.jfree.chart</groupId><artifactId>com.springsource.org.jfree.chart</artifactId><version>1.0.9</version></dependency><dependency><groupId>org.jfree</groupId><artifactId>com.springsource.org.jfree</artifactId><version>1.0.12</version></dependency><dependency><groupId>javax.servlet</groupId><artifactId>com.springsource.javax.servlet</artifactId><version>2.4.0</version><scope>provided</scope></dependency></dependencies><build><plugins><plugin><groupId>org.apache.maven.plugins</groupId><artifactId>maven-jar-plugin</artifactId><version>3.1.0</version><configuration><archive><manifest><addClasspath>true</addClasspath><classpathPrefix>lib/</classpathPrefix><mainClass>org.coursedesign2.VPriceTrendAnalysis</mainClass></manifest><manifestEntries><Class-Path>.</Class-Path></manifestEntries></archive><excludes><exclude>META-INF/*.SF</exclude><exclude>META-INF/*.DSA</exclude><exclude>META-INF/*.RSA</exclude></excludes></configuration></plugin></plugins></build><properties><maven.compiler.source>9</maven.compiler.source><maven.compiler.target>9</maven.compiler.target></properties></project>

- 可视化的部分我选择的是java的jfree类库,我觉得也可以使用Python绘图(题目里也没有指定)

代码部分

目录结构

问题一:

主程序入口类

packageorg.coursedesign1;importorg.apache.hadoop.conf.Configuration;importorg.apache.hadoop.fs.Path;importorg.apache.hadoop.io.Text;importorg.apache.hadoop.mapreduce.Job;importorg.apache.hadoop.mapreduce.lib.input.FileInputFormat;importorg.apache.hadoop.mapreduce.lib.output.FileOutputFormat;publicclassVegetablePriceAnalysis{publicstaticvoidmain(String[] args)throwsException{// 创建 Hadoop 配置对象Configuration conf =newConfiguration();// 获取 Job 实例Job job =Job.getInstance(conf,"VegetablePriceAnalysis");// 设置作业的 JAR 文件,这里使用当前类作为 JAR 文件的引用

job.setJarByClass(VegetablePriceAnalysis.class);// 设置 Mapper 类

job.setMapperClass(VegetablePriceMapper.class);// 设置 Reducer 类

job.setReducerClass(VegetablePriceReducer.class);// 设置作业输出的键和值的类型

job.setOutputKeyClass(Text.class);

job.setOutputValueClass(Text.class);// 硬编码作业的输入路径FileInputFormat.setInputPaths(job,newPath("D:\\idea-workspace\\HadoopPracticum\\input"));// 硬编码作业的输出路径FileOutputFormat.setOutputPath(job,newPath("D:\\idea-workspace\\HadoopPracticum\\output1"));// 提交作业

job.submit();// 等待作业完成,并根据作业的成功与否退出程序boolean completed = job.waitForCompletion(true);System.exit(completed ?0:1);}}

Map类

packageorg.coursedesign1;importorg.apache.hadoop.io.LongWritable;importorg.apache.hadoop.io.Text;importorg.apache.hadoop.mapreduce.Mapper;importjava.io.IOException;publicclassVegetablePriceMapperextendsMapper<LongWritable,Text,Text,Text>{//输入键和值都是 Text 类型,输出键和值也都是 Text 类型publicvoidmap(LongWritable key,Text value,Context context)throwsIOException,InterruptedException{String line = value.toString();String[] data = line.split("\\s+");//一个或多个空格作为分隔符来分割字符串,得到数据数组 data// 跳过标题行(序号为1)if(key.get()==1){return;}//只处理包含"大白菜"或"土豆"的数据行if(!data[1].equals("大白菜")&&!data[1].equals("土豆"))return;//数据列从0开始,选取1和89作为键(蔬菜、省城),循环获取的价格作为值for(int i=2; i<=6; i++){

context.write(newText(data[1]+" "+ data[8]+data[9]),newText(data[i]));}}}

Reduce类

packageorg.coursedesign1;importorg.apache.hadoop.io.*;importorg.apache.hadoop.mapreduce.Reducer;importjava.io.IOException;publicclassVegetablePriceReducerextendsReducer<Text,Text,Text,Text>{//输入输出键和值都是Text类型publicvoidreduce(Text key,Iterable<Text> values,Context context)throwsIOException,InterruptedException{StringBuilder sb =newStringBuilder();for(Text val : values){

sb.append(val.toString());

sb.append(" ");}

context.write(key,newText(sb.toString()));}}

可视化

packageorg.showchart;importorg.jfree.chart.ChartFactory;importorg.jfree.chart.ChartPanel;importorg.jfree.chart.JFreeChart;importorg.jfree.chart.plot.PlotOrientation;importorg.jfree.data.category.DefaultCategoryDataset;importjavax.swing.*;importjava.io.BufferedReader;importjava.io.FileReader;importjava.io.IOException;publicclassVegetablePriceChartextendsJFrame{publicVegetablePriceChart(){super("蔬菜价格图");DefaultCategoryDataset dataset =newDefaultCategoryDataset();// 读取数据文件try(BufferedReader reader =newBufferedReader(newFileReader("D:\\idea-workspace\\HadoopPracticum\\output1\\part-r-00000"))){String line;while((line = reader.readLine())!=null){String[] parts = line.split("\\s+");String vegetable = parts[0];String city = parts[1];for(int i =2; i < parts.length; i++){double price =Double.parseDouble(parts[i]);String date ="2014年1月"+(i -1)+"日";

dataset.addValue(price, date, vegetable +" "+ city);}}}catch(IOException e){

e.printStackTrace();}// 创建图表JFreeChart barChart =ChartFactory.createBarChart("各地区每一天大白菜、土豆的价格","地区","价格",

dataset,PlotOrientation.VERTICAL,true,true,false);// 创建图表面板并添加到窗口ChartPanel chartPanel =newChartPanel(barChart);setContentPane(chartPanel);}publicstaticvoidmain(String[] args){SwingUtilities.invokeLater(()->{VegetablePriceChart example =newVegetablePriceChart();

example.setSize(800,600);

example.setLocationRelativeTo(null);

example.setDefaultCloseOperation(WindowConstants.EXIT_ON_CLOSE);

example.setVisible(true);});}}

](https://imgse.com/i/pkIz3Nj)

](https://imgse.com/i/pkIz3Nj)

问题二:

主程序入口类

packageorg.coursedesign2;importorg.apache.hadoop.conf.Configuration;importorg.apache.hadoop.fs.Path;importorg.apache.hadoop.io.*;importorg.apache.hadoop.mapreduce.*;importorg.apache.hadoop.mapreduce.lib.input.FileInputFormat;importorg.apache.hadoop.mapreduce.lib.output.FileOutputFormat;publicclassVPriceTrendAnalysis{publicstaticvoidmain(String[] args)throwsException{Configuration conf =newConfiguration();Job job =Job.getInstance(conf,"Vegetable Price Trend for Selected City");

job.setJarByClass(VPriceTrendAnalysis.class);

job.setMapperClass(VPriceTrendMapper.class);

job.setReducerClass(VPriceTrendReducer.class);

job.setOutputKeyClass(Text.class);

job.setOutputValueClass(Text.class);FileInputFormat.setInputPaths(job,newPath("D:\\idea-workspace\\HadoopPracticum\\input"));FileOutputFormat.setOutputPath(job,newPath("D:\\idea-workspace\\HadoopPracticum\\output2"));System.exit(job.waitForCompletion(true)?0:1);}}

Map类

packageorg.coursedesign2;importorg.apache.hadoop.conf.Configuration;importorg.apache.hadoop.fs.Path;importorg.apache.hadoop.io.*;importorg.apache.hadoop.mapreduce.*;importorg.apache.hadoop.mapreduce.lib.input.FileInputFormat;importorg.apache.hadoop.mapreduce.lib.output.FileOutputFormat;publicclassVPriceTrendAnalysis{publicstaticvoidmain(String[] args)throwsException{Configuration conf =newConfiguration();Job job =Job.getInstance(conf,"Vegetable Price Trend for Selected City");

job.setJarByClass(VPriceTrendAnalysis.class);

job.setMapperClass(VPriceTrendMapper.class);

job.setReducerClass(VPriceTrendReducer.class);

job.setOutputKeyClass(Text.class);

job.setOutputValueClass(Text.class);FileInputFormat.setInputPaths(job,newPath("D:\\idea-workspace\\HadoopPracticum\\input"));FileOutputFormat.setOutputPath(job,newPath("D:\\idea-workspace\\HadoopPracticum\\output2"));System.exit(job.waitForCompletion(true)?0:1);}}

Reduce类

packageorg.coursedesign2;importorg.apache.hadoop.io.Text;importorg.apache.hadoop.mapreduce.Reducer;importjava.io.IOException;publicclassVPriceTrendReducerextendsReducer<Text,Text,Text,Text>{publicvoidreduce(Text key,Iterable<Text> values,Context context)throwsIOException,InterruptedException{StringBuilder sb =newStringBuilder();for(Text val : values){

sb.append(val.toString());

sb.append(" ");}

context.write(key,newText(sb.toString()));}}

可视化

packageorg.showchart;importorg.jfree.chart.ChartFactory;importorg.jfree.chart.ChartPanel;importorg.jfree.chart.JFreeChart;importorg.jfree.chart.plot.PlotOrientation;importorg.jfree.chart.plot.XYPlot;importorg.jfree.chart.renderer.xy.XYLineAndShapeRenderer;importorg.jfree.data.xy.XYSeries;importorg.jfree.data.xy.XYSeriesCollection;importjavax.swing.*;importjava.awt.*;importjava.io.BufferedReader;importjava.io.FileReader;importjava.io.IOException;publicclassVPriceTrendLineChartextendsJFrame{publicVPriceTrendLineChart(){super("蔬菜价格趋势折线图");XYSeriesCollection dataset =newXYSeriesCollection();// 读取数据文件try(BufferedReader reader =newBufferedReader(newFileReader("D:\\idea-workspace\\HadoopPracticum\\output2\\part-r-00000"))){String line;while((line = reader.readLine())!=null){String[] parts = line.split("\\s+");String vegetable = parts[0];XYSeries series =newXYSeries(vegetable);for(int i =1; i < parts.length; i++){double price =Double.parseDouble(parts[i]);

series.add(i, price);}

dataset.addSeries(series);}}catch(IOException e){

e.printStackTrace();}// 创建图表JFreeChart lineChart =ChartFactory.createXYLineChart("山西汾阳蔬菜价格趋势折线图","日期","价格",

dataset,PlotOrientation.VERTICAL,true,true,false);// 自定义渲染器以显示数据点XYPlot plot = lineChart.getXYPlot();XYLineAndShapeRenderer renderer =newXYLineAndShapeRenderer();for(int i =0; i < dataset.getSeriesCount(); i++){

renderer.setSeriesShapesVisible(i,true);

renderer.setSeriesShapesFilled(i,true);}

plot.setRenderer(renderer);// 设置日期轴标签

plot.getDomainAxis().setLabel("日期");

plot.getDomainAxis().setTickLabelsVisible(true);

plot.getDomainAxis().setTickLabelFont(newFont("SansSerif",Font.PLAIN,12));

plot.getDomainAxis().setTickLabelPaint(Color.BLACK);// 设置价格轴标签

plot.getRangeAxis().setLabel("价格");

plot.getRangeAxis().setTickLabelsVisible(true);

plot.getRangeAxis().setTickLabelFont(newFont("SansSerif",Font.PLAIN,12));

plot.getRangeAxis().setTickLabelPaint(Color.BLACK);// 创建图表面板并添加到窗口ChartPanel chartPanel =newChartPanel(lineChart);setContentPane(chartPanel);}publicstaticvoidmain(String[] args){SwingUtilities.invokeLater(()->{VPriceTrendLineChart example =newVPriceTrendLineChart();

example.setSize(800,600);

example.setLocationRelativeTo(null);

example.setDefaultCloseOperation(WindowConstants.EXIT_ON_CLOSE);

example.setVisible(true);});}}

问题三:

主程序入口类

packageorg.coursedesign3;importorg.apache.hadoop.conf.Configuration;importorg.apache.hadoop.fs.Path;importorg.apache.hadoop.io.FloatWritable;importorg.apache.hadoop.io.Text;importorg.apache.hadoop.mapreduce.Job;importorg.apache.hadoop.mapreduce.lib.input.FileInputFormat;importorg.apache.hadoop.mapreduce.lib.output.FileOutputFormat;publicclassVegetableMaxpStatistics{publicstaticvoidmain(String[] args)throwsException{Configuration conf =newConfiguration();Job job =newJob(conf,"Vegetable Highest Price");

job.setJarByClass(VegetableMaxpStatistics.class);

job.setMapperClass(VegetableMaxpMapper.class);

job.setReducerClass(VegetableMaxpReducer.class);

job.setOutputKeyClass(Text.class);

job.setOutputValueClass(Date_City_Price.class);FileInputFormat.setInputPaths(job,newPath("D:\\idea-workspace\\HadoopPracticum\\input"));FileOutputFormat.setOutputPath(job,newPath("D:\\idea-workspace\\HadoopPracticum\\output3"));System.exit(job.waitForCompletion(true)?0:1);}}

Map类

packageorg.coursedesign3;importorg.apache.hadoop.io.LongWritable;importorg.apache.hadoop.io.Text;importorg.apache.hadoop.mapreduce.Mapper;importjava.io.IOException;publicclassVegetableMaxpMapperextendsMapper<LongWritable,Text,Text,Date_City_Price>{privateString[] dates;privateString marketName;privateString province;privateString city;@Overrideprotectedvoidsetup(Context context)throwsIOException,InterruptedException{// 读取日期行,假设第一行是标题行,第二行是日期行Text value =newText("2014年1月1日\t2014年1月2日\t2014年1月3日\t2014年1月4日\t2014年1月5日");String[] headerData = value.toString().split("\t");

dates =newString[headerData.length];System.arraycopy(headerData,0, dates,0, headerData.length);}publicvoidmap(LongWritable key,Text value,Context context)throwsIOException,InterruptedException{String line = value.toString();String[] data = line.split("\\s+");// 跳过标题行(序号为1)if(key.get()==1){return;}// 读取批发市场名称、省份和城市信息

marketName = data[7];

province = data[8];

city = data[9];// 第1列是蔬菜名称String vegetableName = data[1];// 第2到第6列是价格数据for(int i =2; i <=6; i++){// String priceStr = data[i].trim();String priceStr = data[i];if(!priceStr.isEmpty()){try{float price =Float.parseFloat(priceStr);// 构造键为蔬菜名称、日期、批发市场名称、省份和城市的组合// Text outKey = new Text(vegetableName + "\t" + dates[i-2] + "\t" + marketName + "\t" + province + "\t" + city);Text outKey =newText(vegetableName);Date_City_Price outValue =newDate_City_Price(dates[i-2],province+city,price);

context.write(outKey, outValue);}catch(NumberFormatException nfe){System.err.println("Error parsing price for "+ vegetableName +": "+ priceStr);}}}}}

自定义类

packageorg.coursedesign3;importorg.apache.hadoop.io.WritableComparable;importjava.io.DataInput;importjava.io.DataOutput;importjava.io.IOException;publicclassDate_City_PriceimplementsWritableComparable<Date_City_Price>{// 存储日期,城市,价格String date;String city;float price;publicDate_City_Price(String date,String city,float price){this.date = date;this.city = city;this.price = price;}publicDate_City_Price(){this.date ="";this.city ="";this.price =0.0f;}@OverridepublicintcompareTo(Date_City_Price o){returnFloat.compare(price,o.price);}@Overridepublicvoidwrite(DataOutput dataOutput)throwsIOException{

dataOutput.writeUTF(date);

dataOutput.writeUTF(city);

dataOutput.writeUTF(String.valueOf(price));}@OverridepublicvoidreadFields(DataInput dataInput)throwsIOException{

date = dataInput.readUTF();

city = dataInput.readUTF();

price =Float.parseFloat(dataInput.readUTF());}publicStringgetMes(){return date +" "+ city +" "+ price;}}

Reduce类

packageorg.coursedesign3;importorg.apache.hadoop.io.FloatWritable;importorg.apache.hadoop.io.Text;importorg.apache.hadoop.mapreduce.Reducer;importjava.io.IOException;publicclassVegetableMaxpReducerextendsReducer<Text,Date_City_Price,Text,Text>{privateText maxKey =newText();privateFloatWritable maxValue =newFloatWritable();publicvoidreduce(Text key,Iterable<Date_City_Price> values,Context context)throwsIOException,InterruptedException{float maxPrice =Float.MIN_VALUE;Date_City_Price temp =null;for(Date_City_Price val : values){if(val.price > maxPrice){

maxPrice = val.price;

temp = val;}}// 设置键为蔬菜名称和日期,值为最高价格// maxKey.set(key);// maxValue.set(maxPrice);

context.write(key,newText(temp.getMes()));}}

可视化

packageorg.showchart;importorg.jfree.chart.ChartFactory;importorg.jfree.chart.ChartPanel;importorg.jfree.chart.JFreeChart;importorg.jfree.chart.plot.PlotOrientation;importorg.jfree.data.category.DefaultCategoryDataset;importjavax.swing.*;importjava.io.BufferedReader;importjava.io.FileReader;importjava.io.IOException;publicclassVegetableMaxpChartextendsJFrame{publicVegetableMaxpChart(String title){super(title);// 创建数据集DefaultCategoryDataset dataset =createDataset();// 创建柱状图JFreeChart barChart =ChartFactory.createBarChart("每种蔬菜价格最高的地区和日期","地区","价格",

dataset,PlotOrientation.VERTICAL,true,true,false);// 将图表放入面板ChartPanel chartPanel =newChartPanel(barChart);

chartPanel.setPreferredSize(newjava.awt.Dimension(800,600));setContentPane(chartPanel);}privateDefaultCategoryDatasetcreateDataset(){DefaultCategoryDataset dataset =newDefaultCategoryDataset();try(BufferedReader br =newBufferedReader(newFileReader("D:\\idea-workspace\\HadoopPracticum\\output3\\part-r-00000"))){String line;while((line = br.readLine())!=null){String[] parts = line.split("\\s+");String vegetable = parts[0];String date = parts[1];String city = parts[2];double price =Double.parseDouble(parts[3]);

dataset.addValue(price, vegetable, city);}}catch(IOException e){

e.printStackTrace();}return dataset;}publicstaticvoidmain(String[] args){SwingUtilities.invokeLater(()->{VegetableMaxpChart example =newVegetableMaxpChart("蔬菜价格图");

example.setSize(800,600);

example.setLocationRelativeTo(null);

example.setDefaultCloseOperation(WindowConstants.EXIT_ON_CLOSE);

example.setVisible(true);});}}

特别说明:问题三的情况比较特殊,Map到Reduce阶段要同时携带多种数据项,不能丢失信息,但也不可全作为键,因此更改了值的类型,自定义Date_City_Price类作为outValue的类型

打包Jar包

有很多种方式,我选取了最直观的一种,也是搜索一下就可以模仿操作了

构建 --> 构建工件 --> 选择jar包 --> 构建

Hadoop启动

启动命令行窗口 输入命令start-all.cmd

成功后会出现四个窗口namen,datanode,namemanager和resourcemanager

输入jps查看当前进程

问题处理

已解决

- 数据的分割格式一定要自己查看,多个空格和一个tab识别的是不一样的

- log4j.properties日志报错

在resources目录下创建一个 log4j.properties的文件,在里面写如下内容即可解决

log4j.rootLogger=INFO, stdout

log4j.appender.stdout=org.apache.log4j.ConsoleAppender

log4j.appender.stdout.layout=org.apache.log4j.PatternLayout

log4j.appender.stdout.layout.ConversionPattern=%d %p [%c] - %m%n

log4j.appender.logfile=org.apache.log4j.FileAppender

log4j.appender.logfile.File=target/spring.log

log4j.appender.logfile.layout=org.apache.log4j.PatternLayout

log4j.appender.logfile.layout.ConversionPattern=%d %p [%c] - %m%n

- 刚启动hadoop一堆节点什么的时候,不要马上运行代码,因为刚开启的时候默认打开了安全模式,过一会就自动关闭了

未解决

- log4j捆绑问题,由于这里面写了三个问题,造成个多个捆绑。网上有解决办法,但是我不想解决了(摆烂)

- 只能使用硬编码路径,不能在类的编辑配置中直接输入路径实参。感觉是我的maven依赖有点问题,导致我的jar包输出每次路径都是固定的,三个问题只能输出一个问题的结果

- 在HDFS上运行的时候,由于jar包存在Kerberos认证、授权以及加密传输的特点,出现了签名验证失败,导致我只能禁用签名验证(不推荐):作为临时解决方案,但这会降低安全性

如有解决办法可在评论区讨论交流;博主也是自学的,如有错误请批评指正~

求源码、可视化图片、实验报告、ppt请私聊(其实源码也该给的都给了)

版权归原作者 阳江漫漫 所有, 如有侵权,请联系我们删除。