
I. Kerberos server

- Upload the Kerberos installation packages to `/opt/rpm`.

- Install:

      rpm -Uvh --force --nodeps *.rpm
      yum -y install krb5-libs krb5-workstation krb5-server

- Edit the KDC configuration: `vi /var/kerberos/krb5kdc/kdc.conf`

  Keep each comment on its own line; the krb5 configuration format only recognizes comments at the start of a line.

  ```
  [kdcdefaults]
  # UDP port the KDC listens on
  kdc_ports = 88
  # TCP port the KDC listens on
  kdc_tcp_ports = 88

  # Realms served by this KDC; one KDC can serve several realms
  [realms]
  HADOOP.COM = {
    # Access control file for principals with administrative rights (default file name: kadm5.acl)
    acl_file = /var/kerberos/krb5kdc/kadm5.acl
    # Words that may not be used as passwords because they are easy to crack or guess
    dict_file = /usr/share/dict/words
    # Maximum ticket lifetime (default: 1d)
    max_life = 1d
    # Maximum renewable lifetime of a ticket (default: 0)
    max_renewable_life = 7d
    # Keytab the KDC uses for verification
    admin_keytab = /var/kerberos/krb5kdc/kadm5.keytab
    # Encryption types supported by the KDC
    supported_enctypes = aes256-cts:normal aes128-cts:normal des3-hmac-sha1:normal arcfour-hmac:normal camellia256-cts:normal camellia128-cts:normal des-hmac-sha1:normal des-cbc-md5:normal des-cbc-crc:normal
  }
  ```

- Edit the client configuration: `vi /etc/krb5.conf`

  ```
  # Configuration snippets may be placed in this directory as well
  includedir /etc/krb5.conf.d/

  # Logging
  [logging]
  default = FILE:/var/log/krb5libs.log
  kdc = FILE:/var/log/krb5kdc.log
  admin_server = FILE:/var/log/kadmind.log

  [libdefaults]
  # Default realm; must match the name of the realm configured under [realms]
  default_realm = HADOOP.COM
  # Whether to use DNS TXT records to determine a host's realm; usually false
  dns_lookup_realm = false
  # Ticket lifetime, usually 24 hours
  ticket_lifetime = 24h
  # Maximum renewable lifetime, usually one week; once a ticket expires, access to secured services fails
  renew_lifetime = 7d
  forwardable = true
  rdns = false
  pkinit_anchors = FILE:/etc/pki/tls/certs/ca-bundle.crt
  # Use TCP rather than UDP on port 88 (by default the client may switch between them; relevant when opening firewall ports)
  udp_preference_limit = 1
  # default_realm = EXAMPLE.COM
  # Location of the ticket cache
  # default_ccache_name = KEYRING:persistent:%{uid}

  [realms]
  # Changed from EXAMPLE.COM to HADOOP.COM to match default_realm
  HADOOP.COM = {
    # Host of the KDC (key distribution center)
    kdc = 192.168.248.12
    # Host of the admin server
    admin_server = 192.168.248.12
  }

  [domain_realm]
  # Every host under the hadoop.com domain maps to HADOOP.COM
  .hadoop.com = HADOOP.COM
  # The hadoop.com domain itself maps to HADOOP.COM
  hadoop.com = HADOOP.COM
  ```

- Initialize the database: `/usr/sbin/kdb5_util create -s -r HADOOP.COM`. Set the password to `ffcsict1234!#%&`.

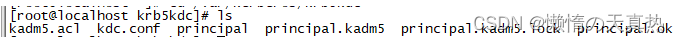

- Check the generated files: `cd /var/kerberos/krb5kdc`, then `ls`.

- Create the database administrator: `/usr/sbin/kadmin.local -q "addprinc admin/admin"`, with the password `ffcsict1234!#%&`.

- Set the administrator ACL: `vi /var/kerberos/krb5kdc/kadm5.acl`

      */[email protected]*

- Enable and start the admin service: `systemctl enable kadmin`, `systemctl start kadmin`, then `systemctl status kadmin`.

- Enable and start the KDC service: `systemctl enable krb5kdc`, `systemctl start krb5kdc`, then `systemctl status krb5kdc`.

- Log in as administrator: `kadmin.local`. Note: on a machine other than the KDC server, run `kinit admin/admin` first and then `kadmin`.

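- Administrator operations:
  - Log in: `kinit admin/admin`, then `kadmin`. On the server you can enter `kadmin.local` directly; on a client, log in as described above.
  - List principals: `listprincs`
  - Create a user: `addprinc <username>`. To create an administrator account, give the principal a name ending in `/admin`, as in the administrator-creation step above.
  - Change a password: `kpasswd <username>`; enter the old password first, then the new one. If the password has been forgotten, log in with `kadmin.local` and run `change_password <username>` to set a new password directly.
  - Delete a user: `delprinc <username>`
  - Show a user's details: `getprinc <username>`
  - List all commands: `?`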

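- Create credentials for the kafka user. Before doing so, note that a client can log in in two ways: with a keytab (credential file) or with an account and password.
  - Keytab login:
    1. Create the user: `addprinc -randkey kafka`
    2. Export the keytab: `ktadd -k /root/kerberos/kafka.keytab kafka`, or `xst -k /root/kerberos/kafka.keytab kafka`
    3. Inspect the keytab: `klist -ket /root/kerberos/kafka.keytab`
    4. Give the generated `kafka.keytab` to the client, which can then log in with it.
  - Account and password login:
    1. Create the user: `addprinc kafka`, then enter the password when prompted.
    2. Give the account and password to the client, which can then log in with them.
    3. A user created this way can also log in with an exported keytab, but the export must use the `-norandkey` option; without it, every export generates a new random key and the password stops working. `-norandkey` is only available inside `kadmin.local` on the server. Example: `ktadd -k /root/kerberos/kafka.keytab -norandkey kafka`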

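- Export several principals into one keytab: `xst -norandkey -k /root/kerberos/kafka.keytab-all kafka kadmin/admin` (only supported inside `kadmin.local` on the server).

- Dump all principal data: `kdb5_util dump ./slava_data`. This produces two files, `slava_data` and `slava_data.dump_ok`; the data itself is stored in `slava_data`.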
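The keytab-based account steps in this section could be scripted roughly as follows. This is a minimal sketch rather than the author's tooling: the function name `print_keytab_setup` is hypothetical, and it only prints the `kadmin.local -q` invocations (the same `-q` form used when creating `admin/admin`) so they can be reviewed before being run on the KDC.

```shell
# Print (do not execute) the commands that create a principal with a
# random key, export it to a keytab, and list the keytab contents.
# print_keytab_setup is a hypothetical helper name, not part of Kerberos.
print_keytab_setup() {
  principal="$1"   # e.g. kafka
  keytab="$2"      # e.g. /root/kerberos/kafka.keytab
  printf 'kadmin.local -q "addprinc -randkey %s"\n' "$principal"
  printf 'kadmin.local -q "ktadd -k %s %s"\n' "$keytab" "$principal"
  printf 'klist -ket %s\n' "$keytab"
}

print_keytab_setup kafka /root/kerberos/kafka.keytab
```

Printing first keeps the script harmless on machines without a KDC; piping the output to `sh` on the KDC host would execute the three steps in order.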

II. Kerberos client

- Install: `yum install -y krb5-libs krb5-workstation krb5-devel krb5-auth-dialog`

- Copy `/etc/krb5.conf` from the server to `/etc` on the other nodes.

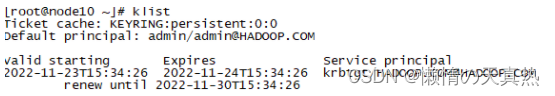

- Log in with account and password: `kinit admin/admin`, then `klist`.

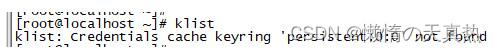

- Clear the ticket cache of the currently authenticated user: `kdestroy`, then `klist`.

- Log in with a keytab: `kinit -kt /home/hadoop/kerberos/admin.keytab admin/admin`, then `klist`.
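The keytab login can be wrapped in a small guard so that a missing or unreadable keytab is reported before `kinit` is called. This is only a sketch; `login_with_keytab` is a hypothetical helper name, while `kdestroy`, `kinit -kt` and `klist` are the client commands used in the steps above.

```shell
# Log in with a keytab and show the resulting ticket.
# login_with_keytab is a hypothetical helper name.
login_with_keytab() {
  keytab="$1"      # e.g. /home/hadoop/kerberos/admin.keytab
  principal="$2"   # e.g. admin/admin
  if [ ! -r "$keytab" ]; then
    echo "keytab not readable: $keytab" >&2
    return 1
  fi
  # Drop any cached ticket first; ignore the error when no cache exists.
  kdestroy 2>/dev/null || true
  kinit -kt "$keytab" "$principal" && klist
}

# Usage on a client node:
#   login_with_keytab /home/hadoop/kerberos/admin.keytab admin/admin
```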

III. Installing HTTPS for the Hadoop cluster

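Installation note: the CA key and certificate (`hdfs_ca_key` and `hdfs_ca_cert`) only need to be generated on one node. Every node, including the one that generated the CA, must then carry out the steps from the fourth step (generating the keystore and truststore) onward. Run everything below as root.

- Generate the CA certificate on node10. You are asked for the password twice. In the subject, C is the country code (CN for China), ST the province, L the city, O and OU the company or personal domain, and CN=ha01 the hostname of the machine that generates the CA certificate.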

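      openssl req -new -x509 -keyout hdfs_ca_key -out hdfs_ca_cert -days 9999 -subj /C=CN/ST=shanxi/L=xian/O=hlk/OU=hlk/CN=ha01

  Enter the password `ffcsict123` when prompted.

- Distribute the CA files `hdfs_ca_key` and `hdfs_ca_cert` generated on node10 to the `/tmp` directory on every node. Note: I copied them with WinSCP; if you use `scp`, it is advisable to configure passwordless SSH for root as well.

      scp /opt/https/hdfs_ca_cert [email protected]:/tmp
      scp /opt/https/hdfs_ca_key [email protected]:/tmp

- After the transfer, delete the CA files on node10.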

- Generate the keystore and truststore on every machine (every node in the cluster must run these commands):
  1. `cd /tmp`
  2. Generate the keystore. `keytool` needs a Java environment, otherwise the shell reports `command not found`. Set `name="CN=$HOSTNAME, OU=hlk, O=hlk, L=xian, ST=shanxi, C=CN"`, then run `keytool -keystore keystore -alias localhost -validity 9999 -genkey -keyalg RSA -keysize 2048 -dname "$name"`. The password from step 1 (`ffcsict123`) has to be entered four times.
  3. Add the CA to the truststore (the password is required again): `keytool -keystore truststore -alias CARoot -import -file hdfs_ca_cert`
  4. Export a certificate request from the keystore: `keytool -certreq -alias localhost -keystore keystore -file cert`
  5. Sign the request with the CA: `openssl x509 -req -CA hdfs_ca_cert -CAkey hdfs_ca_key -in cert -out cert_signed -days 9999 -CAcreateserial`
  6. Import the CA certificate and the CA-signed certificate into the keystore: `keytool -keystore keystore -alias CARoot -import -file hdfs_ca_cert`, then `keytool -keystore keystore -alias localhost -import -file cert_signed`
  7. Move the final keystore and truststore to a suitable directory and add the `.jks` suffix: `mkdir -p /etc/security/https && chmod 755 /etc/security/https`, then `cp keystore /etc/security/https/keystore.jks` and `cp truststore /etc/security/https/truststore.jks`
  8. Delete the leftover files in `/tmp`: `rm -f keystore truststore hdfs_ca_key hdfs_ca_cert.srl hdfs_ca_cert cert_signed cert`

- Configure `$HADOOP_HOME/etc/hadoop/ssl-server.xml` and `ssl-client.xml`. Configure both files on one node and send them to the same location on the other nodes. First edit the client file: `vi /home/hadoop/module/hadoop-3.2.2/etc/hadoop/ssl-client.xml`

      <configuration>
        <property>
          <name>ssl.client.truststore.location</name>
          <value>/etc/security/https/truststore.jks</value>
          <description>Truststore to be used by clients like distcp. Must be specified.</description>
        </property>
        <property>
          <name>ssl.client.truststore.password</name>
          <value>ffcsict123</value>
          <description>Optional. Default value is "".</description>
        </property>
        <property>
          <name>ssl.client.truststore.type</name>
          <value>jks</value>
          <description>Optional. The keystore file format, default value is "jks".</description>
        </property>
        <property>
          <name>ssl.client.truststore.reload.interval</name>
          <value>10000</value>
          <description>Truststore reload check interval, in milliseconds. Default value is 10000 (10 seconds).</description>
        </property>
        <property>
          <name>ssl.client.keystore.location</name>
          <value>/etc/security/https/keystore.jks</value>
          <description>Keystore to be used by clients like distcp. Must be specified.</description>
        </property>
        <property>
          <name>ssl.client.keystore.password</name>
          <value>ffcsict123</value>
          <description>Optional. Default value is "".</description>
        </property>
        <property>
          <name>ssl.client.keystore.keypassword</name>
          <value>ffcsict123</value>
          <description>Optional. Default value is "".</description>
        </property>
        <property>
          <name>ssl.client.keystore.type</name>
          <value>jks</value>
          <description>Optional. The keystore file format, default value is "jks".</description>
        </property>
      </configuration>

- Then edit the server file: `vi /home/hadoop/module/hadoop-3.2.2/etc/hadoop/ssl-server.xml`

      <configuration>
        <property>
          <name>ssl.server.truststore.location</name>
          <value>/etc/security/https/truststore.jks</value>
          <description>Truststore to be used by NN and DN. Must be specified.</description>
        </property>
        <property>
          <name>ssl.server.truststore.password</name>
          <value>ffcsict123</value>
          <description>Optional. Default value is "".</description>
        </property>
        <property>
          <name>ssl.server.truststore.type</name>
          <value>jks</value>
          <description>Optional. The keystore file format, default value is "jks".</description>
        </property>
        <property>
          <name>ssl.server.truststore.reload.interval</name>
          <value>10000</value>
          <description>Truststore reload check interval, in milliseconds. Default value is 10000 (10 seconds).</description>
        </property>
        <property>
          <name>ssl.server.keystore.location</name>
          <value>/etc/security/https/keystore.jks</value>
          <description>Keystore to be used by NN and DN. Must be specified.</description>
        </property>
        <property>
          <name>ssl.server.keystore.password</name>
          <value>ffcsict123</value>
          <description>Must be specified.</description>
        </property>
        <property>
          <name>ssl.server.keystore.keypassword</name>
          <value>ffcsict123</value>
          <description>Must be specified.</description>
        </property>
        <property>
          <name>ssl.server.keystore.type</name>
          <value>jks</value>
          <description>Optional. The keystore file format, default value is "jks".</description>
        </property>
      </configuration>

- Distribute both files:

      xsync /home/hadoop/module/hadoop-3.2.2/etc/hadoop/ssl-server.xml
      xsync /home/hadoop/module/hadoop-3.2.2/etc/hadoop/ssl-client.xml

- Set ownership: `chown -R hadoop:hadoop /home/hadoop`
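Because the keystore steps have to be repeated on every node, they lend themselves to a small script. The sketch below chains the `keytool` and `openssl` commands from this section in the same order; `build_dname` and `make_node_keystore` are hypothetical helper names, and the passwords are still entered interactively, as in the manual procedure.

```shell
# Build the distinguished name used for a node's key pair.
# build_dname and make_node_keystore are hypothetical helper names.
build_dname() {
  printf 'CN=%s, OU=hlk, O=hlk, L=xian, ST=shanxi, C=CN' "$1"
}

# Generate the key pair, trust the CA, have the CA sign the certificate
# request, and import both certificates into the keystore.
# Run in the directory that holds hdfs_ca_cert and hdfs_ca_key (for
# example /tmp); keytool and openssl prompt for the passwords.
make_node_keystore() {
  host="$1"
  keytool -keystore keystore -alias localhost -validity 9999 -genkey \
    -keyalg RSA -keysize 2048 -dname "$(build_dname "$host")" &&
  keytool -keystore truststore -alias CARoot -import -file hdfs_ca_cert &&
  keytool -certreq -alias localhost -keystore keystore -file cert &&
  openssl x509 -req -CA hdfs_ca_cert -CAkey hdfs_ca_key -in cert \
    -out cert_signed -days 9999 -CAcreateserial &&
  keytool -keystore keystore -alias CARoot -import -file hdfs_ca_cert &&
  keytool -keystore keystore -alias localhost -import -file cert_signed
}

# Example: show the DN that would be used on node10.
build_dname node10; echo
```

On a node, `cd /tmp && make_node_keystore "$HOSTNAME"` would run the whole sequence; the `&&` chain stops at the first command that fails.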

IV. Integrating Kerberos with ZooKeeper

- Create a directory: `mkdir /home/hadoop/kerberos`

- Create the ZooKeeper principals, plus some extra users for later tests. Where a password is requested, use `ffcsict123`.

      kadmin.local
      addprinc -randkey zookeeper/node10
      addprinc -randkey zookeeper/node11
      addprinc -randkey zookeeper/node12
      addprinc -randkey zookeeper/node13
      addprinc hadoop/node10
      addprinc hadoop/node11
      addprinc hadoop/node12
      addprinc hadoop/node13
      addprinc -randkey HTTP/node10
      addprinc -randkey HTTP/node11
      addprinc -randkey HTTP/node12
      addprinc -randkey HTTP/node13
      addprinc -randkey root/node10
      addprinc -randkey root/node11
      addprinc -randkey root/node12
      addprinc -randkey root/node13
      addprinc -randkey zhanzhk/node10
      addprinc -randkey zhanzhk/node11
      addprinc -randkey zhanzhk/node12
      addprinc -randkey zhanzhk/node13
      addprinc -randkey hadoop

  On each node:

      xst -k /home/hadoop/kerberos/hadoop.keytab -norandkey zookeeper/node10 zookeeper/node11 zookeeper/node12 zookeeper/node13 hadoop/node10 hadoop/node11 hadoop/node12 hadoop/node13 http/node10 http/node11 http/node12 http/node13 HTTP/node10 HTTP/node11 HTTP/node12 HTTP/node13 root/node10 root/node11 root/node12 root/node13 zhanzhk/node10 zhanzhk/node11 zhanzhk/node12 zhanzhk/node13 rangeradmin/node12 rangerlookup/node12 hadoop

- Copy the keytab to all nodes.

- On every node, edit ZooKeeper's `zoo.cfg`: `vi /home/hadoop/zookeeper/apache-zookeeper-3.6.3-bin/conf/zoo.cfg`

      kerberos.removeHostFromPrincipal=true
      kerberos.removeRealmFromPrincipal=true
      authProvider.1=org.apache.zookeeper.server.auth.SASLAuthenticationProvider
      jaasLoginRenew=3600000

- Create the `jaas.conf` file: `vi /home/hadoop/zookeeper/apache-zookeeper-3.6.3-bin/conf/jaas.conf`

      Server {
        com.sun.security.auth.module.Krb5LoginModule required
        useKeyTab=true
        keyTab="/home/hadoop/kerberos/hadoop.keytab"
        storeKey=true
        useTicketCache=false
        principal="zookeeper/[email protected]";
      };
      Client {
        com.sun.security.auth.module.Krb5LoginModule required
        useKeyTab=true
        keyTab="/home/hadoop/kerberos/hadoop.keytab"
        storeKey=true
        useTicketCache=false
        principal="hadoop/[email protected]";
      };

- Distribute the file to the other nodes and change the principal on each node to match that node.

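- Configure `java.env`: `vi /home/hadoop/zookeeper/apache-zookeeper-3.6.3-bin/conf/java.env`

      export JVMFLAGS="-Djava.security.auth.login.config=/home/hadoop/zookeeper/apache-zookeeper-3.6.3-bin/conf/jaas.conf"

- Set ownership: `chown -R hadoop /home/hadoop`

- Start ZooKeeper.

- Connect to ZooKeeper: `cd /home/hadoop/zookeeper/apache-zookeeper-3.6.3-bin/bin/`, then `./zkCli.sh -server node10:2181`

- Disable ACL checks if needed. For reasons I could not determine, everything worked locally, but after deploying to production ACL enforcement was switched on and some directories could not be operated on. If you run into this, append the line `skipAcl=true` to the end of `zoo.cfg`.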
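Since `jaas.conf` differs between nodes only in the principal, it can be generated per host instead of edited by hand. The sketch below is one way to do that; `render_zk_jaas` is a hypothetical helper, and the `<service>/<host>@<realm>` principal layout is an assumption based on the principals created above (`zookeeper/node10`, `hadoop/node10`) and the `HADOOP.COM` realm.

```shell
# Print a jaas.conf for one ZooKeeper node.
# render_zk_jaas is a hypothetical helper; the principal layout
# "<service>/<host>@<realm>" is an assumption, adjust it to your KDC.
render_zk_jaas() {
  host="$1"
  realm="${2:-HADOOP.COM}"
  keytab="${3:-/home/hadoop/kerberos/hadoop.keytab}"
  for section in Server:zookeeper Client:hadoop; do
    name="${section%%:*}"      # JAAS section name
    service="${section##*:}"   # first component of the principal
    cat <<EOF
$name {
  com.sun.security.auth.module.Krb5LoginModule required
  useKeyTab=true
  keyTab="$keytab"
  storeKey=true
  useTicketCache=false
  principal="$service/$host@$realm";
};
EOF
  done
}

render_zk_jaas node11
```

Redirecting the output on each node, for example `render_zk_jaas "$HOSTNAME" > jaas.conf`, avoids copy-and-edit mistakes in the principal names.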

V. Integrating Kerberos with Ranger

- Create the principal:

      kadmin.local
      addprinc -randkey rangadmin/node12
      ktadd -norandkey -k /home/hadoop/kerberos/rangadmin.keytab HTTP/node12 rangadmin/node12

- Edit the configuration file `vi /opt/RangerAdmin/ranger-2.2.0-admin/install.properties` and add:

      spnego_principal=HTTP/[email protected]
      spnego_keytab=/home/hadoop/kerberos/rangadmin.keytab
      admin_principal=rangadmin/[email protected]
      admin_keytab=/home/hadoop/kerberos/rangadmin.keytab
      lookup_principal=rangadmin/[email protected]
      lookup_keytab=/home/hadoop/kerberos/rangadmin.keytab

- Run the ranger-admin setup script:

      cd /opt/RangerAdmin/ranger-2.2.0-admin
      ./setup.sh

- Start: `ranger-admin start`
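The six Kerberos entries in `install.properties` follow a fixed pattern, so they can be generated from the host name. This is a sketch under assumptions: `render_ranger_kerberos_props` is a hypothetical helper, and the `<name>/<host>@<realm>` principal form with the `HADOOP.COM` realm is assumed from the principals created in the first step.

```shell
# Print the Kerberos-related install.properties entries for Ranger Admin.
# render_ranger_kerberos_props is a hypothetical helper; the principal
# form "<name>/<host>@<realm>" is an assumption.
render_ranger_kerberos_props() {
  host="$1"
  realm="${2:-HADOOP.COM}"
  keytab="${3:-/home/hadoop/kerberos/rangadmin.keytab}"
  printf 'spnego_principal=HTTP/%s@%s\n' "$host" "$realm"
  printf 'spnego_keytab=%s\n' "$keytab"
  printf 'admin_principal=rangadmin/%s@%s\n' "$host" "$realm"
  printf 'admin_keytab=%s\n' "$keytab"
  printf 'lookup_principal=rangadmin/%s@%s\n' "$host" "$realm"
  printf 'lookup_keytab=%s\n' "$keytab"
}

render_ranger_kerberos_props node12
```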

VI. Integrating Kerberos with HDFS

Note 1: if you are worried that Ranger will interfere with installation testing, consider removing the Ranger configuration first and adding it back once the Kerberos tests pass. The plugin ships with a script for this, `disable-hdfs-plugin.sh`.

Note 2: perform these steps on all nodes.

- On all nodes, edit `core-site.xml`: `vi /home/hadoop/module/hadoop-3.2.2/etc/hadoop/core-site.xml`

      <!-- Kerberos authentication -->
      <property>
        <name>hadoop.security.authentication</name>
        <value>kerberos</value>
      </property>
      <property>
        <name>hadoop.security.authorization</name>
        <value>true</value>
      </property>
      <property>
        <name>hadoop.rpc.protection</name>
        <value>authentication</value>
      </property>
      <property>
        <name>hadoop.http.authentication.type</name>
        <value>kerberos</value>
      </property>

- On all nodes, edit `hdfs-site.xml`: `vi /home/hadoop/module/hadoop-3.2.2/etc/hadoop/hdfs-site.xml`. Add:

      <!-- Kerberos authentication for the NameNode -->
      <property>
        <name>dfs.block.access.token.enable</name>
        <value>true</value>
      </property>
      <property>
        <name>dfs.namenode.keytab.file</name>
        <value>/home/hadoop/kerberos/hadoop.keytab</value>
      </property>
      <property>
        <name>dfs.namenode.kerberos.principal</name>
        <value>hadoop/[email protected]</value>
      </property>
      <property>
        <name>dfs.web.authentication.kerberos.principal</name>
        <value>HTTP/[email protected]</value>
      </property>
      <property>
        <name>dfs.web.authentication.kerberos.keytab</name>
        <value>/home/hadoop/kerberos/hadoop.keytab</value>
      </property>
      <!-- SSL access to the NameNode web UI -->
      <property>
        <name>dfs.webhdfs.enabled</name>
        <value>true</value>
      </property>
      <property>
        <name>dfs.http.policy</name>
        <value>HTTPS_ONLY</value>
      </property>
      <property>
        <name>dfs.namenode.https-address</name>
        <value>0.0.0.0:50070</value>
      </property>
      <property>
        <name>dfs.permissions.supergroup</name>
        <value>hadoop</value>
        <description>The name of the group of super-users.</description>
      </property>
      <!-- Kerberos authentication for the DataNode -->
      <property>
        <name>dfs.datanode.keytab.file</name>
        <value>/home/hadoop/kerberos/hadoop.keytab</value>
      </property>
      <property>
        <name>dfs.datanode.kerberos.principal</name>
        <value>hadoop/[email protected]</value>
      </property>
      <!-- DataNode SASL settings -->
      <property>
        <name>dfs.datanode.data.dir.perm</name>
        <value>700</value>
      </property>
      <property>
        <name>dfs.datanode.address</name>
        <value>0.0.0.0:50010</value>
      </property>
      <property>
        <name>dfs.datanode.http.address</name>
        <value>0.0.0.0:50075</value>
      </property>
      <property>
        <name>dfs.data.transfer.protection</name>
        <value>integrity</value>
      </property>
      <!-- Kerberos authentication for the JournalNode -->
      <property>
        <name>dfs.journalnode.keytab.file</name>
        <value>/home/hadoop/kerberos/hadoop.keytab</value>
      </property>
      <property>
        <name>dfs.journalnode.kerberos.principal</name>
        <value>hadoop/[email protected]</value>
      </property>
      <property>
        <name>dfs.journalnode.kerberos.internal.spnego.principal</name>
        <value>HTTP/[email protected]</value>
      </property>
      <property>
        <name>dfs.journalnode.http-address</name>
        <value>0.0.0.0:8480</value>
      </property>
      <property>
        <name>dfs.https.port</name>
        <value>50470</value>
      </property>
      <property>
        <name>dfs.https.address</name>
        <value>0.0.0.0:50470</value>
      </property>
      <property>
        <name>dfs.namenode.kerberos.https.principal</name>
        <value>HTTP/[email protected]</value>
      </property>
      <property>
        <name>dfs.namenode.kerberos.internal.spnego.principal</name>
        <value>HTTP/[email protected]</value>
      </property>

  Change these existing properties:

      <!-- HTTPS address of the nn1 web UI -->
      <property>
        <name>dfs.namenode.https-address.mycluster.nn1</name>
        <value>node10:50070</value>
      </property>
      <!-- HTTPS address of the nn2 web UI -->
      <property>
        <name>dfs.namenode.https-address.mycluster.nn2</name>
        <value>node11:50070</value>
      </property>

- Start HDFS: `/home/hadoop/module/hadoop-3.2.2/sbin/start-dfs.sh`

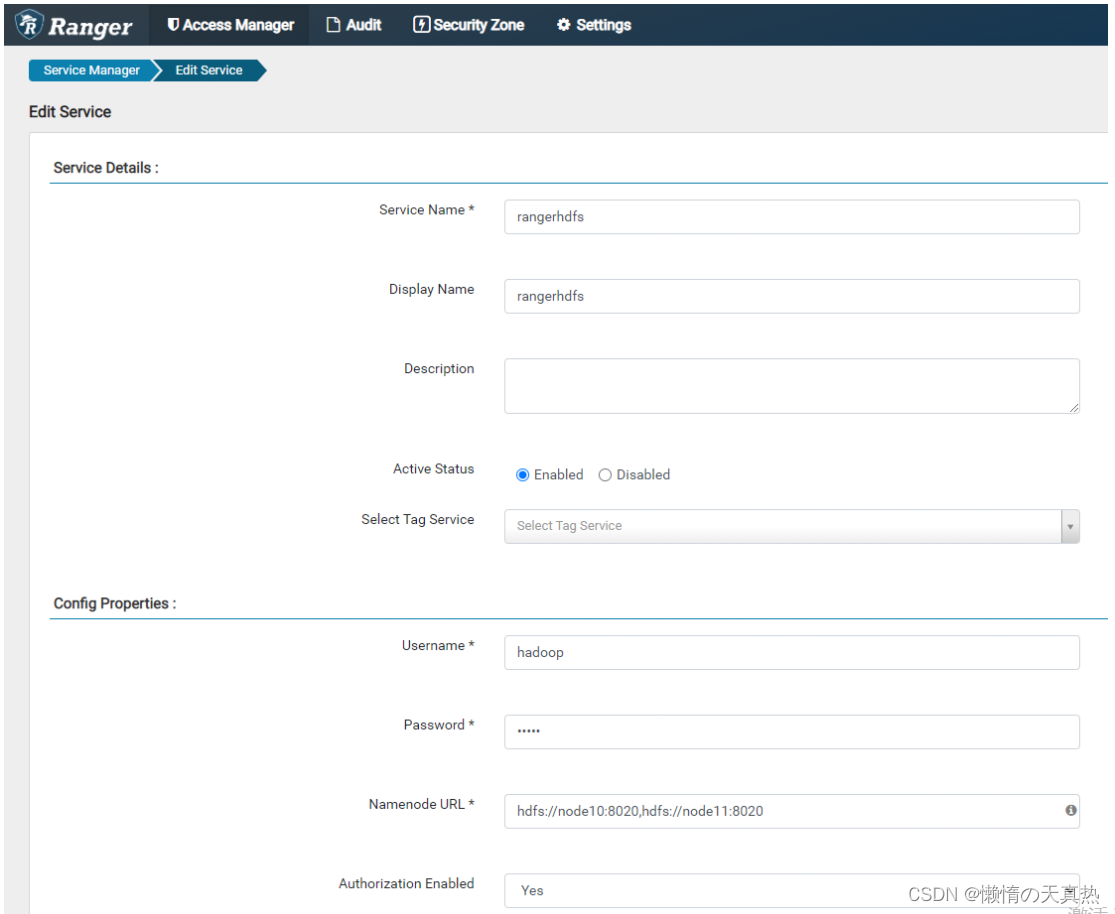

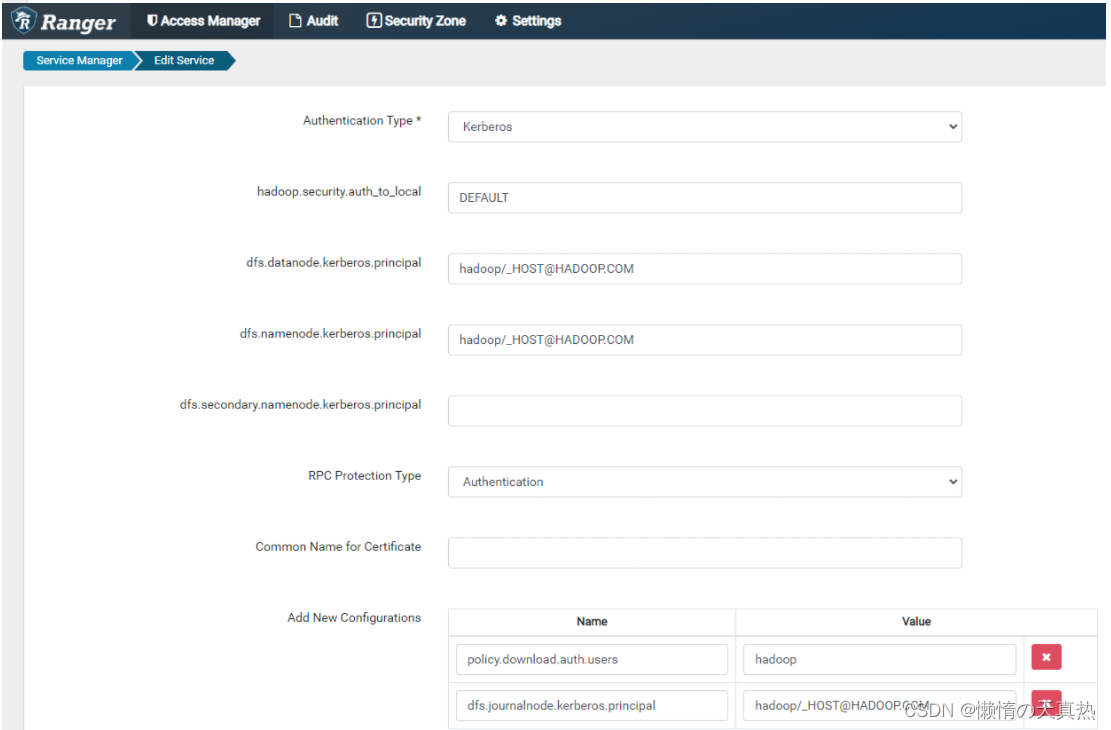

- Configure the Ranger service.

- Add the service and, in the Ranger web UI, grant the rangadmin user permissions on all directories.

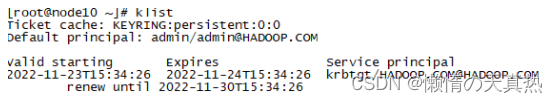

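- Verify. First distribute the configuration files, then:
  1. Initialize a ticket (on every node): `kinit -kt /home/hadoop/kerberos/hadoop.keytab hadoop/node10`
  2. Run `klist` to check whether a ticket was issued; valid-from and expiry times in the output mean it succeeded.
  3. Test: `hadoop fs -ls /`
  4. Create the zhanzhk user and test with it (no permission yet): `hadoop fs -ls /`
  5. Grant zhanzhk permissions on HDFS (see the earlier article) and test again: success!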
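The first three verification steps can be bundled into one function that stops at the first failure. This is a sketch only; `require_cmds` and `hdfs_smoke_test` are hypothetical helper names, and the commands inside are the ones listed in the steps above.

```shell
# Check that every named command is on PATH; report the first missing one.
# require_cmds and hdfs_smoke_test are hypothetical helper names.
require_cmds() {
  for cmd in "$@"; do
    if ! command -v "$cmd" >/dev/null 2>&1; then
      echo "missing command: $cmd" >&2
      return 1
    fi
  done
}

# Obtain a ticket from the keytab, show it, then list the HDFS root.
# The && chain stops at the first step that fails.
hdfs_smoke_test() {
  keytab="$1"      # e.g. /home/hadoop/kerberos/hadoop.keytab
  principal="$2"   # e.g. hadoop/node10
  require_cmds kinit klist hadoop || return 1
  kinit -kt "$keytab" "$principal" &&
    klist &&
    hadoop fs -ls /
}

# Usage on a cluster node:
#   hdfs_smoke_test /home/hadoop/kerberos/hadoop.keytab hadoop/node10
```

Running the same function with a zhanzhk principal before and after the Ranger policy change reproduces steps 4 and 5.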

VII. Integrating Kerberos with YARN

Note 1: if you are worried that Ranger will interfere with installation testing, consider removing the Ranger configuration first and adding it back once the Kerberos tests pass. The plugin ships with a script for this, `disable-yarn-plugin.sh`.

Note 2: perform these steps on all nodes.

- Configure YARN: `vi /home/hadoop/module/hadoop-3.2.2/etc/hadoop/yarn-site.xml`

      <!-- Serve the YARN web UI over HTTPS -->
      <property>
        <name>yarn.http.policy</name>
        <value>HTTPS_ONLY</value>
      </property>
      <!-- RM1 web address, for viewing cluster information -->
      <property>
        <name>yarn.resourcemanager.webapp.https.address.rm1</name>
        <value>node10:8088</value>
      </property>
      <!-- RM2 web address, for viewing cluster information -->
      <property>
        <name>yarn.resourcemanager.webapp.https.address.rm2</name>
        <value>node11:8088</value>
      </property>
      <!-- HDFS path where logs of applications submitted to YARN are stored -->
      <property>
        <description>Where to aggregate logs to.</description>
        <name>yarn.nodemanager.remote-app-log-dir</name>
        <value>/home/hadoop/module/hadoop-3.2.2/logs</value>
      </property>
      <!-- YARN kerberos security -->
      <property>
        <name>yarn.resourcemanager.keytab</name>
        <value>/home/hadoop/kerberos/hadoop.keytab</value>
      </property>
      <property>
        <name>yarn.resourcemanager.principal</name>
        <value>hadoop/[email protected]</value>
      </property>
      <property>
        <name>yarn.nodemanager.keytab</name>
        <value>/home/hadoop/kerberos/hadoop.keytab</value>
      </property>
      <property>
        <name>yarn.nodemanager.principal</name>
        <value>hadoop/[email protected]</value>
      </property>
      <property>
        <name>yarn.nodemanager.container-executor.class</name>
        <value>org.apache.hadoop.yarn.server.nodemanager.LinuxContainerExecutor</value>
      </property>
      <!-- The group that the NodeManager user belongs to -->
      <property>
        <name>yarn.nodemanager.linux-container-executor.group</name>
        <value>hadoop</value>
      </property>
      <property>
        <name>yarn.nodemanager.linux-container-executor.path</name>
        <value>/hdp/bin/container-executor</value>
      </property>

- Configure MapReduce: `vi /home/hadoop/module/hadoop-3.2.2/etc/hadoop/mapred-site.xml`

      <!-- mapred kerberos security -->
      <property>
        <name>mapreduce.jobhistory.keytab</name>
        <value>/home/hadoop/kerberos/hadoop.keytab</value>
      </property>
      <property>
        <name>mapreduce.jobhistory.principal</name>
        <value>hadoop/[email protected]</value>
      </property>
      <property>
        <name>mapreduce.jobhistory.http.policy</name>
        <value>HTTPS_ONLY</value>
      </property>
      <property>
        <name>mapreduce.jobhistory.webapp.https.address</name>
        <value>node10:19888</value>
      </property>
      <property>
        <name>mapreduce.ssl.enabled</name>
        <value>true</value>
      </property>
      <property>
        <name>mapreduce.shuffle.ssl.enabled</name>
        <value>true</value>
      </property>

- Distribute the modified configuration files to every node.

- Configure container-executor: `vi /home/hadoop/module/hadoop-3.2.2/etc/hadoop/container-executor.cfg`. Note: `container-executor.cfg` must not contain spaces or blank lines, otherwise an error is reported.

      #configured value of yarn.nodemanager.linux-container-executor.group
      yarn.nodemanager.linux-container-executor.group=hadoop
      #comma separated list of users who can not run applications
      banned.users=mysql
      #Prevent other super-users
      min.user.id=500
      #comma separated list of system users who CAN run applications
      allowed.system.users=root

- Configure YARN to use LinuxContainerExecutor (on every node):
  1. Create the directories: `mkdir -p /hdp/bin` and `mkdir -p /hdp/etc/hadoop`
  2. Copy the files:

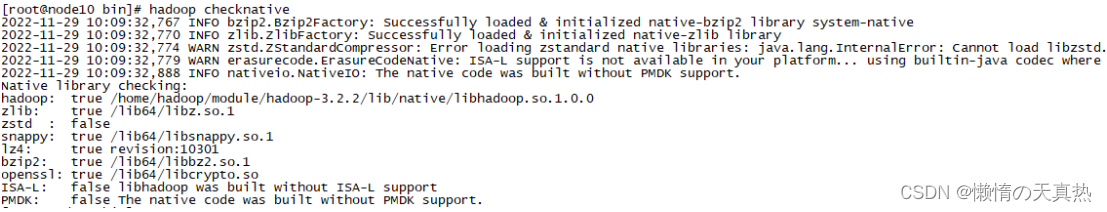

      cp /home/hadoop/module/hadoop-3.2.2/bin/container-executor /hdp/bin/container-executor
      cp /home/hadoop/module/hadoop-3.2.2/etc/hadoop/container-executor.cfg /hdp/etc/hadoop/container-executor.cfg

  3. Set ownership and permissions:

      chown -R root:hadoop /hdp/bin/container-executor
      chown -R root:hadoop /hdp/etc/hadoop/container-executor.cfg
      chown -R root:hadoop /hdp/bin
      chown -R root:hadoop /hdp/etc/hadoop
      chown -R root:hadoop /hdp/etc
      chown -R root:hadoop /hdp
      chmod 6050 /hdp/bin/container-executor

- Test: `hadoop checknative`

- Run `/hdp/bin/container-executor --checksetup`; no output means the setup is fine.

- Copy the keytab: `cp /home/hadoop/kerberos/hadoop.keytab /home/hadoop/hadoop.keytab`

- Start YARN as root: `/home/hadoop/module/hadoop-3.2.2/sbin/start-yarn.sh`

- Verify (no permission yet):

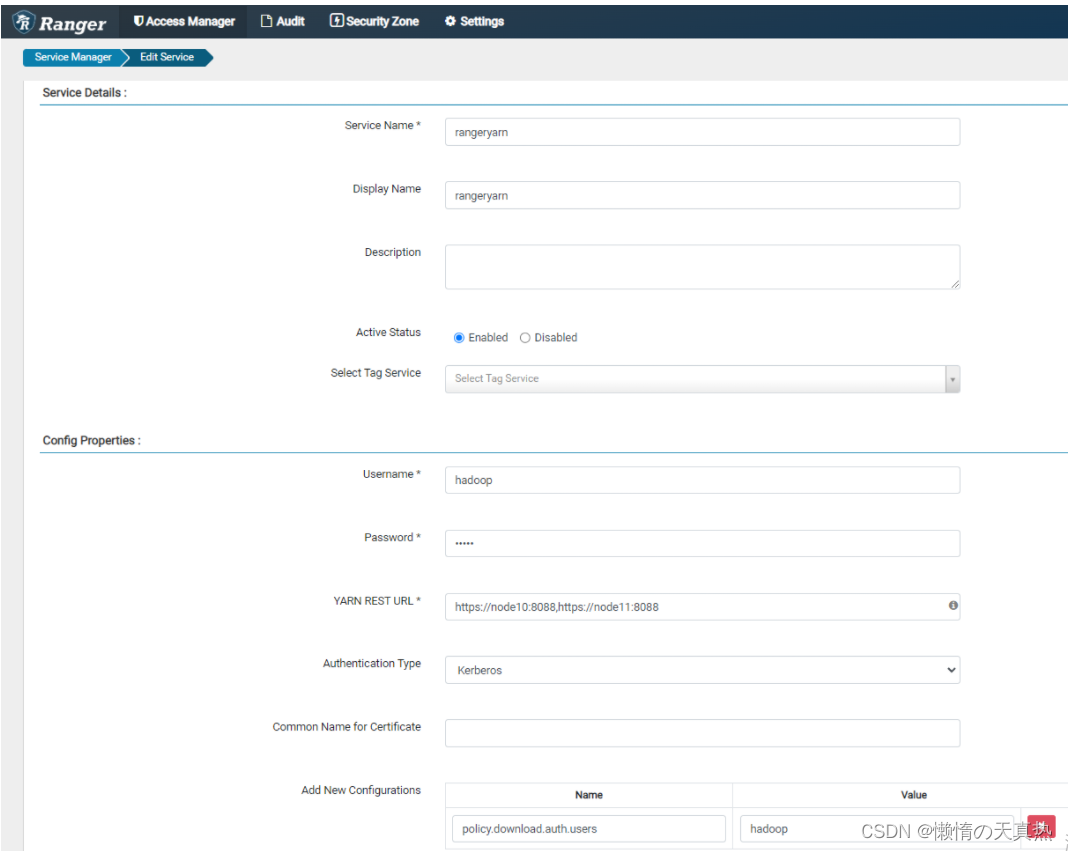

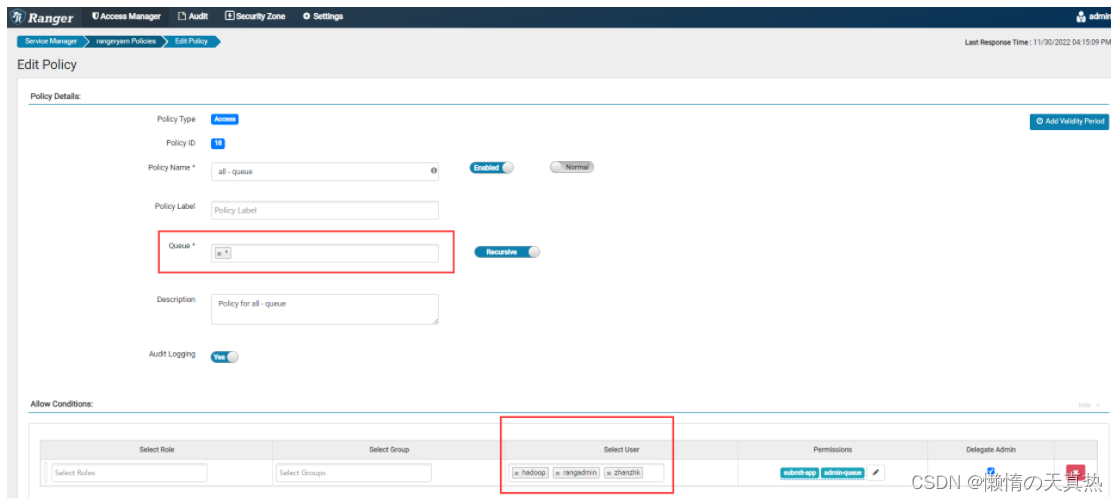

1. `kinit -kt /home/hadoop/kerberos/hadoop.keytab zhanzhk/node10`
2. `hadoop jar /home/hadoop/module/hadoop-3.2.2/share/hadoop/mapreduce/hadoop-mapreduce-examples-3.2.2.jar pi -Dmapred.job.queue.name=default 2 2`

- Update the YARN service in Ranger. Note: clicking the connection test at this point reports the error below; it can be ignored.

```
Unable to retrieve any files using given parameters, You can still save the repository and start creating policies, but you would not be able to use autocomplete for resource names. Check ranger_admin.log for more info.

org.apache.ranger.plugin.client.HadoopException: Unable to get a valid response for expected mime type : [application/json] URL : https://node10:8088,https://node11:8088.
Unable to get a valid response for expected mime type : [application/json] URL : https://node10:8088,https://node11:8088 - got null response..
```

- Add a policy for the zhanzhk account.

- Test again: success!
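The warning about spaces and blank lines in `container-executor.cfg` can be checked mechanically before the file is copied to `/hdp/etc/hadoop`. The sketch below is one possible check; `check_container_executor_cfg` is a hypothetical helper, and skipping lines that start with `#` is an assumption made because the sample file's own comments contain spaces.

```shell
# Report blank lines and whitespace in non-comment lines of a
# container-executor.cfg file.
# check_container_executor_cfg is a hypothetical helper; ignoring comment
# lines (those starting with #) is an assumption.
# Returns 0 if the file is clean, 1 if offending lines were found,
# 2 if the file cannot be read.
check_container_executor_cfg() {
  [ -r "$1" ] || { echo "cannot read: $1" >&2; return 2; }
  # grep prints each offending line with its line number to stderr.
  if grep -nE '^$|^[^#]*[[:space:]]' "$1" >&2; then
    return 1
  fi
  return 0
}

# Usage:
#   check_container_executor_cfg /hdp/etc/hadoop/container-executor.cfg
```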

VIII. Integrating Kerberos with Hive

Note 1: if you are worried that Ranger will interfere with installation testing, consider removing the Ranger configuration first and adding it back once the Kerberos tests pass. The plugin ships with a script for this, `disable-hive-plugin.sh`.

In that case the configuration file `/home/hadoop/module/hive/conf/hiveserver2-site.xml` must be removed as well.

- Configure `hive-site.xml`: `vi /home/hadoop/module/hive/conf/hive-site.xml`

      <property>
        <name>hive.server2.enable.doAs</name>
        <value>true</value>
      </property>
      <property>
        <name>hive.server2.authentication</name>
        <value>KERBEROS</value>
      </property>
      <property>
        <name>hive.server2.authentication.kerberos.principal</name>
        <value>hadoop/[email protected]</value>
      </property>
      <property>
        <name>hive.server2.authentication.kerberos.keytab</name>
        <value>/home/hadoop/kerberos/hadoop.keytab</value>
      </property>
      <property>
        <name>hive.server2.authentication.spnego.keytab</name>
        <value>/home/hadoop/kerberos/hadoop.keytab</value>
      </property>
      <property>
        <name>hive.server2.authentication.spnego.principal</name>
        <value>hadoop/[email protected]</value>
      </property>
      <property>
        <name>hive.metastore.sasl.enabled</name>
        <value>true</value>
      </property>
      <property>
        <name>hive.metastore.kerberos.keytab.file</name>
        <value>/home/hadoop/kerberos/hadoop.keytab</value>
      </property>
      <property>
        <name>hive.metastore.kerberos.principal</name>
        <value>hadoop/[email protected]</value>
      </property>
      <!-- HiveServer2 high availability -->
      <property>
        <name>hive.server2.support.dynamic.service.discovery</name>
        <value>true</value>
      </property>
      <property>
        <name>hive.server2.zookeeper.namespace</name>
        <value>hiveserver2_zk</value>
      </property>
      <property>
        <name>hive.zookeeper.quorum</name>
        <value>node10:2181,node11:2181,node12:2181</value>
      </property>
      <property>
        <name>hive.zookeeper.client.port</name>
        <value>2181</value>
      </property>

- Edit `core-site.xml`: `vi /home/hadoop/module/hadoop-3.2.2/etc/hadoop/core-site.xml`

      <property>
        <name>hadoop.proxyuser.hive.users</name>
        <value>*</value>
      </property>
      <property>
        <name>hadoop.proxyuser.hive.hosts</name>
        <value>*</value>
      </property>

- Set ownership: `chown hadoop:hadoop /home/hadoop`

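- Start HiveServer2: `cd /home/hadoop/module/hive`, then `nohup ./bin/hiveserver2 >> hiveserver2.log 2>&1 &`

- Connection test. Note: the beeline URL must use the hadoop principal configured in the configuration file, as shown below. Users are told apart by the principal each one authenticated with via `kinit`.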

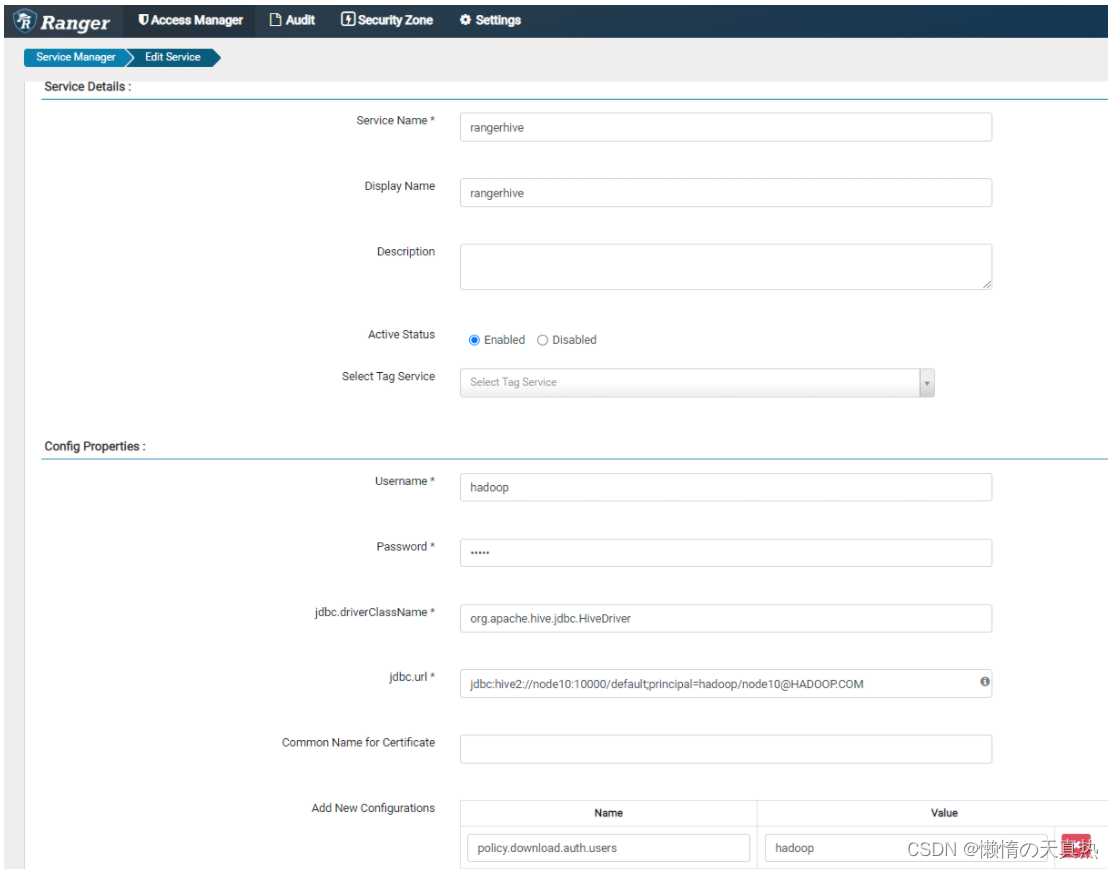

      kinit -kt /home/hadoop/kerberos/hadoop.keytab zhanzhk/node10
      beeline -u "jdbc:hive2://node10:10000/default;principal=hadoop/[email protected]"
      create table zk(id int,name String);
      insert into zk values('4','abc222');
      show tables;

  The statements fail with a permission error:

      Error: Error while compiling statement: FAILED: HiveAccessControlException Permission denied: user [zhanzhk] does not have [USE] privilege on [default] (state=42000,code=40000)

- Reconfigure the Hive service in Ranger.

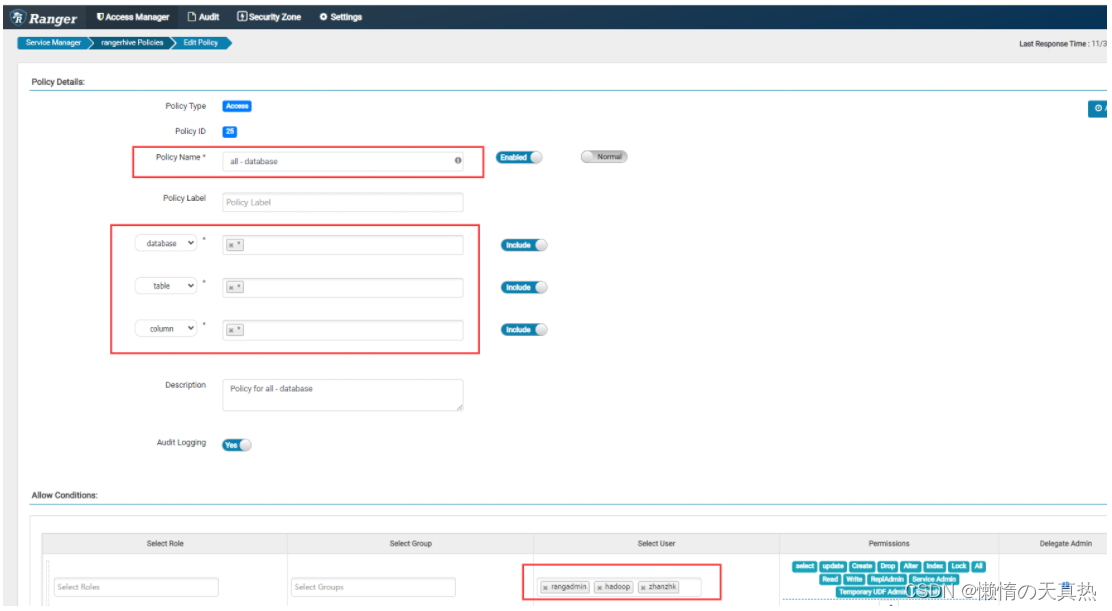

- Set the user policy.

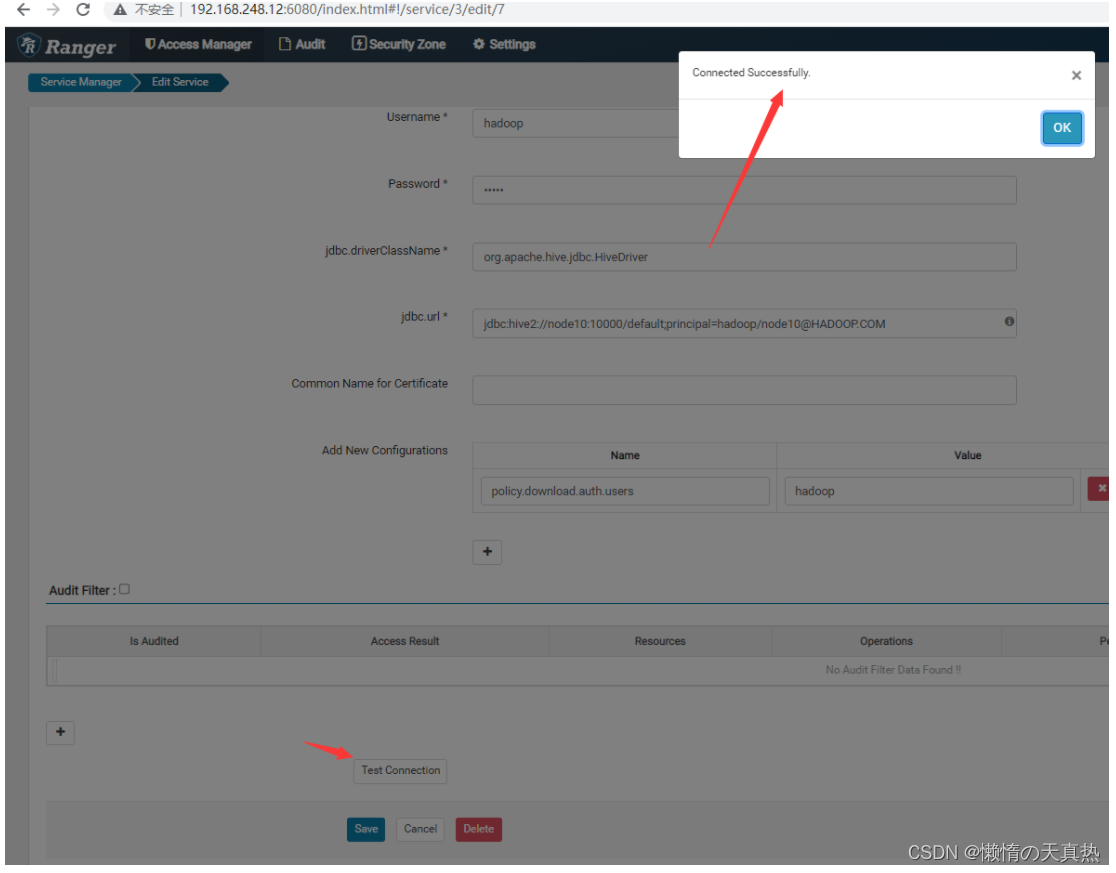

- Save, then go back to the service and click test; it should report success.

- Reconnect and test: success!

      kinit -kt /home/hadoop/kerberos/hadoop.keytab zhanzhk/node10
      beeline -u "jdbc:hive2://node10:10000/default;principal=hadoop/[email protected]"
      create table zk(id int,name String);
      insert into zk values(13,'小红');
      show tables;

      beeline -u "jdbc:hive2://0003.novalocal:10000/default;principal=hadoop/[email protected]"
      bin/beeline -u jdbc:hive2://0003.novalocal:10000/default -n root -p ffcsict123
      create table sqoop_test(id int,name String,age int,address String,phone String);
      insert into sqoop_test values(13,'小红',18,'连花村','18854523654');
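The beeline URLs above all share one shape: host, port, database, and the HiveServer2 service principal after `;principal=`. A small helper makes that explicit. This is a sketch; `hive_jdbc_url` is a hypothetical name, and the principal in the example is an assumed value used only for illustration.

```shell
# Build a Kerberos-enabled HiveServer2 JDBC URL for beeline.
# hive_jdbc_url is a hypothetical helper. The principal argument is the
# HiveServer2 service principal from hive-site.xml, not the user who
# ran kinit.
hive_jdbc_url() {
  host="$1"; port="$2"; db="$3"; principal="$4"
  printf 'jdbc:hive2://%s:%s/%s;principal=%s' "$host" "$port" "$db" "$principal"
}

# Assumed principal value, for illustration only.
url="$(hive_jdbc_url node10 10000 default 'hadoop/node10@HADOOP.COM')"
echo "$url"
# After kinit, connect with: beeline -u "$url"
```

Quoting the URL when passing it to `beeline -u` matters, because the `;` would otherwise end the shell command.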

IX. Integrating Kerberos with HBase

Note 1: if you are worried that Ranger will interfere with installation testing, consider removing the Ranger configuration first and adding it back once the Kerberos tests pass. The plugin ships with a script for this, `disable-hbase-plugin.sh`.

- Edit `hbase-site.xml`: `vi /home/hadoop/hbase/hbase-2.1.0/conf/hbase-site.xml`

      <!-- Kerberos authentication for HBase -->
      <property>
        <name>hbase.security.authentication</name>
        <value>kerberos</value>
      </property>
      <!-- Secure RPC for HBase -->
      <property>
        <name>hbase.rpc.engine</name>
        <value>org.apache.hadoop.hbase.ipc.SecureRpcEngine</value>
      </property>
      <property>
        <name>hbase.coprocessor.region.classes</name>
        <value>org.apache.hadoop.hbase.security.token.TokenProvider</value>
      </property>
      <!-- Kerberos principal of the HMaster -->
      <property>
        <name>hbase.master.kerberos.principal</name>
        <value>hadoop/[email protected]</value>
      </property>
      <!-- Location of the HMaster keytab file -->
      <property>
        <name>hbase.master.keytab.file</name>
        <value>/home/hadoop/kerberos/hadoop.keytab</value>
      </property>
      <!-- Kerberos principal of the RegionServer -->
      <property>
        <name>hbase.regionserver.kerberos.principal</name>
        <value>hadoop/[email protected]</value>
      </property>
      <!-- Location of the RegionServer keytab file -->
      <property>
        <name>hbase.regionserver.keytab.file</name>
        <value>/home/hadoop/kerberos/hadoop.keytab</value>
      </property>

- Create the file: `vi /home/hadoop/hbase/hbase-2.1.0/conf/zk-jaas.conf`

      Client {
        com.sun.security.auth.module.Krb5LoginModule required
        useKeyTab=true
        keyTab="/home/hadoop/kerberos/hadoop.keytab"
        useTicketCache=false
        principal="hadoop/[email protected]";
      };

- Edit `hbase-env.sh`: `vi /home/hadoop/hbase/hbase-2.1.0/conf/hbase-env.sh`

      # Set HBASE_OPTS to the following
      export HBASE_OPTS="-XX:+UseConcMarkSweepGC -Djava.security.auth.login.config=/home/hadoop/hbase/hbase-2.1.0/conf/zk-jaas.conf"

- Set ownership: `chown hadoop:hadoop /home/hadoop`

- Restart HBase.

- Test (no permission yet):

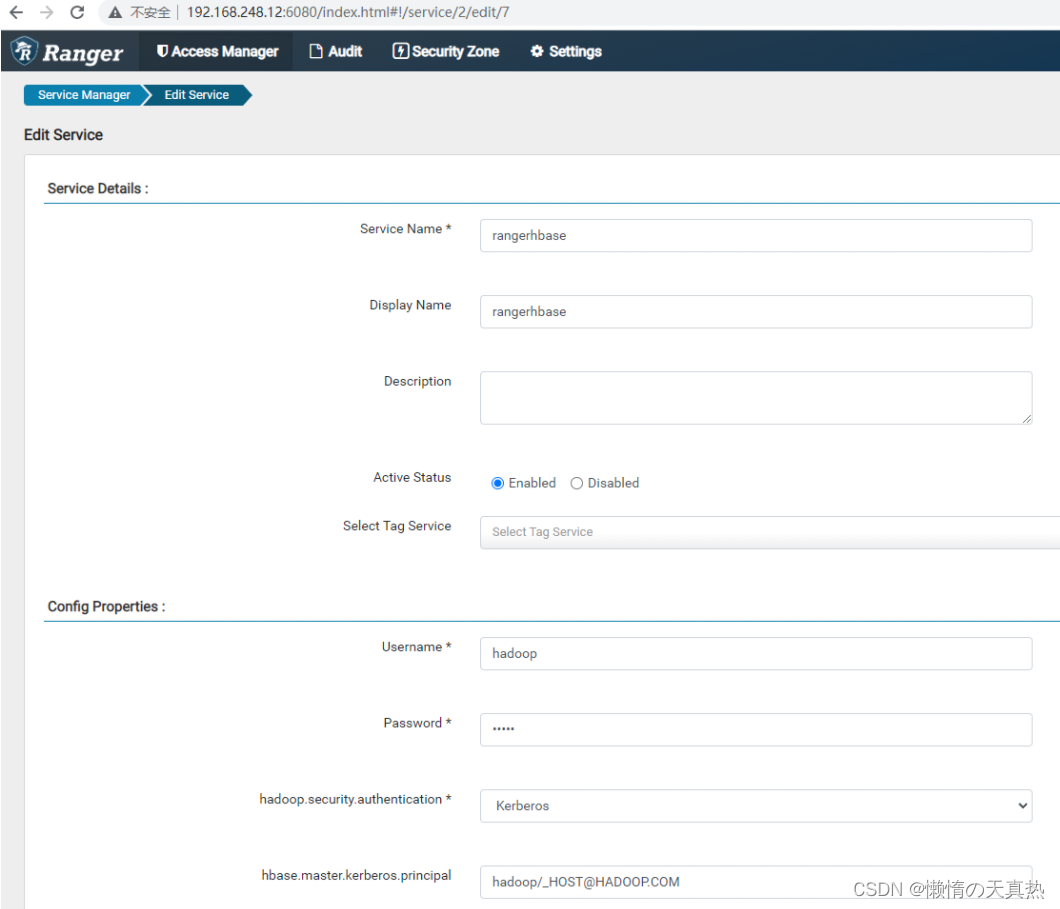

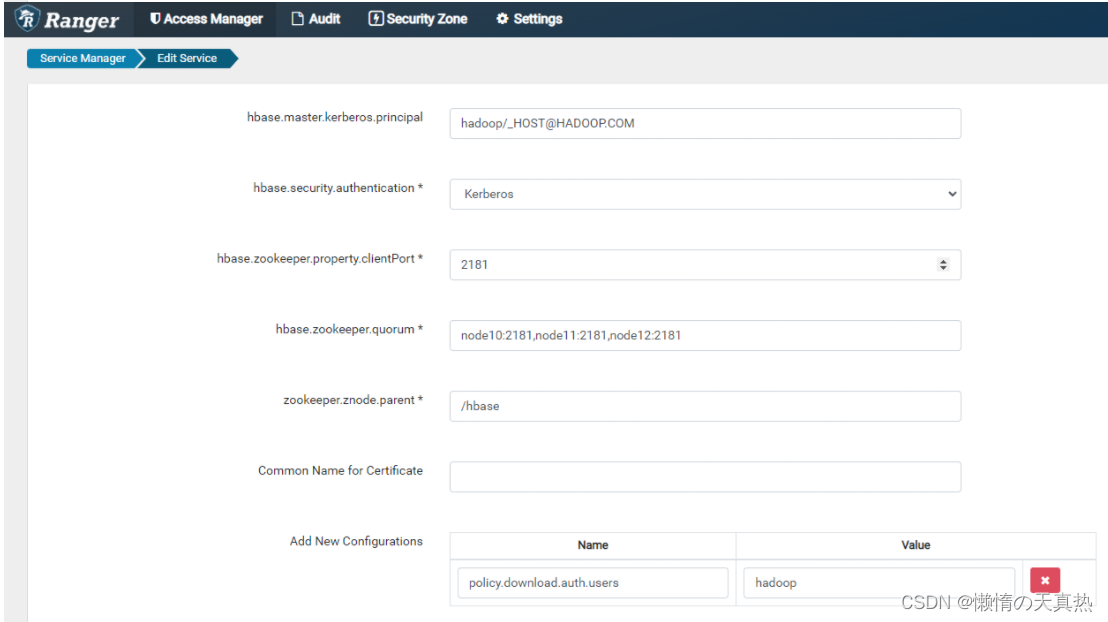

      kinit -kt /home/hadoop/kerberos/hadoop.keytab zhanzhk/node10
      cd /home/hadoop/hbase/hbase-2.1.0/bin
      ./hbase shell

  HBase test commands:
  1. Create a table: `create 'B_TEST_STU','cf'`
  2. Insert data: `put 'B_TEST_STU','001','cf:age','17'`
  3. View the table data: `scan 'B_TEST_STU'`

- Reconfigure the HBase service in Ranger.

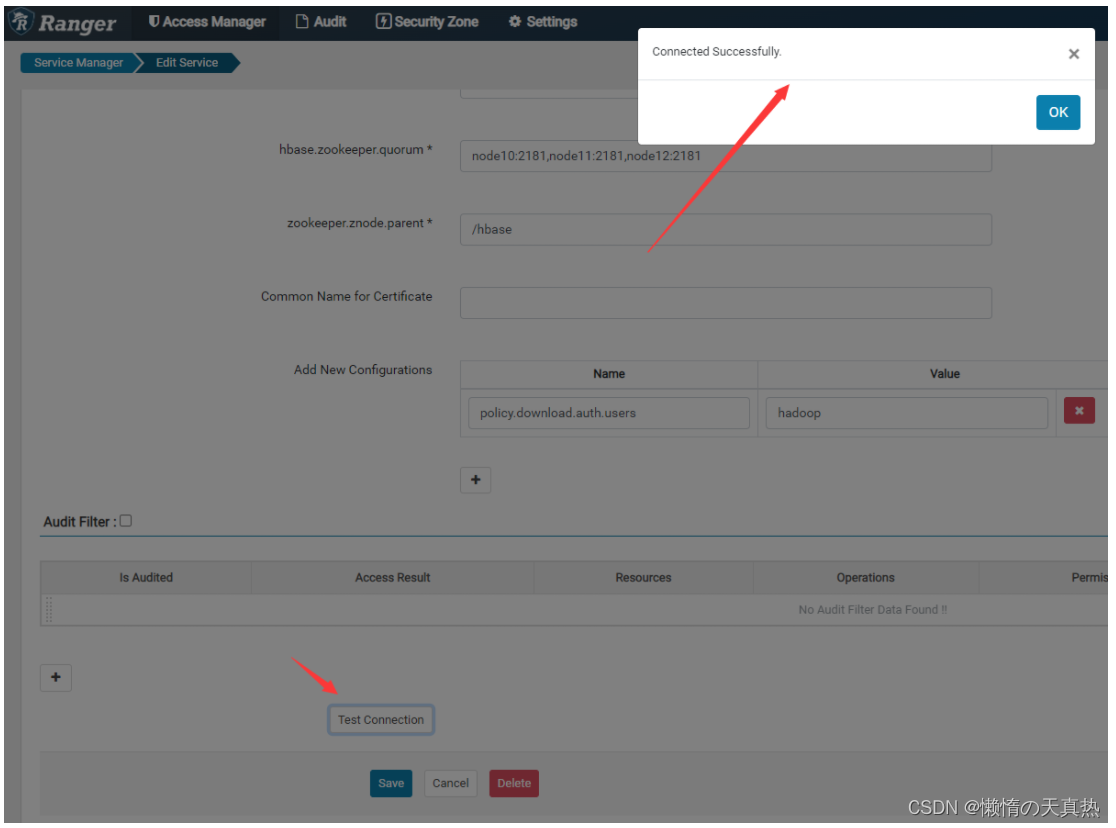

- Test the connection.

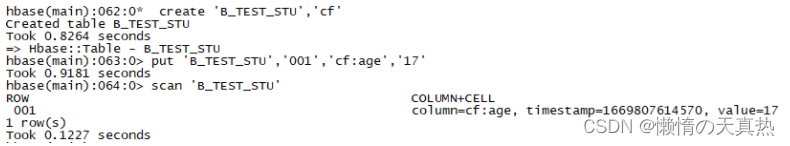

- Test table creation again:

      kinit -kt /home/hadoop/kerberos/hadoop.keytab zhanzhk/node10
      cd /home/hadoop/hbase/hbase-2.1.0/bin
      ./hbase shell

  HBase test commands:
  1. Create a table: `create 'B_TEST_STU','cf'`
  2. Insert data: `put 'B_TEST_STU','001','cf:age','17'`
  3. View the table data: `scan 'B_TEST_STU'`

- Success!
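Since the same three statements are run before and after the Ranger policy change, it can help to generate them for a given table name. This is a sketch; `hbase_smoke_commands` is a hypothetical helper that only prints the statements used above.

```shell
# Print the HBase shell statements used in the test above for one table.
# hbase_smoke_commands is a hypothetical helper name.
hbase_smoke_commands() {
  table="$1"
  printf "create '%s','cf'\n" "$table"
  printf "put '%s','001','cf:age','17'\n" "$table"
  printf "scan '%s'\n" "$table"
}

hbase_smoke_commands B_TEST_STU
```

The output can be pasted into `./hbase shell` after `kinit`; feeding it to the shell on standard input should also work, though that is not something the original walkthrough does.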

X. Problems encountered

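- User not found: `main : requested yarn user is zhanzhk`, `User zhanzhk not found`, even though the zhanzhk principal already exists on the KDC server. Fix: create the zhanzhk operating-system user on every node with `useradd zhanzhk`.

- `KeeperErrorCode = AuthFailed for XXXXX`. Fix: integrate ZooKeeper with Kerberos.

- `RM could not transition to Active`. Fix: delete the YARN-related directories under tmp.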
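The fix for the first problem has to be applied on every node. The sketch below prints one `ssh` command per node so the list can be reviewed before running it; `print_useradd_commands` is a hypothetical helper, and connecting as root over SSH is an assumption (passwordless SSH for root was suggested earlier for `scp`).

```shell
# Print one ssh command per node that creates the OS user if it is missing.
# print_useradd_commands is a hypothetical helper; logging in as root over
# ssh is an assumption.
print_useradd_commands() {
  user="$1"; shift
  for node in "$@"; do
    printf 'ssh root@%s "id -u %s >/dev/null 2>&1 || useradd %s"\n' "$node" "$user" "$user"
  done
}

print_useradd_commands zhanzhk node10 node11 node12 node13
```

The `id -u ... || useradd ...` form makes the command safe to repeat on nodes where the user already exists.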

Copyright belongs to the original author, 懒惰の天真热. If there is any infringement, please contact us for removal.