
17. Yarn Hands-On Cases

17.4 Yarn Tool Interface Case

17.4.1 Review

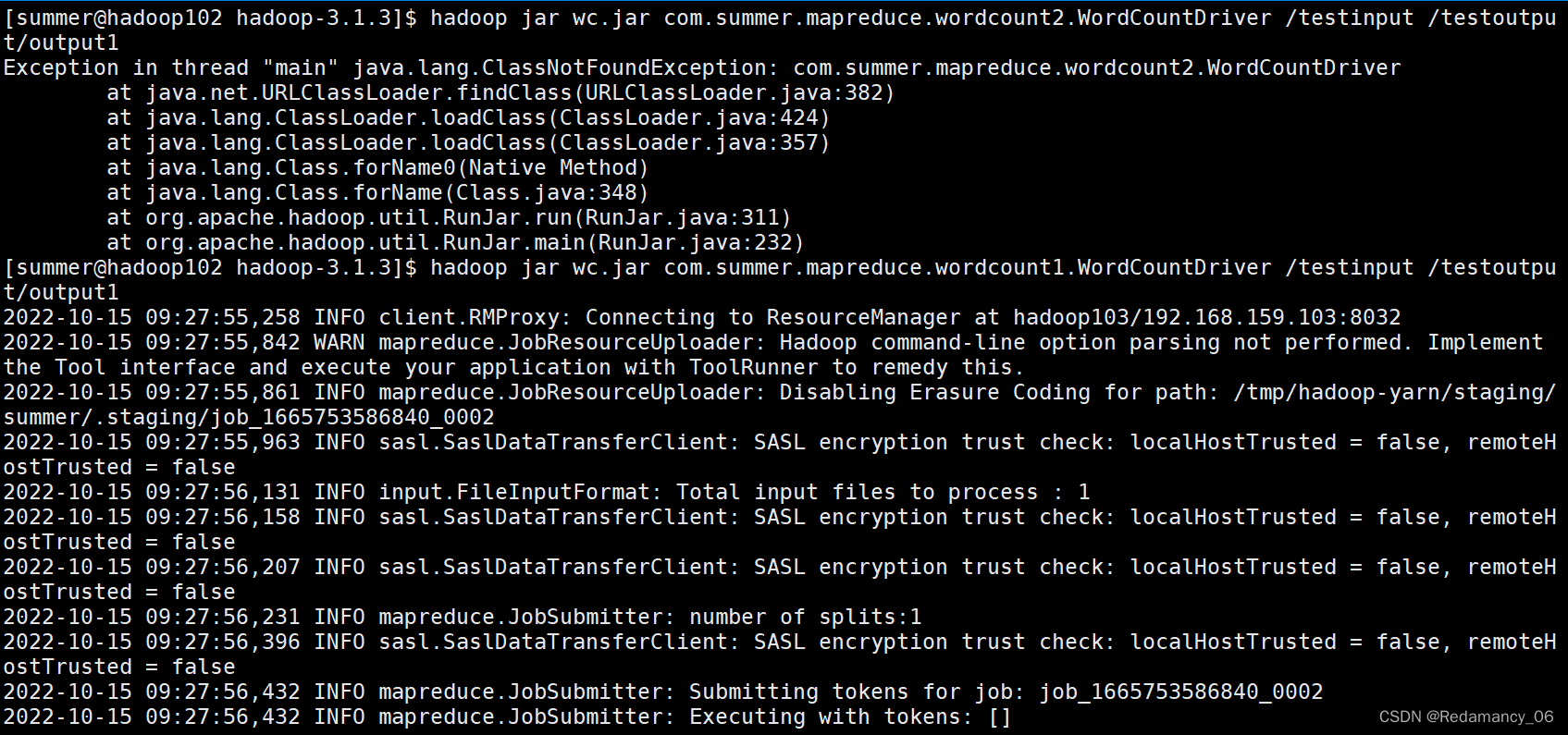

[summer@hadoop102 hadoop-3.1.3]$ hadoop jar wc.jar com.summer.mapreduce.wordcount2.WordCountDriver /testinput /testoutput/output1

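We would like to pass parameters dynamically on the command line, but the following attempt fails: the -D option is taken to be the first input argument, that is, the input path.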

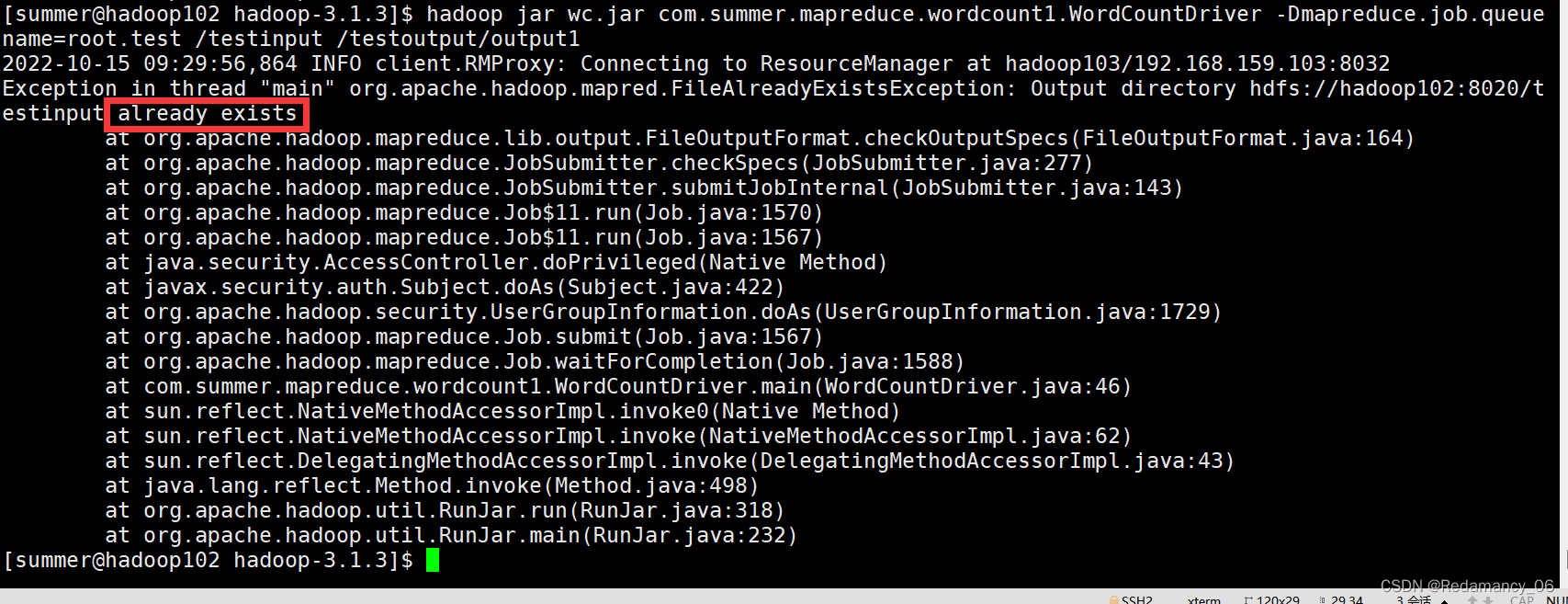

[summer@hadoop102 hadoop-3.1.3]$ hadoop jar wc.jar com.summer.mapreduce.wordcount1.WordCountDriver -Dmapreduce.job.queuename=root.test /testinput /testoutput/output1
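To see why this fails, consider what a driver receives when it reads its paths by position. The sketch below is plain Java with no Hadoop dependency. The class name `PositionalArgsDemo` and the helper `pickPaths` are hypothetical, and it assumes (as the error suggests) that the earlier driver uses `args[0]` as the input path and `args[1]` as the output path.

```java
public class PositionalArgsDemo {

    // Mimics a driver that assumes args[0] is the input path and args[1] is the output path.
    public static String[] pickPaths(String[] args) {
        return new String[] { args[0], args[1] };
    }

    public static void main(String[] args) {
        String[] submitted = {
            "-Dmapreduce.job.queuename=root.test", "/testinput", "/testoutput/output1"
        };
        String[] paths = pickPaths(submitted);
        // The -D option lands in the input-path slot and /testinput becomes the output path.
        System.out.println("input  = " + paths[0]);
        System.out.println("output = " + paths[1]);
    }
}
```

With positional access nothing removes the `-D` option before the paths are read, so every argument shifts by one slot.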

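17.4.2 Requirement

Our own program should also accept parameters that can be changed dynamically at submit time. To achieve this, implement Hadoop's Tool interface (org.apache.hadoop.util.Tool).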
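The idea behind Tool can be sketched without Hadoop. In the example below, generic `-Dkey=value` options are peeled off the argument list into a configuration map, and only the remaining arguments reach the program. This is a simplified stand-in, not Hadoop's actual `ToolRunner` or `GenericOptionsParser` code. `MiniToolRunner`, `MiniTool`, `extractOptions`, and the map-based configuration are hypothetical names used for illustration only.

```java
import java.util.ArrayList;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

public class MiniToolRunner {

    /** Hypothetical stand-in for a tool that receives a configuration plus its remaining arguments. */
    public interface MiniTool {
        int run(Map<String, String> conf, String[] remainingArgs) throws Exception;
    }

    /** Moves every -Dkey=value option into conf and returns the arguments that are left. */
    public static String[] extractOptions(String[] args, Map<String, String> conf) {
        List<String> rest = new ArrayList<>();
        for (String arg : args) {
            int eq = arg.indexOf('=');
            if (arg.startsWith("-D") && eq > 2) {
                conf.put(arg.substring(2, eq), arg.substring(eq + 1));
            } else {
                rest.add(arg);
            }
        }
        return rest.toArray(new String[0]);
    }

    /** Parses the options first, then hands the tool only its own arguments. */
    public static int run(MiniTool tool, String[] args) throws Exception {
        Map<String, String> conf = new LinkedHashMap<>();
        String[] rest = extractOptions(args, conf);
        return tool.run(conf, rest);
    }

    public static void main(String[] args) throws Exception {
        String[] submitted = {
            "-Dmapreduce.job.queuename=root.test", "/testinput", "/testoutput/output1"
        };
        int exitCode = run((conf, rest) -> {
            System.out.println("queue  = " + conf.get("mapreduce.job.queuename"));
            System.out.println("input  = " + rest[0]);
            System.out.println("output = " + rest[1]);
            return 0;
        }, submitted);
        System.out.println("exit   = " + exitCode);
    }
}
```

Because the options are consumed before the tool runs, the tool can keep treating its first two arguments as the input and output paths.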

17.4.3 Steps

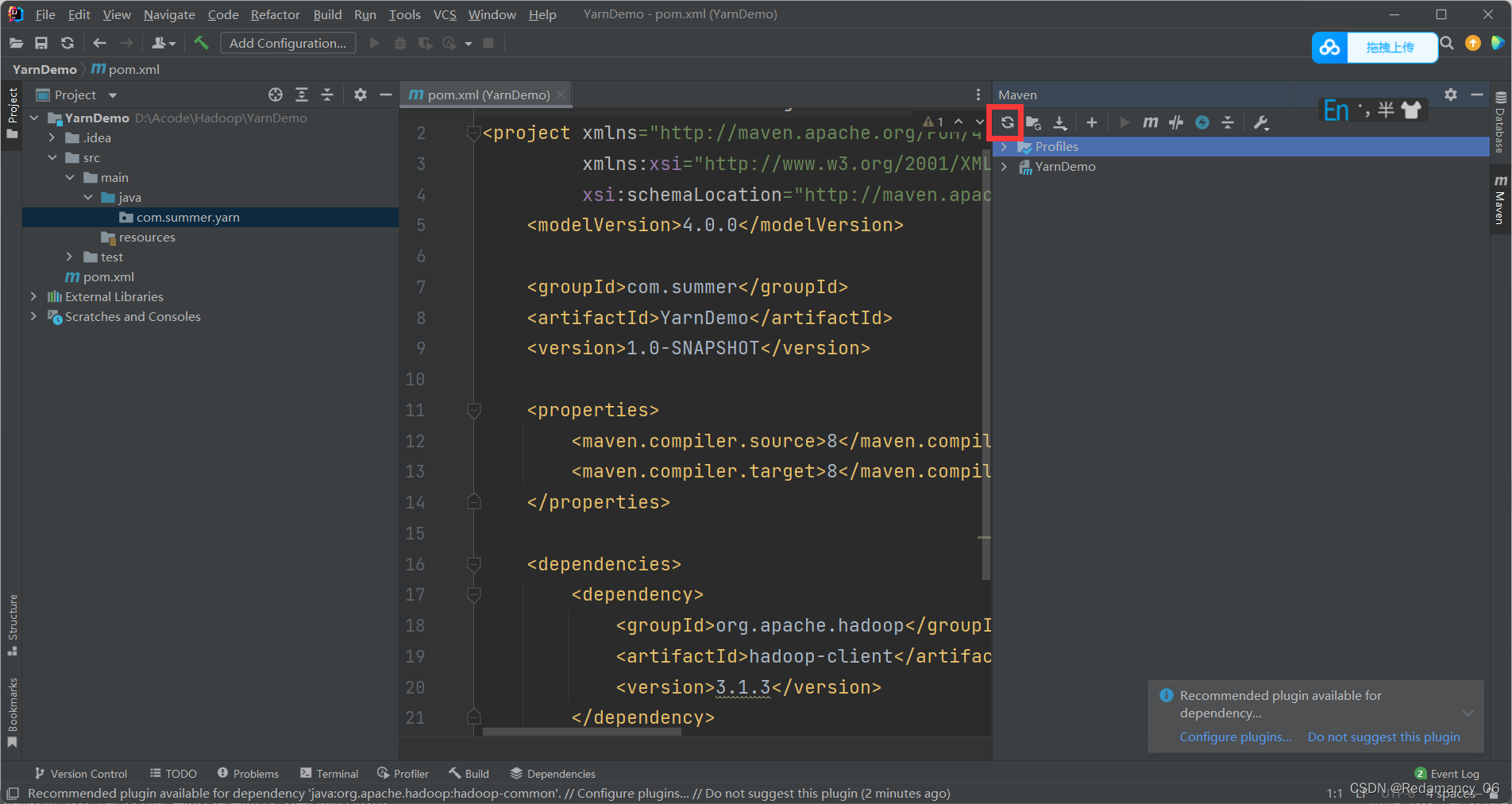

17.4.3.1 Create a Maven project named YarnDemo with the following pom

    <?xml version="1.0" encoding="UTF-8"?>
    <project xmlns="http://maven.apache.org/POM/4.0.0"
             xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"
             xsi:schemaLocation="http://maven.apache.org/POM/4.0.0 http://maven.apache.org/xsd/maven-4.0.0.xsd">
        <modelVersion>4.0.0</modelVersion>

        <groupId>com.summer</groupId>
        <artifactId>YarnDemo</artifactId>
        <version>1.0-SNAPSHOT</version>

        <properties>
            <maven.compiler.source>8</maven.compiler.source>
            <maven.compiler.target>8</maven.compiler.target>
        </properties>

        <dependencies>
            <dependency>
                <groupId>org.apache.hadoop</groupId>
                <artifactId>hadoop-client</artifactId>
                <version>3.1.3</version>
            </dependency>
        </dependencies>
    </project>

After adding the dependency, remember to reload the Maven project.

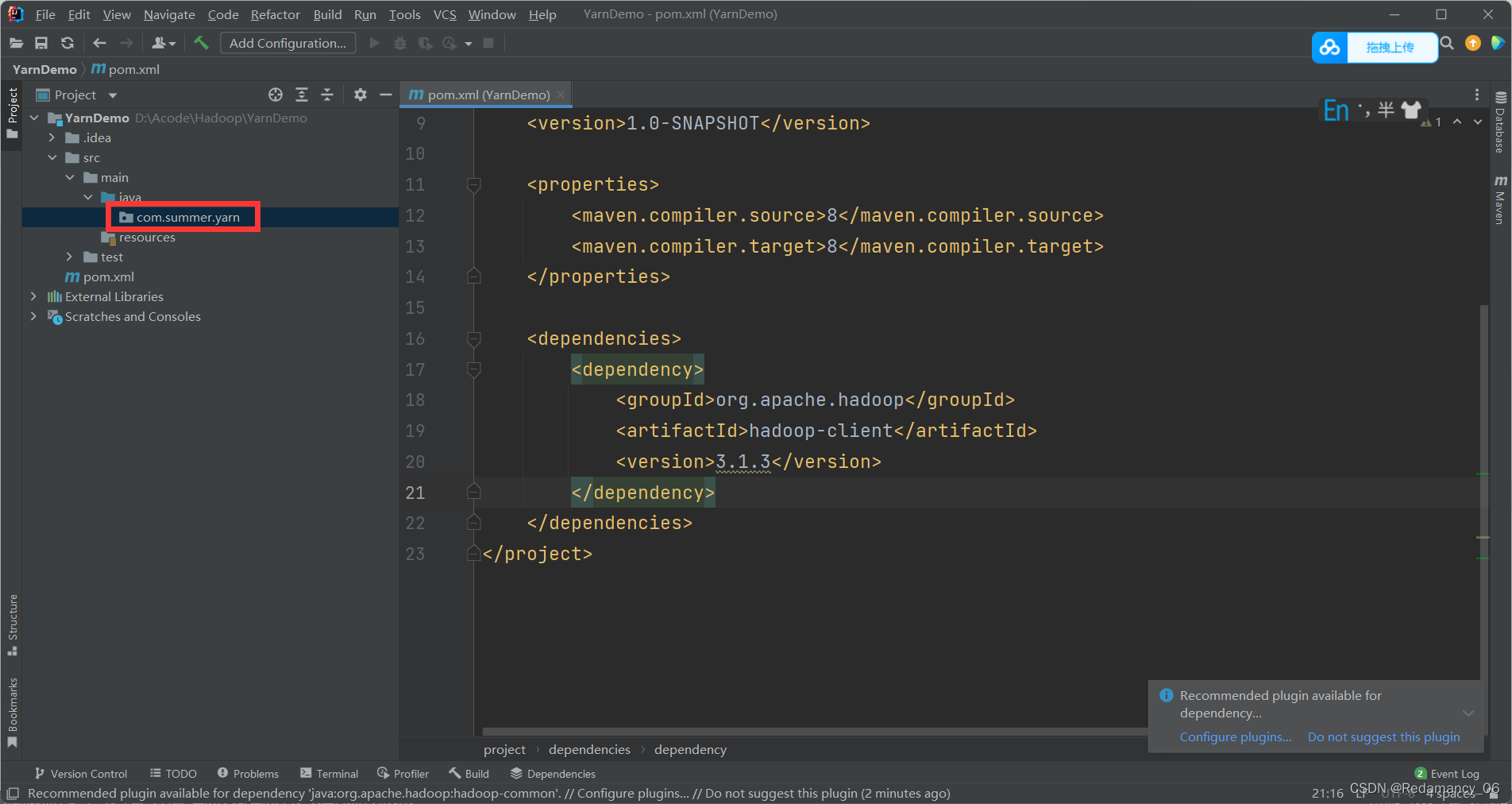

17.4.3.2 Create the package com.summer.yarn

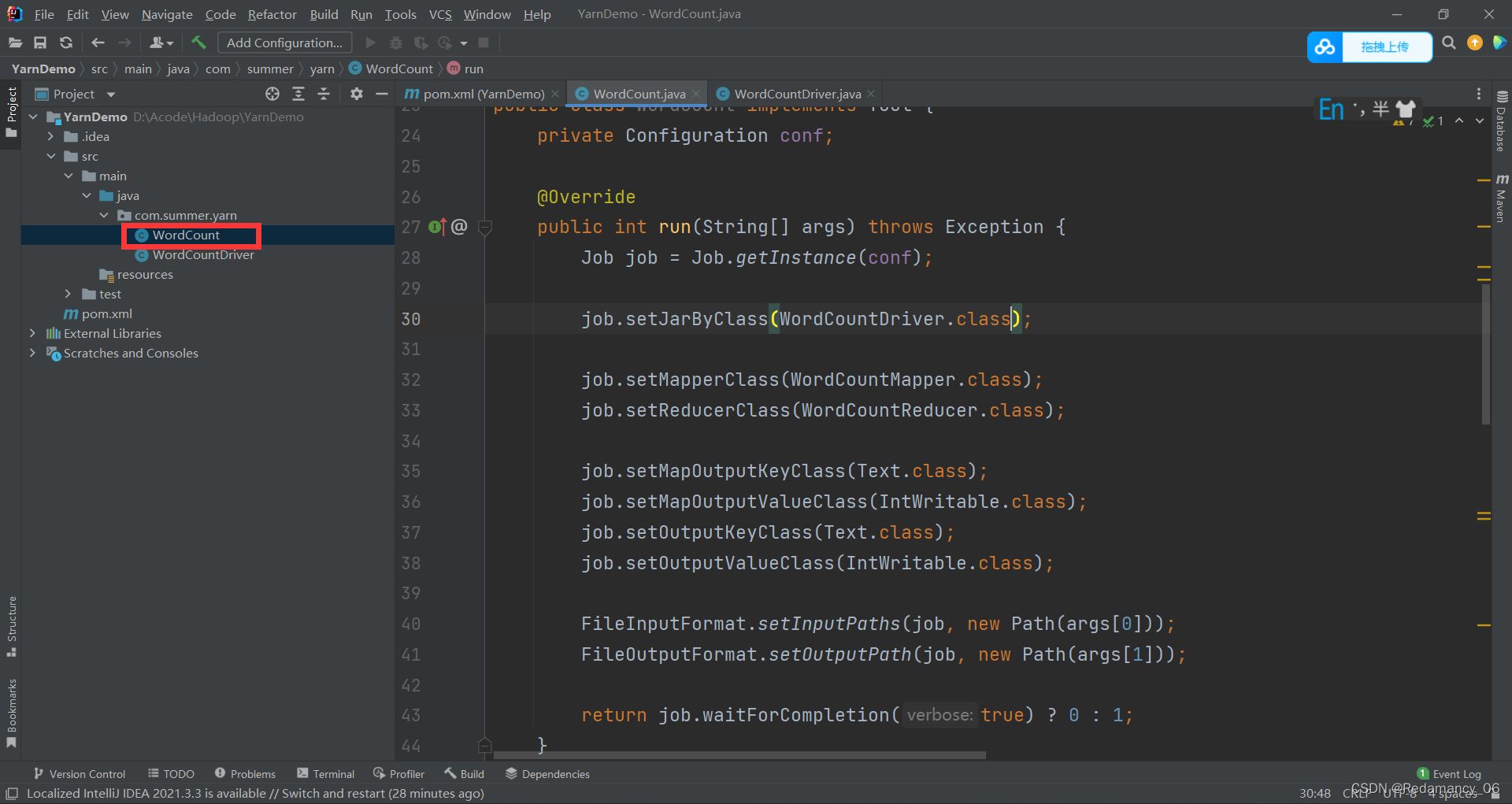

17.4.3.3 Create the class WordCount and implement the Tool interface

    package com.summer.yarn;

    import org.apache.hadoop.conf.Configuration;
    import org.apache.hadoop.fs.Path;
    import org.apache.hadoop.io.IntWritable;
    import org.apache.hadoop.io.LongWritable;
    import org.apache.hadoop.io.Text;
    import org.apache.hadoop.mapreduce.Job;
    import org.apache.hadoop.mapreduce.Mapper;
    import org.apache.hadoop.mapreduce.Reducer;
    import org.apache.hadoop.mapreduce.lib.input.FileInputFormat;
    import org.apache.hadoop.mapreduce.lib.output.FileOutputFormat;
    import org.apache.hadoop.util.Tool;

    import java.io.IOException;

    /**
     * @author Redamancy
     * @create 2022-10-15 15:00
     */
    public class WordCount implements Tool {

        // Set by ToolRunner through setConf(); any -D options are already applied to it
        private Configuration conf;

        @Override
        public int run(String[] args) throws Exception {
            Job job = Job.getInstance(conf);

            job.setJarByClass(WordCountDriver.class);

            job.setMapperClass(WordCountMapper.class);
            job.setReducerClass(WordCountReducer.class);

            job.setMapOutputKeyClass(Text.class);
            job.setMapOutputValueClass(IntWritable.class);

            job.setOutputKeyClass(Text.class);
            job.setOutputValueClass(IntWritable.class);

            // args holds only the arguments left after the generic options: input and output paths
            FileInputFormat.setInputPaths(job, new Path(args[0]));
            FileOutputFormat.setOutputPath(job, new Path(args[1]));

            return job.waitForCompletion(true) ? 0 : 1;
        }

        @Override
        public void setConf(Configuration configuration) {
            this.conf = configuration;
        }

        @Override
        public Configuration getConf() {
            return conf;
        }

        // mapper: emits (word, 1) for every space-separated word in the line
        public static class WordCountMapper extends Mapper<LongWritable, Text, Text, IntWritable> {

            private IntWritable outV = new IntWritable(1);
            private Text outK = new Text();

            @Override
            protected void map(LongWritable key, Text value, Mapper<LongWritable, Text, Text, IntWritable>.Context context) throws IOException, InterruptedException {
                String line = value.toString();
                String[] words = line.split(" ");

                for (String word : words) {
                    outK.set(word);
                    context.write(outK, outV);
                }
            }
        }

        // reducer: sums the counts for each word
        public static class WordCountReducer extends Reducer<Text, IntWritable, Text, IntWritable> {

            private IntWritable outV = new IntWritable();

            @Override
            protected void reduce(Text key, Iterable<IntWritable> values, Reducer<Text, IntWritable, Text, IntWritable>.Context context) throws IOException, InterruptedException {
                int sum = 0;

                for (IntWritable value : values) {
                    sum += value.get();
                }

                outV.set(sum);
                context.write(key, outV);
            }
        }
    }

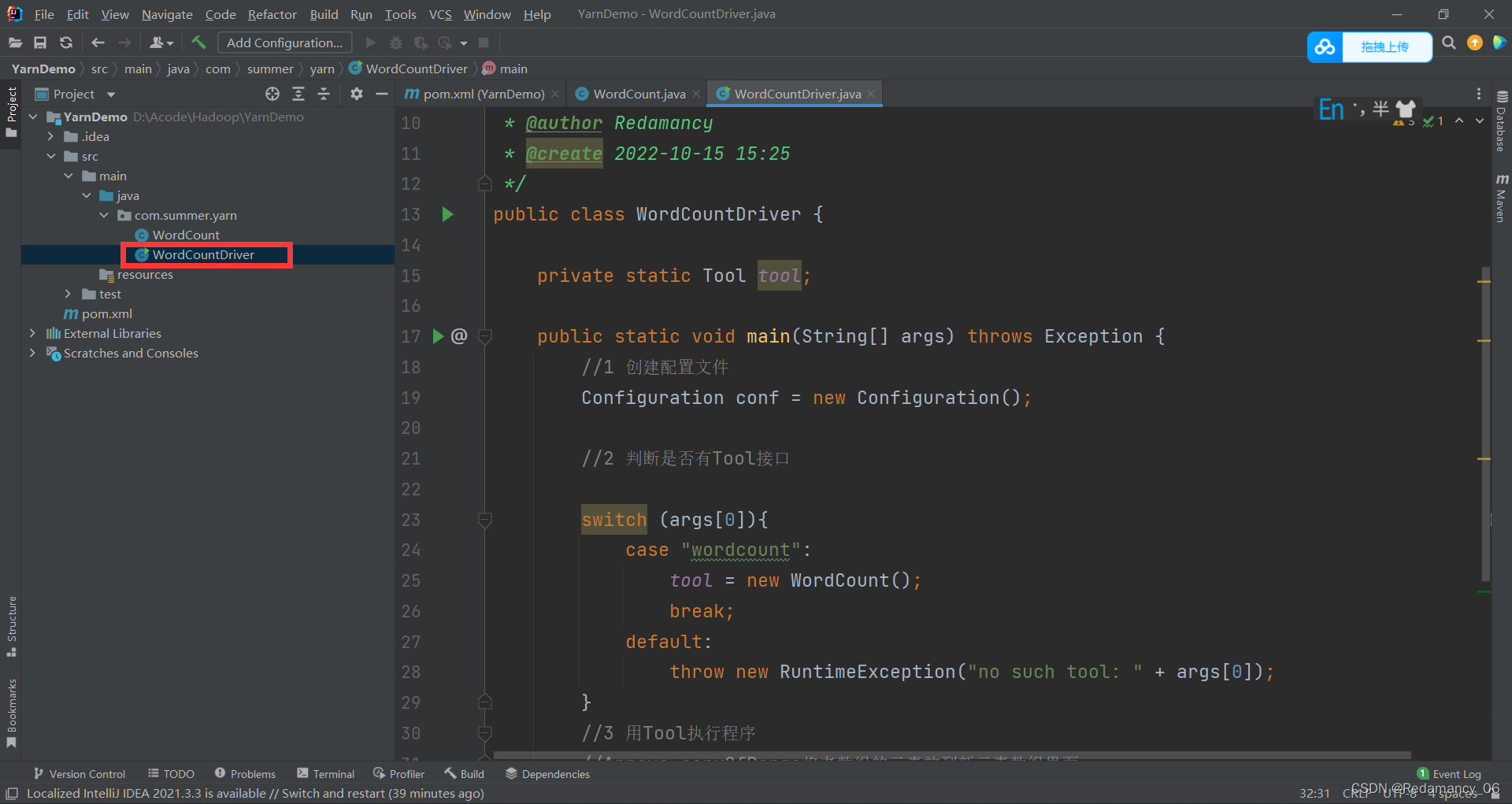
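To make the data flow of the mapper and reducer concrete, here is a local simulation in plain Java. It is not Hadoop code: `LocalWordCount` and `count` are hypothetical names, and the shuffle phase is replaced by an in-memory sorted map. The only things taken from the class above are splitting each line on a single space and adding 1 per word.

```java
import java.util.Arrays;
import java.util.List;
import java.util.Map;
import java.util.TreeMap;

public class LocalWordCount {

    /** Counts words the way the mapper and reducer would: emit (word, 1), then sum per word. */
    public static Map<String, Integer> count(List<String> lines) {
        Map<String, Integer> totals = new TreeMap<>();
        for (String line : lines) {
            // "Map" step: split on a single space, one (word, 1) pair per token.
            for (String word : line.split(" ")) {
                // "Reduce" step: add the 1 to the running sum for this word.
                totals.merge(word, 1, Integer::sum);
            }
        }
        return totals;
    }

    public static void main(String[] args) {
        List<String> lines = Arrays.asList("hadoop yarn", "yarn tool yarn");
        count(lines).forEach((word, sum) -> System.out.println(word + "\t" + sum));
    }
}
```

For the two sample lines this yields hadoop 1, tool 1, and yarn 3, in key order.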

17.4.3.4 Create WordCountDriver

    package com.summer.yarn;

    import org.apache.hadoop.conf.Configuration;
    import org.apache.hadoop.util.Tool;
    import org.apache.hadoop.util.ToolRunner;

    import java.util.Arrays;

    /**
     * @author Redamancy
     * @create 2022-10-15 15:25
     */
    public class WordCountDriver {

        private static Tool tool;

        public static void main(String[] args) throws Exception {
            // 1. Create the configuration
            Configuration conf = new Configuration();

            // 2. Select the Tool implementation named by the first argument
            if (args.length == 0) {
                throw new RuntimeException("missing tool name, for example: wordcount");
            }
            switch (args[0]) {
                case "wordcount":
                    tool = new WordCount();
                    break;
                default:
                    throw new RuntimeException("no such tool: " + args[0]);
            }

            // 3. Run the Tool
            // Arrays.copyOfRange copies args[1..] into a new array, dropping the tool name
            int run = ToolRunner.run(conf, tool, Arrays.copyOfRange(args, 1, args.length));

            System.exit(run);
        }
    }

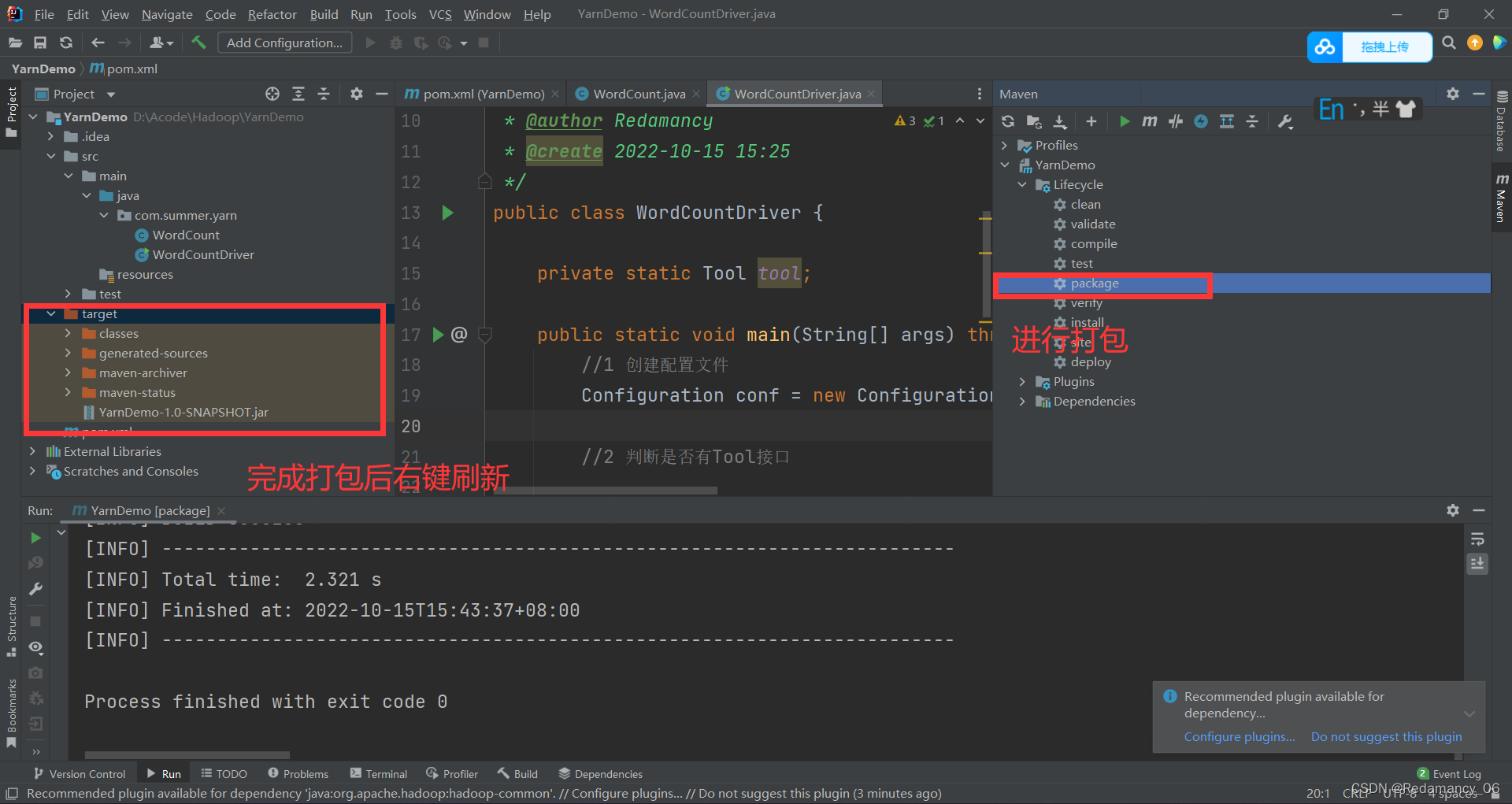

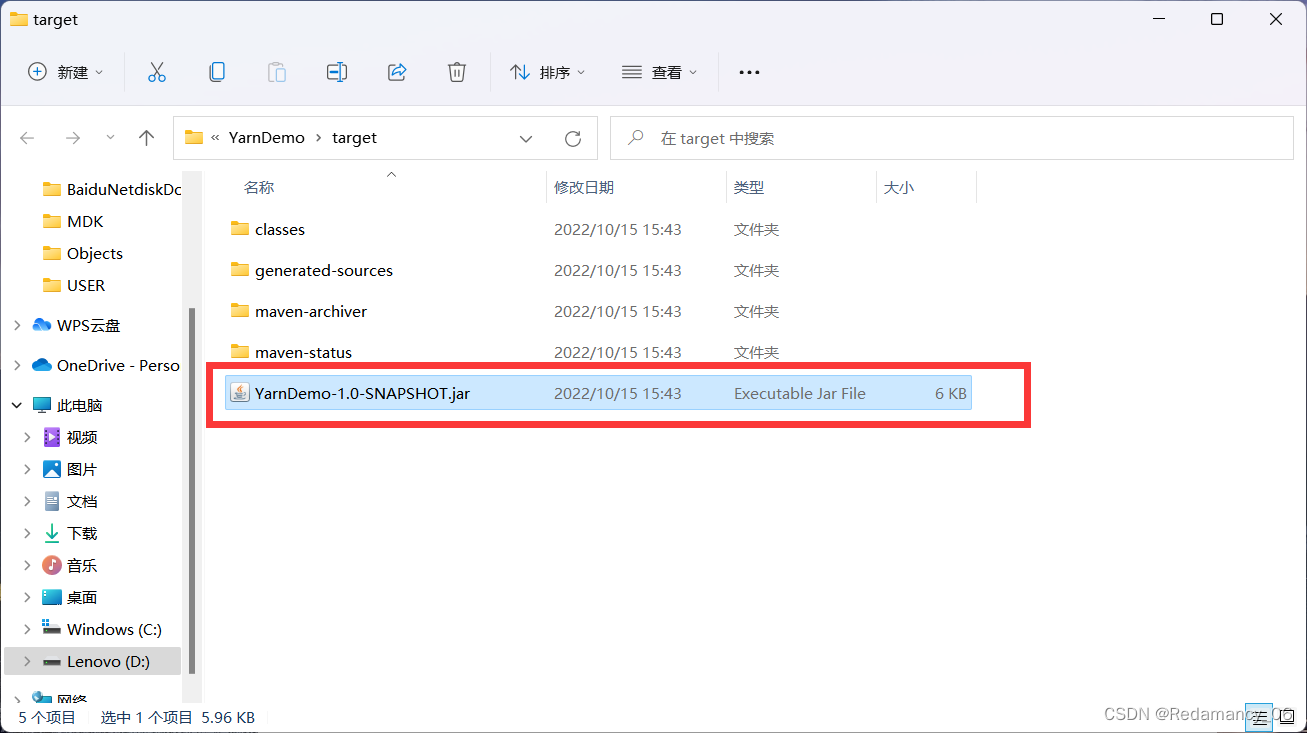
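The driver's argument handling can be tried in isolation. The sketch below uses only the JDK; `DriverArgsDemo` and `toolArgs` are hypothetical names. It shows that `Arrays.copyOfRange(args, 1, args.length)` drops the tool name and passes everything after it along unchanged, including any `-D` option.

```java
import java.util.Arrays;

public class DriverArgsDemo {

    /** Returns the arguments that follow the tool name. */
    public static String[] toolArgs(String[] args) {
        if (args.length == 0) {
            throw new IllegalArgumentException("missing tool name");
        }
        return Arrays.copyOfRange(args, 1, args.length);
    }

    public static void main(String[] args) {
        String[] submitted = {
            "wordcount", "-Dmapreduce.job.queuename=root.test", "/testinput", "/testoutput/output1"
        };
        System.out.println("tool name = " + submitted[0]);
        System.out.println("tool args = " + Arrays.toString(toolArgs(submitted)));
    }
}
```

The `-D` option is still present in the copied array; removing it is left to the code that runs the tool, not to the driver.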

17.4.3.5 Package the project

Click Reload from Disk to refresh.

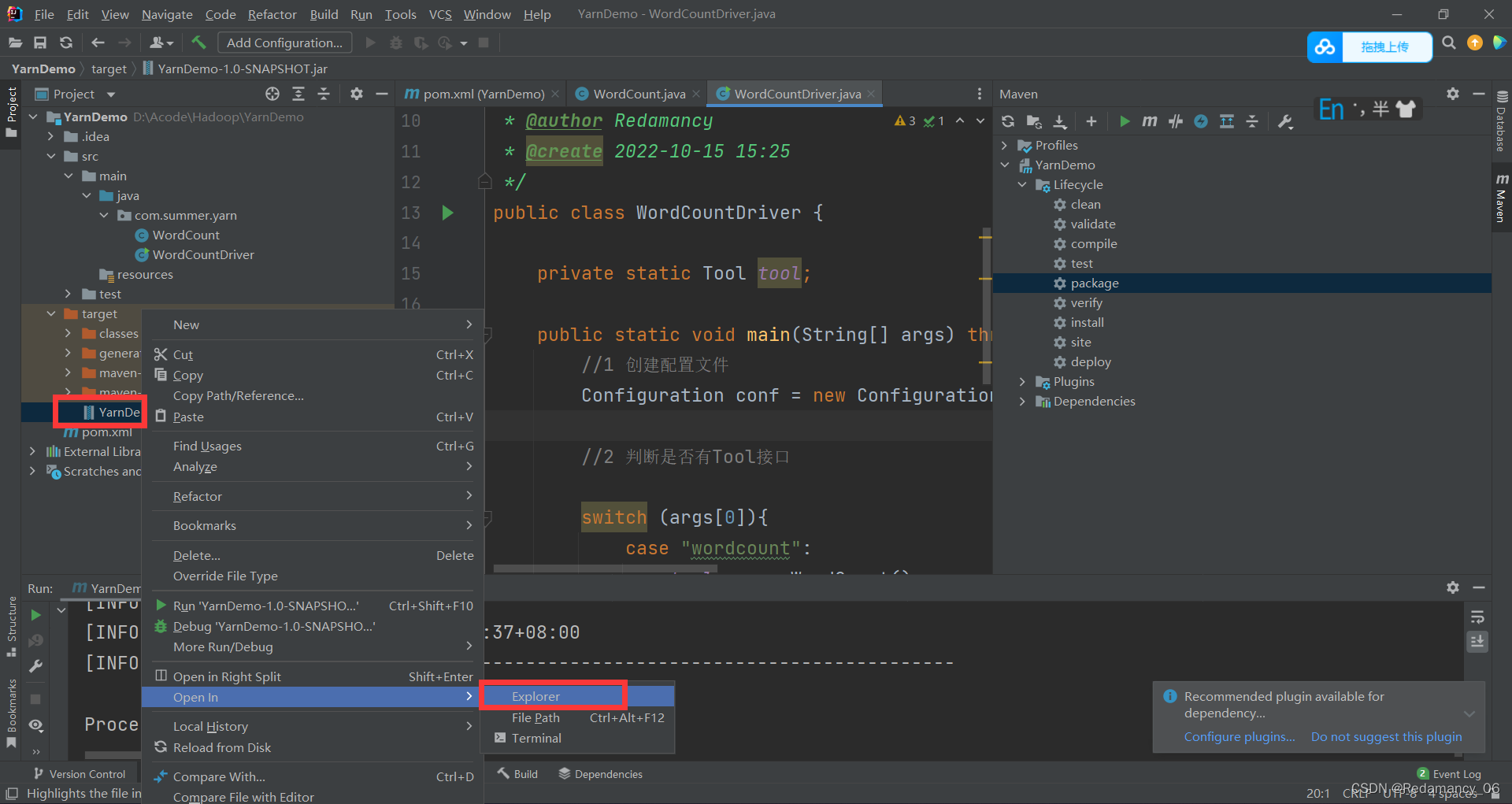

17.4.3.6 Upload the jar to Linux

Copy the generated jar to the desktop.

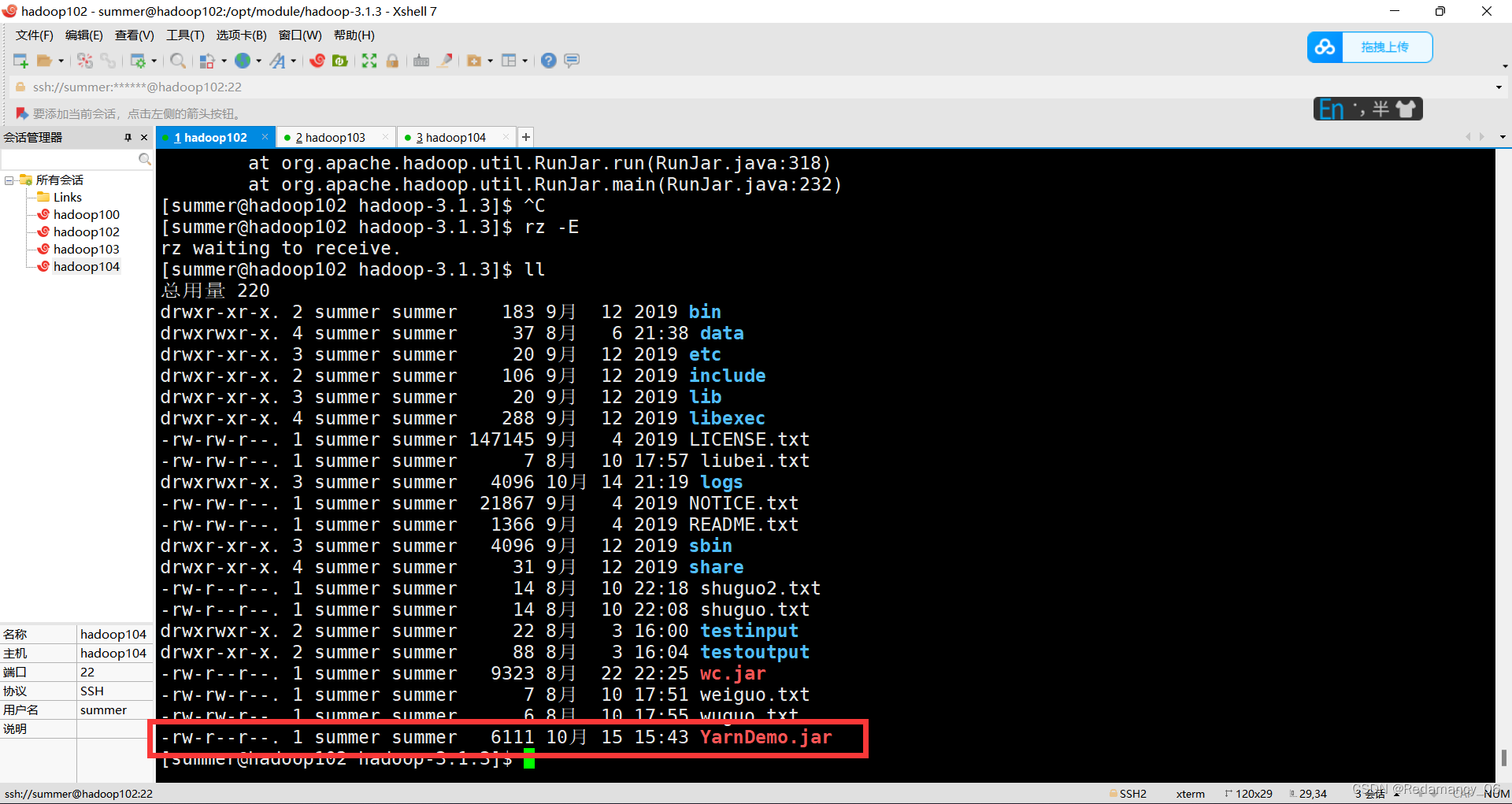

Rename the jar to YarnDemo.jar, then upload it to Linux.

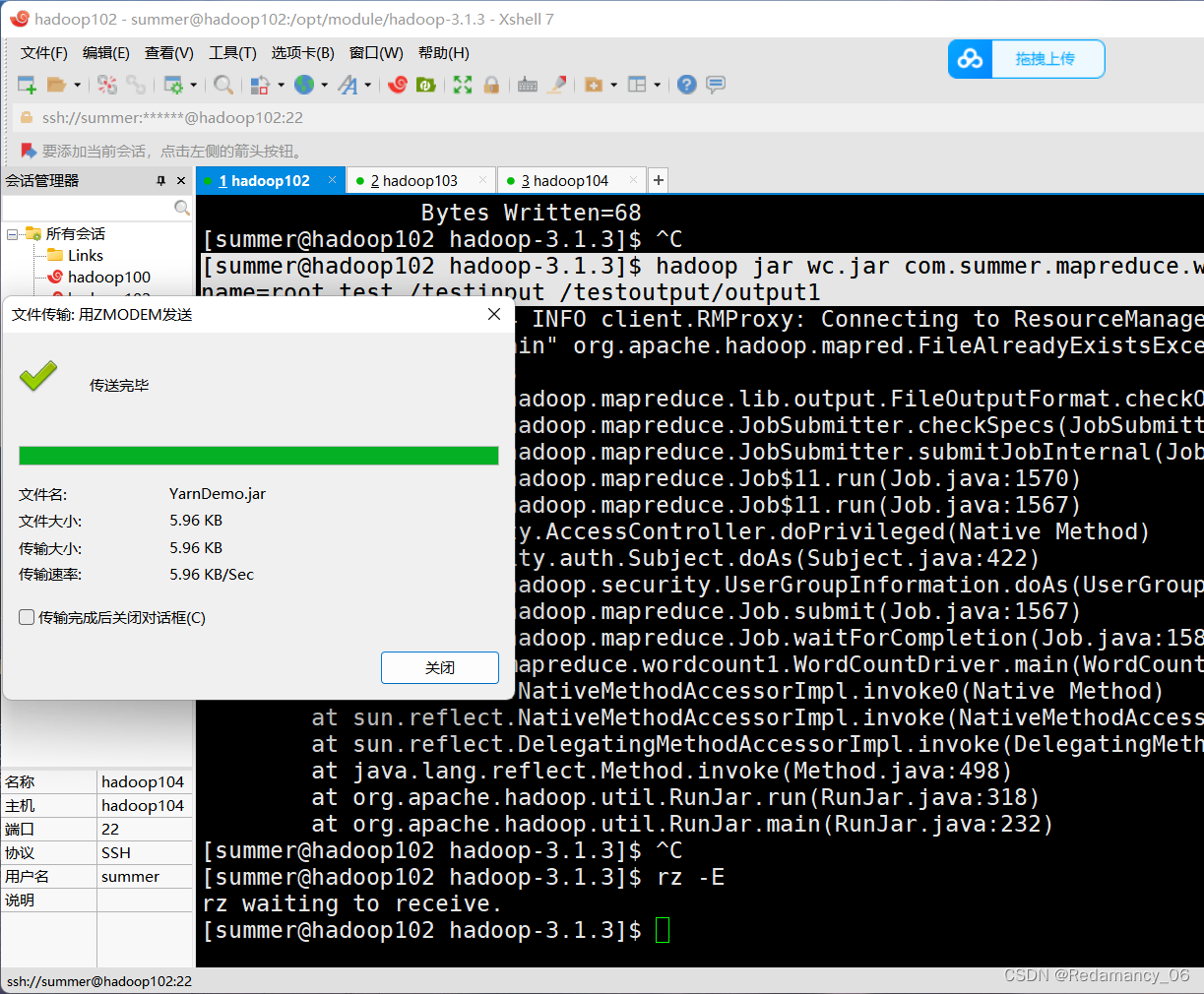

Drag the jar into the XShell window.

17.4.4 Prepare the input files on HDFS (assumed here to be the /testinput directory) and submit the jar to the cluster

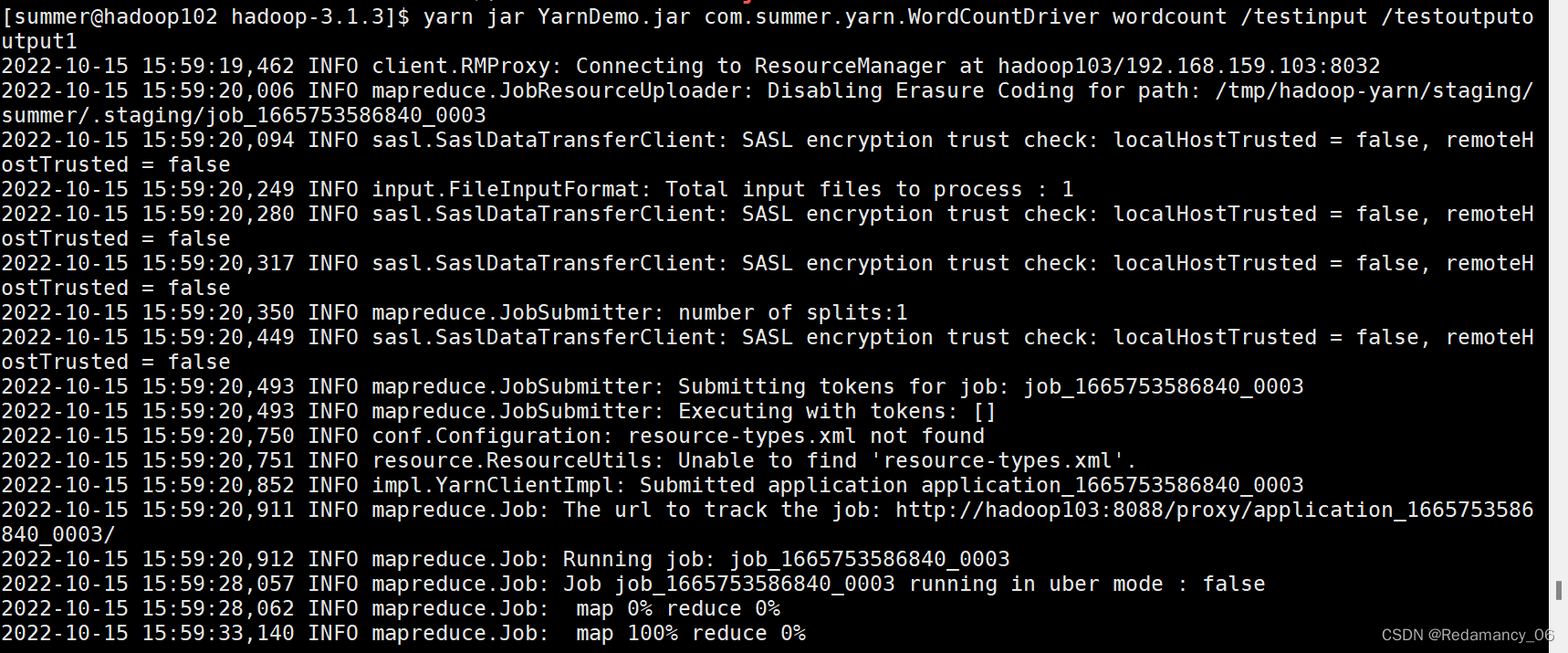

[summer@hadoop102 hadoop-3.1.3]$ yarn jar YarnDemo.jar com.summer.yarn.WordCountDriver wordcount /testinput /testoutputoutput1

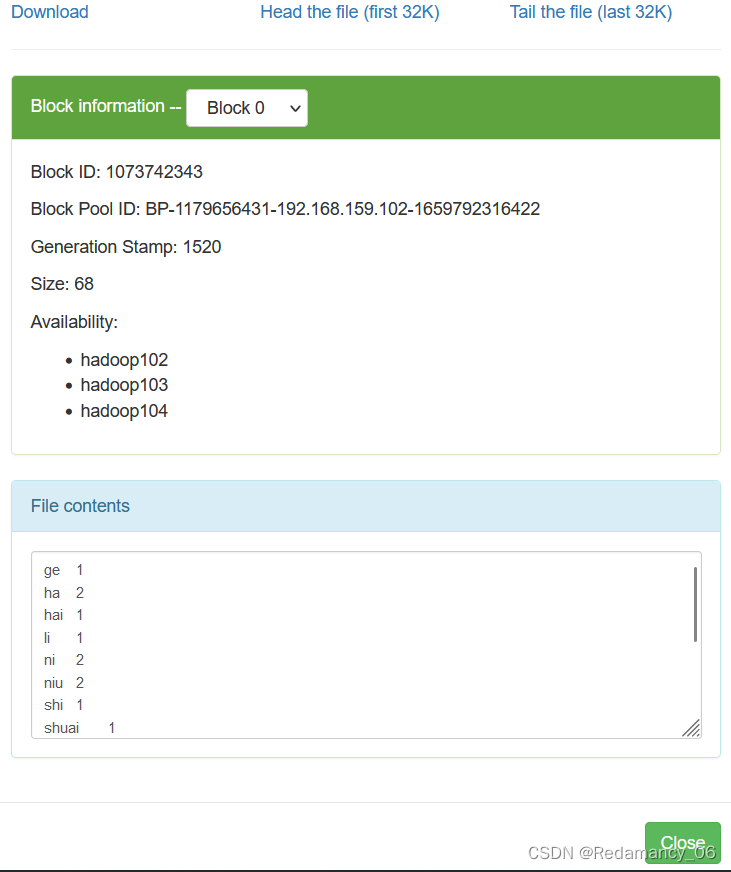

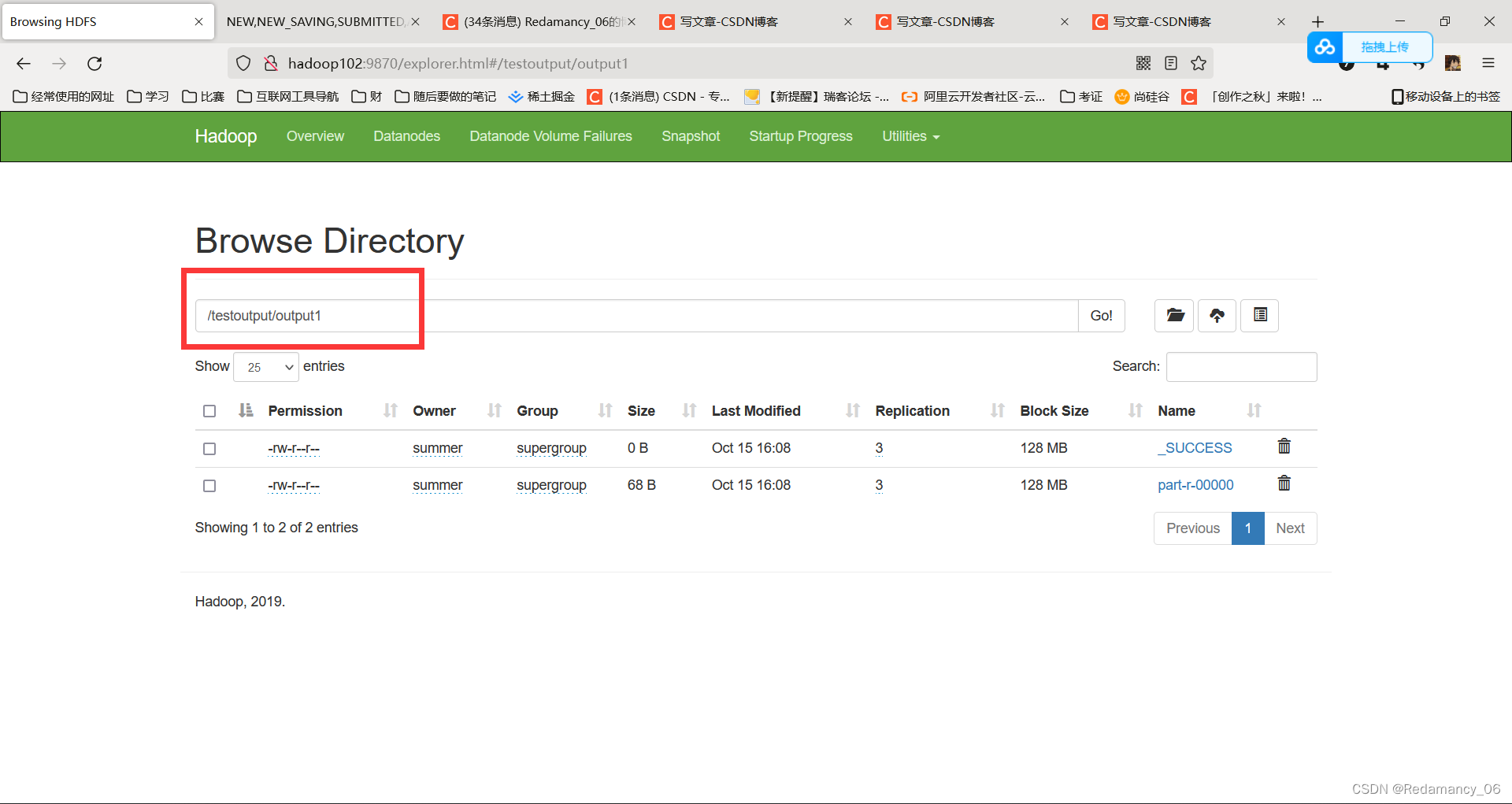

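Note that three arguments are submitted here: the first selects which Tool to create, and the second and third are the input and output directories. To set configuration parameters as well, add them right after wordcount, for example: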

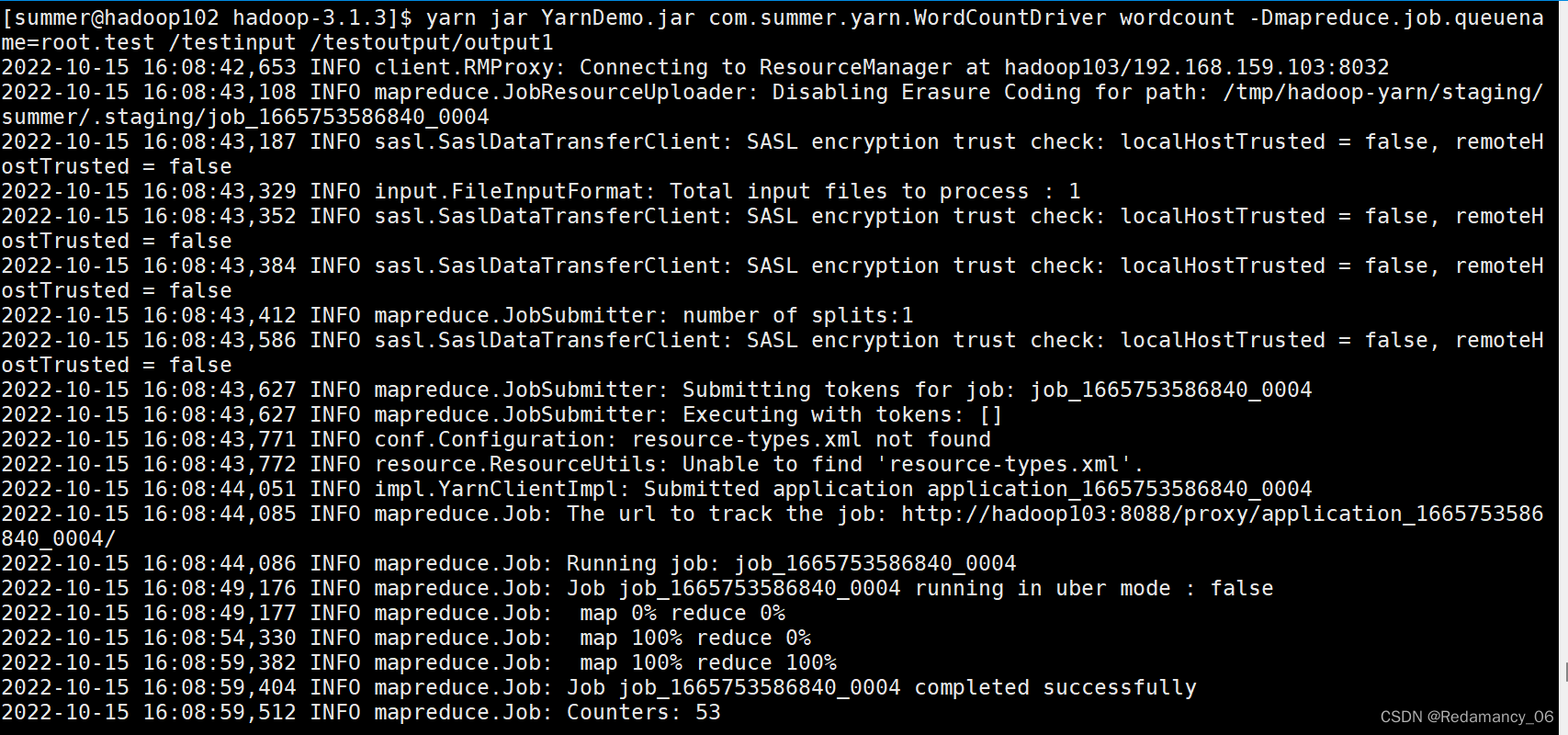

[summer@hadoop102 hadoop-3.1.3]$ yarn jar YarnDemo.jar com.summer.yarn.WordCountDriver wordcount -Dmapreduce.job.queuename=root.test /testinput /testoutput/output1

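Note: after finishing all of the steps above, revert to the snapshot or manually restore the configuration files to their previous state. The cluster has limited resources to begin with, and splitting them across this many queues makes later testing inconvenient.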

Copyright belongs to the original author, Redamancy_06.