Hive

Error logs

- Hive runtime log

- Hadoop logs
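Here is a minimal sketch for pulling up both logs. It assumes Hive's default log4j2 settings, which write hive.log to /tmp/<user>/, and Hadoop daemon logs in $HADOOP_HOME/logs. The helper names show_hive_log and list_hadoop_logs are hypothetical, and the paths should be adjusted if your installation is configured differently. For a failed MapReduce job, the container logs can also be fetched with yarn logs -applicationId <application id> when YARN log aggregation is enabled.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the last N lines of the Hive client log.
# Assumes Hive's default log4j2 config, which writes to /tmp/<user>/hive.log.
show_hive_log() {
  local log_file="${1:-/tmp/${USER:-$(id -un)}/hive.log}"
  local lines="${2:-50}"
  if [ ! -f "$log_file" ]; then
    echo "log not found: $log_file" >&2
    return 1
  fi
  tail -n "$lines" "$log_file"
}

# Hypothetical helper: list Hadoop daemon logs (NameNode, DataNode, ResourceManager, ...).
# Assumes the default log directory $HADOOP_HOME/logs.
list_hadoop_logs() {
  local hadoop_home="${1:-$HADOOP_HOME}"
  if [ -z "$hadoop_home" ]; then
    echo "HADOOP_HOME is not set" >&2
    return 1
  fi
  ls -1 "$hadoop_home"/logs/*.log
}

# Example (hypothetical paths):
# show_hive_log                          # current user's hive.log, last 50 lines
# list_hadoop_logs /opt/module/hadoop-3.1.3
```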

Errors

1. SessionHiveMetaStoreClient

FAILED: HiveException java.lang.RuntimeException: Unable to instantiate org.apache.hadoop.hive.ql.metadata.SessionHiveMetaStoreClient

Delete one file and one directory: derby.log and metastore_db.

Then reinitialize the schema:

bin/schematool -dbType derby -initSchema

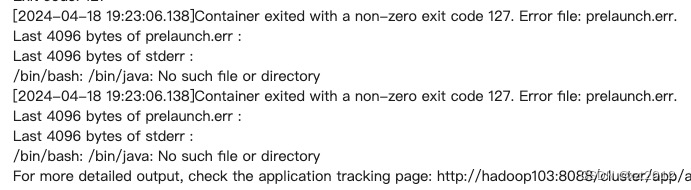
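The reset can be sketched as a shell function. This assumes derby.log and metastore_db are in the Hive installation directory. By default, embedded Derby creates them in the directory Hive was started from, so look there if they are somewhere else. The name reset_derby_metastore and its argument are hypothetical. Deleting metastore_db discards all metadata stored in the embedded Derby metastore (databases, table definitions, partitions). Table data already in HDFS is not deleted.

```shell
#!/usr/bin/env bash
# Hypothetical helper: reset an embedded Derby metastore and re-create its schema.
# WARNING: removing metastore_db deletes all Hive metadata stored in Derby.
reset_derby_metastore() {
  local hive_home="${1:-$HIVE_HOME}"
  if [ -z "$hive_home" ] || [ ! -x "$hive_home/bin/schematool" ]; then
    echo "schematool not found under '$hive_home'" >&2
    return 1
  fi
  (
    cd "$hive_home" || exit 1
    rm -rf derby.log metastore_db              # the file and the directory to delete
    bin/schematool -dbType derby -initSchema   # re-create the metastore schema
  )
}

# Example (hypothetical install path):
# reset_derby_metastore /opt/module/hive
```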

2. ql.Driver: FAILED: Execution Error, return code 2 from org.apache.hadoop.hive.ql.exec.mr.MapRedTask

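The Hadoop log shows: /bin/bash: /bin/java: No such file or directory

- Fix: set export JAVA_HOME in Hadoop's hadoop-env.sh (under etc/hadoop in the Hadoop installation). The message means JAVA_HOME was empty when the job's container was launched, so $JAVA_HOME/bin/java expanded to /bin/java.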

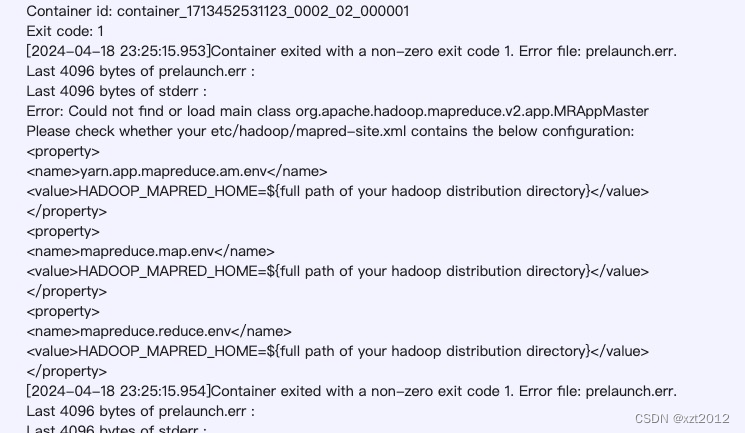
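The edit can also be scripted. In this sketch the function name set_java_home is hypothetical, and the JDK path in the example is an assumption that you should replace with your own. The function replaces the first existing export JAVA_HOME= line, commented out or not, or appends one if none is present. Make the same change on every node and restart the cluster so the daemons pick it up.

```shell
#!/usr/bin/env bash
# Hypothetical helper: set JAVA_HOME in a hadoop-env.sh file.
set_java_home() {
  local env_file="$1" jdk="$2" tmp
  if [ ! -f "$env_file" ] || [ -z "$jdk" ]; then
    echo "usage: set_java_home <hadoop-env.sh> <jdk-dir>" >&2
    return 1
  fi
  tmp="$(mktemp)" || return 1
  # Replace the first (possibly commented-out) "export JAVA_HOME=" line, or append one.
  awk -v line="export JAVA_HOME=$jdk" '
    !done && /^[ \t]*#?[ \t]*export JAVA_HOME=/ { print line; done = 1; next }
    { print }
    END { if (!done) print line }
  ' "$env_file" > "$tmp" || { rm -f "$tmp"; return 1; }
  cat "$tmp" > "$env_file"   # overwrite in place, keeping the file's permissions
  rm -f "$tmp"
}

# Example (hypothetical JDK path):
# set_java_home /opt/module/hadoop-3.1.3/etc/hadoop/hadoop-env.sh /opt/module/jdk1.8.0_212
```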

3. error: Could not find or load main class org.apache.hadoop.mapreduce.v2.app.MRAppMaster Please check whether your etc/hadoop/mapred-site.xml contains the below configuration:

Fix:

- Stop the cluster:

myhadoop.sh stop

- Add the following properties to /opt/module/hadoop-3.1.3/etc/hadoop/mapred-site.xml, inside the <configuration> element:

<property>
  <name>yarn.app.mapreduce.am.env</name>
  <value>HADOOP_MAPRED_HOME=/opt/module/hadoop-3.1.3</value>
</property>
<property>
  <name>mapreduce.map.env</name>
  <value>HADOOP_MAPRED_HOME=/opt/module/hadoop-3.1.3</value>
</property>
<property>
  <name>mapreduce.reduce.env</name>
  <value>HADOOP_MAPRED_HOME=/opt/module/hadoop-3.1.3</value>
</property>

Note: use Hadoop's full absolute path. ${HADOOP_HOME} cannot be used here.

- Distribute the file to every node in the cluster:

xsync /opt/module/hadoop-3.1.3/etc/hadoop/mapred-site.xml

- Restart the Hadoop cluster:

myhadoop.sh start
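If you prefer not to edit the XML by hand, the three properties can be added with a small script. This is a hypothetical sketch. It assumes mapred-site.xml has a closing </configuration> tag on its own line, and it does plain text editing rather than XML parsing. xsync and myhadoop.sh are the author's own cluster scripts rather than Hadoop commands, so the sketch only edits the local file. The function name add_mapred_home_props is illustrative.

```shell
#!/usr/bin/env bash
# Hypothetical helper: add the HADOOP_MAPRED_HOME properties to mapred-site.xml.
# Assumes "</configuration>" appears on its own line; this is not an XML parser.
add_mapred_home_props() {
  local site_file="$1" hadoop_home="$2" tmp
  if [ ! -f "$site_file" ] || [ -z "$hadoop_home" ]; then
    echo "usage: add_mapred_home_props <mapred-site.xml> <absolute-hadoop-home>" >&2
    return 1
  fi
  case "$hadoop_home" in
    /*) ;;   # a literal absolute path is required; ${HADOOP_HOME} does not work here
    *) echo "hadoop home must be an absolute path" >&2; return 1 ;;
  esac
  if grep -q 'yarn.app.mapreduce.am.env' "$site_file"; then
    echo "properties already present; not modifying $site_file" >&2
    return 0
  fi
  tmp="$(mktemp)" || return 1
  # Print the three <property> blocks just before the closing </configuration> tag.
  awk -v home="$hadoop_home" '
    /^[ \t]*<\/configuration>/ {
      n = split("yarn.app.mapreduce.am.env mapreduce.map.env mapreduce.reduce.env", names, " ")
      for (i = 1; i <= n; i++) {
        print "  <property>"
        print "    <name>" names[i] "</name>"
        print "    <value>HADOOP_MAPRED_HOME=" home "</value>"
        print "  </property>"
      }
    }
    { print }
  ' "$site_file" > "$tmp" || { rm -f "$tmp"; return 1; }
  cat "$tmp" > "$site_file"
  rm -f "$tmp"
}

# Example:
# add_mapred_home_props /opt/module/hadoop-3.1.3/etc/hadoop/mapred-site.xml /opt/module/hadoop-3.1.3
```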

4. Cannot run job locally: Input Size (= 460974586) is larger than hive.exec.mode.local.auto.inputbytes.max (= 134217728)

Fix:

export HADOOP_OPTS="$HADOOP_OPTS -Djava.library.path=/opt/module/hadoop-3.1.3/lib/native"

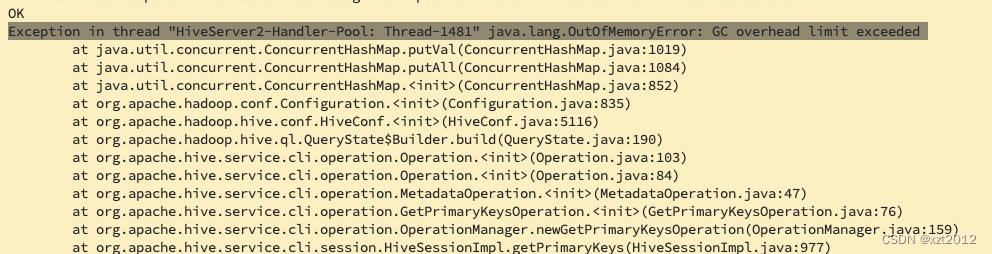

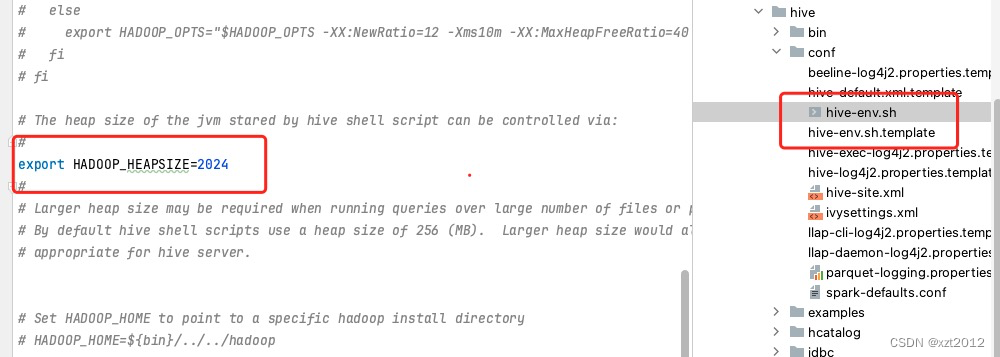
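For context, this message comes from Hive's automatic local mode. When hive.exec.mode.local.auto is enabled, Hive runs a query locally only if its total input is at most hive.exec.mode.local.auto.inputbytes.max. Here that limit is 134217728 bytes, exactly 128 MiB. Otherwise Hive submits the job to the cluster. The sketch below is a hypothetical illustration of that single size comparison, using the numbers from the message; it is not Hive's implementation. Hive's real check also limits the number of input files through hive.exec.mode.local.auto.input.files.max. The function names are illustrative.

```shell
#!/usr/bin/env bash
# Hypothetical illustration of the size check behind "Cannot run job locally":
# local mode is refused when the input is larger than the configured maximum.
check_local_mode_size() {
  local input_bytes="$1" max_bytes="${2:-134217728}"   # 134217728 B = 128 MiB
  if [ "$input_bytes" -gt "$max_bytes" ]; then
    echo "Input Size (= $input_bytes) is larger than hive.exec.mode.local.auto.inputbytes.max (= $max_bytes)"
    return 1
  fi
  echo "local mode allowed by size"
}

to_mib() { echo $(( $1 / 1048576 )); }   # whole MiB, rounded down

# Example with the numbers from the message:
# check_local_mode_size 460974586      # refused: about 439.6 MiB > 128 MiB
```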

5. Exception in thread "HiveServer2-Handler-Pool: Thread-1481" java.lang.OutOfMemoryError: GC overhead limit exceeded

Fix:

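In Hive's conf directory, edit hive-env.sh. If it does not exist, copy hive-env.sh.template to a new file named hive-env.sh. Then change Hive's heap size through HADOOP_HEAPSIZE. The default is 256 MB; here I changed it to 2024 MB: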

export HADOOP_HEAPSIZE=2024

Save the file and restart Hive (HiveServer2 included).
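The same edit can be written as a hypothetical script. It assumes the Hive installation directory is passed as the first argument and that conf/hive-env.sh.template exists, since it ships with Hive. HADOOP_HEAPSIZE is in MB, and 2024 is the value used above. The function name set_hive_heapsize is illustrative.

```shell
#!/usr/bin/env bash
# Hypothetical helper: create conf/hive-env.sh from the template if needed,
# then set HADOOP_HEAPSIZE (in MB) for Hive's JVMs.
set_hive_heapsize() {
  local hive_home="$1" heap_mb="$2" env_file tmp
  env_file="$hive_home/conf/hive-env.sh"
  case "$heap_mb" in
    ''|*[!0-9]*) echo "heap size must be a number of MB" >&2; return 1 ;;
  esac
  if [ ! -f "$env_file" ]; then
    cp "$hive_home/conf/hive-env.sh.template" "$env_file" || return 1
  fi
  tmp="$(mktemp)" || return 1
  # Replace the first (possibly commented-out) HADOOP_HEAPSIZE line, or append one.
  awk -v line="export HADOOP_HEAPSIZE=$heap_mb" '
    !done && /^[ \t]*#?[ \t]*export HADOOP_HEAPSIZE=/ { print line; done = 1; next }
    { print }
    END { if (!done) print line }
  ' "$env_file" > "$tmp" || { rm -f "$tmp"; return 1; }
  cat "$tmp" > "$env_file"
  rm -f "$tmp"
}

# Example (hypothetical install path); restart Hive / HiveServer2 afterwards:
# set_hive_heapsize /opt/module/hive 2024
```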

Copyright belongs to the original author, xzt2012. In case of infringement, please contact us for removal.