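1. Feature Overview

Purpose of cpuidle: when a CPU has nothing to run, put it into a low-power mode to save power.

Main power-saving techniques used by idle low-power modes: 1) WFI (wait-for-interrupt); 2) gating the CPU clock; 3) powering down devices, and so on.

Implementation principle: during initialization, the Linux kernel creates an idle thread for each CPU. When a CPU is idle, meaning there is no runnable thread or task on it, the scheduler picks that idle thread, which puts the CPU into an idle low-power state. Idle states come in several levels. The deeper the level, the more power is saved, but the longer the system takes to resume. The level to enter must therefore be chosen according to the latency requirements of the workload.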

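2. Framework

1) scheduler: the CPU scheduler. When a CPU is idle (no runnable task), it selects the idle task, which enters cpuidle mode.

2) cpuidle core: abstracts three entities: the cpuidle device, the cpuidle driver, and the cpuidle governor. It provides interfaces to the scheduler and exposes nodes to user space (in sysfs). It also offers the cpuidle drivers a unified interface for driver registration and management, and offers the cpuidle governors a unified interface for governor registration and management.

3) cpuidle governors: implement the idle-state selection policies, such as menu, ladder, teo, and haltpoll.

4) cpuidle drivers: implement the idle mechanism itself, i.e. how an idle state is entered and under what conditions it is exited.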
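To make this division of labor concrete, here is a minimal user-space sketch under a drastically simplified model. The structs `toy_driver` and `toy_governor` and the functions `toy_cpuidle_idle_call`, `toy_select`, and `toy_enter` are hypothetical stand-ins, not kernel APIs. The governor only decides *which* state to use, the driver only knows *how* to enter it, and the core glues the two together. This loosely mirrors how the kernel's `cpuidle_idle_call()` asks the governor through `cpuidle_select()` and then enters the chosen state through `cpuidle_enter()`.

```c
#include <assert.h>

/* Hypothetical, heavily simplified stand-ins for a cpuidle driver/governor. */
struct toy_driver {
	int state_count;
	int (*enter)(int state);	/* driver: HOW to enter a state */
};

struct toy_governor {
	const char *name;
	/* governor: WHICH state to enter */
	int (*select)(const struct toy_driver *drv, long predicted_us);
};

/* Core glue: ask the governor, then let the driver enter the chosen state. */
static int toy_cpuidle_idle_call(const struct toy_governor *gov,
				 const struct toy_driver *drv, long predicted_us)
{
	int state = gov->select(drv, predicted_us);

	assert(state >= 0 && state < drv->state_count);
	return drv->enter(state);
}

/* Example driver: only records which state it was asked to enter. */
static int last_entered = -1;

static int toy_enter(int state)
{
	last_entered = state;
	return state;
}

/* Example governor: the longer the predicted idle time, the deeper the state. */
static int toy_select(const struct toy_driver *drv, long predicted_us)
{
	int idx = predicted_us < 100 ? 0 : (predicted_us < 1000 ? 1 : 2);

	return idx < drv->state_count ? idx : drv->state_count - 1;
}

static const struct toy_driver toy_drv = { 3, toy_enter };
static const struct toy_governor toy_gov = { "toy", toy_select };
```

Swapping `toy_gov` for a different policy changes which states are chosen without touching the driver. That separation is the point of the governor/driver split.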

(The rest of this article uses the menu governor as the example.)

三、cpu idle工作流程

3.1 cpuidle初始化流程

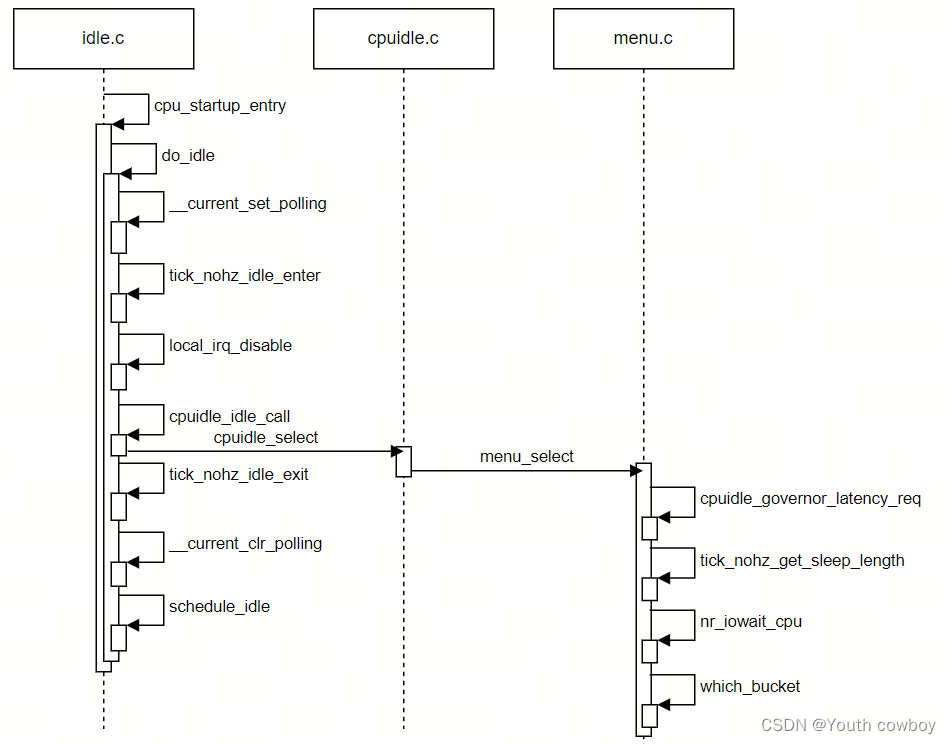

3.2 cpuidle触发流程

四、idle state选择

4.1 idle state选择 ---menu_select

决定选择进入哪个idle层级由系统容忍度延迟latency_req和预期idle的predicted_us时长决定。

```c
/**
 * menu_select - selects the next idle state to enter
 * @drv: cpuidle driver containing state data
 * @dev: the CPU
 * @stop_tick: indication on whether or not to stop the tick
 */
static int menu_select(struct cpuidle_driver *drv, struct cpuidle_device *dev,
		       bool *stop_tick)
{
	struct menu_device *data = this_cpu_ptr(&menu_devices);
	// 1. Get the system latency tolerance (PM QoS)
	s64 latency_req = cpuidle_governor_latency_req(dev->cpu);
	unsigned int predicted_us;
	u64 predicted_ns;
	u64 interactivity_req;
	unsigned long nr_iowaiters;
	ktime_t delta_next;
	int i, idx;

	// 2. Fold the outcome of the previous idle period into the statistics
	//    (correction factors and interval history)
	if (data->needs_update) {
		menu_update(drv, dev);
		data->needs_update = 0;
	}

	// 3. Get the time until the next timer event
	/* determine the expected residency time, round up */
	data->next_timer_ns = tick_nohz_get_sleep_length(&delta_next);

	// 4. Get the number of tasks on this CPU sleeping in I/O wait
	nr_iowaiters = nr_iowait_cpu(dev->cpu);

	// 5. Pick the correction-factor bucket from next_timer_ns and nr_iowaiters
	data->bucket = which_bucket(data->next_timer_ns, nr_iowaiters);

	if (unlikely(drv->state_count <= 1 || latency_req == 0) ||
	    ((data->next_timer_ns < drv->states[1].target_residency_ns ||
	      latency_req < drv->states[1].exit_latency_ns) &&
	     !dev->states_usage[0].disable)) {
		/*
		 * In this case state[0] will be used no matter what, so return
		 * it right away and keep the tick running if state[0] is a
		 * polling one.
		 */
		*stop_tick = !(drv->states[0].flags & CPUIDLE_FLAG_POLLING);
		return 0;
	}

	// 6. Predicted idle duration (us): next_timer_ns scaled by the correction factor
	/* Round up the result for half microseconds. */
	predicted_us = div_u64(data->next_timer_ns *
			       data->correction_factor[data->bucket] +
			       (RESOLUTION * DECAY * NSEC_PER_USEC) / 2,
			       RESOLUTION * DECAY * NSEC_PER_USEC);

	// 7. Take the smaller of that prediction and the typical recent interval
	/* Use the lowest expected idle interval to pick the idle state. */
	predicted_ns = (u64)min(predicted_us,
				get_typical_interval(data, predicted_us)) *
				NSEC_PER_USEC;

	if (tick_nohz_tick_stopped()) {
		/*
		 * If the tick is already stopped, the cost of possible short
		 * idle duration misprediction is much higher, because the CPU
		 * may be stuck in a shallow idle state for a long time as a
		 * result of it. In that case say we might mispredict and use
		 * the known time till the closest timer event for the idle
		 * state selection.
		 */
		if (predicted_ns < TICK_NSEC)
			predicted_ns = delta_next;
	} else {
		/*
		 * Use the performance multiplier and the user-configurable
		 * latency_req to determine the maximum exit latency.
		 */
		// 8. Compute the I/O-wait-based latency limit
		interactivity_req = div64_u64(predicted_ns,
					      performance_multiplier(nr_iowaiters));

		// 9. Use the smaller of the two limits as the effective latency limit
		if (latency_req > interactivity_req)
			latency_req = interactivity_req;
	}

	// 10. Select the idle state
	/*
	 * Find the idle state with the lowest power while satisfying
	 * our constraints.
	 */
	idx = -1;
	for (i = 0; i < drv->state_count; i++) {
		struct cpuidle_state *s = &drv->states[i];

		if (dev->states_usage[i].disable)
			continue;

		if (idx == -1)
			idx = i; /* first enabled state */

		if (s->target_residency_ns > predicted_ns) {
			/*
			 * Use a physical idle state, not busy polling, unless
			 * a timer is going to trigger soon enough.
			 */
			if ((drv->states[idx].flags & CPUIDLE_FLAG_POLLING) &&
			    s->exit_latency_ns <= latency_req &&
			    s->target_residency_ns <= data->next_timer_ns) {
				predicted_ns = s->target_residency_ns;
				idx = i;
				break;
			}
			if (predicted_ns < TICK_NSEC)
				break;

			if (!tick_nohz_tick_stopped()) {
				/*
				 * If the state selected so far is shallow,
				 * waking up early won't hurt, so retain the
				 * tick in that case and let the governor run
				 * again in the next iteration of the loop.
				 */
				predicted_ns = drv->states[idx].target_residency_ns;
				break;
			}

			/*
			 * If the state selected so far is shallow and this
			 * state's target residency matches the time till the
			 * closest timer event, select this one to avoid getting
			 * stuck in the shallow one for too long.
			 */
			if (drv->states[idx].target_residency_ns < TICK_NSEC &&
			    s->target_residency_ns <= delta_next)
				idx = i;

			return idx;
		}
		if (s->exit_latency_ns > latency_req)
			break;

		idx = i;
	}

	if (idx == -1)
		idx = 0; /* No states enabled. Must use 0. */

	/*
	 * Don't stop the tick if the selected state is a polling one or if the
	 * expected idle duration is shorter than the tick period length.
	 */
	if (((drv->states[idx].flags & CPUIDLE_FLAG_POLLING) ||
	     predicted_ns < TICK_NSEC) && !tick_nohz_tick_stopped()) {
		*stop_tick = false;

		if (idx > 0 && drv->states[idx].target_residency_ns > delta_next) {
			/*
			 * The tick is not going to be stopped and the target
			 * residency of the state to be returned is not within
			 * the time until the next timer event including the
			 * tick, so try to correct that.
			 */
			for (i = idx - 1; i >= 0; i--) {
				if (dev->states_usage[i].disable)
					continue;

				idx = i;
				if (drv->states[i].target_residency_ns <= delta_next)
					break;
			}
		}
	}

	return idx;
}
```

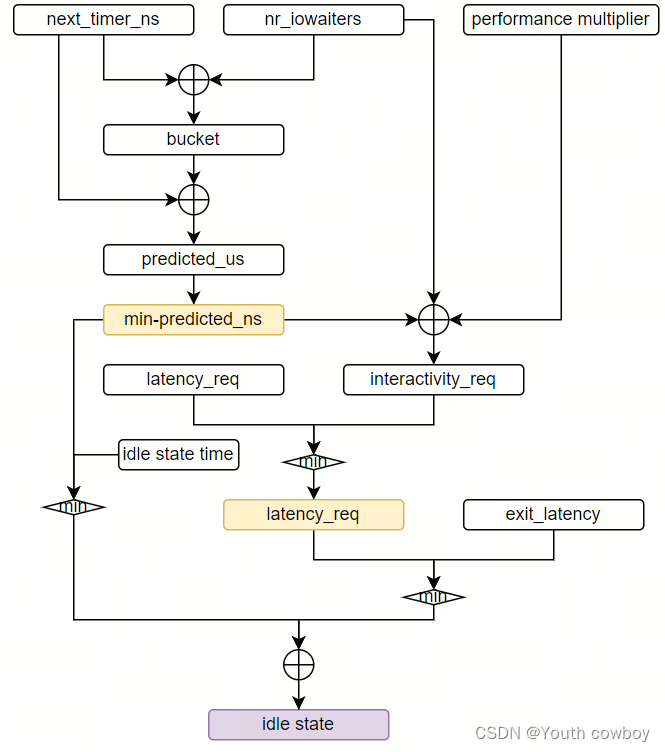

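1) Computing the predicted idle duration predicted_us

The menu governor takes the time until the next timer event (next_timer_us) as the baseline for predicted_us and then adjusts it.

First, predicted_us is not always equal to next_timer_us, because while waiting for the next timer the CPU is quite likely to be woken by some other event. A correction factor is therefore applied to predicted_us. The factor is taken from the bucket selected by the bucket index.

The menu governor keeps 12 correction factors (buckets) for this prediction. Each factor tracks the ratio of the actually measured idle duration to next_timer_us, maintained as a decaying running average.

In addition, the correction factor carries different weight for different ranges of next_timer_us, and the system is sensitive to it in different ways depending on whether tasks are in I/O wait. For this reason, the 12 buckets cover 6 order-of-magnitude ranges of next_timer_us, each with and without pending I/O wait.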
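The correction-factor arithmetic can be sketched in user space as follows. This is a simplified model that assumes the constants RESOLUTION = 1024 and DECAY = 8 and the initial factor RESOLUTION * DECAY (i.e. 1.0) used by upstream menu.c. Check these values against your kernel version. The helper names `update_factor` and `predict_us` are hypothetical, and some upstream special cases are omitted.

```c
#include <assert.h>
#include <stdint.h>

#define NSEC_PER_USEC	1000ULL
#define RESOLUTION	1024ULL	/* fixed-point scale, as in menu.c (assumed) */
#define DECAY		8ULL	/* averaging weight, as in menu.c (assumed) */

/* A factor of RESOLUTION * DECAY means "predicted == next timer" (1.0). */
#define FACTOR_ONE	(RESOLUTION * DECAY)

/*
 * After an idle period, fold measured/next_timer into the running average:
 * new = old - old / DECAY + RESOLUTION * measured / next_timer
 */
static void update_factor(uint64_t *cf, uint64_t measured_ns,
			  uint64_t next_timer_ns)
{
	*cf -= *cf / DECAY;
	if (next_timer_ns > 0)
		*cf += RESOLUTION * measured_ns / next_timer_ns;
	else
		*cf += RESOLUTION;	/* treated as a perfect prediction */
	assert(*cf != 0);	/* stays >= 1 because DECAY > 1 */
}

/* Same rounding as menu_select(): scale next_timer_ns, round to whole us. */
static uint32_t predict_us(uint64_t next_timer_ns, uint64_t cf)
{
	const uint64_t scale = RESOLUTION * DECAY * NSEC_PER_USEC;

	return (uint32_t)((next_timer_ns * cf + scale / 2) / scale);
}
```

Suppose the CPU is repeatedly woken after only half of next_timer. The factor then drifts from 8192 toward about 4096 (0.5), and a 1 ms next_timer is predicted as roughly 500 us instead of 1000 us.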

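Next, it looks for a repeating pattern in the last 8 observed idle durations. If their standard deviation is below a threshold, their average is used as a candidate for predicted_us.

Finally, the smaller of the two results is used.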
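The following is a simplified sketch of the repeating-pattern check, modeled on `get_typical_interval()` in menu.c. It averages the last 8 intervals (in us) and accepts the average if the spread is small. Otherwise it discards the largest samples and retries until only 3/4 of them remain. The acceptance thresholds (variance <= 400 us², i.e. stddev <= 20 us, or avg > 6 * stddev) follow recent upstream kernels but should be treated as assumptions. `typical_interval` is a hypothetical name, and upstream's early-exit shortcuts are left out.

```c
#include <assert.h>
#include <limits.h>
#include <stdint.h>

#define INTERVALS 8	/* number of remembered idle durations */

/*
 * Returns the average of the (remaining) samples if they are tightly
 * clustered, or UINT_MAX if no repeating pattern is found.
 */
static unsigned int typical_interval(const unsigned int intervals[INTERVALS])
{
	unsigned int thresh = UINT_MAX;	/* samples above this are outliers */

	for (;;) {
		uint64_t sum = 0, variance = 0;
		unsigned int max = 0, avg;
		int i, n = 0;

		for (i = 0; i < INTERVALS; i++) {
			if (intervals[i] <= thresh) {
				sum += intervals[i];
				n++;
				if (intervals[i] > max)
					max = intervals[i];
			}
		}
		assert(n > 0);
		avg = (unsigned int)(sum / n);

		for (i = 0; i < INTERVALS; i++) {
			if (intervals[i] <= thresh) {
				uint64_t d = intervals[i] > avg ? intervals[i] - avg
								: avg - intervals[i];
				variance += d * d;
			}
		}
		variance /= n;

		/* stddev <= 20 us, or avg > 6 * stddev with >= 3/4 of samples */
		if (variance <= 400 ||
		    (variance <= UINT64_MAX / 36 &&
		     (uint64_t)avg * avg > variance * 36 &&
		     n * 4 >= INTERVALS * 3))
			return avg;

		/* Stop once only 3/4 of the samples are left. */
		if (n * 4 <= INTERVALS * 3)
			return UINT_MAX;

		thresh = max - 1;	/* drop the largest value(s) and retry */
	}
}
```

With samples {100, 100, 100, 100, 100, 100, 100, 5000}, the single outlier is discarded on the second pass and 100 is returned. menu_select() then uses min(predicted_us, typical interval), so this 100 us would win over a corrected prediction of, say, 938 us.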

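2) Computing the latency tolerance latency_req

For the latency tolerance, the menu governor combines the performance multiplier, the predicted residency, and the system latency requirement to find the maximum acceptable exit latency.

The system latency tolerance (from PM QoS) is the first limit. The second, I/O-wait-based limit is computed as predicted_us / (1 + 10 * iowaiters), where iowaiters is the number of tasks in I/O wait on the current CPU. In the code above, this second limit is applied only while the tick is still running.

The smaller of the two is used as the effective latency limit.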
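As a worked example of the two limits, the sketch below recomputes them in user space. `performance_multiplier` here is a local copy of the 1 + 10 * iowaiters formula described above, not the kernel's static function. `effective_latency_ns` is a hypothetical helper. All values are in nanoseconds.

```c
#include <assert.h>
#include <stdint.h>

/* 1 + 10 per task in I/O wait, as described above. */
static uint64_t performance_multiplier(unsigned long nr_iowaiters)
{
	return 1 + 10 * (uint64_t)nr_iowaiters;
}

/* Effective exit-latency limit: min(QoS latency, predicted / multiplier). */
static uint64_t effective_latency_ns(uint64_t qos_latency_ns,
				     uint64_t predicted_ns,
				     unsigned long nr_iowaiters)
{
	uint64_t mult = performance_multiplier(nr_iowaiters);
	uint64_t interactivity_req;

	assert(mult >= 1);	/* never zero, so the division is safe */
	interactivity_req = predicted_ns / mult;
	return qos_latency_ns < interactivity_req ? qos_latency_ns
						  : interactivity_req;
}
```

For a predicted idle time of 1.1 ms and a 2 ms QoS limit, the limit is 1.1 ms with no I/O waiters. A single I/O waiter shrinks it to 100 us, which steers the governor toward shallower, faster-to-exit states.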

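3) Selecting the idle state

Finally, the idle state is chosen from the two quantities computed above. predicted_us is compared against the target residency of every idle state. A state is a candidate only if its target residency does not exceed predicted_us.

In addition, each state's exit_latency is compared against the effective latency limit. The deepest enabled state that satisfies both constraints is selected.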
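The core of that selection loop can be sketched as follows. This is a reduced user-space model: `struct idle_state` and `select_state` are hypothetical, and the polling-state and tick-handling branches of menu_select() are deliberately left out.

```c
#include <assert.h>
#include <stdbool.h>
#include <stdint.h>

/* Hypothetical, reduced version of struct cpuidle_state + usage. */
struct idle_state {
	uint64_t target_residency_ns;
	uint64_t exit_latency_ns;
	bool disabled;
};

/*
 * Pick the deepest enabled state whose target residency fits the
 * prediction and whose exit latency fits the latency limit.
 */
static int select_state(const struct idle_state *st, int n,
			uint64_t predicted_ns, uint64_t latency_req_ns)
{
	int i, idx = -1;

	assert(n > 0);
	for (i = 0; i < n; i++) {
		if (st[i].disabled)
			continue;
		if (idx == -1)
			idx = i;	/* first enabled state */
		if (st[i].target_residency_ns > predicted_ns ||
		    st[i].exit_latency_ns > latency_req_ns)
			break;
		idx = i;
	}
	return idx == -1 ? 0 : idx;	/* no state enabled: fall back to 0 */
}
```

Because states are ordered from shallow to deep, the loop stops at the first state that violates either constraint, and the last state that passed is the deepest acceptable one.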

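Summary of the quantities used to compute predicted_us and latency_req:

next_timer_us: time until the next timer event;

nr_iowaiters: number of tasks waiting on I/O on this CPU;

bucket: index of the correction factor to use;

predicted_us: predicted idle duration;

min predicted_ns: the smaller of the corrected prediction and the typical interval;

performance_multiplier: performance multiplier, 1 + 10 * nr_iowaiters;

interactivity_req: I/O-wait-based latency limit;

latency_req: system latency tolerance;

min latency_req: effective latency limit, min(interactivity_req, latency_req);

idle state time: target residency of each idle state (target_residency_ns);

exit_latency_ns: exit latency of each idle state;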

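4.1.1 Getting the system latency tolerance: cpuidle_governor_latency_req

This function takes the CPU device's raw resume-latency requirement (device_req, from device PM QoS) and the global CPU latency QoS limit (global_req). It returns the smaller of the two, converted to nanoseconds, as the system latency tolerance.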

```c
/**
 * cpuidle_governor_latency_req - Compute a latency constraint for CPU
 * @cpu: Target CPU
 */
s64 cpuidle_governor_latency_req(unsigned int cpu)
{
	// 1. Get this CPU's struct device (the device-model description of the CPU)
	struct device *device = get_cpu_device(cpu);
	// 2. Raw resume-latency requirement of the device (how long resuming
	//    from a low-power state to normal operation may take)
	int device_req = dev_pm_qos_raw_resume_latency(device);
	// 3. Global CPU latency QoS limit (the maximum latency currently acceptable)
	int global_req = cpu_latency_qos_limit();

	// 4. Use the smaller of device_req and global_req, converted from us to ns
	if (device_req > global_req)
		device_req = global_req;

	return (s64)device_req * NSEC_PER_USEC;
}
EXPORT_SYMBOL_GPL(cpuidle_governor_latency_req);
```
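global_req comes from the CPU latency QoS. User space can add a request to it through /dev/cpu_dma_latency. As the kernel's PM QoS documentation describes, a process opens the node and writes a 32-bit value in microseconds. The request stays in force only while the file descriptor remains open. The sketch below assumes that interface. `request_cpu_latency` is a hypothetical helper name, and writing to the real node normally requires root.

```c
#include <assert.h>
#include <fcntl.h>
#include <stdint.h>
#include <unistd.h>

/*
 * Ask for CPU wakeup latency <= max_latency_us. The request is dropped
 * when the returned fd is closed. Returns the fd, or -1 on error.
 */
static int request_cpu_latency(const char *path, int32_t max_latency_us)
{
	int fd;

	assert(path != 0);
	fd = open(path, O_WRONLY);
	if (fd < 0)
		return -1;
	if (write(fd, &max_latency_us, sizeof(max_latency_us)) !=
	    (ssize_t)sizeof(max_latency_us)) {
		close(fd);
		return -1;
	}
	return fd;
}
```

Calling `request_cpu_latency("/dev/cpu_dma_latency", 0)` and holding the fd open drives cpuidle_governor_latency_req() to 0. menu_select() then takes its `latency_req == 0` branch and returns state 0 immediately.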

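4.1.2 Getting the time until the next timer event: tick_nohz_get_sleep_length

Computes the expected length of the current sleep, which menu_select() uses as next_timer_ns. If the tick cannot be stopped, this is simply the time until the next clock event (delta_next). Otherwise, it is the time until the earlier of the next timer event computed by tick_nohz_next_event() and the next high-resolution timer.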

```c
/**
 * tick_nohz_get_sleep_length - return the expected length of the current sleep
 * @delta_next: duration until the next event if the tick cannot be stopped
 *
 * Called from power state control code with interrupts disabled
 */
ktime_t tick_nohz_get_sleep_length(ktime_t *delta_next)
{
	// Clock event device of the current CPU
	struct clock_event_device *dev = __this_cpu_read(tick_cpu_device.evtdev);
	struct tick_sched *ts = this_cpu_ptr(&tick_cpu_sched);
	int cpu = smp_processor_id();
	/*
	 * The idle entry time is expected to be a sufficient approximation of
	 * the current time at this point.
	 */
	ktime_t now = ts->idle_entrytime;
	ktime_t next_event;

	WARN_ON_ONCE(!ts->inidle);

	*delta_next = ktime_sub(dev->next_event, now);

	if (!can_stop_idle_tick(cpu, ts))
		return *delta_next;

	// Next timer event, computed as if the tick were stopped
	next_event = tick_nohz_next_event(ts, cpu);
	if (!next_event)
		return *delta_next;

	/*
	 * If the next highres timer to expire is earlier than next_event, the
	 * idle governor needs to know that.
	 */
	// min_t() keeps the earlier of the two; hrtimer_next_event_without()
	// returns the next hrtimer expiry, excluding the tick's sched_timer
	next_event = min_t(u64, next_event,
			   hrtimer_next_event_without(&ts->sched_timer));

	return ktime_sub(next_event, now);
}
EXPORT_SYMBOL_GPL(tick_nohz_get_sleep_length);
```
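The decision structure of this function can be modeled as a pure function. This is a hypothetical sketch, not kernel code: `sleep_length_ns` is an invented name, absolute times are plain nanosecond integers, and `next_timer_event == 0` stands for "tick_nohz_next_event() returned nothing".

```c
#include <assert.h>
#include <stdbool.h>
#include <stdint.h>

static int64_t sleep_length_ns(int64_t now, int64_t next_clock_event,
			       bool can_stop_tick, int64_t next_timer_event,
			       int64_t next_hrtimer, int64_t *delta_next)
{
	int64_t next;

	assert(delta_next != 0);
	*delta_next = next_clock_event - now;	/* used if the tick keeps running */
	if (!can_stop_tick || next_timer_event == 0)
		return *delta_next;

	/* earliest of the next timer event and the next hrtimer */
	next = next_timer_event < next_hrtimer ? next_timer_event : next_hrtimer;
	return next - now;
}
```

With a tick 4 ms away, a timer at 20 ms, and an hrtimer at 7 ms, the model returns 4 ms when the tick cannot be stopped and 7 ms when it can. In both cases, delta_next stays at 4 ms for menu_select() to use.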

4.1.3 Getting the number of I/O waiters on the CPU: nr_iowait_cpu

Reads the number of I/O-waiting tasks from the nr_iowait counter of the CPU's runqueue.

```c
unsigned long nr_iowait_cpu(int cpu)
{
	return atomic_read(&cpu_rq(cpu)->nr_iowait);
}
```

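4.1.4 Getting the correction factor

Selects the correction factor from the time until the next timer event and the number of I/O waiters on this CPU. The factors are stored in an array of BUCKETS entries, and this function returns the bucket index.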

```c
static inline int which_bucket(u64 duration_ns, unsigned long nr_iowaiters)
{
	int bucket = 0;

	/*
	 * We keep two groups of stats; one with no
	 * IO pending, one without.
	 * This allows us to calculate
	 * E(duration)|iowait
	 */
	// 1. Tasks are in I/O wait on this CPU: use the upper half of the buckets
	if (nr_iowaiters)
		bucket = BUCKETS/2;

	// 2. Pick the bucket by the order of magnitude of the time to the next timer event
	if (duration_ns < 10ULL * NSEC_PER_USEC)
		return bucket;
	if (duration_ns < 100ULL * NSEC_PER_USEC)
		return bucket + 1;
	if (duration_ns < 1000ULL * NSEC_PER_USEC)
		return bucket + 2;
	if (duration_ns < 10000ULL * NSEC_PER_USEC)
		return bucket + 3;
	if (duration_ns < 100000ULL * NSEC_PER_USEC)
		return bucket + 4;
	return bucket + 5;
}
```
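An equivalent loop formulation makes the decade-based bucket layout explicit. This is a user-space rewrite, not the upstream code, and it assumes BUCKETS = 12 as in menu.c. It walks one decade per step: <10 us, <100 us, <1 ms, <10 ms, <100 ms, and longer.

```c
#include <assert.h>
#include <stdint.h>

#define BUCKETS		12
#define NSEC_PER_USEC	1000ULL

static int which_bucket(uint64_t duration_ns, unsigned long nr_iowaiters)
{
	/* upper half of the array is used when I/O wait is pending */
	int bucket = nr_iowaiters ? BUCKETS / 2 : 0;
	uint64_t limit_us = 10;

	/* advance one bucket per decade, at most 5 steps */
	while (limit_us <= 100000 && duration_ns >= limit_us * NSEC_PER_USEC) {
		bucket++;
		limit_us *= 10;
	}
	assert(bucket < BUCKETS);
	return bucket;
}
```

For example, a 500 us sleep maps to bucket 2 without I/O waiters and to bucket 8 with them, so the two cases learn separate correction factors.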

5. Related Source Files

kernel/drivers/cpuidle/cpuidle-psci.c

kernel/kernel/sched/idle.c

kernel/drivers/cpuidle/cpuidle.c

kernel/drivers/cpuidle/driver.c

kernel/kernel/time/tick-sched.c

kernel/drivers/cpuidle/dt_idle_states.c

kernel/drivers/cpuidle/governors/menu.c

Copyright belongs to the original author, Youth cowboy. If this infringes any rights, please contact us and it will be removed.