The environment is Linux with four machines running Kafka 3.6: Kafka is installed on node1, node2 and node3, and ZooKeeper on node2, node3 and node4.

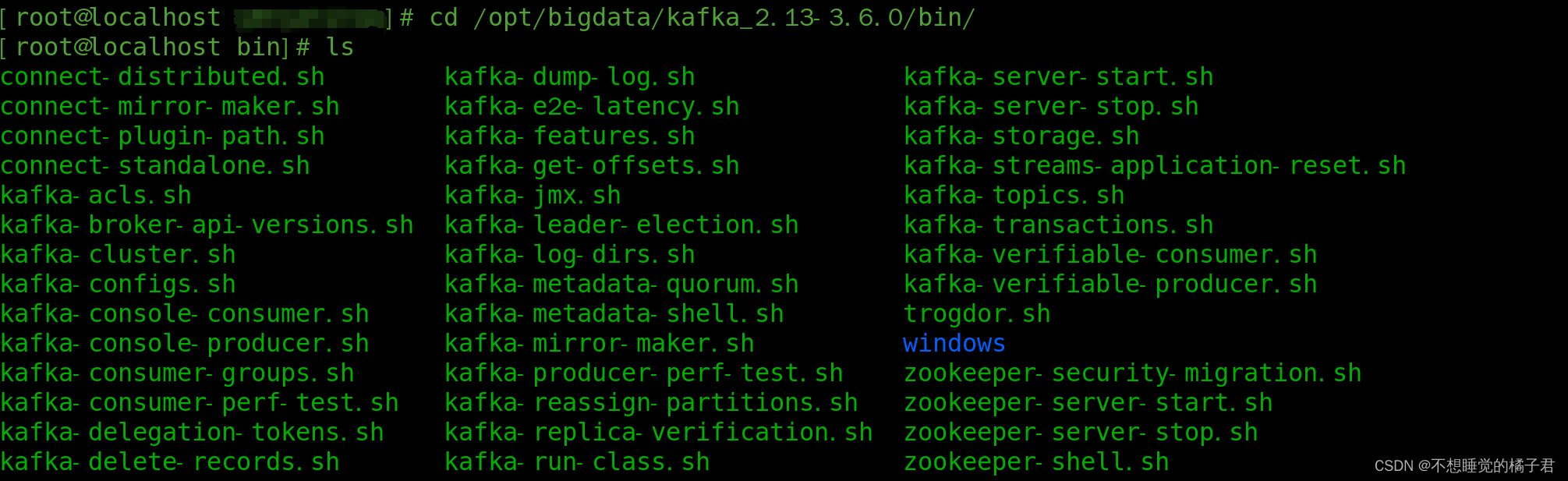

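After installing Kafka, go into the bin directory. It contains many .sh scripts, which are the basis for every command we run.

To start Kafka, run the command below; the argument after the script name is the relative path to the configuration file.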

kafka-server-start.sh ./server.properties

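If you are unsure how to use one of the scripts, type its name and press Enter: it prints the available options. If you pass a wrong option, Kafka also reports the corresponding error.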

[root@localhost bin]# kafka-topics.sh

Create, delete, describe, or change a topic.

Option Description

------ -----------

--alter    Alter the number of partitions and replica assignment. Update the configuration of an existing topic via --alter is no longer supported here (the kafka-configs CLI supports altering topic configs with a --bootstrap-server option).

--at-min-isr-partitions    if set when describing topics, only show partitions whose isr count is equal to the configured minimum.

--bootstrap-server <String: server to connect to>    REQUIRED: The Kafka server to connect to.

--command-config <String: command config property file>    Property file containing configs to be passed to Admin Client. This is used only with --bootstrap-server option for describing and altering broker configs.

--config <String: name=value>    A topic configuration override for the topic being created or altered. The following is a list of valid configurations: cleanup.policy, compression.type, delete.retention.ms, file.delete.delay.ms, flush.messages, flush.ms, follower.replication.throttled.replicas, index.interval.bytes, leader.replication.throttled.replicas, local.retention.bytes, local.retention.ms, max.compaction.lag.ms, max.message.bytes, message.downconversion.enable, message.format.version, message.timestamp.after.max.ms, message.timestamp.before.max.ms, message.timestamp.difference.max.ms, message.timestamp.type, min.cleanable.dirty.ratio, min.compaction.lag.ms, min.insync.replicas, preallocate, remote.storage.enable, retention.bytes, retention.ms, segment.bytes, segment.index.bytes, segment.jitter.ms, segment.ms, unclean.leader.election.enable. See the Kafka documentation for full details on the topic configs. It is supported only in combination with --create if --bootstrap-server option is used (the kafka-configs CLI supports altering topic configs with a --bootstrap-server option).

--create    Create a new topic.

--delete    Delete a topic

--delete-config <String: name>    A topic configuration override to be removed for an existing topic (see the list of configurations under the --config option). Not supported with the --bootstrap-server option.

--describe    List details for the given topics.

--exclude-internal    exclude internal topics when running list or describe command. The internal topics will be listed by default

--help    Print usage information.

--if-exists    if set when altering or deleting or describing topics, the action will only execute if the topic exists.

--if-not-exists    if set when creating topics, the action will only execute if the topic does not already exist.

--list    List all available topics.

--partitions <Integer: # of partitions>    The number of partitions for the topic being created or altered (WARNING: If partitions are increased for a topic that has a key, the partition logic or ordering of the messages will be affected). If not supplied for create, defaults to the cluster default.

--replica-assignment <String: broker_id_for_part1_replica1 : broker_id_for_part1_replica2 , broker_id_for_part2_replica1 : broker_id_for_part2_replica2 , ...>    A list of manual partition-to-broker assignments for the topic being created or altered.

--replication-factor <Integer: replication factor>    The replication factor for each partition in the topic being created. If not supplied, defaults to the cluster default.

--topic <String: topic>    The topic to create, alter, describe or delete. It also accepts a regular expression, except for --create option. Put topic name in double quotes and use the '\' prefix to escape regular expression symbols; e.g. "test\.topic".

--topic-id <String: topic-id>    The topic-id to describe.This is used only with --bootstrap-server option for describing topics.

--topics-with-overrides    if set when describing topics, only show topics that have overridden configs

--unavailable-partitions    if set when describing topics, only show partitions whose leader is not available

--under-min-isr-partitions    if set when describing topics, only show partitions whose isr count is less than the configured minimum.

--under-replicated-partitions    if set when describing topics, only show under replicated partitions

--version    Display Kafka version.

For example, here we create a topic named test.

kafka-topics.sh --create --topic test --bootstrap-server node1:9092 --partitions 2 --replication-factor 2

Created topic test.
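
Running the same create command a second time fails because the topic already exists. A minimal sketch of a re-runnable variant, using the --if-not-exists option listed in the help text above; the function name create_topic_if_missing and the default address node1:9092 are only illustrative choices, and the partition and replica counts are simply copied from the command above:

```shell
#!/bin/sh
# Sketch: create a topic only when it does not exist yet.
# create_topic_if_missing is a hypothetical helper name, not part of Kafka.
# $1 = topic name, $2 = bootstrap server (defaults to node1:9092 from this article)
create_topic_if_missing() {
  kafka-topics.sh --create --if-not-exists \
    --topic "$1" \
    --bootstrap-server "${2:-node1:9092}" \
    --partitions 2 --replication-factor 2
}

# Usage (needs the running cluster and kafka-topics.sh on the PATH):
#   create_topic_if_missing test
```

With --if-not-exists the script can be placed in a setup routine and executed repeatedly without tripping over an existing topic.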

Connect to the Kafka broker on node1 and fetch the topic list from the metadata.

[root@localhost bin]# kafka-topics.sh --list --bootstrap-server node1:9092

test

View the details of a topic.

[root@localhost bin]# kafka-topics.sh --describe --topic test --bootstrap-server node1:9092

Topic: test TopicId: WgjG4Ou_Q7iQvzgipRgzjg PartitionCount: 2 ReplicationFactor: 2 Configs:

Topic: test Partition: 0 Leader: 2 Replicas: 2,1 Isr: 2,1

Topic: test Partition: 1 Leader: 3 Replicas: 3,2 Isr: 3,2
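
In the describe output, Leader is the broker currently serving reads and writes for the partition, Replicas lists every broker holding a copy, and Isr lists the replicas that are in sync. As a rough sketch of how this output can be post-processed, the helper below counts how many partitions each broker leads; leaders_per_broker is a made-up name, and the sample text is copied from the output above:

```shell
#!/bin/sh
# Sketch: count how many partitions each broker leads.
# Reads `kafka-topics.sh --describe` output on stdin and prints "<broker id> <count>".
leaders_per_broker() {
  awk '{
    # Find the "Leader:" label and count the broker id that follows it
    for (i = 1; i < NF; i++)
      if ($i == "Leader:") count[$(i + 1)]++
  }
  END { for (b in count) print b, count[b] }' | sort -n
}

# Sample input copied from the describe output in this article
sample='Topic: test TopicId: WgjG4Ou_Q7iQvzgipRgzjg PartitionCount: 2 ReplicationFactor: 2 Configs:
Topic: test Partition: 0 Leader: 2 Replicas: 2,1 Isr: 2,1
Topic: test Partition: 1 Leader: 3 Replicas: 3,2 Isr: 3,2'

printf '%s\n' "$sample" | leaders_per_broker
```

Against a live cluster the same function can be fed directly: kafka-topics.sh --describe --topic test --bootstrap-server node1:9092 | leaders_per_broker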

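Start a producer on one of the machines and two consumers on the other two machines, both in the same consumer group. (The producer command below uses --broker-list, which still works in 3.6 but is deprecated in favor of --bootstrap-server.)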

[root@localhost bin]# kafka-console-producer.sh --broker-list node1:9092 --topic test

>hello 03

>1

>2

>3

>4

>5

>6

>7

>8

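As the output shows, within one consumer group each partition is assigned to exactly one consumer, and as long as the group's membership does not change, the messages of that partition are always consumed by that same consumer. Here all the messages landed in a single partition (partition 1, as the group description further down shows), so only one of the two consumers received them. This suits cases where messages must be processed in order and cannot be consumed concurrently; note that the ordering holds per partition, not across the whole topic.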

[root@localhost bin]# kafka-console-consumer.sh --bootstrap-server node1:9092 --topic test --group msb

hello 03

1

2

3

4

5

6

7

8
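
Because ordering only holds inside a partition, messages that must stay in order are usually sent with the same key, which the default partitioner maps to the same partition. A small sketch of doing that from the console producer, using its parse.key and key.separator properties; produce_keyed is a hypothetical helper name, and the address and the order-1 key in the usage comment are assumptions for illustration:

```shell
#!/bin/sh
# Sketch: send "key:value" lines so that records sharing a key go to the same partition.
# produce_keyed is a hypothetical helper name. $1 = topic name; input lines come from stdin.
produce_keyed() {
  kafka-console-producer.sh --bootstrap-server node1:9092 --topic "$1" \
    --property parse.key=true --property key.separator=:
}

# Usage (needs the running cluster and kafka-console-producer.sh on the PATH):
#   printf 'order-1:created\norder-1:paid\n' | produce_keyed test
```

Both records in the usage line carry the key order-1, so they end up in one partition and are read in order by whichever group member owns that partition. As the help text for --partitions warns, this mapping changes if the number of partitions is increased later.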

View the state of a consumer group.

[root@localhost bin]# kafka-consumer-groups.sh --bootstrap-server node2:9092 --group msb --describe

GROUP TOPIC PARTITION CURRENT-OFFSET LOG-END-OFFSET LAG CONSUMER-ID HOST CLIENT-ID

msb test 1 24 24 0 console-consumer-4987804d-6e59-4f4d-9952-9afb9aff6cbe /192.168.184.130 console-consumer

msb test 0 0 0 0 console-consumer-242992e4-7801-4a38-a8f3-8b44056ed4b6 /192.168.184.130 console-consumer
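
LAG is LOG-END-OFFSET minus CURRENT-OFFSET, that is, how many messages in the partition the group has not yet consumed. A minimal sketch that sums the lag over all partitions of a group, assuming the column layout shown above; total_lag is an invented helper name, and the sample rows are copied from the output above:

```shell
#!/bin/sh
# Sketch: sum the LAG column of `kafka-consumer-groups.sh --describe` output read from stdin.
total_lag() {
  awk '
    # The header row tells us which column holds LAG
    $1 == "GROUP" { for (i = 1; i <= NF; i++) if ($i == "LAG") col = i; next }
    # Add numeric values only; a "-" means there is no committed offset yet
    col && $col ~ /^[0-9]+$/ { sum += $col }
    END { print sum + 0 }
  '
}

# Sample input copied from the group description in this article
sample_group='GROUP TOPIC PARTITION CURRENT-OFFSET LOG-END-OFFSET LAG CONSUMER-ID HOST CLIENT-ID
msb test 1 24 24 0 console-consumer-4987804d-6e59-4f4d-9952-9afb9aff6cbe /192.168.184.130 console-consumer
msb test 0 0 0 0 console-consumer-242992e4-7801-4a38-a8f3-8b44056ed4b6 /192.168.184.130 console-consumer'

printf '%s\n' "$sample_group" | total_lag
```

For the sample the total is 0, since both partitions are fully consumed. Against a live cluster: kafka-consumer-groups.sh --bootstrap-server node2:9092 --group msb --describe | total_lag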

Finally, let's look at what is stored in ZooKeeper.

There is now a kafka node under the ZooKeeper root.

[zk: localhost:2181(CONNECTED) 1] ls /

[kafka, node1, node6, node7, testLock, zookeeper]

Under /kafka there is a lot of metadata, stored in the following nodes, for example:

[zk: localhost:2181(CONNECTED) 2] ls /kafka

[admin, brokers, cluster, config, consumers, controller, controller_epoch, feature, isr_change_notification, latest_producer_id_block, log_dir_event_notification]

#Cluster ID

[zk: localhost:2181(CONNECTED) 3] ls /kafka/cluster

[id]

[zk: localhost:2181(CONNECTED) 5] get /kafka/cluster/id

{"version":"1","id":"8t14lxoAS1SdXapY6ysw_A"}

#ID of the broker acting as controller

[zk: localhost:2181(CONNECTED) 6] get /kafka/controller

{"version":2,"brokerid":3,"timestamp":"1698841142070","kraftControllerEpoch":-1}

The topics node contains __consumer_offsets, the internal topic Kafka uses to store consumer offsets.

[zk: localhost:2181(CONNECTED) 10] ls /kafka/brokers/topics

[__consumer_offsets, test]

[zk: localhost:2181(CONNECTED) 12] get /kafka/brokers/topics/__consumer_offsets

{"partitions":{"44":[1],"45":[2],"46":[3],"47":[1],"48":[2],"49":[3],"10":[3],"11":[1],"12":[2],"13":[3],"14":[1],"15":[2],"16":[3],"17":[1],"18":[2],"19":[3],"0":[2],"1":[3],"2":[1],"3":[2],"4":[3],"5":[1],"6":[2],"7":[3],"8":[1],"9":[2],"20":[1],"21":[2],"22":[3],"23":[1],"24":[2],"25":[3],"26":[1],"27":[2],"28":[3],"29":[1],"30":[2],"31":[3],"32":[1],"33":[2],"34":[3],"35":[1],"36":[2],"37":[3],"38":[1],"39":[2],"40":[3],"41":[1],"42":[2],"43":[3]},"topic_id":"RGxJyefAQlKrmY3LTVbKGw","adding_replicas":{},"removing_replicas":{},"version":3}
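
The JSON above maps each partition number to its replica list: it contains partitions 0 to 49 (50 is the default value of offsets.topic.num.partitions), each with a single replica on broker 1, 2 or 3. A rough sketch for summarizing such a znode value per broker with standard text tools; partitions_per_broker is a made-up name, the shortened sample JSON is illustrative rather than the real value, and a proper JSON parser would be more robust:

```shell
#!/bin/sh
# Sketch: count partitions per broker from a /kafka/brokers/topics/<topic> znode value.
# Reads the JSON on stdin, takes the first replica of every partition,
# and prints "<broker id> <partition count>".
partitions_per_broker() {
  grep -o '"[0-9][0-9]*":\[[0-9]*' |  # one match per partition, e.g. "44":[1
    sed 's/.*\[//' |                    # keep only the first broker id
    sort -n | uniq -c |
    awk '{ print $2, $1 }'
}

# Shortened, illustrative sample in the same shape as the value above
sample_json='{"partitions":{"0":[2],"1":[3],"2":[1],"3":[2]},"adding_replicas":{},"removing_replicas":{},"version":3}'

printf '%s\n' "$sample_json" | partitions_per_broker
```

To run it on the real value, paste the JSON printed by the get command into a file and pass it in with partitions_per_broker < file.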

Copyright belongs to the original author, 不想睡觉的橘子君. If there is any infringement, please contact us for removal.