
I. Overview

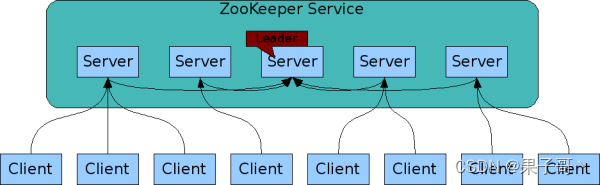

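- Apache ZooKeeper is a centralized service for maintaining configuration information, naming, providing distributed synchronization, and providing group services. The ZooKeeper project develops and maintains an open-source server that enables highly reliable distributed coordination. It can also be thought of as a distributed database with an unusual structure: its data is organized as a tree. Official documentation: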

https://zookeeper.apache.org/doc/r3.8.0/

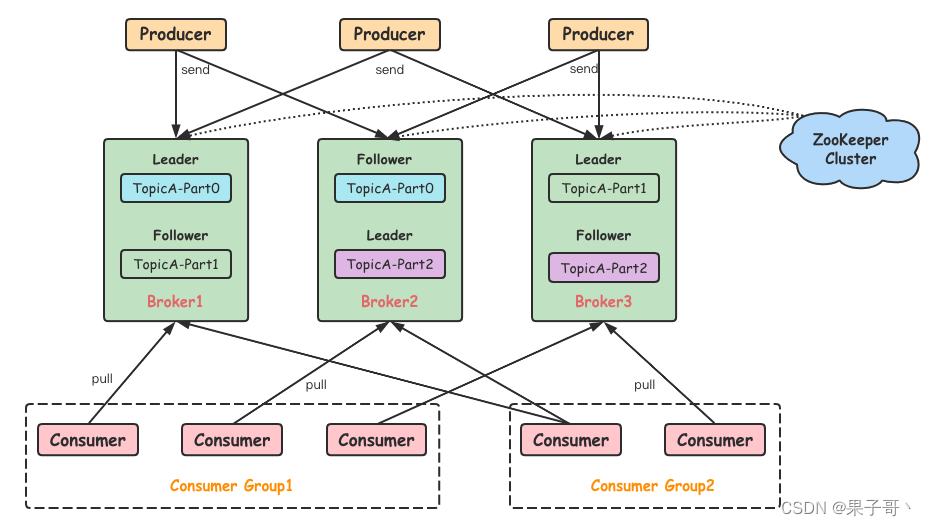

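- Kafka was originally developed at LinkedIn. It is a distributed messaging system that supports partitions and multiple replicas and relies on ZooKeeper for coordination. Official documentation: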

https://kafka.apache.org/documentation/

II. ZooKeeper on k8s Deployment

1) Add the Helm repository

Chart page:

https://artifacthub.io/packages/helm/zookeeper/zookeeper

helm repo add bitnami https://charts.bitnami.com/bitnami

helm pull bitnami/zookeeper

tar -xf zookeeper-10.2.1.tgz

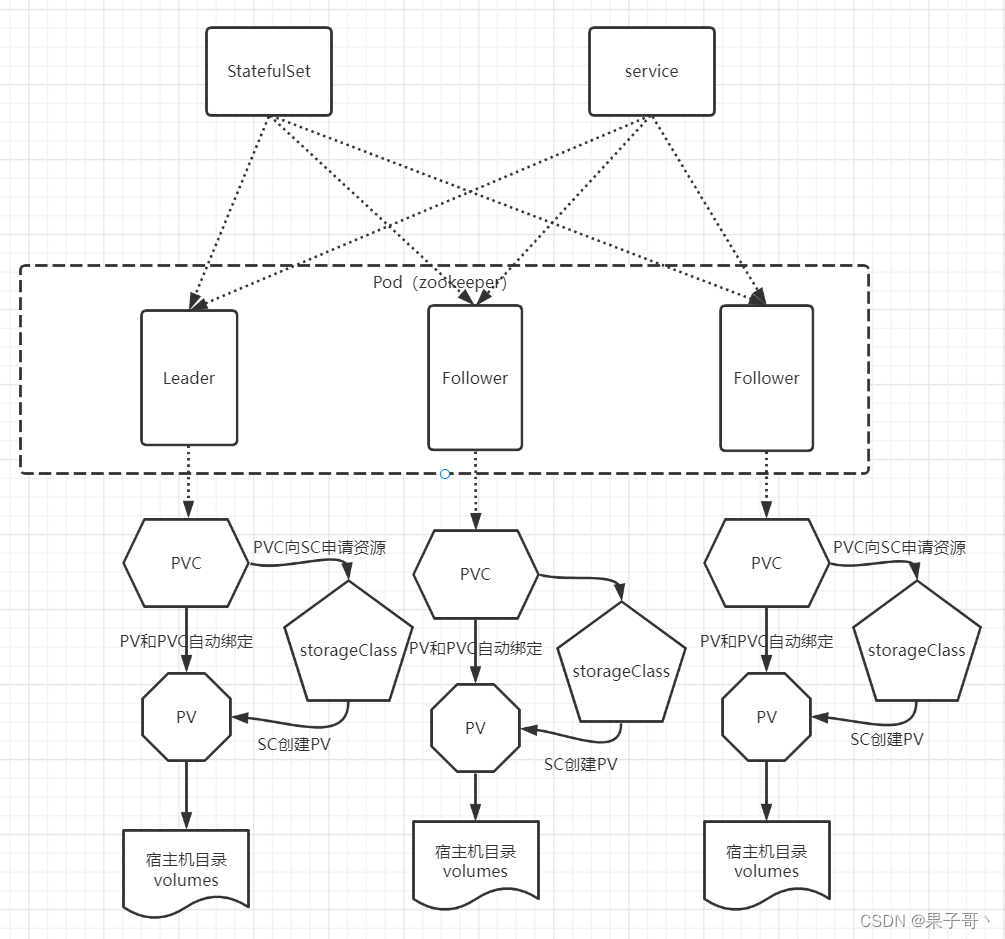

2) Modify the configuration

Edit zookeeper/values.yaml:

image:
  registry: myharbor.com
  repository: bigdata/zookeeper
  tag: 3.8.0-debian-11-r36
...
replicaCount: 3
...
service:
  type: NodePort
  nodePorts:
    # The default NodePort range is 30000-32767
    client: "32181"
    tls: "32182"
...
persistence:
  storageClass: "zookeeper-local-storage"
  size: "10Gi"
  # These directories must be created on the hosts in advance
  local:
    - name: zookeeper-0
      host: "local-168-182-110"
      path: "/opt/bigdata/servers/zookeeper/data/data1"
    - name: zookeeper-1
      host: "local-168-182-111"
      path: "/opt/bigdata/servers/zookeeper/data/data1"
    - name: zookeeper-2
      host: "local-168-182-112"
      path: "/opt/bigdata/servers/zookeeper/data/data1"
...
# Enable Prometheus to access ZooKeeper metrics endpoint
metrics:
  enabled: true
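The NodePort values above have to fall inside the cluster's NodePort range. As a minimal sketch, assuming the default range of 30000-32767 mentioned in the comment, a small shell check could be run before installing. `check_nodeport` is a hypothetical helper, not part of the chart.

```shell
#!/bin/sh
# Hypothetical helper: verify a port lies in the default NodePort range.
# Assumes the cluster keeps the default range 30000-32767.
check_nodeport() {
  port="$1"
  # Reject anything that is not a plain decimal number.
  case "$port" in
    ''|*[!0-9]*) echo "invalid: $port"; return 1 ;;
  esac
  if [ "$port" -ge 30000 ] && [ "$port" -le 32767 ]; then
    echo "ok: $port"
  else
    echo "out of range: $port"
    return 1
  fi
}

# The two ports configured in values.yaml above.
check_nodeport 32181
check_nodeport 32182
```

If the cluster was started with a custom `--service-node-port-range`, the bounds in the helper would need to change accordingly.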

Add zookeeper/templates/pv.yaml:

{{- range .Values.persistence.local }}
---
apiVersion: v1
kind: PersistentVolume
metadata:
  name: {{ .name }}
  labels:
    name: {{ .name }}
spec:
  storageClassName: {{ $.Values.persistence.storageClass }}
  capacity:
    storage: {{ $.Values.persistence.size }}
  accessModes:
    - ReadWriteOnce
  local:
    path: {{ .path }}
  nodeAffinity:
    required:
      nodeSelectorTerms:
        - matchExpressions:
            - key: kubernetes.io/hostname
              operator: In
              values:
                - {{ .host }}
---
{{- end }}

Add zookeeper/templates/storage-class.yaml:

kind: StorageClass
apiVersion: storage.k8s.io/v1
metadata:
  name: {{ .Values.persistence.storageClass }}
provisioner: kubernetes.io/no-provisioner
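Because the StorageClass uses `kubernetes.io/no-provisioner`, nothing creates the local paths automatically, so the host directories listed under `persistence.local` must exist before the pods start. The sketch below only prints the commands for review. `print_mkdir_cmds` is a hypothetical helper, and using `ssh` to reach the nodes is an assumption about the environment.

```shell
#!/bin/sh
# Hypothetical helper: print one mkdir command per host (dry run).
# Assumes the nodes are reachable over ssh under the host names in values.yaml.
print_mkdir_cmds() {
  dir="$1"
  shift
  for host in "$@"; do
    echo "ssh $host mkdir -p $dir"
  done
}

print_mkdir_cmds /opt/bigdata/servers/zookeeper/data/data1 \
  local-168-182-110 local-168-182-111 local-168-182-112
```

Once the printed commands look right, the output can be piped to `sh` to run them.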

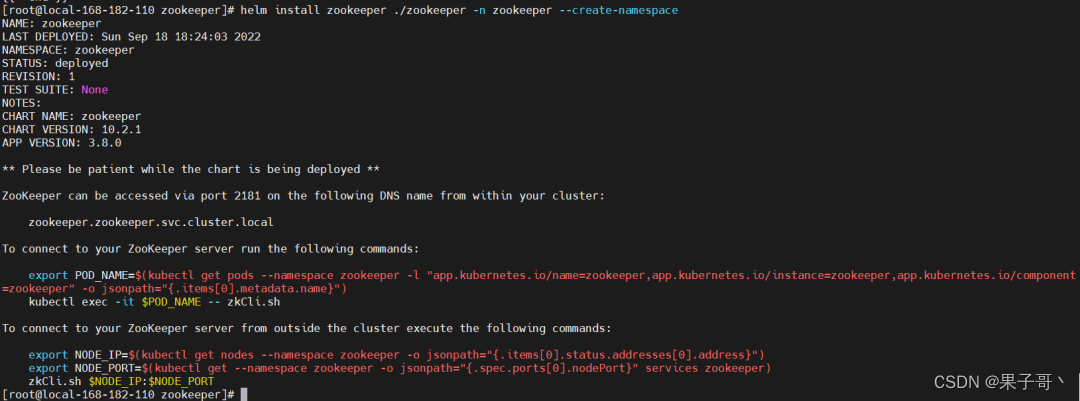

3) Install

# Prepare the image first

docker pull docker.io/bitnami/zookeeper:3.8.0-debian-11-r36

docker tag docker.io/bitnami/zookeeper:3.8.0-debian-11-r36 myharbor.com/bigdata/zookeeper:3.8.0-debian-11-r36

docker push myharbor.com/bigdata/zookeeper:3.8.0-debian-11-r36
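The pull, tag, and push steps follow the same pattern for every image, so they can be generated from the source image name. This is a rough sketch: `mirror_image` is a hypothetical helper, and `REGISTRY` and `PROJECT` simply reuse the values from the commands above. It prints the commands rather than running them.

```shell
#!/bin/sh
# Hypothetical helper: print the pull/tag/push commands for one image (dry run).
REGISTRY="myharbor.com"
PROJECT="bigdata"

mirror_image() {
  src="$1"                 # e.g. docker.io/bitnami/zookeeper:3.8.0-debian-11-r36
  name_tag="${src##*/}"    # drop everything up to the last slash, keeping name:tag
  dst="$REGISTRY/$PROJECT/$name_tag"
  echo "docker pull $src"
  echo "docker tag $src $dst"
  echo "docker push $dst"
}

mirror_image docker.io/bitnami/zookeeper:3.8.0-debian-11-r36
```

Piping the output to `sh` would execute the three commands. This assumes `docker login` has already been run against the private registry.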

# Install

helm install zookeeper ./zookeeper -n zookeeper --create-namespace

Check the pod status:

kubectl get pods,svc -n zookeeper -o wide

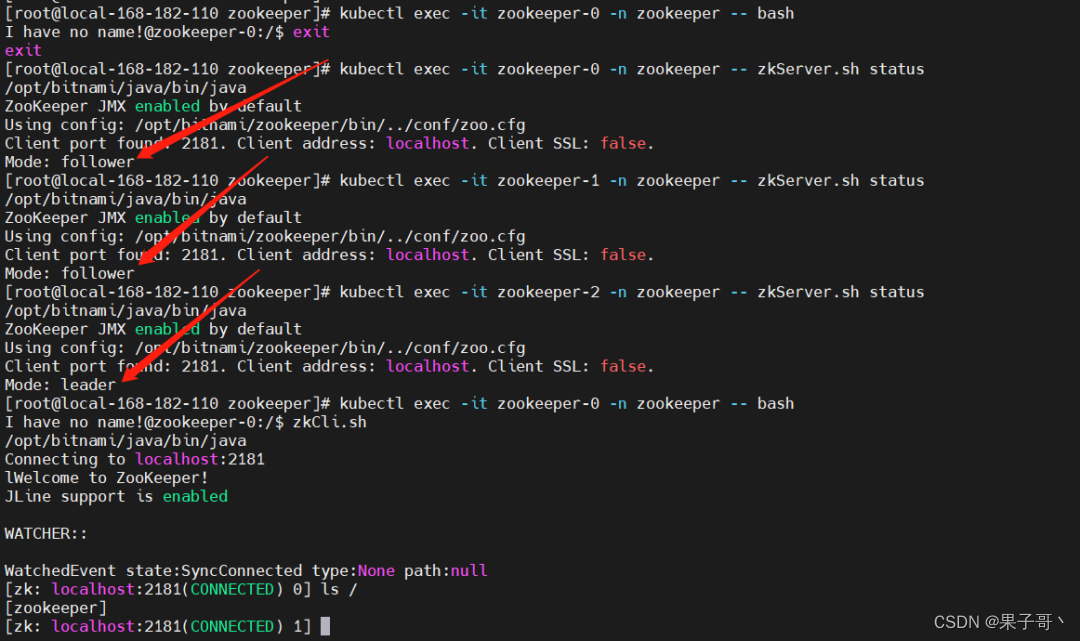

4) Test and verify

# Check the status of each ZooKeeper pod, then open a shell in zookeeper-0

kubectl exec -it zookeeper-0 -n zookeeper -- zkServer.sh status

kubectl exec -it zookeeper-1 -n zookeeper -- zkServer.sh status

kubectl exec -it zookeeper-2 -n zookeeper -- zkServer.sh status

kubectl exec -it zookeeper-0 -n zookeeper -- bash
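`zkServer.sh status` reports each server's role on a line starting with `Mode:`. In a healthy three-node ensemble one would expect one leader and two followers. The sketch below tallies those lines. `count_modes` is a hypothetical helper, and the sample input is made up for illustration.

```shell
#!/bin/sh
# Hypothetical helper: read zkServer.sh status output on stdin and
# count how many servers report each mode.
count_modes() {
  awk '/^Mode: / { n[$2]++ }
       END { printf "leader=%d follower=%d\n", n["leader"], n["follower"] }'
}

# Made-up sample input standing in for the output of three pods.
printf 'Mode: follower\nMode: leader\nMode: follower\n' | count_modes
# prints: leader=1 follower=2

# Against the cluster, something like this could feed the helper:
#   for i in 0 1 2; do
#     kubectl exec zookeeper-$i -n zookeeper -- zkServer.sh status 2>/dev/null
#   done | count_modes
```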

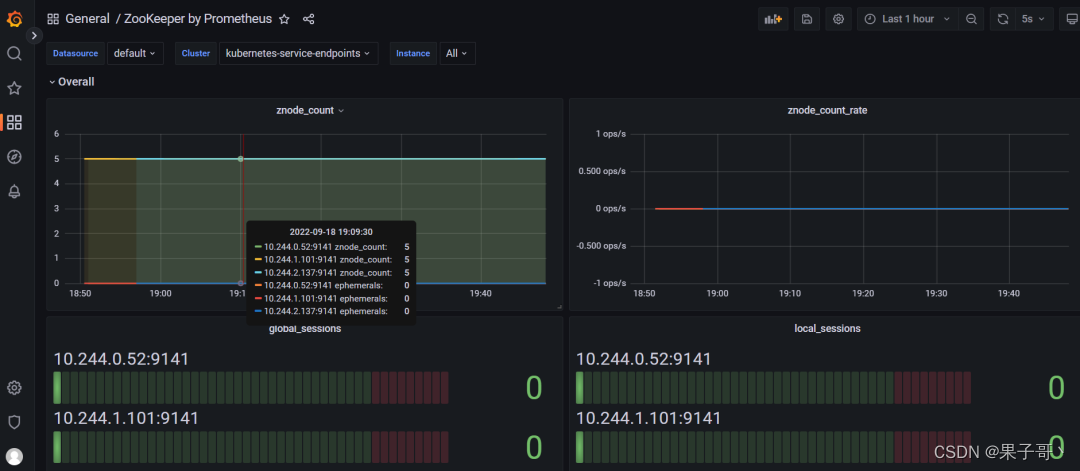

5) Prometheus monitoring

Prometheus: https://prometheus.k8s.local/targets?search=zookeeper

The scraped metrics can be viewed with the following commands:

kubectl get --raw http://10.244.0.52:9141/metrics

kubectl get --raw http://10.244.1.101:9141/metrics

kubectl get --raw http://10.244.2.137:9141/metrics

Grafana: https://grafana.k8s.local/

Account: admin. Retrieve the password with the following command:

kubectl get secret --namespace grafana grafana -o jsonpath="{.data.admin-password}" | base64 --decode ; echo

Import the Grafana dashboard template for cluster resource monitoring: 10465

Official dashboard downloads: https://grafana.com/grafana/dashboards/

6) Uninstall

helm uninstall zookeeper -n zookeeper

kubectl delete pod -n zookeeper `kubectl get pod -n zookeeper|awk 'NR>1{print $1}'` --force

kubectl patch ns zookeeper -p '{"metadata":{"finalizers":null}}'

kubectl delete ns zookeeper --force

III. Kafka on k8s Deployment

1) Add the Helm repository

Chart page: https://artifacthub.io/packages/helm/bitnami/kafka

helm repo add bitnami https://charts.bitnami.com/bitnami

helm pull bitnami/kafka

tar -xf kafka-18.4.2.tgz

2) Modify the configuration

Edit kafka/values.yaml:

image:
  registry: myharbor.com
  repository: bigdata/kafka
  tag: 3.2.1-debian-11-r16
...
replicaCount: 3
...
service:
  type: NodePort
  nodePorts:
    client: "30092"
    external: "30094"
...
externalAccess:
  enabled: true
  service:
    type: NodePort
    nodePorts:
      - 30001
      - 30002
      - 30003
    useHostIPs: true
...
persistence:
  storageClass: "kafka-local-storage"
  size: "10Gi"
  # These directories must be created on the hosts in advance
  local:
    - name: kafka-0
      host: "local-168-182-110"
      path: "/opt/bigdata/servers/kafka/data/data1"
    - name: kafka-1
      host: "local-168-182-111"
      path: "/opt/bigdata/servers/kafka/data/data1"
    - name: kafka-2
      host: "local-168-182-112"
      path: "/opt/bigdata/servers/kafka/data/data1"
...
metrics:
  kafka:
    enabled: true
    image:
      registry: myharbor.com
      repository: bigdata/kafka-exporter
      tag: 1.6.0-debian-11-r8
  jmx:
    enabled: true
    image:
      registry: myharbor.com
      repository: bigdata/jmx-exporter
      tag: 0.17.1-debian-11-r1
    annotations:
      prometheus.io/path: "/metrics"
...
zookeeper:
  enabled: false
...
externalZookeeper:
  servers:
    - zookeeper-0.zookeeper-headless.zookeeper
    - zookeeper-1.zookeeper-headless.zookeeper
    - zookeeper-2.zookeeper-headless.zookeeper
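The `externalZookeeper.servers` entries follow the StatefulSet pod DNS pattern `<pod>.<headless-service>.<namespace>`. As a small sketch, the list can be derived from the replica count instead of being typed by hand. `zk_servers` is a hypothetical helper, and the service and namespace names are the ones used in the ZooKeeper deployment above.

```shell
#!/bin/sh
# Hypothetical helper: build a comma-separated list of ZooKeeper pod DNS names.
# Assumes the release, headless service, and namespace names used earlier.
zk_servers() {
  replicas="$1"
  i=0
  out=""
  while [ "$i" -lt "$replicas" ]; do
    # ${out:+$out,} adds a comma separator only once the list is non-empty.
    out="${out:+$out,}zookeeper-$i.zookeeper-headless.zookeeper"
    i=$((i + 1))
  done
  echo "$out"
}

zk_servers 3
```

If the ZooKeeper replica count changes, the list in values.yaml has to be updated to match.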

Add kafka/templates/pv.yaml:

{{- range .Values.persistence.local }}
---
apiVersion: v1
kind: PersistentVolume
metadata:
  name: {{ .name }}
  labels:
    name: {{ .name }}
spec:
  storageClassName: {{ $.Values.persistence.storageClass }}
  capacity:
    storage: {{ $.Values.persistence.size }}
  accessModes:
    - ReadWriteOnce
  local:
    path: {{ .path }}
  nodeAffinity:
    required:
      nodeSelectorTerms:
        - matchExpressions:
            - key: kubernetes.io/hostname
              operator: In
              values:
                - {{ .host }}
---
{{- end }}

Add kafka/templates/storage-class.yaml:

kind: StorageClass
apiVersion: storage.k8s.io/v1
metadata:
  name: {{ .Values.persistence.storageClass }}
provisioner: kubernetes.io/no-provisioner

3) Install

# Prepare the images first

docker pull docker.io/bitnami/kafka:3.2.1-debian-11-r16

docker tag docker.io/bitnami/kafka:3.2.1-debian-11-r16 myharbor.com/bigdata/kafka:3.2.1-debian-11-r16

docker push myharbor.com/bigdata/kafka:3.2.1-debian-11-r16

# kafka-exporter

docker pull docker.io/bitnami/kafka-exporter:1.6.0-debian-11-r8

docker tag docker.io/bitnami/kafka-exporter:1.6.0-debian-11-r8 myharbor.com/bigdata/kafka-exporter:1.6.0-debian-11-r8

docker push myharbor.com/bigdata/kafka-exporter:1.6.0-debian-11-r8

# JMX exporter

docker pull docker.io/bitnami/jmx-exporter:0.17.1-debian-11-r1

docker tag docker.io/bitnami/jmx-exporter:0.17.1-debian-11-r1 myharbor.com/bigdata/jmx-exporter:0.17.1-debian-11-r1

docker push myharbor.com/bigdata/jmx-exporter:0.17.1-debian-11-r1

# Install

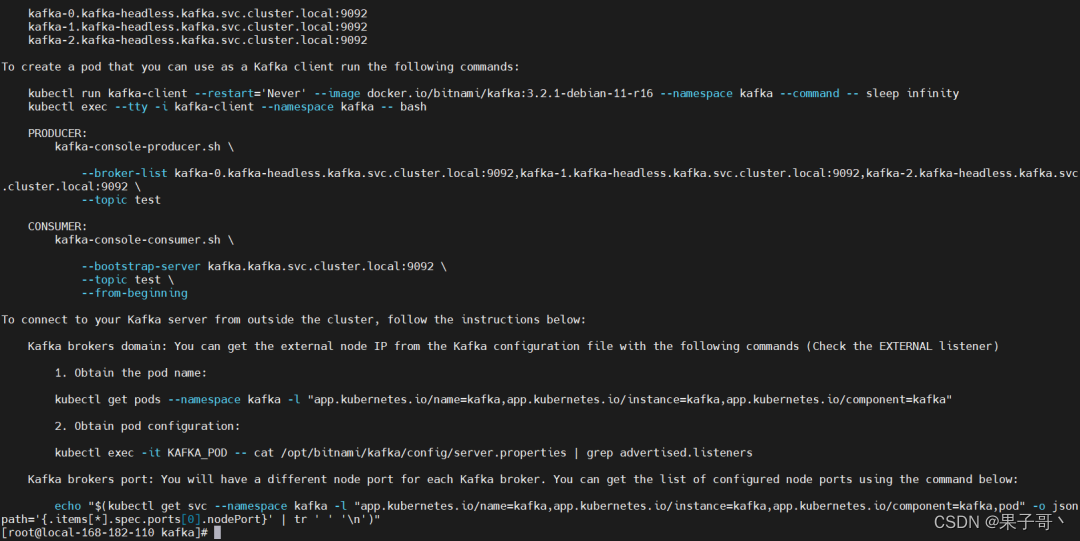

helm install kafka ./kafka -n kafka --create-namespace

Check the pod status:

kubectl get pods,svc -n kafka -o wide

4) Test and verify

# Log in to a Kafka pod

kubectl exec -it kafka-0 -n kafka -- bash

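1. Create a topic (one replica, one partition)

--create: create a topic

--topic: name of the new topic

--bootstrap-server: Kafka connection address

--config: sets a topic-level configuration value for this topic; for the list of parameters, see "Topic-level configuration" in the Kafka documentation

--partitions: number of partitions for the topic; defaults to 1

--replication-factor: replication factor for each partition; defaults to 1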

kafka-topics.sh --create --topic test001 --bootstrap-server kafka.kafka:9092 --partitions 1 --replication-factor 1

# Describe the topic

kafka-topics.sh --describe --bootstrap-server kafka.kafka:9092 --topic test001
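The flags described above can be combined for a topic that spreads across all three brokers. The sketch below only assembles the command line. `create_topic_cmd` is a hypothetical helper, `test002` is a made-up topic name, and `retention.ms=86400000` (one day) is just an example of a topic-level setting passed through `--config`.

```shell
#!/bin/sh
# Hypothetical helper: assemble a kafka-topics.sh --create command line.
# Usage: create_topic_cmd <topic> <partitions> <replication-factor> [key=value ...]
BOOTSTRAP="kafka.kafka:9092"

create_topic_cmd() {
  topic="$1"
  partitions="$2"
  rf="$3"
  shift 3
  cmd="kafka-topics.sh --create --topic $topic --bootstrap-server $BOOTSTRAP"
  cmd="$cmd --partitions $partitions --replication-factor $rf"
  # Each remaining argument becomes one --config key=value pair.
  for kv in "$@"; do
    cmd="$cmd --config $kv"
  done
  echo "$cmd"
}

create_topic_cmd test002 3 3 retention.ms=86400000
```

The replication factor cannot exceed the number of brokers, so 3 is the upper bound for this deployment.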

2. List topics

kafka-topics.sh --list --bootstrap-server kafka.kafka:9092

3. Producer/consumer test

[Producer]

kafka-console-producer.sh --bootstrap-server kafka.kafka:9092 --topic test001

{"id":"1","name":"n1","age":"20"}
{"id":"2","name":"n2","age":"21"}
{"id":"3","name":"n3","age":"22"}
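The console producer reads one message per line from standard input, so test messages can be generated rather than typed. A minimal sketch follows. `gen_messages` is a hypothetical helper that reproduces the shape of the sample records above.

```shell
#!/bin/sh
# Hypothetical helper: emit N one-line JSON test messages in the
# same shape as the sample records (id, name, age as strings).
gen_messages() {
  count="$1"
  i=1
  while [ "$i" -le "$count" ]; do
    printf '{"id":"%d","name":"n%d","age":"%d"}\n' "$i" "$i" "$((19 + i))"
    i=$((i + 1))
  done
}

gen_messages 3

# Inside the Kafka pod, the output could be piped to the producer:
#   gen_messages 100 | kafka-console-producer.sh \
#     --bootstrap-server kafka.kafka:9092 --topic test001
```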

[Consumer]

# Consume from the beginning

kafka-console-consumer.sh --bootstrap-server kafka.kafka:9092 --topic test001 --from-beginning

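# Start consuming from a specific offset in a partition. Only one partition is specified here; repeat the command for other partitions or loop over all of them.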

kafka-console-consumer.sh --bootstrap-server kafka.kafka:9092 --topic test001 --partition 0 --offset 100 --group test001

4. Check consumer lag

kafka-consumer-groups.sh --bootstrap-server kafka.kafka:9092 --describe --group test001
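The `--describe` output lists one row per partition with a `LAG` column. To get a single backlog figure for the group, the column can be summed. This is a sketch only: `sum_lag` is a hypothetical helper, and the sample table is made up for illustration. The helper finds the column by its header name rather than by position.

```shell
#!/bin/sh
# Hypothetical helper: sum the LAG column of kafka-consumer-groups.sh
# --describe output read from stdin.
sum_lag() {
  awk '
    # Until the header row is seen, look for the LAG column index.
    col == 0 { for (i = 1; i <= NF; i++) if ($i == "LAG") col = i; next }
    # Add numeric lag values; "-" (unknown) is skipped.
    $col ~ /^[0-9]+$/ { total += $col }
    END { print total + 0 }
  '
}

# Made-up sample rows standing in for real output.
printf '%s\n' \
  'GROUP   TOPIC   PARTITION CURRENT-OFFSET LOG-END-OFFSET LAG CONSUMER-ID HOST CLIENT-ID' \
  'test001 test001 0         100            103            3   -           -    -' \
  'test001 test001 1         40             45             5   -           -    -' | sum_lag
# prints: 8

# Inside the Kafka pod:
#   kafka-consumer-groups.sh --bootstrap-server kafka.kafka:9092 \
#     --describe --group test001 | sum_lag
```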

5. Delete the topic

kafka-topics.sh --delete --topic test001 --bootstrap-server kafka.kafka:9092

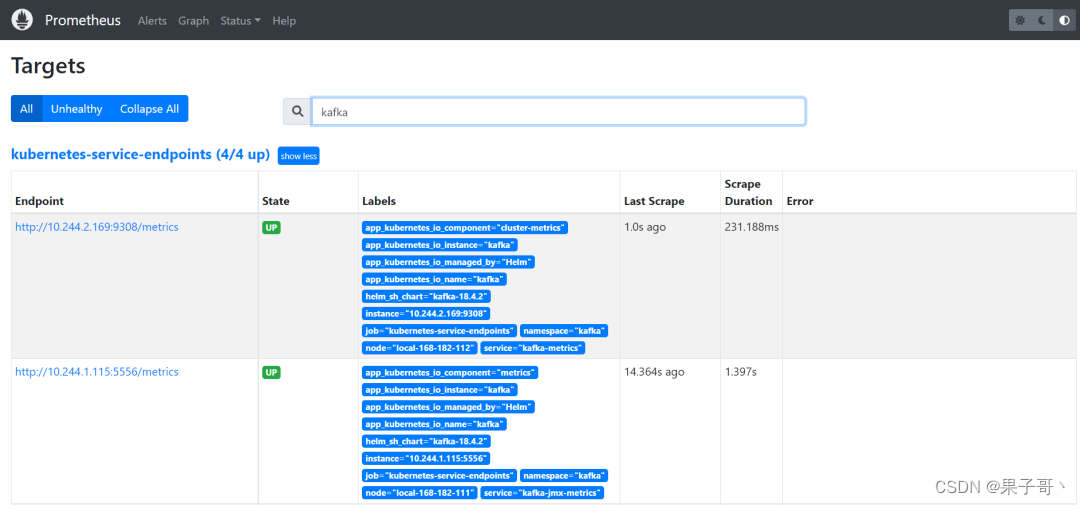

5) Prometheus monitoring

Prometheus: https://prometheus.k8s.local/targets?search=kafka

The scraped metrics can be viewed with the following command:

kubectl get --raw http://10.244.2.165:9308/metrics

Grafana: https://grafana.k8s.local/

Account: admin. Retrieve the password with the following command:

kubectl get secret --namespace grafana grafana -o jsonpath="{.data.admin-password}" | base64 --decode ; echo

Import the Grafana dashboard template for cluster resource monitoring: 11962

Official dashboard downloads: https://grafana.com/grafana/dashboards/

6) Uninstall

helm uninstall kafka -n kafka

kubectl delete pod -n kafka `kubectl get pod -n kafka|awk 'NR>1{print $1}'` --force

kubectl patch ns kafka -p '{"metadata":{"finalizers":null}}'

kubectl delete ns kafka --force

ZooKeeper + Kafka on k8s environment deployment

Copyright belongs to the original author, 果子哥丶. If there is any infringement, please contact us for removal.