Grafana

Introduction

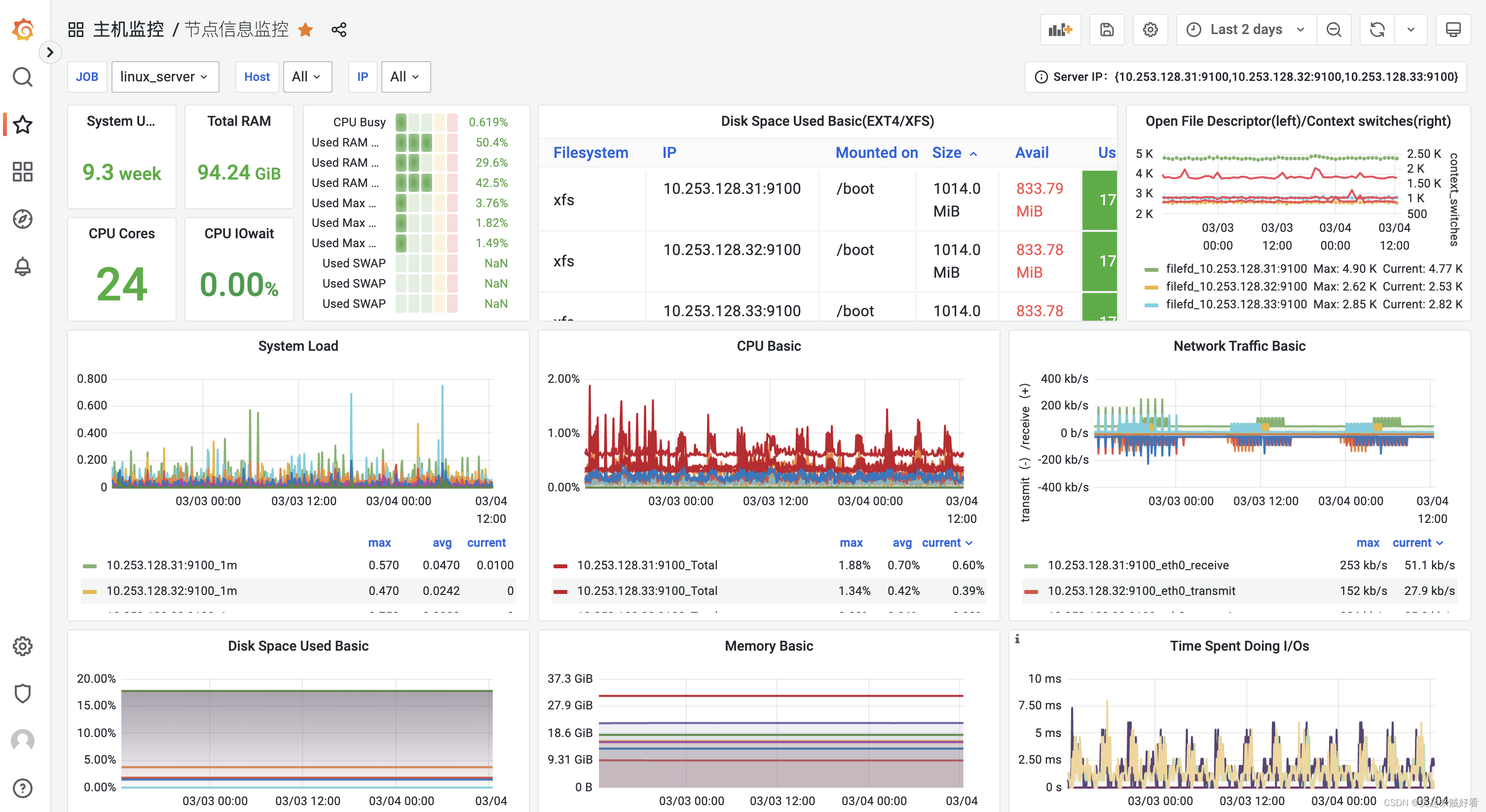

Grafana is an open-source data visualization tool that turns data into charts and dashboards (as in the figure above) for monitoring and statistics.

Download the package

wget https://dl.grafana.com/enterprise/release/grafana-enterprise-9.1.6.linux-amd64.tar.gz

Installation and deployment

Extract

tar -xvzf grafana-enterprise-9.1.6.linux-amd64.tar.gz

mv grafana-9.1.6 /data/apps/

cd /data/apps/grafana-9.1.6/conf

cp sample.ini grafana.ini

Edit the configuration file

vim grafana.ini

[paths]
# Path to where grafana can store temp files, sessions, and the sqlite3 db (if that is used)
;data = /var/lib/grafana
data = /data/apps/grafana-9.1.6/data
logs = /data/apps/grafana-9.1.6/logs
plugins = /data/apps/grafana-9.1.6/plugins

[log]
mode = file
level = warn
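Before starting the service it can help to confirm that the edited values are the ones Grafana will read. The following is a minimal sketch, not part of Grafana: `ini_get` is a hypothetical helper name, and it assumes plain `key = value` lines, skipping lines that start with `;` or `#`.

```shell
# ini_get FILE SECTION KEY: print the value of KEY inside [SECTION].
# Hypothetical helper for illustration; handles only plain "key = value" lines.
ini_get() {
  awk -v section="$2" -v key="$3" '
    /^[ \t]*[;#]/ { next }                  # skip comment lines
    /^[ \t]*\[/ {                           # section header such as [paths]
      gsub(/^[ \t]*\[|\][ \t]*$/, "")
      current = $0
      next
    }
    current == section {
      pos = index($0, "=")
      if (pos == 0) next
      k = substr($0, 1, pos - 1)
      v = substr($0, pos + 1)
      gsub(/^[ \t]+|[ \t]+$/, "", k)        # trim the key
      gsub(/^[ \t]+|[ \t]+$/, "", v)        # trim the value
      if (k == key) { print v; exit }
    }
  ' "$1"
}
```

With the file from this article, `ini_get /data/apps/grafana-9.1.6/conf/grafana.ini paths data` should print the data directory configured above.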

Create the user

groupadd -g 9100 monitor

useradd -g 9100 -u 9100 -s /sbin/nologin -M monitor

cd /data/apps/grafana-9.1.6 && mkdir -p data logs plugins

chown -R monitor:monitor /data/apps/grafana-9.1.6

Create the systemd service

vim /usr/lib/systemd/system/grafana.service

[Unit]
Description=grafana service
After=network.target

[Service]
User=monitor
Group=monitor
KillMode=control-group
Restart=on-failure
RestartSec=60
ExecStart=/data/apps/grafana-9.1.6/bin/grafana-server -config /data/apps/grafana-9.1.6/conf/grafana.ini -pidfile /data/apps/grafana-9.1.6/grafana.pid -homepath /data/apps/grafana-9.1.6

[Install]
WantedBy=multi-user.target

Start Grafana

systemctl daemon-reload

systemctl restart grafana.service

systemctl enable grafana.service

systemctl status grafana
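`systemctl status` only shows that the process is running. To check that the HTTP server actually answers, one option is to poll Grafana's `/api/health` endpoint. This is a minimal sketch: the function name is hypothetical, and the default URL assumes Grafana's default `http_port` of 3000 was left unchanged in grafana.ini.

```shell
# wait_for_grafana [BASE_URL] [TRIES] [DELAY_SECONDS]
# Polls <BASE_URL>/api/health until curl gets a successful response.
# Assumption: Grafana listens on its default port 3000 unless a URL is given.
wait_for_grafana() {
  wfg_url="${1:-http://localhost:3000}"
  wfg_tries="${2:-10}"
  wfg_delay="${3:-3}"
  wfg_i=1
  while [ "$wfg_i" -le "$wfg_tries" ]; do
    # -f: treat HTTP errors as failures, -s: no progress output
    if curl -fs --max-time 5 "${wfg_url}/api/health" >/dev/null 2>&1; then
      echo "grafana is healthy"
      return 0
    fi
    sleep "$wfg_delay"
    wfg_i=$((wfg_i + 1))
  done
  echo "grafana did not answer after ${wfg_tries} attempts" >&2
  return 1
}
```

Calling `wait_for_grafana` right after `systemctl restart grafana.service` gives the service up to about 30 seconds to come up with the default arguments.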

Spark application monitoring with Graphite_exporter

Configure the mapping file

cd /usr/local/graphite_exporter

vim graphite_exporter_mapping

mappings:
- match: '*.*.executor.filesystem.*.*'
  name: spark_app_filesystem_usage
  labels:
    application: $1
    executor_id: $2
    fs_type: $3
    qty: $4
- match: '*.*.jvm.*.*'
  name: spark_app_jvm_memory_usage
  labels:
    application: $1
    executor_id: $2
    mem_type: $3
    qty: $4
- match: '*.*.executor.jvmGCTime.count'
  name: spark_app_jvm_gcTime_count
  labels:
    application: $1
    executor_id: $2
- match: '*.*.jvm.pools.*.*'
  name: spark_app_jvm_memory_pools
  labels:
    application: $1
    executor_id: $2
    mem_type: $3
    qty: $4
- match: '*.*.executor.threadpool.*'
  name: spark_app_executor_tasks
  labels:
    application: $1
    executor_id: $2
    qty: $3
- match: '*.*.BlockManager.*.*'
  name: spark_app_block_manager
  labels:
    application: $1
    executor_id: $2
    type: $3
    qty: $4
- match: '*.*.DAGScheduler.*.*'
  name: spark_app_dag_scheduler
  labels:
    application: $1
    executor_id: $2
    type: $3
    qty: $4
- match: '*.*.CodeGenerator.*.*'
  name: spark_app_code_generator
  labels:
    application: $1
    executor_id: $2
    type: $3
    qty: $4
- match: '*.*.HiveExternalCatalog.*.*'
  name: spark_app_hive_external_catalog
  labels:
    application: $1
    executor_id: $2
    type: $3
    qty: $4
- match: '*.*.*.StreamingMetrics.*.*'
  name: spark_app_streaming_metrics
  labels:
    application: $1
    executor_id: $2
    app_name: $3
    type: $4
    qty: $5
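Each `*` in a `match` pattern captures one dot-separated component of the Graphite metric name, and `$1`, `$2`, ... refer to those captures in order. The sketch below imitates only the first rule (`*.*.executor.filesystem.*.*`) to make that visible. It is an illustration, not how graphite_exporter is implemented; the function name and the sample metric name are made up.

```shell
# map_filesystem_metric NAME
# Imitates the rule '*.*.executor.filesystem.*.*' for illustration only;
# the real matching is done inside graphite_exporter.
map_filesystem_metric() {
  mfm_old_ifs=$IFS
  IFS=.
  set -- $1            # split the dotted name into positional parameters
  IFS=$mfm_old_ifs
  # The glob has six components; the third and fourth are fixed words.
  if [ "$#" -ne 6 ] || [ "$3" != "executor" ] || [ "$4" != "filesystem" ]; then
    return 1           # the pattern does not match this name
  fi
  printf 'spark_app_filesystem_usage{application="%s",executor_id="%s",fs_type="%s",qty="%s"}\n' \
    "$1" "$2" "$5" "$6"
}
```

For a hypothetical name such as `app-1.2.executor.filesystem.hdfs.read_bytes`, the four wildcard positions become the `application`, `executor_id`, `fs_type` and `qty` labels, while a name with a different shape (for example `app-1.2.jvm.heap.used`) is left for the other rules.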

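Edit Spark's metrics.properties so that Spark pushes its metrics to Graphite_exporter. The sink port must match the address on which graphite_exporter accepts Graphite samples (its --graphite.listen-address flag, :9109 by default; :9108 is the default for the exporter's own web endpoint).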

*.sink.graphite.class=org.apache.spark.metrics.sink.GraphiteSink

*.sink.graphite.host=10.253.128.31

*.sink.graphite.port=9108

*.sink.graphite.period=10

*.sink.graphite.unit=seconds

#*.sink.graphite.prefix=<optional_value>
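The Graphite sink sends samples in Graphite's plaintext format, one `<path> <value> <timestamp>` line per sample. To test the exporter and the mapping file without waiting for a Spark job, a hand-made line can be sent. This is a small sketch; `graphite_line` is a hypothetical helper, and the metric name in the usage note is invented for the test.

```shell
# graphite_line NAME VALUE [TIMESTAMP]
# Prints one sample in Graphite plaintext format: "<path> <value> <timestamp>".
# The timestamp defaults to the current Unix time in seconds.
graphite_line() {
  printf '%s %s %s\n' "$1" "$2" "${3:-$(date +%s)}"
}
```

For example, `graphite_line test-app.1.executor.threadpool.activeTasks 3 | nc -w 1 10.253.128.31 9108` would send one sample that the `*.*.executor.threadpool.*` rule should expose as `spark_app_executor_tasks`. This assumes a netcat binary is installed and that the host and port are the ones configured above.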

HDFS monitoring

namenode.yaml

---
startDelaySeconds: 0
# The host part is this machine's IP (localhost usually works); 1234 is the JMX port you want to use
hostPort: localhost:1234
#jmxUrl: service:jmx:rmi:///jndi/rmi://127.0.0.1:1234/jmxrmi
ssl: false
lowercaseOutputName: false
lowercaseOutputLabelNames: false

datanode.yaml

---
startDelaySeconds: 0
# The host part is this machine's IP (localhost usually works); 1244 is the JMX port you want to use (any unused port works)
hostPort: localhost:1244
#jmxUrl: service:jmx:rmi:///jndi/rmi://127.0.0.1:1234/jmxrmi
ssl: false
lowercaseOutputName: false
lowercaseOutputLabelNames: false

Configure hadoop-env.sh

export HADOOP_NAMENODE_JMX_OPTS="-Dcom.sun.management.jmxremote.authenticate=false -Dcom.sun.management.jmxremote.ssl=false -Dcom.sun.management.jmxremote.local.only=false -Dcom.sun.management.jmxremote.port=1234 -javaagent:/jmx_prometheus_javaagent-0.8.jar=9211:/namenode.yaml"

export HADOOP_DATANODE_JMX_OPTS="-Dcom.sun.management.jmxremote.authenticate=false -Dcom.sun.management.jmxremote.ssl=false -Dcom.sun.management.jmxremote.local.only=false -Dcom.sun.management.jmxremote.port=1244 -javaagent:/jmx_prometheus_javaagent-0.8.jar=9212:/datanode.yaml"
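In `-javaagent:<jar>=<port>:<config>`, the number before the colon is the HTTP port on which the agent serves metrics, so the NameNode should answer on 9211 and the DataNode on 9212 once they have been restarted with these options. A quick way to see whether an endpoint returns real data is to count its sample lines. This is a sketch with a hypothetical helper name, not part of jmx_exporter.

```shell
# count_samples: read Prometheus text exposition from stdin and print the
# number of sample lines (lines that are neither blank nor "#" comments).
count_samples() {
  grep -c -v -e '^#' -e '^[[:space:]]*$'
}
```

For example, `curl -s http://localhost:9211/metrics | count_samples` should print a number greater than zero on the NameNode host; zero or a connection error suggests that the agent was not loaded.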

YARN monitoring

yarn.yaml

---
startDelaySeconds: 0
# The host part is this machine's IP (localhost usually works); 2111 is the JMX port you want to use
hostPort: localhost:2111
#jmxUrl: service:jmx:rmi:///jndi/rmi://127.0.0.1:1234/jmxrmi
ssl: false
lowercaseOutputName: false
lowercaseOutputLabelNames: false

Configure yarn-env.sh

export YARN_JMX_OPTS="-Dcom.sun.management.jmxremote.authenticate=false -Dcom.sun.management.jmxremote.ssl=false -Dcom.sun.management.jmxremote.local.only=false -Dcom.sun.management.jmxremote.port=2111 -javaagent:/jmx_prometheus_javaagent-0.8.jar=9323:/yarn.yaml"

HBase monitoring

master.yaml

---
startDelaySeconds: 0
# Replace IP with this machine's IP (localhost usually works); 1254 is the JMX port you want to use (any unused port works)
hostPort: IP:1254
#jmxUrl: service:jmx:rmi:///jndi/rmi://127.0.0.1:1234/jmxrmi
ssl: false
lowercaseOutputName: false
lowercaseOutputLabelNames: false

regionserver.yaml

---
startDelaySeconds: 0
# Replace IP with this machine's IP (localhost usually works); 1255 is the JMX port you want to use
hostPort: IP:1255
#jmxUrl: service:jmx:rmi:///jndi/rmi://127.0.0.1:1234/jmxrmi
ssl: false
lowercaseOutputName: false
lowercaseOutputLabelNames: false

Configure hbase-env.sh

#======================================= prometheus jmx export start ===================================

HBASE_M_JMX_OPTS="-Dcom.sun.management.jmxremote.authenticate=false -Dcom.sun.management.jmxremote.ssl=false -Dcom.sun.management.jmxremote.local.only=false -Dcom.sun.management.jmxremote.port=1254 -javaagent:/jmx_prometheus_javaagent-0.8.jar=9523:/master.yaml"

HBASE_JMX_OPTS="-Dcom.sun.management.jmxremote.authenticate=false -Dcom.sun.management.jmxremote.ssl=false -Dcom.sun.management.jmxremote.local.only=false -Dcom.sun.management.jmxremote.port=1255 -javaagent:/jmx_prometheus_javaagent-0.8.jar=9522:/regionserver.yaml"

#======================================= prometheus jmx export end ===================================
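Once the Hadoop, YARN and HBase daemons have been restarted, every javaagent port used in this article should serve a `/metrics` page. The sketch below loops over those ports for one host. The function names are hypothetical, and it assumes all daemons run on the host you pass in, which is often not the case in a real cluster, so adjust the port list per host as needed.

```shell
# Ports taken from the -javaagent options in this article:
# NameNode, DataNode, YARN, HBase Master, HBase RegionServer.
EXPORTER_PORTS="9211 9212 9323 9523 9522"

# exporter_urls HOST: print one /metrics URL per javaagent port.
exporter_urls() {
  for eu_port in $EXPORTER_PORTS; do
    printf 'http://%s:%s/metrics\n' "$1" "$eu_port"
  done
}

# check_exporters HOST: report which endpoints answer over HTTP.
check_exporters() {
  exporter_urls "$1" | while read -r ce_url; do
    if curl -fs --max-time 5 "$ce_url" >/dev/null 2>&1; then
      echo "OK   $ce_url"
    else
      echo "FAIL $ce_url"
    fi
  done
}
```

Running `check_exporters localhost` on a node prints one OK or FAIL line per port; the URLs reported as OK are the ones to add as Prometheus scrape targets.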

Copyright belongs to the original author, 笑起来贼好看. If any content infringes your rights, please contact us to have it removed.