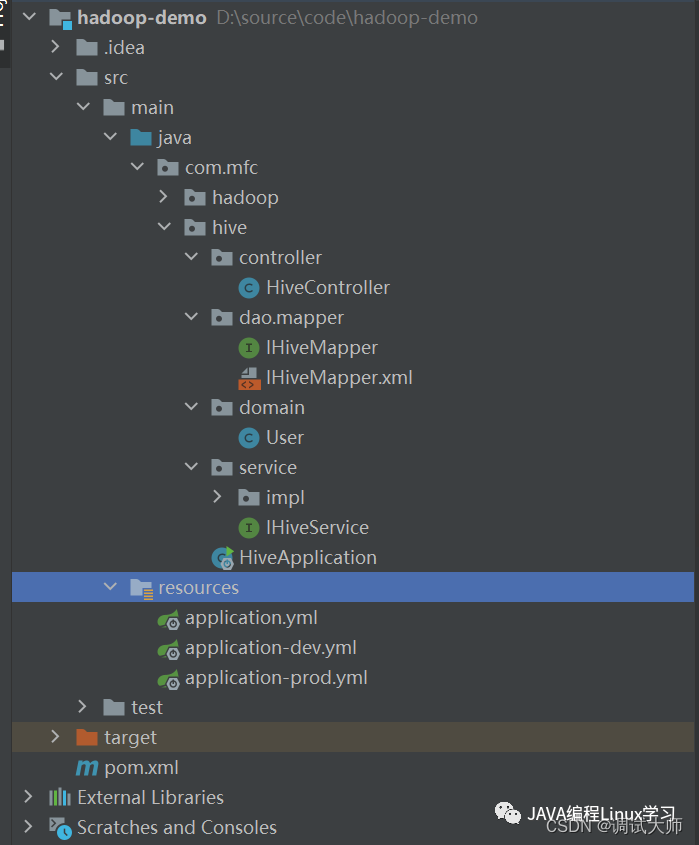

项目结构如下

mysql 执行如下建表语句,并插入一条测试数据

1

2

3

4

5

CREATE

TABLE

`

user

` (

`id`

int

(11)

NOT

NULL

,

`

name

`

varchar

(255)

COLLATE

utf8mb4_general_ci

DEFAULT

NULL

,

PRIMARY

KEY

(`id`)

) ENGINE=InnoDB

DEFAULT

CHARSET=utf8mb4

COLLATE

=utf8mb4_general_ci;

使用hive客户端执行如下建表语句,并插入一条测试数据

1

create

table

`

user

` (`id`

int

, `

name

` string);

1. 引入依赖 pom.xml如下

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

25

26

27

28

29

30

31

32

33

34

35

36

37

38

39

40

41

42

43

44

45

46

47

48

49

50

51

52

53

54

55

56

57

58

59

60

61

62

63

64

65

66

67

68

69

70

71

72

73

74

75

76

77

78

79

80

81

82

83

84

85

86

87

88

89

90

91

92

93

94

95

96

97

98

99

100

101

102

103

104

105

106

107

108

109

110

111

112

113

114

115

116

117

118

119

120

121

122

123

124

125

126

127

128

129

130

131

132

133

134

135

136

137

138

139

140

141

142

143

144

145

146

147

148

149

150

151

152

153

154

155

156

157

<?

xml

version

=

"1.0"

encoding

=

"UTF-8"

?>

<

project

xmlns

=

"http://maven.apache.org/POM/4.0.0"

xmlns:xsi

=

"http://www.w3.org/2001/XMLSchema-instance"

xsi:schemaLocation

=

"http://maven.apache.org/POM/4.0.0 http://maven.apache.org/xsd/maven-4.0.0.xsd"

>

<

modelVersion

>4.0.0</

modelVersion

>

<

groupId

>org.example</

groupId

>

<

artifactId

>hadoop-demo</

artifactId

>

<

version

>1.0-SNAPSHOT</

version

>

<

properties

>

<

project.build.sourceEncoding

>UTF-8</

project.build.sourceEncoding

>

<

project.reporting.outputEncoding

>UTF-8</

project.reporting.outputEncoding

>

<

mybatis-spring-boot

>1.2.0</

mybatis-spring-boot

>

<

mysql-connector

>8.0.11</

mysql-connector

>

<

activiti.version

>5.22.0</

activiti.version

>

</

properties

>

<

parent

>

<

groupId

>org.springframework.boot</

groupId

>

<

artifactId

>spring-boot-starter-parent</

artifactId

>

<

version

>2.7.7</

version

>

</

parent

>

<

dependencies

>

<!--springBoot相关-->

<

dependency

>

<

groupId

>org.springframework.boot</

groupId

>

<

artifactId

>spring-boot-starter-web</

artifactId

>

</

dependency

>

<!-- https://mvnrepository.com/artifact/com.baomidou/mybatis-plus-boot-starter -->

<!-- https://mvnrepository.com/artifact/com.baomidou/dynamic-datasource-spring-boot-starter -->

<

dependency

>

<

groupId

>com.baomidou</

groupId

>

<

artifactId

>dynamic-datasource-spring-boot-starter</

artifactId

>

<

version

>3.6.1</

version

>

</

dependency

>

<!-- https://mvnrepository.com/artifact/com.baomidou/mybatis-plus-boot-starter -->

<

dependency

>

<

groupId

>com.baomidou</

groupId

>

<

artifactId

>mybatis-plus-boot-starter</

artifactId

>

<

version

>3.5.3.1</

version

>

</

dependency

>

<!--mybatis相关-->

<!-- <dependency>

<groupId>org.mybatis.spring.boot</groupId>

<artifactId>mybatis-spring-boot-starter</artifactId>

<version>${mybatis-spring-boot}</version>

</dependency>-->

<!--mysql驱动相关-->

<

dependency

>

<

groupId

>mysql</

groupId

>

<

artifactId

>mysql-connector-java</

artifactId

>

<

version

>${mysql-connector}</

version

>

</

dependency

>

<!--druid连接池相关-->

<

dependency

>

<

groupId

>com.alibaba</

groupId

>

<

artifactId

>druid</

artifactId

>

<

version

>1.1.12</

version

>

</

dependency

>

<!-- 添加hadoop依赖begin -->

<!-- https://mvnrepository.com/artifact/org.apache.hadoop/hadoop-common -->

<

dependency

>

<

groupId

>org.apache.hadoop</

groupId

>

<

artifactId

>hadoop-common</

artifactId

>

<

version

>3.3.4</

version

>

</

dependency

>

<!-- https://mvnrepository.com/artifact/org.apache.hadoop/hadoop-hdfs -->

<

dependency

>

<

groupId

>org.apache.hadoop</

groupId

>

<

artifactId

>hadoop-hdfs</

artifactId

>

<

version

>3.3.4</

version

>

</

dependency

>

<!-- https://mvnrepository.com/artifact/org.apache.hadoop/hadoop-client -->

<

dependency

>

<

groupId

>org.apache.hadoop</

groupId

>

<

artifactId

>hadoop-client</

artifactId

>

<

version

>3.3.4</

version

>

</

dependency

>

<!--添加hadoop依赖end -->

<!-- 添加hive依赖begin -->

<

dependency

>

<

groupId

>org.apache.hive</

groupId

>

<

artifactId

>hive-jdbc</

artifactId

>

<

version

>3.1.3</

version

>

<

exclusions

>

<

exclusion

>

<

groupId

>org.eclipse.jetty.aggregate</

groupId

>

<

artifactId

>*</

artifactId

>

</

exclusion

>

<

exclusion

>

<

groupId

>org.eclipse.jetty</

groupId

>

<

artifactId

>jetty-runner</

artifactId

>

</

exclusion

>

</

exclusions

>

</

dependency

>

<!-- 添加hive依赖end -->

<!-- https://mvnrepository.com/artifact/org.projectlombok/lombok -->

<

dependency

>

<

groupId

>org.projectlombok</

groupId

>

<

artifactId

>lombok</

artifactId

>

<

version

>1.18.24</

version

>

<

scope

>provided</

scope

>

</

dependency

>

<

dependency

>

<

groupId

>junit</

groupId

>

<

artifactId

>junit</

artifactId

>

<

version

>4.12</

version

>

<

scope

>test</

scope

>

</

dependency

>

</

dependencies

>

<

build

>

<

finalName

>community</

finalName

>

<

resources

>

<

resource

>

<

directory

>src/main/java</

directory

>

<

includes

>

<

include

>**/*.properties</

include

>

<

include

>**/*.xml</

include

>

</

includes

>

<

filtering

>false</

filtering

>

</

resource

>

<

resource

>

<

directory

>src/main/resources</

directory

>

<

includes

>

<

include

>**/*.*</

include

>

</

includes

>

<

filtering

>false</

filtering

>

</

resource

>

</

resources

>

<

plugins

>

<

plugin

>

<

groupId

>org.springframework.boot</

groupId

>

<

artifactId

>spring-boot-maven-plugin</

artifactId

>

<

configuration

>

<

mainClass

>com.mfc.hive.HiveApplication</

mainClass

>

<

layout

>ZIP</

layout

>

<

includeSystemScope

>true</

includeSystemScope

>

</

configuration

>

<

executions

>

<

execution

>

<

goals

>

<

goal

>repackage</

goal

>

</

goals

>

</

execution

>

</

executions

>

</

plugin

>

</

plugins

>

</

build

>

</

project

>

2.application-dev.yml 配置如下

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

25

26

27

28

29

30

31

32

33

34

35

36

37

server:

port: 6080

spring:

application:

name: hadoop-service

datasource:

dynamic:

primary: master #设置默认的数据源,默认值为master

strict: false #是否弃用严格模式,如果启用在味匹配到指定数据源时抛出异常

datasource:

master:

driver-class-name: com.mysql.jdbc.Driver

type: com.alibaba.druid.pool.DruidDataSource

url: jdbc:mysql://localhost:3306/hadoop?useUnicode=true&characterEncoding=utf-8&useSSL=false&allowMultiQueries=true&allowPublicKeyRetrieval=true&serverTimezone=GMT%2b8

username: root

password: xxxx

hive:

driver-class-name: org.apache.hive.jdbc.HiveDriver

type: com.alibaba.druid.pool.DruidDataSource

url: jdbc:hive2://hadoop-master:21000

username: hadoop

password:

druid:

initialSize: 5 #初始化连接大小

minIdle: 5 #最小连接池数量

maxActive: 20 #最大连接池数量

maxWait: 60000 #获取连接时最大等待时间,单位毫秒

timeBetweenEvictionRunsMillis: 60000 #配置间隔多久才进行一次检测,检测需要关闭的空闲连接,单位是毫秒

minEvictableIdleTimeMillis: 300000 #配置一个连接在池中最小生存的时间,单位是毫秒

validationQuery: SELECT 1 from DUAL #测试连接

testWhileIdle: true #申请连接的时候检测,建议配置为true,不影响性能,并且保证安全性

testOnBorrow: false #获取连接时执行检测,建议关闭,影响性能

testOnReturn: false #归还连接时执行检测,建议关闭,影响性能

poolPreparedStatements: false #是否开启PSCache,PSCache对支持游标的数据库性能提升巨大,oracle建议开启,mysql下建议关闭

maxPoolPreparedStatementPerConnectionSize: 20 #开启poolPreparedStatements后生效

filters: stat,wall,log4j #配置扩展插件,常用的插件有=>stat:监控统计 log4j:日志 wall:防御sql注入

connectionProperties: 'druid.stat.mergeSql=true;druid.stat.slowSqlMillis=5000' #通过connectProperties属性来打开mergeSql功能;慢SQL记录

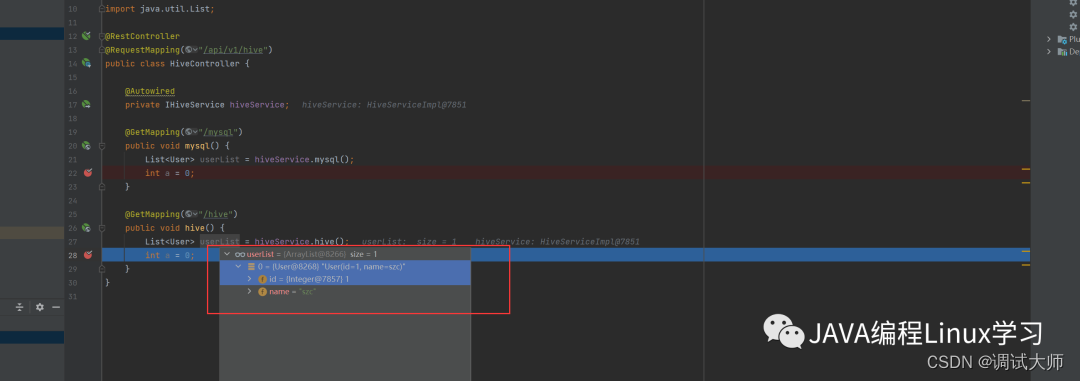

- 新增HiveController

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

25

26

27

28

29

30

package

com.mfc.hive.controller;

import

com.mfc.hive.domain.User;

import

com.mfc.hive.service.IHiveService;

import

org.springframework.beans.factory.annotation.Autowired;

import

org.springframework.web.bind.annotation.GetMapping;

import

org.springframework.web.bind.annotation.RequestMapping;

import

org.springframework.web.bind.annotation.RestController;

import

java.util.List;

@RestController

@RequestMapping

(

"/api/v1/hive"

)

public

class

HiveController {

@Autowired

private

IHiveService hiveService;

@GetMapping

(

"/mysql"

)

public

void

mysql() {

List<User> userList = hiveService.mysql();

int

a =

0

;

}

@GetMapping

(

"/hive"

)

public

void

hive() {

List<User> userList = hiveService.hive();

int

a =

0

;

}

}

4.新增service接口

1

2

3

4

5

6

7

8

9

10

11

12

package

com.mfc.hive.service;

import

com.mfc.hive.domain.User;

import

java.util.List;

public

interface

IHiveService {

List<User> mysql();

List<User> hive();

}

5.新增service实现

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

package

com.mfc.hive.service.impl;

import

com.mfc.hive.dao.mapper.IHiveMapper;

import

com.mfc.hive.domain.User;

import

com.mfc.hive.service.IHiveService;

import

org.springframework.beans.factory.annotation.Autowired;

import

org.springframework.stereotype.Service;

import

java.util.List;

@Service

public

class

HiveServiceImpl

implements

IHiveService {

@Autowired

private

IHiveMapper hiveMapper;

public

List<User> mysql() {

return

hiveMapper.mysql();

}

public

List<User> hive() {

return

hiveMapper.hive();

}

}

6.新增mapper接口

注意默认数据源为mysql,如需要使用hive数据源可以通过@Ds注解指定,如下

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

package

com.mfc.hive.dao.mapper;

import

com.baomidou.dynamic.datasource.annotation.DS;

import

com.mfc.hive.domain.User;

import

org.springframework.stereotype.Repository;

import

java.util.List;

@Repository

public

interface

IHiveMapper {

List<User> mysql();

@DS

(

"hive"

)

List<User> hive();

}

7.增加mapper配置

1

2

3

4

5

6

7

8

9

10

11

12

13

14

<!DOCTYPE mapper PUBLIC "-//mybatis.org//DTD Mapper 3.0//EN" "http://mybatis.org/dtd/mybatis-3-mapper.dtd">

<

mapper

namespace

=

"com.mfc.hive.dao.mapper.IHiveMapper"

>

<

resultMap

id

=

"userMap"

type

=

"com.mfc.hive.domain.User"

>

<

id

property

=

"id"

column

=

"id"

/>

<

result

property

=

"name"

column

=

"name"

/>

</

resultMap

>

<

select

id

=

"mysql"

parameterType

=

"String"

resultMap

=

"userMap"

>

select `id`,`name` from `user`

</

select

>

<

select

id

=

"hive"

parameterType

=

"String"

resultMap

=

"userMap"

>

select `id`,`name` from `user`

</

select

>

</

mapper

>

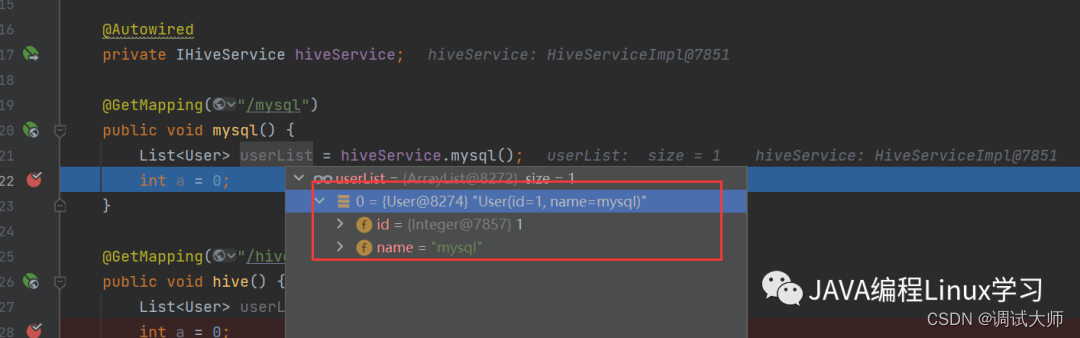

8.测试

打开postman选择get方法

8.1调用hive查询user表

输入地址localhost:6080/api/v1/hive/hive

8.2调用mysql查询user表

输入地址localhost:6080/api/v1/hive/mysql

版权归原作者 调试大师 所有, 如有侵权,请联系我们删除。