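When Scrapy sends a request, the request passes through the middleware, and the middleware gets a chance to process it.

Here is the code: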

    # Define here the models for your spider middleware
    #
    # See documentation in:
    # https://docs.scrapy.org/en/latest/topics/spider-middleware.html

    from scrapy import signals

    # useful for handling different item types with a single interface
    from itemadapter import is_item, ItemAdapter


    class MidSpiderMiddleware:
        # Not all methods need to be defined. If a method is not defined,
        # scrapy acts as if the spider middleware does not modify the
        # passed objects.

        @classmethod
        def from_crawler(cls, crawler):
            # This method is used by Scrapy to create your spiders.
            s = cls()
            crawler.signals.connect(s.spider_opened, signal=signals.spider_opened)
            return s

        def process_spider_input(self, response, spider):
            # Called for each response that goes through the spider
            # middleware and into the spider.

            # Should return None or raise an exception.
            return None

        def process_spider_output(self, response, result, spider):
            # Called with the results returned from the Spider, after
            # it has processed the response.

            # Must return an iterable of Request, or item objects.
            for i in result:
                yield i

        def process_spider_exception(self, response, exception, spider):
            # Called when a spider or process_spider_input() method
            # (from other spider middleware) raises an exception.

            # Should return either None or an iterable of Request or item objects.
            pass

        def process_start_requests(self, start_requests, spider):
            # Called with the start requests of the spider, and works
            # similarly to the process_spider_output() method, except
            # that it doesn't have a response associated.

            # Must return only requests (not items).
            for r in start_requests:
                yield r

        def spider_opened(self, spider):
            spider.logger.info("Spider opened: %s" % spider.name)
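The `process_spider_output()` hook above simply yields everything the spider produced. As a rough illustration of what such a hook could do instead, here is a minimal sketch that drops items without a title. It does not import Scrapy: `DropEmptyTitleMiddleware`, `fake_parse`, and the `title` field are hypothetical names made up for this example, and plain dicts stand in for items.

```python
class DropEmptyTitleMiddleware:
    """Hypothetical spider middleware: drops dict items that have no title."""

    def process_spider_output(self, response, result, spider):
        for obj in result:
            # Only dict items are inspected here; anything else (for example
            # Request objects) is passed through unchanged.
            if isinstance(obj, dict) and not obj.get("title"):
                continue
            yield obj


def fake_parse():
    """Stand-in for a spider callback that yields two items."""
    yield {"title": "first page"}
    yield {"title": ""}


mw = DropEmptyTitleMiddleware()
# response and spider are unused by this sketch, so None is passed for both.
kept = list(mw.process_spider_output(response=None, result=fake_parse(), spider=None))
print(kept)
```

Because the hook is a generator, results are filtered one at a time as the spider yields them, which is the same shape as the pass-through loop in the template.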

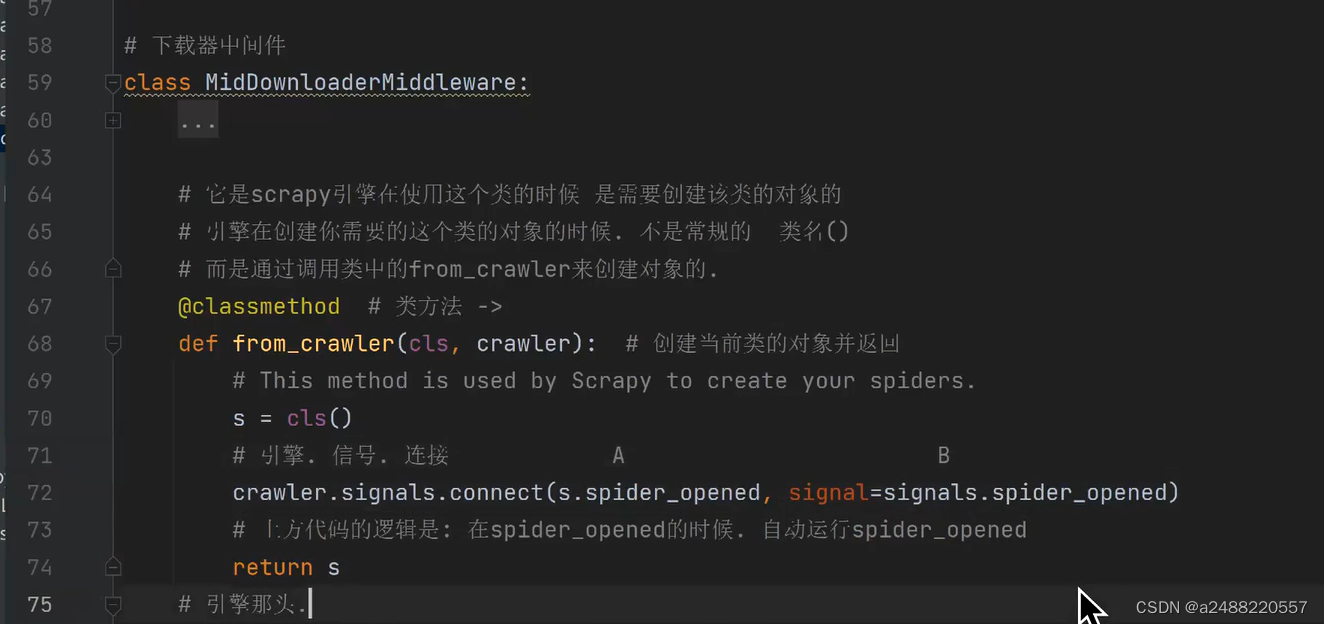

    class MidDownloaderMiddleware:
        # Not all methods need to be defined. If a method is not defined,
        # scrapy acts as if the downloader middleware does not modify the
        # passed objects.

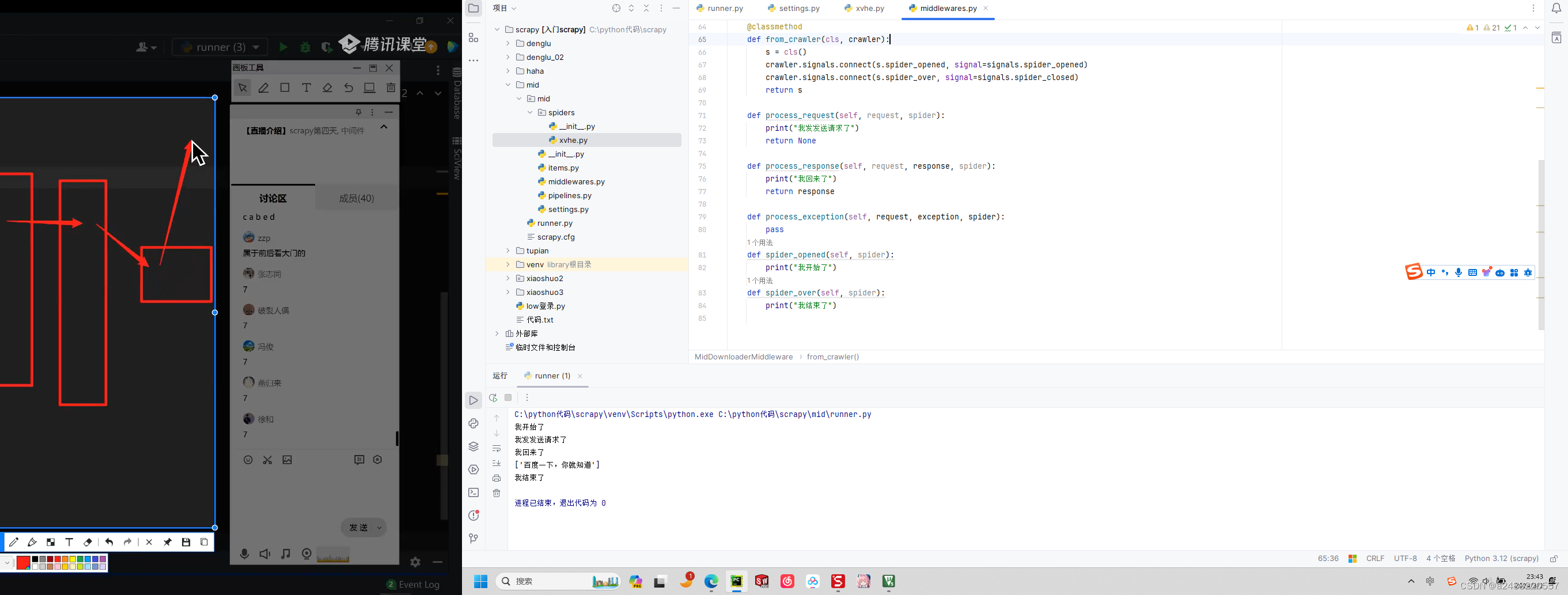

        @classmethod
        def from_crawler(cls, crawler):
            # Create the middleware and subscribe it to the spider's
            # opened and closed signals.
            s = cls()
            crawler.signals.connect(s.spider_opened, signal=signals.spider_opened)
            crawler.signals.connect(s.spider_over, signal=signals.spider_closed)
            return s

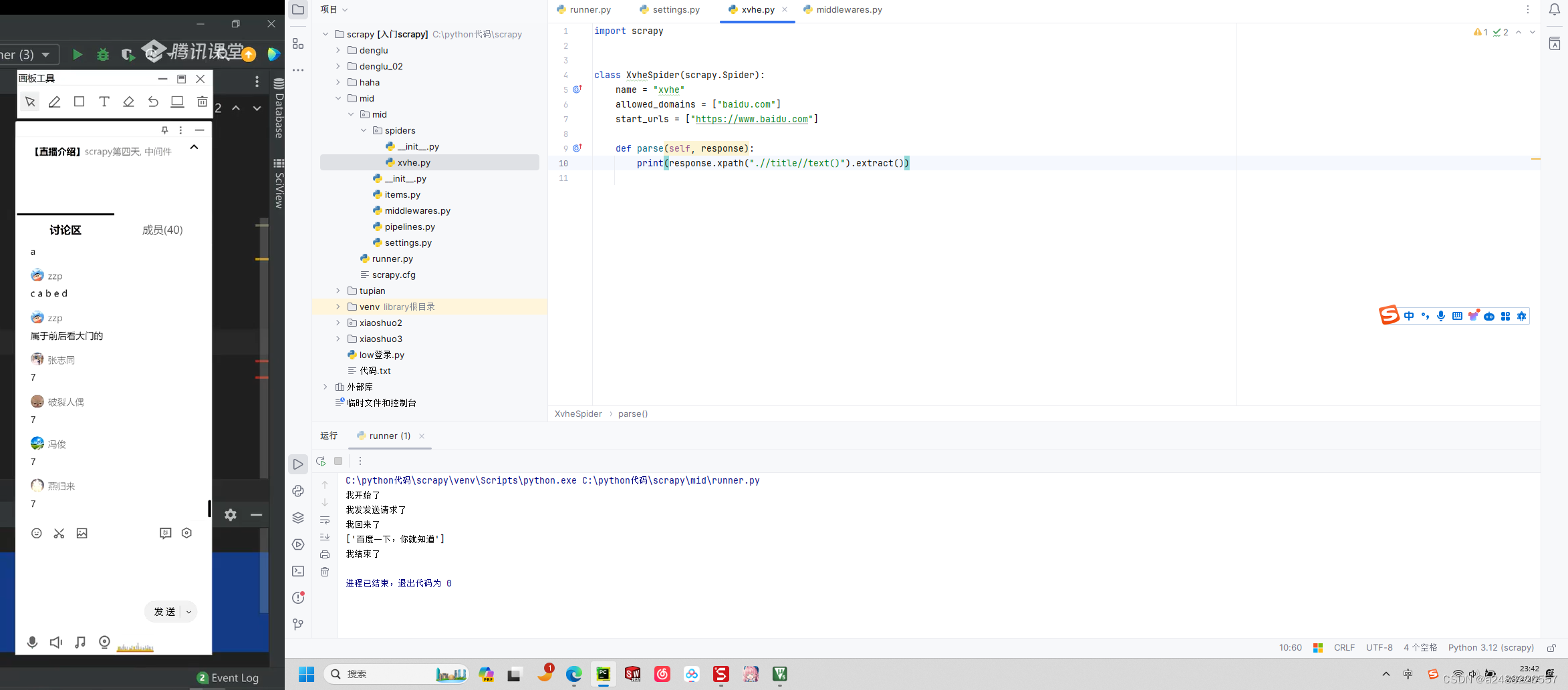

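        def process_request(self, request, spider):
            # Called for each request on its way to the downloader.
            # Returning None lets Scrapy continue processing the request.
            print("I sent a request")
            return None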

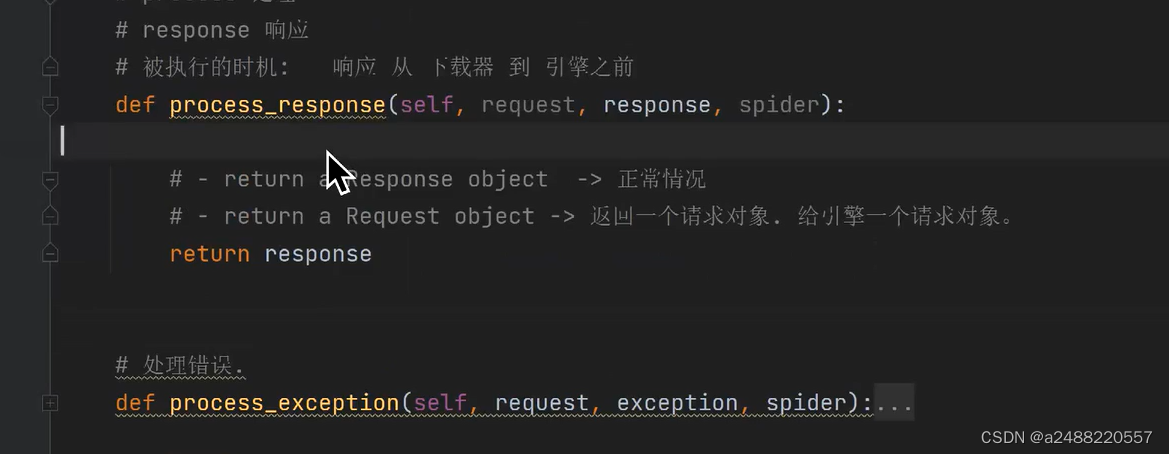

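        def process_response(self, request, response, spider):
            # Called with the response returned from the downloader.
            # Returning the response passes it on towards the spider.
            print("I'm back")
            return response

        def process_exception(self, request, exception, spider):
            # Called when a download handler or a process_request()
            # (from other downloader middleware) raises an exception.
            pass

        def spider_opened(self, spider):
            # Runs when the spider_opened signal fires.
            print("I've started")

        def spider_over(self, spider):
            # Runs when the spider_closed signal fires.
            print("I'm done")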
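To make the order of the `process_request()` and `process_response()` hooks above easier to see, here is a minimal sketch of the chain written in plain Python. It is a simplified stand-in, not Scrapy's actual engine: `LoggingMiddleware`, `fake_download`, and `run_chain` are hypothetical names, and the sketch ignores the cases where a hook returns a new `Request` or `Response` to short-circuit the chain. It assumes only the documented ordering: request hooks run in increasing priority order and response hooks in decreasing order.

```python
class LoggingMiddleware:
    """Hypothetical middleware that records when each of its hooks runs."""

    def __init__(self, name, log):
        self.name = name
        self.log = log

    def process_request(self, request, spider):
        self.log.append(f"{self.name}.process_request")
        return None  # None means: keep going down the chain

    def process_response(self, request, response, spider):
        self.log.append(f"{self.name}.process_response")
        return response  # pass the response on towards the spider


def fake_download(request):
    """Stand-in for the downloader; returns a canned response string."""
    return f"response for {request}"


def run_chain(middlewares, request, spider=None):
    """Run request hooks in ascending priority, response hooks in reverse."""
    ordered = sorted(middlewares, key=middlewares.get)
    for mw in ordered:
        mw.process_request(request, spider)
    response = fake_download(request)
    for mw in reversed(ordered):
        response = mw.process_response(request, response, spider)
    return response


log = []
# Maps middleware -> priority, mirroring the shape of DOWNLOADER_MIDDLEWARES.
chain = {
    LoggingMiddleware("B", log): 543,
    LoggingMiddleware("A", log): 100,
}
result = run_chain(chain, "https://example.com")
print(log)
```

In a real project, the class above only runs once it is enabled in `settings.py`, typically with an entry such as `DOWNLOADER_MIDDLEWARES = {"mid.middlewares.MidDownloaderMiddleware": 543}`. The module path `mid.middlewares` is an assumption inferred from the class name, so adjust it to the actual project package.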

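This article is reposted from: https://blog.csdn.net/a2488220557/article/details/136425006

Copyright belongs to the original author, a2488220557. If there is any infringement, please contact us for removal.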
