Background: when using Spark Structured Streaming to consume data from Kafka and write it into Hudi, Hudi compaction did not run according to the configured parameters.

Code for which compaction did not run:

// Package layout as of Hudi 0.10.x (assumed; adjust for other versions)
import org.apache.hudi.DataSourceWriteOptions
import org.apache.hudi.config.{HoodieCompactionConfig, HoodieIndexConfig, HoodieMemoryConfig, HoodieWriteConfig}
import org.apache.hudi.index.HoodieIndex
import org.apache.spark.sql.{DataFrame, SaveMode, SparkSession}

val spark = SparkSession
  .builder
  .config("spark.serializer", "org.apache.spark.serializer.KryoSerializer")
  .appName("test_leo")
  .enableHiveSupport()
  .getOrCreate()

val df = spark.readStream
  .format("kafka")
  .option("kafka.bootstrap.servers", "ip:port")
  .option("subscribe", "hudi_leo_test")
  .option("startingOffsets", "earliest")
  .option("maxOffsetsPerTrigger", 2)
  .option("failOnDataLoss", true)
  .load()

// Each micro-batch is written with the batch DataSource API (batchDF.write),
// i.e. Spark DataSource writes, not the Hudi streaming sink.
// The record key "id" and precombine field "ts" must exist in the written DataFrame.
val writeBatch: (DataFrame, Long) => Unit = (batchDF, _) =>
  batchDF.write
    .format("hudi")
    .option(DataSourceWriteOptions.OPERATION.key(), DataSourceWriteOptions.UPSERT_OPERATION_OPT_VAL)
    .option(DataSourceWriteOptions.PAYLOAD_CLASS_NAME.key(), "org.apache.hudi.common.model.OverwriteWithLatestAvroPayload")
    .option(DataSourceWriteOptions.STREAMING_IGNORE_FAILED_BATCH.key(), "false")
    .option(DataSourceWriteOptions.TABLE_TYPE.key(), "MERGE_ON_READ")
    .option(HoodieWriteConfig.TBL_NAME.key(), "test_hudi_leo_com")
    .option(DataSourceWriteOptions.RECORDKEY_FIELD.key(), "id")
    .option(DataSourceWriteOptions.PRECOMBINE_FIELD.key(), "ts")
    .option(DataSourceWriteOptions.HIVE_SYNC_ENABLED.key(), true)
    .option(DataSourceWriteOptions.HIVE_DATABASE.key(), "temp_db")
    .option(DataSourceWriteOptions.HIVE_TABLE.key(), "test_hudi_leo_com")
    .option(DataSourceWriteOptions.HIVE_URL.key(), hive_url) // hive_url: HiveServer2 JDBC URL, defined elsewhere
    .option(DataSourceWriteOptions.HIVE_USER.key(), "hudi")
    .option(DataSourceWriteOptions.HIVE_PASS.key(), "hudi")
    .option(HoodieWriteConfig.INSERT_PARALLELISM_VALUE.key, "40")
    .option(HoodieWriteConfig.BULKINSERT_PARALLELISM_VALUE.key, "40")
    .option(HoodieWriteConfig.UPSERT_PARALLELISM_VALUE.key, "40")
    .option(HoodieWriteConfig.DELETE_PARALLELISM_VALUE.key, "40")
    .option(HoodieIndexConfig.INDEX_TYPE.key(), HoodieIndex.IndexType.BLOOM.name())
    .option(HoodieIndexConfig.BLOOM_INDEX_UPDATE_PARTITION_PATH_ENABLE.key(), "true")
    .option(DataSourceWriteOptions.HIVE_SKIP_RO_SUFFIX_FOR_READ_OPTIMIZED_TABLE.key(), true)
    .option(HoodieMemoryConfig.MAX_MEMORY_FRACTION_FOR_MERGE.key(), "0.8")
    .option(HoodieMemoryConfig.MAX_MEMORY_FRACTION_FOR_COMPACTION.key(), "0.8")
    .option(HoodieCompactionConfig.INLINE_COMPACT_NUM_DELTA_COMMITS.key(), "2")
    .mode(SaveMode.Append)
    .save("/user/testhudi/test_hudi_leo_com")

df.writeStream
  .option("checkpointLocation", "/spark/checkpoints/teststreaming01")
  .foreachBatch(writeBatch)
  .start()

spark.streams.awaitAnyTermination()

Code for which compaction ran correctly:

val spark = SparkSession
  .builder
  .config("spark.serializer", "org.apache.spark.serializer.KryoSerializer")
  .appName("test_leo")
  .enableHiveSupport()
  .getOrCreate()

val df = spark.readStream
  .format("kafka")
  .option("kafka.bootstrap.servers", "ip:port")
  .option("subscribe", "hudi_leo_test")
  .option("startingOffsets", "earliest")
  .option("maxOffsetsPerTrigger", 2)
  .option("failOnDataLoss", true)
  .load()
  .selectExpr("CAST(value AS STRING) kafka_value", "CAST(timestamp AS long) ts", "CAST(topic AS STRING)", "CAST(partition AS STRING)", "CAST(offset AS STRING)")

// Written through the Hudi streaming sink: writeStream.format("hudi") goes through HoodieStreamingSink
df.writeStream.format("hudi")
  .option(DataSourceWriteOptions.OPERATION.key(), DataSourceWriteOptions.UPSERT_OPERATION_OPT_VAL)
  .option(DataSourceWriteOptions.PAYLOAD_CLASS_NAME.key(), "org.apache.hudi.common.model.OverwriteWithLatestAvroPayload")
  .option(DataSourceWriteOptions.STREAMING_IGNORE_FAILED_BATCH.key(), "false")
  .option(DataSourceWriteOptions.TABLE_TYPE.key(), "MERGE_ON_READ")
  .option(HoodieWriteConfig.TBL_NAME.key(), "test_hudi_leo_com")
  .option(DataSourceWriteOptions.RECORDKEY_FIELD.key(), "id") // the record key column "id" must be present in df
  .option(DataSourceWriteOptions.PRECOMBINE_FIELD.key(), "ts")
  .option(DataSourceWriteOptions.HIVE_SYNC_ENABLED.key(), true)
  .option(DataSourceWriteOptions.HIVE_DATABASE.key(), "temp_db")
  .option(DataSourceWriteOptions.HIVE_TABLE.key(), "test_hudi_leo_com")
  .option(DataSourceWriteOptions.HIVE_URL.key(), "jdbc:hive2://xxx:10000")
  .option(DataSourceWriteOptions.HIVE_USER.key(), "hudi")
  .option(DataSourceWriteOptions.HIVE_PASS.key(), "hudi")
  .option(HoodieWriteConfig.INSERT_PARALLELISM_VALUE.key, "40")
  .option(HoodieWriteConfig.BULKINSERT_PARALLELISM_VALUE.key, "40")
  .option(HoodieWriteConfig.UPSERT_PARALLELISM_VALUE.key, "40")
  .option(HoodieWriteConfig.DELETE_PARALLELISM_VALUE.key, "40")
  .option(HoodieIndexConfig.INDEX_TYPE.key(), HoodieIndex.IndexType.BLOOM.name())
  .option(HoodieIndexConfig.BLOOM_INDEX_UPDATE_PARTITION_PATH_ENABLE.key(), "true")
  .option(DataSourceWriteOptions.HIVE_SKIP_RO_SUFFIX_FOR_READ_OPTIMIZED_TABLE.key(), true)
  .option(HoodieMemoryConfig.MAX_MEMORY_FRACTION_FOR_MERGE.key(), "0.8")
  .option(HoodieMemoryConfig.MAX_MEMORY_FRACTION_FOR_COMPACTION.key(), "0.8")
  .option(DataSourceWriteOptions.ASYNC_COMPACT_ENABLE.key(), "true")
  .option(HoodieCompactionConfig.INLINE_COMPACT_NUM_DELTA_COMMITS.key(), "2")
  .option(HoodieCompactionConfig.INLINE_COMPACT_TIME_DELTA_SECONDS.key(), 30)
  .option("checkpointLocation", "/user/itleoc/testhudi/checkpoints/teststreaming03")
  .outputMode(OutputMode.Append)
  .start("/user/test/testhudi/test_hudi_leo_com")

spark.streams.awaitAnyTermination()

After reading the Hudi source code, I found the cause: the failing job wrote to Hudi through Spark DataSource writes rather than through the streaming sink.
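The reasoning can be captured in a small model. This is a hypothetical sketch, not Hudi code. It assumes, based on the HoodieSparkSqlWriter source in the Hudi 0.10.x line, that async compaction is only considered enabled under four conditions: an asyncCompactionTriggerFn was passed to write, inline compaction is off, ASYNC_COMPACT_ENABLE is true, and the table is MERGE_ON_READ. The names `WriteParams`, `WriterModel` and `asyncCompactionEnabled` are made up for illustration.

```scala
// Hypothetical model of the async-compaction decision in HoodieSparkSqlWriter.write.
// Not Hudi's classes; verify the real conditions against the Hudi version in use.
final case class WriteParams(
    tableType: String,           // "MERGE_ON_READ" or "COPY_ON_WRITE"
    asyncCompactEnable: Boolean, // models ASYNC_COMPACT_ENABLE
    inlineCompactEnable: Boolean // models the write config's inline compaction flag
)

object WriterModel {
  type Client = String // stand-in for SparkRDDWriteClient

  // Same shape as write(..., asyncCompactionTriggerFn = Option.empty)
  def asyncCompactionEnabled(
      params: WriteParams,
      asyncCompactionTriggerFn: Option[Client => Unit] = None
  ): Boolean =
    asyncCompactionTriggerFn.isDefined &&
      !params.inlineCompactEnable &&
      params.asyncCompactEnable &&
      params.tableType == "MERGE_ON_READ"
}

val params = WriteParams("MERGE_ON_READ", asyncCompactEnable = true, inlineCompactEnable = false)

// Streaming sink path: HoodieStreamingSink passes Some(triggerAsyncCompactor)
val viaStreamingSink = WriterModel.asyncCompactionEnabled(params, Some((_: String) => ()))

// DataSource path (df.write, e.g. inside foreachBatch): no trigger function is passed
val viaDataSource = WriterModel.asyncCompactionEnabled(params)

println(s"streaming sink: $viaStreamingSink, datasource write: $viaDataSource")
// prints "streaming sink: true, datasource write: false"
```

With identical options, only the call that supplies the callback schedules async compaction. This matches the difference between the two jobs above.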

Source code analysis:

1. Async compaction

For how to enable async compaction, see the official documentation: Compaction | Apache Hudi

Hudi's async compaction consists of two steps:

1. Compaction Scheduling: generate a compaction plan

2. Compaction Execution: execute the compaction plan
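The sketch below is a toy in-memory model of a MERGE_ON_READ table that shows what each phase does. Scheduling picks the file slices that have log files and records them in a plan. Execution merges those logs into new base files. All types are hypothetical stand-ins; real plans are HoodieCompactionPlan objects. The merge rule is also a simplification: it keeps the record with the largest ts per id, while the actual semantics depend on the configured payload class.

```scala
// Toy model of two-phase compaction. All names are hypothetical.
final case class Rec(id: String, ts: Long, value: String)

// A file slice: one base file plus the log (delta) files written after it
final case class FileSlice(fileId: String, base: Seq[Rec], logs: Seq[Seq[Rec]])

// One operation in a compaction plan: which file group to rewrite
final case class CompactionOp(fileId: String)

// Phase 1, scheduling: only plan work for slices that have log files
def schedulePlan(slices: Seq[FileSlice]): Seq[CompactionOp] =
  slices.filter(_.logs.nonEmpty).map(s => CompactionOp(s.fileId))

// Phase 2, execution: merge base + logs per record key (largest ts wins)
// and write a new base file with no remaining logs
def executePlan(plan: Seq[CompactionOp], slices: Seq[FileSlice]): Seq[FileSlice] = {
  val toCompact = plan.map(_.fileId).toSet
  slices.map { s =>
    if (!toCompact.contains(s.fileId)) s
    else {
      val merged = (s.base ++ s.logs.flatten)
        .groupBy(_.id)
        .values
        .map(_.maxBy(_.ts))
        .toSeq
        .sortBy(_.id)
      FileSlice(s.fileId, merged, Seq.empty)
    }
  }
}

val slices = Seq(
  FileSlice("f1", Seq(Rec("a", 1, "v1")), Seq(Seq(Rec("a", 2, "v2")), Seq(Rec("b", 3, "v3")))),
  FileSlice("f2", Seq(Rec("c", 1, "v1")), Seq.empty)
)
val plan = schedulePlan(slices)           // only f1 has log files
val compacted = executePlan(plan, slices) // f1 gets a new base file
println(plan)
println(compacted.head.base)
```

Keeping the two functions separate mirrors the point of the split. Scheduling is cheap and produces only a description of the work. The expensive merge can then run at another time or on another thread.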

Whether data is written through Spark DataSource writes or through the streaming sink, it goes through HoodieSparkSqlWriter.write, whose signature is:

def write(sqlContext: SQLContext,
          mode: SaveMode,
          optParams: Map[String, String],
          df: DataFrame,
          hoodieTableConfigOpt: Option[HoodieTableConfig] = Option.empty,
          hoodieWriteClient: Option[SparkRDDWriteClient[HoodieRecordPayload[Nothing]]] = Option.empty,
          asyncCompactionTriggerFn: Option[Function1[SparkRDDWriteClient[HoodieRecordPayload[Nothing]], Unit]] = Option.empty,
          asyncClusteringTriggerFn: Option[Function1[SparkRDDWriteClient[HoodieRecordPayload[Nothing]], Unit]] = Option.empty
         )

When HoodieStreamingSink calls write, it passes in callbacks that trigger Hudi compaction (and clustering) asynchronously:

Try(
  HoodieSparkSqlWriter.write(
    sqlContext, mode, updatedOptions, data, hoodieTableConfig, writeClient, Some(triggerAsyncCompactor), Some(triggerAsyncClustering))
)

The triggerAsyncCompactor function passed in above is what kicks off async compaction:

protected def triggerAsyncCompactor(client: SparkRDDWriteClient[HoodieRecordPayload[Nothing]]): Unit = {
  if (null == asyncCompactorService) {
    log.info("Triggering Async compaction !!")
    asyncCompactorService = new SparkStreamingAsyncCompactService(new HoodieSparkEngineContext(new JavaSparkContext(sqlContext.sparkContext)),
      client)
    asyncCompactorService.start(new Function[java.lang.Boolean, java.lang.Boolean] {
      override def apply(errored: lang.Boolean): lang.Boolean = {
        log.info(s"Async Compactor shutdown. Errored ? $errored")
        isAsyncCompactorServiceShutdownAbnormally = errored
        reset(false)
        log.info("Done resetting write client.")
        true
      }
    })

    // Add Shutdown Hook
    Runtime.getRuntime.addShutdownHook(new Thread(new Runnable {
      override def run(): Unit = reset(true)
    }))

    // First time, scan .hoodie folder and get all pending compactions
    val metaClient = HoodieTableMetaClient.builder().setConf(sqlContext.sparkContext.hadoopConfiguration)
      .setBasePath(client.getConfig.getBasePath).build()
    val pendingInstants: java.util.List[HoodieInstant] =
      CompactionUtils.getPendingCompactionInstantTimes(metaClient)
    pendingInstants.foreach((h: HoodieInstant) => asyncCompactorService.enqueuePendingAsyncServiceInstant(h))
  }
}

Calling asyncCompactorService.start launches a thread that performs the compaction:

/**
 * Start the service. Runs the service in a different thread and returns. Also starts a monitor thread to
 * run-callbacks in case of shutdown
 *
 * @param onShutdownCallback
 */
public void start(Function<Boolean, Boolean> onShutdownCallback) {
  Pair<CompletableFuture, ExecutorService> res = startService();
  future = res.getKey();
  executor = res.getValue();
  started = true;
  shutdownCallback(onShutdownCallback);
}
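The start/shutdown-callback pattern can be sketched in Scala as follows. This is a hypothetical, simplified analogue, not Hudi's HoodieAsyncService. startService runs the service body on its own single-thread executor and returns the future together with the executor. The shutdown callback receives `errored = true` if the body threw. `ToyAsyncService` is an illustrative name.

```scala
import java.util.concurrent.{CompletableFuture, ExecutorService, Executors, TimeUnit}
import java.util.function.{BiConsumer, Supplier}

// Hypothetical analogue of HoodieAsyncService.start(onShutdownCallback); not Hudi's API.
class ToyAsyncService(body: () => Unit) {
  // Mirrors the Java fields set in start()
  private var future: CompletableFuture[Unit] = null
  private var executor: ExecutorService = null
  @volatile private var started = false

  def isStarted: Boolean = started

  // Like startService(): run the body on its own thread, return future + executor
  private def startService(): (CompletableFuture[Unit], ExecutorService) = {
    val ex = Executors.newSingleThreadExecutor()
    val f = CompletableFuture.supplyAsync(new Supplier[Unit] {
      override def get(): Unit = body()
    }, ex)
    (f, ex)
  }

  def start(onShutdownCallback: Boolean => Boolean): Unit = {
    val (f, ex) = startService()
    future = f
    executor = ex
    started = true
    // Like shutdownCallback(...): when the body ends, report whether it errored
    f.whenComplete(new BiConsumer[Unit, Throwable] {
      override def accept(result: Unit, err: Throwable): Unit = {
        onShutdownCallback(err != null)
        ex.shutdown() // let the worker thread exit
      }
    })
  }
}

// One service that finishes normally, one that fails
val okDone = new CompletableFuture[Boolean]()
new ToyAsyncService(() => ()).start { errored => okDone.complete(errored); true }

val failDone = new CompletableFuture[Boolean]()
new ToyAsyncService(() => throw new RuntimeException("compaction failed"))
  .start { errored => failDone.complete(errored); true }

println(s"ok errored=${okDone.get(5, TimeUnit.SECONDS)}, failing errored=${failDone.get(5, TimeUnit.SECONDS)}")
// prints "ok errored=false, failing errored=true"
```

start() returns immediately. The only way the caller learns that the service stopped is the callback, which is why triggerAsyncCompactor records `errored` in isAsyncCompactorServiceShutdownAbnormally.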

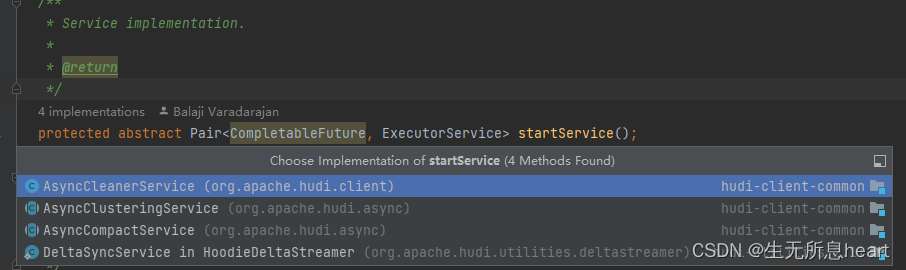

startService has four implementations: cleaner, clustering, compaction, and delta sync.

AsyncCompactService is the compaction implementation. Its startService mainly calls the following function, which runs the async compaction:

compactor.compact(instant);
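In the triggerAsyncCompactor code earlier, pending compaction instants are handed to the service through enqueuePendingAsyncServiceInstant. The service then runs compactor.compact(instant) for each one. The producer/consumer sketch below models that loop. It assumes a blocking queue and a single worker thread; the real service's threading and shutdown handling differ in detail. `ToyCompactWorker` and the instant strings are hypothetical.

```scala
import java.util.concurrent.{ConcurrentLinkedQueue, LinkedBlockingQueue, TimeUnit}

// Hypothetical model of the async compaction loop; not Hudi's AsyncCompactService.
final class ToyCompactWorker(compact: String => Unit) {
  private val pending = new LinkedBlockingQueue[String]()
  @volatile private var running = true

  // Analogue of enqueuePendingAsyncServiceInstant
  def enqueue(instant: String): Unit = pending.put(instant)

  private val worker = new Thread(() => {
    // Keep draining until shutdown was requested and the queue is empty
    while (running || !pending.isEmpty) {
      // Poll with a timeout so the loop can observe the shutdown flag
      val instant = pending.poll(100, TimeUnit.MILLISECONDS)
      if (instant != null) compact(instant) // analogue of compactor.compact(instant)
    }
  })
  worker.setDaemon(true)
  worker.start()

  // Stop taking new work, finish queued instants, and wait for the thread
  def shutdownAndWait(): Unit = {
    running = false
    worker.join()
  }
}

val done = new ConcurrentLinkedQueue[String]()
val service = new ToyCompactWorker(instant => { done.add(instant); () })

// e.g. pending compaction instants found on the timeline at startup (made-up times)
Seq("20220101000001", "20220101000005").foreach(service.enqueue)
service.shutdownAndWait()
println(done) // prints "[20220101000001, 20220101000005]"
```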

That call only executes an existing compaction plan. The plan itself is generated as follows.

Back in the original write function (HoodieSparkSqlWriter.write), the commit logic is in the commitAndPerformPostOperations method:

commitAndPerformPostOperations(sqlContext.sparkSession, df.schema,
  writeResult, parameters, writeClient, tableConfig, jsc,
  TableInstantInfo(basePath, instantTime, commitActionType, operation))

Within commitAndPerformPostOperations, the following logic generates the compaction plan:

val asyncCompactionEnabled = isAsyncCompactionEnabled(client, tableConfig, parameters, jsc.hadoopConfiguration())
val compactionInstant: common.util.Option[java.lang.String] =
  if (asyncCompactionEnabled) {
    client.scheduleCompaction(common.util.Option.of(new java.util.HashMap[String, String](mapAsJavaMap(metaMap))))
  } else {
    common.util.Option.empty()
  }

client.scheduleCompaction is the call that generates the compaction plan.
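Even when scheduleCompaction is called, it only produces a plan once the trigger condition is met. The sketch below assumes the default NUM_COMMITS trigger strategy. Under that strategy, a plan is generated once the number of delta commits since the last compaction reaches hoodie.compact.inline.max.delta.commits (INLINE_COMPACT_NUM_DELTA_COMMITS, set to "2" in both jobs above). It is a simplified illustration over a list of timeline actions, not Hudi's scheduling code.

```scala
// Hypothetical model of a NUM_COMMITS-style trigger check; not Hudi's implementation.
sealed trait Action
case object DeltaCommit extends Action
case object Compaction extends Action

// Delta commits recorded after the most recent compaction instant
def deltaCommitsSinceLastCompaction(timeline: Seq[Action]): Int =
  timeline.reverse.takeWhile(_ != Compaction).count(_ == DeltaCommit)

// maxDeltaCommits plays the role of hoodie.compact.inline.max.delta.commits
def shouldSchedule(timeline: Seq[Action], maxDeltaCommits: Int): Boolean =
  deltaCommitsSinceLastCompaction(timeline) >= maxDeltaCommits

// Replay five micro-batches with maxDeltaCommits = 2
var timeline = Vector.empty[Action]
val scheduled = (1 to 5).map { _ =>
  timeline = timeline :+ DeltaCommit
  val fire = shouldSchedule(timeline, 2)
  // Simplification: a scheduled compaction is added to the timeline right away
  if (fire) timeline = timeline :+ Compaction
  fire
}
println(scheduled.mkString(", ")) // prints "false, true, false, true, false"
```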

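In summary, the compaction plan is generated after the data has been committed, and executing the plan is triggered asynchronously, so it does not block subsequent writes. Compared with compacting inline on the write path, async compaction therefore speeds up writes into Hudi and reduces latency.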

2. Inline compaction

To be continued...

Copyright belongs to the original author, 生无所息heart. In case of infringement, please contact us and it will be removed.