
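Before using Zeppelin to work with Hive, make sure the Hadoop cluster and the hiveserver2 service are already running.

Start the hiveserver2 service from the Hive installation directory on the master host:

[root@master hive]# bin/hiveserver2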
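hiveserver2 can take a while to start accepting connections, so it may help to wait for its port before going on. The following is a minimal sketch, not part of the original setup: the function names `port_open` and `wait_for_port` are hypothetical, and port 10000 is assumed because it is the port used in the JDBC URL in section 2.

```shell
#!/usr/bin/env bash
# port_open HOST PORT: succeed if a TCP connection to HOST:PORT can be opened.
# Relies on bash's built-in /dev/tcp redirection, so no extra tools are needed.
port_open() {
    local host="$1" port="$2"
    # The subshell closes the file descriptor automatically when it exits.
    (exec 3<>"/dev/tcp/${host}/${port}") 2>/dev/null
}

# wait_for_port HOST PORT [TRIES]: poll once per second until the port opens.
# Returns 0 as soon as the port accepts a connection, 1 after TRIES attempts.
wait_for_port() {
    local host="$1" port="$2" tries="${3:-60}" i
    for ((i = 0; i < tries; i++)); do
        port_open "$host" "$port" && return 0
        sleep 1
    done
    return 1
}
```

For example, `wait_for_port 192.168.38.128 10000 && echo "hiveserver2 is listening"` would block for up to a minute until the service is reachable.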

1. Installing Zeppelin

Upload the archive zeppelin-0.10.1-bin-all.tgz to the /export/software directory on the master host, extract it into /export/servers/, and rename the extracted directory:

[root@master ~]# cd /export/software

[root@master software]# rz -be

[root@master software]# tar -zxvf zeppelin-0.10.1-bin-all.tgz -C /export/servers/

[root@master software]# cd /export/servers

[root@master servers]# mv zeppelin-0.10.1-bin-all zeppelin

In zeppelin/conf, rename zeppelin-env.sh.template to zeppelin-env.sh and zeppelin-site.xml.template to zeppelin-site.xml, then configure both files:

[root@master servers]# cd /export/servers/zeppelin/conf

[root@master conf]# mv zeppelin-env.sh.template zeppelin-env.sh

Add the following to zeppelin-env.sh:

export JAVA_HOME=/export/servers/jdk

export HADOOP_CONF_DIR=/export/servers/hadoop-3.1.3/etc/hadoop
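Instead of editing the file by hand, the two exports can be appended from the shell. This is only a sketch under assumptions: the helper names `append_once` and `configure_zeppelin_env` are hypothetical, and the guard exists so that running it twice does not duplicate lines.

```shell
#!/usr/bin/env bash
# append_once FILE LINE: add LINE to FILE unless an identical line is already there.
append_once() {
    local file="$1" line="$2"
    touch "$file"
    # -x matches whole lines, -F treats LINE as a fixed string, -q suppresses output.
    grep -qxF -- "$line" "$file" || printf '%s\n' "$line" >> "$file"
}

# configure_zeppelin_env FILE: write the two exports from this guide into FILE.
configure_zeppelin_env() {
    local env_file="$1"
    append_once "$env_file" 'export JAVA_HOME=/export/servers/jdk'
    append_once "$env_file" 'export HADOOP_CONF_DIR=/export/servers/hadoop-3.1.3/etc/hadoop'
}
```

With the layout used in this guide, the call would be `configure_zeppelin_env /export/servers/zeppelin/conf/zeppelin-env.sh`.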

[root@master conf]# mv zeppelin-site.xml.template zeppelin-site.xml

Set the following properties in zeppelin-site.xml:

<property>

<name>zeppelin.server.addr</name>

<value>192.168.38.128</value>

<description>Server address</description>

</property>

<property>

<name>zeppelin.server.port</name>

<value>8080</value>

<description>Server port.</description>

</property>

Start Zeppelin:

[root@master conf]# cd ..

[root@master zeppelin]# bin/zeppelin-daemon.sh start

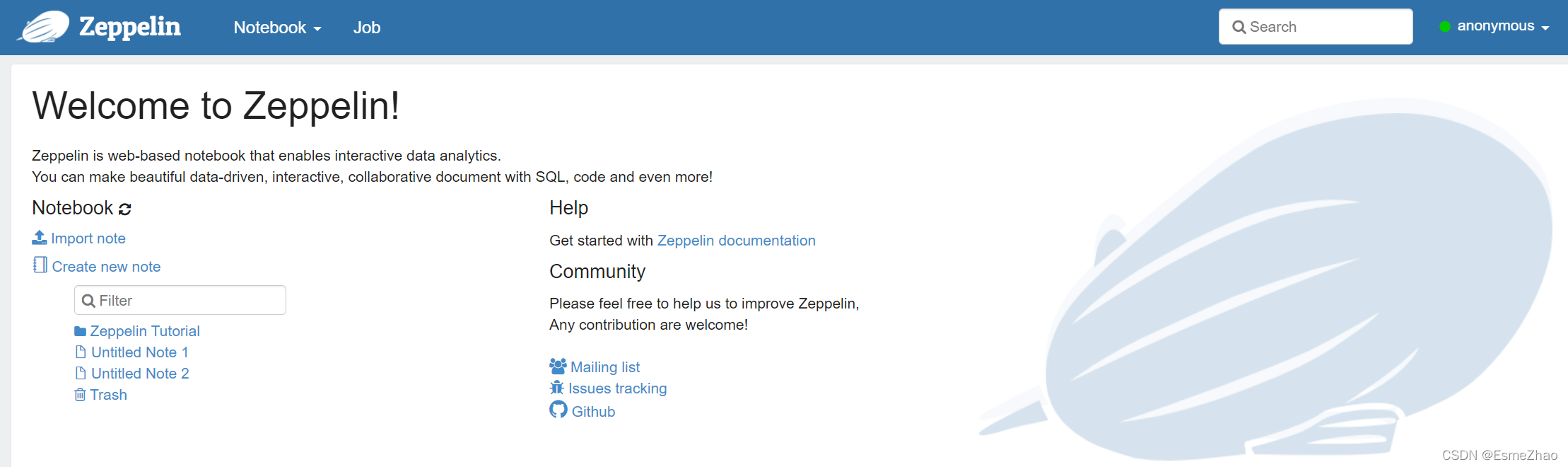

2. Configuring the Hive connection in the Zeppelin web UI

Open the Zeppelin web page at http://192.168.38.128:8080/

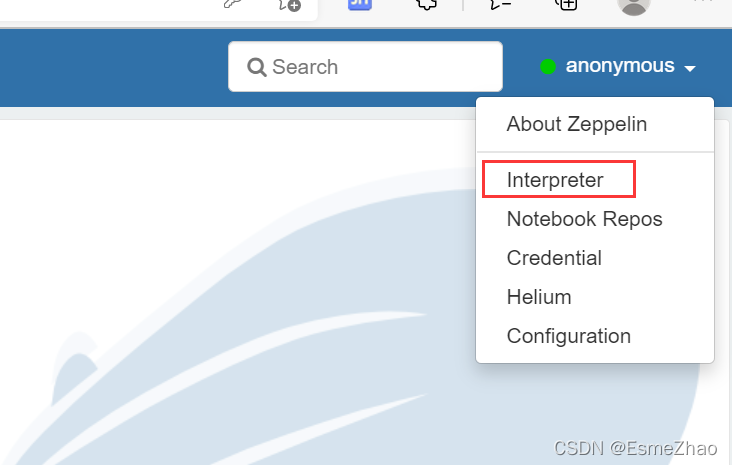

Add a Hive interpreter

In the drop-down menu at the top right, select "Interpreter" to open the interpreter page, then click the "Create" button.

Configure the Hive interpreter

Interpreter Name: hive

Interpreter group: jdbc

default.driver: org.apache.hive.jdbc.HiveDriver

default.url: jdbc:hive2://192.168.38.128:10000/

default.user: root

default.password: 123456

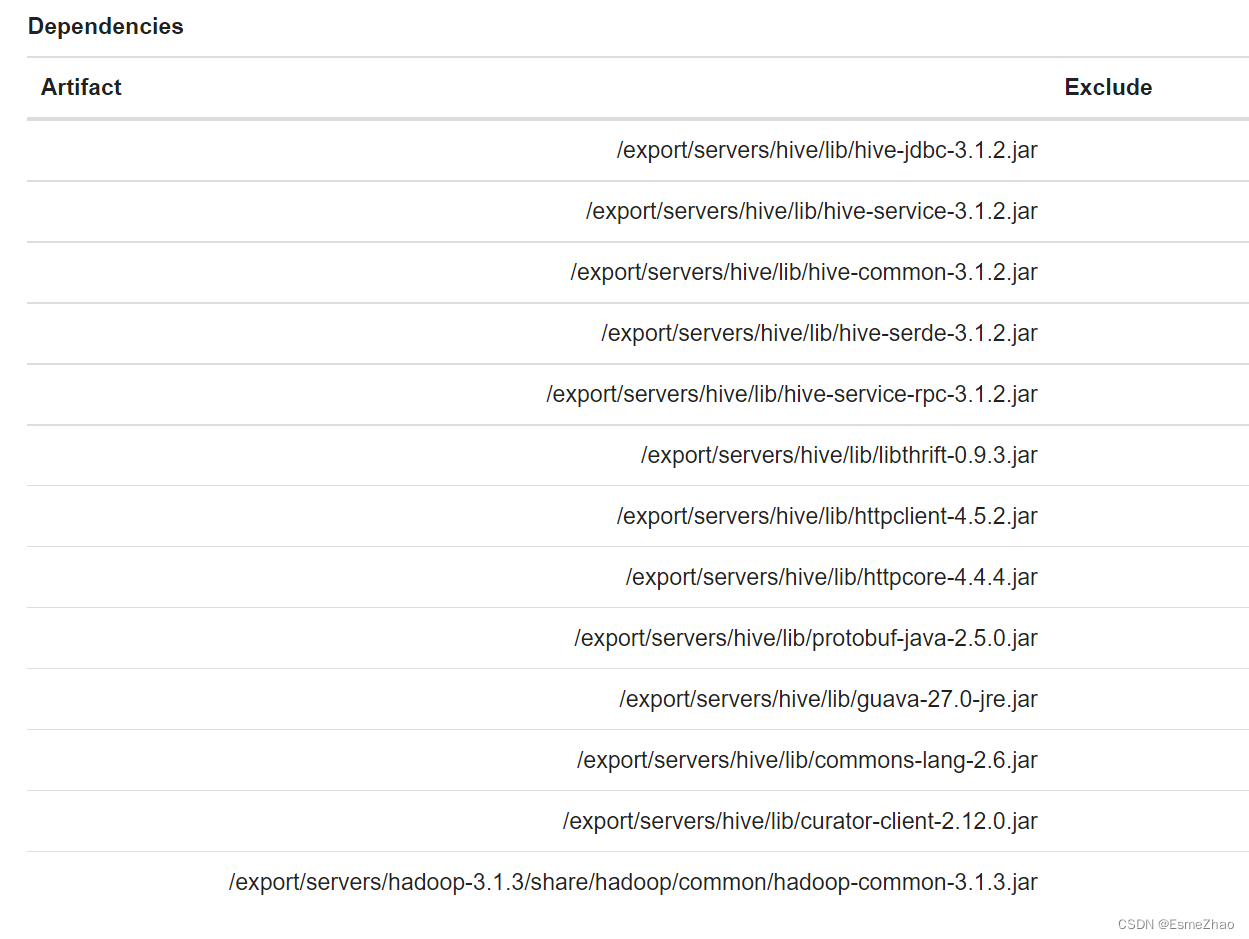
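Before relying on the interpreter, the same URL and credentials can be tried from the command line with beeline, which ships in Hive's bin directory. The sketch below is an illustration rather than part of the original procedure: `hive_jdbc_url` and `check_hive_login` are hypothetical helper names, and beeline is assumed to be on the PATH.

```shell
#!/usr/bin/env bash
# hive_jdbc_url HOST [PORT] [DATABASE]: print a HiveServer2 JDBC URL.
# PORT defaults to 10000; DATABASE may be left empty, as in this guide.
hive_jdbc_url() {
    local host="$1" port="${2:-10000}" db="${3:-}"
    printf 'jdbc:hive2://%s:%s/%s\n' "$host" "$port" "$db"
}

# check_hive_login HOST USER PASSWORD: run one trivial query through beeline
# and return beeline's exit status.
check_hive_login() {
    local host="$1" user="$2" password="$3"
    beeline -u "$(hive_jdbc_url "$host")" -n "$user" -p "$password" -e 'show databases;'
}
```

With the values above, `check_hive_login 192.168.38.128 root 123456` should list the databases if hiveserver2 accepts the login. If it fails here, the Zeppelin interpreter will most likely fail in the same way.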

Add the jars required for Hive (JDBC):

/export/servers/hadoop-3.1.3/share/hadoop/common/hadoop-common-3.1.3.jar

/export/servers/hive/lib/curator-client-2.12.0.jar (if this jar is missing, download it and upload it to this path)

/export/servers/hive/lib/guava-27.0-jre.jar

/export/servers/hive/lib/hive-jdbc-3.1.2.jar

/export/servers/hive/lib/hive-common-3.1.2.jar

/export/servers/hive/lib/hive-serde-3.1.2.jar

/export/servers/hive/lib/hive-service-3.1.2.jar

/export/servers/hive/lib/hive-service-rpc-3.1.2.jar

/export/servers/hive/lib/libthrift-0.9.3.jar

/export/servers/hive/lib/protobuf-java-2.5.0.jar

/export/servers/hive/lib/commons-lang-2.6.jar

/export/servers/hive/lib/httpclient-4.5.2.jar

/export/servers/hive/lib/httpcore-4.4.4.jar
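Since jar versions differ between Hive and Hadoop builds, it may be worth checking which of the listed paths actually exist before entering them. A minimal sketch, assuming the paths are fed in one per line; the function name `missing_files` is hypothetical.

```shell
#!/usr/bin/env bash
# missing_files: read one path per line on stdin and print every path
# that is not an existing regular file.
missing_files() {
    local path
    while IFS= read -r path; do
        [ -z "$path" ] && continue      # skip blank lines
        [ -f "$path" ] || printf '%s\n' "$path"
    done
}
```

For instance, after saving the list above to a file such as jars.txt (a hypothetical name), `missing_files < jars.txt` prints only the jars that still need to be downloaded; no output means every jar is in place.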

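If an error occurs at this point (the settings below address Hadoop proxy-user errors, where the root user is not allowed to impersonate another user), add the following to core-site.xml: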

<property>

<name>hadoop.proxyuser.root.hosts</name>

<value>*</value>

</property>

<property>

<name>hadoop.proxyuser.root.groups</name>

<value>*</value>

</property>

After updating the configuration on the master host, distribute core-site.xml to the slave1 and slave2 hosts, then restart the cluster.

[root@master ~]# cd /export/servers/hadoop-3.1.3/etc/hadoop

[root@master hadoop]# vi core-site.xml

[root@master hadoop]# cat core-site.xml

[root@master hadoop]# scp core-site.xml slave1:/export/servers/hadoop-3.1.3/etc/hadoop/

[root@master hadoop]# scp core-site.xml slave2:/export/servers/hadoop-3.1.3/etc/hadoop/
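With more worker nodes, the two scp commands above generalize to a loop. The following is a sketch under assumptions: `distribute_file` and the `DRY_RUN` variable are hypothetical names introduced here, and passwordless SSH from master to each host is assumed.

```shell
#!/usr/bin/env bash
# distribute_file FILE DEST_DIR HOST...: copy FILE to DEST_DIR on every HOST with scp.
# With DRY_RUN=1 the scp commands are printed instead of executed.
distribute_file() {
    local file="$1" dest="$2" host
    shift 2
    for host in "$@"; do
        if [ "${DRY_RUN:-0}" = "1" ]; then
            printf 'scp %s %s:%s\n' "$file" "$host" "$dest"
        else
            # Stop at the first host that cannot be reached.
            scp "$file" "${host}:${dest}" || return 1
        fi
    done
}
```

Running `DRY_RUN=1 distribute_file core-site.xml /export/servers/hadoop-3.1.3/etc/hadoop/ slave1 slave2` prints the same two scp commands shown above, which makes it easy to review them before copying anything.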

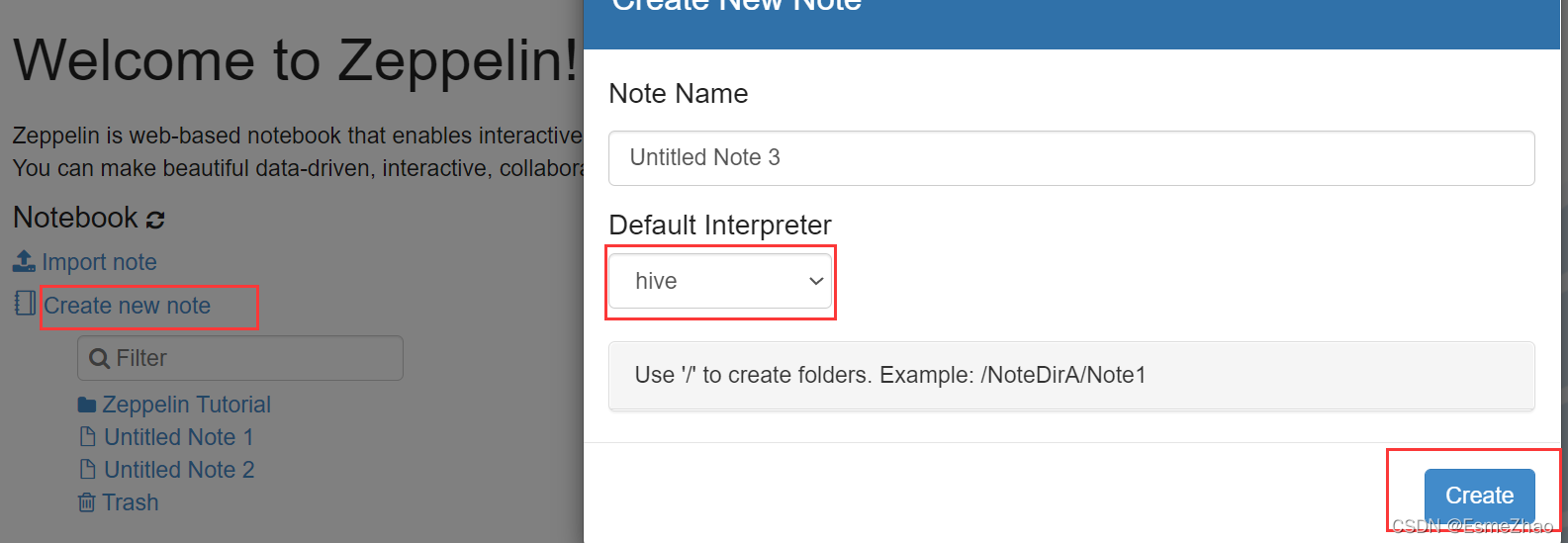

Once the configuration is complete, click Save. Then create a notebook and select hive as its interpreter.

Test the connection

Copyright belongs to the original author, EsmeZhao. In case of infringement, please contact us for removal.