tip:在这里用hive的建表和ddl(hive其中有自带的writer ,reader)

一、将数据现在已有的平台建立好,其中包括模板对应的各个可进行上传的sheet

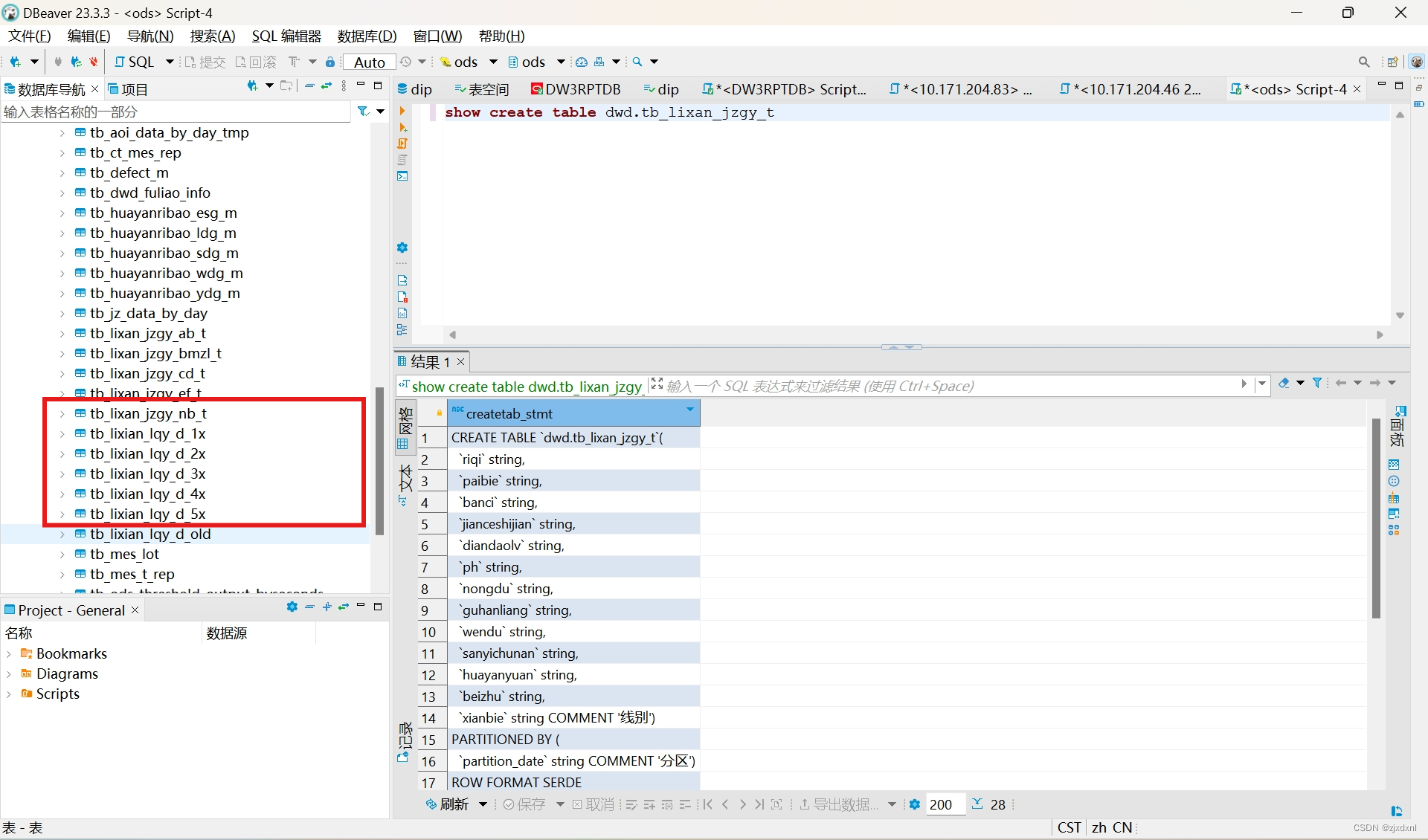

平台已经做好基于模板对应的sheet的存储:其中在做好对应关系和字段之后在数据库表也实现了对应表的创建。eg:创建对应12345x

tip:一般在ods存储实时表,然后在dwd里面存储对应的处理过的宽表(组合起来可能包括很多字段)

二、在dwd中进行创建组合表(如宽表那样)

CREATE TABLE `dwd.tb_lixan_jzgy_t`(

`riqi` string,

`paibie` string,

`banci` string,

`jianceshijian` string,

`diandaolv` string,

`ph` string,

`nongdu` string,

`guhanliang` string,

`wendu` string,

`sanyichunan` string,

`huayanyuan` string,

`beizhu` string,

`xianbie` string COMMENT '线别')

PARTITIONED BY (

`partition_date` string COMMENT '分区')

ROW FORMAT SERDE

'org.apache.hadoop.hive.ql.io.parquet.serde.ParquetHiveSerDe'

STORED AS INPUTFORMAT

'org.apache.hadoop.hive.ql.io.parquet.MapredParquetInputFormat'

OUTPUTFORMAT

'org.apache.hadoop.hive.ql.io.parquet.MapredParquetOutputFormat'

LOCATION

'hdfs://ns/user/hive/warehouse/dwd.db/tb_lixan_jzgy_t'

TBLPROPERTIES (

'last_modified_by'='hdfs',

'last_modified_time'='1698041766',

'transient_lastDdlTime'='1698041766')

以上为hive的建表语句:

CREATE TABLEdwd.tb_lixan_jzgy_t``:创建一个名为tb_lixan_jzgy_t的表,该表位于dwd数据库中。- 之后的列定义(如

riqi string)描述了表的结构。表有多个字段,每个字段的名称和类型都被列出。这里,所有的字段都是字符串(string)类型。 PARTITIONED BY (partition_date string COMMENT '分区'):定义表的分区方式。这里,表被partition_date字段分区。分区可以提高数据查询的效率,尤其是在处理大量数据时。ROW FORMAT SERDE...STORED AS INPUTFORMAT...OUTPUTFORMAT... :定义表的存储格式和I/O格式。这里,表使用Parquet格式(在压缩文件方面具有更大优势)存储,这是一种列式存储格式,常用于Hadoop生态系统中。LOCATION 'hdfs://ns/user/hive/warehouse/dwd.db/tb_lixan_jzgy_t':定义表在HDFS(Hadoop分布式文件系统)上的物理存储位置。TBLPROPERTIES (...):定义表的属性。这里,表有三个属性:-last_modified_by:上次修改表的用户。-last_modified_time:上次修改表的时间(以Unix时间戳格式表示)。-transient_lastDdlTime:上次DDL(数据定义语言)操作的时间(以Unix时间戳格式表示)。

三、建表工作完成之后开始写将其组合在宽表中的shell

1.采用xxjob任务调度编写shell:

需要用corn转换工具转换好需要的执行时间。本次只要书写一个补数脚本,一达到自动补数的工作

复制前一个脚本后进行参数改动,在此基础上不需要各类时间,只需要达到${part_month}的传参,然后执行insert

#$1="2022-10-01,3" #2022-10-01 2022-10-02 2022-10-03

part_day=`date +"%Y%m%d" -d "-0 minutes"`

part_day_1=`date +"%Y%m%d" -d "-30 day"`

part_day_2=`date +"%Y%m%d" -d "-32 day"`

where_day=`date +"%Y-%m-%d" -d "-0 minutes"`

where_day_1=`date +"%Y-%m-%d" -d "-30 day"`

part_min=`date +"%Y%m%d%H%M"`

part_min_1=`date +"%Y%m%d%H%M" -d " -1 hour"`

part_day_before=`date +"%Y%m%d" -d "-1 day"`

part_month=`date +"%Y%m" -d "-0 minutes"`

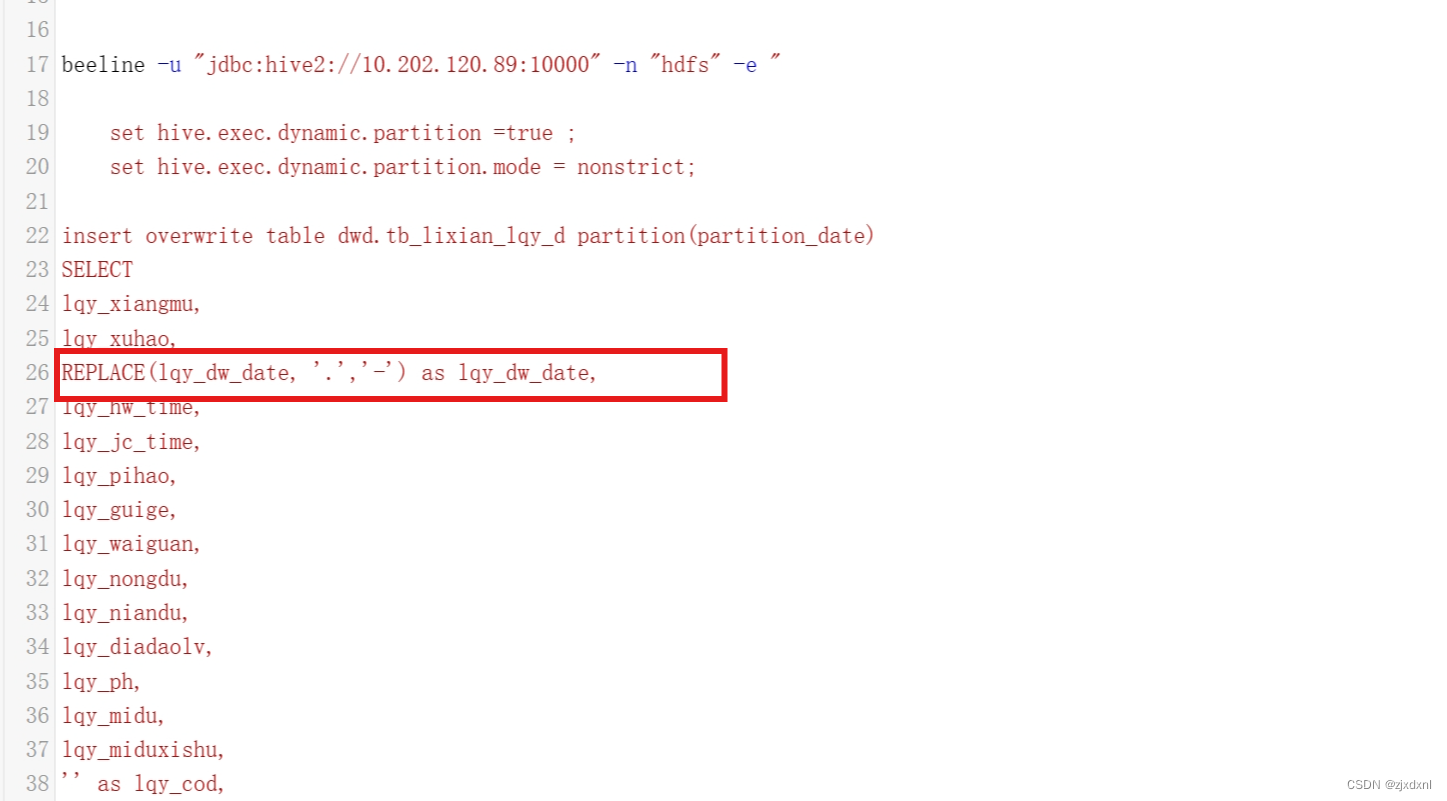

beeline -u "jdbc:hive2://10.202.120.89:10000" -n "hdfs" -e "

set hive.exec.dynamic.partition =true ;

set hive.exec.dynamic.partition.mode = nonstrict;

insert overwrite table dwd.tb_lixian_lqy_d partition(partition_date)

SELECT

lqy_xiangmu,

lqy_xuhao,

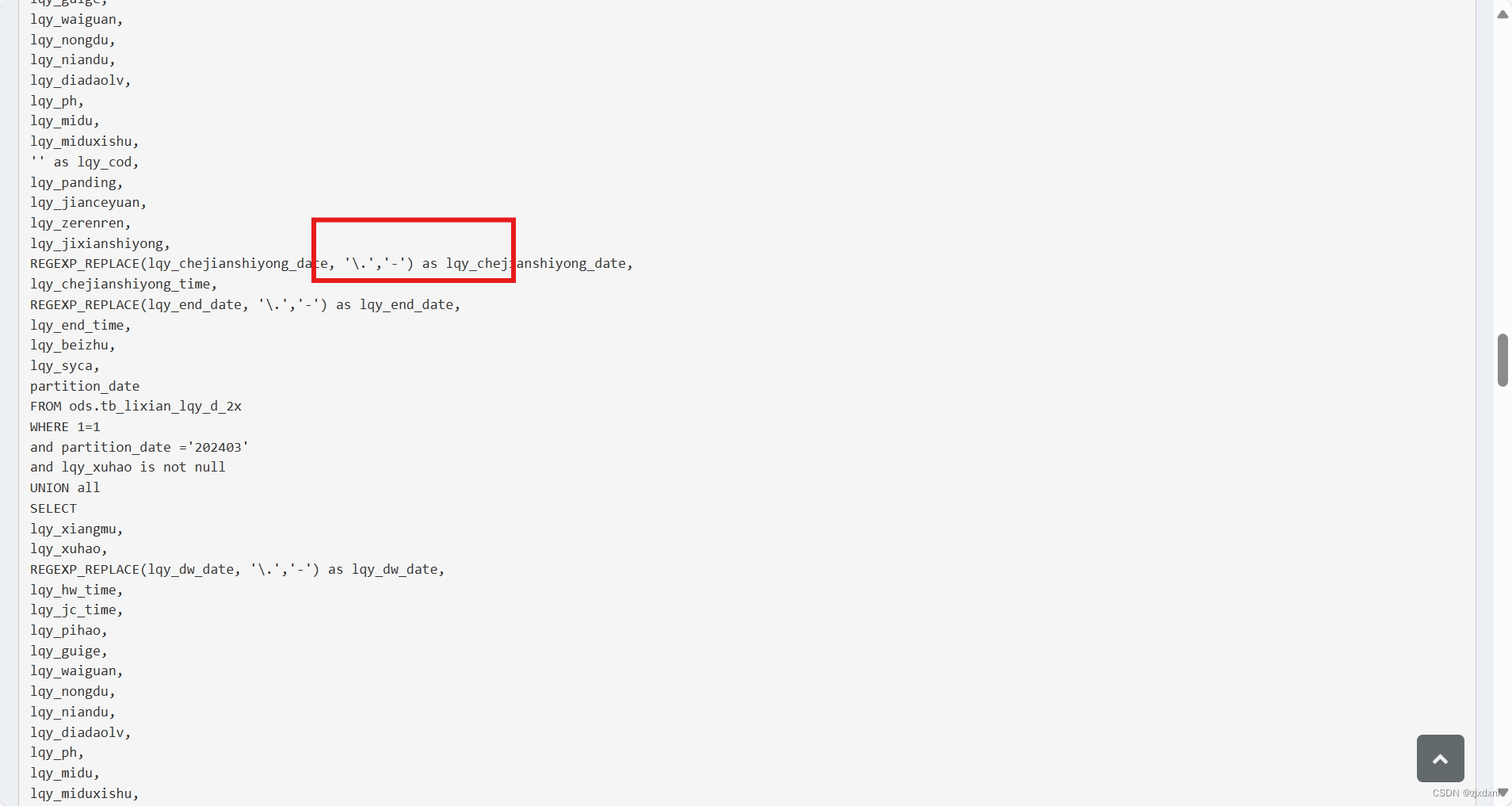

REGEXP_REPLACE(lqy_dw_date, '\\.','-') as lqy_dw_date,

lqy_hw_time,

lqy_jc_time,

lqy_pihao,

lqy_guige,

lqy_waiguan,

lqy_nongdu,

lqy_niandu,

lqy_diadaolv,

lqy_ph,

lqy_midu,

lqy_miduxishu,

'' as lqy_cod,

lqy_panding,

lqy_jianceyuan,

lqy_zerenren,

lqy_jixianshiyong,

REGEXP_REPLACE(lqy_chejianshiyong_date, '\\.','-') as lqy_chejianshiyong_date,

lqy_chejianshiyong_time,

REGEXP_REPLACE(lqy_end_date, '\\.','-') as lqy_end_date,

lqy_end_time,

lqy_beizhu,

lqy_syca,

partition_date

FROM ods.tb_lixian_lqy_d_1x

WHERE 1=1

and partition_date ='${part_month}'

and lqy_xuhao is not null

UNION all

SELECT

lqy_xiangmu,

lqy_xuhao,

REGEXP_REPLACE(lqy_dw_date, '\\.','-') as lqy_dw_date,

lqy_hw_time,

lqy_jc_time,

lqy_pihao,

lqy_guige,

lqy_waiguan,

lqy_nongdu,

lqy_niandu,

lqy_diadaolv,

lqy_ph,

lqy_midu,

lqy_miduxishu,

'' as lqy_cod,

lqy_panding,

lqy_jianceyuan,

lqy_zerenren,

lqy_jixianshiyong,

REGEXP_REPLACE(lqy_chejianshiyong_date, '\\.','-') as lqy_chejianshiyong_date,

lqy_chejianshiyong_time,

REGEXP_REPLACE(lqy_end_date, '\\.','-') as lqy_end_date,

lqy_end_time,

lqy_beizhu,

lqy_syca,

partition_date

FROM ods.tb_lixian_lqy_d_2x

WHERE 1=1

and partition_date ='${part_month}'

and lqy_xuhao is not null

UNION all

SELECT

lqy_xiangmu,

lqy_xuhao,

REGEXP_REPLACE(lqy_dw_date, '\\.','-') as lqy_dw_date,

lqy_hw_time,

lqy_jc_time,

lqy_pihao,

lqy_guige,

lqy_waiguan,

lqy_nongdu,

lqy_niandu,

lqy_diadaolv,

lqy_ph,

lqy_midu,

lqy_miduxishu,

'' as lqy_cod,

lqy_panding,

lqy_jianceyuan,

'' as lqy_zerenren,

lqy_jixianshiyong,

REGEXP_REPLACE(lqy_chejianshiyong_date, '\\.','-') as lqy_chejianshiyong_date,

lqy_chejianshiyong_time,

REGEXP_REPLACE(lqy_end_date, '\\.','-') as lqy_end_date,

lqy_end_time,

lqy_beizhu,

lqy_syca,

partition_date

FROM ods.tb_lixian_lqy_d_3x

WHERE 1=1

and partition_date ='${part_month}'

and lqy_xuhao is not null

UNION all

SELECT

lqy_xiangmu,

lqy_xuhao,

REGEXP_REPLACE(lqy_dw_date, '\\.','-') as lqy_dw_date,

lqy_hw_time,

lqy_jc_time,

lqy_pihao,

lqy_guige,

lqy_waiguan,

lqy_nongdu,

lqy_niandu,

lqy_diadaolv,

lqy_ph,

lqy_midu,

lqy_miduxishu,

lqy_cod,

lqy_panding,

lqy_jianceyuan,

lqy_zerenren,

lqy_jixianshiyong,

REGEXP_REPLACE(lqy_chejianshiyong_date, '\\.','-') as lqy_chejianshiyong_date,

lqy_chejianshiyong_time,

REGEXP_REPLACE(lqy_end_date, '\\.','-') as lqy_end_date,

lqy_end_time,

lqy_beizhu,

'' as lqy_syca,

partition_date

FROM ods.tb_lixian_lqy_d_4x

WHERE 1=1

and partition_date ='${part_month}'

and lqy_xuhao is not null

UNION all

SELECT

lqy_xiangmu,

lqy_xuhao,

REGEXP_REPLACE(lqy_dw_date, '\\.','-') as lqy_dw_date,

lqy_hw_time,

lqy_jc_time,

lqy_pihao,

lqy_guige,

lqy_waiguan,

lqy_nongdu,

lqy_niandu,

lqy_diadaolv,

lqy_ph,

lqy_midu,

lqy_miduxishu,

lqy_cod,

lqy_panding,

lqy_jianceyuan,

lqy_zerenren,

lqy_jixianshiyong,

REGEXP_REPLACE(lqy_chejianshiyong_date, '\\.','-') as lqy_chejianshiyong_date,

lqy_chejianshiyong_time,

REGEXP_REPLACE(lqy_end_date, '\\.','-') as lqy_end_date,

lqy_end_time,

lqy_beizhu,

lqy_syca,

partition_date

FROM ods.tb_lixian_lqy_d_5x

WHERE 1=1

and partition_date ='${part_month}'

and lqy_xuhao is not null

;

"

sh /data/addax/bin/addax.sh -p "-Dpart_month='${part_month}'" /data/addax/job/lixian_lqy_hive2ck_new.json

问题点:

${part_month}shell如何进行data格式转换:

#!/bin/bash

# 输入要提取的日期字符串

input_date="2021-12-31"

# 使用date命令将日期字符串转换为时间格式并提取年份和月份

year=$(echo $input_date | awk -F'-' '{print $1}')

month=$(echo $input_date | awk -F'-' '{print $2}')

# 打印结果

echo "Year: $year"

echo "Month: $month"

进行执行日志发现仍然存在日期不符合标准,展示为-------:查看日志发现转译字符的原因导致/消失

解决:将其写为.或者写为新版replace(HIVE2.0以后才提供)

版权归原作者 zjxdxnl 所有, 如有侵权,请联系我们删除。