
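Hi everyone, I'm K同学啊. Today I'm covering the 4th example of the PyTorch edition of "Deep Learning 100 Examples". The earlier examples mainly helped you get familiar with PyTorch, and I recommend typing the code out yourself: only by doing it hands-on will you really absorb the material, and just reading without practicing won't help. Today's focus is calling the VGG-16 model in PyTorch. First, let's look at the differences between PyTorch and TensorFlow.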

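PyTorch vs. TensorFlow:

- TensorFlow: simple, with well-encapsulated modules; easy to pick up and friendly to beginners. In industry, what matters most is getting models into production, and at present most companies in China support online deployment of TensorFlow models but not PyTorch models.
- PyTorch: most cutting-edge algorithms are released in PyTorch first, so if you are a university student or a researcher, I recommend learning it. Compared with TensorFlow, PyTorch is easier to use and more convenient to debug.

Of course, if you have enough time, I suggest getting to know both frameworks; both are important.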

🍨 Focus of this article: how to build a neural network model with PyTorch (this part is explained in detail).

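🍖 My environment:

- Language: Python 3.8
- Editor: Jupyter Lab
- Deep learning environment:
  - torch==1.10.0+cu113
  - torchvision==0.11.1+cu113
- Platform: 🔗 极链AI云 (Jilian AI Cloud)
- Tutorial: 🔎 User manual

Deep learning environment setup tutorial: Deep Learning for Beginners | Part 4: Setting Up the PyTorch Environment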

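👉 Previous posts

- Deep Learning 100 Examples | Example 1: Cat vs. Dog Recognition - PyTorch Implementation
- Deep Learning 100 Examples | Example 2: Facial Expression Recognition - PyTorch Implementation
- 🔥 This article is part of the column: "Deep Learning 100 Examples" (PyTorch edition)
- ✨ Companion column: "Deep Learning 100 Examples" (TensorFlow edition)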

I. Importing the Data

from torchvision.transforms import transforms
from torch.utils.data import DataLoader
from torchvision import datasets
import torchvision.models as models
import torch.nn.functional as F
import torch.nn as nn
import torch, torchvision

Get the class names

import os, PIL, random, pathlib
data_dir = './04-data/'
data_dir = pathlib.Path(data_dir)
data_paths = list(data_dir.glob('*'))
classeNames = [path.name for path in data_paths]  # path.name is the folder name; works on Windows and Linux alike
classeNames

['Apple',
 'Banana',
 'Carambola',
 'Guava',
 'Kiwi',
 'Mango',
 'muskmelon',
 'Orange',
 'Peach',
 'Pear',
 'Persimmon',
 'Pitaya',
 'Plum',
 'Pomegranate',
 'Tomatoes']
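The list above is only the order in which the file system returned the folders. As far as I know, torchvision's ImageFolder assigns label indices differently: it sorts the sub-folder names with Python's default, case-sensitive string ordering and numbers them from 0 (you can inspect the result via total_data.class_to_idx or total_data.classes once the dataset is loaded below). Since uppercase letters sort before lowercase ones, 'muskmelon' ends up last, so indexing classeNames with a predicted label could name the wrong fruit. The plain-Python sketch below reproduces that ordering on a temporary folder tree; the helper imagefolder_style_classes is hypothetical, not a torchvision function.

```python
import pathlib
import tempfile

# Class folder names as listed in the article (one sub-folder per class).
FOLDERS = ["Apple", "Banana", "Carambola", "Guava", "Kiwi", "Mango",
           "muskmelon", "Orange", "Peach", "Pear", "Persimmon", "Pitaya",
           "Plum", "Pomegranate", "Tomatoes"]


def imagefolder_style_classes(root):
    """Hypothetical helper that mimics ImageFolder's class ordering.

    Returns the sub-directory names sorted with Python's default
    (case-sensitive) string comparison, plus a name -> index mapping.
    """
    root = pathlib.Path(root)
    classes = sorted(p.name for p in root.iterdir() if p.is_dir())
    return classes, {name: i for i, name in enumerate(classes)}


with tempfile.TemporaryDirectory() as tmp:
    for name in FOLDERS:
        (pathlib.Path(tmp) / name).mkdir()
    classes, class_to_idx = imagefolder_style_classes(tmp)

print(classes[-1])              # prints: muskmelon (sorts after every capitalised name)
print(class_to_idx["Orange"])   # prints: 6, whereas "Orange" is at position 7 in the list above
```

In the rest of the code, total_data.classes is the lookup table that matches the labels the model is actually trained on.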

Load the data files

total_datadir = './04-data/'
# For more on transforms.Compose, see: https://blog.csdn.net/qq_38251616/article/details/124878863
train_transforms = transforms.Compose([
    transforms.Resize([224, 224]),  # resize every input image to the same size
    transforms.ToTensor(),          # convert a PIL Image or numpy.ndarray to a tensor scaled to [0, 1]
    transforms.Normalize(           # shift and scale each channel (roughly zero mean, unit variance), which helps the model converge
        mean=[0.485, 0.456, 0.406],
        std=[0.229, 0.224, 0.225])  # these are the standard ImageNet channel statistics, commonly reused for other datasets
])
total_data = datasets.ImageFolder(total_datadir, transform=train_transforms)
total_data

Dataset ImageFolder
    Number of datapoints: 12000
    Root location: ./04-data/
    StandardTransform
Transform: Compose(
               Resize(size=[224, 224], interpolation=bilinear, max_size=None, antialias=None)
               ToTensor()
               Normalize(mean=[0.485, 0.456, 0.406], std=[0.229, 0.224, 0.225])
           )
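ToTensor() divides 8-bit pixel values by 255, and Normalize() then computes (x - mean) / std separately for each channel. The plain-Python sketch below (no torchvision involved; the function name normalize_pixel is made up for illustration) shows where a pure black and a pure white pixel land, which is why the normalized tensor spans roughly -2.1 to 2.6 across the three channels instead of [0, 1].

```python
# ImageNet channel statistics used in the Normalize() call above (RGB order).
MEAN = [0.485, 0.456, 0.406]
STD = [0.229, 0.224, 0.225]


def normalize_pixel(rgb_uint8):
    """Hypothetical helper: mimic ToTensor() + Normalize() for one RGB pixel."""
    scaled = [v / 255.0 for v in rgb_uint8]                       # ToTensor(): 0..255 -> 0.0..1.0
    return [(x - m) / s for x, m, s in zip(scaled, MEAN, STD)]   # per-channel standardisation


black = normalize_pixel([0, 0, 0])
white = normalize_pixel([255, 255, 255])
print([round(v, 3) for v in black])
print([round(v, 3) for v in white])
```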

Split the data

train_size = int(0.8 * len(total_data))
test_size = len(total_data) - train_size
train_dataset, test_dataset = torch.utils.data.random_split(total_data, [train_size, test_size])
train_dataset, test_dataset

(<torch.utils.data.dataset.Subset at 0x24bbdb84ac0>,
 <torch.utils.data.dataset.Subset at 0x24bbdb84610>)

train_size, test_size

(9600, 2400)

train_loader = torch.utils.data.DataLoader(train_dataset,
                                           batch_size=16,
                                           shuffle=True,
                                           num_workers=1)
test_loader = torch.utils.data.DataLoader(test_dataset,
                                          batch_size=16,
                                          shuffle=False,  # evaluation does not need shuffling
                                          num_workers=1)
print("The number of images in a training set is: ", len(train_loader.dataset))
print("The number of images in a test set is: ", len(test_loader.dataset))
print("The number of batches per epoch is: ", len(train_loader))

The number of images in a training set is: 9600
The number of images in a test set is: 2400
The number of batches per epoch is: 600
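len(train_loader) counts batches, not images: with the DataLoader default drop_last=False it equals ceil(len(dataset) / batch_size), the last batch being smaller when the division is not exact. Multiplying the batch count by the batch size therefore recovers the image count only when the dataset size is a multiple of the batch size (true here: 9600 and 2400 are both divisible by 16); len(train_loader.dataset) gives the image count directly. The plain-Python sketch below (the helper name num_batches is hypothetical) shows the arithmetic.

```python
import math


def num_batches(num_samples, batch_size, drop_last=False):
    """Hypothetical helper: how many batches a DataLoader yields per epoch."""
    if drop_last:
        return num_samples // batch_size          # the incomplete last batch is discarded
    return math.ceil(num_samples / batch_size)    # the last batch may be smaller


print(num_batches(9600, 16))       # 600, training split in the article
print(num_batches(2400, 16))       # 150, test split
# With a size that is not a multiple of 16, batches * 16 over-counts the images:
print(num_batches(9610, 16) * 16)  # 9616, not 9610
```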

for X, y in test_loader:
    print("Shape of X [N, C, H, W]: ", X.shape)
    print("Shape of y: ", y.shape, y.dtype)
    break

Shape of X [N, C, H, W]: torch.Size([16, 3, 224, 224])
Shape of y: torch.Size([16]) torch.int64

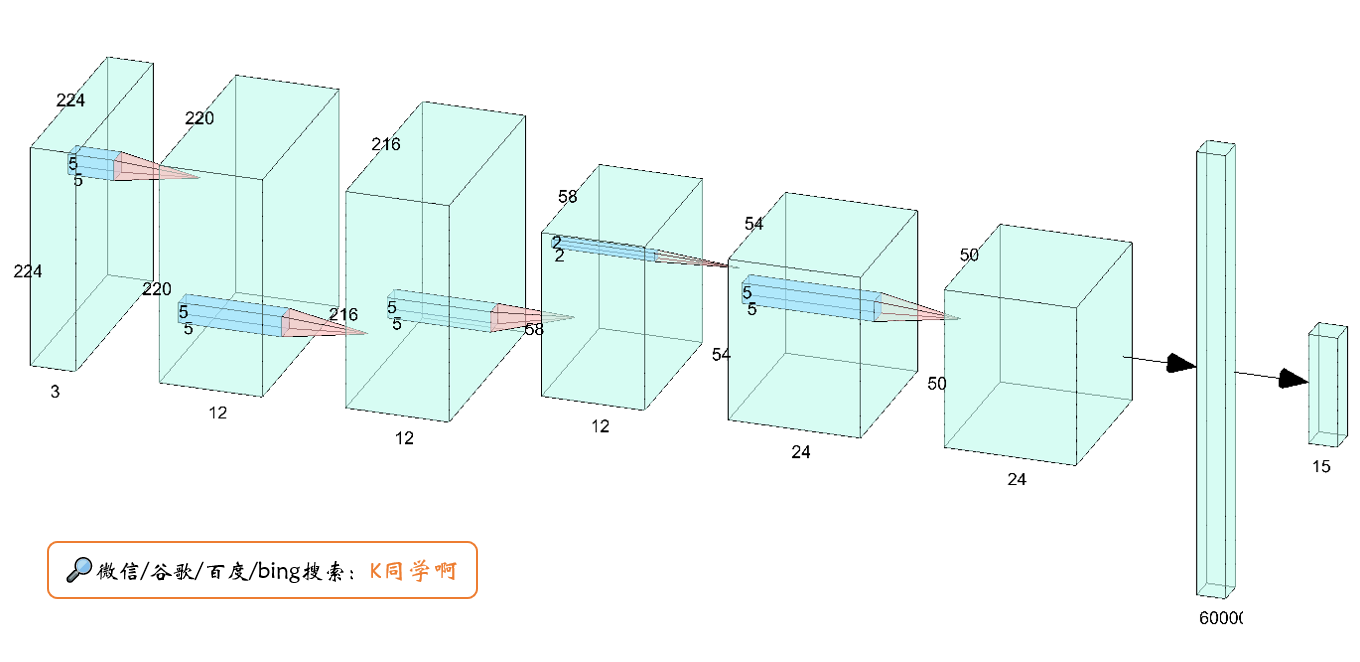

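II. Building the Model

The nn.Conv2d() function:

- The first parameter (in_channels) is the number of input channels: 3 for color images, 1 for grayscale images.
- The second parameter (out_channels) is the number of output channels.
- The third parameter (kernel_size) is the size of the convolution kernel.
- The fourth parameter (stride) is the step size, i.e. how many pixels the kernel moves at each step of the convolution; the default is 1.
- The fifth parameter (padding) is the amount of padding; the default is 0.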
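Together, these parameters (with dilation left at its default of 1) determine the spatial size of the output: out = floor((in + 2*padding - kernel_size) / stride) + 1, and nn.MaxPool2d with its default ceil_mode=False follows the same formula. The plain-Python helper below (its name conv2d_out is hypothetical) evaluates it for a few settings on a 224-pixel input.

```python
def conv2d_out(size, kernel_size, stride=1, padding=0):
    """Output height/width of a Conv2d or MaxPool2d layer (dilation = 1)."""
    return (size + 2 * padding - kernel_size) // stride + 1


print(conv2d_out(224, kernel_size=5))                       # 220: no padding shrinks by k - 1
print(conv2d_out(224, kernel_size=5, padding=2))            # 224: padding = (k - 1) / 2 keeps the size
print(conv2d_out(224, kernel_size=3, stride=2, padding=1))  # 112: stride 2 roughly halves it
```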

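The line that readers probably find hardest to understand here is

nn.Linear(24*50*50, len(classeNames))

Before tackling it, brush up on 👉 convolution arithmetic, then refer to the network structure diagram to work through it. In short, 24 is the number of channels produced by conv5, and 50x50 is the feature-map size left after the convolution and pooling layers shrink the 224x224 input, so each image is flattened into 24*50*50 = 60000 values before the fully connected layer.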
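The 50x50 figure can be checked layer by layer: each 5x5 convolution with stride 1 and no padding removes 4 pixels from each side length, and each 2x2 max-pool with stride 2 halves it, following out = floor((in + 2*padding - kernel_size) / stride) + 1. The plain-Python trace below (layer list transcribed from Network_bn; the names out_size and LAYERS are hypothetical) reproduces the 60000 input features of fc1.

```python
def out_size(size, kernel_size, stride=1, padding=0):
    """Conv2d / MaxPool2d output size (dilation = 1, ceil_mode=False)."""
    return (size + 2 * padding - kernel_size) // stride + 1


# (layer name, kernel_size, stride, output channels) transcribed from Network_bn
LAYERS = [
    ("conv1", 5, 1, 12),
    ("conv2", 5, 1, 12),
    ("pool", 2, 2, 12),
    ("conv4", 5, 1, 24),
    ("conv5", 5, 1, 24),
    ("pool", 2, 2, 24),
]

size, channels = 224, 3
for name, k, s, c in LAYERS:
    size, channels = out_size(size, k, s), c
    print(f"{name:>5}: {channels} x {size} x {size}")   # 220, 216, 108, 104, 100, 50

flat_features = channels * size * size
print("fc1 in_features =", flat_features)               # 24 * 50 * 50 = 60000
```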

class Network_bn(nn.Module):
    def __init__(self):
        super(Network_bn, self).__init__()
        # nn.Conv2d() arguments:
        #   1st (in_channels): number of input channels
        #   2nd (out_channels): number of output channels
        #   3rd (kernel_size): size of the convolution kernel
        #   4th (stride): step size, default 1
        #   5th (padding): padding size, default 0
        self.conv1 = nn.Conv2d(in_channels=3, out_channels=12, kernel_size=5, stride=1, padding=0)
        self.bn1 = nn.BatchNorm2d(12)
        self.conv2 = nn.Conv2d(in_channels=12, out_channels=12, kernel_size=5, stride=1, padding=0)
        self.bn2 = nn.BatchNorm2d(12)
        self.pool = nn.MaxPool2d(2, 2)
        self.conv4 = nn.Conv2d(in_channels=12, out_channels=24, kernel_size=5, stride=1, padding=0)
        self.bn4 = nn.BatchNorm2d(24)
        self.conv5 = nn.Conv2d(in_channels=24, out_channels=24, kernel_size=5, stride=1, padding=0)
        self.bn5 = nn.BatchNorm2d(24)
        # 224 -> conv1 220 -> conv2 216 -> pool 108 -> conv4 104 -> conv5 100 -> pool 50
        self.fc1 = nn.Linear(24*50*50, len(classeNames))

    def forward(self, x):
        x = F.relu(self.bn1(self.conv1(x)))
        x = F.relu(self.bn2(self.conv2(x)))
        x = self.pool(x)
        x = F.relu(self.bn4(self.conv4(x)))
        x = F.relu(self.bn5(self.conv5(x)))
        x = self.pool(x)
        x = x.view(-1, 24*50*50)  # flatten to (batch_size, 60000)
        x = self.fc1(x)
        return x

device = "cuda" if torch.cuda.is_available() else "cpu"
print("Using {} device".format(device))

model = Network_bn().to(device)
model

Using cuda device

Network_bn(
  (conv1): Conv2d(3, 12, kernel_size=(5, 5), stride=(1, 1))
  (bn1): BatchNorm2d(12, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
  (conv2): Conv2d(12, 12, kernel_size=(5, 5), stride=(1, 1))
  (bn2): BatchNorm2d(12, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
  (pool): MaxPool2d(kernel_size=2, stride=2, padding=0, dilation=1, ceil_mode=False)
  (conv4): Conv2d(12, 24, kernel_size=(5, 5), stride=(1, 1))
  (bn4): BatchNorm2d(24, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
  (conv5): Conv2d(24, 24, kernel_size=(5, 5), stride=(1, 1))
  (bn5): BatchNorm2d(24, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
  (fc1): Linear(in_features=60000, out_features=15, bias=True)
)
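Counting parameters by hand shows where this model's capacity sits: a Conv2d layer has in*out*k*k weights plus out biases, a BatchNorm2d layer has a learnable scale and shift per channel (its running statistics are buffers, not parameters), and a Linear layer has in*out weights plus out biases. The plain-Python tally below (helper names are hypothetical) suggests that fc1 alone holds about 97% of the roughly 926k trainable parameters, which may be one reason the training loss later reaches 0.000 while the test loss grows. In PyTorch itself, sum(p.numel() for p in model.parameters()) should give the same total.

```python
def conv_params(c_in, c_out, k):
    return c_in * c_out * k * k + c_out      # weights + biases


def bn_params(c):
    return 2 * c                             # gamma (weight) + beta (bias)


def linear_params(f_in, f_out):
    return f_in * f_out + f_out              # weights + biases


counts = {
    "conv1": conv_params(3, 12, 5), "bn1": bn_params(12),
    "conv2": conv_params(12, 12, 5), "bn2": bn_params(12),
    "conv4": conv_params(12, 24, 5), "bn4": bn_params(24),
    "conv5": conv_params(24, 24, 5), "bn5": bn_params(24),
    "fc1": linear_params(24 * 50 * 50, 15),
}
total = sum(counts.values())
print("total trainable parameters:", total)               # 926331
print(f"share held by fc1: {counts['fc1'] / total:.1%}")  # 97.2%
```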

III. Training the Model

1. Optimizer and loss function

optimizer = torch.optim.Adam(model.parameters(), lr=0.0001, weight_decay=0.0001)

loss_model = nn.CrossEntropyLoss()
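nn.CrossEntropyLoss takes raw logits (which is why the model ends with a plain Linear layer and no softmax), combines LogSoftmax and NLLLoss, and by default averages the result over the batch. The plain-Python version below for a single sample (the function name cross_entropy is hypothetical) computes -log(softmax(logits)[target]). It also helps read the training log: with 15 classes and near-uniform predictions at initialization, the loss is about ln 15 ≈ 2.708, close to the 2.780 printed at the start of epoch 1.

```python
import math


def cross_entropy(logits, target):
    """Softmax cross-entropy for one sample, computed stably via log-sum-exp."""
    m = max(logits)                                   # subtract the max to avoid overflow in exp
    log_sum_exp = m + math.log(sum(math.exp(z - m) for z in logits))
    return log_sum_exp - logits[target]               # = -log(softmax(logits)[target])


print(round(cross_entropy([2.0, 1.0, 0.1], target=0), 4))
# Uniform logits over 15 classes: the loss equals ln(15)
print(round(cross_entropy([0.0] * 15, target=3), 4), round(math.log(15), 4))
```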

def test(model, test_loader, loss_model):
    size = len(test_loader.dataset)
    num_batches = len(test_loader)
    model.eval()
    test_loss, correct = 0, 0
    with torch.no_grad():
        for X, y in test_loader:
            X, y = X.to(device), y.to(device)
            pred = model(X)
            test_loss += loss_model(pred, y).item()
            correct += (pred.argmax(1) == y).type(torch.float).sum().item()
    test_loss /= num_batches
    correct /= size
    print(f"Test Error: \n Accuracy: {(100*correct):>0.1f}%, Avg loss: {test_loss:>8f} \n")
    return correct, test_loss

def train(model, train_loader, loss_model, optimizer):
    model = model.to(device)
    model.train()
    for i, (images, labels) in enumerate(train_loader, 0):
        # torch.autograd.Variable is deprecated; tensors can be passed to the model directly
        images = images.to(device)
        labels = labels.to(device)
        optimizer.zero_grad()
        outputs = model(images)
        loss = loss_model(outputs, labels)
        loss.backward()
        optimizer.step()
        if i % 1000 == 0:  # with 600 batches per epoch this only prints at i = 0
            print('[%5d] loss: %.3f' % (i, loss.item()))
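Note that test() reports accuracy per sample (correct / size) but loss as a mean of per-batch means (test_loss / num_batches, where each batch loss is already a batch mean under the default reduction='mean'). The two kinds of average coincide only when every batch has the same size, which holds here because 2400 = 150 x 16. The plain-Python sketch below uses made-up per-sample losses (not results from this article) to show how they diverge when the last batch is smaller.

```python
# Hypothetical per-sample losses split into batches of 4, 4 and 1 samples.
batches = [[0.2, 0.4, 0.6, 0.8], [0.1, 0.1, 0.1, 0.1], [3.0]]

# What test() does: average the batch means, so each batch counts equally.
mean_of_batch_means = sum(sum(b) / len(b) for b in batches) / len(batches)

# Per-sample average: every sample counts equally.
all_losses = [x for b in batches for x in b]
per_sample_mean = sum(all_losses) / len(all_losses)

print(round(mean_of_batch_means, 4), round(per_sample_mean, 4))  # 1.2 0.6
```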

2. Training the model

test_acc_list = []
epochs = 30
for t in range(epochs):
    print(f"Epoch {t+1}\n-------------------------------")
    train(model, train_loader, loss_model, optimizer)
    test_acc, test_loss = test(model, test_loader, loss_model)
    test_acc_list.append(test_acc)
print("Done!")

Epoch 1
-------------------------------
[    0] loss: 2.780
Test Error:
 Accuracy: 85.8%, Avg loss: 0.440920

Epoch 2
-------------------------------
[    0] loss: 0.468
Test Error:
 Accuracy: 89.2%, Avg loss: 0.377265

......

Epoch 29
-------------------------------
[    0] loss: 0.000
Test Error:
 Accuracy: 91.2%, Avg loss: 0.885408

Epoch 30
-------------------------------
[    0] loss: 0.000
Test Error:
 Accuracy: 91.8%, Avg loss: 0.660563

Done!

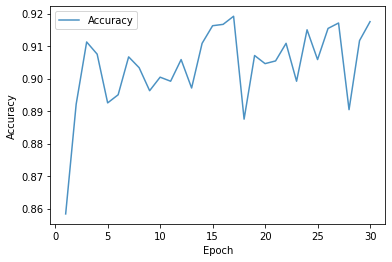
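The log shows the training loss reaching 0.000 while the test loss rises from about 0.38 (epoch 2) to 0.66-0.89 (epochs 29-30), a typical sign of overfitting. One common remedy is to keep the weights from the best epoch, or to stop once the evaluation loss has not improved for a few epochs. The plain-Python sketch below shows only that bookkeeping; the EarlyStopping class, the patience value and the loss curve are illustrative assumptions, and in PyTorch you would typically save model.state_dict() whenever an improvement is recorded. Strictly speaking, a separate validation split, rather than the test set, should drive this choice.

```python
class EarlyStopping:
    """Hypothetical helper: track the best evaluation loss and signal when to stop."""

    def __init__(self, patience=3):
        self.patience = patience          # epochs to wait without improvement
        self.best_loss = float("inf")
        self.best_epoch = None
        self.bad_epochs = 0

    def step(self, epoch, val_loss):
        """Record one epoch; return True if training should stop."""
        if val_loss < self.best_loss:
            # Improvement: this is where model.state_dict() would be saved.
            self.best_loss, self.best_epoch = val_loss, epoch
            self.bad_epochs = 0
        else:
            self.bad_epochs += 1
        return self.bad_epochs >= self.patience


# Made-up loss curve for illustration only (not the article's numbers).
losses = [0.50, 0.40, 0.38, 0.41, 0.45, 0.52, 0.60]
stopper = EarlyStopping(patience=3)
for epoch, loss in enumerate(losses, start=1):
    if stopper.step(epoch, loss):
        print(f"stop at epoch {epoch}; best epoch {stopper.best_epoch} (loss {stopper.best_loss})")
        break
```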

IV. Result Analysis

import numpy as np
import matplotlib.pyplot as plt

x = list(range(1, len(test_acc_list) + 1))  # epoch numbers 1..30
plt.plot(x, test_acc_list, label="Accuracy", alpha=0.8)
plt.xlabel("Epoch")
plt.ylabel("Accuracy")
plt.legend()
plt.show()

Copyright belongs to the original author K同学啊. In case of infringement, please contact us for removal.