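1. Installing Hue

1.1 Upload and extract the installation package

Hue can be installed in several ways, for example from rpm packages, from a tar.gz archive, or through Cloudera Manager. Here we install it from the tar.gz archive.

Download location for the Hue archives:

http://archive.cloudera.com/cdh5/cdh/5/

We use the release that matches CDH 5.14.0. The exact download URL is: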

http://archive.cloudera.com/cdh5/cdh/5/hue-3.9.0-cdh5.14.0.tar.gz

tar -zxf hue-3.9.0-cdh5.14.0.tar.gz
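Later steps cd into /export/servers/hue-3.9.0-cdh5.14.0, so the archive is expected to be unpacked under /export/servers. The following is a minimal sketch that assumes that layout. extract_hue and INSTALL_ROOT are illustrative names, not part of Hue.

```shell
#!/usr/bin/env bash
# Illustrative helper (not part of Hue): unpack the Hue tarball under the
# directory that the later steps cd into. Override INSTALL_ROOT if needed.
INSTALL_ROOT="${INSTALL_ROOT:-/export/servers}"

extract_hue() {
  local tarball="$1"          # path to hue-3.9.0-cdh5.14.0.tar.gz
  mkdir -p "$INSTALL_ROOT" || return 1
  # -C extracts into INSTALL_ROOT regardless of the current directory
  tar -zxf "$tarball" -C "$INSTALL_ROOT"
}
```

After downloading, `extract_hue hue-3.9.0-cdh5.14.0.tar.gz` should leave the sources in /export/servers/hue-3.9.0-cdh5.14.0.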

1.2 Install the required dependency packages (requires Internet access)

yum install -y asciidoc cyrus-sasl-devel cyrus-sasl-gssapi cyrus-sasl-plain gcc gcc-c++ krb5-devel libffi-devel libxml2-devel libxslt-devel make openldap-devel python-devel sqlite-devel gmp-devel
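If yum reports errors, it can help to check which packages are still missing before building. The following sketch uses `rpm -q`. missing_packages is a hypothetical helper name.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print every package name that rpm does not report as
# installed. Prints nothing when all listed packages are present.
missing_packages() {
  local pkg
  for pkg in "$@"; do
    rpm -q "$pkg" >/dev/null 2>&1 || echo "$pkg"
  done
}
```

Pass it the same package list as the yum command, for example `missing_packages gcc gcc-c++ python-devel sqlite-devel`. An empty result means everything is installed.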

1.3 Initial Hue configuration

cd /export/servers/hue-3.9.0-cdh5.14.0/desktop/conf

vim hue.ini

Adjust the following settings to match your environment:

# General settings

[desktop]

secret_key=jFE93j;2[290-eiw.KEiwN2s3['d;/.q[eIW^y#e=+Iei*@Mn<qW5o

# Host name used to reach the Hue web UI over HTTP

http_host=node01

# Whether to enable the new Hue 4 interface

is_hue_4=true

# Time zone

time_zone=Asia/Shanghai

server_user=root

server_group=root

default_user=root

default_hdfs_superuser=root

# Use MySQL as Hue's storage database (around line 587 of hue.ini)

[[database]]

# Set the database engine to mysql

engine=mysql

# Node where MySQL runs

host=node01

port=3306

# MySQL user name and password

user=root

password=hadoop

# MySQL database name

name=hue
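The secret_key above is a sample value. Hue's own hue.ini describes this setting as a random string used for secure hashing in the session store, so you should generate your own. The following sketch assumes `base64` and /dev/urandom are available. generate_secret_key is an illustrative name.

```shell
#!/usr/bin/env bash
# Illustrative helper: print a random 64-character secret_key for hue.ini.
# 48 random bytes encode to exactly 64 base64 characters (A-Z a-z 0-9 + /):
# no '=' padding and no '#', which INI-style parsers often treat as the
# start of a comment.
generate_secret_key() {
  head -c 48 /dev/urandom | base64 | tr -d '\n'
}
```

Paste the output after `secret_key=` in the [desktop] section.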

1.4 Create the MySQL database used by Hue

create database hue default character set utf8 default collate utf8_general_ci;
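The same statement can be run non-interactively from the shell. The following sketch assumes the mysql client is installed on the machine where you run it. `IF NOT EXISTS` makes the statement safe to re-run. create_hue_db and MYSQL_BIN are illustrative names.

```shell
#!/usr/bin/env bash
# Illustrative helper: create Hue's database with the same character set and
# collation as the statement above. -p makes mysql prompt for the password.
create_hue_db() {
  local host="$1" user="$2"
  "${MYSQL_BIN:-mysql}" -h "$host" -u "$user" -p -e \
    "CREATE DATABASE IF NOT EXISTS hue DEFAULT CHARACTER SET utf8 DEFAULT COLLATE utf8_general_ci;"
}
```

`create_hue_db node01 root` prompts for the root password. Setting `MYSQL_BIN=echo` prints the command instead of running it.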

1.5 Build Hue

cd /export/servers/hue-3.9.0-cdh5.14.0

make apps

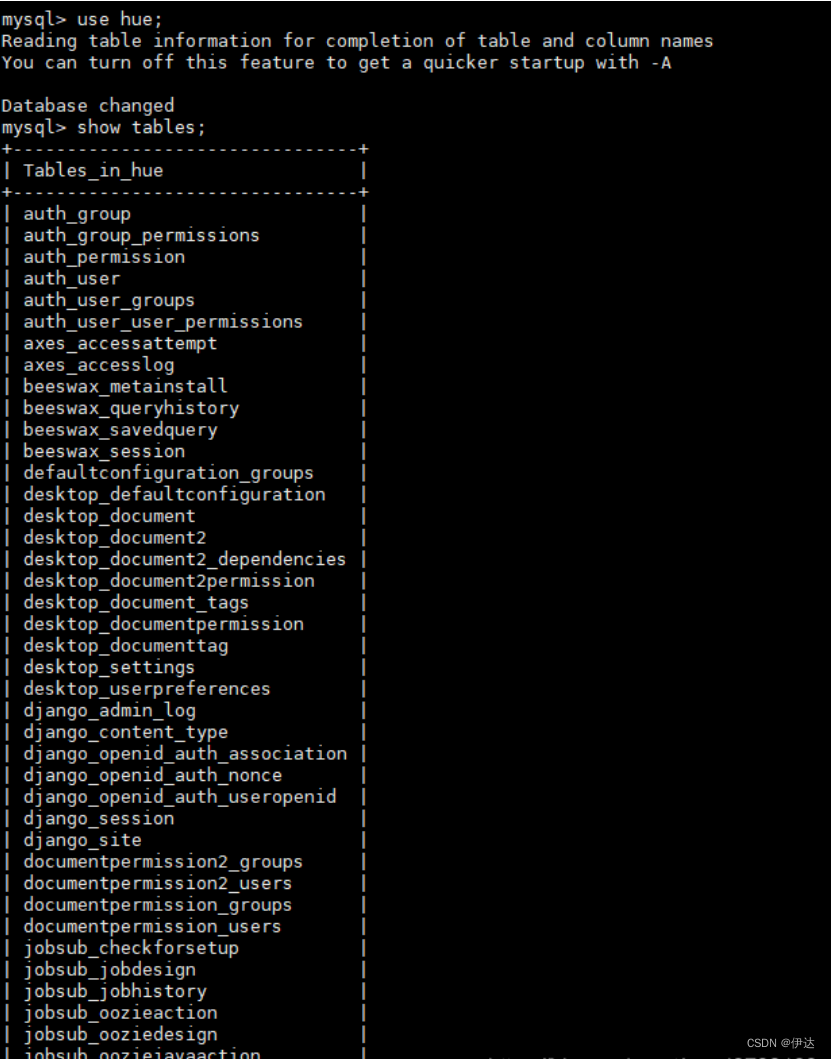

After a successful build, many initial tables are created in the hue database.

1.6 Start Hue and open the web UI

cd /export/servers/hue-3.9.0-cdh5.14.0

Start in the foreground:

./build/env/bin/supervisor

Start in the background:

./build/env/bin/supervisor &

Web UI URL:

http://node01:8888
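When started with a plain `&`, the supervisor stays tied to the terminal session and may stop when you log out. A common alternative is to start it with `nohup`, send its output to a log file, and record the PID. The following sketch assumes Hue lives at the path used throughout this guide. start_hue_background, hue-supervisor.log and hue-supervisor.pid are illustrative names.

```shell
#!/usr/bin/env bash
# Illustrative helper: run Hue's supervisor detached from the terminal.
HUE_HOME="${HUE_HOME:-/export/servers/hue-3.9.0-cdh5.14.0}"

start_hue_background() {
  (
    cd "$HUE_HOME" || exit 1
    # nohup ignores SIGHUP, so closing the terminal does not stop Hue
    nohup ./build/env/bin/supervisor >"$HUE_HOME/hue-supervisor.log" 2>&1 &
    # $! is the PID of the background supervisor process
    echo $! >"$HUE_HOME/hue-supervisor.pid"
  )
}
```

Run `start_hue_background`, then `tail -f /export/servers/hue-3.9.0-cdh5.14.0/hue-supervisor.log` to watch Hue come up.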

2. Integrating Hue with other components

2.1 Integrating Hue with HDFS

2.1.1 Edit core-site.xml

<!-- Hosts allowed to access HDFS via HttpFS -->

<property>

<name>hadoop.proxyuser.root.hosts</name>

<value>*</value>

</property>

<!-- User groups allowed to access HDFS via HttpFS -->

<property>

<name>hadoop.proxyuser.root.groups</name>

<value>*</value>

</property>

2.1.2 Edit hdfs-site.xml

<property> <name>dfs.webhdfs.enabled</name> <value>true</value> </property>

2.1.3 Edit hue.ini

[[hdfs_clusters]]

[[[default]]]

fs_defaultfs=hdfs://node01:8020

webhdfs_url=http://node01:50070/webhdfs/v1

hadoop_hdfs_home=/export/servers/hadoop-2.6.0-cdh5.14.0

hadoop_bin=/export/servers/hadoop-2.6.0-cdh5.14.0/bin

hadoop_conf_dir=/export/servers/hadoop-2.6.0-cdh5.14.0/etc/hadoop
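Before restarting Hue, you can confirm that the webhdfs_url configured above responds. WebHDFS URLs take the form http://<host>:<port>/webhdfs/v1/<path>?op=<OPERATION>. With simple (non-Kerberos) authentication, the user can be passed as `user.name`. In the following sketch, webhdfs_url is an illustrative helper. Its default user, root, mirrors the proxy-user settings above.

```shell
#!/usr/bin/env bash
# Illustrative helper: build a WebHDFS REST URL.
#   $1 host  $2 HTTP port  $3 absolute HDFS path  $4 operation  $5 user (default root)
webhdfs_url() {
  local host="$1" port="$2" path="$3" op="$4" user="${5:-root}"
  printf 'http://%s:%s/webhdfs/v1%s?op=%s&user.name=%s\n' \
    "$host" "$port" "$path" "$op" "$user"
}
```

For example, `curl -s "$(webhdfs_url node01 50070 / LISTSTATUS)"` should return a JSON document with a FileStatuses object that lists the HDFS root directory.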

2.1.4 Restart HDFS and Hue

Start HDFS:

start-dfs.sh

Start the Hue process:

cd /export/servers/hue-3.9.0-cdh5.14.0

./build/env/bin/supervisor

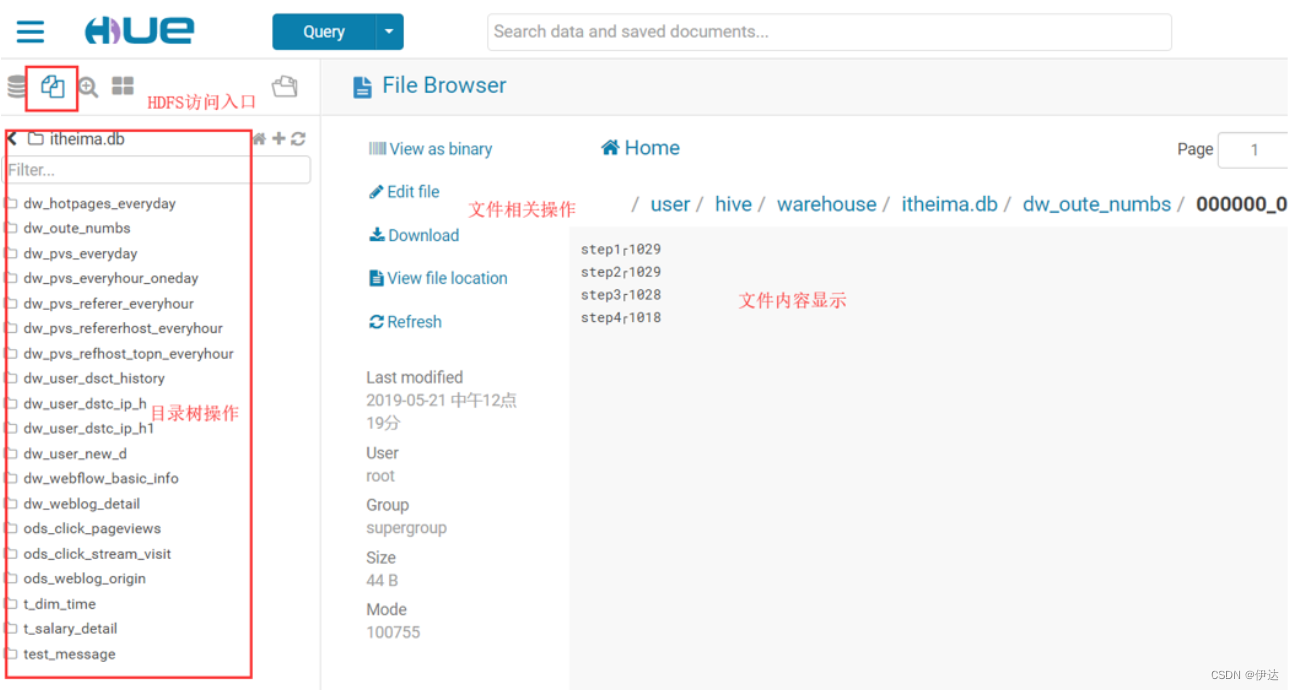

2.1.5 Check in the web UI

2.2 Integrating Hue with YARN

2.2.1 Edit hue.ini

[[yarn_clusters]]

[[[default]]]

resourcemanager_host=node01

resourcemanager_port=8032

submit_to=True

resourcemanager_api_url=http://node01:8088

history_server_api_url=http://node01:19888
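resourcemanager_api_url and history_server_api_url point to the REST APIs of the ResourceManager and the MapReduce JobHistory Server. Their information endpoints are /ws/v1/cluster/info and /ws/v1/history/info, which makes a quick reachability test possible. In the following sketch, yarn_info_urls is an illustrative name.

```shell
#!/usr/bin/env bash
# Illustrative helper: print the info endpoints for the two API URLs set in
# [[yarn_clusters]], one per line, after stripping a trailing slash.
yarn_info_urls() {
  local rm_api="${1%/}" history_api="${2%/}"
  printf '%s/ws/v1/cluster/info\n' "$rm_api"
  printf '%s/ws/v1/history/info\n' "$history_api"
}
```

For example: `yarn_info_urls http://node01:8088 http://node01:19888 | while read -r url; do curl -s "$url"; echo; done`.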

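2.2.2 Enable YARN log aggregation

MapReduce runs on many machines, and the logs it produces stay on the machines where they were written. Log aggregation collects these logs into HDFS so you can view the logs from every machine in one place. Add the following to yarn-site.xml:

<!-- Whether to enable log aggregation -->

<property>

<name>yarn.log-aggregation-enable</name>

<value>true</value>

</property>

<!-- How long to keep aggregated logs, in seconds -->

<property>

<name>yarn.log-aggregation.retain-seconds</name>

<value>106800</value>

</property>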
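yarn.log-aggregation.retain-seconds is given in seconds. The value 106800 equals 29 hours 40 minutes, or about 1.24 days. If you think of retention in days, the value is days × 86400. The following sketch uses illustrative helper names.

```shell
#!/usr/bin/env bash
# Illustrative helper: convert a retention period in days into the seconds
# value expected by yarn.log-aggregation.retain-seconds.
retain_seconds_for_days() {
  echo $(( $1 * 24 * 60 * 60 ))
}

# Illustrative helper: show a seconds value as whole hours and minutes.
seconds_as_hours_minutes() {
  printf '%dh %dm\n' $(( $1 / 3600 )) $(( $1 % 3600 / 60 ))
}
```

After YARN restarts, you can read the aggregated logs of a finished application with `yarn logs -applicationId <application ID>`.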

2.2.3 Restart YARN and Hue

Start YARN:

start-yarn.sh

Start the Hue process:

cd /export/servers/hue-3.9.0-cdh5.14.0

./build/env/bin/supervisor

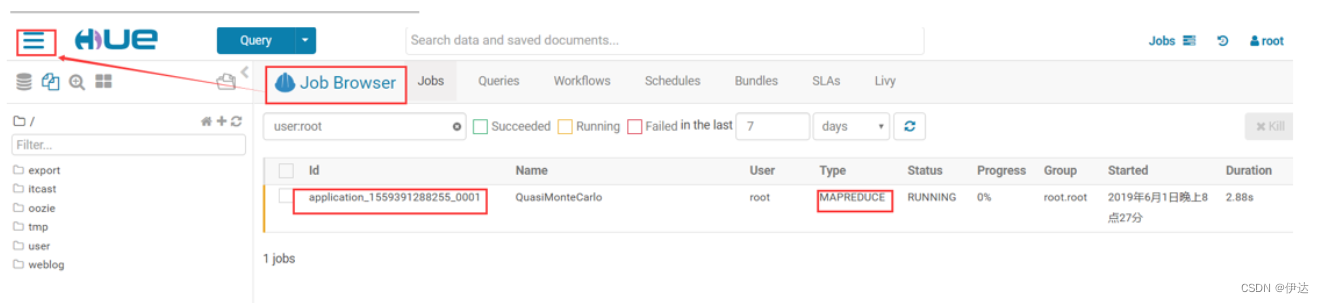

2.2.4 Check in the web UI

2.3 Integrating Hue with Hive

To integrate Hue with Hive, start Hive's metastore service and its HiveServer2 service. Impala needs the Hive metastore service, and Hue needs HiveServer2.

2.3.1 Edit hue.ini

[beeswax]

hive_server_host=node01

hive_server_port=10000

hive_conf_dir=/export/servers/hive-1.1.0-cdh5.14.0/conf

server_conn_timeout=120

auth_username=root

auth_password=hadoop

[metastore]

# Turn on the new version of the create-table wizard, for creating databases, tables, etc. from Hue

enable_new_create_table=true

2.3.2 Start the Hive services and restart Hue

Start Hive's metastore and HiveServer2 services on node01.

Start the metastore and HiveServer2 services in the background:

cd /export/servers/hive-1.1.0-cdh5.14.0

nohup bin/hive --service metastore &

nohup bin/hive --service hiveserver2 &

Start the Hue process:

cd /export/servers/hue-3.9.0-cdh5.14.0

./build/env/bin/supervisor

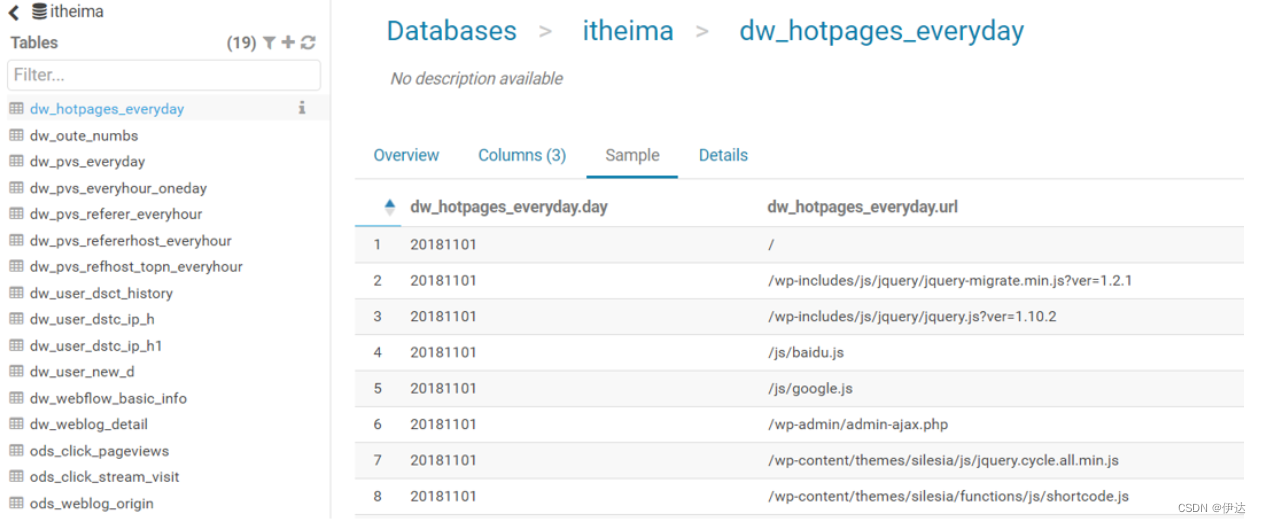

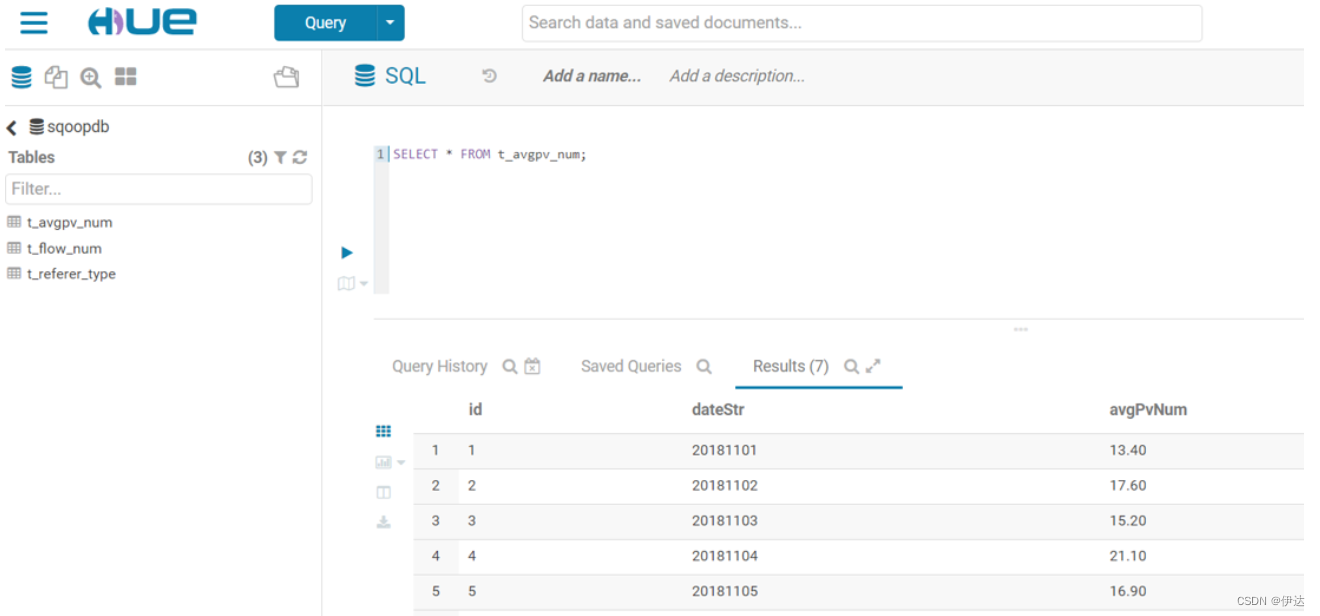
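HiveServer2 can take some time to start listening after the nohup command returns, and Hue cannot connect until it does. The following sketch is a wait loop that assumes bash (for its /dev/tcp redirection) and the coreutils `timeout` command. wait_for_port is an illustrative name. HiveServer2 listens on 10000, as set in hive_server_port above. 9083 is the metastore's default port, assuming hive.metastore.uris has not been changed.

```shell
#!/usr/bin/env bash
# Illustrative helper: poll host:port until a TCP connection succeeds.
#   $1 host  $2 port  $3 attempts (default 30), one second apart
# Returns 0 once the port accepts connections, 1 if it never does.
wait_for_port() {
  local host="$1" port="$2" attempts="${3:-30}" i
  for (( i = 1; i <= attempts; i++ )); do
    # bash's /dev/tcp redirection opens a TCP connection; timeout bounds it
    if timeout 3 bash -c "exec 3<>/dev/tcp/$host/$port" 2>/dev/null; then
      return 0
    fi
    (( i < attempts )) && sleep 1
  done
  return 1
}
```

For example: `wait_for_port node01 9083 && wait_for_port node01 10000 && echo "Hive services are up"`.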

2.3.3 Check in the web UI

2.4 Integrating Hue with MySQL

2.4.1 Edit hue.ini

Uncomment the mysql section, located around line 1546:

[[[mysql]]]

nice_name="My SQL DB"

engine=mysql

host=node01

port=3306

user=root

password=hadoop

2.4.2 Restart Hue

Start the Hue process:

cd /export/servers/hue-3.9.0-cdh5.14.0/

./build/env/bin/supervisor

2.4.3 Check in the web UI

2.5 Integrating Hue with Oozie

2.5.1 Edit the Hue configuration file hue.ini

[liboozie]

# The URL where the Oozie service runs on. This is required in order for
# users to submit jobs. Empty value disables the config check.
oozie_url=http://node01:11000/oozie

# Requires FQDN in oozie_url if enabled
security_enabled=false

# Location on HDFS where the workflows/coordinator are deployed when submitted.
remote_deployement_dir=/export/service/oozie/oozie_works

[oozie]

# Location on local FS where the examples are stored.
local_data_dir=/export/servers/oozie-4.1.0-cdh5.14.0/examples/apps

# Location on local FS where the data for the examples is stored.
sample_data_dir=/export/servers/oozie-4.1.0-cdh5.14.0/examples/input-data

# Location on HDFS where the oozie examples and workflows are stored.
# Parameters are $TIME and $USER, e.g. /user/$USER/hue/workspaces/workflow-$TIME
remote_data_dir=/user/root/oozie_works/examples/apps

# Maximum of Oozie workflows or coordinators to retrieve in one API call.
oozie_jobs_count=100

# Use Cron format for defining the frequency of a Coordinator instead of the old frequency number/unit.
enable_cron_scheduling=true

# Flag to enable the saved Editor queries to be dragged and dropped into a workflow.
enable_document_action=true

# Flag to enable Oozie backend filtering instead of doing it at the page level in Javascript. Requires Oozie 4.3+.
# (The Oozie used in this guide is 4.1.0, so this flag may need to be set to false.)
enable_oozie_backend_filtering=true

# Flag to enable the Impala action.
enable_impala_action=true

[filebrowser]

# Location on local filesystem where the uploaded archives are temporarily stored.
archive_upload_tempdir=/tmp

# Show Download Button for HDFS file browser.
show_download_button=true

# Show Upload Button for HDFS file browser.
show_upload_button=true

# Flag to enable the extraction of an uploaded archive in HDFS.
enable_extract_uploaded_archive=true

2.5.2 Start Hue and Oozie

Start the Hue process:

cd /export/servers/hue-3.9.0-cdh5.14.0

./build/env/bin/supervisor

Start the Oozie process:

cd /export/servers/oozie-4.1.0-cdh5.14.0

./bin/oozied.sh start
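To check that the Oozie server behind oozie_url is up, you can query its Web Services API. The endpoint <oozie_url>/v1/admin/status returns the system mode as JSON, for example {"systemMode":"NORMAL"}. The following sketch extracts that field with sed. oozie_system_mode is an illustrative name, and a JSON tool such as jq would be more robust if it is installed.

```shell
#!/usr/bin/env bash
# Illustrative helper: read the JSON body of GET <oozie_url>/v1/admin/status
# on stdin and print the value of "systemMode" (for example NORMAL).
oozie_system_mode() {
  sed -n 's/.*"systemMode"[[:space:]]*:[[:space:]]*"\([A-Z]*\)".*/\1/p'
}
```

Once Oozie is running, `curl -s http://node01:11000/oozie/v1/admin/status | oozie_system_mode` should print NORMAL. The Oozie CLI offers the same check: `oozie admin -oozie http://node01:11000/oozie -status`.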

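2.5.3 Check in the web UI

2.6 Integrating Hue with HBase

2.6.1 Edit the HBase configuration

Add the following to hbase-site.xml to enable proxy-user support and the HTTP transport for the HBase Thrift service.

After making the change, scp the file to the HBase installations on the other machines.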

<property>
  <name>hbase.thrift.support.proxyuser</name>
  <value>true</value>
</property>
<property>
  <name>hbase.regionserver.thrift.http</name>
  <value>true</value>
</property>

2.6.2 Edit the Hadoop configuration

In core-site.xml, authorize HBase as a proxy user by adding the following:

<property>
  <name>hadoop.proxyuser.hbase.hosts</name>
  <value>*</value>
</property>
<property>
  <name>hadoop.proxyuser.hbase.groups</name>
  <value>*</value>
</property>

Copy (scp) the modified file to the other machines, and also into the conf directory of the HBase installation.
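Copying edited files to several machines is easy to repeat with a loop. In the following sketch, node02 and node03 are hypothetical host names, because this guide only names node01. The sketch also assumes that the installation paths are identical on every node.

```shell
#!/usr/bin/env bash
# Illustrative helper: copy one file to the same directory on several hosts.
#   $1 local file  $2 remote directory  $3... host names
# Stops at the first host where scp fails.
sync_to_nodes() {
  local file="$1" dest_dir="$2" host
  shift 2
  for host in "$@"; do
    scp "$file" "${host}:${dest_dir}/" || return 1
  done
}
```

For example, `sync_to_nodes /export/servers/hadoop-2.6.0-cdh5.14.0/etc/hadoop/core-site.xml /export/servers/hadoop-2.6.0-cdh5.14.0/etc/hadoop node02 node03`. The local copy into HBase's conf directory (/export/servers/hbase-1.2.1/conf, as in hbase_conf_dir in hue.ini) is a plain `cp`.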

2.6.3 Edit the Hue configuration

[hbase]

# Comma-separated list of HBase Thrift servers for clusters in the format of '(name|host:port)'.
# Use full hostname with security.
# If using Kerberos we assume GSSAPI SASL, not PLAIN.
hbase_clusters=(Cluster|node01:9090)

# HBase configuration directory, where hbase-site.xml is located.
hbase_conf_dir=/export/servers/hbase-1.2.1/conf

# Hard limit of rows or columns per row fetched before truncating.
truncate_limit = 500

# 'buffered' is the default of the HBase Thrift Server and supports security.
# 'framed' can be used to chunk up responses,
# which is useful when used in conjunction with the nonblocking server in Thrift.
thrift_transport=buffered

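2.6.4 Start HBase (including the Thrift service) and Hue

Start HDFS and HBase first, then start the Thrift server. Run the last command from HBase's bin directory:

start-dfs.sh

start-hbase.sh

./hbase-daemon.sh start thrift

Restart Hue: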

cd /export/servers/hue-3.9.0-cdh5.14.0/

./build/env/bin/supervisor
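jps, which ships with the JDK, lists running JVMs as "<pid> <main class>". The HBase Thrift server appears there as ThriftServer, alongside HBase daemons such as HMaster. By default it listens on port 9090, the port used in hbase_clusters above. In the following sketch, jvm_running is an illustrative name.

```shell
#!/usr/bin/env bash
# Illustrative helper: succeed if jps lists a JVM whose main class name is
# exactly $1 (jps prints "<pid> <MainClass>" per line).
jvm_running() {
  jps | awk -v name="$1" '$2 == name { found = 1 } END { exit !found }'
}
```

For example: `jvm_running ThriftServer && echo "HBase Thrift server is running"`.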

2.6.5 Check in the web UI

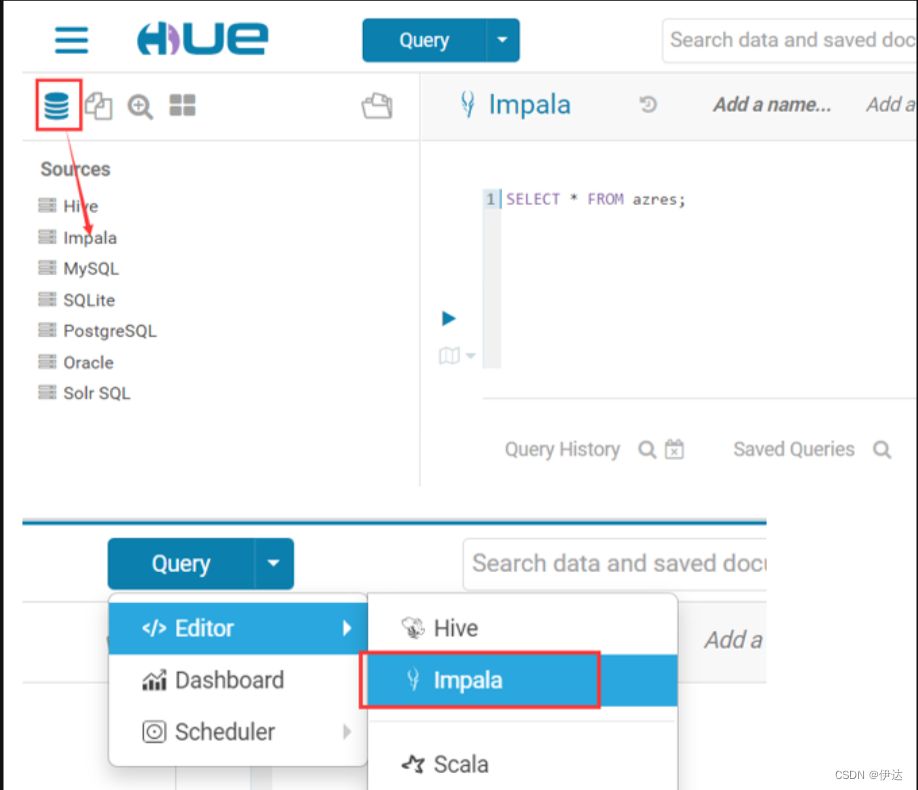

2.7 Integrating Hue with Impala

2.7.1 Edit the Hue configuration file hue.ini

[impala]

server_host=node01

server_port=21050

impala_conf_dir=/etc/impala/conf

2.7.2 Restart Hue

cd /export/servers/hue-3.9.0-cdh5.14.0/

./build/env/bin/supervisor
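With every component connected, a one-shot check of the ports Hue uses can save time when a page in the web UI fails to load. The following sketch assumes bash and the coreutils `timeout` command. check_endpoints is an illustrative name. The example list comes from the values used in this guide: Impala's server_port 21050, HiveServer2's 10000, the HBase Thrift port 9090, and so on.

```shell
#!/usr/bin/env bash
# Illustrative helper: read "name host port" lines on stdin and report
# whether each TCP port accepts connections.
check_endpoints() {
  local name host port status
  while read -r name host port; do
    [ -z "$name" ] && continue          # skip blank lines
    # </dev/null keeps the probe from consuming the list being read
    if timeout 3 bash -c "exec 3<>/dev/tcp/$host/$port" </dev/null 2>/dev/null; then
      status=UP
    else
      status=DOWN
    fi
    printf '%-12s %s:%s %s\n' "$name" "$host" "$port" "$status"
  done
}

# Example (not run here):
# check_endpoints <<'EOF'
# hue          node01 8888
# hiveserver2  node01 10000
# impala       node01 21050
# hbase-thrift node01 9090
# oozie        node01 11000
# namenode-ui  node01 50070
# EOF
```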

2.7.3 Check in the web UI

Copyright belongs to the original author, Allen_lixl. If this infringes your rights, please contact us to have it removed.