I. Prepare the installation packages:

scala-2.11.8.tgz, spark-3.2.1-bin-hadoop2.7.tgz, hadoop-2.7.1.tar.gz, jdk-8u152-linux-x64.tar.gz

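II. Create a CentOS 7 virtual machine and configure its IP address

III. Connect to the VM with Xshell and upload the packages (the commands below assume they are in /opt/software/)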

IV. Install the JDK

1. Extract the JDK

[root@master ~]# tar -zxvf /opt/software/jdk-8u152-linux-x64.tar.gz -C /usr/local/src/

[root@master ~]# ls /usr/local/src/

jdk1.8.0_152

2. Set the Java environment variables

[root@master ~]# vi /etc/profile

# Append the following two lines to the end of the file

export JAVA_HOME=/usr/local/src/jdk1.8.0_152 # adjust to your own path

export PATH=$PATH:$JAVA_HOME/bin
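Editing /etc/profile by hand works, but re-running a setup step tends to stack duplicate export lines. Below is a minimal sketch of an idempotent alternative, assuming bash and GNU grep; `append_once` is a hypothetical helper name, not a system command.

```shell
# append_once FILE LINE (hypothetical helper):
# append LINE to FILE only if an identical line is not already present,
# so running the setup twice does not duplicate exports in /etc/profile.
append_once() {
  local file="$1" line="$2"
  # -q: quiet, -x: match the whole line, -F: treat LINE as a fixed string
  grep -qxF -- "$line" "$file" 2>/dev/null || printf '%s\n' "$line" >> "$file"
}

# Example (as root; single quotes keep $PATH and $JAVA_HOME unexpanded in the file):
# append_once /etc/profile 'export JAVA_HOME=/usr/local/src/jdk1.8.0_152'
# append_once /etc/profile 'export PATH=$PATH:$JAVA_HOME/bin'
```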

3. Run source so the settings take effect:

[root@master ~]# source /etc/profile

4. Check that Java is available:

[root@master ~]# echo $JAVA_HOME

/usr/local/src/jdk1.8.0_152

[root@master ~]# java -version

java version "1.8.0_152"

Java(TM) SE Runtime Environment (build 1.8.0_152-b16)

Java HotSpot(TM) 64-Bit Server VM (build 25.152-b16, mixed mode)

If the Java version is printed as above, the JDK is installed and configured correctly.
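If `java -version` fails or reports a different version, the first thing to rule out is a wrong JAVA_HOME. A small sketch, assuming bash; `check_java_home` is a hypothetical helper that only checks that the directory contains an executable bin/java.

```shell
# check_java_home [DIR] (hypothetical helper):
# succeed only if DIR (default: $JAVA_HOME) contains an executable bin/java.
check_java_home() {
  local dir="${1:-$JAVA_HOME}"
  if [ -z "$dir" ]; then
    echo "JAVA_HOME is not set" >&2
    return 1
  fi
  if [ ! -x "$dir/bin/java" ]; then
    echo "no executable java under $dir/bin" >&2
    return 1
  fi
  echo "OK: $dir/bin/java"
}

# Example: check_java_home /usr/local/src/jdk1.8.0_152
```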

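V. Passwordless SSH login

1. Generate an SSH key pair (public and private key) with the command below. An RSA key is used because DSA keys are disabled by default in OpenSSH 7.0 and later:

[root@master ~]# ssh-keygen -t rsa -P '' -f ~/.ssh/id_rsa

2. Copy master's public key (id_rsa.pub) to master itself for public-key authentication, which enables passwordless login to the local machine. After testing the login, type exit to leave the session:

[root@master ~]# ssh-copy-id -i /root/.ssh/id_rsa.pub master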

[root@master ~]# ssh master

[root@master ~]# exit
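If `ssh master` still prompts for a password, a frequent cause is file permissions: with sshd's default `StrictModes yes`, keys are ignored when ~/.ssh or authorized_keys is writable by other users. A sketch of a fix, assuming GNU coreutils; `fix_ssh_perms` is a hypothetical helper name.

```shell
# fix_ssh_perms [DIR] (hypothetical helper):
# tighten the permissions that sshd's StrictModes check expects.
fix_ssh_perms() {
  local dir="${1:-$HOME/.ssh}"
  chmod 700 "$dir"                                   # only the owner may enter ~/.ssh
  [ -f "$dir/authorized_keys" ] && chmod 600 "$dir/authorized_keys"
  return 0
}

# Example, then a non-interactive check (BatchMode=yes makes ssh fail instead of prompting):
# fix_ssh_perms /root/.ssh
# ssh -o BatchMode=yes master true && echo "passwordless login works"
```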

VI. Install Hadoop

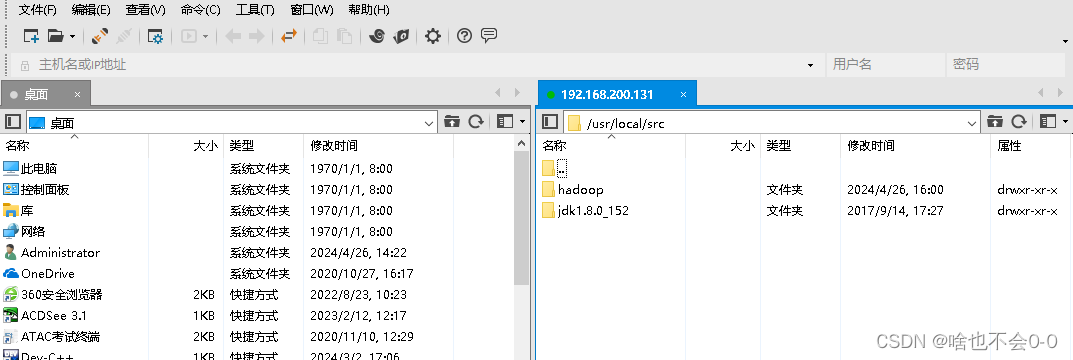

1. Extract Hadoop and rename the directory

[root@master ~]# tar -zxvf /opt/software/hadoop-2.7.1.tar.gz -C /usr/local/src/

[root@master ~]# cd /usr/local/src/

[root@master src]# mv hadoop-2.7.1 hadoop # rename hadoop-2.7.1 to hadoop

[root@master src]# ls

hadoop jdk1.8.0_152

[root@master src]# cd

[root@master ~]# ll /usr/local/src/

total 0

drwxr-xr-x 11 hadoop hadoop 172 Apr 26 16:00 hadoop

drwxr-xr-x 8 hadoop hadoop 255 Sep 14 2017 jdk1.8.0_152
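Instead of extracting and then renaming, GNU tar can drop the top-level hadoop-2.7.1/ directory while extracting. A sketch, assuming GNU tar and an archive whose contents sit under a single top-level directory (as the Hadoop tarball does); `extract_as` is a hypothetical helper name.

```shell
# extract_as ARCHIVE DEST (hypothetical helper):
# unpack a .tar.gz straight into DEST, stripping its single top-level directory.
extract_as() {
  local archive="$1" dest="$2"
  mkdir -p "$dest"
  # --strip-components=1 removes the leading "hadoop-2.7.1/" from every member path
  tar -zxf "$archive" -C "$dest" --strip-components=1
}

# Example: extract_as /opt/software/hadoop-2.7.1.tar.gz /usr/local/src/hadoop
```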

2. Configure the Hadoop environment variables

[root@master ~]# vi /etc/profile

# Append the following two lines to the end of the file

export HADOOP_HOME=/usr/local/src/hadoop # adjust to your own path

export PATH=$PATH:$HADOOP_HOME/bin:$HADOOP_HOME/sbin

3. Run source so the settings take effect, then verify:

[root@master ~]# source /etc/profile

[root@master ~]# hadoop

Usage: hadoop [--config confdir] [COMMAND | CLASSNAME]

CLASSNAME run the class named CLASSNAME

or

where COMMAND is one of:

fs run a generic filesystem user client

version print the version

jar <jar> run a jar file

note: please use "yarn jar" to launch

YARN applications, not this command.

checknative [-a|-h] check native hadoop and compression libraries availability

distcp <srcurl> <desturl> copy file or directories recursively

archive -archiveName NAME -p <parent path> <src>* <dest> create a hadoop archive

classpath prints the class path needed to get the

credential interact with credential providers

Hadoop jar and the required libraries

daemonlog get/set the log level for each daemon

trace view and modify Hadoop tracing settings

Most commands print help when invoked w/o parameters.

If the Hadoop help text above appears, Hadoop is installed.

VII. Configure pseudo-distributed Hadoop

1. Edit /usr/local/src/hadoop/etc/hadoop/hadoop-env.sh

[root@master ~]# cd /usr/local/src/hadoop/etc/hadoop/

[root@master hadoop]# vi hadoop-env.sh

Find the JAVA_HOME line and change it to the JDK installation path:

export JAVA_HOME=/usr/local/src/jdk1.8.0_152
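The same change can be made without opening an editor. A sketch, assuming GNU sed (the default on CentOS 7) and that the file contains an uncommented `export JAVA_HOME=...` line (in the stock 2.7.1 file it typically reads `export JAVA_HOME=${JAVA_HOME}`); `set_hadoop_java_home` is a hypothetical helper name.

```shell
# set_hadoop_java_home FILE JDK_DIR (hypothetical helper):
# rewrite the "export JAVA_HOME=..." line in hadoop-env.sh to a fixed JDK path.
set_hadoop_java_home() {
  local file="$1" jdk="$2"
  # '|' is the sed delimiter so the slashes in the path need no escaping
  sed -i "s|^export JAVA_HOME=.*|export JAVA_HOME=${jdk}|" "$file"
  # fail if no such line existed to be replaced
  grep -qxF "export JAVA_HOME=${jdk}" "$file"
}

# Example:
# set_hadoop_java_home /usr/local/src/hadoop/etc/hadoop/hadoop-env.sh /usr/local/src/jdk1.8.0_152
```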

2. Edit /usr/local/src/hadoop/etc/hadoop/core-site.xml

    <configuration>
      <property>
        <name>fs.defaultFS</name>
        <!-- "master" is the hostname mapping; you can use your own IP address instead -->
        <value>hdfs://master</value>
      </property>
      <property>
        <name>hadoop.tmp.dir</name>
        <!-- adjust this path to your own installation -->
        <value>/usr/local/src/hadoop/tmp</value>
      </property>
    </configuration>

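3. Edit /usr/local/src/hadoop/etc/hadoop/hdfs-site.xml. A pseudo-distributed setup has a single DataNode, which can hold only one replica of each block, so the replication factor is 1:

    <configuration>
      <property>
        <name>dfs.replication</name>
        <value>1</value>
      </property>
    </configuration>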

4. Rename mapred-site.xml.template to mapred-site.xml and configure it

[root@master hadoop]# mv mapred-site.xml.template mapred-site.xml

[root@master hadoop]# vi mapred-site.xml

    <configuration>
      <property>
        <name>mapreduce.framework.name</name>
        <value>yarn</value>
      </property>
    </configuration>

5. Edit /usr/local/src/hadoop/etc/hadoop/yarn-site.xml

    <configuration>
      <property>
        <name>yarn.nodemanager.aux-services</name>
        <value>mapreduce_shuffle</value>
      </property>
    </configuration>
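When the same VM has to be rebuilt often, the *-site.xml files can also be generated from name/value pairs. A sketch, assuming bash; `write_site_xml` is a hypothetical helper, it overwrites the target file (dropping the stock license header and stylesheet line, which Hadoop does not need), and it does not XML-escape values.

```shell
# write_site_xml FILE NAME VALUE [NAME VALUE ...] (hypothetical helper):
# write a complete Hadoop configuration file with one <property> per pair.
write_site_xml() {
  local file="$1"; shift
  if [ $(( $# % 2 )) -ne 0 ]; then
    echo "usage: write_site_xml FILE NAME VALUE [NAME VALUE ...]" >&2
    return 1
  fi
  {
    echo '<?xml version="1.0"?>'
    echo '<configuration>'
    while [ $# -gt 0 ]; do
      printf '  <property>\n    <name>%s</name>\n    <value>%s</value>\n  </property>\n' "$1" "$2"
      shift 2
    done
    echo '</configuration>'
  } > "$file"
}

# Example, using the values from the steps above:
# cd /usr/local/src/hadoop/etc/hadoop
# write_site_xml core-site.xml fs.defaultFS hdfs://master hadoop.tmp.dir /usr/local/src/hadoop/tmp
# write_site_xml mapred-site.xml mapreduce.framework.name yarn
# write_site_xml yarn-site.xml yarn.nodemanager.aux-services mapreduce_shuffle
```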

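6. Edit /usr/local/src/hadoop/etc/hadoop/slaves

This file lists the hosts that run a DataNode and NodeManager. Here, too, it uses the hostname mapping (master); you can use your own IP address instead.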

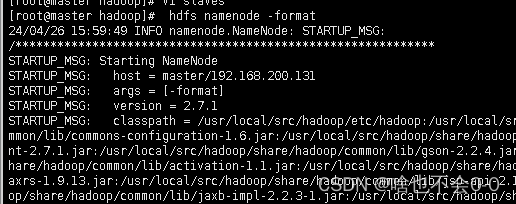

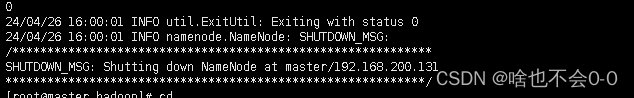

7. Format HDFS

[root@master hadoop]# hdfs namenode -format

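8. Start the cluster, check the processes with jps, and open the web UI (in Hadoop 2.x, start-all.sh is deprecated but still works: it calls start-dfs.sh and start-yarn.sh)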

[root@master ~]# start-all.sh

[root@master ~]# jps

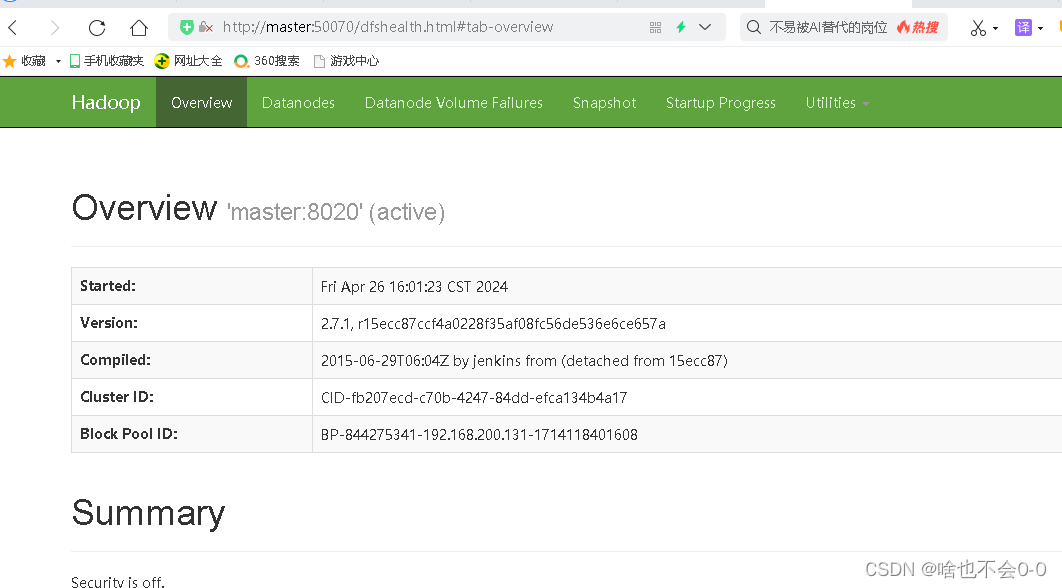
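Instead of reading the jps list by eye, the expected daemons can be checked by name. A sketch, assuming bash and awk; `check_daemons` is a hypothetical helper, and the daemon list in the example is what a pseudo-distributed Hadoop 2.7 started with start-all.sh typically shows.

```shell
# check_daemons NAME... (hypothetical helper):
# read `jps` output on stdin and print every expected daemon that is missing.
check_daemons() {
  local out missing=0 d
  out=$(cat)
  for d in "$@"; do
    # jps prints "<pid> <MainClass>", so compare the class name in field 2
    if ! printf '%s\n' "$out" | awk -v n="$d" '$2 == n { found = 1 } END { exit !found }'; then
      echo "missing: $d"
      missing=1
    fi
  done
  return "$missing"
}

# Example after start-all.sh:
# jps | check_daemons NameNode DataNode SecondaryNameNode ResourceManager NodeManager
# The same check works for the Spark standalone daemons: jps | check_daemons Master Worker
```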

9. Enter http://master:50070 in the browser's address bar; the page shows NameNode and DataNode information.

VIII. Install Spark in pseudo-distributed mode

1. Extract Spark

[root@master ~]# tar -zxf /opt/software/spark-3.2.1-bin-hadoop2.7.tgz -C /usr/local/

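2. Copy spark-env.sh.template to spark-env.sh and configure it

[root@master ~]# cd /usr/local/spark-3.2.1-bin-hadoop2.7/conf

[root@master conf]# cp spark-env.sh.template spark-env.sh

[root@master conf]# vi spark-env.sh

export JAVA_HOME=/usr/local/src/jdk1.8.0_152 # adjust to your own path

export HADOOP_HOME=/usr/local/src/hadoop # adjust to your own path, and check whether you renamed the hadoop directory

export HADOOP_CONF_DIR=/usr/local/src/hadoop/etc/hadoop # adjust to your own path

export SPARK_MASTER_HOST=master

export SPARK_LOCAL_IP=master

Leave a space before each # comment: in the shell, # starts a comment only at the beginning of a word, so a # glued to the value becomes part of the value. SPARK_MASTER_HOST replaces the deprecated SPARK_MASTER_IP.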
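A `#` glued to a value (for example `export JAVA_HOME=/usr/local/src/jdk1.8.0_152#comment`) silently corrupts the variable, because the shell only treats `#` as a comment at the start of a word. A sketch of a quick check for such lines, assuming GNU grep; `find_glued_comments` is a hypothetical helper name.

```shell
# find_glued_comments FILE (hypothetical helper):
# print (with line numbers) every "export VAR=value#..." line where '#' touches the value.
# Exit status follows grep: 0 if such lines were found, 1 if the file is clean.
find_glued_comments() {
  grep -nE '^[[:space:]]*export[[:space:]]+[A-Za-z_][A-Za-z0-9_]*=[^[:space:]#]+#' "$1"
}

# Example:
# find_glued_comments /usr/local/spark-3.2.1-bin-hadoop2.7/conf/spark-env.sh
```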

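3. Go to Spark's sbin directory, start the Spark cluster, and check with jps. Run it as ./start-all.sh: Hadoop's sbin directory is also on PATH and contains a script with the same name.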

[root@master conf]# cd /usr/local/spark-3.2.1-bin-hadoop2.7/sbin/

[root@master sbin]# ./start-all.sh

[root@master sbin]# jps

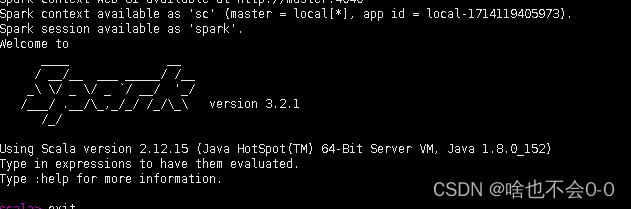

4. Start spark-shell

[root@master ~]# cd /usr/local/spark-3.2.1-bin-hadoop2.7/

[root@master spark-3.2.1-bin-hadoop2.7]# ./bin/spark-shell

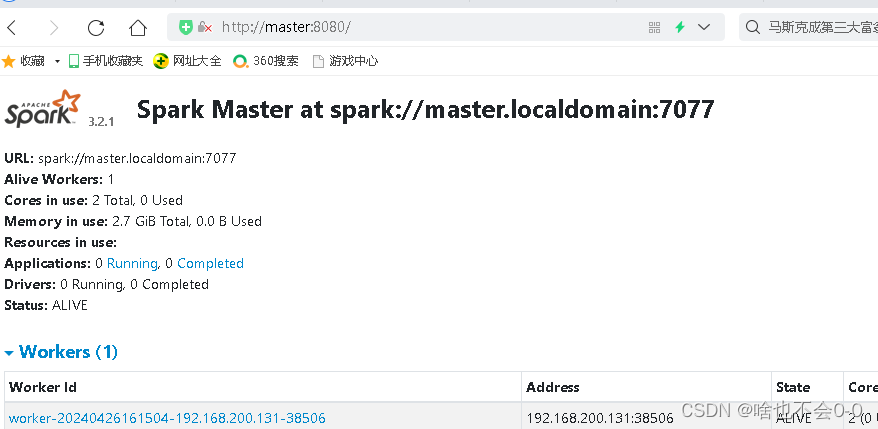

5. Open the web UI at http://master:8080

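IX. Install Scala

This standalone Scala installation is independent of Spark: the spark-shell in Spark 3.2.1 uses its own bundled Scala 2.12 runtime.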

1. Extract the Scala package

[root@master ~]# tar -zxf /opt/software/scala-2.11.8.tgz -C /usr/local

2. Configure the Scala environment variables, reload the profile, and run scala

[root@master ~]# vim /etc/profile

export SCALA_HOME=/usr/local/scala-2.11.8

export PATH=$PATH:$SCALA_HOME/bin

[root@master ~]# source /etc/profile

[root@master ~]# scala

Welcome to Scala 2.11.8 (Java HotSpot(TM) 64-Bit Server VM, Java 1.8.0_152).

Type in expressions for evaluation. Or try :help.

scala>

Copyright belongs to the original author 啥也不会0-0. In case of infringement, please contact us for removal.