刘二大人 (Liu Er) PyTorch Deep Learning Practice Notes, P7: Handling Multi-Dimensional Feature Input

P7 Handling Multi-Dimensional Feature Input

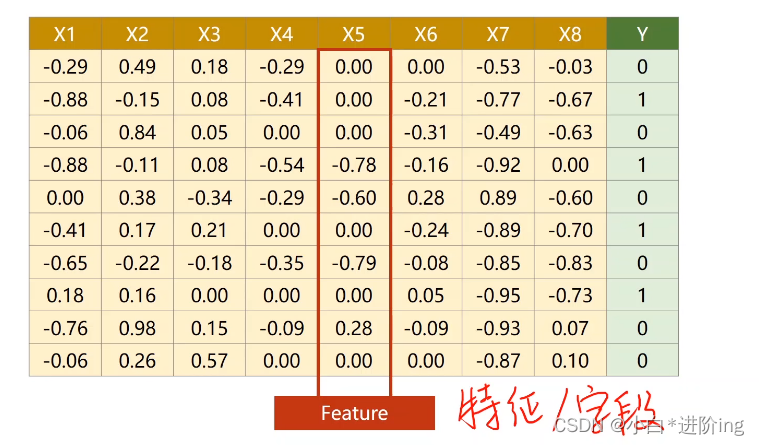

1. Each row is a record (one sample); each column is a feature.
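A tiny illustration with made-up numbers (not the real diabetes data): each row is one record, the first 8 columns are features, and the label sits in the last column. The sketch also shows why the implementation slices the label as `xy[:, [-1]]`: the brackets keep a 2-D column of shape `(N, 1)` instead of collapsing it to a 1-D vector.

```python
import numpy as np

# Hypothetical toy table: 3 records (rows), 8 feature columns + 1 label column
xy = np.arange(27, dtype=np.float32).reshape(3, 9)

x = xy[:, :-1]       # all rows, every column except the last -> features
y_mat = xy[:, [-1]]  # all rows, last column, kept as a 2-D column
y_vec = xy[:, -1]    # all rows, last column, collapsed to a 1-D vector

print(x.shape)      # (3, 8)
print(y_mat.shape)  # (3, 1)
print(y_vec.shape)  # (3,)
```

The `(N, 1)` shape matches the `(N, 1)` output of a final `Linear(4, 1)` layer, so predictions and labels line up element by element in the loss.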

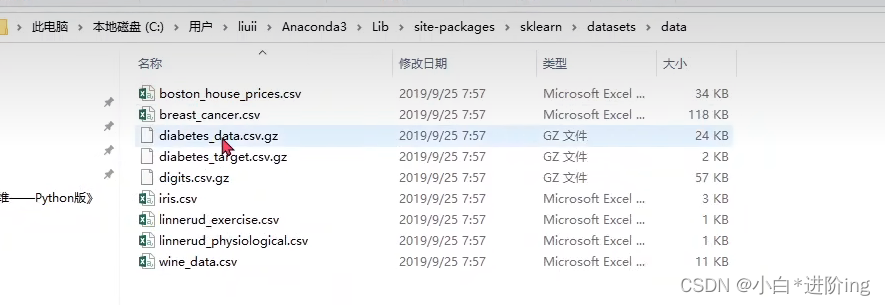

2. Where the data is stored under Anaconda3
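Whatever directory the file ends up in, it is a gzip-compressed CSV, and `np.loadtxt` decompresses files whose names end in `.gz` on its own, so no manual unzip step is needed. A minimal sketch, assuming a throwaway temp file with made-up values standing in for the real `dataset/diabetes.csv.gz`:

```python
import gzip
import os
import tempfile

import numpy as np

# Write a tiny comma-separated, gzip-compressed file (made-up values)
path = os.path.join(tempfile.mkdtemp(), "toy.csv.gz")
with gzip.open(path, "wt") as f:
    f.write("0.1,0.2,1\n0.3,0.4,0\n")

# np.loadtxt sees the .gz extension and decompresses before parsing
xy = np.loadtxt(path, delimiter=",", dtype=np.float32)
print(xy.shape, xy.dtype)  # (2, 3) float32
```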

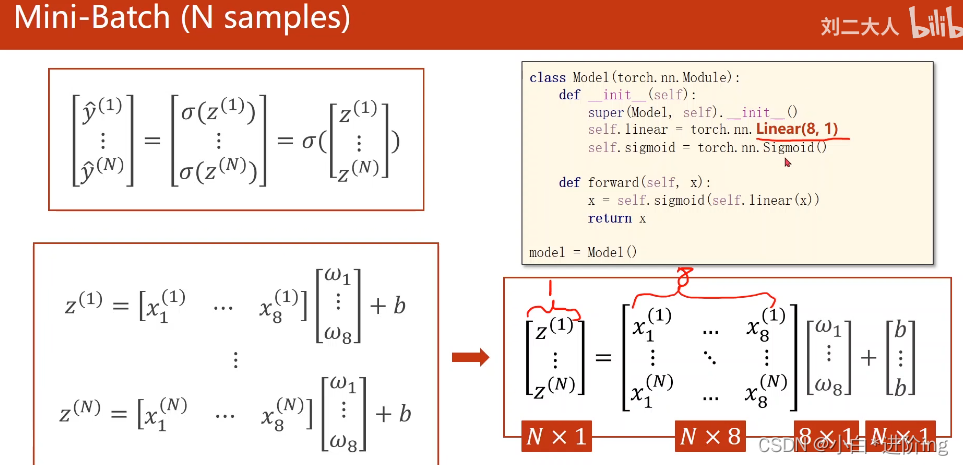

3. Converting to matrix form

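Rewriting the per-sample computation as matrix operations (vectorization) lets the CPU (or other hardware such as a GPU) compute in parallel, which speeds it up.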
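A sketch of what vectorization buys: one `Linear(8, 6)`-style layer applied to N samples, first with a Python loop over samples, then as a single matrix product `Z = X Wᵀ + b`. The sizes and random values are assumptions for illustration; the weight layout `(out, in)` mirrors `torch.nn.Linear`.

```python
import numpy as np

rng = np.random.default_rng(0)
N, in_dim, out_dim = 5, 8, 6              # hypothetical: 5 samples, 8 -> 6
X = rng.normal(size=(N, in_dim))          # one sample per row
W = rng.normal(size=(out_dim, in_dim))    # (out, in), like torch.nn.Linear(8, 6).weight
b = rng.normal(size=out_dim)

# Loop version: one sample at a time
Z_loop = np.stack([W @ X[i] + b for i in range(N)])

# Vectorized version: all samples in one matrix product (bias broadcast over rows)
Z_vec = X @ W.T + b

print(Z_vec.shape)                 # (5, 6)
print(np.allclose(Z_loop, Z_vec))  # True
```

Both give the same numbers; the vectorized form hands the whole batch to an optimized matrix routine instead of looping in Python.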

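In general, the more hidden layers a network has, the better it can fit nonlinear mappings and the stronger its learning capacity. Too much capacity, however, lets the network learn the noise in the input as well (overfitting), so more capacity is not automatically better: what we want is a model that generalizes.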
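One rough way to see capacity grow is to count parameters: each `Linear(i, o)` layer adds `o*i` weights plus `o` biases. A numpy sketch of the same 8 → 6 → 4 → 1 sigmoid stack used in the implementation, assuming random untrained weights purely for illustration:

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

sizes = [8, 6, 4, 1]  # input -> hidden -> hidden -> output
rng = np.random.default_rng(0)
# Random (untrained) weights, stored as (out, in) like torch.nn.Linear
layers = [(rng.normal(size=(o, i)), rng.normal(size=o))
          for i, o in zip(sizes[:-1], sizes[1:])]

def forward(x):
    for W, b in layers:
        x = sigmoid(x @ W.T + b)  # affine map, then the nonlinearity
    return x

n_params = sum(W.size + b.size for W, b in layers)
print(n_params)  # 54 + 28 + 5 = 87

X = rng.normal(size=(10, 8))
print(forward(X).shape)  # (10, 1)
```

Adding another hidden layer or widening one raises `n_params`, which is more room to fit the data and also more room to fit its noise.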

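Note to self: picking up new material takes "generalization" too. Learn to read the documentation, and understand the basic architecture of computer systems.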

4. Implementation:

import numpy as np

import torch

import matplotlib.pyplot as plt

# gz压缩包里的文件名一样,就可以用loadtxt把数据读出来# delimiter=',' , 以逗号作为分隔符# dtype=np.float32 , 数据类型为32位的浮点数

xy = np.loadtxt('dataset/diabetes.csv.gz', delimiter=',', dtype=np.float32)# 该函数会创建两个tensor张量出来

x_data = torch.from_numpy(xy[:,:-1])# 所有行,除了最后一列

y_data = torch.from_numpy(xy[:,[-1]])# 所有行,最后一列 转为矩阵而不是向量classModel(torch.nn.Module):def__init__(self):super(Model, self).__init__()

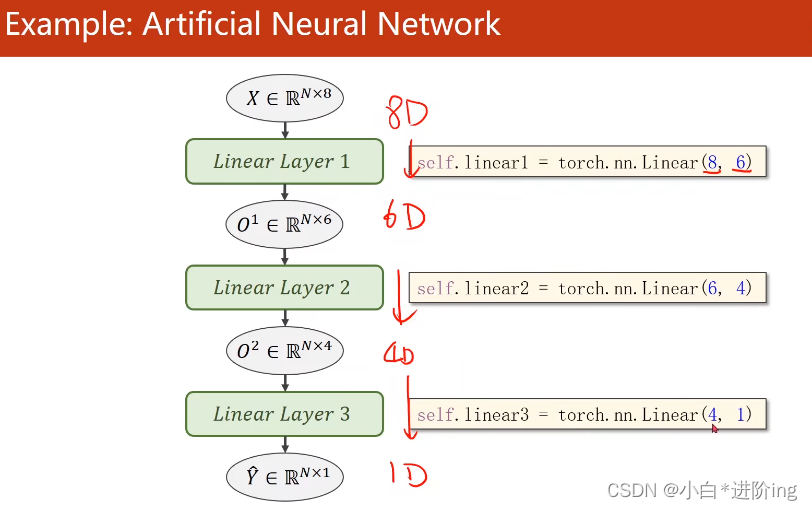

self.linear1 = torch.nn.Linear(8,6)# 第一层是8维到6维的非线性空间变换

self.linear2 = torch.nn.Linear(6,4)# 第二层是6维到4维的非线性空间变换

self.linear3 = torch.nn.Linear(4,1)# 第三层是4维到1维的非线性空间变换

self.sigmoid = torch.nn.Sigmoid()# 作为一个运算模块defforward(self, x):

x = self.sigmoid(self.linear1(x))

x = self.sigmoid(self.linear2(x))

x = self.sigmoid(self.linear3(x))return x

model = Model()

criterion = torch.nn.BCELoss(reduction='mean')# loss均值

optimizer = torch.optim.SGD(model.parameters(), lr=0.1)

epoch_list =[]

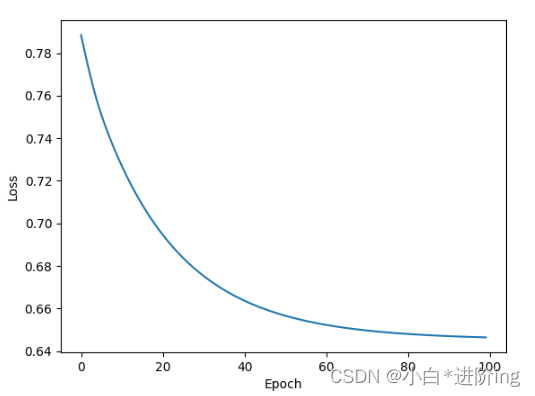

loss_list =[]for epoch inrange(100):

y_pred = model(x_data)# 并没有做mini-batch

loss = criterion(y_pred, y_data)print(epoch, loss.item())

epoch_list.append(epoch)

loss_list.append(loss.item())

optimizer.zero_grad()

loss.backward()

optimizer.step()

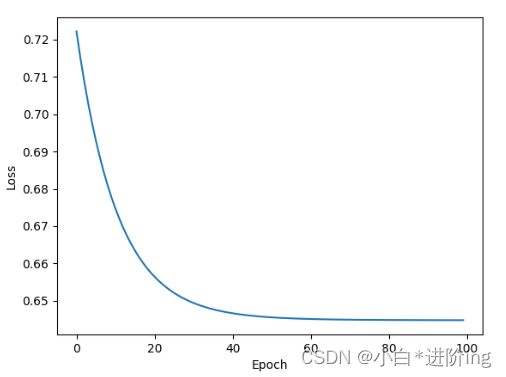

plt.plot(epoch_list, loss_list)

plt.xlabel('Epoch')

plt.ylabel('Loss')

plt.show()

Output:

```
0 0.7222060561180115
1 0.7151467800140381
2 0.708731472492218
3 0.7029024958610535
4 0.697607159614563
5 0.6927972435951233
6 0.6884285807609558
7 0.6844607591629028
8 0.6808571219444275
9 0.6775842308998108
10 0.6746116876602173
11 0.6719117164611816
12 0.6694590449333191
13 0.6672309637069702
14 0.6652066707611084
15 0.6633674502372742
16 0.6616960167884827
17 0.6601769924163818
18 0.6587962508201599
19 0.657541036605835
20 0.6563997864723206
21 0.655362069606781
22 0.6544182300567627
23 0.6535598039627075
24 0.6527788639068604
25 0.6520683765411377
26 0.6514217257499695
27 0.6508333683013916
28 0.6502977609634399
29 0.649810254573822
30 0.6493661999702454
31 0.6489620804786682
32 0.6485939025878906
33 0.6482585668563843
34 0.6479530930519104
35 0.6476748585700989
36 0.6474213600158691
37 0.6471902132034302
38 0.646979570388794
39 0.6467875838279724
40 0.6466125249862671
41 0.6464529037475586
42 0.6463074088096619
43 0.6461746692657471
44 0.6460535526275635
45 0.6459430456161499
46 0.6458422541618347
47 0.6457502841949463
48 0.6456663608551025
49 0.6455896496772766
50 0.6455196738243103
51 0.6454557776451111
52 0.645397424697876
53 0.6453441381454468
54 0.6452954411506653
55 0.6452507972717285
56 0.6452100872993469
57 0.6451728940010071
58 0.6451389193534851
59 0.6451077461242676
60 0.6450792551040649
61 0.6450531482696533
62 0.6450293064117432
63 0.645007312297821
64 0.6449873447418213
65 0.6449688673019409
66 0.6449519991874695
67 0.6449365615844727
68 0.6449223160743713
69 0.6449092626571655
70 0.6448972225189209
71 0.644886314868927
72 0.6448760628700256
73 0.6448667049407959
74 0.6448581218719482
75 0.6448501348495483
76 0.644842803478241
77 0.6448360085487366
78 0.6448296904563904
79 0.6448239088058472
80 0.6448184251785278
81 0.6448134183883667
82 0.6448087096214294
83 0.6448043584823608
84 0.6448003053665161
85 0.6447965502738953
86 0.6447930335998535
87 0.6447896361351013
88 0.6447865962982178
89 0.644783616065979
90 0.6447808146476746
91 0.6447781920433044
92 0.6447756886482239
93 0.6447734832763672
94 0.644771158695221
95 0.6447690725326538
96 0.6447670459747314
97 0.6447651386260986
98 0.6447632908821106
99 0.6447615027427673
```
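The loss logged at epoch 0 (about 0.72) is roughly ln 2 ≈ 0.693, which is what mean binary cross-entropy gives when every prediction is 0.5. A numpy sketch of the formula `BCELoss(reduction='mean')` computes, BCE = -mean(y·log p + (1 - y)·log(1 - p)), with made-up labels and predictions; the `np.clip` guard is this sketch's own addition to avoid `log(0)`:

```python
import numpy as np

def bce_mean(p, y, eps=1e-12):
    """Mean binary cross-entropy over all samples."""
    p = np.clip(p, eps, 1 - eps)  # guard against log(0); added for this sketch
    return float(-np.mean(y * np.log(p) + (1 - y) * np.log(1 - p)))

y = np.array([[1.0], [0.0], [1.0], [0.0]])

# An untrained model that outputs 0.5 for everything
print(bce_mean(np.full_like(y, 0.5), y))  # ln 2, about 0.6931

# Confident, correct predictions give a much smaller loss
print(bce_mean(np.array([[0.9], [0.1], [0.9], [0.1]]), y))  # -ln 0.9, about 0.1054
```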

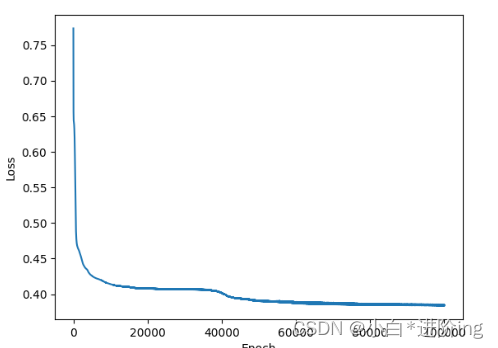

Raising the number of training epochs (from 100 to 100,000) noticeably improves accuracy:

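```python
from sklearn import metrics  # needed for accuracy_score

for epoch in range(100000):
    y_pred = model(x_data)  # still the whole dataset, no mini-batches
    loss = criterion(y_pred, y_data)
    # print(epoch, loss.item())
    epoch_list.append(epoch)
    loss_list.append(loss.item())

    optimizer.zero_grad()
    loss.backward()
    optimizer.step()

    if epoch % 100 == 0:
        # torch.where acts like an element-wise ternary operator:
        # label 1.0 where the predicted probability >= 0.5, otherwise 0.0
        y_pred_label = torch.where(y_pred >= 0.5, torch.tensor([1.0]), torch.tensor([0.0]))
        # Accuracy: fraction of samples whose predicted label matches the true label
        acc = metrics.accuracy_score(y_data.numpy(), y_pred_label.numpy(), normalize=True)
        print("loss = ", loss.item(), "acc = ", acc)
```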

Output:

```
...
loss = 0.3848259449005127 acc = 0.8287220026350461
loss = 0.3847823441028595 acc = 0.8287220026350461
loss = 0.38398289680480957 acc = 0.8326745718050066
```
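The accuracy step can be checked by hand: threshold the probabilities at 0.5 (what the `torch.where` call does), then take the fraction of matches, which is what `accuracy_score` with `normalize=True` returns. A numpy sketch with made-up predictions and labels:

```python
import numpy as np

# Made-up predicted probabilities and true labels, shape (N, 1)
y_pred = np.array([[0.8], [0.3], [0.55], [0.49], [0.9]])
y_true = np.array([[1.0], [0.0], [0.0], [1.0], [1.0]])

# Same rule as torch.where(y_pred >= 0.5, 1.0, 0.0): an element-wise "ternary"
y_label = np.where(y_pred >= 0.5, 1.0, 0.0)

# Accuracy = fraction of samples whose predicted label equals the true label
acc = float(np.mean(y_label == y_true))
print(y_label.ravel())  # [1. 0. 1. 0. 1.]
print(acc)              # 0.6
```

Samples 3 and 4 sit near the 0.5 threshold and land on the wrong side, so 3 of 5 are correct.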

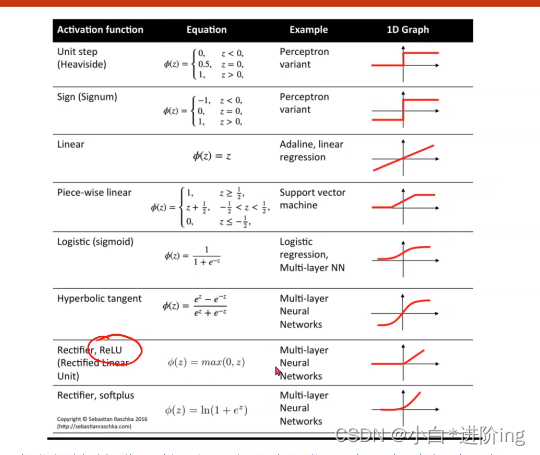

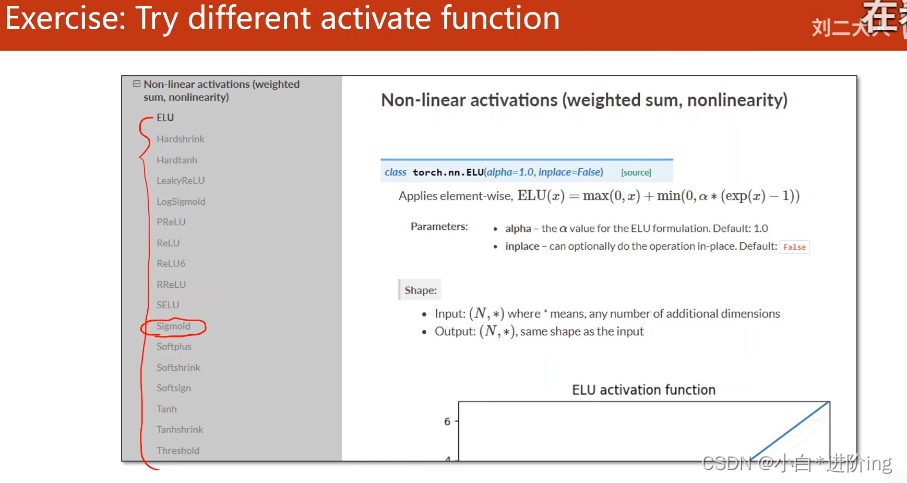

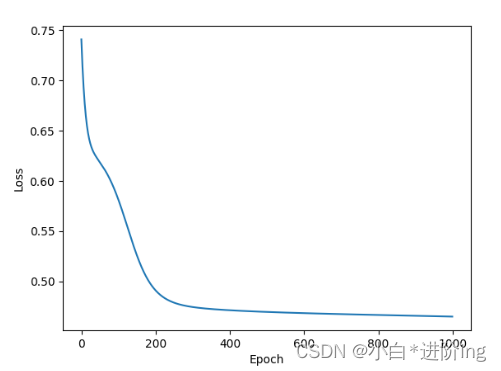

5. Exercise

Only the model definition needs to change; here the hidden layers switch from Sigmoid to ReLU:

```python
class Model(torch.nn.Module):
    def __init__(self):
        super(Model, self).__init__()
        self.linear1 = torch.nn.Linear(8, 6)
        self.linear2 = torch.nn.Linear(6, 4)
        self.linear3 = torch.nn.Linear(4, 1)
        self.sigmoid = torch.nn.Sigmoid()  # used as an operation module
        self.relu = torch.nn.ReLU()        # used as an operation module

    def forward(self, x):
        x = self.relu(self.linear1(x))     # hidden layers now use ReLU
        x = self.relu(self.linear2(x))
        x = self.sigmoid(self.linear3(x))  # sigmoid at the output keeps predictions in (0, 1) for BCELoss
        return x
```

Output:

```
0 0.7885610461235046
1 0.7797487378120422
2 0.7715118527412415
3 0.7638062238693237
4 0.7568893432617188
5 0.750842809677124
6 0.7453811764717102
7 0.7403252720832825
8 0.7355858683586121
9 0.7311105728149414
10 0.726868212223053
...
90 0.6470083594322205
91 0.6469311714172363
92 0.6468574404716492
93 0.6467872262001038
94 0.6467201113700867
95 0.6466561555862427
96 0.6465951204299927
97 0.6465367674827576
98 0.6464811563491821
99 0.646428108215332
```

Tanh function

The other activation functions work in much the same way; they are omitted here, so try them out yourself.
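For comparing activations, a numpy sketch of the three discussed here on a few made-up inputs. Their output ranges also explain why the exercise keeps sigmoid on the last layer: `BCELoss` expects probabilities between 0 and 1, and tanh (range -1 to 1) or ReLU (unbounded above) do not guarantee that.

```python
import numpy as np

z = np.array([-2.0, 0.0, 2.0])

sigmoid = 1.0 / (1.0 + np.exp(-z))  # squashes to (0, 1)
tanh = np.tanh(z)                   # squashes to (-1, 1), centred on 0
relu = np.maximum(z, 0.0)           # 0 for negatives, identity for positives

print(sigmoid)
print(tanh)
print(relu)  # [0. 0. 2.]
```

In the PyTorch model, trying Tanh would mean swapping `torch.nn.ReLU()` for `torch.nn.Tanh()` in the hidden layers while leaving the output sigmoid in place.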

Copyright belongs to the original author, 小白*进阶ing. In case of infringement, please contact us for removal.