一、环境准备:3台centos7服务器

修改hosts(所有服务器都需要修改)

vim /etc/hosts

10.9.5.114 cdh1

10.9.5.115 cdh2

10.9.5.116 cdh3

修改主机名,cdh1为主机名,根据自己定义

sysctl kernel.hostname=cdh1

安装远程同步工具rsync,用于服务器间同步配置文件

yum install -y rsync

设置时间同步,如果时间相差过大启动会报ClockOutOfSyncException异常,默认是30000ms

安装以下包,否则可能会报No such file or directory

yum install autoconf automake libtool

配置root用户免密登录(所有服务器执行,因为host配置的是IP,所以本机也需要执行公钥上传)

cd ~/.ssh/

ssh-keygen -t rsa #生成免密登录公私钥,根据提示按回车或y

ssh-copy-id -i ~/.ssh/id_rsa.pub root@cdh1 #将本机的公钥上传至cdh1机器上,实现对cdh1机器免密登录

ssh-copy-id -i ~/.ssh/id_rsa.pub root@cdh2

ssh-copy-id -i ~/.ssh/id_rsa.pub root@cdh3

关闭防火墙,或者放行以下端口:9000、50090、8022、50470、50070、49100、8030、8031、8032、8033、8088、8090

二、下载hadoop3.3.4

下载地址:https://archive.apache.org/dist/hadoop/common/hadoop-3.3.4/hadoop-3.3.4.tar.gz

三、安装Hadoop

1、登录cdh1服务器,将下载的安装包上传至/home/software目录

进入/home/service目录并解压hadoop

cd /home/servers/

tar -zxvf ../software/hadoop-3.3.1.tar.gz

2、将Hadoop添加到环境变量vim /etc/profile

vim /etc/profile

export HADOOP_HOME=/home/servers/hadoop-3.3.4

export PATH=$PATH:$HADOOP_HOME/bin

export PATH=$PATH:$HADOOP_HOME/sbin

3、新建目录

mkdir /home/hadoop

mkdir /home/hadoop/tmp

mkdir /home/hadoop/var

mkdir /home/hadoop/dfs

mkdir /home/hadoop/dfs/name

mkdir /home/hadoop/dfs/data

4、Hadoop集群配置 = HDFS集群配置 + MapReduce集群配置 + Yarn集群配置

HDFS集群配置

1. 将JDK路径明确配置给HDFS(修改hadoop-env.sh)

2. 指定NameNode节点以及数据存储目录(修改core-site.xml)

3. 指定SecondaryNameNode节点(修改hdfs-site.xml)

4. 指定DataNode从节点(修改workers文件,每个节点配置信息占一行) MapReduce集群配置

1. 将JDK路径明确配置给MapReduce(修改mapred-env.sh)

2. 指定MapReduce计算框架运行Yarn资源调度框架(修改mapred-site.xml)

Yarn集群配置

1. 将JDK路径明确配置给Yarn(修改yarn-env.sh)

2. 指定ResourceManager老大节点所在计算机节点(修改yarn-site.xml)

3. 指定NodeManager节点(会通过workers文件内容确定)

修改hadoop-env.sh,放开注释改成jdk安装的路径

cd /home/servers/hadoop-3.3.1/etc/hadoop

vim hadoop-env.sh

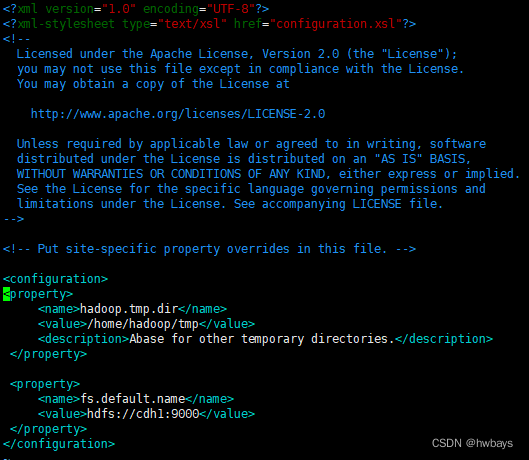

修改core-site.xml,在文件的configrue标签内加入以下内容

<property>

<name>hadoop.tmp.dir</name>

<value>/home/hadoop/tmp</value>

<description>Abase for other temporary directories.</description>

</property>

<property>

<name>fs.default.name</name>

<value>hdfs://cdh1:9000</value>

</property>

修改hdfs-site.xml文件,在文件的configrue标签内加入以下内容

<property>

<name>dfs.name.dir</name>

<value>/home/hadoop/dfs/name</value>

<description>Path on the local filesystem where theNameNode stores the namespace and transactions logs persistently.</description>

</property>

<property>

<name>dfs.data.dir</name>

<value>/home/hadoop/dfs/data</value>

<description>Comma separated list of paths on the localfilesystem of a DataNode where it should store its blocks.</description>

</property>

<!-- 指定Hadoop辅助名称节点主机配置 -->

<property>

<name>dfs.namenode.secondary.http-address</name>

<value>cdh3:50090</value>

</property>

<property>

<name>dfs.namenode.servicerpc-address</name>

<value>cdh1:8022</value>

</property>

<property>

<name>dfs.https.address</name>

<value>cdh1:50470</value>

</property>

<property>

<name>dfs.https.port</name>

<value>50470</value>

</property>

<!-- 配置namenode的web界面-->

<property>

<name>dfs.namenode.http-address</name>

<value>cdh1:50070</value>

</property>

<property>

<name>dfs.replication</name>

<value>2</value>

</property>

修改workers文件加入节点信息,每个节点占一行

cdh1

cdh2

cdh3

修改mapred-env.sh文件,在文件末尾添加JDK路径

export JAVA_HOME=/usr/local/jdk1.8.0_291

修改mapred-site.xml文件,在文件的configrue标签内加入以下内容

<property>

<name>mapred.job.tracker</name>

<value>cdh1:49001</value>

</property>

<property>

<name>mapred.local.dir</name>

<value>/home/hadoop/var</value>

</property>

<property>

<name>mapreduce.framework.name</name>

<value>yarn</value>

</property>

修改yarn-env.sh,,在文件末尾添加JDK路径

export JAVA_HOME=/usr/local/jdk1.8.0_291

修改yarn-site.xml,在文件的configrue标签内加入以下内容

<property>

<name>yarn.resourcemanager.hostname</name>

<value>cdh1</value>

</property>

<property>

<description>The address of the applications manager interface in the RM.</description>

<name>yarn.resourcemanager.address</name>

<value>${yarn.resourcemanager.hostname}:8032</value>

</property>

<property>

<description>The address of the scheduler interface.</description>

<name>yarn.resourcemanager.scheduler.address</name>

<value>${yarn.resourcemanager.hostname}:8030</value>

</property>

<property>

<description>The http address of the RM web application.</description>

<name>yarn.resourcemanager.webapp.address</name>

<value>${yarn.resourcemanager.hostname}:8088</value>

</property>

<property>

<description>The https adddress of the RM web application.</description>

<name>yarn.resourcemanager.webapp.https.address</name>

<value>${yarn.resourcemanager.hostname}:8090</value>

</property>

<property>

<name>yarn.resourcemanager.resource-tracker.address</name>

<value>${yarn.resourcemanager.hostname}:8031</value>

</property>

<property>

<description>The address of the RM admin interface.</description>

<name>yarn.resourcemanager.admin.address</name>

<value>${yarn.resourcemanager.hostname}:8033</value>

</property>

<property>

<name>yarn.nodemanager.aux-services</name>

<value>mapreduce_shuffle</value>

</property>

<property>

<name>yarn.scheduler.maximum-allocation-mb</name>

<value>1024</value>

<discription>每个节点可用内存,单位MB,默认8182MB</discription>

</property>

<property>

<name>yarn.nodemanager.vmem-pmem-ratio</name>

<value>2.1</value>

</property>

<property>

<name>yarn.nodemanager.resource.memory-mb</name>

<value>1024</value>

</property>

<property>

<name>yarn.nodemanager.vmem-check-enabled</name>

<value>false</value>

</property>

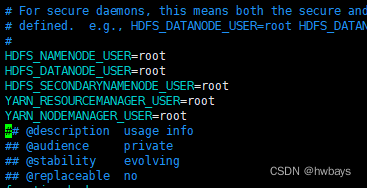

** 修改start-dfs.sh,stop-dfs.sh文件,在文件头部添加以下配置**

cd /home/servers/hadoop-3.3.1/sbin/

vim start-dfs.sh 和 vim stop-dfs.sh

HDFS_NAMENODE_USER=root

HDFS_DATANODE_USER=root

HDFS_SECONDARYNAMENODE_USER=root

YARN_RESOURCEMANAGER_USER=root

YARN_NODEMANAGER_USER=root

修改**start-yarn.sh,stop-yarn.sh文件,在文件头部添加以下配置**

vim start-yarn.sh 和 vim stop-yarn.sh

RN_RESOURCEMANAGER_USER=root

HADOOP_SECURE_DN_USER=yarn

YARN_NODEMANAGER_USER=root

YARN_RESOURCEMANAGER_USER=root

四、分发配置,

4.1、使用rsync分发配置到其他服务器

cd /home

rsync -rvl hadoop root@cdh2:/home/

rsync -rvl hadoop root@cdh3:/home/

cd /home/service

rsync -rvl hadoop-3.3.1 root@cdh2:/home/servers/

rsync -rvl hadoop-3.3.1 root@cdh3:/home/servers/

rsync /etc/profile root@cdh2:/etc/profile

rsync /etc/profile root@cdh3:/etc/profile

4.2、所有服务器执行以下命令,使环境变量生效

source /etc/profile

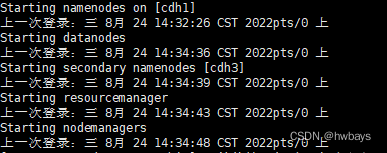

五、启动服务

5.1、hadoop初始化(只需在主服务器执行即可(NameNode节点))

cd /home/servers/hadoop-3.3.4/bin

./hadoop namenode -format

有提示以下这行,说明格式化成功

common.Storage: Storage directory /home/hadoop/dfs/name has been successfully formatted

5.2、启动hadoop

cd /home/servers/hadoop-3.3.1/sbin/

./start-all.sh

启动没报错即可

5.3使用jps查看服务

版权归原作者 hwbays 所有, 如有侵权,请联系我们删除。